A relational database is a type of database that organizes data into rows and columns, which collectively form a table where the data points are related to each other.

Data is typically structured across multiple tables, which can be joined together via a primary key or a foreign key. These unique identifiers demonstrate the different relationships which exist between tables, and these relationships are usually illustrated through different types of data models . Analysts use SQL queries to combine different data points and summarize business performance, allowing organizations to gain insights, optimize workflows, and identify new opportunities.

For example, imagine your company maintains a database table with customer information, which contains company data at the account level. There may also be a different table, which describes all the individual transactions that align to that account. Together, these tables can provide information about the different industries that purchase a specific software product.

The columns (or fields) for the customer table might be Customer ID , Company Name , Company Address , Industry etc.; the columns for a transaction table might be Transaction Date , Customer ID , Transaction Amount , Payment Method , etc. The tables can be joined together with the common Customer ID field. You can, therefore, query the table to produce valuable reports, such as a sales reports by industry or company, which can inform messaging to prospective clients.

Relational databases are also typically associated with transactional databases, which execute commands, or transactions, collectively. A popular example that is used to illustrate this is a bank transfer. A defined amount is withdrawn from one account, and then it is deposited within another. The total amount of money is withdrawn and deposited, and this transaction cannot occur in any kind of partial sense. Transactions have specific properties. Represented by the acronym, ACID, ACID properties are defined as:

- Atomicity: All changes to data are performed as if they are a single operation. That is, all the changes are performed, or none of them are.

- Consistency: Data remains in a consistent state from state to finish, reinforcing data integrity.

- Isolation: The intermediate state of a transaction is not visible to other transactions, and as a result, transactions that run concurrently appear to be serialized.

- Durability: After the successful completion of a transaction, changes to data persist and are not undone, even in the event of a system failure.

These properties enable reliable transaction processing.

Relational database vs. relational database management system

While a relational database organizes data based off a relational data model, a relational database management system (RDBMS) is a more specific reference to the underlying database software that enables users to maintain it. These programs allow users to create, update, insert, or delete data in the system, and they provide:

- Data structure

- Multi-user access

- Privilege control

- Network access

Examples of popular RDBMS systems include MySQL, PostgreSQL, and IBM DB2. Additionally, a relational database system differs from a basic database management system (DBMS) in that it stores data in tables while a DBMS stores information as files.

Learn key benefits of generative AI and how organizations can incorporate generative AI and machine learning into their business.

Read the guide for data leaders

Invented by Don Chamberlin and Ray Boyce at IBM, Structured Query Language (SQL) is the standard programming language for interacting with relational database management systems, allowing database administrator to add, update, or delete rows of data easily. Originally known as SEQUEL, it was simplified to SQL due to a trademark issue. SQL queries also allows users to retrieve data from databases using only a few lines of code. Given this relationship, it’s easy to see why relational databases are also referred to as “SQL databases” at times.

Using the example from above, you might construct a query to find the top 10 transactions by company for a specific year with the following code:

SELECT COMPANY_NAME, SUM(TRANSACTION_AMOUNT)

FROM TRANSACTION_TABLE A

LEFT JOIN CUSTOMER_TABLE B

ON A.CUSTOMER_ID = B.CUSTOMER_ID

WHERE YEAR(DATE) = 2022

GROUP BY 1

ORDER BY 2 DESC

The ability to join data in this way helps us to reduce redundancy within our data systems, allowing data teams to maintain one master table for customers versus duplicating this information if there was another transaction in the future. To learn more, Don details more of the history of SQL in his paper here (link resides outside ibm.com).

Before relational databases, companies used a hierarchical database system with a tree-like structure for the data tables. These early database management systems (DBMS) enabled users to organize large quantities of data. However, they were complex, often proprietary to a particular application, and limited in the ways in which they could uncover within the data. These limitations eventually led IBM researcher, Edgar F. Codd, to publish a paper (link resides outside ibm.com) in 1970, titled "A Relational Model of Data for Large Shared Data Banks,” which theorized the relational database model. In this proposed model, information could be retrieved without specialized computer knowledge. He proposed arranging data based on meaningful relationships as tuples, or attribute-value pairs. Sets of tuples were referred to as relations, which ultimately enabled the merging of data across tables.

In 1973, the San Jose Research Laboratory—now known as the Almaden Research Center—began a program called System R (R for relational) to prove this relational theory with what it called “an industrial-strength implementation.” It ultimately became a testing ground for SQL as well, enabling it to become more widely adopted in a short period of time. However, Oracle’s adoption of SQL also didn’t hurt its popularity with database administrators.

By 1983, IBM introduced the DB2 family of relational databases, so named because it was IBM’s second family of database management software. Today, it is one of IBM’s most successful products, continuing to handle billions of transactions every day on cloud infrastructure and setting the foundational layer for machine learning applications.

While relational databases structure data into a tabular format, non-relational databases do not have as rigid of a database schema. In fact, non-relational databases organize data differently based on the type of database. Irrespective of the type of non-relational database, they all aim to solve for the flexibility and scalability issues inherent in relational models which are not ideal for unstructured data formats, like text, video, and images. These types of databases include:

- Key-value store: This schema-less data model is organized into a dictionary of key-value pairs, where each item has a key and a value. The key could be like something similar found in a SQL database, like a shopping cart ID, while the value is an array of data, like each individual item in that user’s shopping cart. It’s commonly used for caching and storing user session information, such as shopping carts. However, it's not ideal when you need to pull multiple records at a time. Redis and Memcached are examples of open-source databases with this data model.

- Document store: As suggested by the name, document databases store data as documents. They can be helpful in managing semi-structured data, and data are typically stored in JSON, XML, or BSON formats. This keeps the data together when it is used in applications, reducing the amount of translation needed to use the data. Developers also gain more flexibility since data schemas do not need to match across documents (e.g. name vs. first_name). However, this can be problematic for complex transactions, leading to data corruption. Popular use cases of document databases include content management systems and user profiles. An example of a document-oriented database is MongoDB, the database component of the MEAN stack.

- Wide-column store: These databases store information in columns, enabling users to access only the specific columns they need without allocating additional memory on irrelevant data. This database tries to solve for the shortcomings of key-value and document stores, but since it can be a more complex system to manage, it is not recommended for use for newer teams and projects. Apache HBase and Apache Cassandra are examples of open-source, wide-column databases. Apache HBase is built on top of Hadoop Distributed Files System that provides a way of storing sparse data sets, which is commonly used in many big data applications. Apache Cassandra, on the other hand, has been designed to manage large amounts of data across multiple servers and clustering that spans multiple data centers. It’s been used for a variety of use cases, such as social networking websites and real-time data analytics.

- Graph store: This type of database typically houses data from a knowledge graph. Data elements are stored as nodes, edges and properties. Any object, place, or person can be a node. An edge defines the relationship between the nodes. Graph databases are used for storing and managing a network of connections between elements within the graph. Neo4j (link resides outside IBM), a graph-based database service based on Java with an open-source community edition where users can purchase licenses for online backup and high availability extensions, or pre-package licensed version with backup and extensions included.

NoSQL databases also prioritize availability over consistency.

When computers run over a network , they invariably need to decide to prioritize consistent results (where every answer is always the same) or high uptime, called "availability." This is called the "CAP Theory," which stands for Consistency, Availability, or Partition Tolerance. Relational databases ensure the information is always in-sync and consistent. Some NoSQL databases, like Redis, prefer to always provide a response. That means the information you receive from a query may be incorrect by a few seconds—perhaps up to half a minute. On social media sites, this means seeing an old profile picture when the newest one is only a few moments old. The alternative could be a timeout or error. On the other hand, in banking and financial transactions, an error and resubmit may be better than old, incorrect information.

For a full rundown of the differences between SQL and NoSQL, see " SQL vs. NoSQL Databases: What's the Difference? "

The primary benefit of the relational database approach is the ability to create meaningful information by joining the tables. Joining tables allows you to understand the relations between the data, or how the tables connect. SQL includes the ability to count, add, group, and also combine queries. SQL can perform basic math and subtotal functions and logical transformations. Analysts can order the results by date, name, or any column. These features make the relational approach the single most popular query tool in business today.

Relational databases have several advantages compared to other database formats:

Ease of Use

By virtue of its product lifespan, there is more of a community around relational databases, which partially perpetuates its continued use. SQL also makes it easy to retrieve datasets from multiple tables and perform simple transformations such as filtering and aggregation. The use of indices within relational databases also allows them to locate this information quickly without searching each row in the selected table.

While relational databases have historically been viewed as a more rigid and inflexible data storage option, advances in technology and DBaaS options are changing that perception. While there is still more overhead to develop schemas compared to NoSQL database offerings, relational databases are becoming more flexible as they migrate to cloud environments.

Reduced redundancy

Relational databases can eliminate redundancy in two ways. The relational model itself reduces data redundancy via a process known as normalization. As noted earlier, a customer table should only log unique records of customer information versus duplicating this information for multiple transactions.

Stored procedures also help to reduce repetitive work. For example, if database access is restricted to certain roles, functions or teams, a stored procedure can help to manage access-control. These reusable functions free up coveted application developer time to tackle high impact work.

Ease of backup and disaster recovery

Relational databases are transactional—they guarantee the state of the entire system is consistent at any moment. Most relational databases offer easy export and import options, making backup and restore trivial. These exports can happen even while the database is running, making restore on failure easy. Modern, cloud-based relational databases can do continuous mirroring, making the loss of data on restore measured in seconds or less. Most cloud-managed services allow you to create Read Replicas, like in IBM Cloud® Databases for PostgreSQL . These Read Replicas enable you to store a read-only copy of your data in a cloud data center. Replicas can be promoted to Read/Write instances for disaster recovery as well.

Learn about IBM Db2, the cloud-native database built to power low-latency transactions and real-time analytics at scale.

Discover PostgreSQL as a service, built enterprise-ready with native integration into the IBM Cloud.

Hyper Protect Virtual Servers for Virtual Private Cloud (VPC) is a fully managed confidential compute container runtime that enables the deployment of sensitive containerized workloads in a highly isolated environment with technical assurance.

Develop and run applications on a security-rich, enterprise-class database that's based on open source PostgreSQL.

Look back to the beginning of Db2.

Gain a single, cloud-native engine for database administrators, enterprise architects and developers to keep apps running, store and query anything, and simplify development. No matter the volume or complexity of transactions, protect your applications and make them highly performant and resilient anywhere.

Two-Bit History

Computing through the ages

Important Papers: Codd and the Relational Model

29 Dec 2017

It’s hard to believe today, but the relational database was once the cool new kid on the block. In 2017, the relational model competes with all sorts of cutting-edge NoSQL technologies that make relational database systems seem old-fashioned and boring. Yet, 50 years ago, none of the dominant database systems were relational. Nobody had thought to structure their data that way. When the relational model did come along, it was a radical new idea that revolutionized the database world and spawned a multi-billion dollar industry.

The relational model was introduced in 1970. Edgar F. Codd, a researcher at IBM, published a paper called “A Relational Model of Data for Large Shared Data Banks.” The paper was a rewrite of a paper he had circulated internally at IBM a year earlier. The paper is unassuming; Codd does not announce in his abstract that he has discovered a brilliant new approach to storing data. He only claims to have employed a novel tool (the mathematical notion of a “relation”) to address some of the inadequacies of the prevailing database models.

In 1970, there were two schools of thought about how to structure a database: the hierarchical model and the network model. The hierarchical model was used by IBM’s Information Management System (IMS), the dominant database system at the time. The network model had been specified by a standards committee called CODASYL (which also—random tidbit—specified COBOL) and implemented by several other database system vendors. The two models were not really that different; both could be called “navigational” models. They persisted tree or graph data structures to disk using pointers to preserve the links between the data. Retrieving a record stored toward the bottom of the tree would involve first navigating through all of its ancestor records. These databases were fast (IMS is still used by many financial institutions partly for this reason, see this excellent blog post ) but inflexible. Woe unto those database administrators who suddenly found themselves needing to query records from the bottom of the tree without having an obvious place to start at the top.

Codd saw this inflexibility as a symptom of a larger problem. Programs using a hierarchical or network database had to know about how the stored data was structured. Programs had to know this because they were responsible for navigating down this structure to find the information they needed. This was so true that when Charles Bachman, a major pioneer of the network model, received a Turing Award for his work in 1973, he gave a speech titled “ The Programmer as Navigator .” Of course, if programs were saddled with this responsibility, then they would immediately break if the structure of the database ever changed. In the introduction to his 1970 paper, Codd motivates the search for a better model by arguing that we need “data independence,” which he defines as “the independence of application programs and terminal activities from growth in data types and changes in data representation.” The relational model, he argues, “appears to be superior in several respects to the graph or network model presently in vogue,” partly because, among other benefits, the relational model “provides a means of describing data with its natural structure only.” By this he meant that programs could safely ignore any artificial structures (like trees) imposed upon the data for storage and retrieval purposes only.

To further illustrate the problem with the navigational models, Codd devotes the first section of his paper to an example data set involving machine parts and assembly projects. This dataset, he says, could be represented in existing systems in at least five different ways. Any program \(P\) that is developed assuming one of five structures will fail when run against at least three of the other structures. The program \(P\) could instead try to figure out ahead of time which of the structures it might be dealing with, but it would be difficult to do so in this specific case and practically impossible in the general case. So, as long as the program needs to know about how the data is structured, we cannot switch to an alternative structure without breaking the program. This is a real bummer because (and this is from the abstract) “changes in data representation will often be needed as a result of changes in query, update, and report traffic and natural growth in the types of stored information.”

Codd then introduces his relational model. This model would be refined and expanded in subsequent papers: In 1971, Codd wrote about ALPHA, a SQL-like query language he created; in another 1971 paper, he introduced the first three normal forms we know and love today; and in 1972, he further developed relational algebra and relational calculus, the mathematically rigorous underpinnings of the relational model. But Codd’s 1970 paper contains the kernel of the relational idea:

The term relation is used here in its accepted mathematical sense. Given sets \(S_1, S_i, ..., S_n\) (not necessarily distinct), \(R\) is a relation on these \(n\) sets if it is a set of \(n\)-tuples each of which has its first element from \(S_1\), its second element from \(S_2\), and so on. We shall refer to \(S_j\) as the \(j\)th domain of \(R\). As defined above, \(R\) is said to have degree \(n\). Relations of degree 1 are often called unary , degree 2 binary , degree 3 ternary , and degree \(n\) n-ary .

Today, we call a relation a table , and a domain an attribute or a column . The word “table” actually appears nowhere in the paper, though Codd’s visual representations of relations (which he calls “arrays”) do resemble tables. Codd defines several more terms, some of which we continue to use and others we have replaced. He explains primary and foreign keys, as well as what he calls the “active domain,” which is the set of all distinct values that actually appear in a given domain or column. He then spends some time distinguishing between a “simple” and a “nonsimple” domain. A simple domain contains “atomic” or “nondecomposable” values, like integers. A nonsimple domain has relations as elements. The example Codd gives here is that of an employee with a salary history. The salary history is not one salary but a collection of salaries each associated with a date. So a salary history cannot be represented by a single number or string.

It’s not obvious how one could store a nonsimple domain in a multi-dimensional array, AKA a table. The temptation might be to denote the nonsimple relationship using some kind of pointer, but then we would be repeating the mistakes of the navigational models. Instead. Codd introduces normalization, which at least in the 1970 paper involves nothing more than turning nonsimple domains into simple ones. This is done by expanding the child relation so that it includes the primary key of the parent. Each tuple of the child relation references its parent using simple domains, eliminating the need for a nonsimple domain in the parent. Normalization means no pointers, sidestepping all the problems they cause in the navigational models.

At this point, anyone reading Codd’s paper would have several questions, such as “Okay, how would I actually query such a system?” Codd mentions the possibility of creating a universal sublanguage for querying relational databases from other programs, but declines to define such a language in this particular paper. He does explain, in mathematical terms, many of the fundamental operations such a language would have to support, like joins, “projection” ( SELECT in SQL), and “restriction” ( WHERE ). The amazing thing about Codd’s 1970 paper is that, really, all the ideas are there—we’ve been writing SELECT statements and joins for almost half a century now.

Codd wraps up the paper by discussing ways in which a normalized relational database, on top of its other benefits, can reduce redundancy and improve consistency in data storage. Altogether, the paper is only 11 pages long and not that difficult of a read. I encourage you to look through it yourself. It would be another ten years before Codd’s ideas were properly implemented in a functioning system, but, when they finally were, those systems were so obviously better than previous systems that they took the world by storm.

If you enjoyed this post, more like it come out every four weeks! Follow @TwoBitHistory on Twitter or subscribe to the RSS feed to make sure you know when a new post is out.

Recommendations for Evolving Relational Databases

- Conference paper

- First Online: 03 June 2020

- Cite this conference paper

- Julien Delplanque 13 , 14 ,

- Anne Etien 13 , 14 ,

- Nicolas Anquetil 13 , 14 &

- Stéphane Ducasse 13 , 14

Part of the book series: Lecture Notes in Computer Science ((LNISA,volume 12127))

Included in the following conference series:

- International Conference on Advanced Information Systems Engineering

5062 Accesses

3 Citations

Relational databases play a central role in many information systems. Their schemas contain structural and behavioral entity descriptions. Databases must continuously be adapted to new requirements of a world in constant change while: (1) relational database management systems (RDBMS) do not allow inconsistencies in the schema; (2) stored procedure bodies are not meta-described in RDBMS such as PostgreSQL that consider their bodies as plain text. As a consequence, evaluating the impact of an evolution of the database schema is cumbersome, being essentially manual. We present a semi-automatic approach based on recommendations that can be compiled into a SQL patch fulfilling RDBMS constraints. To support recommendations, we designed a meta-model for relational databases easing computation of change impact. We performed an experiment to validate the approach by reproducing a real evolution on a database. The results of our experiment show that our approach can set the database in the same state as the one produced by the manual evolution in 75% less time.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Evolution of a Relational Schema and Its Impact on SQL Queries

A Generic Approach to Schema Evolution in Live Relational Databases

CoDEL – A Relationally Complete Language for Database Evolution

- Relational database

- Semi-automatic evolution

- Impact analysis

1 Introduction

Relational Database (DB) schemas contain structural entity descriptions ( e.g., tables and columns), but also sometimes descriptions of behavioral entities such as views ( i.e., named SELECT queries), stored procedures ( i.e., functions written in a programming language), triggers ( i.e., entity listening to events happening on a table and reacting to them), etc. Structural and behavioral entities are referencing each others through foreign keys, function calls, or table/column references in queries.

Continuous evolution happens on databases [ 21 ] to adapt to new requirements of a world in constant change. When databases evolve, problems are twofold:

Issue 1: Relational database management systems (RDBMS) do not allow schema inconsistencies . The consistency of databases is ensured by the RDBMS at any moment . This feature makes the evolution of the database complicated because the database runs during the evolution and continues to ensure its consistency. For other kinds of software, the program is stopped during source code edition. Thus, the program can be temporarily in an inconsistent state.

Issue 2: Stored procedure bodies are not meta-described in RDBMS such as PostgreSQL. Unlike references between tables, columns, constraints or views that are kept and managed through metadata, stored procedures bodies are considered only as text and existing references they make are not known. This second problem slightly alters the first one as inconsistencies can be introduced but only in stored procedures (dangling references).

For example, to remove a column from a table, different cases occur:

If the column is not referenced, the change can be performed.

If the column is a primary key and is referenced by a foreign key in another table, the removal is not allowed.

If the column is referenced in a view, either the change is refused or the view must be dropped. In the latter case, views referencing the one to drop must also be transitively dropped.

If the column is referenced in a function, change can be performed but an error might arise at execution.

Cases (ii) and (iii) result from the first issue whereas the second issue leads to case (iv). This shows that the consequences of even a small change can be complex to handle, particularly cases (iii) and (iv). Such changes need to be anticipated to comply with the constraints imposed by the RDBMS. Meurice et al. studied the history of three applications using databases [ 14 ]. They conclude that the impact of renaming or removing a table or a column on programs using the database is not trivial. To ease the evolutions of the database and the management of their impacts on related programs, the authors provide a tool to detect and prevent program inconsistencies under database schema evolution. Their approach has two major drawbacks: First, only a small number of evolutions of the database are taken into account (removing and renaming table or column); second, internal programs stored in behavioral entities such as views or stored procedures are not studied.

In this paper, we propose a tool automating most modifications required after applying a change on a database, similar to a refactoring browser [ 18 , 19 ]. We do not consider only refactorings [ 5 ], which are by definition behavior preserving, but also deal with other evolutions as illustrated by the above example. Thus, we use the term change rather than refactoring .

We propose an approach based on a meta-model to provide recommendations to database architects. The architects initiate a change and, based on impact analysis, our tool proposes recommendations to the architect. Those recommendations allow the model to reach a consistent state after the change – new changes are induced and new recommendations provided until a stable state is reached. Finally, when the architect accepts the various changes, an analysis is done to generate a patch containing all the SQL queries to perform these changes.

This article is organized as follows. Section 2 sets the context and defines the vocabulary. Section 3 introduces the behavior-aware meta-model used to represent relational databases. Section 4 describes our approach based on impact computation and recommendations to generate SQL evolution patch. It also shows an example of how our approach manages such evolutions illustrated with one evolution operator. Section 5 validates our approach by using our implementation to reproduce an evolution that was performed by an architect on a real database. Section 6 discusses related work. Finally, Sect. 7 concludes this article by summarizing our results and proposing future work.

2 Setting the Context

Before getting into the meta-model and approach explanations, let us set the context in which the approach is designed.

Database Schema: The concept of database schema commonly refers to the way data are organized in a database (through tables and referential integrity constraints for relational databases). However, RDBMSs also allows one to define behavior inside the database ( e.g., stored procedure), and this behavior might be used to constrain data ( e.g., triggers or CHECK constraints). Thus, since there is a fine line between the schema as described before and the behavior, in this article when the terms database schema or schema are used, they refer to both structural and behavioral entities.

Impact of a Change: Changing a database will probably affect its structure and behavior. The impact of such a change is defined as the set of database entities that potentially need to be adapted for the change to be applied. For example, RemoveColumn ’s impact set includes constraints applied to the column.

Recommendation: Once the impact of a change has been computed, decisions might need to be taken to handle impacted entities, for example dropping views in cascade in the scenario proposed in the introduction. In the context of this paper, we call each of these potential decisions a recommendation . For example, if one wants to remove a column, we recommend to remove the NOT NULL constraint concerning this column.

Note that Bohnert and Arnold definition of impact [ 1 ] mixes the set of impacted entities and the actions to be done to fix such entities (in the context of this paper, we call these actions “recommendations”): “Identifying the potential consequences of a change, or estimating what needs to be modified to accomplish a change”. To avoid confusion, we decided to use a specific word for each part of the definition.

We identified two kinds of constraints involved in a relational database: (1) data constraints are responsible for data consistency. 5 types of such constraints are available: “primary key”, “foreign key”, “unique”, “not-null” and “check”. (2) schema constraints are responsible for schema consistency and 3 types of such constraints are available: “a table can have a single primary key”, “a column can not have the same constraints applied twice on it” and “foreign key can not reference a column that has no primary key or unique constraint”.

Database Schema Consistency: The RDBMS ensures the consistency of the database schema. This notion of consistency is characterized by the fact that schema constraints are respected and no dangling reference is allowed (except in stored procedure).

Our approach works on a model of the database schema. Using a model allows one to temporarily relax schema constraints and dangling references constraint for the sake of evolution. It allows the developer to focus on changes to be made and not on how to fulfill schema consistency constraints and avoid dangling references at any time.

Operator: An operator represents a change to the database schema. It may impact several entities and require further changes to restore the schema in a consistent state after its application. RemoveColumn is an example of operator.

Entity-Oriented Operator: An entity-oriented operator applies on an element of the model that does not represent a reference. This kind of operator has the particularity to be translatable directly as one or many SQL queries that implement it. An example of such operator is RemoveColumn .

Reference-Oriented Operator: A reference-oriented operator applies on an element of the model representing a reference. RDBMSs do not reify references. Thus, such concepts are implicit and only exist in the source code of DB entities. Because of that, they can not be directly translated as SQL queries. Instead, they need to be converted to entity-oriented operator by interpreting them and generating updated versions of the source code of concerned entities. An example of such operator is ChangeReference Target .

3 A Behavior-Aware Meta-Model for Relational Databases

This section presents our meta-model for relational databases. It takes into account both structural and behavioral entities of the database as well as their relationships.

3.1 Meta-model Objectives

As discussed in the introduction, modifying the structure of a database implies adapting the behavior ( i.e. program) depending on it. Thus, the development of the meta-model is driven by two objectives:

Model the structure and behavior of the database.

Ease the computation of entities impacted by a change.

Objective 1 is fulfilled by modeling tables, columns and constraints. We also model behavioral entities such as CRUD Footnote 1 queries, views ( i.e., named SELECT query stored in the database), stored procedures, and triggers. Objective 2 is fulfilled by reifying references between structural and behavioral entities. The details of these modeling choices are given in Sect. 3.4 .

The implementation of the meta-model is available on github Footnote 2 . The meta-model is instantiated by analysing meta-data provided by the RDBMS and parsing the source code of entities that are not meta-described. The source code of the meta-data reader Footnote 3 and the parser Footnote 4 available on github as well.

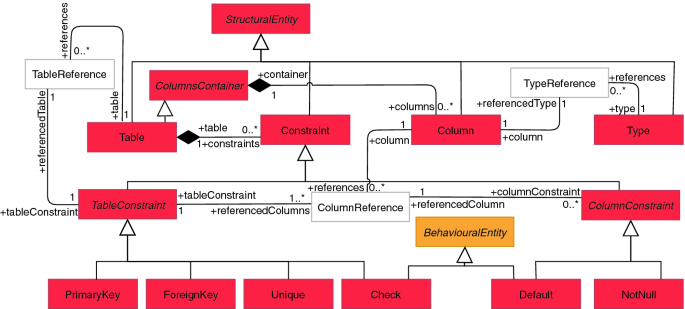

3.2 Structural Entities

Figure 1 shows the structural part of the meta-model. To ease reading, for this UML diagram and the following, inheritance links have straight corners while other links are rounded; classes modeling structural entities are red (such as Table ); classes modeling behavioral entities are orange (such as StoredProcedure ); and classes modeling references are white.

A StructuralEntity defines the structure of data held by the database or defining constraints applied on these data ( e.g., Table, Column, Referential integrity constraint, etc.). The containment relation between Table and Column is modeled through ColumnsContainer which is an abstract entity. This entity also has sub-classes in the behavioral part of the meta-model (see Sect. 3.3 ). A Column has a type. This relation is modeled through a TypeReference . A Column can also be subject to Constraint s. Depending on whether a Constraint concerns a single or multiple columns, it inherits from, respectively, ColumnConstraint or TableConstraint . Six concrete constraints inherit from Constraint : PrimaryKey , ForeignKey , Unique , Check (a developer-defined constraint, described by a boolean expression), NotNull , and Default (a default value assigned when no value is explicitly provided, it can be a literal value or an expression to compute). Note that Check and Default constraints also inherit from BehavioralEntity because they contain source code.

Structural entities of the meta-model.

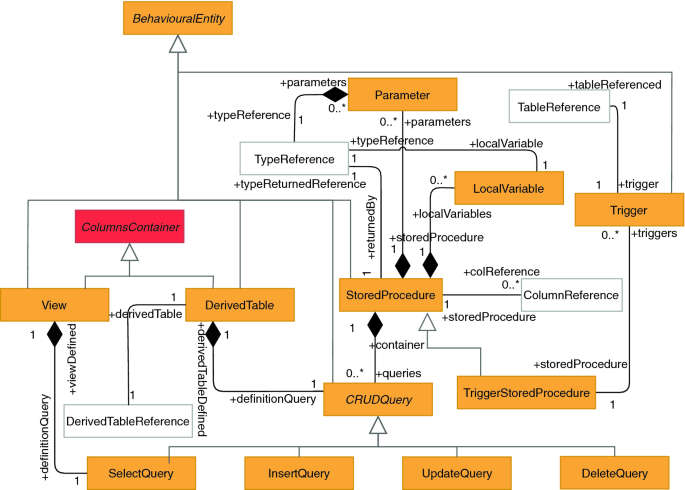

3.3 Behavioral Entities

A behavioral entity is an entity holding behavior that may interact with StructuralEntities . Figure 2 shows the behavioral part of the meta-model. The main entities are as follows.

View is a named entity holding a SELECT query. StoredProcedure is an entity holding developer-defined behavior which includes queries and calls to other StoredProcedure . A StoredProcedure contains Parameter (s) and LocalVariable (s). These entities can be referenced in clauses of queries that are contained in StoredProcedures or Views . Trigger represents actions happening in response to event on a table ( e.g., row inserted, updated or deleted). CRUDQuery (ies) contain multiple clauses depending on the query. For the sake of readability, we did not include the clause classes in the diagram. In a nutshell, the containment relation between CRUD queries and clauses are: SelectQuery contains With , Select , From , Where , Join , Union , Intersect , Except , GroupBy , OrderBy , Having , Limit , Offset , Fetch clauses. InsertQuery contains With , Into , Returning clauses. UpdateQuery contains With , Update , Set , From , Where , Returning clauses. DeleteQuery contains With , Delete , From , Where , Returning clauses. Each clause holds some Reference s to structural or behavioral entities. The references made to structural or behavioral entities from clauses are detailed in Sect. 3.4 . DerivedTable is an anonymous query usually used in another query but can also appear in the body of a StoredProcedure .

Behavioral entities of the meta-model.

3.4 References

The third and last part of the meta-model represents links between entities. It allows one to track relations between behavioral and structural entities. To simplify the approach, all references have been reified. For example, a column is thus referenced through a ColumnReference , a local variable through a LocalVariableReference and a stored procedure through a StoredProcedureCall .

4 Description of the Approach

To evolve a database, the database architect formulates changes on some of its entities. These changes impact other entities that, in turn, need to evolve to maintain the database in a consistent state. To handle evolutions induced by the initial changes, we developed a 3-step approach. The implementation of this approach is available on github Footnote 5 .

Impact computation : The set of impacted entities is computed from the change. The next step treats impacted entities one by one.

Recommendations selection : Second, depending on the change, and the impacted entity, our approach computes a set of recommendations . These recommendations are presented to the database architect that chooses one when several are proposed. This introduces new changes that will have new impacts. Steps A. and B. are recursively applied until all the impacts have been managed.

Compiling operators as a valid SQL patch : Finally, all operators (the recommendations chosen by the architect) are converted as a set of SQL queries that can be run by the RDBMS. The set of SQL queries is used to migrate the database to a state in which the initial architect ’s change has been applied.

4.1 Impact Computation

To compute the entities potentially affected by a change, one needs to collect all the entities referencing this changed entity. For example, if a Column is subject to a modification, our approach identifies the impacted entities by gathering all the ColumnReference s concerning this column. The impact of the change corresponds to the sources of all ColumnReference s since they can potentially be affected by the modification.

4.2 Recommendations Selection

For each operator, the set of impacted entities is split into disjoint sub-sets called categories . For each of these categories , one or several recommendations are available. We determinated those recommendations by analysing how to handle them according to database schema constraints.

The output of step 4.1 combined with this step (4.2) is a tree of operators where the root is the change initiated by the architect and, each other node corresponds to an operator chosen among recommendations .

4.3 Compiling Operators as a Valid SQL Patch

Once all the impact sets have been considered and recommendations chosen, our approach generates a SQL patch. This patch includes queries belonging to the SQL data definition language (DDL). These queries enable migrating the database from its original state to a state where the initial operator and all induced operators have been applied.

We stress that, during the execution of any operator of the patch, the RDBMS cannot be in inconsistent state. This constraint is fundamentally different from source code refactoring where the state of the program can be temporarily inconsistent. Therefore, each operator must lead the database to a state complying with schema consistency constraints. Else the RDBMS will forbid the execution of the SQL patch. For this purpose, the tree of operators resulting from the previous step has to be transformed into a sequence of SQL queries.

The tree resulting from the step described in Sect. 4.2 is composed of operators on references. However, DDL queries only deal with entities. Thus, reference-oriented operators are transformed into entity-oriented operators. As the RDBMS does not allow inconsistencies, operators concerning a given behavioral entity of the database are aggregated into a single operator per view and per stored procedure. This aggregation is performed in two steps: 1. all reference-oriented operators are grouped according to the entity to which belongs to the source code in which the reference appears, and 2. for each group of reference-oriented operators, we create the new version of the source code for this entity. To do so, we iterate the list of reference-oriented operators and update the part of the source code corresponding to the reference to make it reflect the change implemented by the operator. Once the iteration is complete, a new version of the source code has been built with no more dangling reference.

Those entity-oriented operators are ordered to comply with RDBMS constraints of consistency and serialized as SQL queries. Technical details related to this serialization are not provided in this paper because of space limitation.

4.4 Example

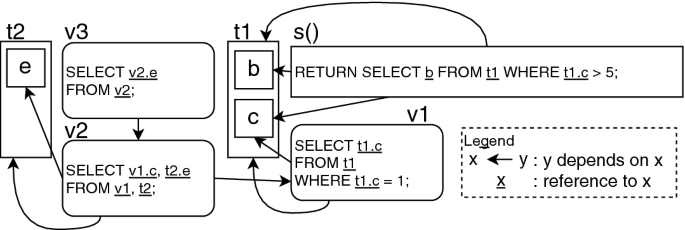

To explain the proposed process, let us take a small example. Consider the simple database shown in Fig. 3 . In this database, there are two tables, t1 with two columns t1.b , t1.c and t2 with column t2.e . Additionally, one stored procedure s() and three views v1 , v2 and v3 are present. On this figure, dependencies between entities are modeled with arrows. These dependencies arrows are a generalization over the various kinds of reference entities of the meta-model. For example, the arrow between s() and t1 is an instance of TableReference and the arrow between s() and b is an instance of ColumnReference. Views and functions have source code displayed inside their box. In this source code, a reference to another entity of the database is underlined.

Example database.

The architect wants to rename the column c of table t1 as d .

Impact Computation. First, we compute the impact of this change. Column c of table t1 is referenced three times: (i) in the WHERE clause of the SELECT query of the stored procedure s() ; (ii) in the WHERE clause of the query defining view v1 ; and (iii) in the SELECT clause of the query defining view v1 . Each of these clauses is added in the impact of renaming t1.c as t1.d .

Recommendations Selection. For each of the three impacted entities , recommendations are produced. For the WHERE clause of the stored procedure s() , the recommendation is to replace the reference to column t1.c with a new one corresponding to t1.d . The result of replacing this reference will be the following source code: RETURN SELECT b FROM t1 WHERE t1.d > 5; . From this operator, the impact is computed but is empty which stops the recursive process.

The recommendation concerning the WHERE clause of v1 is the same: replacing the reference to t1.c by a reference to t1.d . Again, there is no further impact for this operator.

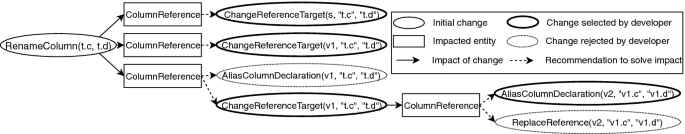

For the reference to t1.c in the SELECT clause of view v1 , two recommendations are proposed to the architect: either aliasing the column and replacing the reference ( i.e., replacing SELECT t1.c by SELECT t1.d AS c ) or replacing the reference ( i.e., replacing SELECT t1.c by SELECT t1.d ). In the latter case, the column c in view v1 becomes d ; it is no longer possible to refer to v1.c . Consequently, the second recommendation leads to rename column v1.c . If the architect choose to replace the reference without aliasing, the recursive process continues: new impacts need to be computed and new changes to be performed. The SELECT clause of view v2 is impacted. Two recommendations are again provided: either aliasing the column and replacing the reference or just replacing the reference. In this case, the architect chooses to alias the column and replace the reference. Thus, the rest of the database can continue to refer to column c of view v2 . Figure 4 illustrates this step.

Recommendations selection.

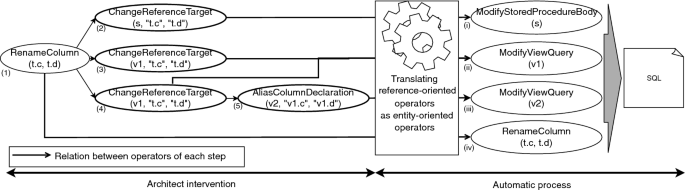

Compiling Operators as a Valid SQL Patch. Figure 5 illustrates the patch generation step. References-oriented operators resulting from the recommendations are transformed into entity-oriented operators. For this purpose, operators concerning the same sourced entity are aggregated. Operators (3) and (4) concern the same sourced entity, v1 . They are thus aggregated into ModifyViewQuery(v1) . At the end, there is a single operator per entity to be modified.

The resulting list of operators is ordered and converted to a SQL patch.

5 Experiment

Our university department uses an information system to manage its members, teams, thematic groups, etc. with 95 tables, 63 views, 109 stored procedures and 20 triggers. This information system is developed by a database architect. Before each migration, he prepares a road map containing, in natural language, the list of operators initially planned for the migration. We observed that these road maps are not complete or accurate [ 4 ]. Following a long manual process, the architect writes a SQL patch ( i.e., a text file containing queries) to migrate from one version of the database to the next one.

Compiling operators as a valid SQL patch.

The architect gave us access to these patches to do a post-mortem analysis of the DB evolutions. One of the patches implements the renaming of a column belonging to a table that is central to the DB. This is interesting because it is a non-trivial evolution.

We had the opportunity to record the architect ’s screen during this migration [ 4 ]. We observed that the architect used a trial-and-error process to find dependencies between entities of the database. He implements part of the patch and runs it in a transaction that is always rolled back. When the patch fails in between, the architect uses the gained knowledge to correct the SQL patch. Using this methodology, the architect built incrementally the SQL patch implementing the patch during approximately 1 h. The patch is \({\sim }200\) LOC and is composed of 19 SQL statements. To validate our approach, we regenerate this SQL patch with our tool but without the architect ’s expertise. Then, we compare our resulting database with the one obtained by the architect.

5.1 Experimental Protocol

The goals of the experiment are multiple: (i) to illustrate on a concrete case the generation of a SQL patch; (ii) to compare the database resulting from our approach with the one originally written by the architect; and (iii) to estimate the time required to generate a SQL patch as compared to the manual generation.

Based on the road map written by the architect and the comments in the patch we extracted the operators initiated by the architect during this migration. A discussion with the architect allowed us to validate the list of initial operators: RenameColumn(person.uid, login) , RemoveFunction(key_for_uid(varchar)) , RemoveFunction(is_responsible_of(int4)) , RemoveFunction(is_responsible_of(int4,int4)) , Re- nameFunction(uid(integer), login(integer)) , RenameLocalVariable(login.uidperson, login.loginperson) , RemoveView(test_member_view) . Details on these operators can be found at: https://hal.inria.fr/hal-02504949v1.

The experiment consists in choosing these operators in our tool and following the recommendations it proposes. Potentially several recommendations might be proposed, particularly as whether to create aliases in some referencing queries or to rename various columns in cascade (see example in Sect. 4.4 ). The architect told us that, as a rule, he preferred to avoid using aliases and renamed the columns. These were the only decision we had to do during the experiment.

We finished the experiment by executing the SQL patch generated by our tool on an empty (no data) copy of the database. Note that having no data in the database to test the patch might be a problem for operators modifying data ( e.g., changing the type of a column implies converting data to the new type). However, in the case of our experiment no operator modifies data stored in the database. First, we checked whether the generated patch ran without errors. Second, we compared the state of the database after the architect ’s migration and ours. For this, we generated a dump of the SQL schema of both databases and compared these two dumps using a textual diff tool. Third, we also considered the time we spent on our migration and the one used by the architect when he did his.

5.2 Results

We entered the seven operators listed previously in our tool and let it guide us through the decision process to generate the SQL migration patch.

Fifteen decisions were taken to choose among the proposed recommendations. They all concerned the renaming or aliasing of column references. From this process, the tool generated a SQL patch of \({\sim }270\) LOC and 27 SQL statements.

To answer the goals of the experiment listed previously: (i) The generated SQL patch was successfully applied on the database. (ii) The diff of the two databases (one being the result of the hand-written patch and the other being the result of the generated patch) showed a single difference: a comment in one function is modified in the hand-written version. Such changes are not taken into account by our approach. (iii) Encoding the list of changes and taking decisions took approximately 15 min. This corresponds to about \(25\%\) of the time necessary to the architect who has a very good knowledge of his database to obtain the same result.

5.3 Discussion

Validating tools predicting the impact of a software change is not easy. Evidence of that claim can be found in Lehnert’s meta-review [ 10 ]. On the 18 approaches reviewed by Lehnert using either call graphs or program dependency graph techniques, only six have experimental results about the size of the system, time, precision and recall. And only one of these has results on all the metrics together.

Accessing industrial databases with their evolutions is more difficult than accessing source code. Since databases are usually at the core of company business, companies are reluctant to provide their schema. The database schema evolutions are not systematically recorded in tools such as version control systems (VCS) probably because the integration between relational database and VCS is poor. Finding database administrators willing to devote some time to our experiment can also be challenging.

It is also possible to analyze the co-evolution between the source code of a database and the source code of its clients. Analyzing only the behavior inside the database has the advantage that the precision is better as queries are usually not built dynamically. When queries are built dynamically via string concatenation, it is hard to determinate what query is executed in the end. However, it is possible to build query dynamically from inside the database (via PERFORM query). We do not handle these kinds of query at the moment but it would be possible to use an approach similar to Meurice et al. approach [ 14 ].

Note that our approach has been applied on AppSI database but is does not rely on AppSI specificities. DBEvolution relies on the meta-model and operators definitions to provide recommendations for a given change. We can import other databases as model in our tool. For example, we were able to load Liquidfeedback database schema Footnote 6 in our tool and we can use DBEvolution on it to get recommendations.

6 Related Work

Our work needs to be compared to impact analysis and database schema evolution research fields.

Impact Analysis. Since the first paper introducing Impact Analysis by Bohnert and Arnold [ 1 ], the research field has been widely investigated by the scientific community. Meta-analyses on this topic exist, e.g., Lehnert did a review of software change impact analysis in 2011 [ 10 ]. We focus on work adapting impact analysis techniques to relational databases as discussed below.

Karahasanovic and Sjøberg proposed a tool, called SEMT, to find impacts of object-database schema changes on applications [ 9 ]. Their tool allows one to identify and visualize the impact. It uses an improved version of the transitive closure algorithm. It also provides a language to graphically walk the impact graph.

Gardikiotis and Malevris [ 6 ] proposes an approach to estimate the impact of a database schema change on the operability of a web application. To achieve that, they proposed a tool named DaSIAn (Database Schema Impact Analyzer) based on their approach. This tool finds CRUD queries and stored procedures affected by a change on the database schema. The authors also presented an approach assessing impact on client applications from schema changes [ 7 ]. They used this approach to assess both affected source code statements and affected test suites in the application using the database after a change in the database.

Maul et al. [ 13 ] created a static analysis technique to assess the impact of changing a relational database on its object-oriented software clients. They implemented Schema Update Impact Tool Environment (SUITE) which takes the source code of the application using the database and a model of the database schema as input. Then, they queried this model to find out the part of the source code application impacted when modifying an entity of the database.

Nagy et al. [ 15 ] compared two methods for computing dependencies between stored procedures and tables in a database: One using Static Execute After/Before relations [ 8 ] and the other analysing CRUD queries and schema to find database access and propagate this dependency at the stored procedure level. The authors concluded that the two approaches provide different results and should thus be used together to assess dependencies safely.

Liu et al. [ 11 , 12 ], proposed a graph called attribute dependency graph to identify dependencies between columns in a database and parts of client software source code using it. They evaluated their approach on 3 databases and their clients written in PHP. Their tool presents to the architect an overview of a change impact as a graph.

Similarly to approaches covered by Lehnert meta-analysis, the validations for impact analysis on databases are usually quite weak because it is a difficult task. To position our approach, it uses static analysis to determine the impact of a change on an entity. This information is directly available in our model because we reify the references between entities. As explained previously, our approach considers that if you change an entity, all entities referencing it are potentially impacted. That set of impacted entities is decomposed into categories and a recommendation is provided for each of them.

Recommendations for Relational Database Schema Evolution. Sjøberg’s work [ 20 ] quantifies schema evolution. They studied the evolution of a relational database and its application forming a health management system during 18 months. To do so they used “the Thesaurus” tool which analyses how many screens, actions and queries may be affected by a potential schema change. This tool does not propose recommendations to users but rather shows code locations to be manually modified. Their results suggest that change management tools are needed to handle this evolution.

Curino et al. [ 2 , 3 ] proposed PRISM, a tool suite allowing one to predict and evaluate schema modification. PRISM also propose database migration feature through rewriting queries and application to take into account the modification. To do so, they provide a language to express schema modification operators, automatic data migration support and documentation of changes applied on the database. They evaluated their approach and tool on Wikimedia showing it is efficient. In PRISM approach, the operators are limited to modification on structural entities of the database, whereas our approach also deals with change on behavioral entities.

Papastefanatos et al. [ 16 , 17 ] developed Hecataeus, a tool representing the database structural entities, the queries and the views, as a uniform directed graph. Hecataeus allows user to create an arbitrary change and to simulate it to predict its impact. From this perspective, it is close to the aim of our tool. The main difference is that our approach ensures no inconsistency is created at some point during database evolution. It is not clear how Hecataeus addresses this problem in these papers.

Meurice et al. [ 14 ] presented a tool-supported approach that can analyze how the client source code and database schema co-evolved in the past and to simulate a database change to determine client source code locations that would be affected by the change. Additionally, the authors provide strategies (recommendations and warnings) for facing database schema change. Their recommendations describe how to modify client program source code depending on the change performed on the database. The approach presented has been evaluated by comparing historical evolution of a database and its client application with recommendations provided by their approach. From the historical analysis the authors observed that the task of manually propagating database schema change to client software is not trivial. Some schema changes required multiple versions of the software application to be fully propagated. Others were never fully propagated. We argue that, according to what we observed in previous research [ 4 ] and the research made in this article, propagating structural change to behavior entities of the database is a hard task as well.

Compared to previous approaches, DBEvolution brings as a novelty that any entity can be subject to an evolution operator. In particular, stored procedures can be modified and DBEvolution will provide recommandations for the modification. The other way around, modifying a structural entity will provide recommandations to accomodate stored procedures with the change. Such capability is absent from above approaches.

7 Conclusion

We have developed an approach to manage relational database evolution. This approach addresses the two main constraints that a RDBMS sets: 1. no schema inconsistency is allowed during the evolution and 2. stored procedures bodies are not described by meta-data . Addressing these problems allowed us to provide three main contributions: i. a meta-model for relational databases easing the computation of the impact of a change, ii. a semi-automatic approach to evolve a database while managing the impact, and iii. an experiment to assess that our approach can reproduce a change that happened on a database used by a real project with a gain of 75% of the time. These results show that this approach is promising to build the future of relational databases integrated development environments.

Our future works are threefold. First, we would like to extend the set of operators supported by our implementation. More specifically, we want higher-level operators such as: historize column which will modify the database schema to keep track of the history of the values of a column through the database life.

Second, the evolution has been reproduced by us which might bias our results in terms of time to implement a change. Indeed, as we have little knowledge on the DB, it is possible that an expert using our tool would be faster than us. Thus, we would like to do another experiment where we compare the performances of an architect using our tool with the performances of an architect using typical tools to implement an evolution.

Finally, some operators will require to transform or move data stored in the database (for example moving a column from a table to another). We plan to support such operators in our methodology by generating CRUD queries in addition to the DDL queries already generated by the operators.

Create Read Update Delete query in SQL: INSERT, SELECT, UPDATE, DELETE .

https://github.com/juliendelplanque/FAMIXNGSQL .

https://github.com/olivierauverlot/PgMetadata .

https://github.com/juliendelplanque/PostgreSQLParser .

https://github.com/juliendelplanque/DBEvolution .

https://liquidfeedback.org .

Arnold, R.S., Bohnert, S.: Software Change Impact Analysis. IEEE Computer Society Press, Los Alamitos (1996)

Google Scholar

Curino, C., Moon, H.J., Zaniolo, C.: Automating database schema evolution in information system upgrades. In: Proceedings of the 2nd International Workshop on Hot Topics in Software Upgrades, p. 5. ACM (2009)

Curino, C.A., Moon, H.J., Zaniolo, C.: Graceful database schema evolution: the prism workbench. Proc. VLDB Endow. 1 (1), 761–772 (2008)

Article Google Scholar

Delplanque, J., Etien, A., Anquetil, N., Auverlot, O.: Relational database schema evolution: an industrial case study. In: 2018 IEEE International Conference on Software Maintenance and Evolution (ICSME) (2018). https://doi.org/10.1109/ICSME.2018.00073 . http://rmod.inria.fr/archives/papers/Delp18c-ICSME-DatabaseSchemaEvolution.pdf

Fowler, M., Beck, K., Brant, J., Opdyke, W., Roberts, D.: Refactoring: Improving the Design of Existing Code. Addison Wesley, Boston (1999)

Gardikiotis, S.K., Malevris, N.: DaSIAn: a tool for estimating the impact of database schema modifications on web applications. In: 2006 IEEE International Conference on Computer Systems and Applications, pp. 188–195. IEEE (2006)

Gardikiotis, S.K., Malevris, N.: A two-folded impact analysis of schema changes on database applications. Int. J. Autom. Comput. 6 (2), 109–123 (2009)

Jász, J., Beszédes, Á., Gyimóthy, T., Rajlich, V.: Static execute after/before as a replacement of traditional software dependencies. In: 2008 IEEE International Conference on Software Maintenance, pp. 137–146. IEEE (2008)

Karahasanovic, A., Sjoberg, D.I.: Visualizing impacts of database schema changes-a controlled experiment. In: 2001 Proceedings IEEE Symposia on Human-Centric Computing Languages and Environments, pp. 358–365. IEEE (2001)

Lehnert, S.: A review of software change impact analysis, p. 39. Ilmenau University of Technology (2011)

Liu, K., Tan, H.B.K., Chen, X.: Extraction of attribute dependency graph from database applications. In: 2011 18th Asia Pacific Software Engineering Conference (APSEC), pp. 138–145. IEEE (2011)

Liu, K., Tan, H.B.K., Chen, X.: Aiding maintenance of database applications through extracting attribute dependency graph. J. Database Manage. 24 (1), 20–35 (2013)

Maule, A., Emmerich, W., Rosenblum, D.: Impact analysis of database schema changes. In: 2008 ACM/IEEE 30th International Conference on Software Engineering, ICSE 2008, pp. 451–460. IEEE (2008)

Meurice, L., Nagy, C., Cleve, A.: Detecting and preventing program inconsistencies under database schema evolution. In: 2016 IEEE International Conference on Software Quality, Reliability and Security (QRS), pp. 262–273. IEEE (2016)

Nagy, C., Pantos, J., Gergely, T., Besz’edes, A.: Towards a safe method for computing dependencies in database-intensive systems. In: 2010 14th European Conference on Software Maintenance and Reengineering (CSMR), pp. 166–175. IEEE (2010)

Papastefanatos, G., Anagnostou, F., Vassiliou, Y., Vassiliadis, P.: Hecataeus: A what-if analysis tool for database schema evolution. In: 2008 12th European Conference on Software Maintenance and Reengineering, CSMR 2008, pp. 326–328. IEEE (2008)

Papastefanatos, G., Vassiliadis, P., Simitsis, A., Vassiliou, Y.: HECATAEUS: regulating schema evolution. In: 2010 IEEE 26th International Conference on Data Engineering (ICDE), pp. 1181–1184. IEEE (2010)

Roberts, D., Brant, J., Johnson, R.E., Opdyke, B.: An automated refactoring tool. In: Proceedings of ICAST 1996, Chicago, IL, April 1996

Roberts, D.B.: Practical Analysis for Refactoring. Ph.D. thesis, University of Illinois (1999). http://historical.ncstrl.org/tr/pdf/uiuc_cs/UIUCDCS-R-99-2092.pdf

Sjøberg, D.: Quantifying schema evolution. Inf. Softw. Technol. 35 (1), 35–44 (1993)

Skoulis, I., Vassiliadis, P., Zarras, A.: Open-source databases: within, outside, or beyond Lehman’s laws of software evolution? In: Jarke, M., et al. (eds.) CAiSE 2014. LNCS, vol. 8484, pp. 379–393. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-07881-6_26

Chapter Google Scholar

Download references

Author information

Authors and affiliations.

Univ. Lille, CNRS, Centrale Lille, Inria UMR 9189 - CRIStAL, Lille, France

Julien Delplanque, Anne Etien, Nicolas Anquetil & Stéphane Ducasse

INRIA Lille Nord Europe, Villeneuve d’Ascq, France

You can also search for this author in PubMed Google Scholar

Corresponding author

Correspondence to Julien Delplanque .

Editor information

Editors and affiliations.

TU Wien, Vienna, Austria

Schahram Dustdar

University of Toronto, Toronto, ON, Canada

Université Paris 1 Panthéon-Sorbonne, Paris, France

Camille Salinesi

Université Grenoble Alpes, Saint-Martin-d’Hères, France

Dominique Rieu

Rights and permissions

Reprints and permissions

Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper.

Delplanque, J., Etien, A., Anquetil, N., Ducasse, S. (2020). Recommendations for Evolving Relational Databases. In: Dustdar, S., Yu, E., Salinesi, C., Rieu, D., Pant, V. (eds) Advanced Information Systems Engineering. CAiSE 2020. Lecture Notes in Computer Science(), vol 12127. Springer, Cham. https://doi.org/10.1007/978-3-030-49435-3_31

Download citation

DOI : https://doi.org/10.1007/978-3-030-49435-3_31

Published : 03 June 2020

Publisher Name : Springer, Cham

Print ISBN : 978-3-030-49434-6

Online ISBN : 978-3-030-49435-3

eBook Packages : Computer Science Computer Science (R0)

Share this paper

Anyone you share the following link with will be able to read this content:

Sorry, a shareable link is not currently available for this article.

Provided by the Springer Nature SharedIt content-sharing initiative

- Publish with us

Policies and ethics

- Find a journal

- Track your research

E.F. Codd and the Success of the Relational Database Model

Edgar Frank Codd (1923-2003)

On August 23 , 1923 , English computer scientist Edgar Frank “Ted” Codd was born. His main achievement besides many contributions to computer science was the invention of the relational model for database management , the theoretical basis for relational databases .

“At the time, Nixon was normalizing relations with China. I figured that if he could normalize relations, then so could I.” — E. F. Codd [5]

When you talk about databases today, usually you are referring to relational databases that store their data within tables, interconnected via so-called keys. Of course there are also modern alternatives such as e.g. graph based databases , but relational databases are widespread and rather common today. And this is also thanks to E.F. Codd and his relational algebra .

Edgar Frank Codd – Early Years

Edgar Frank Codd was born the youngest of seven children in Portland Bill , in Dorset, England , in 1923 . His father was a leather manufacturer , his mother a schoolteacher . After attending Poole Grammar School , he studied mathematics and chemistry at Exeter College, Oxford , before serving as a pilot in the Royal Air Force during the Second World War . In 1948 at age 25, he moved to New York to work for IBM as a mathematical programmer . In 1953 , angered by Senator Joseph McCarthy , Codd moved to Ottawa, Canada . While in Canada , he established a computing center for the Canadian guided missile program. A decade later he returned to the U.S . and received his doctorate in computer science from the University of Michigan in Ann Arbor . His thesis was about self-replication in cellular automata , extending on work of von Neumann and showing that a set of eight states was sufficient for universal computation and construction.

A Relational Model of Data

Two years later he moved to San Jose, California , to work at IBM ‘s San Jose Research Laboratory , where he continued to work until the 1980s . There he found existing data management systems “seat-of-the-pants, with no theory at all,” he recalled in one interview . “ I began reading documentation, ” Codd said, “ and I was disgusted. ” [2]. Subsequently, Codd worked out his theories of data arrangement, issuing his paper “ A Relational Model of Data for Large Shared Data Banks ” in 1970 , after an internal IBM paper one year earlier. In fact, the 1970 paper became one of the most important research papers in computer history . Codd believed that all the information in a database should be represented as values in the rows and columns of tables , and that no information should be represented by pointers or connections among records.[2] To his frustration, IBM largely ignored his work, as the company was investing heavily at the time in commercializing a different type of database system , the IMS /DB [1].

Then IBM included in its Future Systems project a System R subproject — but put in charge of it developers who were not thoroughly familiar with Codd’s ideas , and isolated the team from Codd . As a result, they did not use Codd’s own Alpha language but created a non-relational one, SEQUEL . Even so, SEQUEL was so superior to pre-relational systems that it was copied, in 1979 , based on pre-launch papers presented at conferences, by Larry Ellison , of Relational software Inc, in his Oracle Database , which actually reached market before SQL /DS — because of the then-already proprietary status of the original name, SEQUEL had been renamed SQL . System R was a success, and in 1981 IBM announced its first relational database product , SQL /DS. DB2 , initially for large mainframe machines, was announced in 1983 [3].

Further Development of the Relational Data Model

Codd continued to develop and extend his relational model , sometimes in collaboration with Chris Date . One of the normalized forms, the Boyce–Codd normal form , is named after him. Codd’s theorem, a result proven in his seminal work on the relational model , equates the expressive power of relational algebra and relational calculus (both of which, lacking recursion , are strictly less powerful than first-order logic ). As the relational model started to become fashionable in the early 1980s , Codd fought a sometimes bitter campaign to prevent the term being misused by database vendors who had merely added a relational veneer to older technology. As part of this campaign, he published his 12 rules to define what constituted a relational database . This made his position in IBM increasingly difficult, so he left to form his own consulting company with Chris Date and others.

Turing Award

Nevertheless, Codd was appointed IBM Fellow in 1976 . In 1981 , Codd was honoured with the Turing Award , the most prestigious award in computer science similar to the Fields medal in mathematics . During the 1990s , his health deteriorated and he ceased work. Codd died of heart failure at his home in Williams Island , Florida , at the age of 79 on April 18, 2003 .

References and Further Reading:

- [1] New York Times Obituary on E.F. Codd

- [2] E.F. Codd at TriplexDB2

- [3] E.F. Codd at IBM Research

- [4] E. F. Codd at Wikidata

- [5] Ted Codd, Father of the Relational Database, dead at 79, Burlson Consulting

- [6] Codd, Edgar Frank (9 November 1981). “1981 Turing Award Lecture – Relational Database: A Practical Foundation for Productivity” . Communications of the ACM . 25 (2): 109–117.

- [7] “12 simple rules: How Ted Codd transformed the humble database” . The Register .

- [8] Codd, Edgar Frank (1982). “Relational database: A practical foundation for productivity” . Communications of the ACM . 25 (2): 109–117.

- [9] Edgar F. Codd at the Mathematics Genealogy Project

- [10] Mark Grimes, BZAN 6356 Lecture 2.1: A Brief Overview of Relational Databases, Professor Mark Grimes @ youtube

- [11] Timeline of Turing Award winners, via Wikidata

Harald Sack

Related posts, lewis fry richardson and the accurate weather forecast, the ibm system/360 and the use of microcode, herman kahn and the consequences of nuclear war, jule gregory charney and the science of weather prediction, leave a reply cancel reply.

Your email address will not be published. Required fields are marked *

Further Projects

- February (28)

- January (30)

- December (30)

- November (29)

- October (31)

- September (30)

- August (30)

- January (31)

- December (31)

- November (30)

- August (31)

- February (29)

- February (19)

- January (18)

- October (29)

- September (29)

- February (5)

- January (5)

- December (14)

- November (9)

- October (13)

- September (6)

- August (13)

- December (3)

- November (5)

- October (1)

- September (3)

- November (2)

- September (2)

- Entries RSS

- Comments RSS

- WordPress.org

Legal Notice

- Privacy Statement

A comparative study of relational database and key-value database for big data applications

Ieee account.

- Change Username/Password

- Update Address

Purchase Details

- Payment Options

- Order History

- View Purchased Documents

Profile Information

- Communications Preferences

- Profession and Education

- Technical Interests

- US & Canada: +1 800 678 4333

- Worldwide: +1 732 981 0060

- Contact & Support

- About IEEE Xplore

- Accessibility

- Terms of Use

- Nondiscrimination Policy

- Privacy & Opting Out of Cookies

A not-for-profit organization, IEEE is the world's largest technical professional organization dedicated to advancing technology for the benefit of humanity. © Copyright 2024 IEEE - All rights reserved. Use of this web site signifies your agreement to the terms and conditions.

- Accessibility Policy

- Skip to content

- QUICK LINKS

- Oracle Cloud Infrastructure

- Oracle Fusion Cloud Applications

- Download Java

- Careers at Oracle

Database 23ai: Feature Highlights

Learn how Oracle Database 23ai brings AI to your data, making it simple to power app development and mission critical workloads with AI. Each week, we'll share a new feature of Oracle Database 23ai with examples so you can get up and running quickly. Save this page and check back each week to see new highlighted features.

Larry Ellison and Juan Loaiza discuss the GenAI strategy behind Oracle Database 23ai.

Oracle Database 23ai Feature highlights for developers

Check out some of the features we’ve built with developers in mind:

AI Vector Search brings AI to your data by letting you build generative AI pipelines using your business data, directly within the database. Easy-to-use native vector capabilities let your developers build next-gen AI applications that combine relational database processing with similarity search and retrieval augmented generation. Running vector search directly on your business data eliminates data movement as well as the complexity, cost, and data consistency headaches of managing and integrating multiple databases.

Other features developers should get to know include:

- JSON Relational Duality

- Property Graph

Previously highlighted features

- Administration/Performance

- Languages/Drivers

- SQL/Data Types

- Transactions/Microservices

Application availability—zero downtime for database clients

Transparent Application Continuity shields C/C++, Java, .NET, Python, and Node.js applications from the outages of underlying software, hardware, communications, and storage layers...

Automatic Transaction Rollback

If a transaction does not commit or rollback for a long time while holding row locks, it can potentially block other high-priority transactions...

DBMS_Search

DBMS_SEARCH implements Oracle Text ubiquitous search. DBMS_SEARCH makes it very easy to create a single index over multiple tables and views...

Fast Ingest enhancements

We've added enhancements to Memoptimized Rowstore Fast Ingest with support for partitioning, compressed tables, fast flush using direct writes, and direct in-memory column store population support...

Raft-based replication in Globally Distributed Database

Oracle Globally Distributed Database introduced the Raft replication feature in Oracle Database 23c. This allows us to achieve very fast (sub 3 seconds) failover with zero data loss in case of a node or a data center outage...

- SQL Analysis Report