
Neag School of Education

Educational Research Basics by Del Siegle

Types of Research

How do we know something exists? There are a number of ways of knowing…

- Sensory Experience

- Agreement with others

- Expert Opinion

- Scientific Method (we’re using this one)

The Scientific Process (replicable)

- Identify a problem

- Clarify the problem

- Determine what data would help solve the problem

- Organize the data

- Interpret the results

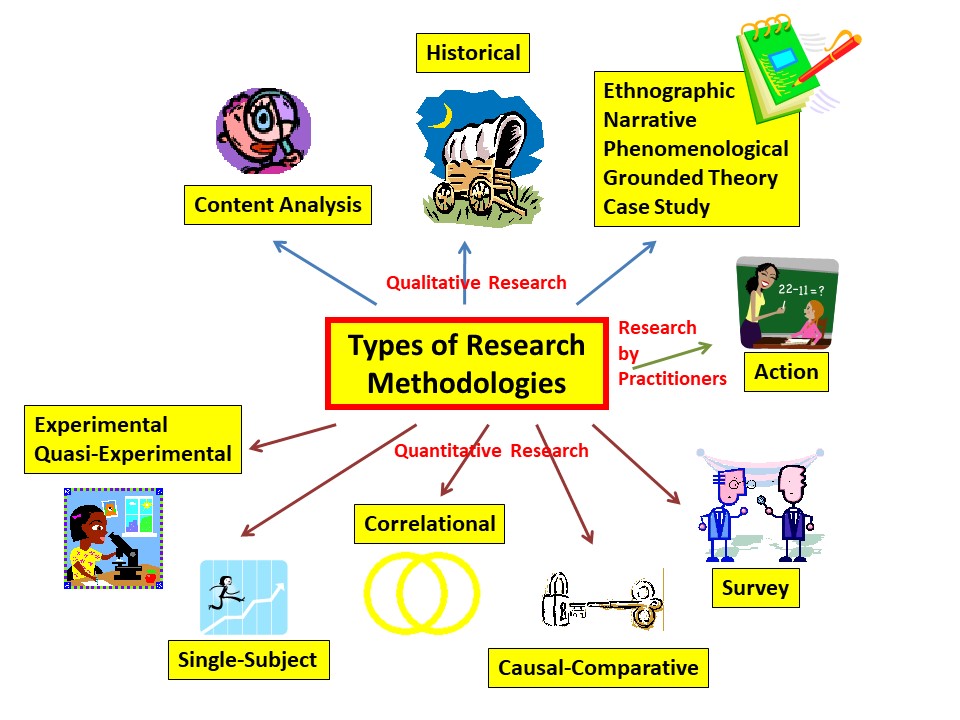

General Types of Educational Research

- Descriptive — survey, historical, content analysis, qualitative (ethnographic, narrative, phenomenological, grounded theory, and case study)

- Associational — correlational, causal-comparative

- Intervention — experimental, quasi-experimental, action research (sort of)

Researchers Sometimes Have a Category Called Group Comparison

- Ex Post Facto (Causal-Comparative): GROUPS ARE ALREADY FORMED

- Experimental: RANDOM ASSIGNMENT OF INDIVIDUALS

- Quasi-Experimental: RANDOM ASSIGNMENT OF GROUPS (oversimplified, but fine for now)
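The difference between assigning individuals and assigning intact groups can be illustrated with a short Python sketch. The roster, the function names, and the fixed seed are hypothetical and chosen only for illustration; like the list above, the sketch oversimplifies quasi-experimental designs.

```python
import random

def assign_individuals(students, seed=0):
    """Experimental: shuffle individual students, then split them into two conditions."""
    rng = random.Random(seed)
    shuffled = list(students)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"treatment": shuffled[:half], "control": shuffled[half:]}

def assign_groups(classrooms, seed=0):
    """Quasi-experimental (simplified): whole classrooms are assigned to conditions."""
    rng = random.Random(seed)
    names = sorted(classrooms)
    rng.shuffle(names)
    half = len(names) // 2
    return {"treatment": names[:half], "control": names[half:]}

# Hypothetical roster: four intact classrooms of three students each.
classrooms = {
    "Room A": ["Ana", "Ben", "Cam"],
    "Room B": ["Dee", "Eli", "Fay"],
    "Room C": ["Gus", "Hal", "Ivy"],
    "Room D": ["Jon", "Kim", "Lee"],
}
students = [s for room in classrooms.values() for s in room]

by_individual = assign_individuals(students)  # classmates may land in different conditions
by_group = assign_groups(classrooms)          # classmates always stay together
```

In the first case any two classmates can end up in different conditions; in the second, a classroom moves as a unit. In an ex post facto study neither function would be called, because the groups already exist.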

General Format of a Research Publication

- Background of the Problem (ending with a problem statement) — Why is this important to study? What is the problem being investigated?

- Review of Literature — What do we already know about this problem or situation?

- Methodology (participants, instruments, procedures) — How was the study conducted? Who were the participants? What data were collected and how?

- Analysis — What are the results? What did the data indicate?

- Results — What are the implications of these results? How do they agree or disagree with previous research? What do we still need to learn? What are the limitations of this study?

Del Siegle, PhD

Last modified 6/18/2019

- What is Educational Research? + [Types, Scope & Importance]

Education is an integral aspect of every society and in a bid to expand the frontiers of knowledge, educational research must become a priority. Educational research plays a vital role in the overall development of pedagogy, learning programs, and policy formulation.

Educational research is a spectrum that borders on multiple fields of knowledge, which means that it draws from different disciplines. As a result, the findings of this research are multi-dimensional and can be restricted by the characteristics of the research participants and the research environment.

What is Educational Research?

Educational research is a type of systematic investigation that applies empirical methods to solving challenges in education. It adopts rigorous and well-defined scientific processes in order to gather and analyze data for problem-solving and knowledge advancement.

J. W. Best defines educational research as that activity that is directed towards the development of a science of behavior in educational situations. The ultimate aim of such a science is to provide knowledge that will permit the educator to achieve his goals through the most effective methods.

The primary purpose of educational research is to expand the existing body of knowledge by providing solutions to different problems in pedagogy while improving teaching and learning practices. Educational researchers also seek answers to questions bordering on learner motivation, development, and classroom management.

Characteristics of Education Research

While educational research can take numerous forms and approaches, several characteristics define its process and approach. Some of them are listed below:

- It sets out to solve a specific problem.

- Educational research adopts primary and secondary research methods in its data collection process. This means that in educational research, the investigator relies on first-hand sources of information and secondary data to arrive at a suitable conclusion.

- Educational research relies on empirical evidence. This results from its largely scientific approach.

- Educational research is objective and accurate because it measures verifiable information.

- In educational research, the researcher adopts specific methodologies, detailed procedures, and analysis to arrive at the most objective responses.

- Educational research findings are useful in the development of principles and theories that provide better insights into pressing issues.

- This research approach combines structured, semi-structured, and unstructured questions to gather verifiable data from respondents.

- Many educational research findings are documented for peer review before their presentation.

- Educational research is interdisciplinary in nature because it draws from different fields and studies complex factual relations.

Types of Educational Research

Educational research can be broadly categorized into three types: descriptive research, correlational research, and experimental research. Each of these has distinct and overlapping features.

Descriptive Educational Research

In this type of educational research, the researcher merely seeks to collect data with regard to the status quo or present situation of things. The core of descriptive research lies in defining the state and characteristics of the research subject under study.

Because of its emphasis on the “what” of the situation, descriptive research can be termed an observational research method. In descriptive educational research, the researcher makes use of quantitative research methods including surveys and questionnaires to gather the required data.

Typically, descriptive educational research is the first step in solving a specific problem. Here are a few examples of descriptive research:

- A reading program to help you understand student literacy levels.

- A study of students’ classroom performance.

- Research to gather data on students’ interests and preferences.

From these examples, you would notice that the researcher does not need to create a simulation of the natural environment of the research subjects; rather, he or she observes them as they engage in their routines. Also, the researcher is not concerned with creating a causal relationship between the research variables.

Correlational Educational Research

This is a type of educational research that seeks insights into the statistical relationship between two research variables. In correlational research, the researcher studies two variables intending to establish a connection between them.

A correlation can be positive, negative, or non-existent. A positive correlation occurs when an increase in variable A is accompanied by an increase in variable B, while a negative correlation occurs when an increase in variable A is accompanied by a decrease in variable B.

When a change in one variable is not accompanied by a corresponding change in the other, the correlation is non-existent. Also, in correlational educational research, the researcher does not need to alter the natural environment of the variables; that is, there is no need for external conditioning.

Examples of educational correlational research include:

- Research to discover the relationship between students’ behaviors and classroom performance.

- A study into the relationship between students’ social skills and their learning behaviors.
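The direction and strength of a correlation are usually summarized with a coefficient. The sketch below computes Pearson's r, one common choice that the text above does not itself name, using only the Python standard library. The variable names and the small data set are invented for illustration.

```python
from math import sqrt

def pearson_r(x, y):
    """Return Pearson's correlation coefficient for two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of cross-products of deviations from the means.
    cross = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return cross / (spread_x * spread_y)

# Hypothetical data for five students.
study_hours = [1, 2, 3, 4, 5]
test_scores = [52, 58, 65, 70, 78]
absences = [9, 7, 6, 3, 1]

r_positive = pearson_r(study_hours, test_scores)  # close to +1: the variables rise together
r_negative = pearson_r(study_hours, absences)     # close to -1: one rises as the other falls
```

A value near zero would indicate a non-existent (linear) correlation. In every case the coefficient describes association only; it does not show that one variable causes the other.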

Experimental Educational Research

Experimental educational research is a research approach that seeks to establish the causal relationship between two variables in the research environment. It adopts quantitative research methods in order to determine the cause and effect in terms of the research variables being studied.

Experimental educational research typically involves two groups – the control group and the experimental group. The researcher introduces some changes to the experimental group such as a change in environment or a catalyst, while the control group is left in its natural state.

The introduction of these catalysts allows the researcher to determine the causative factor(s) in the experiment. At the core of experimental educational research lies the formulation of a hypothesis, so the overall research design relies on statistical analysis to support or reject this hypothesis.

Examples of Experimental Educational Research

- A study to determine the best teaching and learning methods in a school.

- A study to understand how extracurricular activities affect the learning process.
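As a minimal sketch of the statistical analysis step, the code below compares an experimental group with a control group using a permutation test. This is one possible technique, chosen here because it needs only the standard library; the article does not prescribe a specific test. The scores, the function name, and the resample count are all hypothetical.

```python
import random
from statistics import mean

def permutation_test(experimental, control, n_resamples=5000, seed=0):
    """Estimate a two-sided p-value for the difference in group means.

    Group labels are shuffled repeatedly; the p-value is the share of
    shuffles whose mean difference is at least as large as the observed one.
    """
    rng = random.Random(seed)
    observed = mean(experimental) - mean(control)
    pooled = list(experimental) + list(control)
    k = len(experimental)
    extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)
        diff = mean(pooled[:k]) - mean(pooled[k:])
        if abs(diff) >= abs(observed):
            extreme += 1
    # Add-one correction keeps the estimate strictly above zero.
    return observed, (extreme + 1) / (n_resamples + 1)

# Hypothetical post-test scores after a new teaching method was introduced.
experimental_scores = [78, 85, 82, 90, 88, 84]
control_scores = [72, 75, 70, 80, 74, 77]

observed_diff, p_value = permutation_test(experimental_scores, control_scores)
```

A small p-value suggests the observed difference would be unusual if the intervention had no effect, which supports rejecting the no-effect hypothesis. It does not prove the hypothesis, and the conclusion is only as sound as the random assignment behind the two groups.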

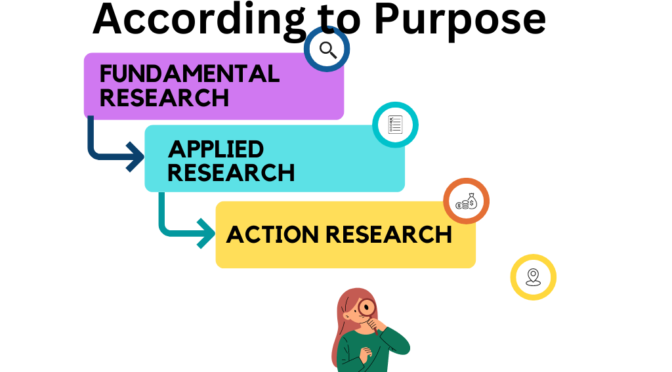

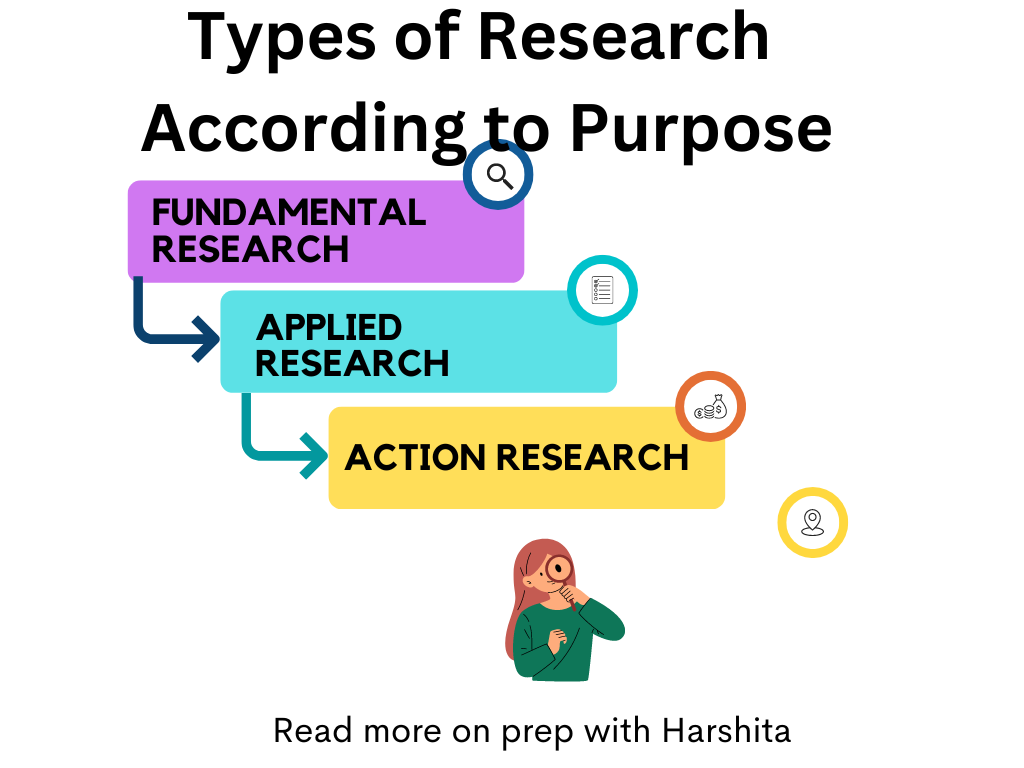

Based on functionality, educational research can be classified into fundamental research, applied research, and action research. The primary purpose of fundamental research is to provide insights into the research variables; that is, to gain more knowledge. Fundamental research does not solve any specific problems.

Just as the name suggests, applied research is a research approach that seeks to solve specific problems. Findings from applied research are useful in solving practical challenges in the educational sector such as improving teaching methods, modifying learning curricula, and simplifying pedagogy.

Action research is tailored to solve immediate problems that are specific to a context, such as educational challenges in a local primary school. The goal of action research is to proffer solutions that work in this context, not to solve general or universal challenges in the educational sector.

Importance of Educational Research

- Educational research plays a crucial role in knowledge advancement across different fields of study.

- It provides answers to practical educational challenges using scientific methods.

- Findings from educational research, especially applied research, are instrumental in policy reformulation.

- For the researcher and other parties involved in this research approach, educational research improves learning, knowledge, skills, and understanding.

- Educational research improves teaching and learning methods by empowering you with data to help you teach and lead more strategically and effectively.

- Educational research helps students apply their knowledge to practical situations.

Educational Research Methods

- Surveys/Questionnaires

A survey is a research method that is used to collect data from a predetermined audience about a specific research context. It usually consists of a set of standardized questions that help you to gain insights into the experiences, thoughts, and behaviors of the audience.

Surveys can be administered physically using paper forms, face-to-face conversations, telephone conversations, or online forms. Online forms are easier to administer because they help you to collect accurate data and to reach a larger sample size. Creating your online survey on data-gathering platforms like Formplus also allows you to analyze survey respondents’ data easily.

In order to gather accurate data via your survey, you must first identify the research context and the research subjects that would make up your data sample. Next, you need to choose an online survey tool like Formplus to help you create and administer your survey with little or no hassle.

- Interviews

An interview is a qualitative data collection method that helps you to gather information from respondents by asking questions in a conversation. It is typically a face-to-face conversation with the research subjects in order to gather insights that will prove useful to the specific research context.

Interviews can be structured, semi-structured, or unstructured. A structured interview is a type of interview that follows a premeditated sequence; that is, it makes use of a set of standardized questions to gather information from the research subjects.

An unstructured interview is a type of interview that is fluid; that is, it is non-directive. During an unstructured interview, the researcher does not make use of a set of predetermined questions; rather, he or she spontaneously asks questions to gather relevant data from the respondents.

A semi-structured interview is the mid-point between structured and unstructured interviews. Here, the researcher makes use of a set of standardized questions, yet he or she still makes inquiries outside these premeditated questions as dictated by the flow of the conversation in the research context.

Data from interviews can be collected using audio recorders, digital cameras, surveys, and questionnaires.

- Observation

Observation is a method of data collection that entails systematically selecting, watching, listening, reading, touching, and recording behaviors and characteristics of living beings, objects, or phenomena. In the classroom, teachers can adopt this method to understand students’ behaviors in different contexts.

Observation can be qualitative or quantitative in approach. In quantitative observation, the researcher aims at collecting statistical information from respondents, and in qualitative observation, the researcher aims at collecting qualitative data from respondents.

Qualitative observation can further be classified into participant or non-participant observation. In participant observation, the researcher becomes a part of the research environment and interacts with the research subjects to gather information about their behaviors. In non-participant observation, the researcher does not actively take part in the research environment; that is, he or she is a passive observer.

How to Create Surveys and Questionnaires with Formplus

- On your dashboard, choose the “create new form” button to access the form builder. You can also choose from the available survey templates and modify them to suit your need.

- Save your online survey to access the form customization section. Here, you can change the physical appearance of your form by adding preferred background images and inserting your organization’s logo.

- Formplus has a form analytics dashboard that allows you to view insights from your data collection process such as the total number of form views and form submissions. You can also use the reports summary tool to generate custom graphs and charts from your survey data.

Steps in Educational Research

Like other types of research, educational research involves several steps. Following these steps allows the researcher to gather objective information and arrive at valid findings that are useful to the research context.

- Define the research problem clearly.

- Formulate your hypothesis. A hypothesis is the researcher’s reasonable guess based on the available evidence, which he or she seeks to test in the course of the research.

- Determine the methodology to be adopted. Educational research methods include interviews, surveys, and questionnaires.

- Collect data from the research subjects using one or more educational research methods. You can collect research data using Formplus forms.

- Analyze and interpret your data to arrive at valid findings. In the Formplus analytics dashboard, you can view important data collection insights and you can also create custom visual reports with the reports summary tool.

- Create your research report. A research report details the entire process of the systematic investigation plus the research findings.

Conclusion

Educational research is crucial to the overall advancement of different fields of study and learning, as a whole. Data in educational research can be gathered via surveys and questionnaires, observation methods, or interviews – structured, unstructured, and semi-structured.

You can create a survey/questionnaire for educational research with Formplus. As a data collection tool, Formplus lets you create your educational research survey in the drag-and-drop form builder and share it with survey respondents using one or more of the form sharing options.


- busayo.longe


Education Scholarship in Healthcare, pp. 13–23

Introduction to Education Research

- Sharon K. Park

- Khanh-Van Le-Bucklin

- Julie Youm

- First Online: 29 November 2023


Educators rely on the discovery of new knowledge of teaching practices and frameworks to improve and evolve education for trainees. An important consideration when embarking on a career conducting education research is finding a scholarship niche. An education researcher can then develop the conceptual framework that describes the state of knowledge, identify gaps in understanding of the phenomenon or problem, and develop an outline for the methodological underpinnings of the research project. In response to Ernest Boyer’s seminal report, Priorities of the Professoriate, research was conducted about the criteria and decision processes for grants and publications. Six standards known as Glassick’s criteria provide a tangible measure by which educators can assess the quality and structure of their education research: clear goals, adequate preparation, appropriate methods, significant results, effective presentation, and reflective critique. Ultimately, the promise of education research is to realize advances and innovation for learners that are informed by evidence-based knowledge and practices.

- Scholarship

- Glassick’s criteria


Boyer EL. Scholarship reconsidered: priorities of the professoriate. Princeton: Carnegie Foundation for the Advancement of Teaching; 1990.

Munoz-Najar Galvez S, Heiberger R, McFarland D. Paradigm wars revisited: a cartography of graduate research in the field of education (1980–2010). Am Educ Res J. 2020;57(2):612–52.

Ringsted C, Hodges B, Scherpbier A. ‘The research compass’: an introduction to research in medical education: AMEE Guide no. 56. Med Teach. 2011;33(9):695–709.

Bordage G. Conceptual frameworks to illuminate and magnify. Med Educ. 2009;43(4):312–9.

Varpio L, Paradis E, Uijtdehaage S, Young M. The distinctions between theory, theoretical framework, and conceptual framework. Acad Med. 2020;95(7):989–94.

Ravitch SM, Riggins M. Reason & Rigor: how conceptual frameworks guide research. Thousand Oaks: Sage Publications; 2017.

Park YS, Zaidi Z, O'Brien BC. RIME foreword: what constitutes science in educational research? Applying rigor in our research approaches. Acad Med. 2020;95(11S):S1–5.

National Institute of Allergy and Infectious Diseases. Writing a winning application—Find your niche. 2020a. https://www.niaid.nih.gov/grants-contracts/find-your-niche. Accessed 23 Jan 2022.

National Institute of Allergy and Infectious Diseases. Writing a winning application—conduct a self-assessment. 2020b. https://www.niaid.nih.gov/grants-contracts/winning-app-self-assessment. Accessed 23 Jan 2022.

Glassick CE, Huber MT, Maeroff GI. Scholarship assessed: evaluation of the professoriate. San Francisco: Jossey Bass; 1997.

Simpson D, Meurer L, Braza D. Meeting the scholarly project requirement-application of scholarship criteria beyond research. J Grad Med Educ. 2012;4(1):111–2. https://doi.org/10.4300/JGME-D-11-00310.1.

Fincher RME, Simpson DE, Mennin SP, Rosenfeld GC, Rothman A, McGrew MC et al. The council of academic societies task force on scholarship. Scholarship in teaching: an imperative for the 21st century. Acad Med. 2000;75(9):887–94.

Hutchings P, Shulman LS. The scholarship of teaching new elaborations and developments. Change. 1999;11–5.

Author information

Authors and affiliations.

School of Pharmacy, Notre Dame of Maryland University, Baltimore, MD, USA

Sharon K. Park

University of California, Irvine School of Medicine, Irvine, CA, USA

Khanh-Van Le-Bucklin & Julie Youm


Corresponding author

Correspondence to Sharon K. Park.

Editor information

Editors and affiliations.

Johns Hopkins University School of Medicine, Baltimore, MD, USA

April S. Fitzgerald

Johns Hopkins Bloomberg School of Public Health, Baltimore, MD, USA

Gundula Bosch


Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this chapter

Cite this chapter.

Park, S.K., Le-Bucklin, KV., Youm, J. (2023). Introduction to Education Research. In: Fitzgerald, A.S., Bosch, G. (eds) Education Scholarship in Healthcare. Springer, Cham. https://doi.org/10.1007/978-3-031-38534-6_2


DOI: https://doi.org/10.1007/978-3-031-38534-6_2

Published: 29 November 2023

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-38533-9

Online ISBN: 978-3-031-38534-6

eBook Packages: Medicine, Medicine (R0)



Methodologies for Conducting Education Research

by Marisa Cannata

LAST REVIEWED: 19 August 2020

LAST MODIFIED: 15 December 2011

DOI: 10.1093/obo/9780199756810-0061

Introduction

Education is a diverse field and methodologies used in education research are necessarily diverse. The reasons for the methodological diversity of education research are many, including the fact that the field of education is composed of a multitude of disciplines and tensions between basic and applied research. For example, accepted methods of systematic inquiry in history, sociology, economics, and psychology vary, yet all of these disciplines help answer important questions posed in education. This methodological diversity has led to debates about the quality of education research and the perception of shifting standards of quality research. The citations selected for inclusion in this article provide a broad overview of methodologies and discussions of quality research standards across the different types of questions posed in educational research. The citations represent summaries of ongoing debates, articles or books that have had a significant influence on education research, and guides to those who wish to implement particular methodologies. Most of the sections focus on specific methodologies and provide advice or examples for studies employing these methodologies.

General Overviews

The interdisciplinary nature of education research has implications for how that research is designed. There is no single best research design for all questions that guide education research. Even through many often heated debates about methodologies, the common strand is that research designs should follow the research questions. The following works offer an introduction to the debates, divides, and difficulties of education research. Schoenfeld 1999, Mitchell and Haro 1999, and Shulman 1988 provide perspectives on diversity within the field of education and the implications of this diversity on the debates about education research and difficulties conducting such research. National Research Council 2002 outlines the principles of scientific inquiry and how they apply to education. Published around the time No Child Left Behind required education policies to be based on scientific research, this book laid the foundation for much of the current emphasis on experimental and quasi-experimental research in education. To read another perspective on defining good education research, readers may turn to Hostetler 2005. Readers who want a general overview of various methodologies in education research and directions on how to choose between them should read Creswell 2009 and Green, et al. 2006. The American Educational Research Association (AERA), the main professional association focused on education research, has developed standards for how to report methods and findings in empirical studies. Those wishing to follow those standards should consult American Educational Research Association 2006.

American Educational Research Association. 2006. Standards for reporting on empirical social science research in AERA publications. Educational Researcher 35.6: 33–40.

DOI: 10.3102/0013189X035006033

The American Educational Research Association is the professional association for researchers in education. Publications by AERA are a well-regarded source of research. This article outlines the requirements for reporting original research in AERA publications.

Creswell, J. W. 2009. Research design: Qualitative, quantitative, and mixed methods approaches. 3d ed. Los Angeles: SAGE.

Presents an overview of qualitative, quantitative and mixed-methods research designs, including how to choose the design based on the research question. This book is particularly helpful for those who want to design mixed-methods studies.

Green, J. L., G. Camilli, and P. B. Elmore. 2006. Handbook of complementary methods for research in education. Mahwah, NJ: Lawrence Erlbaum.

Provides a broad overview of several methods of educational research. The first part provides an overview of issues that cut across specific methodologies, and subsequent chapters delve into particular research approaches.

Hostetler, K. 2005. What is “good” education research? Educational Researcher 34.6: 16–21.

DOI: 10.3102/0013189X034006016

Goes beyond methodological concerns to argue that “good” educational research should also consider the conception of human well-being. By using a philosophical lens on debates about quality education research, this article is useful for moving beyond qualitative-quantitative divides.

Mitchell, T. R., and A. Haro. 1999. Poles apart: Reconciling the dichotomies in education research. In Issues in education research. Edited by E. C. Lagemann and L. S. Shulman, 42–62. San Francisco: Jossey-Bass.

Chapter outlines several dichotomies in education research, including the tension between applied research and basic research and between understanding the purposes of education and the processes of education.

National Research Council. 2002. Scientific research in education. Edited by R. J. Shavelson and L. Towne. Committee on Scientific Principles for Education Research. Center for Education. Division of Behavioral and Social Sciences and Education. Washington, DC: National Academy Press.

This book was released around the time the No Child Left Behind law directed that policy decisions should be guided by scientific research. It is credited with starting the current debate about methods in educational research and the preference for experimental studies.

Schoenfeld, A. H. 1999. The core, the canon, and the development of research skills. Issues in the preparation of education researchers. In Issues in education research. Edited by E. C. Lagemann and L. S. Shulman, 166–202. San Francisco: Jossey-Bass.

Describes difficulties in preparing educational researchers due to the lack of a core and a canon in education. While the focus is on preparing researchers, it provides valuable insight into why debates over education research persist.

Shulman, L. S. 1988. Disciplines of inquiry in education: An overview. In Complementary methods for research in education. Edited by R. M. Jaeger, 3–17. Washington, DC: American Educational Research Association.

Outlines what distinguishes research from other modes of disciplined inquiry and the relationship between academic disciplines, guiding questions, and methods of inquiry.


Scientific Research in Education (2002)

Chapter 5: Designs for the Conduct of Scientific Research in Education

The salient features of education delineated in Chapter 4 and the guiding principles of scientific research laid out in Chapter 3 set boundaries for the design and conduct of scientific education research. Thus, the design of a study (e.g., randomized experiment, ethnography, multiwave survey) does not itself make it scientific. However, if the design directly addresses a question that can be addressed empirically, is linked to prior research and relevant theory, is competently implemented in context, logically links the findings to interpretation ruling out counterinterpretations, and is made accessible to scientific scrutiny, it could then be considered scientific. That is: Is there a clear set of questions underlying the design? Are the methods appropriate to answer the questions and rule out competing answers? Does the study take previous research into account? Is there a conceptual basis? Are data collected in light of local conditions and analyzed systematically? Is the study clearly described and made available for criticism? The more closely aligned it is with these principles, the higher the quality of the scientific study. And the particular features of education require that the research process be explicitly designed to anticipate the implications of these features and to model and plan accordingly.

RESEARCH DESIGN

Our scientific principles include research design (the subject of this chapter) as but one aspect of a larger process of rigorous inquiry. However, research design (and corresponding scientific methods) is a crucial aspect of science. It is also the subject of much debate in many fields, including education. In this chapter, we describe some of the most frequently used and trusted designs for scientifically addressing broad classes of research questions in education.

In doing so, we develop three related themes. First, as we posit earlier, a variety of legitimate scientific approaches exist in education research. Therefore, the description of methods discussed in this chapter is illustrative of a range of trusted approaches; it should not be taken as an authoritative list of tools to the exclusion of any others. As we stress in earlier chapters, the history of science has shown that research designs evolve, as do the questions they address, the theories they inform, and the overall state of knowledge.

Second, we extend the argument we make in Chapter 3 that designs and methods must be carefully selected and implemented to best address the question at hand. Some methods are better than others for particular purposes, and scientific inferences are constrained by the type of design employed. Methods that may be appropriate for estimating the effect of an educational intervention, for example, would rarely be appropriate for use in estimating dropout rates. While researchers—in education or any other field—may overstate the conclusions from an inquiry, the strength of scientific inference must be judged in terms of the design used to address the question under investigation. A comprehensive explication of a hierarchy of appropriate designs and analytic approaches under various conditions would require a depth of treatment found in research methods textbooks. This is not our objective. Rather, our goal is to illustrate that among available techniques, certain designs are better suited to address particular kinds of questions under particular conditions than others.

Third, in order to generate a rich source of scientific knowledge in education that is refined and revised over time, different types of inquiries and methods are required. At any time, the types of questions and methods depend in large part on an accurate assessment of the overall state of knowledge and professional judgment about how a particular line of inquiry could advance understanding. In areas with little prior knowledge, for example, research will generally need to involve careful description to formulate initial ideas. In such situations, descriptive studies might be undertaken to help bring education problems or trends into sharper relief or to generate plausible theories about the underlying structure of behavior or learning. If the effects of education programs that have been implemented on a large scale are to be understood, however, investigations must be designed to test a set of causal hypotheses. Thus, while we treat the topic of design in this chapter as applying to individual studies, research design has a broader quality as it relates to lines of inquiry that develop over time.

While a full development of these notions goes considerably beyond our charge, we offer this brief overview to place the discussion of methods that follows into perspective. Also, in the concluding section of this chapter, we make a few targeted suggestions for the kinds of work we believe are most needed in education research to make further progress toward robust knowledge.

TYPES OF RESEARCH QUESTIONS

In discussing design, we have to be true to our admonition that the research question drives the design, not vice versa. To simplify matters, the committee recognized that a great number of education research questions fall into three (interrelated) types: description—What is happening? cause—Is there a systematic effect? and process or mechanism—Why or how is it happening?

The first question (What is happening?) invites description of various kinds, so as to properly characterize a population of students, understand the scope and severity of a problem, develop a theory or conjecture, or identify changes over time among different educational indicators, for example, achievement, spending, or teacher qualifications. Description also can include associations among variables, such as the characteristics of schools (e.g., size, location, economic base) that are related to (say) the provision of music and art instruction. The second question is focused on establishing causal effects: Does x cause y? The search for cause, for example, can include seeking to understand the effect of teaching strategies on student learning or state policy changes on district resource decisions. The third question confronts the need to understand the mechanism or process by which x causes y. Studies that seek to model how various parts of a complex system such as U.S. education fit together help explain the conditions that facilitate or impede change in teaching, learning, and schooling. Within each type of question, we separate the discussion into subsections that show the use of different methods given more fine-grained goals and conditions of an inquiry.

Although for ease of discussion we treat these types of questions separately, in practice they are closely related. As our examples show, within particular studies, several kinds of queries can be addressed. Furthermore, various genres of scientific education research often address more than one of these types of questions. Evaluation research, the rigorous and systematic evaluation of an education program or policy, exemplifies the use of multiple questions and corresponding designs. As applied in education, this type of scientific research is distinguished from other scientific research by its purpose: to contribute to program improvement (Weiss, 1998a). Evaluation often entails an assessment of whether the program caused improvements in the outcome or outcomes of interest (Is there a systematic effect?). It also can involve detailed descriptions of the way the program is implemented in practice and in what contexts (What is happening?) and the ways that program services influence outcomes (How is it happening?).

Throughout the discussion, we provide several examples of scientific education research, connecting them to scientific principles (Chapter 3) and the features of education (Chapter 4). We have chosen these studies because they align closely with several of the scientific principles. These examples include studies that generate hypotheses or conjectures as well as those that test them. Both tasks are essential to science, but as a general rule they cannot be accomplished simultaneously.

Moreover, just as we argue that the design of a study does not itself make it scientific, an investigation that seeks to address one of these questions is not necessarily scientific either. For example, many descriptive studies, however useful they may be, bear little resemblance to careful scientific study. They might record observations without any clear conceptual viewpoint, without reproducible protocols for recording data, and so forth. Again, studies may be considered scientific by assessing the rigor with which they meet scientific principles and are designed to account for the context of the study.

Finally, we have tended to speak of research in terms of a simple dichotomy (scientific or not scientific), but the reality is more complicated. Individual research projects may adhere to each of the principles in varying degrees, and the extent to which they meet these goals goes a long way toward defining the scientific quality of a study. For example, while all scientific studies must pose clear questions that can be investigated empirically and be grounded in existing knowledge, more rigorous studies will begin with more precise statements of the underlying theory driving the inquiry and will generally have a well-specified hypothesis before the data collection and testing phase is begun. Studies that do not start with clear conceptual frameworks and hypotheses may still be scientific, although they are obviously at a more rudimentary level and will generally require follow-on study to contribute significantly to scientific knowledge.

Similarly, lines of research encompassing collections of studies may be more or less productive and useful in advancing knowledge. An area of research that, for example, does not advance beyond the descriptive phase toward more precise scientific investigation of causal effects and mechanisms for a long period of time is clearly not contributing as much to knowledge as one that builds on prior work and moves toward more complete understanding of the causal structure. This is not to say that descriptive work cannot generate important breakthroughs. However, the rate of progress should—as we discuss at the end of this chapter—enter into consideration of the support for advanced lines of inquiry. The three classes of questions we discuss in the remainder of this chapter are ordered in a way that reflects the sequence that research studies tend to follow as well as their interconnected nature.

WHAT IS HAPPENING?

Answers to “What is happening?” questions can be found by following Yogi Berra’s counsel in a systematic way: if you want to know what’s going on, you have to go out and look at what is going on. Such inquiries are descriptive. They are intended to provide a range of information from documenting trends and issues in a range of geopolitical jurisdictions, populations, and institutions to rich descriptions of the complexities of educational practice in a particular locality, to relationships among such elements as socioeconomic status, teacher qualifications, and achievement.

Estimates of Population Characteristics

Descriptive scientific research in education can make generalizable statements about the national scope of a problem, student achievement levels across the states, or the demographics of children, teachers, or schools. Methods that enable the collection of data from a randomly selected sample of the population provide the best way of addressing such questions. Questionnaires and telephone interviews are common survey instruments developed to gather information from a representative sample of some population of interest. Policy makers at the national, state, and sometimes district levels depend on this method to paint a picture of the educational landscape. Aggregate estimates of the academic achievement level of children at the national level (e.g., National Center for Education Statistics [NCES], National Assessment of Educational Progress [NAEP]), the supply, demand, and turnover of teachers (e.g., NCES Schools and Staffing Survey), the nation’s dropout rates (e.g., NCES Common Core of Data), how U.S. children fare on tests of mathematics and science achievement relative to children in other nations (e.g., Third International Mathematics and Science Study) and the distribution of doctorate degrees across the nation (e.g., National Science Foundation’s Science and Engineering Indicators) are all based on surveys from populations of school children, teachers, and schools.

To yield credible results, such data collection usually depends on a random sample (alternatively called a probability sample) of the target population. If every observation (e.g., person, school) has a known chance of being selected into the study, researchers can make estimates of the larger population of interest based on statistical technology and theory. The validity of inferences about population characteristics based on sample data depends heavily on response rates, that is, the percentage of those randomly selected for whom data are collected. The measures used must have known reliability, that is, the extent to which they reproduce results. Finally, the value of a data collection instrument hinges not only on the sampling method, participation rate, and reliability, but also on its validity: that the questionnaire or survey items measure what they are supposed to measure.
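The logic of estimating a population characteristic from a probability sample can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a description of how NCES or NAEP compute their estimates: the population is synthetic, the function names `estimate_mean` and `response_rate` are hypothetical, the sample is a simple random sample, and the interval uses the normal approximation without a finite population correction.

```python
import math
import random
import statistics

def estimate_mean(population, n, seed=0):
    """Estimate a population mean from a simple random sample of size n."""
    rng = random.Random(seed)
    # Sampling without replacement: every unit has the same known chance, n / N
    sample = rng.sample(population, n)
    mean = statistics.mean(sample)
    # Standard error of the mean (finite population correction ignored)
    se = statistics.stdev(sample) / math.sqrt(n)
    # Approximate 95% interval using the normal critical value 1.96
    return mean, se, (mean - 1.96 * se, mean + 1.96 * se)

def response_rate(n_selected, n_responded):
    """Percentage of randomly selected units for whom data were collected."""
    return 100.0 * n_responded / n_selected

# Synthetic population of 10,000 scores; the values are made up for illustration
rng = random.Random(42)
population = [rng.gauss(270, 35) for _ in range(10_000)]

mean, se, interval = estimate_mean(population, n=400)
print(f"estimate = {mean:.1f}, SE = {se:.2f}, "
      f"95% CI = ({interval[0]:.1f}, {interval[1]:.1f})")
print(f"response rate = {response_rate(500, 400):.0f}%")
```

The interval is only as trustworthy as the sampling and response process behind it. If nonrespondents differ systematically from respondents, the estimate can be biased even when the computed standard error is small.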

The NAEP survey tracks national trends in student achievement across several subject domains and collects a range of data on school, student, and teacher characteristics (see Box 5-1). This rich source of information enables several kinds of descriptive work. For example, researchers can estimate the average score of eighth graders on the mathematics assessment (i.e., measures of central tendency) and compare that performance to prior years. Part of the study we feature (see below) about college women’s career choices included a similar estimation of population characteristics. In that study, the researchers developed a survey to collect data from a representative sample of women at the two universities to aid them in assessing the generalizability of their findings from the in-depth studies of the 23 women.

Simple Relationships

The NAEP survey also illustrates how researchers can describe patterns of relationships between variables. For example, NCES reports that in 2000, eighth graders whose teachers majored in mathematics or mathematics education scored higher, on average, than did students whose teachers did not major in these fields (U.S. Department of Education, 2000). This finding is the result of descriptive work that explores the correlation between variables: in this case, the relationship between student mathematics performance and their teachers’ undergraduate major.
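A simple descriptive relationship of this kind can be summarized as a difference in group means or as a correlation between a binary indicator and a score. The sketch below uses eight made-up scores (not NAEP data) and a hypothetical helper, `pearson_r`, to show the calculation; it describes an association and says nothing about cause.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

# Illustrative data only: 1 = teacher majored in mathematics, 0 = did not
teacher_math_major = [1, 1, 1, 1, 0, 0, 0, 0]
student_score = [280, 275, 290, 285, 270, 265, 275, 260]

major = [s for t, s in zip(teacher_math_major, student_score) if t == 1]
other = [s for t, s in zip(teacher_math_major, student_score) if t == 0]

# Difference in average score between the two groups of students
mean_gap = sum(major) / len(major) - sum(other) / len(other)
# With a 0/1 indicator, Pearson's r is the point-biserial correlation
r = pearson_r(teacher_math_major, student_score)

print(f"mean gap = {mean_gap:.1f} points, r = {r:.2f}")
```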

Such associations cannot be used to infer cause. However, there is a common tendency to make unsubstantiated jumps from establishing a relationship to concluding cause. As committee member Paul Holland quipped during the committee’s deliberations, “Casual comparisons inevitably invite careless causal conclusions.” To illustrate the problem with drawing causal inferences from simple correlations, we use an example from work that compares Catholic schools to public schools. We feature this study later in the chapter as one that competently examines causal mechanisms. Before addressing questions of mechanism, foundational work involved simple correlational results that compared the performance of Catholic high school students on standardized mathematics tests with their counterparts in public schools. These simple correlations revealed that average mathematics achievement was considerably higher for Catholic school students than for public school students (Bryk, Lee, and Holland, 1993). However, the researchers were careful not to conclude from this analysis that attending a Catholic school causes better student outcomes, because there are a host of potential explanations (other than attending a Catholic school) for this relationship between school type and achievement. For example, since Catholic schools can screen children for aptitude, they may have a more able student population than public schools at the outset. (This is an example of the classic selectivity bias that commonly threatens the validity of causal claims in nonrandomized studies; we return to this issue in the next section.) In short, there are other hypotheses that could explain the observed differences in achievement between students in different sectors that must be considered systematically in assessing the potential causal relationship between Catholic schooling and student outcomes.
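Selectivity bias of this kind can be made concrete with a small simulation. The sketch below is a hypothetical illustration, not a reanalysis of the Catholic school data: every number is invented, school type is given a true effect of exactly zero, and students with higher aptitude are simply more likely to enroll in the selective sector. The raw comparison still shows a large gap, which shrinks toward zero once students of similar aptitude are compared.

```python
import random
import statistics

def simulate(n=20_000, seed=1):
    """Generate (aptitude, in_selective_sector, score) for n synthetic students."""
    rng = random.Random(seed)
    students = []
    for _ in range(n):
        aptitude = rng.gauss(0, 1)
        # Screening: higher-aptitude students are more likely to be enrolled
        selective = aptitude + rng.gauss(0, 1) > 0.5
        # Achievement depends on aptitude only; school type has zero true effect
        score = 250 + 20 * aptitude + rng.gauss(0, 5)
        students.append((aptitude, selective, score))
    return students

def gap(students):
    """Mean score in the selective sector minus mean score elsewhere."""
    sel = [score for _, is_sel, score in students if is_sel]
    non = [score for _, is_sel, score in students if not is_sel]
    return statistics.mean(sel) - statistics.mean(non)

students = simulate()
naive_gap = gap(students)

# Compare only students whose aptitude falls in a narrow band around zero
similar = [row for row in students if abs(row[0]) < 0.1]
matched_gap = gap(similar)

print(f"naive gap = {naive_gap:.1f}, gap among similar students = {matched_gap:.1f}")
```

In real studies aptitude is rarely observed this cleanly, which is why the text treats the raw comparison as a starting point for systematically considering rival hypotheses rather than as evidence of cause.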

Descriptions of Localized Educational Settings

In some cases, scientists are interested in the fine details (rather than the distribution or central tendency) of what is happening in a particular organization, group of people, or setting. This type of work is especially important when good information about the group or setting is non-existent or scant. In this type of research, then, it is important to obtain first-hand, in-depth information from the particular focal group or site. For such purposes, selecting a random sample from the population of interest may not be the proper method of choice; rather, samples may be purposively selected to illuminate phenomena in depth. For example, to better understand a high-achieving school in an urban setting with children of predominantly low socioeconomic status, a researcher might conduct a detailed case study or an ethnographic study (a case study with a focus on culture) of such a school (Yin and White, 1986; Miles and Huberman, 1994). This type of scientific description can provide rich depictions of the policies, procedures, and contexts in which the school operates and generate plausible hypotheses about what might account for its success. Researchers often spend long periods of time in the setting or group in order to understand what decisions are made, what beliefs and attitudes are formed, what relationships are developed, and what forms of success are celebrated. These descriptions, when used in conjunction with causal methods, are often critical to understand such educational outcomes as student achievement because they illuminate key contextual factors.

Box 5-2 provides an example of a study that described in detail (and also modeled several possible mechanisms; see later discussion) a small group of women, half of whom began their college careers in science and half in what were considered more traditional majors for women. This descriptive part of the inquiry involved an ethnographic study of the lives of 23 first-year women enrolled in two large universities.

Scientific description of this type can generate systematic observations about the focal group or site, and patterns in results may be generalizable to other similar groups or sites or for the future. As with any other method, a scientifically rigorous case study has to be designed to address its research question. That is, the investigator has to choose sites, occasions, respondents, and times with a clear research purpose in mind and be sensitive to his or her own expectations and biases (Maxwell, 1996; Silverman, 1993). Data should typically be collected from varied sources, by varied methods, and corroborated by other investigators. Furthermore, the account of the case needs to draw on original evidence and provide enough detail so that the reader can make judgments about the validity of the conclusions (Yin, 2000).

Results may also be used as the basis for new theoretical developments, new experiments, or improved measures on surveys that indicate the extent of generalizability. In the work done by Holland and Eisenhart (1990), for example (see Box 5-2), a number of theoretical models were developed and tested to explain how women decide to pursue or abandon nontraditional careers in the fields they had studied in college. Their finding that commitment to college life (not fear of competing with men or other hypotheses that had previously been set forth) best explained these decisions was new knowledge. It has been shown in subsequent studies to generalize somewhat to similar schools, though additional models seem to exist at some schools (Seymour and Hewitt, 1997).

Although such purposively selected samples may not be scientifically generalizable to other locations or people, these vivid descriptions often appeal to practitioners. Scientifically rigorous case studies have strengths and weaknesses for such use. They can, for example, help local decision makers by providing them with ideas and strategies that have promise in their educational setting. They cannot (unless combined with other methods) provide estimates of the likelihood that an educational approach might work under other conditions or that they have identified the right underlying causes. As we argue throughout this volume, research designs can often be strengthened considerably by using multiple methods, integrating the use of both quantitative estimates of population characteristics and qualitative studies of localized context.

Other descriptive designs may involve interviews with respondents or document reviews in a fairly large number of cases, such as 30 school districts or 60 colleges. Cases are often selected to represent a variety of conditions (e.g., urban/rural; east/west; affluent/poor). Such descriptive studies can be longitudinal, returning to the same cases over several years to see how conditions change.

These examples of descriptive work meet the principles of science, and have clearly contributed important insights to the base of scientific knowledge. If research is to be used to answer questions about “what works,” however, it must advance to other levels of scientific investigation such as those considered next.

IS THERE A SYSTEMATIC EFFECT?

Research designs that attempt to identify systematic effects have at their root an intent to establish a cause-and-effect relationship. Causal work is built on both theory and descriptive studies. In other words, the search for causal effects cannot be conducted in a vacuum: ideally, a strong theoretical base as well as extensive descriptive information are in place to provide the intellectual foundation for understanding causal relationships.

The simple question of “does x cause y?” typically involves several different kinds of studies undertaken sequentially (Holland, 1993). In basic terms, several conditions must be met to establish cause. Usually, a relationship or correlation between the variables is first identified. 3 Researchers also confirm that x preceded y in time (temporal sequence) and, crucially, that all presently conceivable rival explanations for the observed relationship have been “ruled out.” As alternative explanations are eliminated, confidence increases that it was indeed x that caused y. “Ruling out” competing explanations is a central metaphor in medical research, diagnosis, and other fields, including education, and it is the key element of causal queries (Campbell and Stanley, 1963; Cook and Campbell, 1979, 1986).

The use of multiple qualitative methods, especially in conjunction with a comparative study of the kind we describe in this section, can be particularly helpful in ruling out alternative explanations for the results observed (Yin, 2000; Weiss, in press). Such investigative tools can enable stronger causal inferences by enhancing the analysis of whether competing explanations can account for patterns in the data (e.g., unreliable measures or contamination of the comparison group). Similarly, qualitative methods can examine possible explanations for observed effects that arise outside of the purview of the study. For example, while an intervention was in progress, another program or policy may have offered participants opportunities similar to, and reinforcing of, those that the intervention provided. Thus, the “effects” that the study observed may have been due to the other program (“history” as the counterinterpretation; see Chapter 3). When all plausible rival explanations are identified and various forms of data can be used as evidence to rule them out, the causal claim that the intervention caused the observed effects is strengthened. In education, research that explores students’ and teachers’ in-depth experiences, observes their actions, and documents the constraints that affect their day-to-day activities provides a key source for generating plausible causal hypotheses.

We have organized the remainder of this section into two parts. The first treats randomized field trials, an ideal method when entities being examined can be randomly assigned to groups. Experiments are especially well-suited to situations in which the causal hypothesis is relatively simple. The second describes situations in which randomized field trials are not feasible or desirable, and showcases a study that employed causal modeling techniques to address a complex causal question. We have distinguished randomized studies from others primarily to signal the difference in the strength with which causal claims can typically be made from them. The key difference between randomized field trials and other methods with respect to making causal claims is the extent to which the assumptions that underlie them are testable. By this simple criterion, nonrandomized studies are weaker in their ability to establish causation than randomized field trials, in large part because the role of other factors in influencing the outcome of interest is more difficult to gauge in nonrandomized studies. Other conditions that affect the choice of method are discussed in the course of the section.

Causal Relationships When Randomization Is Feasible

A fundamental scientific concept in making causal claims (that is, inferring that x caused y) is comparison. Comparing outcomes (e.g., student achievement) between two groups that are similar except for the causal variable (e.g., the educational intervention) helps to isolate the effect of that causal agent on the outcome of interest. 4 As we discuss in Chapter 4, it is sometimes difficult to retain the sharpness of a comparison in education due to proximity (e.g., a design that features students in one classroom assigned to different interventions is subject to “spillover” effects) or human volition (e.g., teacher, parent, or student decisions to switch to another condition threaten the integrity of the randomly formed groups). Yet, from a scientific perspective, randomized trials (we also use the term “experiment” to refer to causal studies that feature random assignment) are the ideal for establishing whether one or more factors caused change in an outcome because of their strong ability to enable fair comparisons (Campbell and Stanley, 1963; Boruch, 1997; Cook and Payne, in press). Random allocation of students, classrooms, schools (whatever the unit of comparison may be) to different treatment groups assures that these comparison groups are, roughly speaking, equivalent at the time an intervention is introduced (that is, they do not differ systematically on account of hidden influences) and chance differences between the groups can be taken into account statistically. As a result, the independent effect of the intervention on the outcome of interest can be isolated. In addition, these studies enable legitimate statistical statements of confidence in the results.
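The logic of random allocation can be illustrated with a small simulation. The sketch below is not drawn from any study discussed here: the student records, the hidden "ability" trait, the noise levels, and the built-in effect of 5 points are all assumptions chosen for illustration, and the confidence interval uses a simple normal approximation. It shows that a random split leaves an unobserved trait roughly balanced across groups and that the difference in group means comes with a statistical statement of uncertainty.

```python
import math
import random
import statistics


def randomize(units, rng):
    """Randomly split units into two equal-sized groups (treatment, control)."""
    shuffled = list(units)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


def difference_in_means(treated, control):
    """Return the estimated effect and an approximate 95% confidence interval."""
    diff = statistics.mean(treated) - statistics.mean(control)
    se = math.sqrt(statistics.variance(treated) / len(treated)
                   + statistics.variance(control) / len(control))
    return diff, (diff - 1.96 * se, diff + 1.96 * se)


rng = random.Random(0)
TRUE_EFFECT = 5.0  # hypothetical effect built into the simulation

# Each simulated student has a hidden trait the researcher never observes.
students = [{"id": i, "ability": rng.gauss(50, 10)} for i in range(2000)]
treatment, control = randomize(students, rng)

# Random assignment should leave the hidden trait roughly balanced.
ability_gap = (statistics.mean([s["ability"] for s in treatment])
               - statistics.mean([s["ability"] for s in control]))

# Outcome = hidden ability + noise, plus the effect for treated students only.
treated_scores = [s["ability"] + rng.gauss(0, 5) + TRUE_EFFECT for s in treatment]
control_scores = [s["ability"] + rng.gauss(0, 5) for s in control]

effect, (low, high) = difference_in_means(treated_scores, control_scores)
print(f"hidden-ability gap between groups: {ability_gap:.2f}")
print(f"estimated effect: {effect:.2f} (95% CI {low:.2f} to {high:.2f})")
```

Because the groups differ only by chance on the hidden trait, the difference in mean outcomes can be read as an estimate of the intervention's effect, and the interval expresses how much of that difference chance alone could plausibly produce.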

The Tennessee STAR experiment (see Chapter 3) on class-size reduction is a good example of the use of randomization to assess cause in an education study; in particular, this tool was used to gauge the effectiveness of an intervention. Some policy makers and scientists were unwilling to accept earlier, largely nonexperimental studies on class-size reduction as a basis for major policy decisions in the state. Those studies could not guarantee a fair comparison of children in small versus large classes because the comparisons relied on statistical adjustment rather than on actual construction of statistically equivalent groups. In Tennessee, statistical equivalence was achieved by randomly assigning eligible children and teachers to classrooms of different size. If the trial was properly carried out, 5 this randomization would lead to an unbiased estimate of the relative effect of class-size reduction and a statistical statement of confidence in the results.
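Why a nonexperimental comparison may be unfair can be sketched with simulated data. Nothing below uses STAR data: the "advantage" variable, the selection probabilities, and the built-in small-class benefit of 3 points are hypothetical values chosen to make the contrast visible. The same simulated children are compared twice, once when more advantaged children are likelier to end up in small classes and once when a coin flip decides.

```python
import random
import statistics

rng = random.Random(42)
TRUE_EFFECT = 3.0  # hypothetical small-class benefit built into the simulation


def naive_gap(children, in_small_class):
    """Difference in mean outcome: small-class minus regular-class children."""
    small, regular = [], []
    for child, is_small in zip(children, in_small_class):
        score = child["advantage"] + rng.gauss(0, 5)
        if is_small:
            small.append(score + TRUE_EFFECT)
        else:
            regular.append(score)
    return statistics.mean(small) - statistics.mean(regular)


# "advantage" stands in for resources that also raise achievement.
children = [{"advantage": rng.gauss(50, 10)} for _ in range(4000)]

# Nonexperimental case: advantaged children are likelier to be in small classes.
self_selected = [rng.random() < (0.8 if c["advantage"] > 50 else 0.2)
                 for c in children]

# Experimental case: assignment ignores advantage entirely.
randomized = [rng.random() < 0.5 for _ in children]

observational_gap = naive_gap(children, self_selected)
randomized_gap = naive_gap(children, randomized)
print(f"nonexperimental gap: {observational_gap:.2f}")
print(f"randomized gap:      {randomized_gap:.2f}")
```

In this sketch the nonexperimental gap mixes the class-size benefit with the pre-existing difference in advantage, while the randomized gap stays close to the built-in benefit. Statistical adjustment could narrow the first gap only to the extent that the relevant differences are measured.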

Randomized trials are used frequently in the medical sciences and certain areas of the behavioral and social sciences, including prevention studies of mental health disorders (e.g., Beardslee, Wright, Salt, and Drezner, 1997), behavioral approaches to smoking cessation (e.g., Pieterse, Seydel, DeVries, Mudde, and Kok, 2001), and drug abuse prevention (e.g., Cook, Lawrence, Morse, and Roehl, 1984). It would not be ethical to assign individuals randomly to smoke and drink, and thus much of the evidence regarding the harmful effects of nicotine and alcohol comes from descriptive and correlational studies. However, randomized trials that show reductions in health detriments and improved social and behavioral functioning strengthen the causal links that have been established between drug use and adverse health and behavioral outcomes (Moses, 1995; Mosteller, Gilbert, and McPeek, 1980). In medical research, the relative effectiveness of the Salk vaccine (see Lambert and Markel, 2000) and streptomycin (Medical Research Council, 1948) was demonstrated through such trials. We have also learned about which drugs and surgical treatments are useless by depending on randomized controlled experiments (e.g., Schulte et al., 2001; Gorman et al., 2001; Paradise et al., 1999). Randomized controlled trials are also used in industrial, market, and agricultural research.

Such trials are not frequently conducted in education research (Boruch, De Moya, and Snyder, in press). Nonetheless, it is not difficult to identify good examples in a variety of education areas that demonstrate their feasibility (see Boruch, 1997; Orr, 1999; and Cook and Payne, in press). For example, among the education programs whose effectiveness has been evaluated in randomized trials are the Sesame Street television series (Bogatz and Ball, 1972), peer-assisted learning and tutoring for young children with reading problems (Fuchs, Fuchs, and Kazdan, 1999), and Upward Bound (Myers and Schirm, 1999). Many of these trials have been successfully implemented on a large scale, randomizing entire classrooms or schools to intervention conditions. For numerous examples of trials in which schools, work places, and other entities are the units of random allocation and analysis, see Murray (1998), Donner and Klar (2000), Boruch and Foley (2000), and the Campbell Collaboration register of trials at http://campbell.gse.upenn.edu.
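When whole schools rather than individual students are the units of random allocation and analysis, the procedure can be sketched as follows. This is an illustrative simulation, not a reconstruction of any cited trial: the number of schools, the number of students per school, the shared school-level influence, and the assumed effect of 4 points are all hypothetical.

```python
import random
import statistics

rng = random.Random(7)
HYPOTHETICAL_EFFECT = 4.0  # assumed for illustration only

# 40 simulated schools with 25 students each; students in the same school
# share a school-level influence, so their scores are not independent.
schools = {}
for school_id in range(40):
    school_influence = rng.gauss(0, 3)
    schools[school_id] = [50 + school_influence + rng.gauss(0, 10)
                          for _ in range(25)]

# Allocate whole schools at random: 20 to the intervention, 20 to comparison.
school_ids = list(schools)
rng.shuffle(school_ids)
intervention_ids = school_ids[:20]
comparison_ids = school_ids[20:]

# Apply the assumed effect to every student in an intervention school.
for school_id in intervention_ids:
    schools[school_id] = [score + HYPOTHETICAL_EFFECT
                          for score in schools[school_id]]

# Analyze at the unit of allocation: one mean per school, then compare.
intervention_means = [statistics.mean(schools[i]) for i in intervention_ids]
comparison_means = [statistics.mean(schools[i]) for i in comparison_ids]
estimated_effect = (statistics.mean(intervention_means)
                    - statistics.mean(comparison_means))

print(f"estimated effect from school means: {estimated_effect:.2f}")
```

Summarizing each school by its mean before comparing conditions keeps the analysis aligned with the unit that was actually randomized, so the 40 schools, not the 1,000 students, determine how much confidence the estimate deserves.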

Causal Relationships When Randomization Is Not Feasible