
StoryDiffusion: Consistent Self-Attention for Long-Range Image and Video Generation

This module converts the generated sequence of images into videos with smooth transitions and consistent subjects that are significantly more stable than the modules based on latent spaces only, especially in the context of long video generation.

DeepSeek-V2: A Strong, Economical, and Efficient Mixture-of-Experts Language Model

MLA guarantees efficient inference through significantly compressing the Key-Value (KV) cache into a latent vector, while DeepSeekMoE enables training strong models at an economical cost through sparse computation.
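The caching idea behind MLA can be made concrete with a toy sketch. It assumes plain Python lists in place of tensors and hypothetical projection matrices. Only a compressed latent is stored per token, and keys and values are re-projected from it on demand. Attention itself is omitted. MLA's decoupled RoPE key is counted only in the size helper, so the sketch illustrates the memory trade-off and is not DeepSeek-V2's implementation.

```python
"""Toy latent KV cache in the spirit of DeepSeek-V2's MLA (illustrative only).

Assumptions: lists stand in for tensors, all matrices are hypothetical, and
the decoupled RoPE key that MLA also caches is left out of the toy cache.
"""
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a (rows x cols) matrix by a length-cols vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def mha_cache_elems_per_token(n_heads: int, d_head: int) -> int:
    """Standard multi-head attention caches one key and one value per head."""
    return 2 * n_heads * d_head


def mla_cache_elems_per_token(d_latent: int, d_rope: int) -> int:
    """MLA caches one compressed latent plus a small decoupled RoPE key."""
    return d_latent + d_rope


class LatentKVCache:
    """Stores only the down-projected latent c = W_down @ h for each token."""

    def __init__(self, w_down: Matrix, w_up_k: Matrix, w_up_v: Matrix) -> None:
        self.w_down = w_down  # shape (d_latent, d_model)
        self.w_up_k = w_up_k  # shape (d_keys, d_latent)
        self.w_up_v = w_up_v  # shape (d_values, d_latent)
        self.latents: List[Vector] = []

    def append(self, hidden: Vector) -> None:
        self.latents.append(matvec(self.w_down, hidden))

    def keys(self) -> List[Vector]:
        # Keys are reconstructed from the cached latents when attention needs them.
        return [matvec(self.w_up_k, c) for c in self.latents]

    def values(self) -> List[Vector]:
        return [matvec(self.w_up_v, c) for c in self.latents]
```

DeepSeek-V2 reports 128 heads of dimension 128, a 512-dimensional latent and a 64-dimensional RoPE key. With those settings, `mha_cache_elems_per_token(128, 128)` gives 32,768 cached elements per token per layer, versus 576 for `mla_cache_elems_per_token(512, 64)`.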

Granite Code Models: A Family of Open Foundation Models for Code Intelligence

ibm-granite/granite-code-models • 7 May 2024

Increasingly, code LLMs are being integrated into software development environments to improve the productivity of human programmers, and LLM-based agents are beginning to show promise for handling complex tasks autonomously.

KAN: Kolmogorov-Arnold Networks

Inspired by the Kolmogorov-Arnold representation theorem, we propose Kolmogorov-Arnold Networks (KANs) as promising alternatives to Multi-Layer Perceptrons (MLPs).
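A minimal forward-pass sketch shows the structural difference from an MLP. In a KAN, every edge carries its own learnable univariate function and nodes only sum. In an MLP, edges carry scalar weights and nodes apply a fixed activation. As a simplifying assumption, each edge function here is piecewise linear on a fixed grid, whereas the KAN paper uses B-splines plus a base activation. No training is shown.

```python
"""Minimal KAN-style layer: a learnable univariate function on every edge.

Simplification (assumption): piecewise-linear edge functions on a fixed grid
instead of the paper's B-splines; forward pass only.
"""
import bisect
from typing import List, Sequence


class PiecewiseLinear:
    """phi(x) given by values at grid points, linear in between, clamped outside."""

    def __init__(self, grid: Sequence[float], values: Sequence[float]) -> None:
        if len(grid) != len(values) or len(grid) < 2:
            raise ValueError("grid and values need the same length (>= 2)")
        self.grid = list(grid)
        self.values = list(values)  # the learnable parameters of this edge

    def __call__(self, x: float) -> float:
        g, v = self.grid, self.values
        if x <= g[0]:
            return v[0]
        if x >= g[-1]:
            return v[-1]
        i = bisect.bisect_right(g, x) - 1  # index of the grid cell containing x
        t = (x - g[i]) / (g[i + 1] - g[i])
        return (1.0 - t) * v[i] + t * v[i + 1]


class KANLayer:
    """y_j = sum_i phi_{j,i}(x_i): functions live on edges, nodes only add."""

    def __init__(self, phis: List[List[PiecewiseLinear]]) -> None:
        self.phis = phis  # phis[j][i] maps input i to output j

    def __call__(self, x: Sequence[float]) -> List[float]:
        return [sum(phi(xi) for phi, xi in zip(row, x)) for row in self.phis]
```

Training would adjust each edge's `values`. Stacking such layers gives the compositional structure that the Kolmogorov-Arnold representation theorem motivates.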

QServe: W4A8KV4 Quantization and System Co-design for Efficient LLM Serving

The key insight driving QServe is that the efficiency of LLM serving on GPUs is critically influenced by operations on low-throughput CUDA cores.
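In the name W4A8KV4, weights are stored at 4 bits, activations at 8 bits and the KV cache at 4 bits. The sketch below uses plain symmetric round-to-nearest quantization with one scale per tensor to show what those bit widths mean. This is an assumption for illustration: QServe's own QoQ algorithm uses finer-grained progressive group quantization, which is not reproduced here.

```python
"""Symmetric round-to-nearest quantization to illustrate W4A8KV4 bit widths.

Assumption: a single per-tensor scale; not QServe's QoQ algorithm.
"""
from typing import List, Tuple


def quantize_symmetric(values: List[float], bits: int) -> Tuple[List[int], float]:
    """Map floats to signed integers in [-qmax, qmax] sharing one scale."""
    qmax = 2 ** (bits - 1) - 1  # 7 for 4-bit, 127 for 8-bit
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    return [x * scale for x in q]


def storage_bytes(num_elements: int, bits: int) -> int:
    """Bytes needed to pack num_elements values at the given bit width."""
    return (num_elements * bits + 7) // 8
```

For one million weights, `storage_bytes(1_000_000, 16)` is 2,000,000 bytes at FP16, against 500,000 bytes at 4 bits (scales not counted). The reconstruction error per element stays within half a quantization step.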

Improving Diffusion Models for Virtual Try-on

Finally, we present a customization method using a pair of person-garment images, which significantly improves fidelity and authenticity.

Prometheus 2: An Open Source Language Model Specialized in Evaluating Other Language Models

prometheus-eval/prometheus-eval • 2 May 2024

Proprietary LMs such as GPT-4 are often employed to assess the quality of responses from various LMs.

ImageInWords: Unlocking Hyper-Detailed Image Descriptions

google/imageinwords • 5 May 2024

To address these issues, we introduce ImageInWords (IIW), a carefully designed human-in-the-loop annotation framework for curating hyper-detailed image descriptions and a new dataset resulting from this process.

Plan-and-Solve Prompting: Improving Zero-Shot Chain-of-Thought Reasoning by Large Language Models

assafelovic/gpt-researcher • 6 May 2023

To address the calculation errors and improve the quality of generated reasoning steps, we extend PS prompting with more detailed instructions and derive PS+ prompting.
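Plan-and-Solve is a zero-shot prompting method, so the technique amounts to the trigger text appended to a question. The sketch below builds PS and PS+ prompts. The trigger sentences are reproduced from memory of the paper and may differ slightly from the authors' exact wording. The `Q:`/`A:` template is also an assumption.

```python
"""Build zero-shot Plan-and-Solve (PS / PS+) prompts.

The trigger sentences are recalled from the paper and may not match its exact
wording; the "Q: ... A: ..." template is an assumption.
"""

PS_TRIGGER = (
    "Let's first understand the problem and devise a plan to solve the problem. "
    "Then, let's carry out the plan and solve the problem step by step."
)

# PS+ adds more detailed instructions aimed at reducing calculation errors.
PS_PLUS_TRIGGER = (
    "Let's first understand the problem, extract relevant variables and their "
    "corresponding numerals, and devise a plan. Then, let's carry out the plan, "
    "calculate intermediate variables (pay attention to correct numerical "
    "calculation and commonsense), solve the problem step by step, and show the answer."
)


def build_prompt(question: str, plus: bool = True) -> str:
    """Return a zero-shot prompt ending with the PS or PS+ trigger."""
    trigger = PS_PLUS_TRIGGER if plus else PS_TRIGGER
    return f"Q: {question.strip()}\nA: {trigger}"
```

The model's continuation of this prompt contains the plan and the step-by-step solution. A separate extraction step, or a simple parser, can then pull out the final answer.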

Inf-DiT: Upsampling Any-Resolution Image with Memory-Efficient Diffusion Transformer

thudm/inf-dit • 7 May 2024

However, because memory grows quadratically when generating ultra-high-resolution images (e.g., 4096×4096), the resolution of generated images is often limited to 1024×1024.
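A quick count shows why memory becomes the bottleneck. The sketch assumes a ViT-style diffusion transformer that splits the image into square patches of `patch` pixels, with any latent downsampling folded into `patch`, and uses full dense self-attention. Under those assumptions the token count grows with the pixel count, and the attention map grows with the square of the token count.

```python
"""Token and dense self-attention sizes versus output resolution.

Assumptions: non-overlapping square patches of `patch` pixels (latent
downsampling folded in) and full N x N attention; illustrative only.
"""


def num_tokens(height: int, width: int, patch: int) -> int:
    if height % patch or width % patch:
        raise ValueError("resolution must be divisible by the patch size")
    return (height // patch) * (width // patch)


def attention_entries(height: int, width: int, patch: int) -> int:
    """Entries in a single head's N x N attention matrix."""
    n = num_tokens(height, width, patch)
    return n * n
```

With `patch=16`, a 1024×1024 image yields 4,096 tokens and a 4096×4096 image yields 65,536 tokens, 16 times more. The dense attention map grows 256 times.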


Machine learning articles from across Nature Portfolio

Machine learning is the ability of a machine to improve its performance based on previous results. Machine learning methods enable computers to learn without being explicitly programmed and have multiple applications, for example, in the improvement of data mining algorithms.

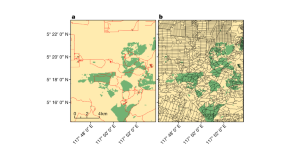

‘Ghost roads’ could be the biggest direct threat to tropical forests

By using volunteers to map roads in forests across Borneo, Sumatra and New Guinea, an innovative study shows that existing maps of the Asia-Pacific region are rife with errors. It also reveals that unmapped roads are extremely common — up to seven times more abundant than mapped ones. Such ‘ghost roads’ are promoting illegal logging, mining, wildlife poaching and deforestation in some of the world’s biologically richest ecosystems.

Adapting vision–language AI models to cardiology tasks

Vision–language models can be trained to read cardiac ultrasound images with implications for improving clinical workflows, but additional development and validation will be required before such models can replace humans.

- Rima Arnaout

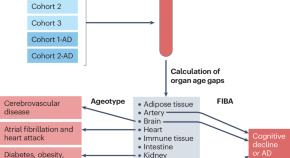

Not every organ ticks the same

A new study describes the development of proteomics-based ageing clocks that calculate the biological age of specific organs and define features of extreme ageing associated with age-related diseases. Their findings support the notion that plasma proteins can be used to monitor the ageing rates of specific organs and disease progression.

- Khaoula Talbi

- Anette Melk

Latest Research and Reviews

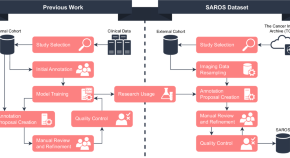

SAROS: A dataset for whole-body region and organ segmentation in CT imaging

- Sven Koitka

- Giulia Baldini

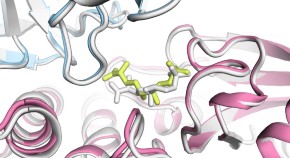

MISATO: machine learning dataset of protein–ligand complexes for structure-based drug discovery

MISATO is a database for structure-based drug discovery that combines quantum mechanics data with molecular dynamics simulations on ~20,000 protein–ligand structures. The artificial intelligence models included provide an easy entry point for the machine learning and drug discovery communities.

- Till Siebenmorgen

- Filipe Menezes

- Grzegorz M. Popowicz

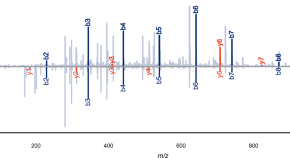

Fragment ion intensity prediction improves the identification rate of non-tryptic peptides in timsTOF

Immunopeptidomics is crucial for the discovery of potential immunotherapy and vaccine candidates. Here, the authors generate a ground truth timsTOF dataset to fine-tune the deep learning model Prosit, improving peptide-spectrum match rescoring by up to 3-fold during immunopeptide identification.

- Charlotte Adams

- Wassim Gabriel

- Kurt Boonen

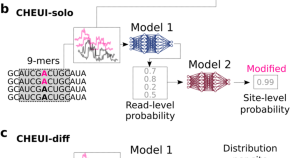

Prediction of m6A and m5C at single-molecule resolution reveals a transcriptome-wide co-occurrence of RNA modifications

The epitranscriptome holds many unexplored RNA functions, but detecting multiple modifications from one sample remains challenging. Here, the authors devise a strategy combining AI and nanopore sequencing to uncover a transcriptome-wide co-occurrence of two modification types in individual RNA molecules.

- P Acera Mateos

Scalable and unbiased sequence-informed embedding of single-cell ATAC-seq data with CellSpace

By learning to embed DNA k-mers and cells into a joint space, CellSpace improves single-cell ATAC-seq analysis in multiple tasks such as latent structure discovery, transcription factor activity inference and batch effect mitigation.

- Zakieh Tayyebi

- Allison R. Pine

- Christina S. Leslie
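The k-mers that CellSpace embeds are the overlapping length-k substrings of a DNA sequence. The sketch below covers only that tokenization step. Uppercasing input, skipping windows that contain ambiguous bases such as `N`, and the choice of `k` are assumptions, not CellSpace's documented preprocessing.

```python
"""Extract overlapping DNA k-mers (tokenization step only; illustrative)."""
from collections import Counter
from typing import List


def kmers(sequence: str, k: int) -> List[str]:
    """Return every length-k window made only of A, C, G and T."""
    if k <= 0:
        raise ValueError("k must be positive")
    seq = sequence.upper()
    out = []
    for i in range(len(seq) - k + 1):
        word = seq[i : i + k]
        if set(word) <= set("ACGT"):  # skip windows containing N or other symbols
            out.append(word)
    return out


def kmer_counts(sequence: str, k: int) -> Counter:
    return Counter(kmers(sequence, k))
```

A sequence of length L yields at most L - k + 1 k-mers. The joint embedding step then places these tokens and the cells in one vector space.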

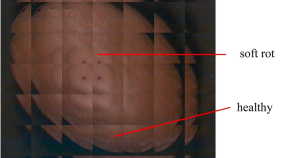

A dual-branch selective attention capsule network for classifying kiwifruit soft rot with hyperspectral images

- Zhiqiang Guo

- Yingfang Ni

- Yunliu Zeng

News and Comment

The US Congress is taking on AI —this computer scientist is helping

Kiri Wagstaff, who temporarily shelved her academic career to provide advice on federal AI legislation, talks about life inside the halls of power.

- Nicola Jones

Major AlphaFold upgrade offers boost for drug discovery

Latest version of the AI models how proteins interact with other molecules — but DeepMind restricts access to the tool.

- Ewen Callaway

Who’s making chips for AI? Chinese manufacturers lag behind US tech giants

Researchers in China say they are finding themselves five to ten years behind their US counterparts as export restrictions bite.

- Jonathan O'Callaghan

Response to “The perpetual motion machine of AI-generated data and the distraction of ChatGPT as a ‘scientist’”

- William Stafford Noble


JMLR Papers

Select a volume number to see its table of contents with links to the papers.

Volume 25 (January 2024 - Present)

Volume 24 (January 2023 - December 2023)

Volume 23 (January 2022 - December 2022)

Volume 22 (January 2021 - December 2021)

Volume 21 (January 2020 - December 2020)

Volume 20 (January 2019 - December 2019)

Volume 19 (August 2018 - December 2018)

Volume 18 (February 2017 - August 2018)

Volume 17 (January 2016 - January 2017)

Volume 16 (January 2015 - December 2015)

Volume 15 (January 2014 - December 2014)

Volume 14 (January 2013 - December 2013)

Volume 13 (January 2012 - December 2012)

Volume 12 (January 2011 - December 2011)

Volume 11 (January 2010 - December 2010)

Volume 10 (January 2009 - December 2009)

Volume 9 (January 2008 - December 2008)

Volume 8 (January 2007 - December 2007)

Volume 7 (January 2006 - December 2006)

Volume 6 (January 2005 - December 2005)

Volume 5 (December 2003 - December 2004)

Volume 4 (April 2003 - December 2003)

Volume 3 (July 2002 - March 2003)

Volume 2 (October 2001 - March 2002)

Volume 1 (October 2000 - September 2001)

Special Topics

Bayesian Optimization

Learning from Electronic Health Data (December 2016)

Gesture Recognition (May 2012 - present)

Large Scale Learning (July 2009 - present)

Mining and Learning with Graphs and Relations (February 2009 - present)

Grammar Induction, Representation of Language and Language Learning (November 2010 - April 2011)

Causality (September 2007 - May 2010)

Model Selection (April 2007 - July 2010)

Conference on Learning Theory 2005 (February 2007 - July 2007)

Machine Learning for Computer Security (December 2006)

Machine Learning and Large Scale Optimization (July 2006 - October 2006)

Approaches and Applications of Inductive Programming (February 2006 - March 2006)

Learning Theory (June 2004 - August 2004)

Special Issues

In Memory of Alexey Chervonenkis (September 2015)

Independent Components Analysis (December 2003)

Learning Theory (October 2003)

Inductive Logic Programming (August 2003)

Fusion of Domain Knowledge with Data for Decision Support (July 2003)

Variable and Feature Selection (March 2003)

Machine Learning Methods for Text and Images (February 2003)

Eighteenth International Conference on Machine Learning (ICML2001) (December 2002)

Computational Learning Theory (November 2002)

Shallow Parsing (March 2002)

Kernel Methods (December 2001)

- Last updated November 18, 2021

Top Machine Learning Research Papers Released In 2021

- Published on November 18, 2021

- by Dr. Nivash Jeevanandam

Advances in machine learning and deep learning research are reshaping our technology. Machine learning and deep learning accomplished various astounding feats in 2021, and key research articles have resulted in technical advances used by billions of people. Research in this field is advancing at a breakneck pace; to help you keep up, here is a collection of the most important recent research papers.

Rebooting ACGAN: Auxiliary Classifier GANs with Stable Training

The authors of this work examined why ACGAN training becomes unstable as the number of classes in the dataset grows. They found that the instability stems from a gradient explosion problem caused by the unboundedness of the input feature vectors and by the classifier's poor classification ability during the early stage of training. To alleviate the instability and reinforce ACGAN, the researchers presented the Data-to-Data Cross-Entropy loss (D2D-CE) and the Rebooted Auxiliary Classifier Generative Adversarial Network (ReACGAN). Additionally, extensive experiments show that ReACGAN is robust to hyperparameter selection and compatible with a variety of architectures and differentiable augmentations.

This article is ranked #1 on CIFAR-10 for Conditional Image Generation.


Dense Unsupervised Learning for Video Segmentation

The authors presented a straightforward and computationally fast unsupervised strategy for learning dense spacetime representations from unlabeled videos. The approach converges quickly during training and is highly data-efficient. Furthermore, the researchers obtain video object segmentation (VOS) accuracy superior to previous results despite using a fraction of the training data previously required. The researchers acknowledge that their findings could be used maliciously, for example for unlawful surveillance. They also plan to investigate how the approach might learn a broader spectrum of invariances by exploiting larger temporal windows in videos with complex (ego-)motion, which are more prone to disocclusions.

This study is ranked #1 on DAVIS 2017 for Unsupervised Video Object Segmentation (val).

Temporally-Consistent Surface Reconstruction using Metrically-Consistent Atlases

The authors offer an atlas-based technique for producing unsupervised, temporally consistent surface reconstructions by requiring that a point on the canonical shape representation map to metrically consistent 3D locations on the reconstructed surfaces. The researchers envisage many potential applications for the method. For example, by substituting an image-based loss for the Chamfer distance, one could apply the method to RGB video sequences, which the researchers believe will spur progress in video-based 3D reconstruction.

This article is ranked #1 on ANIM in the category of Surface Reconstruction.
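The Chamfer distance mentioned above is the point-set loss that the authors suggest replacing with an image-based one for RGB video. Conventions vary between papers: distances may be squared or unsquared, and summed or averaged. This sketch assumes the mean squared nearest-neighbour distance in each direction, summed over both directions, computed by brute force.

```python
"""Symmetric Chamfer distance between two 3D point sets (brute force).

Assumed convention: mean squared nearest-neighbour distance A->B plus B->A.
"""
from typing import List, Tuple

Point = Tuple[float, float, float]


def _sq_dist(p: Point, q: Point) -> float:
    return sum((a - b) ** 2 for a, b in zip(p, q))


def chamfer_distance(a: List[Point], b: List[Point]) -> float:
    if not a or not b:
        raise ValueError("both point sets must be non-empty")
    a_to_b = sum(min(_sq_dist(p, q) for q in b) for p in a) / len(a)
    b_to_a = sum(min(_sq_dist(q, p) for p in a) for q in b) / len(b)
    return a_to_b + b_to_a
```

The brute-force search costs O(|A|·|B|). Practical implementations usually use a k-d tree or GPU nearest-neighbour search instead.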

EdgeFlow: Achieving Practical Interactive Segmentation with Edge-Guided Flow

The researchers propose a novel interactive architecture called EdgeFlow that uses user interaction data without resorting to post-processing or iterative optimisation. Thanks to its coarse-to-fine network design, the technique achieves state-of-the-art performance on common benchmarks. The researchers also build an effective interactive segmentation tool that lets the user refine the segmentation result incrementally through flexible options.

This paper is ranked #1 on Interactive Segmentation on PASCAL VOC.

Learning Transferable Visual Models From Natural Language Supervision

The authors of this work examined whether the success of task-agnostic, web-scale pre-training in natural language processing can be transferred to another domain. The findings indicate that adopting this formula leads to the emergence of similar behaviours in computer vision, and the authors examine the social ramifications of this line of research. During pre-training, CLIP models learn to perform a range of tasks in order to optimise their training objective. Through natural language prompting, CLIP can then use this task learning to enable zero-shot transfer to many existing datasets. At sufficient scale, this approach is competitive with task-specific supervised models, although there is still much room for improvement.

This research is ranked #1 on Zero-Shot Transfer Image Classification on SUN.
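Zero-shot transfer through natural-language prompting works roughly as follows. Each class label is turned into a prompt such as "a photo of a {label}". The prompt and the image are embedded, and the label whose text embedding has the highest cosine similarity to the image wins. In the sketch the embeddings are plain vectors assumed to come from hypothetical pre-trained image and text encoders, and the temperature is illustrative.

```python
"""CLIP-style zero-shot classification from precomputed embeddings.

Assumption: embeddings come from hypothetical pre-trained encoders and are
passed in as plain vectors; the temperature value is illustrative.
"""
import math
from typing import Dict, List, Tuple


def prompts(labels: List[str]) -> List[str]:
    return [f"a photo of a {label}" for label in labels]


def normalize(v: List[float]) -> List[float]:
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def zero_shot_classify(
    image_emb: List[float],
    text_embs: Dict[str, List[float]],
    temperature: float = 0.01,
) -> Tuple[str, Dict[str, float]]:
    """Return the best label and a softmax over scaled cosine similarities."""
    img = normalize(image_emb)
    logits = {
        label: sum(a * b for a, b in zip(img, normalize(t))) / temperature
        for label, t in text_embs.items()
    }
    m = max(logits.values())  # subtract the max for numerical stability
    exps = {k: math.exp(v - m) for k, v in logits.items()}
    total = sum(exps.values())
    probs = {k: v / total for k, v in exps.items()}
    return max(probs, key=probs.get), probs
```

No classifier is trained for the target dataset. Changing the label set only changes which prompts are embedded.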

CoAtNet: Marrying Convolution and Attention for All Data Sizes

The researchers in this article conduct a thorough examination of the properties of convolutions and transformers, resulting in a principled approach to combining them into a new family of models dubbed CoAtNet. Extensive experiments demonstrate that CoAtNet combines the advantages of ConvNets and Transformers, achieving state-of-the-art performance across a range of data sizes and compute budgets. Note that the paper focuses on ImageNet classification for model development; however, the researchers believe their approach applies to a broader range of tasks, such as object detection and semantic segmentation.

This paper is ranked #1 on Image Classification on ImageNet (using extra training data).

SwinIR: Image Restoration Using Swin Transformer

The authors of this article propose SwinIR, an image restoration model based on the Swin Transformer. The model comprises three modules: shallow feature extraction, deep feature extraction, and high-quality image reconstruction. For deep feature extraction, the researchers employ a stack of residual Swin Transformer blocks (RSTB), each consisting of several Swin Transformer layers, a convolution layer, and a residual connection.

This research article is ranked #1 on Image Super-Resolution on Manga109 – 4x upscaling.
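The data flow through SwinIR's three modules and its residual connections can be sketched structurally. Feature maps are stood in for by flat lists of floats, and every layer is a caller-supplied function, so no Swin Transformer layer or convolution is actually implemented. The final deep-stage convolution and the addition of shallow features before reconstruction follow the SwinIR paper; all function names are hypothetical.

```python
"""Structural sketch of SwinIR's three stages and residual connections.

Feature maps are flat lists of floats and every layer is a supplied callable;
names are hypothetical and no real layers are implemented.
"""
from typing import Callable, List, Sequence

Features = List[float]
Layer = Callable[[Features], Features]


def add(x: Features, y: Features) -> Features:
    return [a + b for a, b in zip(x, y)]


def rstb(x: Features, swin_layers: Sequence[Layer], conv: Layer) -> Features:
    """Residual Swin Transformer block: Swin layers, a conv, then a skip."""
    h = x
    for layer in swin_layers:
        h = layer(h)
    return add(conv(h), x)


def swinir_forward(
    img: Features,
    shallow_conv: Layer,
    blocks: Sequence[Sequence[Layer]],
    block_convs: Sequence[Layer],
    body_conv: Layer,
    reconstruct: Layer,
) -> Features:
    f0 = shallow_conv(img)  # 1) shallow feature extraction
    h = f0
    for layers, conv in zip(blocks, block_convs):  # 2) deep feature extraction
        h = rstb(h, layers, conv)
    f_deep = body_conv(h)  # convolution closing the deep stage
    return reconstruct(add(f0, f_deep))  # 3) reconstruction from shallow + deep
```

The skip inside each RSTB and the long skip from shallow features both let the deep stage learn a residual, not the full signal.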


© Analytics India Magazine Pvt Ltd & AIM Media House LLC 2024



🔥Highlighting the top ML papers every week.

dair-ai/ML-Papers-of-the-Week

ML Papers of the Week

Subscribe to our newsletter to get a weekly list of top ML papers in your inbox.

At DAIR.AI we ❤️ reading ML papers so we've created this repo to highlight the top ML papers of every week.

Here is the weekly series:

- Top ML Papers of the Week (April 29 - May 5)

- Top ML Papers of the Week (April 22 - April 28)

- Top ML Papers of the Week (April 15 - April 21)

- Top ML Papers of the Week (April 8 - April 14)

- Top ML Papers of the Week (April 1 - April 7)

- Top ML Papers of the Week (March 26 - March 31)

- Top ML Papers of the Week (March 18 - March 25)

- Top ML Papers of the Week (March 11 - March 17)

- Top ML Papers of the Week (March 4 - March 10)

- Top ML Papers of the Week (February 26 - March 3)

- Top ML Papers of the Week (February 19 - February 25)

- Top ML Papers of the Week (February 12 - February 18)

- Top ML Papers of the Week (February 5 - February 11)

- Top ML Papers of the Week (January 29 - February 4)

- Top ML Papers of the Week (January 22 - January 28)

- Top ML Papers of the Week (January 15 - January 21)

- Top ML Papers of the Week (January 8 - January 14)

- Top ML Papers of the Week (January 1 - January 7)

- Top ML Papers of the Week (December 24 - December 31)

- Top ML Papers of the Week (December 18 - December 24)

- Top ML Papers of the Week (December 11 - December 17)

- Top ML Papers of the Week (December 4 - December 10)

- Top ML Papers of the Week (November 27 - December 3)

- Top ML Papers of the Week (November 20 - November 26)

- Top ML Papers of the Week (November 13 - November 19)

- Top ML Papers of the Week (November 6 - November 12)

- Top ML Papers of the Week (October 30 - November 5)

- Top ML Papers of the Week (October 23 - October 29)

- Top ML Papers of the Week (October 16 - October 22)

- Top ML Papers of the Week (October 9 - October 15)

- Top ML Papers of the Week (October 2 - October 8)

- Top ML Papers of the Week (September 25 - October 1)

- Top ML Papers of the Week (September 18 - September 24)

- Top ML Papers of the Week (September 11 - September 17)

- Top ML Papers of the Week (September 4 - September 10)

- Top ML Papers of the Week (August 28 - September 3)

- Top ML Papers of the Week (August 21 - August 27)

- Top ML Papers of the Week (August 14 - August 20)

- Top ML Papers of the Week (August 7 - August 13)

- Top ML Papers of the Week (July 31 - August 6)

- Top ML Papers of the Week (July 24 - July 30)

- Top ML Papers of the Week (July 17 - July 23)

- Top ML Papers of the Week (July 10 - July 16)

- Top ML Papers of the Week (July 3 - July 9)

- Top ML Papers of the Week (June 26 - July 2)

- Top ML Papers of the Week (June 19 - June 25)

- Top ML Papers of the Week (June 12 - June 18)

- Top ML Papers of the Week (June 5 - June 11)

- Top ML Papers of the Week (May 29 - June 4)

- Top ML Papers of the Week (May 22 - 28)

- Top ML Papers of the Week (May 15 - 21)

- Top ML Papers of the Week (May 8 - 14)

- Top ML Papers of the Week (May 1-7)

- Top ML Papers of the Week (April 24 - April 30)

- Top ML Papers of the Week (April 17 - April 23)

- Top ML Papers of the Week (April 10 - April 16)

- Top ML Papers of the Week (April 3 - April 9)

- Top ML Papers of the Week (Mar 27 - April 2)

- Top ML Papers of the Week (Mar 20-Mar 26)

- Top ML Papers of the Week (Mar 13-Mar 19)

- Top ML Papers of the Week (Mar 6-Mar 12)

- Top ML Papers of the Week (Feb 27-Mar 5)

- Top ML Papers of the Week (Feb 20-26)

- Top ML Papers of the Week (Feb 13 - 19)

- Top ML Papers of the Week (Feb 6 - 12)

- Top ML Papers of the Week (Jan 30-Feb 5)

- Top ML Papers of the Week (Jan 23-29)

- Top ML Papers of the Week (Jan 16-22)

- Top ML Papers of the Week (Jan 9-15)

- Top ML Papers of the Week (Jan 1-8)

Follow us on Twitter

Join our Discord


We use a combination of AI-powered tools, analytics, and human curation to build the lists of papers.

Subscribe to our NLP Newsletter to stay on top of ML research and trends.



Machine Intelligence

Google is at the forefront of innovation in Machine Intelligence, with active research exploring virtually all aspects of machine learning, including deep learning and more classical algorithms. Exploring theory as well as application, much of our work on language, speech, translation, visual processing, ranking and prediction relies on Machine Intelligence. In all of those tasks and many others, we gather large volumes of direct or indirect evidence of relationships of interest, applying learning algorithms to understand and generalize.

Machine Intelligence at Google raises deep scientific and engineering challenges, allowing us to contribute to the broader academic research community through technical talks and publications in major conferences and journals. Contrary to much of current theory and practice, the statistics of the data we observe shift rapidly, the features of interest change as well, and the volume of data often requires enormous computational capacity. When learning systems are placed at the core of interactive services in a fast-changing and sometimes adversarial environment, techniques including deep learning and statistical models need to be combined with ideas from control and game theory.


Some of our teams.

Algorithms & optimization

Applied science

Climate and sustainability

Graph mining

Impact-Driven Research, Innovation and Moonshots

Learning theory

Market algorithms

Operations research

Security, privacy and abuse

System performance


Artificial Intelligence And Machine Learning


A not-for-profit organization, IEEE is the world's largest technical professional organization dedicated to advancing technology for the benefit of humanity. © Copyright 2024 IEEE - All rights reserved. Use of this web site signifies your agreement to the terms and conditions.

Explore advancements in Machine Learning



Generative Modeling with Phase Stochastic Bridges

- Rephrasing the Web: A Recipe for Compute and Data-Efficient Language Modeling
- Conformal Prediction via Regression-as-Classification
- Guiding Instruction-Based Image Editing via Multimodal Large Language Models
- Pseudo-Generalized Dynamic View Synthesis from a Video
- ReLU Strikes Back: Exploiting Activation Sparsity in Large Language Models
- Compressing LLMs: The Truth Is Rarely Pure and Never Simple
- How Far Are We from Intelligent Visual Deductive Reasoning?
- Large Language Models as Generalizable Policies for Embodied Tasks
- Manifold Diffusion Fields
- MOFI: Learning Image Representation from Noisy Entity Annotated Images
- Poly-View Contrastive Learning
- When Can Transformers Reason with Abstract Symbols?
- Direct2.5: Diverse 3D Content Creation via Multi-View 2.5D Diffusion
- JointNet: Extending Text-to-Image Diffusion for Dense Distribution Modeling
- Label-Efficient Sleep Staging Using Transformers Pre-Trained with Position Prediction
- CatLIP: CLIP-Level Visual Recognition Accuracy with 2.7× Faster Pre-Training on Web-Scale Image-Text Data
- HUMMUSS: Human Motion Understanding Using State Space Models
- Matryoshka Diffusion Models
- OpenELM: An Efficient Language Model Family with Open Training and Inference Framework
- Talaria: Interactively Optimizing Machine Learning Models for Efficient Inference
- Think While You Write: Hypothesis Verification Promotes Faithful Knowledge-to-Text Generation
- The Slingshot Effect: A Late-Stage Optimization Anomaly in Adam-Family of Optimization Methods
- Hindsight Priors for Reward Learning from Human Preferences
- FedHyper: A Universal and Robust Learning Rate Scheduler for Federated Learning with Hypergradient Descent
- Frequency-Aware Masked Autoencoders for Multimodal Pretraining on Biosignals
- Hierarchical and Dynamic Prompt Compression for Efficient Zero-Shot API Usage
- Overcoming the Pitfalls of Vision-Language Model Finetuning for OOD Generalization
- Vanishing Gradients in Reinforcement Finetuning of Language Models
- Data Filtering Networks
- Model Compression in Practice: Lessons Learned from Practitioners Creating On-Device Machine Learning Experiences
- MobileCLIP: Fast Image-Text Models through Multi-Modal Reinforced Training
- Streaming Anchor Loss: Augmenting Supervision with Temporal Significance
- Towards a World-English Language Model
- Efficient-3DiM: Learning a Generalizable Single Image Novel View Synthesizer in One Day
- A Multi-Signal Large Language Model for Device-Directed Speech Detection
- TiC-CLIP: Continual Training of CLIP Models
- MM1: Methods, Analysis & Insights from Multimodal LLM Pre-Training
- Enhancing Paragraph Generation with a Latent Language Diffusion Model
- Construction of Paired Knowledge Graph - Text Datasets Informed by Cyclic Evaluation
- Personalizing Health and Fitness with Hybrid Modeling
- Corpus Synthesis for Zero-Shot ASR Domain Adaptation Using Large Language Models
- MotionPrint: Ready-to-Use, Device-Agnostic, and Location-Invariant Motion Activity Models
- Randomized Algorithms for Precise Measurement of Differentially-Private, Personalized Recommendations
- VeCLIP: Improving CLIP Training via Visual-Enriched Captions
- AXNav: Replaying Accessibility Tests from Natural Language
- Merge Vision Foundation Models via Multi-Task Distillation
- Moonwalk: Advancing Gait-Based User Recognition on Wearable Devices with Metric Learning
- Vision-Based Hand Gesture Customization from a Single Demonstration
- Humanizing Word Error Rate for ASR Transcript Readability and Accessibility
- Human Following in Mobile Platforms with Person Re-Identification
- Privacy-Preserving Quantile Treatment Effect Estimation for Randomized Controlled Trials
- SynthDST: Synthetic Data Is All You Need for Few-Shot Dialog State Tracking
- What Can CLIP Learn from Task-Specific Experts?
- Multichannel Voice Trigger Detection Based on Transform-Average-Concatenate
- Keyframer: Empowering Animation Design Using Large Language Models
- Resource-Constrained Stereo Singing Voice Cancellation
- Efficient ConvBN Blocks for Transfer Learning and Beyond
- The Entity-Deduction Arena: A Playground for Probing the Conversational Reasoning and Planning Capabilities of LLMs
- Differentially Private Heavy Hitter Detection Using Federated Analytics
- Scalable Pre-Training of Large Autoregressive Image Models
- Acoustic Model Fusion for End-to-End Speech Recognition
- Co-ML: Collaborative Machine Learning Model Building for Developing Dataset Design Practices
- Flexible Keyword Spotting Based on Homogeneous Audio-Text Embedding
- Investigating Salient Representations and Label Variance Modeling in Dimensional Speech Emotion Analysis
- Large-Scale Training of Foundation Models for Wearable Biosignals
- User-Level Differentially Private Stochastic Convex Optimization: Efficient Algorithms with Optimal Rates
- Bin Prediction for Better Conformal Prediction
- FastSR-NeRF: Improving NeRF Efficiency on Consumer Devices with a Simple Super-Resolution Pipeline
- Hybrid Model Learning for Cardiovascular Biomarkers Inference
- Improving Vision-Inspired Keyword Spotting Using a Streaming Conformer Encoder with Input-Dependent Dynamic Depth
- Simulation-Based Inference for Cardiovascular Models
- Unbalanced Low-Rank Optimal Transport Solvers
- Personalization of CTC-Based End-to-End Speech Recognition Using Pronunciation-Driven Subword Tokenization
- Deploying Attention-Based Vision Transformers to Apple Neural Engine
- Ferret: Refer and Ground Anything Anywhere at Any Granularity
- ProTIP: Progressive Tool Retrieval Improves Planning
- Transformers Learn through Gradual Rank Increase
- Importance of Smoothness Induced by Optimizers in FL4ASR: Towards Understanding Federated Learning for End-to-End ASR
- Advancing Speech Accessibility with Personal Voice
- Bootstrap Your Own Variance
- Context Tuning for Retrieval Augmented Generation
- DataComp: In Search of the Next Generation of Multimodal Datasets
- Leveraging Large Language Models for Exploiting ASR Uncertainty
- Modality Dropout for Multimodal Device Directed Speech Detection Using Verbal and Non-Verbal Features
- Training Large-Vocabulary Neural Language Model by Private Federated Learning for Resource-Constrained Devices
- LiDAR: Sensing Linear Probing Performance in Joint Embedding SSL Architectures
- DeepPCR: Parallelizing Sequential Operations in Neural Networks
- HUGS: Human Gaussian Splats
- Multimodal Data and Resource Efficient Device-Directed Speech Detection with Large Foundation Models
- Controllable Music Production with Diffusion Models and Guidance Gradients
- Fast Optimal Locally Private Mean Estimation via Random Projections
- Generating Molecular Conformers with Manifold Diffusion Fields
- How to Scale Your EMA
- Pre-Trained Language Models Do Not Help Auto-Regressive Text-to-Image Generation
- 4M: Massively Multimodal Masked Modeling
- Adaptive Weight Decay
- FLEEK: Factual Error Detection and Correction with Evidence Retrieved from External Knowledge
- One Wide Feedforward Is All You Need
- Agnostically Learning Single-Index Models Using Omnipredictors
- Characterizing Omniprediction via Multicalibration
- Federated Learning for Speech Recognition: Revisiting Current Trends towards Large-Scale ASR
- Increasing Coverage and Precision of Textual Information in Multilingual Knowledge Graphs
- SAM-CLIP: Merging Vision Foundation Models towards Semantic and Spatial Understanding
- What Algorithms Can Transformers Learn? A Study in Length Generalization
- MARRS: Multimodal Reference Resolution System
- Automating Behavioral Testing in Machine Translation
- Diffusion Models as Masked Audio-Video Learners
- Improved DDIM Sampling with Moment Matching Gaussian Mixtures
- PLANNER: Generating Diversified Paragraph via Latent Language Diffusion Model
- EELBERT: Tiny Models through Dynamic Embeddings
- SeMAnD: Self-Supervised Anomaly Detection in Multimodal Geospatial Datasets
- STEER: Semantic Turn Extension-Expansion Recognition for Voice Assistants
- Identifying Controversial Pairs in Item-to-Item Recommendations
- DELPHI: Data for Evaluating LLMs' Performance in Handling Controversial Issues
- Towards Real-World Streaming Speech Translation for Code-Switched Speech
- LivePose: Online 3D Reconstruction from Monocular Video with Dynamic Camera Poses
- Never-Ending Learning of User Interfaces
- Slower Respiration Rate Is Associated with Higher Self-Reported Well-Being after Wellness Training
- When Does Optimizing a Proper Loss Yield Calibration?
- Single-Stage Diffusion NeRF: A Unified Approach to 3D Generation and Reconstruction
- FastViT: A Fast Hybrid Vision Transformer Using Structural Reparameterization
- HyperDiffusion: Generating Implicit Neural Fields with Weight-Space Diffusion
- NeILF++: Inter-Reflectable Light Fields for Geometry and Material Estimation
- Gender Bias in LLMs
- Reinforce Data, Multiply Impact: Improved Model Accuracy and Robustness with Dataset Reinforcement
- Self-Supervised Object Goal Navigation with In-Situ Finetuning
- All about Sample-Size Calculations for A/B Testing: Novel Extensions and Practical Guide
- Intelligent Assistant Language Understanding On-Device
- Rapid and Scalable Bayesian AB Testing
- Consistent Collaborative Filtering via Tensor Decomposition
- Dataset and Network Introspection Toolkit (DNIKit)
- FineRecon: Depth-Aware Feed-Forward Network for Detailed 3D Reconstruction
- Improving the Quality of Neural TTS Using Long-Form Content and Multi-Speaker Multi-Style Modeling
- Voice Trigger System for Siri
- Conformalization of Sparse Generalized Linear Models
- DUET: 2D Structured and Equivariant Representations
- PDP: Parameter-Free Differentiable Pruning Is All You Need
- Population Expansion for Training Language Models with Private Federated Learning
- Resolving the Mixing Time of the Langevin Algorithm to Its Stationary Distribution for Log-Concave Sampling
- The Role of Entropy and Reconstruction for Multi-View Self-Supervised Learning
- UPSCALE: Unconstrained Channel Pruning
- Learning Iconic Scenes with Differential Privacy
- NerfDiff: Single-Image View Synthesis with NeRF-Guided Distillation from 3D-Aware Diffusion
- BOOT: Data-Free Distillation of Denoising Diffusion Models with Bootstrapping
- Referring to Screen Texts with Voice Assistants
- Towards Multimodal Multitask Scene Understanding Models for Indoor Mobile Agents
- 5IDER: Unified Query Rewriting for Steering, Intent Carryover, Disfluencies, Entity Carryover and Repair
- Monge, Bregman and Occam: Interpretable Optimal Transport in High-Dimensions with Feature-Sparse Maps
- Private Online Prediction from Experts: Separations and Faster Rates
- Spatial LibriSpeech: An Augmented Dataset for Spatial Audio Learning
- Stabilizing Transformer Training by Preventing Attention Entropy Collapse
- The Monge Gap: A Regularizer to Learn All Transport Maps
- Cross-Lingual Knowledge Transfer and Iterative Pseudo-Labeling for Low-Resource Speech Recognition with Transducers
- Symphony: Composing Interactive Interfaces for Machine Learning
- A Unifying Theory of Distance from Calibration
- Approximate Nearest Neighbour Phrase Mining for Contextual Speech Recognition
- Latent Phrase Matching for Dysarthric Speech
- Matching Latent Encoding for Audio-Text Based Keyword Spotting
- Near-Optimal Algorithms for Private Online Optimization in the Realizable Regime
- RoomDreamer: Text-Driven 3D Indoor Scene Synthesis with Coherent Geometry and Texture
- Semi-Supervised and Long-Tailed Object Detection with CascadeMatch
- Less Is More: A Unified Architecture for Device-Directed Speech Detection with Multiple Invocation Types
- Collaborative Machine Learning Model Building with Families Using Co-ML
- Efficient Multimodal Neural Networks for Trigger-Less Voice Assistants
- Fast Class-Agnostic Salient Object Segmentation
- Application-Agnostic Language Modeling for On-Device ASR
- Actionable Data Insights for Machine Learning
- Growing and Serving Large Open-Domain Knowledge Graphs
- Robustness in Multimodal Learning under Train-Test Modality Mismatch
- Learning Language-Specific Layers for Multilingual Machine Translation
- Modeling Spoken Information Queries for Virtual Assistants: Open Problems, Challenges and Opportunities
- Learning to Detect Novel and Fine-Grained Acoustic Sequences Using Pretrained Audio Representations
- PointConvFormer: Revenge of the Point-Based Convolution
- State Spaces Aren’t Enough: Machine Translation Needs Attention
- Improved Speech Recognition for People Who Stutter
- AutoFocusFormer: Image Segmentation off the Grid
- Joint Speech Transcription and Translation: Pseudo-Labeling with Out-of-Distribution Data
- From Robustness to Privacy and Back
- Generalization on the Unseen, Logic Reasoning and Degree Curriculum
- Self-Supervised Temporal Analysis of Spatiotemporal Data
- Considerations for Distribution Shift Robustness in Health
- f-DM: A Multi-Stage Diffusion Model via Progressive Signal Transformation
- High-Throughput Vector Similarity Search in Knowledge Graphs
- Naturalistic Head Motion Generation from Speech
- On the Role of Lip Articulation in Visual Speech Perception
- FaceLit: Neural 3D Relightable Faces
- Angler: Helping Machine Translation Practitioners Prioritize Model Improvements
- Feedback Effect in User Interaction with Intelligent Assistants: Delayed Engagement, Adaption and Drop-Out
- Text Is All You Need: Personalizing ASR Models Using Controllable Speech Synthesis
- Pointersect: Neural Rendering with Cloud-Ray Intersection
- Continuous Pseudo-Labeling from the Start
- MobileOne: An Improved One Millisecond Mobile Backbone
- Neural Transducer Training: Reduced Memory Consumption with Sample-Wise Computation
- TRACT: Denoising Diffusion Models with Transitive Closure Time-Distillation
- Variable Attention Masking for Configurable Transformer Transducer Speech Recognition
- From User Perceptions to Technical Improvement: Enabling People Who Stutter to Better Use Speech Recognition
- I See What You Hear: A Vision-Inspired Method to Localize Words
- More Speaking or More Speakers?
- Pre-Trained Model Representations and Their Robustness against Noise for Speech Emotion Analysis
- Improvements to Embedding-Matching Acoustic-to-Word ASR Using Multiple-Hypothesis Pronunciation-Based Embeddings
- MAST: Masked Augmentation Subspace Training for Generalizable Self-Supervised Priors
- PAEDID: Patch Autoencoder-Based Deep Image Decomposition for Unsupervised Anomaly Detection
- RGI: Robust GAN-Inversion for Mask-Free Image Inpainting and Unsupervised Pixel-Wise Anomaly Detection
- Robust Hybrid Learning with Expert Augmentation
- Self Supervision Does Not Help Natural Language Supervision at Scale
- Audio-to-Intent Using Acoustic-Textual Subword Representations from End-to-End ASR
- FastFill: Efficient Compatible Model Update
- Loss Minimization through the Lens of Outcome Indistinguishability
- MobileBrick: Building LEGO for 3D Reconstruction on Mobile Devices
- Diffusion Probabilistic Fields
- HEiMDaL: Highly Efficient Method for Detection and Localization of Wake-Words
- Designing Data: Proactive Data Collection and Iteration for Machine Learning
- Improving Human Annotation Effectiveness for Fact Collection by Identifying the Most Relevant Answers
- RangeAugment: Efficient Online Augmentation with Range Learning
- Languages You Know Influence Those You Learn: Impact of Language Characteristics on Multi-Lingual Text-to-Text Transfer
- Active Learning with Expected Error Reduction
- Stable Diffusion with Core ML on Apple Silicon
- Supervised Training of Conditional Monge Maps
- Modeling Heart Rate Response to Exercise with Wearable Data
- Shift-Curvature, SGD, and Generalization
- DeSTSeg: Segmentation Guided Denoising Student-Teacher for Anomaly Detection
- Symbol Guided Hindsight Priors for Reward Learning from Human Preferences
- Beyond CAGE: Investigating Generalization of Learned Autonomous Network Defense Policies
- Rewards Encoding Environment Dynamics Improves Preference-Based Reinforcement Learning
- Homomorphic Self-Supervised Learning
- Continuous Soft Pseudo-Labeling in ASR
- Elastic Weight Consolidation Improves the Robustness of Self-Supervised Learning Methods under Transfer
- Mean Estimation with User-Level Privacy under Data Heterogeneity
- Learning to Break the Loop: Analyzing and Mitigating Repetitions for Neural Text Generation
- Learning to Reason with Neural Networks: Generalization, Unseen Data and Boolean Measures
- Subspace Recovery from Heterogeneous Data with Non-Isotropic Noise
- A Large-Scale Observational Study of the Causal Effects of a Behavioral Health Nudge
- Improving Generalization with Physical Equations
- MAEEG: Masked Auto-Encoder for EEG Representation Learning
- MBW: Multi-View Bootstrapping in the Wild
- Statistical Deconvolution for Inference of Infection Time Series
- APE: Aligning Pretrained Encoders to Quickly Learn Aligned Multimodal Representations
- Emphasis Control for Parallel Neural TTS
- The Slingshot Mechanism: An Empirical Study of Adaptive Optimizers and the Grokking Phenomenon
- A Treatise on FST Lattice Based MMI Training
- Non-Autoregressive Neural Machine Translation: A Call for Clarity
- Prompting for a Conversation: How to Control a Dialog Model
- Fusion-ID: A Photoplethysmography and Motion Sensor Fusion Biometric Authenticator with Few-Shot On-Boarding
- Latent Temporal Flows for Multivariate Analysis of Wearables Data
- The Calibration Generalization Gap
- SPIN: An Empirical Evaluation on Sharing Parameters of Isotropic Networks
- Learning Bias-Reduced Word Embeddings Using Dictionary Definitions
- Low-Rank Optimal Transport: Approximation, Statistics and Debiasing
- Safe Real-World Reinforcement Learning for Mobile Agent Obstacle Avoidance
- Speech Emotion: Investigating Model Representations, Multi-Task Learning and Knowledge Distillation
- Texturify: Generating Textures on 3D Shape Surfaces
- 3D Parametric Room Representation with RoomPlan
- Generative Multiplane Images: Making a 2D GAN 3D-Aware
- FLAIR: Federated Learning Annotated Image Repository
- Privacy of Noisy Stochastic Gradient Descent: More Iterations without More Privacy Loss
- Two-Layer Bandit Optimization for Recommendations
- GAUDI: A Neural Architect for Immersive 3D Scene Generation
- PhysioMTL: Personalizing Physiological Patterns Using Optimal Transport Multi-Task Regression
- Toward Supporting Quality Alt Text in Computing Publications
- Providing Insights for Open-Response Surveys via End-to-End Context-Aware Clustering
- Layer-Wise Data-Free CNN Compression
- RGB-X Classification for Electronics Sorting
- ASpanFormer: Detector-Free Image Matching with Adaptive Span Transformer
- Mel Spectrogram Inversion with Stable Pitch
- Multi-Objective Hyper-Parameter Optimization of Behavioral Song Embeddings
- Improving Voice Trigger Detection with Metric Learning
- NeILF: Neural Incident Light Field for Material and Lighting Estimation
- Combining Compressions for Multiplicative Size Scaling on Natural Language Tasks
- Integrating Categorical Features in End-to-End ASR
- CVNets: High Performance Library for Computer Vision
- Space-Efficient Representation of Entity-Centric Query Language Models
- A Dense Material Segmentation Dataset for Indoor and Outdoor Scene Parsing
- Benign, Tempered, or Catastrophic: A Taxonomy of Overfitting
- FORML: Learning to Reweight Data for Fairness
- Regularized Training of Nearest Neighbor Language Models
- Minimax Demographic Group Fairness in Federated Learning
- NeuMan: Neural Human Radiance Field from a Single Video
- Device-Directed Speech Detection: Regularization via Distillation for Weakly-Supervised Models
- Vocal Effort Modeling in Neural TTS for Improving the Intelligibility of Synthetic Speech in Noise
- Reachability Embeddings: Self-Supervised Representation Learning from Spatiotemporal Motion Trajectories for Multimodal Geospatial Computer Vision
- Dynamic Memory for Interpretable Sequential Optimization
- Artonomous: Introducing Middle School Students to Reinforcement Learning through Virtual Robotics
- Efficient Representation Learning via Adaptive Context Pooling
- Leveraging Entity Representations, Dense-Sparse Hybrids, and Fusion-in-Decoder for Cross-Lingual Question Answering
- Optimal Algorithms for Mean Estimation under Local Differential Privacy
- Position Prediction as an Effective Pre-Training Strategy
- Private Frequency Estimation via Projective Geometry
- Self-Conditioning Pre-Trained Language Models
- Style Equalization: Unsupervised Learning of Controllable Generative Sequence Models
- Critical Regularizations for Neural Surface Reconstruction in the Wild
- A Multi-Task Neural Architecture for On-Device Scene Analysis
- Deploying Transformers on the Apple Neural Engine
- Neural Face Video Compression Using Multiple Views
- Efficient Multi-View Stereo via Attention-Driven 2D Convolutions
- Robust Joint Shape and Pose Optimization for Few-View Object Reconstruction
- Forward Compatible Training for Large-Scale Embedding Retrieval Systems
- Bilingual End-to-End ASR with Byte-Level Subwords
- End-to-End Speech Translation for Code Switched Speech
- Streaming On-Device Detection of Device Directed Speech from Voice and Touch-Based Invocation
- Training a Tokenizer for Free with Private Federated Learning
- Utilizing Imperfect Synthetic Data to Improve Speech Recognition
- Data Incubation - Synthesizing Missing Data for Handwriting Recognition
- A Platform for Continuous Construction and Serving of Knowledge at Scale
- Neo: Generalizing Confusion Matrix Visualization to Hierarchical and Multi-Output Labels
- Low-Resource Adaptation of Open-Domain Generative Chatbots
- Differentiable K-Means Clustering Layer for Neural Network Compression
- Enabling Hand Gesture Customization on Wrist-Worn Devices
- Learning Compressed Embeddings for On-Device Inference
- Neural Fisher Kernel: Low-Rank Approximation and Knowledge Distillation
- Towards Complete Icon Labeling in Mobile Applications
- Understanding Screen Relationships from Screenshots of Smartphone Applications
- MobileViT: Light-Weight, General-Purpose, and Mobile-Friendly Vision Transformer
- Synthetic Defect Generation for Display Front-of-Screen Quality Inspection: A Survey
- Differential Secrecy for Distributed Data and Applications to Robust Differentially Secure Vector Summation
- Information Gain Propagation: A New Way to Graph Active Learning with Soft Labels
- Can Open Domain Question Answering Models Answer Visual Knowledge Questions?
- Non-Verbal Sound Detection for Disordered Speech
- Hierarchical Prosody Modeling and Control in Non-Autoregressive Parallel Neural TTS
- Element Level Differential Privacy: The Right Granularity of Privacy
- Learning Spatiotemporal Occupancy Grid Maps for Lifelong Navigation in Dynamic Scenes
- Collaborative Filtering via Tensor Decomposition
- Modeling the Impact of User Mobility on COVID-19 Infection Rates over Time
- Fast and Explicit Neural View Synthesis
- Federated Evaluation and Tuning for On-Device Personalization: System Design & Applications
- Lyric Document Embeddings for Music Tagging
- Acoustic Neighbor Embeddings
- Model Stability with Continuous Data Updates
- Reconstructing Training Data from Diverse ML Models by Ensemble Inversion
- Fair SA: Sensitivity Analysis for Fairness in Face Recognition
- Learning Invariant Representations with Missing Data
- Interpretable Adaptive Optimization
- Robust Robotic Control from Pixels Using Contrastive Recurrent State-Space Models
- Challenges of Adversarial Image Augmentations
- Self-Supervised Semi-Supervised Learning for Data Labeling and Quality Evaluation
- BatchQuant: Quantized-for-All Architecture Search with Robust Quantizer
- High Fidelity 3D Reconstructions with Limited Physical Views
- ARKitScenes - A Diverse Real-World Dataset for 3D Indoor Scene Understanding Using Mobile RGB-D Data
- Do Self-Supervised and Supervised Methods Learn Similar Visual Representations?
- Enforcing Fairness in Private Federated Learning via the Modified Method of Differential Multipliers
- Individual Privacy Accounting via a Renyi Filter
- Probabilistic Attention for Interactive Segmentation
- RIM: Reliable Influence-Based Active Learning on Graphs
- Stochastic Contrastive Learning
- It’s Complicated: Characterizing the Time-Varying Relationship between Cell Phone Mobility and COVID-19 Spread in the US
- Plan-then-Generate: Controlled Data-to-Text
- Randomized Controlled Trials without Data Retention
- Interdependent Variables: Remotely Designing Tactile Graphics for an Accessible Workflow
- Breiman's Two Cultures: You Don't Have to Choose Sides
- Cross-Domain Data Integration for Entity Disambiguation in Biomedical Text
- Evaluating the Fairness of Fine-Tuning Strategies in Self-Supervised Learning
- Learning Compressible Subspaces for Adaptive Network Compression at Inference Time
- MMIU: Dataset for Visual Intent Understanding in Multimodal Assistants
- On-Device Neural Speech Synthesis
- On-Device Panoptic Segmentation for Camera Using Transformers
- Entity-Based Knowledge Conflicts in Question Answering
- Finding Experts in Transformer Models
- DeepPRO: Deep Partial Point Cloud Registration of Objects
- Using Pause Information for More Accurate Entity Recognition
- Conditional Generation of Synthetic Geospatial Images from Pixel-Level and Feature-Level Inputs
- Multi-Task Learning with Cross Attention for Keyword Spotting
- Screen Parsing: Towards Reverse Engineering of UI Models from Screenshots
- Self-Supervised Learning of LiDAR Segmentation for Autonomous Indoor Navigation
- A Survey on Privacy from Statistical, Information and Estimation-Theoretic Views
- Improving Neural Network Subspaces
- Combining Speakers of Multiple Languages to Improve Quality of Neural Voices
- Audiovisual Speech Synthesis Using Tacotron2
- User-Initiated Repetition-Based Recovery in Multi-Utterance Dialogue Systems
- DEXTER: Deep Encoding of External Knowledge for Named Entity Recognition in Virtual Assistants
- Managing ML Pipelines: Feature Stores and the Coming Wave of Embedding Ecosystems
- Smooth Sequential Optimization with Delayed Feedback
- Learning to Generate Radiance Fields of Indoor Scenes
- Estimating Respiratory Rate from Breath Audio Obtained through Wearable Microphones
- Online Automatic Speech Recognition with Listen, Attend and Spell Model
- Subject-Aware Contrastive Learning for Biosignals
- Hypersim: A Photorealistic Synthetic Dataset for Holistic Indoor Scene Understanding
- Model-Based Metrics: Sample-Efficient Estimates of Predictive Model Subpopulation Performance
- RetrievalFuse: Neural 3D Scene Reconstruction with a Database
- Joint Learning of Portrait Intrinsic Decomposition and Relighting
- Non-Parametric Differentially Private Confidence Intervals for the Median
- Recognizing People in Photos through Private On-Device Machine Learning
- Unconstrained Scene Generation with Locally Conditioned Radiance Fields
- Trinity: A No-Code AI Platform for Complex Spatial Datasets
- A Simple and Nearly Optimal Analysis of Privacy Amplification by Shuffling
- When Is Memorization of Irrelevant Training Data Necessary for High-Accuracy Learning?
- Implicit Acceleration and Feature Learning in Infinitely Wide Neural Networks with Bottlenecks
- A Discriminative Entity Aware Language Model for Virtual Assistants
- Analysis and Tuning of a Voice Assistant System for Dysfluent Speech
- Implicit Greedy Rank Learning in Autoencoders via Overparameterized Linear Networks
- Learning Neural Network Subspaces
- Lossless Compression of Efficient Private Local Randomizers
- Private Adaptive Gradient Methods for Convex Optimization
- Private Stochastic Convex Optimization: Optimal Rates in ℓ1 Geometry
- Streaming Transformer for Hardware Efficient Voice Trigger Detection and False Trigger Mitigation
- Tensor Programs IIb: Architectural Universality of Neural Tangent Kernel Training Dynamics
- Uncertainty Weighted Actor-Critic for Offline Reinforcement Learning
- Spatio-Temporal Context for Action Detection
- Bootleg: Self-Supervision for Named Entity Disambiguation
- Instance-Level Task Parameters: A Robust Multi-Task Weighting Framework
- MorphGAN: Controllable One-Shot Face Synthesis
- Evaluating Entity Disambiguation and the Role of Popularity in Retrieval-Based NLP
- HDR Environment Map Estimation for Real-Time Augmented Reality
- Extracurricular Learning: Knowledge Transfer beyond Empirical Distribution
- Learning to Optimize Black-Box Evaluation Metrics
- On the Role of Visual Cues in Audiovisual Speech Enhancement
- An Attention Free Transformer
- CREAD: Combined Resolution of Ellipses and Anaphora in Dialogues
- Dynamic Curriculum Learning via Data Parameters for Noise Robust Keyword Spotting
- Error-Driven Pruning of Language Models for Virtual Assistants
- Knowledge Transfer for Efficient On-Device False Trigger Mitigation
- Multimodal Punctuation Prediction with Contextual Dropout
- Noise-Robust Named Entity Understanding for Virtual Assistants
- On the Transferability of Minimal Prediction Preserving Inputs in Question Answering
- Open-Domain Question Answering Goes Conversational via Question Rewriting
- Optimize What Matters: Training DNN-HMM Keyword Spotting Model Using End Metric
- Progressive Voice Trigger Detection: Accuracy vs Latency
- SapAugment: Learning a Sample Adaptive Policy for Data Augmentation
- Making Mobile Applications Accessible with Machine Learning
- Video Frame Interpolation via Structure-Motion Based Iterative Feature Fusion
- When Can Accessibility Help? An Exploration of Accessibility Feature Recommendation on Mobile Devices
- Streaming Models for Joint Speech Recognition and Translation
- Neural Feature Selection for Learning to Rank
- Generating Natural Questions from Images for Multimodal Assistants
- A Comparison of Question Rewriting Methods for Conversational Passage Retrieval
- Screen Recognition: Creating Accessibility Metadata for Mobile Applications from Pixels
- Set Distribution Networks: A Generative Model for Sets of Images
- SEP-28k: A Dataset for Stuttering Event Detection from Podcasts with People Who Stutter
- Question Rewriting for End to End Conversational Question Answering
- Leveraging Query Resolution and Reading Comprehension for Conversational Passage Retrieval
- Learning Soft Labels via Meta Learning
- Whispered and Lombard Neural Speech Synthesis
- Frame-Level SpecAugment for Deep Convolutional Neural Networks in Hybrid ASR Systems
- Cinematic L1 Video Stabilization with a Log-Homography Model
- On the Generalization of Learning-Based 3D Reconstruction
- Improving Human-Labeled Data through Dynamic Automatic Conflict Resolution
- What Neural Networks Memorize and Why: Discovering the Long Tail via Influence Estimation
- Collegial Ensembles
- Faster Differentially Private Samplers via Rényi Divergence Analysis of Discretized Langevin MCMC
- On the Error Resistance of Hinge-Loss Minimization
- Representing and Denoising Wearable ECG Recordings
- Stability of Stochastic Gradient Descent on Nonsmooth Convex Losses
- Stochastic Optimization with Laggard Data Pipelines
- A Wrong Answer or a Wrong Question? An Intricate Relationship between Question Reformulation and Answer Selection in Conversational Question Answering
- Conversational Semantic Parsing for Dialog State Tracking
- Efficient Inference for Neural Machine Translation
- How Effective Is Task-Agnostic Data Augmentation for Pre-Trained Transformers?
- Generating Synthetic Images by Combining Pixel-Level and Feature-Level Geospatial Conditional Inputs
- Making Smartphone Augmented Reality Apps Accessible
- Mage: Fluid Moves between Code and Graphical Work in Computational Notebooks
- Rescribe: Authoring and Automatically Editing Audio Descriptions
- Class LM and Word Mapping for Contextual Biasing in End-to-End ASR
- Complementary Language Model and Parallel Bi-LRNN for False Trigger Mitigation
- Controllable Neural Text-to-Speech Synthesis Using Intuitive Prosodic Features
- Hybrid Transformer and CTC Networks for Hardware Efficient Voice Triggering
- Improving On-Device Speaker Verification Using Federated Learning with Privacy
- Stacked 1D Convolutional Networks for End-to-End Small Footprint Voice Trigger Detection
- Downbeat Tracking with Tempo-Invariant Convolutional Neural Networks
- Modality Dropout for Improved Performance-Driven Talking Faces
- Enhanced Direct Delta Mush
- Learning Insulin-Glucose Dynamics in the Wild
- Double-Talk Robust Multichannel Acoustic Echo Cancellation Using Least Squares MIMO Adaptive Filtering: Transversal, Array, and Lattice Forms
- MKQA: A Linguistically Diverse Benchmark for Multilingual Open Domain Question Answering
- Improving Discrete Latent Representations with Differentiable Approximation Bridges
- AdaScale SGD: A User-Friendly Algorithm for Distributed Training
- A Generative Model for Joint Natural Language Understanding and Generation
- Equivariant Neural Rendering
- Learning to Branch for Multi-Task Learning
- Variational Neural Machine Translation with Normalizing Flows
- Predicting Entity Popularity to Improve Spoken Entity Recognition by Virtual Assistants
- Robust Multichannel Linear Prediction for Online Speech Dereverberation Using Weighted Householder Least Squares Lattice Adaptive Filter
- Scalable Multilingual Frontend for TTS
- Generalized Reinforcement Meta Learning for Few-Shot Optimization
- Learning to Rank Intents in Voice Assistants
- Detecting Emotion Primitives from Speech and Their Use in Discerning Categorical Emotions
- Lattice-Based Improvements for Voice Triggering Using Graph Neural Networks
- Automatic Class Discovery and One-Shot Interactions for Acoustic Activity Recognition
- Tempura: Query Analysis with Structural Templates
- Understanding and Visualizing Data Iteration in Machine Learning
- Multi-Task Learning for Voice Trigger Detection
- Speech Translation and the End-to-End Promise: Taking Stock of Where We Are
- Embedded Large-Scale Handwritten Chinese Character Recognition
- Generating Multilingual Voices Using Speaker Space Translation Based on Bilingual Speaker Data
- Leveraging GANs to Improve Continuous Path Keyboard Input Models
- Least Squares Binary Quantization of Neural Networks
- Unsupervised Style and Content Separation by Minimizing Mutual Information for Speech Synthesis
- SNDCNN: Self-Normalizing Deep
cnns with scaled exponential linear units for speech recognition, on modeling asr word confidence, capsules with inverted dot-product attention routing, improving language identification for multilingual speakers, multi-task learning for speaker verification and voice trigger detection, stochastic weight averaging in parallel: large-batch training that generalizes well, adversarial fisher vectors for unsupervised representation learning, app usage predicts cognitive ability in older adults, filter distillation for network compression, multiple futures prediction, an exploration of data augmentation and sampling techniques for domain-agnostic question answering, data parameters: a new family of parameters for learning a differentiable curriculum, nonlinear conjugate gradients for scaling synchronous distributed dnn training, modeling patterns of smartphone usage and their relationship to cognitive health, worst cases policy gradients, empirical evaluation of active learning techniques for neural mt, skip-clip: self-supervised spatiotemporal representation learning by future clip order ranking, single training dimension selection for word embedding with pca, overton: a data system for monitoring and improving machine-learned products, leveraging user engagement signals for entity labeling in a virtual assistant, reverse transfer learning: can word embeddings trained for different nlp tasks improve neural language models, variational saccading: efficient inference for large resolution images, jointly learning to align and translate with transformer models, connecting and comparing language model interpolation techniques, active learning for domain classification in a commercial spoken personal assistant, coarse-to-fine optimization for speech enhancement, developing measures of cognitive impairment in the real world from consumer-grade multimodal sensor streams, raise to speak: an accurate, low-power detector for activating voice assistants on smartwatches, language 
identification from very short strings, learning conditional error model for simulated time-series data, bridging the domain gap for neural models, improving knowledge base construction from robust infobox extraction, protection against reconstruction and its applications in private federated learning, data platform for machine learning, speaker-independent speech-driven visual speech synthesis using domain-adapted acoustic models, addressing the loss-metric mismatch with adaptive loss alignment, lower bounds for locally private estimation via communication complexity, exploring retraining-free speech recognition for intra-sentential code-switching, parametric cepstral mean normalization for robust speech recognition, voice trigger detection from lvcsr hypothesis lattices using bidirectional lattice recurrent neural networks, neural network-based modeling of phonetic durations, mirroring to build trust in digital assistants, foundationdb record layer: a multi-tenant structured datastore, leveraging acoustic cues and paralinguistic embeddings to detect expression from voice, bandwidth embeddings for mixed-bandwidth speech recognition, sliced wasserstein discrepancy for unsupervised domain adaptation, towards learning multi-agent negotiations via self-play, optimizing siri on homepod in far‑field settings, can global semantic context improve neural language models, a new benchmark and progress toward improved weakly supervised learning, finding local destinations with siri’s regionally specific language models for speech recognition, personalized hey siri, structured control nets for deep reinforcement learning, learning with privacy at scale, an on-device deep neural network for face detection, hey siri: an on-device dnn-powered voice trigger for apple’s personal assistant, real-time recognition of handwritten chinese characters spanning a large inventory of 30,000 characters, deep learning for siri’s voice: on-device deep mixture density networks for hybrid unit 
selection synthesis, inverse text normalization as a labeling problem, improving neural network acoustic models by cross-bandwidth and cross-lingual initialization, learning from simulated and unsupervised images through adversarial training, improving the realism of synthetic images.

Purdue Online Writing Lab Purdue OWL® College of Liberal Arts

Welcome to the Purdue Online Writing Lab

This page is brought to you by the OWL at Purdue University. When printing this page, you must include the entire legal notice.

Copyright ©1995-2018 by The Writing Lab & The OWL at Purdue and Purdue University. All rights reserved. This material may not be published, reproduced, broadcast, rewritten, or redistributed without permission. Use of this site constitutes acceptance of our terms and conditions of fair use.

The Online Writing Lab at Purdue University houses writing resources and instructional material, and we provide these as a free service of the Writing Lab at Purdue. Students, members of the community, and users worldwide will find information to assist with many writing projects. Teachers and trainers may use this material for in-class and out-of-class instruction.

The Purdue On-Campus Writing Lab and Purdue Online Writing Lab assist clients in their development as writers, whatever their skill level, through on-campus consultations, online participation, and community engagement. The Purdue Writing Lab serves the Purdue, West Lafayette, campus and coordinates with local literacy initiatives. The Purdue OWL offers global support through online reference materials and services.

A Message From the Assistant Director of Content Development

The Purdue OWL® is committed to supporting students, instructors, and writers by offering a wide range of resources that are developed and revised with them in mind. To do this, the OWL team is always exploring possibilities for a better design, allowing accessibility and user experience to guide our process. As the OWL undergoes some changes, we welcome your feedback and suggestions by email at any time.

Please don't hesitate to contact us via our contact page if you have any questions or comments.

All the best,

Title: KAN: Kolmogorov-Arnold Networks

Abstract: Inspired by the Kolmogorov-Arnold representation theorem, we propose Kolmogorov-Arnold Networks (KANs) as promising alternatives to Multi-Layer Perceptrons (MLPs). While MLPs have fixed activation functions on nodes ("neurons"), KANs have learnable activation functions on edges ("weights"). KANs have no linear weights at all -- every weight parameter is replaced by a univariate function parametrized as a spline. We show that this seemingly simple change makes KANs outperform MLPs in terms of accuracy and interpretability. For accuracy, much smaller KANs can achieve comparable or better accuracy than much larger MLPs in data fitting and PDE solving. Theoretically and empirically, KANs possess faster neural scaling laws than MLPs. For interpretability, KANs can be intuitively visualized and can easily interact with human users. Through two examples in mathematics and physics, KANs are shown to be useful collaborators helping scientists (re)discover mathematical and physical laws. In summary, KANs are promising alternatives for MLPs, opening opportunities for further improving today's deep learning models which rely heavily on MLPs.
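To make "learnable activation functions on edges" concrete, here is a minimal sketch of a KAN layer in plain Python. It is an illustration under simplifying assumptions, not the authors' implementation: each edge function is a degree-1 (piecewise-linear) B-spline on a fixed uniform grid, whereas the paper's own layers use higher-order B-splines and extra terms. The names `hat_basis`, `KANLayer`, `grid_size`, and the initialization scale are hypothetical choices made for this example.

```python
import random


def hat_basis(t, grid):
    """Degree-1 B-spline ("hat") basis values of a scalar t on a uniform knot grid."""
    h = grid[1] - grid[0]  # uniform knot spacing
    # Each basis function equals 1 at its own knot and falls linearly to 0 at the neighbors.
    return [max(0.0, 1.0 - abs(t - knot) / h) for knot in grid]


class KANLayer:
    """Layer with its own learnable univariate function on every edge i -> j.

    phi_{j,i}(t) = sum_g coef[j][i][g] * B_g(t), and output j = sum_i phi_{j,i}(x_i).
    The spline coefficients `coef` are the trainable parameters; there is no weight matrix.
    """

    def __init__(self, n_in, n_out, grid_size=8, lo=-1.0, hi=1.0, seed=0):
        rng = random.Random(seed)
        step = (hi - lo) / (grid_size - 1)
        self.grid = [lo + g * step for g in range(grid_size)]
        # Small random initial coefficients (hypothetical choice of scale).
        self.coef = [[[rng.gauss(0.0, 0.1) for _ in range(grid_size)]
                      for _ in range(n_in)]
                     for _ in range(n_out)]

    def edge(self, j, i, t):
        """Evaluate the edge function phi_{j,i} at the scalar t."""
        basis = hat_basis(t, self.grid)
        return sum(c * b for c, b in zip(self.coef[j][i], basis))

    def __call__(self, x):
        """Map an input vector x (length n_in) to a list of n_out outputs."""
        return [sum(self.edge(j, i, xi) for i, xi in enumerate(x))
                for j in range(len(self.coef))]


# A [2, 5, 1] KAN: two stacked layers, no fixed activation between them.
layer1 = KANLayer(n_in=2, n_out=5)
layer2 = KANLayer(n_in=5, n_out=1, seed=1)
y = layer2(layer1([0.3, -0.7]))  # one-element list
```

Because the hat functions sum to 1 inside [lo, hi], setting `coef[j][i]` to samples of a target function at the knots makes phi_{j,i} its piecewise-linear interpolant. Inputs more than one grid step outside [lo, hi] map to 0, which is one reason a practical implementation needs grid handling that this sketch omits.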

METHODS article

This article is part of the research topic Novel Nuclear Reactors and Research Reactors.

HPR1000 Pressurizer Degassing System Design and Analysis

Provisionally accepted

- 1 Nuclear Power Institute of China (NPIC), China

The final, formatted version of the article will be published soon.

In the HUALONG-1 unit (HPR1000), the hydrogen (H2) concentration should be reduced to 15 ml(STP)/kg 24 hours before reactor shutdown when the reactor vessel is scheduled to be opened. The traditional degassing method, which lets down the reactor coolant through the Chemical and Volume Control System, takes longer and is more complicated to operate. To shorten the degassing time and simplify operation, this paper proposes a pressurizer degassing system design for HPR1000 that uses the pressurizer as thermal degassing equipment. A degassing system optimization analysis is then carried out over the full range of steady operating conditions during shutdown, which yields the optimal size of the flow-limiting orifice plate. To verify the transient characteristics of the entire degassing process and ensure operating safety, a dedicated transient degassing program, based on an improved non-equilibrium multi-region pressurizer model and a transient degassing model, is then used to simulate the process. The transient simulation results show that, under the bounding condition of hot zero power operation, the pressurizer pressure drops by at most 0.038 MPa and the water level rises by 0.016 m above the normal level during the entire degassing process. Both the pressure and the water level therefore remain within the normal operating band and do not initiate any safety signal. The transient lasts about 24 minutes, after which the process enters a stable degassing period. Reducing the gas dissolved in the reactor coolant from 35 ml(STP)/kg to 15 ml(STP)/kg takes about 5.2 hours. The analysis shows that the pressurizer degassing system designed for HPR1000 is safe, effective, and reliable.

Keywords: Pressurizer Degassing, HUALONG-1 Unit (HPR1000), Non-equilibrium multi-region model, Degassing Transient, Degassing Optimization, System design

Received: 26 Mar 2024; Accepted: 10 May 2024.
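The abstract's headline figures can be turned into a rough degassing rate. Purely as an illustrative assumption (the paper uses a non-equilibrium multi-region pressurizer model, and the abstract does not state any decay law), suppose the dissolved-gas concentration falls exponentially, C(t) = C0 * exp(-t / tau). Then tau = t / ln(C0 / C), and the sketch below computes the tau implied by going from 35 to 15 ml(STP)/kg in about 5.2 h. The function names are hypothetical, and the model treats the whole 5.2 h as a single exponential phase, ignoring the roughly 24-minute initial transient.

```python
import math


def first_order_time_constant(c_start, c_end, hours):
    """Time constant tau (h) of an assumed C(t) = C0 * exp(-t / tau) linking two concentrations."""
    return hours / math.log(c_start / c_end)


def hours_to_reach(c_start, c_target, tau):
    """Time (h) for the same assumed exponential model to fall from c_start to c_target."""
    return tau * math.log(c_start / c_target)


# Figures quoted in the abstract: 35 -> 15 ml(STP)/kg in about 5.2 h.
tau = first_order_time_constant(35.0, 15.0, 5.2)
print(f"implied tau = {tau:.2f} h")  # prints: implied tau = 6.14 h
```

`hours_to_reach(35.0, 15.0, tau)` returns 5.2 by construction; the value of the exercise is only to express the quoted result as a single rate constant under the stated assumption, not to reproduce the paper's transient model.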