Linux Internals

This blog is intended to help readers understand the internals of the Linux environment. It brings together very basic and important content to help novice kernel users.


Process management

According to Andrew Tanenbaum's book "Modern Operating Systems",

All the runnable software on the computer, sometimes including the operating system, is organized into a number of sequential processes, or just processes for short. A process is just an instance of an executing program, including the current values of the program counter, registers, and variables.

Each process has its own address space. In modern processors it is implemented as a set of page tables that map virtual addresses to physical memory. When another process has to be executed on the CPU, a context switch occurs. Afterwards, the processor's special registers point to a new set of page tables, so a new virtual address space is used. The virtual address space also contains all binaries and libraries, and the saved program counter value is restored, so another process is executed after the context switch. Processes may also have multiple threads. Each thread has independent state, including its program counter and stack, so threads may be executed in parallel, but all threads of a process share the same address space.

Process tree in Linux

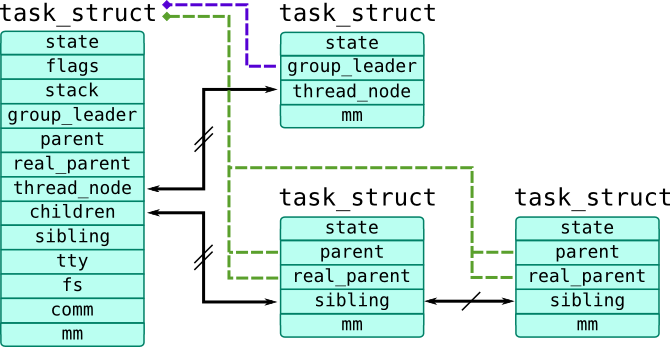

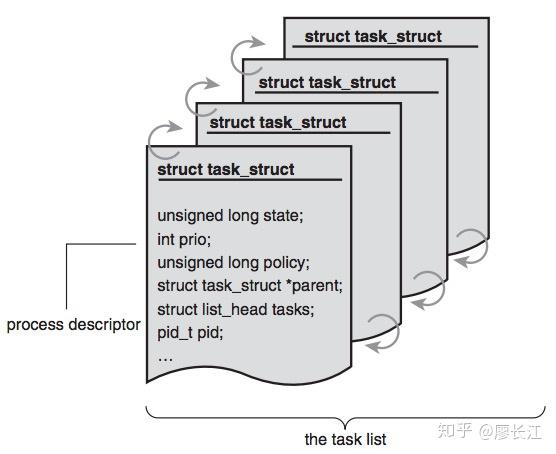

Processes and threads are implemented through the universal task_struct structure (defined in include/linux/sched.h), so in this book we will refer to both as tasks. The first thread in a process is called the task group leader. All other threads are linked through the thread_node list node and contain a group_leader pointer that references the task_struct of their process, that is, the task_struct of the task group leader. Child processes refer to their parent process through the parent pointer and are linked to each other through the sibling list node. A parent process is linked with its children through the children list head.
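The children/sibling linkage described above is an intrusive list. The list nodes live inside task_struct, and container_of() maps a node back to its enclosing structure. The following user-space sketch re-implements a tiny list_head and container_of(). It uses a hypothetical, heavily trimmed struct task that has only pid, parent, children and sibling. This is not the kernel's definition, and the sketch omits the thread_node/group_leader linkage, which follows the same pattern.

```c
#include <assert.h>
#include <stddef.h>

/* Simplified re-implementation of the kernel's intrusive list (not the real header). */
struct list_head {
    struct list_head *next, *prev;
};

/* Map a pointer to a member back to the structure that contains it. */
#define container_of(ptr, type, member) \
    ((type *)((char *)(ptr) - offsetof(type, member)))

static void list_init(struct list_head *head)
{
    head->next = head->prev = head;
}

static void list_add_tail(struct list_head *node, struct list_head *head)
{
    node->prev = head->prev;
    node->next = head;
    head->prev->next = node;
    head->prev = node;
}

/* Hypothetical, heavily trimmed task: only the process-tree fields. */
struct task {
    int pid;
    struct task *parent;       /* like task_struct->parent */
    struct list_head children; /* head of this task's list of children */
    struct list_head sibling;  /* this task's node in its parent's children list */
};

void task_init(struct task *t, int pid, struct task *parent)
{
    t->pid = pid;
    t->parent = parent;
    list_init(&t->children);
    list_init(&t->sibling);
    if (parent)
        list_add_tail(&t->sibling, &parent->children);
}

/* Walk parent->children: every node is some child's sibling member. */
int sum_child_pids(const struct task *parent)
{
    assert(parent != NULL);
    int sum = 0;
    for (const struct list_head *p = parent->children.next;
         p != &parent->children; p = p->next)
        sum += container_of(p, struct task, sibling)->pid;
    return sum;
}
```

The loop walks parent->children, but each node it visits is a child's sibling member. That is why container_of() receives the sibling field name and not children.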

Relations between task_struct objects are shown in the following picture:

- task_pid() and task_tid(): the ID of the process (stored in the tgid field) and of the thread (stored in the pid field), respectively. Note that most kernel code doesn't read the cached pid and tgid fields directly but uses namespace-aware wrappers.

- task_parent(): returns a pointer to the parent process, stored in the parent / real_parent fields

- task_state(): returns the state bitmask stored in state, such as TASK_RUNNING (0), TASK_INTERRUPTIBLE (1), TASK_UNINTERRUPTIBLE (2). The last two values are for sleeping or waiting tasks. The difference is that only interruptible tasks may be woken up by signals.

- task_execname(): reads the executable name from the comm field, which stores the base name of the executable path. Note that comm respects symbolic links: it holds the name of the link that was executed, not the name of its target.

- task_cpu(): returns the CPU to which the task belongs

- mm (pointer to struct mm_struct) refers to the address space of a process. For example, exe_file (pointer to struct file) refers to the executable file, while arg_start and arg_end are the addresses of the first and last byte of the argv passed to the process, respectively

- fs (pointer to struct fs_struct) contains filesystem information: pwd contains the working directory of a task, and root contains the root directory (alterable using the chroot system call)

- start_time and real_start_time (represented as struct timespec until 3.17, then replaced with u64 nanosecond timestamps): the monotonic and the boot-based (including time spent in suspend) start time of a process.

- files (pointer to struct files_struct) contains the table of files opened by the process

- utime and stime (cputime_t) contain the amount of CPU time spent in userspace and in the kernel, respectively. They can be accessed through the Task Time tapset.

The script dumptask.stp demonstrates how these fields can be used to get information about the current process.
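Several of these fields are also available outside a tracer. Procfs exports them read-only in /proc/<pid>/stat: comm, state, parent PID, utime and stime in clock ticks, the start time and the last CPU, among others. See proc(5) for the field numbering. The following is a minimal C sketch that assumes the standard proc(5) field layout. struct task_info and read_task_info() are hypothetical names.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical container for a few values that procfs derives from task_struct. */
struct task_info {
    char comm[64];                /* field 2: comm, without the parentheses */
    char state;                   /* field 3: R, S, D, Z, ... */
    int ppid;                     /* field 4: parent's PID */
    unsigned long utime;          /* field 14: user time, in clock ticks */
    unsigned long stime;          /* field 15: kernel time, in clock ticks */
    unsigned long long starttime; /* field 22: start time after boot, in clock ticks */
    int processor;                /* field 39: CPU the task last ran on */
};

/* Parses /proc/<pid>/stat; pid may be a number or "self". Returns 0 or -1. */
int read_task_info(const char *pid, struct task_info *ti)
{
    assert(pid != NULL && ti != NULL);
    char path[64], buf[4096];
    snprintf(path, sizeof(path), "/proc/%s/stat", pid);
    FILE *f = fopen(path, "r");
    if (!f)
        return -1;
    size_t n = fread(buf, 1, sizeof(buf) - 1, f);
    fclose(f);
    buf[n] = '\0';

    /* comm is in parentheses and may itself contain spaces or ')'. */
    char *lparen = strchr(buf, '(');
    char *rparen = strrchr(buf, ')');
    if (!lparen || !rparen || rparen < lparen)
        return -1;
    size_t len = (size_t)(rparen - lparen - 1);
    if (len >= sizeof(ti->comm))
        len = sizeof(ti->comm) - 1;
    memcpy(ti->comm, lparen + 1, len);
    ti->comm[len] = '\0';

    /* The remaining fields are space separated, starting with field 3. */
    int field = 3;
    char *save = NULL;
    for (char *tok = strtok_r(rparen + 1, " \n", &save); tok != NULL;
         tok = strtok_r(NULL, " \n", &save), field++) {
        switch (field) {
        case 3:  ti->state = tok[0]; break;
        case 4:  ti->ppid = atoi(tok); break;
        case 14: ti->utime = strtoul(tok, NULL, 10); break;
        case 15: ti->stime = strtoul(tok, NULL, 10); break;
        case 22: ti->starttime = strtoull(tok, NULL, 10); break;
        case 39: ti->processor = atoi(tok); break;
        }
    }
    return field > 39 ? 0 : -1;   /* fail if the line was too short */
}
```

When a process reads its own stat file, it is necessarily running, so "self" reports state R.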

Process tree in Solaris

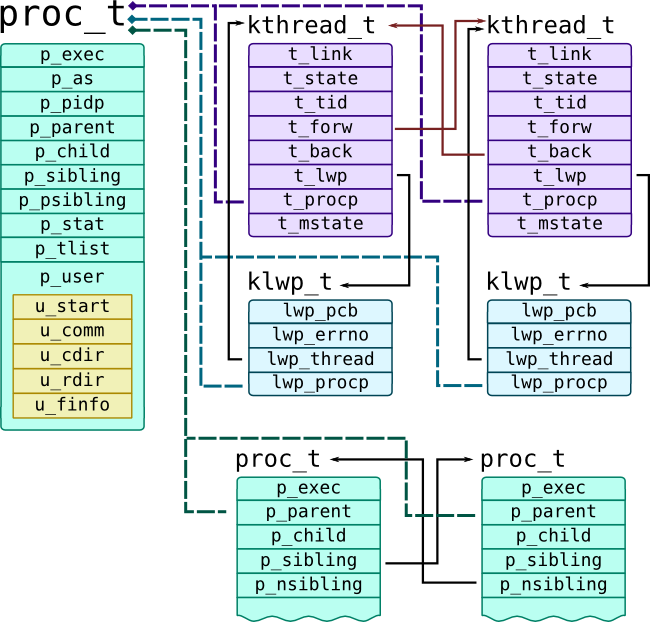

The Solaris kernel distinguishes threads and processes. At the low level, all threads are represented by kthread_t, and they are presented to userspace as Light-Weight Processes (LWPs), defined as klwp_t. One or multiple LWPs constitute a process, proc_t. They all have references to each other, as shown in the following picture:

The current thread is passed to probes as the curthread built-in variable. The Solaris proc provider has lwpsinfo_t and psinfo_t translators that extract useful information from the corresponding thread, process and LWP structures.

A parent process has a p_child pointer that refers to its first child. The list of children is a doubly-linked list with p_sibling (next) and p_psibling (previous) pointers. Each child contains a p_parent pointer and a p_ppid process ID, which refer to its parent. The threads of a process also form a doubly-linked list with t_forw (next) and t_prev pointers. A thread references its LWP with the t_lwp pointer and its process with the t_procp pointer. An LWP refers to its thread through the lwp_thread pointer and to its process through the lwp_procp pointer.

The following script dumps information about the current thread and process. Because DTrace doesn't support loops and conditions, the script can read only the first 9 arguments and the first 8 files. It does this by generating multiple probes with the preprocessor.

```
#!/usr/sbin/dtrace -qCs

#define PSINFO(thread) xlate<psinfo_t *>(thread->t_procp)
#define LWPSINFO(thread) xlate<lwpsinfo_t *>(thread)
#define PUSER(thread) thread->t_procp->p_user

/**
 * Extract pointer depending on data model: 8 byte for 64-bit
 * programs and 4 bytes for 32-bit programs.
 */
#define DATAMODEL_ILP32 0x00100000

#define GETPTR(proc, array, idx)                                \
    ((uintptr_t) ((proc->p_model == DATAMODEL_ILP32)            \
    ?  ((uint32_t*) array)[idx] : ((uint64_t*) array)[idx]))
#define GETPTRSIZE(proc)                                        \
    ((proc->p_model == DATAMODEL_ILP32)? 4 : 8)

#define FILE(list, num)     list[num].uf_file
#define CLOCK_TO_MS(clk)    (clk) * (`nsec_per_tick / 1000000)

/* Helper to extract vnode path in safe manner */
#define VPATH(vn)                                               \
    ((vn) == NULL || (vn)->v_path == NULL)                      \
        ? "unknown" : stringof((vn)->v_path)

/* Prints process root - can be not `/` for zones */
#define DUMP_TASK_ROOT(thread)                                  \
    printf("\troot: %s\n",                                      \
        PUSER(thread).u_rdir == NULL                            \
        ? "/"                                                   \
        : VPATH(PUSER(thread).u_rdir));

/* Prints current working directory of a process */
#define DUMP_TASK_CWD(thread)                                   \
    printf("\tcwd: %s\n",                                       \
        VPATH(PUSER(thread).u_cdir));

/* Prints executable file of a process */
#define DUMP_TASK_EXEFILE(thread)                               \
    printf("\texe: %s\n",                                       \
        VPATH(thread->t_procp->p_exec));

/* Copy up to 9 process arguments. We use the `psinfo_t` translator
   to get the number of arguments, copy pointers to them into the
   `argvec` array, and strings into the `pargs` array.

   See also kernel function `exec_args()` */
#define COPYARG(t, n)                                           \
    pargs[n] = (n < argnum)                                     \
        ? copyinstr(GETPTR(t->t_procp, argvec, n)) : "???"

#define DUMP_TASK_ARGS_START(thread)                            \
    printf("\tpsargs: %s\n", PSINFO(thread)->pr_psargs);        \
    argnum = PSINFO(thread)->pr_argc;                           \
    argvec = (PSINFO(thread)->pr_argv != 0) ?                   \
        copyin(PSINFO(thread)->pr_argv,                         \
               argnum * GETPTRSIZE(thread->t_procp)) : 0;       \
    COPYARG(thread, 0); COPYARG(thread, 1); COPYARG(thread, 2); \
    COPYARG(thread, 3); COPYARG(thread, 4); COPYARG(thread, 5); \
    COPYARG(thread, 6); COPYARG(thread, 7); COPYARG(thread, 8);

/* Prints start time of process */
#define DUMP_TASK_START_TIME(thread)                            \
    printf("\tstart time: %ums\n",                              \
        (unsigned long) thread->t_procp->p_mstart / 1000000);

/* Processor time used by a process. Only for conformance
   with dumptask.d, it is actually set when process exits */
#define DUMP_TASK_TIME_STATS(thread)                            \
    printf("\tuser: %ldms\t kernel: %ldms\n",                   \
        CLOCK_TO_MS(thread->t_procp->p_utime),                  \
        CLOCK_TO_MS(thread->t_procp->p_stime));

#define DUMP_TASK_FDS_START(thread)                             \
    fdlist = PUSER(thread).u_finfo.fi_list;                     \
    fdcnt = 0;                                                  \
    fdnum = PUSER(thread).u_finfo.fi_nfiles;

#define DUMP_TASK(thread)                                       \
    printf("Task %p is %d/%d@%d %s\n", thread,                  \
            PSINFO(thread)->pr_pid,                             \
            LWPSINFO(thread)->pr_lwpid,                         \
            LWPSINFO(thread)->pr_onpro,                         \
            PUSER(thread).u_comm);                              \
    DUMP_TASK_EXEFILE(thread)                                   \
    DUMP_TASK_ROOT(thread)                                      \
    DUMP_TASK_CWD(thread)                                       \
    DUMP_TASK_ARGS_START(thread)                                \
    DUMP_TASK_FDS_START(thread)                                 \
    DUMP_TASK_START_TIME(thread)                                \
    DUMP_TASK_TIME_STATS(thread)

#define _DUMP_ARG_PROBE(probe, argi)                            \
probe /argi < argnum/ {                                         \
    printf("\targ%d: %s\n", argi, pargs[argi]);                 \
}

#define DUMP_ARG_PROBE(probe)                                   \
    _DUMP_ARG_PROBE(probe, 0)   _DUMP_ARG_PROBE(probe, 1)       \
    _DUMP_ARG_PROBE(probe, 2)   _DUMP_ARG_PROBE(probe, 3)       \
    _DUMP_ARG_PROBE(probe, 4)   _DUMP_ARG_PROBE(probe, 5)       \
    _DUMP_ARG_PROBE(probe, 6)   _DUMP_ARG_PROBE(probe, 7)       \
    _DUMP_ARG_PROBE(probe, 8)

#define _DUMP_FILE_PROBE(probe, fi)                             \
probe /fi < fdnum && FILE(fdlist, fi) != NULL/ {                \
    printf("\tfile%d: %s\n", fi,                                \
        VPATH(FILE(fdlist, fi)->f_vnode));                      \
}

#define DUMP_FILE_PROBE(probe)                                  \
    _DUMP_FILE_PROBE(probe, 0)  _DUMP_FILE_PROBE(probe, 1)      \
    _DUMP_FILE_PROBE(probe, 2)  _DUMP_FILE_PROBE(probe, 3)      \
    _DUMP_FILE_PROBE(probe, 4)  _DUMP_FILE_PROBE(probe, 5)      \
    _DUMP_FILE_PROBE(probe, 6)  _DUMP_FILE_PROBE(probe, 7)

BEGIN {
    proc = 0;
    argnum = 0;
    fdnum = 0;
}

tick-1s {
    DUMP_TASK(curthread);
}

DUMP_ARG_PROBE(tick-1s)
DUMP_FILE_PROBE(tick-1s)
```

The psinfo_t translator provides the pr_psargs field, which contains the first 80 characters of the process arguments. This script extracts arguments directly only for demonstration purposes and to be conformant with dumptask.stp. As in the SystemTap case, this approach doesn't allow reading the arguments of a non-current process.

Lifetime of a process

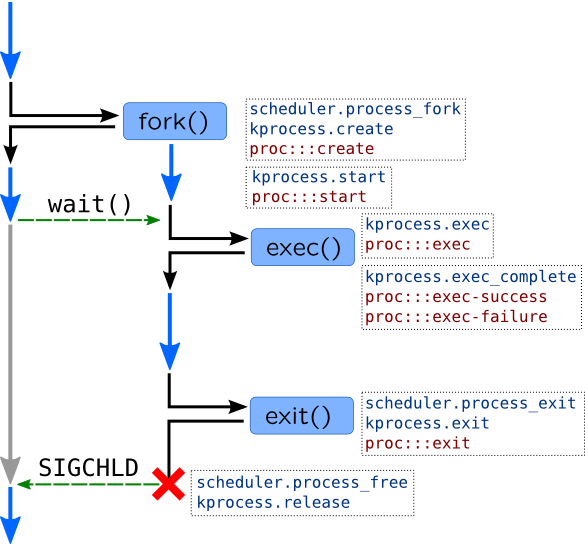

The lifetime of a process and the corresponding probes are shown in the following image:

- The parent process calls the fork() system call. The kernel creates an exact copy of the parent process, including its address space (which is shared in copy-on-write mode) and open files, and gives it a new PID. If fork() is successful, it returns in the context of two processes (parent and child) with the same instruction pointer. It returns the child's PID in the parent and 0 in the child. The code that follows usually closes files in the child, resets signals, etc.

- The child process calls the execve() system call, which replaces the address space of the process with a new one based on the binary passed to the execve() call.
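From user space, the two steps above, together with the parent waiting for the child, can be sketched as a minimal POSIX example. spawn_and_wait() is a hypothetical helper. Using exit code 127 for a failed exec is only a convention borrowed from shells.

```c
#include <assert.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Runs `path` with `argv` in a child process and waits for it.
 * Returns the child's exit status, 127 if execv() failed in the child,
 * or -1 if fork()/waitpid() failed or the child was killed by a signal. */
int spawn_and_wait(const char *path, char *const argv[])
{
    assert(path != NULL && argv != NULL && argv[0] != NULL);
    pid_t pid = fork();
    if (pid < 0)
        return -1;                  /* no child was created */
    if (pid == 0) {
        /* Child: fork() returned 0. Replace the address space with `path`. */
        execv(path, argv);
        _exit(127);                 /* reached only if execv() failed */
    }
    /* Parent: fork() returned the child's PID. */
    int status;
    if (waitpid(pid, &status, 0) < 0)
        return -1;
    return WIFEXITED(status) ? WEXITSTATUS(status) : -1;
}
```

For example, spawn_and_wait("/bin/sh", argv) with argv = {"sh", "-c", "exit 7", NULL} returns 7.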

There is a simpler call, vfork(), which does not copy the address space and is therefore a bit more efficient. With vfork(), the parent is suspended until the child calls execve() or exits. Linux also features the universal clone() call, which allows choosing which features of a process should be cloned. In the end, all these calls are wrappers for the do_fork() function (renamed kernel_clone() in Linux 5.10).

When the child process finishes its job, it calls the exit() system call. However, a process may also be killed by the kernel due to an incorrect condition (like triggering a kernel oops) or a machine fault. If the parent wants to wait until the child process finishes, it calls the wait() system call (or waitid() and similar calls), which stops the parent from executing until the child exits. wait() also receives the process exit code, and only after that is the corresponding task_struct destroyed. If a child has exited and no process has waited on it yet, it remains a zombie process. The parent process may also be notified by the kernel with the SIGCHLD signal.
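The zombie state can be observed from user space. Between the child's exit and the parent's wait, /proc/<pid>/stat of the child reports state Z. The following sketch assumes a Linux procfs and that SIGCHLD is not set to SIG_IGN. If it were, children would be reaped automatically and would never become zombies. task_state_letter() and observe_zombie() are hypothetical helpers.

```c
#define _DEFAULT_SOURCE
#include <assert.h>
#include <stdio.h>
#include <string.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Returns the state letter (R, S, Z, ...) from /proc/<pid>/stat, or '?'. */
char task_state_letter(pid_t pid)
{
    assert(pid > 0);
    char path[64], buf[1024];
    snprintf(path, sizeof(path), "/proc/%d/stat", (int)pid);
    FILE *f = fopen(path, "r");
    if (!f)
        return '?';
    size_t n = fread(buf, 1, sizeof(buf) - 1, f);
    fclose(f);
    buf[n] = '\0';
    char *p = strrchr(buf, ')');         /* the state follows ") " */
    return (p != NULL && p[1] == ' ' && p[2] != '\0') ? p[2] : '?';
}

/* Forks a child that exits immediately and returns the state it is seen in
 * before the parent reaps it; 'Z' is expected. */
char observe_zombie(void)
{
    pid_t pid = fork();
    if (pid < 0)
        return '?';
    if (pid == 0)
        _exit(0);                        /* child: exit right away */
    char state = '?';
    /* The child may still be running at first, so poll for up to ~1 s. */
    for (int i = 0; i < 1000 && state != 'Z'; i++) {
        state = task_state_letter(pid);
        if (state != 'Z')
            usleep(1000);
    }
    waitpid(pid, NULL, 0);               /* reap: the task_struct can now be freed */
    return state;
}
```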

Processes may be traced with the kprocess and scheduler tapsets in SystemTap, or with the DTrace proc provider. System calls may be traced with the appropriate probes too. Here are some useful probes:

These probes are demonstrated in the following scripts.

```
#!/usr/bin/stap

probe scheduler.process*, scheduler.wakeup_new, syscall.fork,
      syscall.exec*, syscall.exit, syscall.wait*, kprocess.* {
    printf("%6d[%8s]/%6d[%8s] %s\n",
           pid(), execname(), ppid(), pid2execname(ppid()), pn());
}

probe scheduler.process_fork {
    printf("\tPID: %d -> %d\n", parent_pid, child_pid);
}

probe kprocess.exec {
    printf("\tfilename: %s\n", filename);
}

probe kprocess.exit {
    printf("\treturn code: %d\n", code);
}
```

```
  2578[    bash]/  2576[    sshd] syscall.fork
  2578[    bash]/  2576[    sshd] kprocess.create
  2578[    bash]/  2576[    sshd] scheduler.process_fork
	PID: 2578 -> 3342
  2578[    bash]/  2576[    sshd] scheduler.wakeup_new
  3342[    bash]/  2578[    bash] kprocess.start
  2578[    bash]/  2576[    sshd] syscall.wait4
  2578[    bash]/  2576[    sshd] scheduler.process_wait
	filename: /bin/uname
  3342[    bash]/  2578[    bash] kprocess.exec
  3342[    bash]/  2578[    bash] syscall.execve
  3342[   uname]/  2578[    bash] kprocess.exec_complete
	return code: 0
  3342[   uname]/  2578[    bash] kprocess.exit
  3342[   uname]/  2578[    bash] syscall.exit
  3342[   uname]/  2578[    bash] scheduler.process_exit
  2578[    bash]/  2576[    sshd] kprocess.release
```

```
#!/usr/sbin/dtrace -qCs

#define PARENT_EXECNAME(thread)                                 \
    (thread->t_procp->p_parent != NULL)                         \
        ? stringof(thread->t_procp->p_parent->p_user.u_comm)    \
        : "???"

proc:::,
syscall::fork*:entry,
syscall::exec*:entry,
syscall::wait*:entry {
    printf("%6d[%8s]/%6d[%8s] %s::%s:%s\n",
           pid, execname, ppid, PARENT_EXECNAME(curthread),
           probeprov, probefunc, probename);
}

proc:::create {
    printf("\tPID: %d -> %d\n", curpsinfo->pr_pid, args[0]->pr_pid);
}

proc:::exec {
    printf("\tfilename: %s\n", args[0]);
}

proc:::exit {
    printf("\treturn code: %d\n", args[0]);
}
```

```
 16729[    bash]/ 16728[    sshd] syscall::forksys:entry
 16729[    bash]/ 16728[    sshd] proc::lwp_create:lwp-create
 16729[    bash]/ 16728[    sshd] proc::cfork:create
	PID: 16729 -> 17156
 16729[    bash]/ 16728[    sshd] syscall::waitsys:entry
 17156[    bash]/ 16729[    bash] proc::lwp_rtt_initial:start
 17156[    bash]/ 16729[    bash] proc::lwp_rtt_initial:lwp-start
 17156[    bash]/ 16729[    bash] syscall::exece:entry
 17156[    bash]/ 16729[    bash] proc::exec_common:exec
	filename: /usr/sbin/uname
 17156[   uname]/ 16729[    bash] proc::exec_common:exec-success
 17156[   uname]/ 16729[    bash] proc::proc_exit:lwp-exit
 17156[   uname]/ 16729[    bash] proc::proc_exit:exit
	return code: 1
     0[   sched]/     0[     ???] proc::sigtoproc:signal-send
```

Note that in DTrace, args[0] of the proc:::exit probe is the exit reason, expressed as a SIGCHLD code (1 is CLD_EXITED), not the exit status of the process. This is why "return code: 1" is printed here.


- Published under terms of CC-BY-NC-SA 3.0 license.

Processes

Lecture objectives

- Process and threads

- Context switching

- Blocking and waking up

- Process context

Processes and threads

A process is an operating system abstraction that groups together multiple resources:

All this information is grouped in the Process Control Block (PCB). In Linux this is struct task_struct.

Overview of process resources

A summary of the resources a process has can be obtained from the /proc/<pid> directory, where <pid> is the process ID of the process we want to look at.
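For example, the open files of a process appear in /proc/<pid>/fd as symbolic links. Each link is named after a descriptor number and points to the opened file. The following small sketch counts them and resolves one. count_fds() and fd_target() are hypothetical names.

```c
#include <assert.h>
#include <dirent.h>
#include <stdio.h>
#include <string.h>
#include <unistd.h>

/* Counts the entries of /proc/<pid>/fd. For "self", the descriptor used by
 * the directory stream itself is included in the count. */
int count_fds(const char *pid)
{
    assert(pid != NULL);
    char path[64];
    snprintf(path, sizeof(path), "/proc/%s/fd", pid);
    DIR *d = opendir(path);
    if (!d)
        return -1;
    int n = 0;
    struct dirent *e;
    while ((e = readdir(d)) != NULL)
        if (e->d_name[0] != '.')      /* skip "." and ".." */
            n++;
    closedir(d);
    return n;
}

/* Resolves /proc/<pid>/fd/<fd> to the path of the opened file. Returns 0 or -1. */
int fd_target(const char *pid, int fd, char *buf, size_t len)
{
    assert(pid != NULL && len > 1);
    char path[64];
    snprintf(path, sizeof(path), "/proc/%s/fd/%d", pid, fd);
    ssize_t n = readlink(path, buf, len - 1);
    if (n < 0)
        return -1;
    buf[n] = '\0';                    /* readlink() does not NUL-terminate */
    return 0;
}
```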

struct task_struct

Let's take a closer look at struct task_struct. For that we could just look at the source code, but here we will use a tool called pahole (part of the dwarves package) to get some insights about this structure:

As you can see it is a pretty large data structure: almost 8KB in size and 155 fields.

Inspecting task_struct

The following screencast demonstrates how we can inspect the process control block (struct task_struct) by connecting the debugger to the running virtual machine. We are going to use the helper gdb command lx-ps to list the processes and the address of the task_struct of each process.

Quiz: Inspect a task to determine opened files

Use the debugger to inspect the process named syslogd.

- What command should we use to list the opened file descriptors?

- How many file descriptors are opened?

- What command should we use to determine the file name for opened file descriptor 3?

- What is the filename for file descriptor 3?

A thread is the basic unit that the kernel process scheduler uses to allow applications to run on the CPU. A thread has the following characteristics:

- Each thread has its own stack and together with the register values it determines the thread execution state

- A thread runs in the context of a process and all threads in the same process share the resources

- The kernel schedules threads, not processes, and user-level threads (e.g. fibers, coroutines, etc.) are not visible at the kernel level

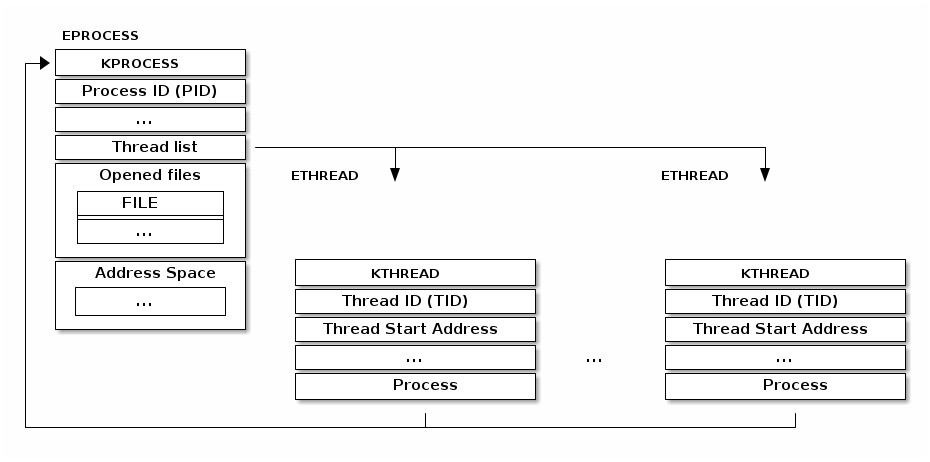

In the typical thread implementation, each thread is a separate data structure that is linked to the process data structure. For example, the Windows kernel uses such an implementation:

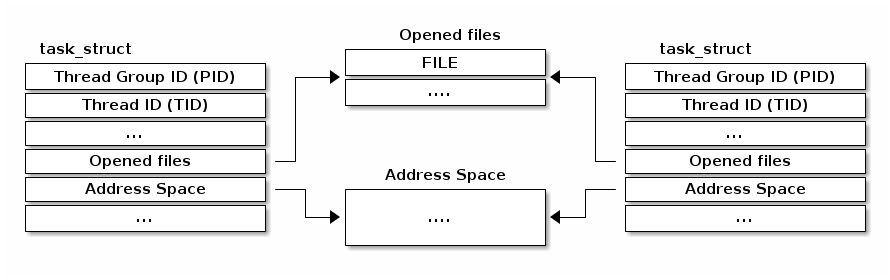

Linux uses a different implementation for threads. The basic unit is called a task (hence struct task_struct), and it is used for both threads and processes. Instead of embedding resources in the task structure, it has pointers to these resources.

Thus, if two threads are in the same process, they point to the same resource structure instances. If two threads are in different processes, they point to different resource structure instances.

The clone system call

In Linux a new thread or process is created with the clone() system call. Both the fork() system call and the pthread_create() function use the clone() implementation.

It allows the caller to decide what resources should be shared with the parent and which should be copied or isolated:

- CLONE_FILES - shares the file descriptor table with the parent

- CLONE_VM - shares the address space with the parent

- CLONE_FS - shares the filesystem information (root directory, current directory) with the parent

- CLONE_NEWNS - does not share the mount namespace with the parent

- CLONE_NEWIPC - does not share the IPC namespace (System V IPC objects, POSIX message queues) with the parent

- CLONE_NEWNET - does not share the network namespace (network interfaces, routing table) with the parent

For example, if CLONE_FILES | CLONE_VM | CLONE_FS is used by the caller then effectively a new thread is created. If these flags are not used then a new process is created.
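The effect of CLONE_VM can be shown with the glibc clone() wrapper. A child created with this flag writes into the parent's memory, while a child created without it writes into its own copy. The following minimal sketch assumes Linux with glibc and a downward-growing stack, which is the case on x86 and ARM. shared_counter, child_fn() and counter_after_clone() are hypothetical names.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <sched.h>
#include <signal.h>
#include <stdlib.h>
#include <sys/wait.h>

#define CHILD_STACK_SIZE (64 * 1024)

static volatile int shared_counter;  /* lives in the caller's address space */

static int child_fn(void *arg)
{
    (void)arg;
    shared_counter = 42;  /* lands in the parent's memory only with CLONE_VM */
    return 0;             /* the clone() wrapper then ends the child */
}

/* Creates a child with clone(flags), waits for it and returns the value of
 * shared_counter as seen by the parent afterwards, or -1 on error. */
int counter_after_clone(int flags)
{
    assert((flags & 0xff) == 0);     /* the low byte carries the exit signal */
    char *stack = malloc(CHILD_STACK_SIZE);
    if (!stack)
        return -1;
    shared_counter = 0;
    /* The stack grows down, so clone() receives the top of the buffer. */
    pid_t pid = clone(child_fn, stack + CHILD_STACK_SIZE, flags | SIGCHLD, NULL);
    int result = -1;
    if (pid != -1 && waitpid(pid, NULL, 0) == pid)
        result = shared_counter;
    free(stack);
    return result;
}
```

With flags 0 the child behaves like a fork() child, and the parent still sees 0. With CLONE_VM | CLONE_FS | CLONE_FILES the parent sees 42. Passing SIGCHLD as the exit signal lets the parent reap the child with a plain waitpid(). Without it, waitpid() would need the __WCLONE flag.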

Namespaces and "containers"

"Containers" are a form of lightweight virtual machine that share the same kernel instance. This is in contrast to normal virtualization, where a hypervisor runs multiple VMs, each with its own kernel instance.

Examples of container technologies are LXC, which allows running lightweight "VMs", and Docker, a specialized container for running a single application.

Containers are built on top of a few kernel features, one of which is namespaces. Namespaces allow isolation of different resources that would otherwise be globally visible. For example, without containers, all processes would be visible in /proc. With containers, processes in one container are not visible (in /proc) to processes in other containers, and cannot be killed by them.

To achieve this partitioning, the struct nsproxy structure is used to group the types of resources that we want to partition. It currently supports UTS, IPC, mount, PID, networking, cgroup and time namespaces. For example, instead of having a global list of network interfaces, the list is part of a struct net. The system initializes with a default namespace (init_net), and by default all processes share this namespace. When a new network namespace is created, a new struct net is allocated. New processes can then point to that new namespace instead of the default one.
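Each task's namespace membership is visible from user space as symbolic links in /proc/<pid>/ns. Their targets have the form type:[inode] and identify the namespace object. A child created with plain fork() (no CLONE_NEW* flags) reports the same targets as its parent. The following sketch assumes Linux 3.8 or later for the pid entry. ns_id() and child_shares_ns() are hypothetical names.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Reads /proc/<pid>/ns/<type>, e.g. "net" or "pid", into buf. Returns 0 or -1. */
int ns_id(pid_t pid, const char *type, char *buf, size_t len)
{
    assert(len > 1);
    char path[64];
    snprintf(path, sizeof(path), "/proc/%d/ns/%s", (int)pid, type);
    ssize_t n = readlink(path, buf, len - 1);
    if (n < 0)
        return -1;
    buf[n] = '\0';                 /* readlink() does not NUL-terminate */
    return 0;
}

/* Returns 1 if a child created by plain fork() is in the same namespace of
 * the given type as its parent, 0 if not, -1 on error. */
int child_shares_ns(const char *type)
{
    char parent[128], child[128];
    int fds[2];
    if (ns_id(getpid(), type, parent, sizeof(parent)) < 0 || pipe(fds) < 0)
        return -1;
    pid_t pid = fork();
    if (pid < 0) {
        close(fds[0]);
        close(fds[1]);
        return -1;
    }
    if (pid == 0) {
        char c;
        close(fds[1]);
        /* Stay alive (so /proc/<pid>/ns exists) until the parent closes the pipe. */
        ssize_t r = read(fds[0], &c, 1);
        (void)r;
        _exit(0);
    }
    close(fds[0]);
    int rc = ns_id(pid, type, child, sizeof(child));
    close(fds[1]);                 /* child's read() now returns 0 and it exits */
    waitpid(pid, NULL, 0);
    if (rc < 0)
        return -1;
    return strcmp(parent, child) == 0;
}
```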

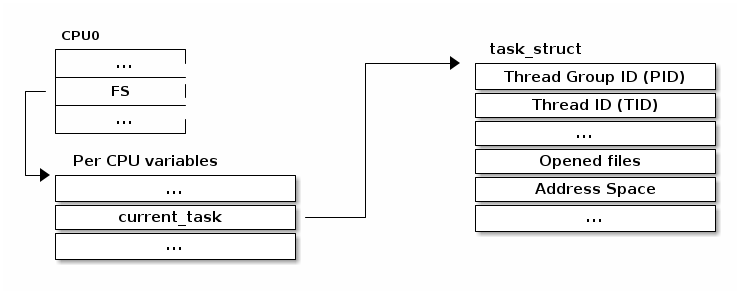

Accessing the current process

Accessing the current process is a frequent operation:

- opening a file needs access to struct task_struct's files field

- mapping a new file needs access to struct task_struct's mm field

- Over 90% of the system calls need to access the current process structure, so it needs to be fast

- The current macro is available to access the current process's struct task_struct

To support fast access in multiprocessor configurations, a per-CPU variable is used to store and retrieve the pointer to the current struct task_struct:

Previously the following sequence was used as the implementation for the current macro:

Quiz: previous implementation for current (x86)

What is the size of struct thread_info?

Which of the following are potential valid sizes for struct thread_info: 4095, 4096, 4097?
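The previous x86 implementation relied on struct thread_info being placed at the lowest address of a THREAD_SIZE-aligned kernel stack. Masking any stack address with ~(THREAD_SIZE - 1) therefore finds it. The arithmetic can be sketched in user space, assuming a hypothetical THREAD_SIZE of 8192 and a trimmed stand-in for struct thread_info. The fields in the sketch are illustrative only.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

#define THREAD_SIZE 8192  /* hypothetical kernel stack size; a power of two */

/* Trimmed stand-in for the old struct thread_info (illustrative fields). */
struct thread_info {
    void *task;  /* pointed to the task_struct: current == ti->task */
    int cpu;
};

/* Allocates a THREAD_SIZE-aligned "stack" with thread_info at its base. */
struct thread_info *alloc_stack_area(void *task)
{
    assert((THREAD_SIZE & (THREAD_SIZE - 1)) == 0);
    struct thread_info *ti = aligned_alloc(THREAD_SIZE, THREAD_SIZE);
    if (ti != NULL) {
        ti->task = task;
        ti->cpu = 0;
    }
    return ti;
}

/* Any address inside the stack area, rounded down, yields its thread_info. */
struct thread_info *thread_info_from_sp(uintptr_t sp)
{
    return (struct thread_info *)(sp & ~((uintptr_t)THREAD_SIZE - 1));
}
```

The masking only works because THREAD_SIZE is a power of two and the stack area is aligned to it. The size of thread_info itself only has to fit below the stack's usable space.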

Context switching

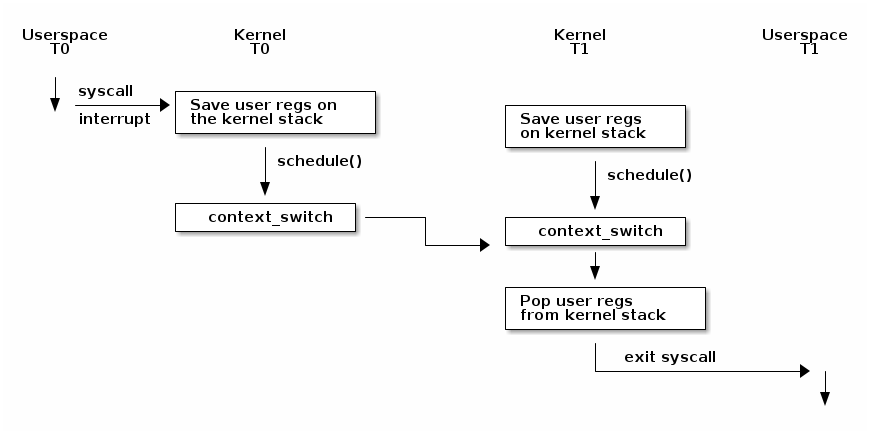

The following diagram shows an overview of the Linux kernel context switch process:

Note that before a context switch can occur, we must do a kernel transition, either with a system call or with an interrupt. At that point the user space registers are saved on the kernel stack. At some point the schedule() function will be called, and it can decide that a context switch must occur from T0 to T1. This can happen, for example, because the current thread is blocking while waiting for an I/O operation to complete, or because its allocated time slice has expired.

At that point context_switch() will perform architecture specific operations and will switch the address space if needed:

Then it will call the architecture-specific switch_to implementation to switch the register state and the kernel stack. Note that registers are saved on the stack and that the stack pointer is saved in the task structure:

You can notice that the instruction pointer is not explicitly saved. It is not needed because:

- a task will always resume in this function

- the schedule() (context_switch() is always inlined) caller's return address is saved on the kernel stack

- a jmp is used to execute __switch_to(), which is a function, and when it returns it will pop the original (next task) return address from the stack
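User space has a loose analogue of this register and stack switch in the ucontext API. The API was removed from POSIX.1-2008 but is still provided by glibc. swapcontext() saves the current registers, including the stack pointer, into one ucontext_t and loads another, so each side resumes exactly after its own swapcontext() call. This is only an analogy, not the kernel's switch_to. run_switch_demo() and the trace string are hypothetical.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <string.h>
#include <ucontext.h>

#define TASK_STACK_SIZE (64 * 1024)

static ucontext_t main_ctx, task_ctx;
static char trace[16];  /* records the order in which the code runs */
static int trace_len;

static void record(char c)
{
    assert(trace_len < (int)sizeof(trace) - 1);
    trace[trace_len++] = c;
}

static void task_body(void)
{
    record('B');                        /* first time the task runs */
    swapcontext(&task_ctx, &main_ctx);  /* save our registers, load main's */
    record('D');                        /* resumed right after the swap */
}                                       /* returning follows uc_link */

/* Switches main -> task -> main -> task -> main; returns the trace or NULL. */
const char *run_switch_demo(void)
{
    static char stack[TASK_STACK_SIZE];
    memset(trace, 0, sizeof(trace));
    trace_len = 0;
    if (getcontext(&task_ctx) == -1)
        return NULL;
    task_ctx.uc_stack.ss_sp = stack;
    task_ctx.uc_stack.ss_size = sizeof(stack);
    task_ctx.uc_link = &main_ctx;       /* resume main when task_body returns */
    makecontext(&task_ctx, task_body, 0);

    record('A');
    swapcontext(&main_ctx, &task_ctx);  /* first switch into the task */
    record('C');
    swapcontext(&main_ctx, &task_ctx);  /* second switch: task continues */
    record('E');
    return trace;
}
```

The trace is "ABCDE". Each context resumes right after the call that saved it, much as a kernel task resumes inside schedule() after switch_to.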

The following screencast uses the debugger to set up a breakpoint in __switch_to_asm and examine the stack during the context switch:

Quiz: context switch

We are executing a context switch. Select all of the statements that are true.

- the ESP register is saved in the task structure

- the EIP register is saved in the task structure

- general registers are saved in the task structure

- the ESP register is saved on the stack

- the EIP register is saved on the stack

- general registers are saved on the stack

Blocking and waking up tasks

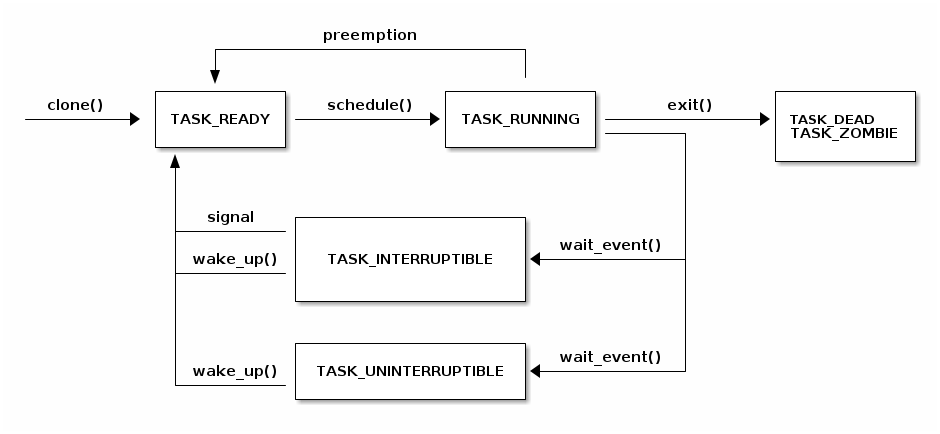

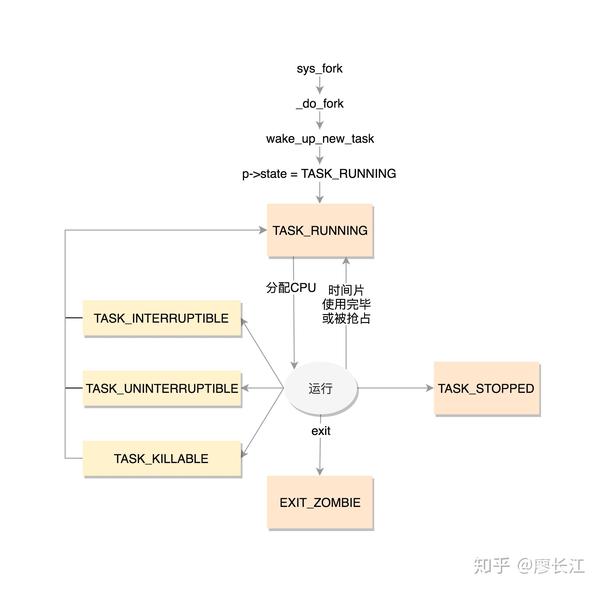

Task states

The following diagram shows the task (thread) states and the possible transitions between them:

Blocking the current thread

Blocking the current thread is an important operation we need to perform to implement efficient task scheduling: we want to run other threads while I/O operations complete.

To accomplish this, the following operations take place:

- Set the current thread state to TASK_UNINTERRUPTIBLE or TASK_INTERRUPTIBLE

- Add the task to a waiting queue

- Call the scheduler which will pick up a new task from the READY queue

- Do the context switch to the new task

Below are some snippets for the wait_event implementation. Note that the waiting queue is a list with some extra information like a pointer to the task struct.

Also note that a lot of effort is put into making sure no deadlock can occur between wait_event and wake_up. The task is added to the list before the condition is checked, and signals are checked before schedule() is called.
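In user space, POSIX condition variables follow the same ordering rule to prevent lost wakeups. The waiter checks the condition under a lock and re-checks it after every wakeup. The following sketch is a wait_event/wake_up analogue. struct waitq, waitq_wait() and waitq_wake() are hypothetical names, and pthread_cond_t only plays the role of a kernel wait queue.

```c
#include <assert.h>
#include <pthread.h>
#include <stdbool.h>

/* Hypothetical user-space analogue of a wait queue and its condition. */
struct waitq {
    pthread_mutex_t lock;
    pthread_cond_t cond;   /* plays the role of the kernel wait queue */
    bool condition;
};

/* Like wait_event(): sleep until condition is true, re-checking after every
 * wakeup because wakeups can be spurious. */
void waitq_wait(struct waitq *wq)
{
    assert(wq != NULL);
    pthread_mutex_lock(&wq->lock);
    while (!wq->condition)
        pthread_cond_wait(&wq->cond, &wq->lock);  /* unlocks, sleeps, relocks */
    pthread_mutex_unlock(&wq->lock);
}

/* Like making the condition true and then calling wake_up(). */
void waitq_wake(struct waitq *wq)
{
    pthread_mutex_lock(&wq->lock);
    wq->condition = true;
    pthread_cond_broadcast(&wq->cond);
    pthread_mutex_unlock(&wq->lock);
}

static void *waiter(void *arg)
{
    waitq_wait(arg);
    return NULL;
}

/* Starts a waiter, wakes it (possibly before it sleeps) and joins it.
 * Returns 0 on success; a lost wakeup would make this hang instead. */
int waitq_demo(void)
{
    struct waitq wq = { PTHREAD_MUTEX_INITIALIZER, PTHREAD_COND_INITIALIZER, false };
    pthread_t t;
    if (pthread_create(&t, NULL, waiter, &wq) != 0)
        return -1;
    waitq_wake(&wq);
    return pthread_join(t, NULL);
}
```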

Waking up a task

We can wake up tasks by using the wake_up primitive. The following high level operations are performed to wake up a task:

- Select a task from the waiting queue

- Set the task state to TASK_READY

- Insert the task into the scheduler's READY queue

- On SMP systems this is a complex operation: each processor has its own queue, queues need to be balanced, and CPUs need to be signaled

Preempting tasks

Up until this point we looked at how context switches occur voluntarily between threads. Next we will look at how preemption is handled. We will start with the simpler case, where the kernel is configured as non-preemptive, and then move to the preemptive kernel case.

Non-preemptive kernel

- At every tick the kernel checks to see if the current process has consumed its time slice

- If that happens a flag is set in interrupt context

- Before returning to userspace the kernel checks this flag and calls schedule() if needed

- In this case tasks are not preempted while running in kernel mode (e.g. system call) so there are no synchronization issues

Preemptive kernel

In this case the current task can be preempted even if we are running in kernel mode and executing a system call. This requires using special synchronization primitives: preempt_disable and preempt_enable.

Preemption is disabled automatically when a spinlock is used. This simplifies handling for preemptive kernels, and synchronization primitives are needed for the SMP case anyway.

As before, if we run into a condition that requires the preemption of the current task (its time slice has expired), a flag is set. This flag is checked whenever preemption is re-enabled, e.g. when exiting a critical section through spin_unlock(). If needed, the scheduler is then called to select a new task.
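The deferred-preemption logic can be modelled as a counter plus a flag. The model below is a toy with hypothetical names and is much simpler than the kernel, which keeps the count in preempt_count and the flag as TIF_NEED_RESCHED. A tick that arrives inside a critical section only sets the flag, and leaving the outermost critical section calls the scheduler.

```c
#include <assert.h>
#include <stdbool.h>

/* Toy per-CPU state; names are hypothetical. */
struct cpu_state {
    int preempt_count;   /* > 0 while preemption is disabled */
    bool need_resched;   /* set when the time slice has expired */
    int schedule_calls;  /* how many times the "scheduler" ran */
};

static void sim_schedule(struct cpu_state *c)
{
    c->need_resched = false;
    c->schedule_calls++;
}

void sim_preempt_disable(struct cpu_state *c)   /* e.g. inside spin_lock() */
{
    c->preempt_count++;
}

void sim_preempt_enable(struct cpu_state *c)    /* e.g. inside spin_unlock() */
{
    assert(c->preempt_count > 0);
    /* Honour a deferred request once the outermost section is left. */
    if (--c->preempt_count == 0 && c->need_resched)
        sim_schedule(c);
}

/* Timer tick with an expired slice: set the flag, and preempt right away
 * (standing in for the return from the interrupt) only if preemption is enabled. */
void sim_timer_tick(struct cpu_state *c)
{
    c->need_resched = true;
    if (c->preempt_count == 0)
        sim_schedule(c);
}
```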

Process context

We have now examined the implementation of processes and threads (tasks), how context switching occurs, and how we can block, wake up and preempt tasks. We can finally define what the process context is and what its properties are:

The kernel is executing in process context when it is running a system call.

In process context there is a well-defined context, and we can access the current process data with current.

In process context we can sleep (wait on a condition).

In process context we can access user space (unless we are running in a kernel thread context).

Kernel threads

Sometimes the kernel core or device drivers need to perform blocking operations, and thus they need to run in process context.

Kernel threads are used exactly for this. They are a special class of tasks that don't use "userspace" resources (e.g. they have no address space or opened files).

The following screencast takes a closer look at kernel threads:

Using gdb scripts for kernel inspection

The Linux kernel comes with a predefined set of extra gdb commands that we can use to inspect the kernel during debugging. They are loaded automatically as long as gdbinit is properly set up.

All of the kernel-specific commands are prefixed with lx-. You can use TAB in gdb to list all of them:

The implementation of the commands can be found in scripts/gdb/linux. Let's take a closer look at the lx-ps implementation:

Quiz: Kernel gdb scripts

What is the following change of the lx-ps script trying to accomplish?


Control Groups

Written by Paul Menage <menage@google.com> based on CPUSETS

Original copyright statements from CPUSETS:

Portions Copyright (C) 2004 BULL SA.

Portions Copyright (c) 2004-2006 Silicon Graphics, Inc.

Modified by Paul Jackson <pj@sgi.com>

Modified by Christoph Lameter <cl@linux.com>

1. Control Groups

1.1 What are cgroups?

Control Groups provide a mechanism for aggregating/partitioning sets of tasks, and all their future children, into hierarchical groups with specialized behaviour.

Definitions:

A cgroup associates a set of tasks with a set of parameters for one or more subsystems.

A subsystem is a module that makes use of the task grouping facilities provided by cgroups to treat groups of tasks in particular ways. A subsystem is typically a “resource controller” that schedules a resource or applies per-cgroup limits, but it may be anything that wants to act on a group of processes, e.g. a virtualization subsystem.

A hierarchy is a set of cgroups arranged in a tree, such that every task in the system is in exactly one of the cgroups in the hierarchy, and a set of subsystems; each subsystem has system-specific state attached to each cgroup in the hierarchy. Each hierarchy has an instance of the cgroup virtual filesystem associated with it.

At any one time there may be multiple active hierarchies of task cgroups. Each hierarchy is a partition of all tasks in the system.

User-level code may create and destroy cgroups by name in an instance of the cgroup virtual file system, specify and query to which cgroup a task is assigned, and list the task PIDs assigned to a cgroup. Those creations and assignments only affect the hierarchy associated with that instance of the cgroup file system.

On their own, the only use for cgroups is for simple job tracking. The intention is that other subsystems hook into the generic cgroup support to provide new attributes for cgroups, such as accounting/limiting the resources which processes in a cgroup can access. For example, cpusets (see CPUSETS ) allow you to associate a set of CPUs and a set of memory nodes with the tasks in each cgroup.

1.2 Why are cgroups needed?

There are multiple efforts to provide process aggregations in the Linux kernel, mainly for resource-tracking purposes. Such efforts include cpusets, CKRM/ResGroups, UserBeanCounters, and virtual server namespaces. These all require the basic notion of a grouping/partitioning of processes, with newly forked processes ending up in the same group (cgroup) as their parent process.

The kernel cgroup patch provides the minimum essential kernel mechanisms required to efficiently implement such groups. It has minimal impact on the system fast paths, and provides hooks for specific subsystems such as cpusets to provide additional behaviour as desired.

Multiple hierarchy support is provided to allow for situations where the division of tasks into cgroups is distinctly different for different subsystems - having parallel hierarchies allows each hierarchy to be a natural division of tasks, without having to handle complex combinations of tasks that would be present if several unrelated subsystems needed to be forced into the same tree of cgroups.

At one extreme, each resource controller or subsystem could be in a separate hierarchy; at the other extreme, all subsystems would be attached to the same hierarchy.

As an example of a scenario (originally proposed by vatsa@in.ibm.com) that can benefit from multiple hierarchies, consider a large university server with various users: students, professors, system tasks, etc. The resource planning for this server could be along the following lines:

Browsers like Firefox/Lynx go into the WWW network class, while (k)nfsd goes into the NFS network class.

At the same time Firefox/Lynx will share an appropriate CPU/Memory class depending on who launched it (prof/student).

With the ability to classify tasks differently for different resources (by putting those resource subsystems in different hierarchies), the admin can easily set up a script which receives exec notifications and depending on who is launching the browser he can:

With only a single hierarchy, the administrator would potentially have to create a separate cgroup for every browser launched and associate it with the appropriate network and other resource classes. This may lead to a proliferation of such cgroups.

Also let’s say that the administrator would like to give enhanced network access temporarily to a student’s browser (since it is night and the user wants to do online gaming :)) OR give one of the student’s simulation apps enhanced CPU power.

With ability to write PIDs directly to resource classes, it’s just a matter of:

Without this ability, the administrator would have to split the cgroup into multiple separate ones and then associate the new cgroups with the new resource classes.

1.3 How are cgroups implemented?

Control Groups extends the kernel as follows:

Each task in the system has a reference-counted pointer to a css_set. A css_set contains a set of reference-counted pointers to cgroup_subsys_state objects, one for each cgroup subsystem registered in the system. There is no direct link from a task to the cgroup of which it’s a member in each hierarchy, but this can be determined by following pointers through the cgroup_subsys_state objects. This is because accessing the subsystem state is something that’s expected to happen frequently and in performance-critical code, whereas operations that require a task’s actual cgroup assignments (in particular, moving between cgroups) are less common. A linked list runs through the cg_list field of each task_struct using the css_set, anchored at css_set->tasks. A cgroup hierarchy filesystem can be mounted for browsing and manipulation from user space. You can list all the tasks (by PID) attached to any cgroup.

The implementation of cgroups requires a few simple hooks into the rest of the kernel, none in performance-critical paths:

- in init/main.c, to initialize the root cgroups and initial css_set at system boot;
- in fork and exit, to attach and detach a task from its css_set.

In addition, a new file system of type “cgroup” may be mounted, to enable browsing and modifying the cgroups presently known to the kernel. When mounting a cgroup hierarchy, you may specify a comma-separated list of subsystems to mount as the filesystem mount options. By default, mounting the cgroup filesystem attempts to mount a hierarchy containing all registered subsystems.

If an active hierarchy with exactly the same set of subsystems already exists, it will be reused for the new mount. If no existing hierarchy matches, and any of the requested subsystems are in use in an existing hierarchy, the mount will fail with -EBUSY. Otherwise, a new hierarchy is activated, associated with the requested subsystems.

It’s not currently possible to bind a new subsystem to an active cgroup hierarchy, or to unbind a subsystem from an active cgroup hierarchy. This may be possible in future, but is fraught with nasty error-recovery issues.

When a cgroup filesystem is unmounted, if there are any child cgroups created below the top-level cgroup, that hierarchy will remain active even though unmounted; if there are no child cgroups then the hierarchy will be deactivated.

No new system calls are added for cgroups - all support for querying and modifying cgroups is via this cgroup file system.

Each task under /proc has an added file named ‘cgroup’ displaying, for each active hierarchy, the subsystem names and the cgroup name as the path relative to the root of the cgroup file system.

Each cgroup is represented by a directory in the cgroup file system containing the following files describing that cgroup:

- tasks: list of tasks (by PID) attached to that cgroup. This list is not guaranteed to be sorted. Writing a thread ID into this file moves the thread into this cgroup.
- cgroup.procs: list of thread group IDs in the cgroup. This list is not guaranteed to be sorted or free of duplicate TGIDs, and userspace should sort/uniquify the list if this property is required. Writing a thread group ID into this file moves all threads in that group into this cgroup.
- notify_on_release flag: run the release agent on exit?
- release_agent: the path to use for release notifications (this file exists in the top cgroup only)

Other subsystems such as cpusets may add additional files in each cgroup dir.

New cgroups are created using the mkdir system call or shell command. The properties of a cgroup, such as its flags, are modified by writing to the appropriate file in that cgroup’s directory, as listed above.

The named hierarchical structure of nested cgroups allows partitioning a large system into nested, dynamically changeable, “soft-partitions”.

Attaching each task to a cgroup (an attachment automatically inherited at fork by any children of that task) allows organizing the workload on a system into related sets of tasks. A task may be re-attached to any other cgroup, if allowed by the permissions on the necessary cgroup file system directories.

When a task is moved from one cgroup to another, it gets a new css_set pointer: if there’s already an existing css_set with the desired collection of cgroups, that css_set is reused; otherwise a new css_set is allocated. The appropriate existing css_set is located by looking it up in a hash table.

To allow access from a cgroup to the css_sets (and hence tasks) that comprise it, a set of cg_cgroup_link objects form a lattice; each cg_cgroup_link is linked into a list of cg_cgroup_links for a single cgroup on its cgrp_link_list field, and a list of cg_cgroup_links for a single css_set on its cg_link_list.

Thus the set of tasks in a cgroup can be listed by iterating over each css_set that references the cgroup, and sub-iterating over each css_set’s task set.

The use of a Linux virtual file system (vfs) to represent the cgroup hierarchy provides for a familiar permission and name space for cgroups, with a minimum of additional kernel code.

1.4 What does notify_on_release do?

If the notify_on_release flag is enabled (1) in a cgroup, then whenever the last task in the cgroup leaves (exits or attaches to some other cgroup) and the last child cgroup of that cgroup is removed, the kernel runs the command specified by the contents of the “release_agent” file in that hierarchy’s root directory, supplying the pathname (relative to the mount point of the cgroup file system) of the abandoned cgroup. This enables automatic removal of abandoned cgroups. The default value of notify_on_release in the root cgroup at system boot is disabled (0). The default value for other cgroups at creation is the current value of their parent’s notify_on_release setting. The default value of a cgroup hierarchy’s release_agent path is empty.

1.5 What does clone_children do?

This flag only affects the cpuset controller. If the clone_children flag is enabled (1) in a cgroup, a new cpuset cgroup will copy its configuration from the parent during initialization.

1.6 How do I use cgroups?

To start a new job that is to be contained within a cgroup, using the “cpuset” cgroup subsystem, the steps are something like:

For example, the following sequence of commands will setup a cgroup named “Charlie”, containing just CPUs 2 and 3, and Memory Node 1, and then start a subshell ‘sh’ in that cgroup:

2. Usage Examples and Syntax

2.1 Basic usage

Creating, modifying, using cgroups can be done through the cgroup virtual filesystem.

To mount a cgroup hierarchy with all available subsystems, type:

The “xxx” is not interpreted by the cgroup code, but will appear in /proc/mounts so may be any useful identifying string that you like.

Note: Some subsystems do not work without some user input first. For instance, if cpusets are enabled, the user will have to populate the cpus and mems files for each new cgroup created before that group can be used.

As explained in section 1.2 (Why are cgroups needed?), you should create different hierarchies of cgroups for each single resource or group of resources you want to control. Therefore, you should mount a tmpfs on /sys/fs/cgroup and create directories for each cgroup resource or resource group:

To mount a cgroup hierarchy with just the cpuset and memory subsystems, type:

While remounting cgroups is currently supported, it is not recommended. Remounting allows changing bound subsystems and release_agent. Rebinding is hardly useful as it only works when the hierarchy is empty, and release_agent itself should be replaced with conventional fsnotify. The support for remounting will be removed in the future.

To specify a hierarchy’s release_agent:

Note that specifying ‘release_agent’ more than once will return failure.

Note that changing the set of subsystems is currently only supported when the hierarchy consists of a single (root) cgroup. Supporting the ability to arbitrarily bind/unbind subsystems from an existing cgroup hierarchy is intended to be implemented in the future.

Then under /sys/fs/cgroup/rg1 you can find a tree that corresponds to the tree of the cgroups in the system. For instance, /sys/fs/cgroup/rg1 is the cgroup that holds the whole system.

If you want to change the value of release_agent:

It can also be changed via remount.

If you want to create a new cgroup under /sys/fs/cgroup/rg1:

Now you want to do something with this cgroup:

`# cd my_cgroup`

In this directory you can find several files:

Now attach your shell to this cgroup:

You can also create cgroups inside your cgroup by using mkdir in this directory:

To remove a cgroup, just use rmdir:

This will fail if the cgroup is in use (has cgroups inside, or has processes attached, or is held alive by other subsystem-specific reference).

2.2 Attaching processes

Note that it is PID, not PIDs. You can only attach ONE task at a time. If you have several tasks to attach, you have to do it one after another:

You can attach the current shell task by echoing 0:

You can use the cgroup.procs file instead of the tasks file to move all threads in a threadgroup at once. Echoing the PID of any task in a threadgroup to cgroup.procs causes all tasks in that threadgroup to be attached to the cgroup. Writing 0 to cgroup.procs moves all tasks in the writing task’s threadgroup.

Note: Since every task is always a member of exactly one cgroup in each mounted hierarchy, to remove a task from its current cgroup you must move it into a new cgroup (possibly the root cgroup) by writing to the new cgroup’s tasks file.

Note: Due to some restrictions enforced by some cgroup subsystems, moving a process to another cgroup can fail.

2.3 Mounting hierarchies by name

Passing the name=<x> option when mounting a cgroups hierarchy associates the given name with the hierarchy. This can be used when mounting a pre-existing hierarchy, in order to refer to it by name rather than by its set of active subsystems. Each hierarchy is either nameless, or has a unique name.

The name should match [\w.-]+

When passing a name=<x> option for a new hierarchy, you need to specify subsystems manually; the legacy behaviour of mounting all subsystems when none are explicitly specified is not supported when you give a hierarchy a name.

The name of the hierarchy appears as part of the hierarchy description in /proc/mounts and /proc/<pid>/cgroup.

3. Kernel API

3.1 Overview

Each kernel subsystem that wants to hook into the generic cgroup system needs to create a cgroup_subsys object. This contains various methods, which are callbacks from the cgroup system, along with a subsystem ID which will be assigned by the cgroup system.

Other fields in the cgroup_subsys object include:

subsys_id: a unique array index for the subsystem, indicating which entry in cgroup->subsys[] this subsystem should be managing.

name: should be initialized to a unique subsystem name. Should be no longer than MAX_CGROUP_TYPE_NAMELEN.

early_init: indicates whether the subsystem needs early initialization at system boot.

Each cgroup object created by the system has an array of pointers, indexed by subsystem ID; each pointer is entirely managed by its subsystem, and the generic cgroup code will never touch it.

3.2 Synchronization

There is a global mutex, cgroup_mutex, used by the cgroup system. This should be taken by anything that wants to modify a cgroup. It may also be taken to prevent cgroups from being modified, but more specific locks may be more appropriate in that situation.

See kernel/cgroup.c for more details.

Subsystems can take/release the cgroup_mutex via the functions cgroup_lock()/cgroup_unlock().

Accessing a task’s cgroup pointer may be done in the following ways:

- while holding cgroup_mutex
- while holding the task’s alloc_lock (via task_lock())
- inside an rcu_read_lock() section via rcu_dereference()

3.3 Subsystem API

Each subsystem should:

add an entry in linux/cgroup_subsys.h

define a cgroup_subsys object called <name>_cgrp_subsys

Each subsystem may export the following methods. The only mandatory methods are css_alloc/free. Any others that are null are presumed to be successful no-ops.

struct cgroup_subsys_state *css_alloc(struct cgroup *cgrp) (cgroup_mutex held by caller)

Called to allocate a subsystem state object for a cgroup. The subsystem should allocate its subsystem state object for the passed cgroup, returning a pointer to the new object on success or an ERR_PTR() value. On success, the subsystem pointer should point to a structure of type cgroup_subsys_state (typically embedded in a larger subsystem-specific object), which will be initialized by the cgroup system. Note that this will be called at initialization to create the root subsystem state for this subsystem; this case can be identified by the passed cgroup object having a NULL parent (since it’s the root of the hierarchy) and may be an appropriate place for initialization code.

int css_online(struct cgroup *cgrp) (cgroup_mutex held by caller)

Called after @cgrp has successfully completed all allocations and been made visible to the cgroup_for_each_child/descendant_*() iterators. The subsystem may choose to fail creation by returning -errno. This callback can be used to implement reliable state sharing and propagation along the hierarchy. See the comment on cgroup_for_each_descendant_pre() for details.

void css_offline(struct cgroup *cgrp); (cgroup_mutex held by caller)

This is the counterpart of css_online() and called iff css_online() has succeeded on @cgrp. This signifies the beginning of the end of @cgrp. @cgrp is being removed and the subsystem should start dropping all references it’s holding on @cgrp. When all references are dropped, cgroup removal will proceed to the next step - css_free(). After this callback, @cgrp should be considered dead to the subsystem.

void css_free(struct cgroup *cgrp) (cgroup_mutex held by caller)

The cgroup system is about to free @cgrp; the subsystem should free its subsystem state object. By the time this method is called, @cgrp is completely unused; @cgrp->parent is still valid. (Note: this can also be called for a newly-created cgroup if an error occurs after this subsystem’s css_alloc() method has been called for the new cgroup.)

int can_attach(struct cgroup *cgrp, struct cgroup_taskset *tset) (cgroup_mutex held by caller)

Called prior to moving one or more tasks into a cgroup; if the subsystem returns an error, this will abort the attach operation. @tset contains the tasks to be attached and is guaranteed to have at least one task in it.

When @tset contains multiple tasks:

- it’s guaranteed that all are from the same thread group;
- @tset contains all tasks from the thread group whether or not they’re switching cgroups;
- the first task is the leader.

Each @tset entry also contains the task’s old cgroup and tasks which aren’t switching cgroup can be skipped easily using the cgroup_taskset_for_each() iterator. Note that this isn’t called on a fork. If this method returns 0 (success) then this should remain valid while the caller holds cgroup_mutex and it is ensured that either attach() or cancel_attach() will be called in future.

void css_reset(struct cgroup_subsys_state *css) (cgroup_mutex held by caller)

An optional operation which should restore @css’s configuration to the initial state. This is currently only used on the unified hierarchy when a subsystem is disabled on a cgroup through “cgroup.subtree_control” but should remain enabled because other subsystems depend on it. cgroup core makes such a css invisible by removing the associated interface files and invokes this callback so that the hidden subsystem can return to the initial neutral state. This prevents unexpected resource control from a hidden css and ensures that the configuration is in the initial state when it is made visible again later.

void cancel_attach(struct cgroup *cgrp, struct cgroup_taskset *tset) (cgroup_mutex held by caller)

Called when a task attach operation has failed after can_attach() has succeeded. A subsystem whose can_attach() has side effects should provide this function so that it can implement a rollback; otherwise it is not necessary. This will be called only for subsystems whose can_attach() operation has succeeded. The parameters are identical to can_attach().

void attach(struct cgroup *cgrp, struct cgroup_taskset *tset) (cgroup_mutex held by caller)

Called after the task has been attached to the cgroup, to allow any post-attachment activity that requires memory allocations or blocking. The parameters are identical to can_attach().

void fork(struct task_struct *task)

Called when a task is forked into a cgroup.

void exit(struct task_struct *task)

Called during task exit.

void free(struct task_struct *task)

Called when the task_struct is freed.

void bind(struct cgroup *root) (cgroup_mutex held by caller)

Called when a cgroup subsystem is rebound to a different hierarchy and root cgroup. Currently this will only involve movement between the default hierarchy (which never has sub-cgroups) and a hierarchy that is being created/destroyed (and hence has no sub-cgroups).

4. Extended attribute usage

cgroup filesystem supports certain types of extended attributes in its directories and files. The current supported types are:

- Trusted (XATTR_TRUSTED)
- Security (XATTR_SECURITY)

Both require CAP_SYS_ADMIN capability to set.

Like in tmpfs, the extended attributes in the cgroup filesystem are stored using kernel memory, and it’s advised to keep their usage to a minimum. This is why user-defined extended attributes are not supported: any user could set them, and there is no limit on the value size.

The currently known users of this feature are SELinux, to limit cgroup usage in containers, and systemd, for assorted metadata such as the main PID in a cgroup (systemd creates a cgroup per service).

5. Questions


struct task_struct reference: `#include <sched.h>`

Definition at line 1190 of file sched.h.


The Linux Kernel


Per-task statistics interface

Taskstats is a netlink-based interface for sending per-task and per-process statistics from the kernel to userspace.

Taskstats was designed for the following benefits:

efficiently provide statistics during lifetime of a task and on its exit

unified interface for multiple accounting subsystems

extensibility for use by future accounting patches

Terminology

“pid”, “tid” and “task” are used interchangeably and refer to the standard Linux task defined by struct task_struct. per-pid stats are the same as per-task stats.

“tgid”, “process” and “thread group” are used interchangeably and refer to the tasks that share an mm_struct i.e. the traditional Unix process. Despite the use of tgid, there is no special treatment for the task that is thread group leader - a process is deemed alive as long as it has any task belonging to it.

To get statistics during a task’s lifetime, userspace opens a unicast netlink socket (NETLINK_GENERIC family) and sends commands specifying a pid or a tgid. The response contains statistics for a task (if pid is specified) or the sum of statistics for all tasks of the process (if tgid is specified).

To obtain statistics for tasks which are exiting, the userspace listener sends a register command and specifies a cpumask. Whenever a task exits on one of the cpus in the cpumask, its per-pid statistics are sent to the registered listener. Using cpumasks allows the data received by one listener to be limited and assists in flow control over the netlink interface and is explained in more detail below.

If the exiting task is the last thread exiting its thread group, an additional record containing the per-tgid stats is also sent to userspace. The latter contains the sum of per-pid stats for all threads in the thread group, both past and present.

getdelays.c is a simple utility demonstrating usage of the taskstats interface for reporting delay accounting statistics. Users can register cpumasks, send commands and process responses, listen for per-tid/tgid exit data, write the data received to a file and do basic flow control by increasing receive buffer sizes.

Interface

The user-kernel interface is encapsulated in include/linux/taskstats.h.

To avoid this documentation becoming obsolete as the interface evolves, only an outline of the current version is given. taskstats.h always overrides the description here.

struct taskstats is the common accounting structure for both per-pid and per-tgid data. It is versioned and can be extended by each accounting subsystem that is added to the kernel. The fields and their semantics are defined in the taskstats.h file.

The data exchanged between user and kernel space is a netlink message belonging to the NETLINK_GENERIC family and using the netlink attributes interface. The messages are in the format:

The taskstats payload is one of the following three kinds:

1. Commands: Sent from user to kernel. Commands to get data on a pid/tgid consist of one attribute, of type TASKSTATS_CMD_ATTR_PID/TGID, containing a u32 pid or tgid in the attribute payload. The pid/tgid denotes the task/process for which userspace wants statistics.

Commands to register/deregister interest in exit data from a set of cpus consist of one attribute, of type TASKSTATS_CMD_ATTR_REGISTER/DEREGISTER_CPUMASK, and contain a cpumask in the attribute payload. The cpumask is specified as an ASCII string of comma-separated cpu ranges; e.g. to listen to exit data from cpus 1,2,3,5,7,8, the cpumask would be “1-3,5,7-8”. If userspace forgets to deregister interest in cpus before closing the listening socket, the kernel cleans up its interest set over time. However, for the sake of efficiency, an explicit deregistration is advisable.

2. Response for a command: sent from the kernel in response to a userspace command. The payload is a series of three attributes of type:

a) TASKSTATS_TYPE_AGGR_PID/TGID: attribute containing no payload that indicates a pid/tgid will be followed by some stats.

b) TASKSTATS_TYPE_PID/TGID: attribute whose payload is the pid/tgid whose stats are being returned.

c) TASKSTATS_TYPE_STATS: attribute with a struct taskstats as payload. The same structure is used for both per-pid and per-tgid stats.

3. New message sent by the kernel whenever a task exits. The payload consists of a series of attributes of the following type:

TASKSTATS_TYPE_AGGR_PID: indicates next two attributes will be pid+stats

TASKSTATS_TYPE_PID: contains exiting task’s pid

TASKSTATS_TYPE_STATS: contains the exiting task’s per-pid stats

TASKSTATS_TYPE_AGGR_TGID: indicates next two attributes will be tgid+stats

TASKSTATS_TYPE_TGID: contains tgid of process to which task belongs

TASKSTATS_TYPE_STATS: contains the per-tgid stats for exiting task’s process

Per-tgid stats

Taskstats provides per-process stats, in addition to per-task stats, since resource management is often done at a process granularity and aggregating task stats in userspace alone is inefficient and potentially inaccurate (due to lack of atomicity).

However, maintaining per-process, in addition to per-task stats, within the kernel has space and time overheads. To address this, the taskstats code accumulates each exiting task’s statistics into a process-wide data structure. When the last task of a process exits, the process level data accumulated also gets sent to userspace (along with the per-task data).

When a user queries per-tgid data, the statistics of all live threads in the group are summed and added to the accumulated total for previously exited threads of the same thread group.

Extending taskstats

There are two ways to extend the taskstats interface to export more per-task/process stats as patches to collect them get added to the kernel in future:

1. Adding more fields to the end of the existing struct taskstats. Backward compatibility is ensured by the version number within the structure. Userspace will use only the fields of the struct that correspond to the version it is using.

2. Defining separate statistic structs and using the netlink attributes interface to return them. Since userspace processes each netlink attribute independently, it can always ignore attributes whose type it does not understand (because it is using an older version of the interface).

Choosing between 1. and 2. is a matter of trading off flexibility and overhead. If only a few fields need to be added, then 1. is the preferable path since the kernel and userspace don’t need to incur the overhead of processing new netlink attributes. But if the new fields expand the existing struct too much, requiring disparate userspace accounting utilities to unnecessarily receive large structures whose fields are of no interest, then extending the attributes structure would be worthwhile.

Flow control for taskstats

When the rate of task exits becomes large, a listener may not be able to keep up with the kernel’s rate of sending per-tid/tgid exit data leading to data loss. This possibility gets compounded when the taskstats structure gets extended and the number of cpus grows large.

To avoid losing statistics, userspace should do one or more of the following:

- Increase the receive buffer sizes for the netlink sockets opened by listeners to receive exit data.

- Create more listeners and reduce the number of cpus being listened to by each listener. In the extreme case, there could be one listener for each cpu. Users may also consider setting the cpu affinity of the listener to the subset of cpus to which it listens, especially if they are listening to just one cpu.
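A minimal sketch of both measures for a listener written in C, assuming a Linux host. open_genl_socket(), grow_rcvbuf() and pin_to_cpu() are hypothetical helper names built on the standard socket(), setsockopt()/getsockopt() and sched_setaffinity() calls. Resolving the taskstats generic netlink family and registering a CPU range with the TASKSTATS_CMD_ATTR_REGISTER_CPUMASK attribute are not shown.

```c
#define _GNU_SOURCE
#include <sched.h>
#include <sys/socket.h>
#include <linux/netlink.h>
#include <unistd.h>

/* Open a generic netlink socket, the kind a taskstats listener uses. */
static int open_genl_socket(void)
{
    return socket(AF_NETLINK, SOCK_RAW, NETLINK_GENERIC);
}

/* Request a larger receive buffer and return the size actually granted.
 * Per socket(7), Linux doubles the requested value for bookkeeping and caps
 * the request at net.core.rmem_max; SO_RCVBUFFORCE can exceed the cap but
 * requires CAP_NET_ADMIN. */
static int grow_rcvbuf(int fd, int bytes)
{
    if (setsockopt(fd, SOL_SOCKET, SO_RCVBUF, &bytes, sizeof(bytes)) < 0)
        return -1;
    int actual = 0;
    socklen_t len = sizeof(actual);
    if (getsockopt(fd, SOL_SOCKET, SO_RCVBUF, &actual, &len) < 0)
        return -1;
    return actual;
}

/* Pin the calling thread to a single CPU, e.g. the one CPU it listens to. */
static int pin_to_cpu(int cpu)
{
    cpu_set_t set;
    CPU_ZERO(&set);
    CPU_SET(cpu, &set);
    return sched_setaffinity(0, sizeof(set), &set);
}
```

With one listener per CPU, each listener would call pin_to_cpu() with the same CPU it registered for.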

Despite these measures, if userspace receives ENOBUFS errors indicating that the receive buffers overflowed, it should take measures to handle the loss of data.
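One way to handle it is to treat ENOBUFS as a counted, non-fatal event rather than a reason to exit. The sketch below assumes a non-blocking receive loop; struct listener_stats and recv_one() are hypothetical names, and how to recover the lost records is left to the application.

```c
#include <errno.h>
#include <sys/socket.h>
#include <sys/types.h>

/* Hypothetical listener counters. */
struct listener_stats {
    unsigned long messages;   /* messages received */
    unsigned long overruns;   /* ENOBUFS: the kernel dropped data for us */
};

/* Receive one message without blocking.
 * Returns the byte count, 0 if nothing is queued, or -1 on a real error. */
static ssize_t recv_one(int fd, void *buf, size_t len, struct listener_stats *st)
{
    for (;;) {
        ssize_t n = recv(fd, buf, len, MSG_DONTWAIT);
        if (n >= 0) {
            st->messages++;
            return n;
        }
        if (errno == ENOBUFS) {
            st->overruns++;   /* exit records were lost; the socket is still usable */
            continue;
        }
        if (errno == EINTR)
            continue;
        if (errno == EAGAIN || errno == EWOULDBLOCK)
            return 0;         /* queue is empty */
        return -1;
    }
}
```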

Struct kernel::task::Task

Wraps the kernel’s struct task_struct.

Instances of this type are always ref-counted, that is, a call to get_task_struct ensures that the allocation remains valid at least until the matching call to put_task_struct.
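The get/put contract can be illustrated with a userspace analogue in C. This is not kernel code: struct obj, obj_new(), obj_get() and obj_put() are hypothetical, and C11 atomics stand in for the kernel's own reference counter.

```c
#include <stdatomic.h>
#include <stdlib.h>

/* Hypothetical refcounted object mirroring get_task_struct()/put_task_struct(). */
struct obj {
    atomic_int refs;
    int payload;
};

static int freed_count;   /* for demonstration: how many objects were freed */

static struct obj *obj_new(int payload)
{
    struct obj *o = malloc(sizeof(*o));
    if (!o)
        return NULL;
    atomic_init(&o->refs, 1);   /* the creator holds the first reference */
    o->payload = payload;
    return o;
}

/* Take an extra reference: the object stays valid until the matching put. */
static struct obj *obj_get(struct obj *o)
{
    atomic_fetch_add_explicit(&o->refs, 1, memory_order_relaxed);
    return o;
}

/* Drop a reference; whoever drops the last one frees the object. */
static void obj_put(struct obj *o)
{
    if (atomic_fetch_sub_explicit(&o->refs, 1, memory_order_acq_rel) == 1) {
        free(o);
        freed_count++;
    }
}
```

In the Rust wrapper the pairing is automatic: ARef<Task> takes the reference when it is created and drops it when it goes out of scope.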

The following is an example of getting the PID of the current thread with zero additional cost when compared to the C version:

Getting the PID of the current process, also zero additional cost:

Getting the current task and storing it in some struct. The reference count is automatically incremented when creating State and decremented when it is dropped:

Implementations

pub fn current<'a>() -> TaskRef<'a>

Returns a task reference for the currently executing task/thread.

pub fn group_leader(&self) -> &Task

Returns the group leader of the given task.

pub fn pid(&self) -> pid_t

Returns the PID of the given task.

pub fn signal_pending(&self) -> bool

Determines whether the given task has pending signals.

pub fn spawn<T: FnOnce() + Send + 'static>(name: Arguments<'_>, func: T) -> Result<ARef<Task>>

Starts a new kernel thread and runs it.

Launches 10 threads and waits for them to complete.
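As a rough userspace analogue of that shape (launch 10 threads, then wait for all of them), here is a POSIX threads sketch. It creates ordinary pthreads, not kernel threads, and worker() and run_workers() are hypothetical names.

```c
#include <pthread.h>
#include <stdatomic.h>

#define NTHREADS 10

static atomic_int done_count;   /* how many workers have finished */

static void *worker(void *arg)
{
    (void)arg;
    atomic_fetch_add(&done_count, 1);
    return NULL;
}

/* Launch NTHREADS threads and wait for all of them; returns 0 on success. */
static int run_workers(void)
{
    pthread_t tids[NTHREADS];
    int started = 0;
    while (started < NTHREADS &&
           pthread_create(&tids[started], NULL, worker, NULL) == 0)
        started++;
    for (int i = 0; i < started; i++)
        pthread_join(tids[i], NULL);   /* wait for each thread to complete */
    return started == NTHREADS ? 0 : -1;
}
```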

pub fn wake_up(&self)

Wakes up the task.

Trait Implementations

impl AlwaysRefCounted for Task

fn inc_ref(&self)

Increments the reference count on the object.

unsafe fn dec_ref(obj: NonNull<Self>)

Decrements the reference count on the object.

impl Sync for Task

Auto Trait Implementations

impl RefUnwindSafe for Task

impl Send for Task

impl Unpin for Task

impl UnwindSafe for Task

Blanket Implementations

impl<T> Any for T where T: 'static + ?Sized,

fn type_id(&self) -> TypeId

Gets the TypeId of self.

impl<T> Borrow<T> for T where T: ?Sized,

fn borrow(&self) -> &T

Immutably borrows from an owned value.

impl<T> BorrowMut<T> for T where T: ?Sized,

fn borrow_mut(&mut self) -> &mut T

Mutably borrows from an owned value.

impl<T> From<T> for T

fn from(t: T) -> T

Returns the argument unchanged.

impl<T, U> Into<U> for T where U: From<T>,

fn into(self) -> U

Calls U::from(self).

That is, this conversion is whatever the implementation of From<T> for U chooses to do.

impl<T, U> TryFrom<U> for T where U: Into<T>,

type Error = Infallible

The type returned in the event of a conversion error.

fn try_from(value: U) -> Result<T, <T as TryFrom<U>>::Error>

Performs the conversion.

impl<T, U> TryInto<U> for T where U: TryFrom<T>,

type Error = <U as TryFrom<T>>::Error

fn try_into(self) -> Result<U, <U as TryFrom<T>>::Error>

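Linux Kernel 101: Process Data Structures

- Geek Time column 《趣谈Linux操作系统》 (Fun Talk on the Linux Operating System), lesson 12: "Process data structures (part 1): once there are many projects, you need a project management system"

- Chapter 3. Process Management - Shichao's Notes

- How to kill an individual thread under a process in linux?

- In Linux, once they reach the kernel, processes and threads are both called tasks.

- Each task has a data structure, task_struct, that stores the task's state.

The Linux kernel keeps a linked list of all tasks that chains every task_struct together.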

task_struct

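Let's look at the important fields each task_struct contains.

The fields related to task IDs are the following:

These three fields mean:

- pid: every task has a pid, and it is unique, whether the task is a process or a thread.

- tgid: the pid of the main thread (the thread group leader)

- group_leader: points to the main thread of the process

For a process that has only its main thread, pid is its own, tgid is its own, and group_leader also points to itself.

Things change once a process creates other threads. Each thread has its own pid, its tgid is the pid of the process's main thread, and its group_leader points to the process's main thread.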
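A quick way to see these rules from user space, sketched in C and assuming Linux with glibc: getpid() returns what the kernel calls tgid, while the gettid system call returns the kernel's per-task pid. struct ids, record_ids() and collect_ids() are illustrative names.

```c
#define _GNU_SOURCE
#include <pthread.h>
#include <sys/syscall.h>
#include <sys/types.h>
#include <unistd.h>

/* SYS_gettid is used because older glibc versions lack a gettid() wrapper. */
static pid_t my_tid(void)
{
    return (pid_t)syscall(SYS_gettid);
}

struct ids {
    pid_t pid;   /* getpid(): the kernel's tgid */
    pid_t tid;   /* gettid: the kernel's pid */
};

static void *record_ids(void *arg)
{
    struct ids *out = arg;
    out->pid = getpid();
    out->tid = my_tid();
    return NULL;
}

/* Fill ids for the calling thread and for a freshly created thread. */
static int collect_ids(struct ids *main_ids, struct ids *thread_ids)
{
    pthread_t t;
    record_ids(main_ids);
    if (pthread_create(&t, NULL, record_ids, thread_ids) != 0)
        return -1;
    return pthread_join(t, NULL);
}
```

Called from the main thread, main_ids.pid equals main_ids.tid, while the new thread shares the pid but gets its own tid. Note the naming mismatch: the user-space "PID" is the kernel's tgid, and the user-space "TID" is the kernel's pid.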

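With tgid, we can tell whether a task is a process or a thread: a task whose pid equals its tgid is the process's main thread, and any other task is one of its additional threads.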
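The same check can be made for any task through procfs, whose status file reports both Tgid: and Pid:. A small sketch, assuming Linux procfs; is_group_leader() is a hypothetical helper.

```c
#include <stdio.h>
#include <string.h>
#include <sys/types.h>

/* Returns 1 if the task is its group leader (pid == tgid), 0 if it is a
 * non-leader thread, -1 on error. /proc/<tid> can be opened for any thread
 * id even though only group leaders are listed in /proc. */
static int is_group_leader(pid_t tid)
{
    char path[64], line[256];
    long tgid = -1, pid = -1;
    snprintf(path, sizeof(path), "/proc/%d/status", (int)tid);
    FILE *f = fopen(path, "r");
    if (!f)
        return -1;
    while (fgets(line, sizeof(line), f)) {
        if (strncmp(line, "Tgid:", 5) == 0)
            sscanf(line + 5, "%ld", &tgid);
        else if (strncmp(line, "Pid:", 4) == 0)   /* does not match "PPid:" */
            sscanf(line + 4, "%ld", &pid);
    }
    fclose(f);
    if (tgid < 0 || pid < 0)
        return -1;
    return pid == tgid;
}
```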

So what is the point of distinguishing processes from threads? Consider the following scenarios:

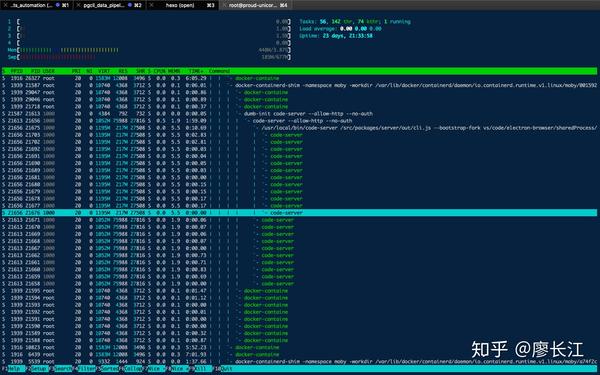

By default, ps lists all processes rather than every thread; listing all the threads would be cluttered and hide what matters.

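- Sending kill -9 to a thread?

Suppose we send an exit signal (such as kill -9) to one thread of a process. We should not terminate only that thread, but the whole process (see below for why). So we need some way to get the pids of all threads in the process that thread belongs to.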
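On Linux, one way to get the pids of all threads in a process is to list /proc/<pid>/task, which has one entry per thread. A sketch, with list_threads() as a hypothetical helper name:

```c
#include <dirent.h>
#include <stdio.h>
#include <stdlib.h>
#include <sys/types.h>

/* Collect the ids of every thread in process `pid` by listing
 * /proc/<pid>/task. Returns the number found (up to max), or -1 on error. */
static int list_threads(pid_t pid, pid_t *out, int max)
{
    char path[64];
    snprintf(path, sizeof(path), "/proc/%d/task", (int)pid);
    DIR *d = opendir(path);
    if (!d)
        return -1;
    int n = 0;
    struct dirent *e;
    while (n < max && (e = readdir(d)) != NULL) {
        if (e->d_name[0] == '.')
            continue;                 /* skip "." and ".." */
        out[n++] = (pid_t)strtol(e->d_name, NULL, 10);
    }
    closedir(d);
    return n;
}
```

For a fatal signal such as SIGKILL the list is not even needed: the kernel terminates the whole thread group no matter which thread the signal was aimed at.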

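Killing an individual thread of a process is very dangerous. For example, a thread that is allocating memory holds the memory allocator's lock. If you forcibly kill it, that lock is never released, and the other threads stall as well. So the main process needs to cooperate in shutting down all the threads gracefully.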

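The image above comes from this Q&A on Stack Overflow.

If you don't believe it, let's run an experiment.

The figure below shows the htop tool: processes are shown in white and threads in green. You can see that each thread does have its own unique PID.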
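The cooperative alternative can be sketched with POSIX threads: the main thread sets a flag, each worker checks it only between critical sections, and the main thread joins them. In this sketch alloc_lock merely stands in for an allocator's internal lock, and worker_loop() and run_and_stop() are hypothetical names.

```c
#include <pthread.h>
#include <stdatomic.h>
#include <stdbool.h>

#define NWORKERS 4

static atomic_bool stop_requested;   /* set by the main thread */
static pthread_mutex_t alloc_lock = PTHREAD_MUTEX_INITIALIZER;  /* stand-in lock */
static long work_done;               /* protected by alloc_lock */

static void *worker_loop(void *arg)
{
    (void)arg;
    while (!atomic_load(&stop_requested)) {
        pthread_mutex_lock(&alloc_lock);
        work_done++;                          /* critical section */
        pthread_mutex_unlock(&alloc_lock);    /* released before checking the flag */
    }
    return NULL;                              /* exit only at a safe point */
}

/* Start workers, ask them to stop, and wait. No thread dies holding the lock. */
static int run_and_stop(void)
{
    pthread_t t[NWORKERS];
    int started = 0;
    while (started < NWORKERS &&
           pthread_create(&t[started], NULL, worker_loop, NULL) == 0)
        started++;
    atomic_store(&stop_requested, true);      /* a request, not a kill */
    for (int i = 0; i < started; i++)
        pthread_join(t[i], NULL);
    /* Every worker exited at a safe point, so the lock must be free. */
    if (pthread_mutex_trylock(&alloc_lock) != 0)
        return -1;
    pthread_mutex_unlock(&alloc_lock);
    return started == NWORKERS ? 0 : -1;
}
```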

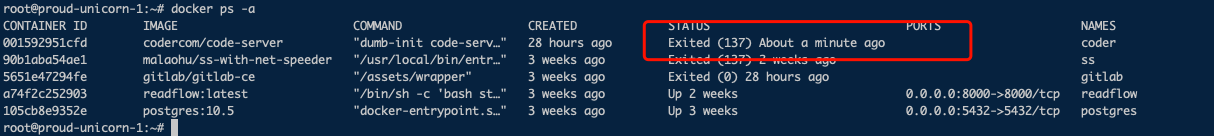

Now let's send kill -9 to the code-server thread marked in the figure, PID 21656. The entire process exits:

In the figure above, the code-server Docker container process exited a minute earlier.
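The experiment can also be reproduced without htop or Docker. The sketch below is Linux-only and its function names are hypothetical: it forks a child with two threads, uses the tgkill system call to send SIGKILL to the non-leader thread only, and reports which signal ended the child. Because SIGKILL is fatal to the whole thread group, the entire child process dies.

```c
#define _GNU_SOURCE
#include <pthread.h>
#include <signal.h>
#include <sys/syscall.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

static int report_fd;   /* pipe write end used by the child's extra thread */

/* Runs in the child's second thread: report our tid, then idle forever. */
static void *idle_thread(void *arg)
{
    (void)arg;
    pid_t tid = (pid_t)syscall(SYS_gettid);
    if (write(report_fd, &tid, sizeof(tid)) != (ssize_t)sizeof(tid))
        _exit(2);
    for (;;)
        pause();
}

/* Returns the signal that terminated the child, or -1 on failure. */
static int kill_one_thread_demo(void)
{
    int fds[2];
    if (pipe(fds) < 0)
        return -1;
    pid_t child = fork();
    if (child < 0) {
        close(fds[0]);
        close(fds[1]);
        return -1;
    }
    if (child == 0) {
        pthread_t t;
        close(fds[0]);
        report_fd = fds[1];
        if (pthread_create(&t, NULL, idle_thread, NULL) != 0)
            _exit(1);
        for (;;)
            pause();                          /* the leader idles too */
    }
    close(fds[1]);
    pid_t tid;
    ssize_t n = read(fds[0], &tid, sizeof(tid));
    close(fds[0]);
    if (n != (ssize_t)sizeof(tid)) {          /* child failed before reporting */
        kill(child, SIGKILL);
        waitpid(child, NULL, 0);
        return -1;
    }
    syscall(SYS_tgkill, child, tid, SIGKILL); /* aimed at one thread only... */
    int status;
    if (waitpid(child, &status, 0) < 0)
        return -1;
    /* ...yet the whole child process was terminated. */
    return WIFSIGNALED(status) ? WTERMSIG(status) : -1;
}
```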

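Source code: https://github.com/torvalds/linux/blob/master/include/linux/sched.h#L863

- blocked: signals that are blocked and not handled for now

- pending: signals waiting to be handled

- sighand: the signal handlers, i.e. how each signal is to be handled

Note that struct signal_struct *signal; here points to a signal_struct, and that struct contains another pending set, struct sigpending shared_pending;. We mentioned earlier that threads and processes need to be distinguished, and this gives a hint of why: the pending set in signal_struct is shared by the whole thread group, while the one in task_struct belongs to this task alone.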
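User space cannot read these fields directly, but sigpending() reports the calling thread's own pending set combined with the group's shared one, restricted to blocked signals. A sketch of the difference, assuming Linux and glibc; struct pending_view, peek_pending() and pending_demo() are hypothetical names. A signal sent to one thread with pthread_kill() is visible only to that thread, while one sent to the process with kill() is visible to every thread that blocks it.

```c
#define _GNU_SOURCE
#include <pthread.h>
#include <signal.h>
#include <time.h>
#include <unistd.h>

struct pending_view {
    int main_usr1, main_usr2;     /* as seen by the signalled thread */
    int other_usr1, other_usr2;   /* as seen by a sibling thread */
};

static void *peek_pending(void *arg)
{
    struct pending_view *v = arg;
    sigset_t p;
    sigpending(&p);               /* this thread's own set + the shared set */
    v->other_usr1 = sigismember(&p, SIGUSR1);
    v->other_usr2 = sigismember(&p, SIGUSR2);
    return NULL;
}

static int pending_demo(struct pending_view *v)
{
    sigset_t block, p;
    sigemptyset(&block);
    sigaddset(&block, SIGUSR1);
    sigaddset(&block, SIGUSR2);
    /* "blocked": held back instead of delivered; new threads inherit the mask. */
    if (pthread_sigmask(SIG_BLOCK, &block, NULL) != 0)
        return -1;
    pthread_kill(pthread_self(), SIGUSR1);   /* thread-directed: private pending */
    kill(getpid(), SIGUSR2);                 /* process-directed: shared pending */

    sigpending(&p);
    v->main_usr1 = sigismember(&p, SIGUSR1);
    v->main_usr2 = sigismember(&p, SIGUSR2);

    pthread_t t;
    if (pthread_create(&t, NULL, peek_pending, v) != 0)
        return -1;
    pthread_join(t, NULL);

    /* Consume both signals so nothing fires if the mask is lifted later. */
    struct timespec zero = {0, 0};
    while (sigtimedwait(&block, NULL, &zero) > 0)
        ;
    return 0;
}
```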

A task's state can take the following values:
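From user space, a task's state appears as a single letter in field 3 of /proc/<pid>/stat (R running, S interruptible sleep, D uninterruptible sleep, T stopped, Z zombie, among others), roughly corresponding to the kernel's TASK_RUNNING, TASK_INTERRUPTIBLE, TASK_UNINTERRUPTIBLE, __TASK_STOPPED and EXIT_ZOMBIE. A sketch of reading it; task_state() is a hypothetical helper.

```c
#include <stdio.h>
#include <string.h>
#include <sys/types.h>

/* Return the one-letter state of task `pid`, or '?' on error.
 * The comm field in parentheses may itself contain spaces or ')',
 * so the state is taken after the LAST ')'. */
static char task_state(pid_t pid)
{
    char path[64], buf[512];
    snprintf(path, sizeof(path), "/proc/%d/stat", (int)pid);
    FILE *f = fopen(path, "r");
    if (!f)
        return '?';
    size_t n = fread(buf, 1, sizeof(buf) - 1, f);
    fclose(f);
    buf[n] = '\0';
    char *rp = strrchr(buf, ')');
    if (!rp || rp[1] != ' ' || rp[2] == '\0')
        return '?';
    return rp[2];
}
```

A process reading its own entry always sees R, since it is running at that moment; a child stopped with SIGSTOP shows T.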

My WeChat official account: 全栈不存在的


Copyright © 2024 Entrepreneur Media, LLC All rights reserved. Entrepreneur® and its related marks are registered trademarks of Entrepreneur Media LLC

Want to Be More Productive? Here's How Google Executives Structure Their Schedules

These five tactics from inside Google will help you focus and protect your time.

By Jason Feifer May 16, 2024

When a Google executive is feeling unproductive, they have a secret weapon: They can call the company's in-house productivity expert for help.

Her name is Laura Mae Martin. She started in sales at Google, and was so efficient that she eventually moved into the office of the CEO, where she coaches senior executives on how to manage their time. She starts by asking executives to identify their top three priorities, and then puts them to work.

"After I ask an executive I'm coaching what their top three priorities are," Martin writes in her new book, Uptime , "I pull out their printed calendar from the last few weeks. I give them a highlighter and ask them to circle every meeting, task, or individual work time that relates to those three priorities. It quickly starts to become clear whether or not time spent is lining up with priorities."

If your days often feel out of control, or if you're adding more items than you're checking off from your to-do list, you might want to run an exercise like this too.

And that's just the start.

I spoke to Martin for my podcast, Problem Solvers, where we discussed how to identify your priorities, how to say no to things that don't match them, and how to create a "list funnel" to keep yourself on track.

Below, I share five brief tips from her book Uptime that I found especially eye-opening.

1. How to clean up your to-do list

If your to-do list has gotten too large, Martin suggests creating a list with literally everything you believe you can or should be doing. Then, she writes:

I identify roughly a third of the things on the list that are lowest priority. Those are usually the things that have been in my brain to do for a while and keep getting carried over without getting done from list to list. Then for each of those bottom-third items I ask myself: What is the worst thing that would happen if I never do this? Is there any other way for this to get done without my doing it? Is there any way for me to half-do this and move on from it? These questions can get you thinking about how to delegate, how to streamline what you're working on, and how to cut corners where possible.

Those questions, she says, will help you decide what tasks are worth handing off to someone else, simplifying, or maybe even skipping entirely.

2. How to plan for urgent tasks

Here's a familiar problem: Your day is packed with wall-to-wall meetings, and then something urgent comes up. When are you supposed to do this urgent thing? You have two choices: You can either cancel one of your meetings, or work later into the night.

There's a better way: Put an "urgent time block" on your calendar every day. Martin explains how this works for Google Cloud CEO Thomas Kurian:

He sets it for the same time every day. This way, if urgent things come up, there is always time to fit them in without affecting the rest of his calendar. Also, his team knows that this block of time is the same every day so anyone who needs to urgently speak with him can plan their time accordingly. If nothing urgent comes up, this becomes his work time or a chance to check email. This is similar to office hours held by college professors. It's always available and always at the same time, but if no one comes to chat, it becomes work time.

Martin says that another Google executive does this — but with one notable difference. The exec doesn't tell her team when the urgent block is. That way, if nobody needs it, she can use that time for herself.

3. How to focus on bigger things

If you have something important to do, block time for it on your calendar. But sometimes, Martin writes, you should block an entire day for nothing in particular:

Don't underestimate the value of the occasional entirely unplanned day. If you have the ability to do a "no meeting" day on your schedule, take it! A day with no meetings or commitments at all is so different than a day with even one thirty-minute meeting at 2:00 p.m. For some reason, having even a single commitment feels more than thirty minutes' worth because your entire day still has to flow around it.

This, Martin writes, helps you feel "in total control of what you need to do and when you want to do it, and gets you back in touch with your natural productivity patterns."

4. How to tackle huge projects

Have a big project that can't be finished in one sitting? It's often hard to start projects like this, because they seem so daunting — and it's even harder to keep working on them day after day.

Try this, Martin writes: Stop in the middle.

When you are working on a larger, ongoing task that cannot be completed in one sitting, it usually feels right to find a natural stopping point—like the end of an email or the end of a project section. You step away at that point, and let it go until the next time you work on it, when you will start at the beginning of a new section. Ironically, that makes yet another starting point that your brain has to get over. It's as if you're starting a large task all over again. Alternatively, stopping in the middle of something makes it easier to slide back into what you were doing and start again because you already knew what you were about to do next.

Martin says she did this with her book. Instead of stopping a day's work at the end of a chapter, she'd stop in the middle of a chapter — which made it easier to pick up the next day. "If you're working on large multistep projects," she advises, "try to stop at a point when your brain already knows the next thing to do."

5. How to make your meetings more efficient

Meetings can drag on — but they don't have to! Martin suggests creating shorter meeting times, like 15-minute check-ins, which forces everyone to be more succinct and focused. "Scarcity breeds innovation," she writes.

She offers Google's "Lightning Talks" as an example:

One of my favorite activities that's common at Google is Lightning Talks , where presenters have one slide and three minutes to teach the audience something, get buy-in for their idea, practice a sales pitch, or give an update on a project. The presentations are automatically timed so that after three minutes, the next slide appears, and you're "kicked off" the stage. The audience is instructed to clap loudly when they see the next slide so presenters know it's time for them to go. It's amazing how much is communicated when the presenter knows ahead of time that they have only three minutes to make an impact. They "trim the fat" from their presentations; they make their one slide visually stimulating and compact, including only the most important elements. They have one chance to make an impact, and they make the most of it. Not to mention the audience is highly engaged because the information is succinct and they aren't being asked to listen to anything extraneous.

Now everyone's engaged, everyone's moving fast, and a lot is accomplished in a short amount of time — which you can't say for most meetings!

Entrepreneur Staff

Editor in Chief