malloc in C: Dynamic Memory Allocation in C Explained

What is malloc() in C?

malloc() is a library function that allows C to allocate memory dynamically from the heap. The heap is a region of memory set aside for allocations made at run time; a block taken from it stays valid until the program explicitly frees it.

malloc() is declared in stdlib.h, so to use it you need #include <stdlib.h>.

How to Use Malloc

malloc() allocates memory of a requested size and returns a pointer to the beginning of the allocated block, or NULL if the request cannot be satisfied. To hold this returned pointer, we must create a variable. The pointer should have the same type as the elements we intend to store. Here we’ll make a pointer to a soon-to-be array of ints.

Unlike some other languages, C's malloc() does not know the data type it is allocating memory for; it only takes a size in bytes. Luckily, C has an operator called sizeof that we can use to compute that size.
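A minimal sketch of the declaration and allocation described here. The helper name make_int_array is hypothetical and used only for illustration.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical helper: allocate an uninitialized array of `count` ints.
   Returns NULL if the allocation fails. */
int *make_int_array(size_t count)
{
    assert(count > 0);

    /* The pointer that will hold the address of the new block. */
    int *arr;

    /* sizeof(int) is the size of one int on the current platform. */
    arr = malloc(count * sizeof(int));
    return arr;
}
```

Calling make_int_array(10) requests room for 10 ints; the caller should check the result against NULL before using it.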

This statement uses malloc to set aside memory for an array of 10 integers. As type sizes can differ between platforms, it’s important to use the sizeof operator to calculate the size on the current one.

Any memory allocated during the program’s execution should be freed once it is no longer needed. To free memory, we can use the free() function.
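As a rough sketch, an allocation and its matching free() can live in the same function. The name sum_with_temp_buffer is an assumption made for this example.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical helper: sum 1..count using a temporary heap buffer.
   Returns -1 if the buffer cannot be allocated. */
long sum_with_temp_buffer(size_t count)
{
    assert(count > 0);

    int *buf = malloc(count * sizeof *buf);
    if (buf == NULL)
        return -1;

    long total = 0;
    for (size_t i = 0; i < count; i++) {
        buf[i] = (int)(i + 1);
        total += buf[i];
    }

    free(buf);   /* hand the block back to the allocator */
    buf = NULL;  /* avoid keeping a dangling pointer around */
    return total;
}
```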

This statement deallocates the memory previously allocated. C does not come with a garbage collector like some other languages, such as Java. As a result, memory that is not freed stays allocated for as long as the program runs (a memory leak); most operating systems reclaim it only when the process exits.

Before you go on…

- Malloc is used for dynamic memory allocation and is useful when you don’t know the amount of memory needed at compile time.

- Allocating memory allows objects to exist beyond the scope of the current block.

- C passes arguments by value rather than by reference. Allocating a structure with malloc and passing the pointer to another function is more efficient than passing the structure itself, because only the pointer is copied.


VirtualAlloc function (memoryapi.h)

Reserves, commits, or changes the state of a region of pages in the virtual address space of the calling process. Memory allocated by this function is automatically initialized to zero.

To allocate memory in the address space of another process, use the VirtualAllocEx function.

[in, optional] lpAddress

The starting address of the region to allocate. If the memory is being reserved, the specified address is rounded down to the nearest multiple of the allocation granularity. If the memory is already reserved and is being committed, the address is rounded down to the next page boundary. To determine the size of a page and the allocation granularity on the host computer, use the GetSystemInfo function. If this parameter is NULL, the system determines where to allocate the region.

If this address is within an enclave that you have not initialized by calling InitializeEnclave, VirtualAlloc allocates a page of zeros for the enclave at that address. The page must be previously uncommitted, and will not be measured with the EEXTEND instruction of the Intel Software Guard Extensions programming model.

If the address is within an enclave that you initialized, then the allocation operation fails with the ERROR_INVALID_ADDRESS error. That is true for enclaves that do not support dynamic memory management (i.e. SGX1). SGX2 enclaves will permit allocation, and the page must be accepted by the enclave after it has been allocated.

[in] dwSize

The size of the region, in bytes. If the lpAddress parameter is NULL, this value is rounded up to the next page boundary. Otherwise, the allocated pages include all pages containing one or more bytes in the range from lpAddress to lpAddress + dwSize. This means that a 2-byte range straddling a page boundary causes both pages to be included in the allocated region.

[in] flAllocationType

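The type of memory allocation. This parameter must contain one of the following values: MEM_COMMIT, MEM_RESERVE, MEM_RESET, or MEM_RESET_UNDO.

This parameter can also specify the following values as indicated: MEM_LARGE_PAGES, MEM_PHYSICAL, MEM_TOP_DOWN, and MEM_WRITE_WATCH.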

[in] flProtect

The memory protection for the region of pages to be allocated. If the pages are being committed, you can specify any one of the memory protection constants.

If lpAddress specifies an address within an enclave, flProtect cannot be any of the following values:

- PAGE_NOACCESS

- PAGE_NOCACHE

- PAGE_WRITECOMBINE

When allocating dynamic memory for an enclave, the flProtect parameter must be PAGE_READWRITE or PAGE_EXECUTE_READWRITE.

Return value

If the function succeeds, the return value is the base address of the allocated region of pages.

If the function fails, the return value is NULL. To get extended error information, call GetLastError.

Each page has an associated page state. The VirtualAlloc function can perform the following operations:

- Commit a region of reserved pages

- Reserve a region of free pages

- Simultaneously reserve and commit a region of free pages

VirtualAlloc cannot reserve a reserved page. It can commit a page that is already committed. This means you can commit a range of pages, regardless of whether they have already been committed, and the function will not fail.

You can use VirtualAlloc to reserve a block of pages and then make additional calls to VirtualAlloc to commit individual pages from the reserved block. This enables a process to reserve a range of its virtual address space without consuming physical storage until it is needed.
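The reserve-then-commit pattern described here might look roughly like the sketch below. The function name reserve_then_commit is hypothetical. The non-Windows branch is a stub, added only so the sketch compiles on other platforms; it does not allocate anything.

```c
#include <assert.h>
#include <stddef.h>

#ifdef _WIN32
#include <windows.h>

/* Reserve `pages` pages, commit only the first one, touch it, then release
   the whole reservation. Returns 0 on success, -1 on failure. */
int reserve_then_commit(size_t pages)
{
    assert(pages > 0);

    SYSTEM_INFO si;
    GetSystemInfo(&si);
    SIZE_T page = si.dwPageSize;

    /* Reserve address space only; no physical storage is consumed yet. */
    char *base = VirtualAlloc(NULL, pages * page, MEM_RESERVE, PAGE_NOACCESS);
    if (base == NULL)
        return -1;

    /* Commit the first page of the reserved block so it can be used. */
    char *first = VirtualAlloc(base, page, MEM_COMMIT, PAGE_READWRITE);
    int ok = (first != NULL);
    if (ok)
        first[0] = 'x';

    /* MEM_RELEASE takes the reservation's base address and a size of 0. */
    VirtualFree(base, 0, MEM_RELEASE);
    return ok ? 0 : -1;
}
#else
/* Stub for non-Windows builds: VirtualAlloc is unavailable here.
   Returns 1 to signal "not applicable". */
int reserve_then_commit(size_t pages)
{
    assert(pages > 0);
    return 1;
}
#endif
```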

If the lpAddress parameter is not NULL, the function uses the lpAddress and dwSize parameters to compute the region of pages to be allocated. The current state of the entire range of pages must be compatible with the type of allocation specified by the flAllocationType parameter. Otherwise, the function fails and none of the pages are allocated. This compatibility requirement does not preclude committing an already committed page, as mentioned previously.

To execute dynamically generated code, use VirtualAlloc to allocate memory and the VirtualProtect function to grant PAGE_EXECUTE access.

The VirtualAlloc function can be used to reserve an Address Windowing Extensions (AWE) region of memory within the virtual address space of a specified process. This region of memory can then be used to map physical pages into and out of virtual memory as required by the application. The MEM_PHYSICAL and MEM_RESERVE values must be set in the flAllocationType parameter. The MEM_COMMIT value must not be set. The page protection must be set to PAGE_READWRITE.

The VirtualFree function can decommit a committed page, releasing the page's storage, or it can simultaneously decommit and release a committed page. It can also release a reserved page, making it a free page.

When creating a region that will be executable, the calling program bears responsibility for ensuring cache coherency via an appropriate call to FlushInstructionCache once the code has been set in place. Otherwise attempts to execute code out of the newly executable region may produce unpredictable results.

For an example, see Reserving and Committing Memory.

Requirements

Memory Management Functions

Virtual Memory Functions

VirtualAllocEx

VirtualFree

VirtualLock

VirtualProtect

VirtualQuery

Vertdll APIs available in VBS enclaves


Dynamic Memory Allocation in C using malloc(), calloc(), free() and realloc()


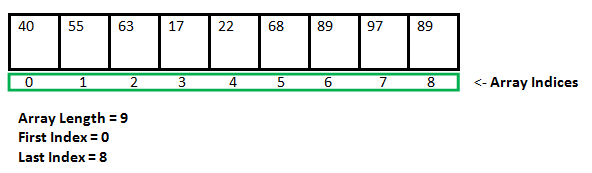

Since C is a structured language, it has some fixed rules for programming. One of them is that the size of an array cannot be changed once it has been declared. An array is a collection of items stored at contiguous memory locations.

As can be seen, the length (size) of the array above is 9. But what if there is a requirement to change this length (size)? For example,

- Suppose only 5 elements need to be stored in this array. The remaining 4 elements just waste memory, so the length (size) of the array should be reduced from 9 to 5.

- In another situation, all 9 elements of the array are filled but 3 more need to be stored. The length (size) of the array then needs to grow from 9 to 12.

This procedure is referred to as Dynamic Memory Allocation in C. C Dynamic Memory Allocation can therefore be defined as a procedure in which the size of a data structure (such as an array) is changed at runtime. C provides four library functions, declared in the <stdlib.h> header file, to facilitate dynamic memory allocation. They are:

- malloc()

- calloc()

- free()

- realloc()

Let’s look at each of them in greater detail.

C malloc() method

The “malloc” or “memory allocation” method in C is used to dynamically allocate a single large block of memory with the specified size. It returns a pointer of type void* which can be converted to a pointer of any object type. It does not initialize the memory, so each byte of the block initially holds an indeterminate (garbage) value.

Syntax of malloc() in C

ptr = (cast-type*) malloc(byte-size);

For example:

ptr = (int*) malloc(100 * sizeof(int));

If the size of int is 4 bytes, this statement allocates 400 bytes of memory, and the pointer ptr holds the address of the first byte of the allocated block.

If space is insufficient, allocation fails and malloc() returns a NULL pointer.

Example of malloc() in C
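The original listing is not reproduced here; as a stand-in, a minimal sketch that allocates n ints with malloc(), checks for failure, and fills the block. The name make_sequence is hypothetical.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical helper: return a heap array holding 1, 2, ..., n,
   or NULL if malloc() could not supply the memory. */
int *make_sequence(size_t n)
{
    assert(n > 0);

    int *ptr = (int *)malloc(n * sizeof(int));
    if (ptr == NULL)
        return NULL;   /* allocation failed */

    /* The block is uninitialized, so every element is written before use. */
    for (size_t i = 0; i < n; i++)
        ptr[i] = (int)i + 1;

    return ptr;
}
```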

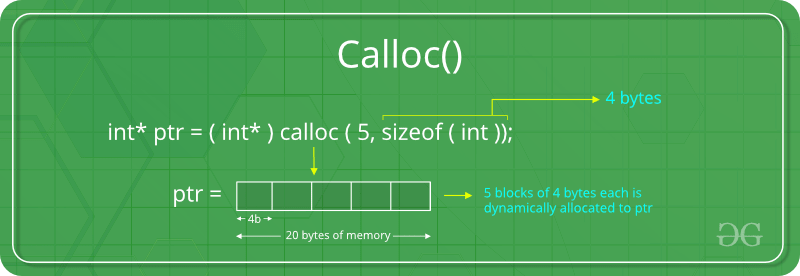

C calloc() method

The “calloc” or “contiguous allocation” method in C is used to dynamically allocate the specified number of blocks of memory of the specified type. It is very similar to malloc() but differs in two ways:

- It initializes every byte of the allocated memory to 0.

- It takes two parameters (the number of elements and the size of each element), whereas malloc() takes one.

Syntax of calloc() in C

ptr = (cast-type*) calloc(n, element-size);

For example:

ptr = (float*) calloc(25, sizeof(float));

This statement allocates contiguous space in memory for 25 elements, each the size of a float.

Example of calloc() in C
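The original listing is not reproduced here; a small sketch of the same idea follows. It uses int elements so that the zeroed bytes are guaranteed to read back as 0. The name make_counters is hypothetical.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical helper: allocate n counters, all starting at zero.
   Returns NULL if calloc() fails. */
int *make_counters(size_t n)
{
    assert(n > 0);

    /* calloc takes the element count and the element size separately
       and zero-fills the block it returns. */
    return (int *)calloc(n, sizeof(int));
}
```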

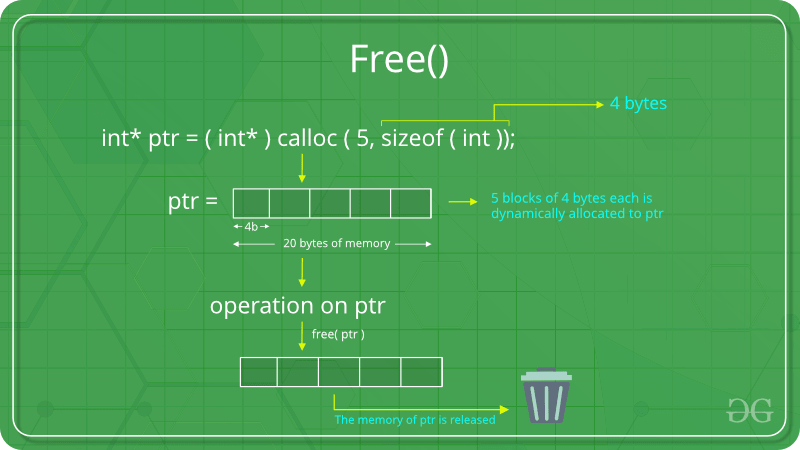

C free() method

The “free” method in C is used to dynamically de-allocate memory. Memory allocated using malloc() and calloc() is not de-allocated on its own, so free() should be called for every dynamically allocated block once it is no longer needed. This reduces wasted memory.

Syntax of free() in C

free(ptr);

Example of free() in C
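The original listing is not reproduced here. One common pattern, sketched below, frees a block and then clears the pointer so it cannot be used again by accident. The helper name release is an assumption made for this example.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical helper: free the block *pp points to, then reset *pp. */
void release(int **pp)
{
    assert(pp != NULL);

    free(*pp);    /* free(NULL) is defined to do nothing */
    *pp = NULL;   /* the old address must not be used after this point */
}
```

Because free(NULL) is a no-op, calling release() twice on the same pointer variable is harmless.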

C realloc() method

The “realloc” or “re-allocation” method in C is used to dynamically change the size of a previously allocated block. In other words, if the memory previously allocated with malloc or calloc is insufficient, realloc can be used to re-allocate it. Re-allocation preserves the existing contents (up to the smaller of the old and new sizes), and any newly added bytes hold indeterminate (garbage) values.

Syntax of realloc() in C

ptr = realloc(ptr, newSize);

Here ptr is re-allocated with the new size newSize. If realloc() fails, it returns NULL and leaves the original block untouched.

Example of realloc() in C
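The original listing is not reproduced here. The sketch below grows an int array with realloc() and uses a temporary pointer so the original block is not lost if the call fails. The name resize_ints is hypothetical.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical helper: resize *arr to hold new_count ints.
   Returns 0 on success. On failure returns -1 and leaves *arr
   pointing at the original, still-valid block. */
int resize_ints(int **arr, size_t new_count)
{
    assert(arr != NULL && new_count > 0);

    /* Assigning straight to *arr would leak the old block on failure. */
    int *tmp = realloc(*arr, new_count * sizeof **arr);
    if (tmp == NULL)
        return -1;

    *arr = tmp;
    return 0;
}
```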


Embedded Artistry

Building Superior Embedded Systems

Generating Aligned Memory

22 February 2017 by Phillip Johnston • Last updated 9 June 2023

Embedded systems often have requirements for pointer alignment. These alignment requirements exist in many places, some including:

- Unaligned access may generate a processor exception with registers that have strict alignment requirements

- You don’t want to accidentally perform clean/invalidate operations on random data

- DMA and USB peripherals often require 8-, 16-, 32-, or 64-byte alignment of buffers depending on the hardware design

- MPU regions (e.g., 32-byte aligned)

- MMU translation tables

- Stack pointers

- ARM requires the base address to be 32-, 64-, 128-, 256- word aligned.

- Unaligned addresses require multiple read instructions

- Aligned addresses require a single read instruction

In this article, we’ll look at methods for allocating aligned memory and implementing aligned variants of malloc and free.

Table of Contents:

- How to Align Memory

- Compiler Alignment Attribute

- Standard Alignment Functionality

- Dynamic Memory Alignment

- aligned_malloc

- aligned_free

- Other Dynamic Allocation Strategies

- Putting it All Together

- Further Reading

How to Align Memory

Our needs to align memory extend to both static and dynamic memory allocations. Let’s look at how to handle both cases.

Compiler Alignment Attribute

For static and stack allocations, we can use the GNU-defined alignment attribute.

This attribute will force the compiler to allocate the variable with at least the requested alignment (e.g. you could request 8-byte alignment and get 32-byte alignment).

Example usage of the alignment attribute from the GNU documentation:
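The GNU documentation's own listing is not reproduced here. As a stand-in, the sketch below applies the attribute to a type and to a variable; it assumes GCC or Clang. The names aligned_record, dma_buffer, and is_aligned are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Type attribute: every object of this type is at least 8-byte aligned. */
struct aligned_record {
    short fields[3];
} __attribute__((aligned(8)));

/* Variable attribute: a buffer with at least 32-byte alignment. */
uint8_t dma_buffer[64] __attribute__((aligned(32)));

/* Returns 1 when `p` is a multiple of `align` (a power of two). */
int is_aligned(const void *p, uintptr_t align)
{
    assert(align != 0 && (align & (align - 1)) == 0);
    return ((uintptr_t)p & (align - 1)) == 0;
}
```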

Standard Alignment Functionality

C11 and C++11 introduced standard alignment specifiers:

- C: _Alignas() and the alignas macro (defined in the header <stdalign.h> until C23, when it becomes a compiler-defined macro)

- C++ : alignas()

You can use the C and C++ variants in the same way: by specifying the desired alignment in bytes via a specific size, or by specifying desired alignment as a type:
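A short C sketch of both forms, using the alignas macro from <stdalign.h>. The buffer names and the misalignment helper are hypothetical.

```c
#include <assert.h>
#include <stdalign.h>
#include <stdint.h>

/* Alignment given as a byte count. */
alignas(32) uint8_t usb_buffer[64];

/* Alignment given as a type: raw storage aligned like a double. */
alignas(double) unsigned char double_storage[sizeof(double)];

/* Number of bytes by which `p` misses a multiple of `align`. */
uintptr_t misalignment(const void *p, uintptr_t align)
{
    assert(align != 0);
    return (uintptr_t)p % align;
}
```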

You can also get the alignment of a specific type using:

- C11: _Alignof and the alignof macro (defined in the header <stdalign.h> until C23, when it becomes a compiler-defined macro)

- C++11: alignof

These return the alignment in bytes required for the specified type.
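For instance, in C the alignof macro can be used both in compile-time checks and in ordinary expressions. The struct and function names below are assumptions made for this sketch.

```c
#include <assert.h>
#include <stdalign.h>
#include <stddef.h>

struct sample {
    char tag;
    double value;
};

/* A struct is as strictly aligned as its most strictly aligned member. */
static_assert(alignof(struct sample) == alignof(double),
              "struct sample should inherit the alignment of double");

/* The largest fundamental alignment on this platform, in bytes. */
size_t fundamental_alignment(void)
{
    return alignof(max_align_t);
}
```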

Dynamic Memory Alignment

When we call malloc, we are going to receive memory with “fundamental alignment,” which is an alignment suitable for storing any object of a standard type. This is vague, and the fundamental alignment can change from one system to another. But, in most cases, you are going to receive memory that is 8-byte aligned on 32-bit systems, and 16-byte aligned on 64-bit systems. Of course, we might have alignment requirements that are greater, such as a USB buffer being 32-byte aligned, or a 128-byte aligned variable that will fit in a cache line. What can we do to get dynamically allocated memory to match these greater alignment requirements?

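A common API that you may be familiar with is memalign. (It is an older, non-standard interface; POSIX standardizes posix_memalign, and C11 adds aligned_alloc.) memalign provides exactly what we need: it takes an alignment and a size and returns a pointer to memory with that alignment.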
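memalign is declared as void *memalign(size_t alignment, size_t size). Since it is not available everywhere, the sketch below uses C11's aligned_alloc as a stand-in with the same parameter order. The wrapper name alloc_aligned_c11 is hypothetical.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical wrapper: allocate `size` bytes aligned to `align`.
   C11 requires the size passed to aligned_alloc to be a multiple of
   the alignment, so the request is rounded up first. */
void *alloc_aligned_c11(size_t align, size_t size)
{
    assert(align != 0 && (align & (align - 1)) == 0);

    size_t rounded = (size + align - 1) & ~(align - 1);
    return aligned_alloc(align, rounded);
}
```

Memory obtained this way is released with the ordinary free().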

Let’s see how to implement the equivalent support for our system.

Aligning Dynamically Allocated Memory

Since we have already implemented malloc on our system (or have malloc defined on a development machine), we can use malloc as our base memory allocator. Any other allocator will work, such as the built-in FreeRTOS or ThreadX allocators.

Since malloc (or another dynamic memory allocator) is not guaranteed to align memory as we require, we’ll need to perform two extra steps:

- Request extra bytes so we can return an aligned address

- Request extra bytes and store the offset between our original pointer and our aligned pointer

By allocating these extra bytes, we are making a tradeoff between generating aligned memory and wasting some bytes to ensure the alignment requirement can be met.

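Now that we have our high-level strategy, let’s prototype the calls for our aligned malloc implementation. Mirroring memalign, we will have aligned_malloc, which takes an alignment and a size and returns a pointer, and aligned_free, which takes a pointer previously returned by aligned_malloc.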

Why do we require a separate free API for our aligned allocations?

We are going to be storing an offset and returning an address that differs from the address returned by malloc. Before we can call free on that memory, we have to translate from our aligned pointer to the original pointer returned by malloc.

Now that we know what our APIs look like, what definitions do we need to manage our storage overhead?

I’ve defined the offset_t to be a uint16_t . This supports alignment values up to 64k, a size which is already unlikely to be used for alignment.

Should we need to support larger alignments, we can upgrade this type by adjusting the typedef and increasing the number of bytes used to store the offset with each aligned memory pointer.

I’ve also generated a convenience macro for the offset size. You could skip this macro and just use sizeof(offset_t) if you prefer.

Finally, we need some way to align our memory. I use this align_up definition:

Note that this operates on powers of two, so we will have to limit our alignment values to powers of two.
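A sketch of definitions consistent with the description above follows. The exact form of the macros is an assumption; only the names offset_t, PTR_OFFSET_SZ, and align_up come from the text.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Records the distance from the raw malloc pointer to the aligned one. */
typedef uint16_t offset_t;

/* Bytes reserved in front of each aligned pointer to hold the offset. */
#define PTR_OFFSET_SZ sizeof(offset_t)

/* Round `num` up to the next multiple of `align` (a power of two). */
#define align_up(num, align) (((num) + ((align) - 1)) & ~((align) - 1))

static_assert(sizeof(offset_t) == 2, "offset_t is expected to occupy two bytes");
```

Passing both arguments of align_up as uintptr_t keeps the mask as wide as the address being aligned.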

aligned_malloc

Let’s start with aligned_malloc. Recall that it takes the requested alignment (align) and the allocation size (size).

Thinking about our basic function skeleton: we need to ensure align and size are non-zero values before we try to allocate any memory. We also need to check that our alignment request is a power of two, because of our align_up macro.

These requirements result in the following skeleton:
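One possible shape for that skeleton is sketched below. The name aligned_malloc_skeleton is hypothetical, chosen so it is not mistaken for the finished function; it performs the checks and nothing else.

```c
#include <assert.h>
#include <stddef.h>

/* Skeleton only: validates the arguments and returns NULL,
   because no allocation logic has been filled in yet. */
void *aligned_malloc_skeleton(size_t align, size_t size)
{
    void *ptr = NULL;

    /* align_up only works when the alignment is a power of two. */
    assert((align & (align - 1)) == 0);

    if (align && size) {
        /* Allocation and alignment logic goes here. */
    }

    return ptr;
}
```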

Now that we have protections in place, let’s work on our actual aligned memory allocation. We know we need to allocate extra bytes, but what do we actually allocate?

- I call malloc and get a memory address X.

- I know I need to store a pointer offset value Y, which is fixed in size.

- Our alignment Z is variable.

- I always need to store the offset. This is true even if the pointer is aligned.

- The number of extra bytes needed for alignment depends on X+Y:

  - If X+Y is aligned, we would need no extra bytes

  - If X+Y is unaligned, we would need Z-1 extra bytes in the worst case

Example 1:

- Requested alignment 8

- malloc returns 0xF07

- We add two bytes for our offset storage, which brings us to 0xF09

- We need 7 extra bytes to get us to 0xF10.

Example 2, with the same requested alignment of 8:

- malloc returns 0xF06

- We add two bytes for our offset storage, bringing us to 0xF08

- We are now 8 byte aligned

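So our worst-case padding for malloc is the offset storage plus Z-1 alignment bytes, that is, PTR_OFFSET_SZ + (align - 1).

Which translates to our allocation as a call to malloc for size + PTR_OFFSET_SZ + (align - 1) bytes.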
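The arithmetic can be checked with a small sketch. The helper names worst_case_padding and aligned_request_size are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef uint16_t offset_t;
#define PTR_OFFSET_SZ sizeof(offset_t)

/* Offset storage plus up to align - 1 bytes needed to reach alignment. */
size_t worst_case_padding(size_t align)
{
    assert(align != 0 && (align & (align - 1)) == 0);
    return PTR_OFFSET_SZ + (align - 1);
}

/* Total number of bytes to request from malloc for an aligned block. */
size_t aligned_request_size(size_t align, size_t size)
{
    return size + worst_case_padding(align);
}
```

For an alignment of 8 this gives 9 bytes of padding, which matches the first example: 0xF10 - 0xF07 = 9.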

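After we’ve made the call to malloc, we need to actually align our pointer and store the offset. The aligned address is computed as align_up(((uintptr_t)p + PTR_OFFSET_SZ), align), where p is the pointer returned by malloc.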

Note that we align the address after including the offset size, as shown in the example above. Even in the best-case scenario where our pointer is already aligned, we need to handle this API generically. Offset storage is always required.

If you are unfamiliar with uintptr_t , it is a standard type that is large enough to contain a pointer address.

Once we have our new aligned address, we move backwards in memory from the aligned location to store the offset. We now know we always need to look one location back from our aligned pointer to find the true offset.

Here’s what our finished aligned_malloc looks like:
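The sketch below assembles the steps described above. It is one possible implementation, not necessarily identical to the author's. The hdr_size variable name and the check that the offset fits in offset_t are assumptions added for this sketch.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

typedef uint16_t offset_t;
#define PTR_OFFSET_SZ sizeof(offset_t)
#define align_up(num, align) (((num) + ((align) - 1)) & ~((align) - 1))

void *aligned_malloc(size_t align, size_t size)
{
    void *ptr = NULL;

    /* align_up requires a power-of-two alignment. */
    assert((align & (align - 1)) == 0);

    if (align && size) {
        /* Worst case: offset storage plus align - 1 bytes of padding. */
        size_t hdr_size = PTR_OFFSET_SZ + (align - 1);

        /* Added assumption: refuse alignments whose offset might not
           fit in offset_t. */
        if (hdr_size > UINT16_MAX)
            return NULL;

        void *p = malloc(size + hdr_size);
        if (p) {
            /* Leave room for the offset, then round up to the alignment. */
            uintptr_t aligned =
                align_up((uintptr_t)p + PTR_OFFSET_SZ, (uintptr_t)align);
            ptr = (void *)aligned;

            /* Store how far we moved, just before the aligned address. */
            *((offset_t *)ptr - 1) = (offset_t)(aligned - (uintptr_t)p);
        }
    }

    return ptr;
}
```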

aligned_free

As is true in most of the free implementations that we’ve seen, aligned_free is a much simpler implementation than aligned_malloc.

With aligned_free, we look backwards from the pointer to find the offset, which is stored in the offset_t immediately before the aligned address.

Once we have the offset, we can recover the original pointer by subtracting the offset from the aligned pointer, and pass that to free.

Here’s what our finished aligned_free looks like:
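A sketch of the two steps just described. The helper original_pointer is a hypothetical addition that separates the pointer recovery from the call to free; the rest follows the text.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

typedef uint16_t offset_t;

/* Hypothetical helper: recover the pointer malloc originally returned. */
void *original_pointer(void *ptr)
{
    assert(ptr);

    /* The offset lives immediately before the aligned address. */
    offset_t offset = *((offset_t *)ptr - 1);

    /* Step back by that many bytes to reach the start of the raw block. */
    return (void *)((uint8_t *)ptr - offset);
}

void aligned_free(void *ptr)
{
    free(original_pointer(ptr));
}
```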

Note well: you must be very careful not to mix up free and aligned_free. If you call free on an aligned pointer, free will not recognize the allocation and you may crash or experience other strange effects. Calling aligned_free on an unaligned pointer will likely result in you reading an invalid offset value and calling free with random data.

In a future article, I will show you how to protect against these simple error cases by using C++ special pointers with custom allocators and deleters.

Other Dynamic Allocation Strategies

The above dynamic aligned allocation example is just one approach. It introduces memory overhead, and depending on the required alignment and memory constraints, this overhead can be significant. However, many strategies for dynamically allocating aligned memory will involve some “wasted storage”, so the choice comes in adopting a strategy that minimizes waste for your particular use case.

If you’re making allocations at a common fixed size and with a particular alignment requirement (e.g., image frames from a camera), you can reduce the overhead with a custom allocator that returns blocks of memory from a pre-allocated pool with the required alignment and size (called, unsurprisingly, a “block” or “pool” allocator). The downside here is that blocks are of a fixed size, so you will still end up wasting memory if you need less than a block’s worth of memory. For a case like an image frame from a camera, however, this isn’t too much of a concern: you rarely know in advance just how big a frame will be, and so you want to allocate with the maximum frame size you might receive.

Alternatively, you can investigate a buddy allocation strategy. Buddy allocation has the desired property that blocks will always align to a power-of-two. The allocator’s largest block size and lower limit can be tuned based on your system’s needs and to minimize the amount of possible wasted memory per allocation.

Putting it All Together

You can find an aligned malloc example in the embedded-resources git repository.

To build it, simply run make from the top level, or in examples/c/ run make or make malloc_aligned.

If you want to use aligned_malloc in your own project, simply change this line:

Further Reading

- GNU Type attributes: read for __attribute__((aligned)) information

- Aligned Malloc Example Source

- C: _Alignas()

- C++: alignas()

- C: _Alignof

- C++: alignof

- Buddy memory allocation – Wikipedia

6 Replies to “Generating Aligned Memory”

Awesome post, but a uint16_t allows for alignment values of up to 32Kb, not 64Kb.

D’oh, will fix that!

uint16_t is an unsigned short int, a 16-bit unsigned integer. You can see its maximum value in limits.h: USHRT_MAX is 65535, so it can represent 65536 distinct values, and 65536/1024 = 64kb. 64kb is correct.

Can you elaborate more on the different data types used while doing pointer arithmetic? For ex: align_up(((uintptr_t)p + PTR_OFFSET_SZ), align);

Here if uintptr_t is 4 bytes, wouldn’t p + PTR_OFFSET_SZ = p + 2*sizeof(uintptr_t)? This would add 8 bytes instead of 2 making it incorrect. Am I missing something?


The cast to uintptr_t converts the value of p to a number (uintptr_t: an unsigned integer type large enough to hold an address), so when we add PTR_OFFSET_SZ (2 bytes), we are in “normal math” land instead of “pointer math” land.

Minor corner cases:

It might be worthwhile asserting that align is not 0.

You’ve made align unsigned. Now a sizeof(size_t) should be equal to sizeof(uintptr_t) but it’s worth thinking about a world where, say, a uintptr_t is 64 bit and a size_t is 32 bit. Then ~(align - 1) ends up being inverted only within 32 bits, without sign extension. That would zero the top 32 bits of your pointer.



Heap Memory Allocation

Stack and Heap

ESP-IDF applications use the common computer architecture patterns of stack (dynamic memory allocated by program control flow), heap (dynamic memory allocated by function calls), and static memory (memory allocated at compile time).

Because ESP-IDF is a multi-threaded RTOS environment, each RTOS task has its own stack. By default, each of these stacks is allocated from the heap when the task is created. See xTaskCreateStatic() for the alternative where stacks are statically allocated.

Because ESP32 uses multiple types of RAM, it also contains multiple heaps with different capabilities. A capabilities-based memory allocator allows apps to make heap allocations for different purposes.

For most purposes, the C Standard Library's malloc() and free() functions can be used for heap allocation without any special consideration. However, in order to fully make use of all of the memory types and their characteristics, ESP-IDF also has a capabilities-based heap memory allocator. If you want memory with certain properties (e.g., DMA-capable memory or executable memory), you can create an OR-mask of the required capabilities and pass that to heap_caps_malloc().

Memory Capabilities

The ESP32 contains multiple types of RAM:

DRAM (Data RAM) is memory that is connected to the CPU's data bus and is used to hold data. This is the most common kind of memory accessed as a heap.

IRAM (Instruction RAM) is memory that is connected to the CPU's instruction bus and usually holds executable data only (i.e., instructions). If accessed as generic memory, all accesses must be 32-bit aligned (see 32-Bit Accessible Memory).

D/IRAM is RAM that is connected to both the CPU's data bus and its instruction bus, and can therefore be used as either instruction or data RAM.

For more details on these internal memory types, see Memory Types .

It is also possible to connect external SPI RAM to the ESP32. The external RAM is integrated into the ESP32's memory map via the cache, and accessed similarly to DRAM.

All DRAM memory is single-byte accessible, thus all DRAM heaps possess the MALLOC_CAP_8BIT capability. Users can call heap_caps_get_free_size(MALLOC_CAP_8BIT) to get the free size of all DRAM heaps.

If an app runs out of MALLOC_CAP_8BIT memory, it can use MALLOC_CAP_IRAM_8BIT instead. In that case, IRAM can still be used as a "reserve" pool of internal memory if it is only accessed in a 32-bit aligned manner, or if CONFIG_ESP32_IRAM_AS_8BIT_ACCESSIBLE_MEMORY is enabled.

When calling malloc(), the ESP-IDF malloc() internally calls heap_caps_malloc_default(size). This will allocate memory with the capability MALLOC_CAP_DEFAULT, which is byte-addressable.

Because malloc() uses the capabilities-based allocation system, memory allocated using heap_caps_malloc() can be freed by calling the standard free() function.

Available Heap

At startup, the DRAM heap contains all data memory that is not statically allocated by the app. Reducing statically-allocated buffers increases the amount of available free heap.

To find the amount of statically allocated memory, use the idf.py size command.

See the DRAM (Data RAM) section for more details about the DRAM usage limitations.

At runtime, the available heap DRAM may be less than calculated at compile time, because, at startup, some memory is allocated from the heap before the FreeRTOS scheduler is started (including memory for the stacks of initial FreeRTOS tasks).

At startup, the IRAM heap contains all instruction memory that is not used by the app executable code.

The idf.py size command can be used to find the amount of IRAM used by the app.

Some memory in the ESP32 is available as either DRAM or IRAM. If memory is allocated from a D/IRAM region, the free heap size for both types of memory will decrease.

Heap Sizes

At startup, all ESP-IDF apps log a summary of all heap addresses (and sizes) at level Info:

Finding Available Heap

See Heap Information .

Special Capabilities

DMA-Capable Memory

Use the MALLOC_CAP_DMA flag to allocate memory which is suitable for use with hardware DMA engines (for example SPI and I2S). This capability flag excludes any external PSRAM.

32-Bit Accessible Memory

If a certain memory structure is only addressed in 32-bit units, for example, an array of ints or pointers, it can be useful to allocate it with the MALLOC_CAP_32BIT flag. This also allows the allocator to give out IRAM memory, which is sometimes unavailable for a normal malloc() call. This can help to use all the available memory in the ESP32.

Please note that on ESP32 series chips, MALLOC_CAP_32BIT cannot be used for storing floating-point variables. This is because MALLOC_CAP_32BIT may return instruction RAM and the floating-point assembly instructions on ESP32 cannot access instruction RAM.

Memory allocated with MALLOC_CAP_32BIT can only be accessed via 32-bit reads and writes; any other type of access will generate a fatal LoadStoreError exception.

External SPI Memory

When external RAM is enabled, external SPI RAM can be allocated using standard malloc calls, or via heap_caps_malloc(MALLOC_CAP_SPIRAM) , depending on the configuration. See Configuring External RAM for more details.

On ESP32 only external SPI RAM under 4 MiB in size can be allocated this way. To use the region above the 4 MiB limit, you can use the himem API .

Thread Safety

Heap functions are thread-safe, meaning they can be called from different tasks simultaneously without any limitations.

It is technically possible to call malloc , free , and related functions from interrupt handler (ISR) context (see Calling Heap-Related Functions from ISR ). However, this is not recommended, as heap function calls may delay other interrupts. It is strongly recommended to refactor applications so that any buffers used by an ISR are pre-allocated outside of the ISR. Support for calling heap functions from ISRs may be removed in a future update.

Calling Heap-Related Functions from ISR

The following functions from the heap component can be called from the interrupt handler (ISR):

heap_caps_malloc()

heap_caps_malloc_default()

heap_caps_realloc_default()

heap_caps_malloc_prefer()

heap_caps_realloc_prefer()

heap_caps_calloc_prefer()

heap_caps_free()

heap_caps_realloc()

heap_caps_calloc()

heap_caps_aligned_alloc()

heap_caps_aligned_free()

However, this practice is strongly discouraged.

Heap Tracing & Debugging

The following features are documented on the Heap Memory Debugging page:

Heap Information (free space, etc.)

Heap Allocation and Free Function Hooks

Heap Corruption Detection

Heap Tracing (memory leak detection, monitoring, etc.)

Implementation Notes

Knowledge about the regions of memory in the chip comes from the "SoC" component, which contains memory layout information for the chip, and the different capabilities of each region. Each region's capabilities are prioritized, so that (for example) dedicated DRAM and IRAM regions are used for allocations ahead of the more versatile D/IRAM regions.

Each contiguous region of memory contains its own memory heap. The heaps are created using the multi_heap functionality. multi_heap allows any contiguous region of memory to be used as a heap.

The heap capabilities allocator uses knowledge of the memory regions to initialize each individual heap. Allocation functions in the heap capabilities API find the most appropriate heap for the allocation based on desired capabilities, available space, and preferences for each region's use, and then call multi_heap_malloc() for the heap situated in that particular region.

Calling free() involves finding the particular heap corresponding to the freed address, and then calling multi_heap_free() on that particular multi_heap instance.

API Reference - Heap Allocation

Header File

components/heap/include/esp_heap_caps.h

This header file can be included with:

#include "esp_heap_caps.h"

Functions

Register a callback function to be invoked if a memory allocation operation fails.

callback -- caller defined callback to be invoked

ESP_OK if callback was registered.

Allocate a chunk of memory which has the given capabilities.

Equivalent semantics to libc malloc(), for capability-aware memory.

size -- Size, in bytes, of the amount of memory to allocate

caps -- Bitwise OR of MALLOC_CAP_* flags indicating the type of memory to be returned

A pointer to the memory allocated on success, NULL on failure

Free memory previously allocated via heap_caps_malloc() or heap_caps_realloc().

Equivalent semantics to libc free(), for capability-aware memory.

In IDF, free(p) is equivalent to heap_caps_free(p) .

ptr -- Pointer to memory previously returned from heap_caps_malloc() or heap_caps_realloc(). Can be NULL.

Reallocate memory previously allocated via heap_caps_malloc() or heap_caps_realloc().

Equivalent semantics to libc realloc(), for capability-aware memory.

In IDF, realloc(p, s) is equivalent to heap_caps_realloc(p, s, MALLOC_CAP_8BIT) .

'caps' parameter can be different to the capabilities that any original 'ptr' was allocated with. In this way, realloc can be used to "move" a buffer if necessary to ensure it meets a new set of capabilities.

ptr -- Pointer to previously allocated memory, or NULL for a new allocation.

size -- Size of the new buffer requested, or 0 to free the buffer.

caps -- Bitwise OR of MALLOC_CAP_* flags indicating the type of memory desired for the new allocation.

Pointer to a new buffer of size 'size' with capabilities 'caps', or NULL if allocation failed.

Allocate an aligned chunk of memory which has the given capabilities.

Equivalent semantics to libc aligned_alloc(), for capability-aware memory.

alignment -- How the returned pointer must be aligned; must be a power of two

Used to deallocate memory previously allocated with heap_caps_aligned_alloc.

This function is deprecated, please consider using heap_caps_free() instead

ptr -- Pointer to the memory allocated

Allocate an aligned chunk of memory which has the given capabilities. The initialized value in the memory is set to zero.

n -- Number of contiguous chunks of memory to allocate

size -- Size, in bytes, of a chunk of memory to allocate

Allocate a chunk of memory which has the given capabilities. The initialized value in the memory is set to zero.

Equivalent semantics to libc calloc(), for capability-aware memory.

In IDF, calloc(n, size) is equivalent to heap_caps_calloc(n, size, MALLOC_CAP_8BIT) .

Get the total size of all the regions that have the given capabilities.

This function takes all regions capable of having the given capabilities allocated in them and adds up the total space they have.

caps -- Bitwise OR of MALLOC_CAP_* flags indicating the type of memory

total size in bytes

Get the total free size of all the regions that have the given capabilities.

This function takes all regions capable of having the given capabilities allocated in them and adds up the free space they have.

Note that because of heap fragmentation it is probably not possible to allocate a single block of memory of this size. Use heap_caps_get_largest_free_block() for this purpose.
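To see why the total free size can overstate what a single allocation can obtain, here is a toy model in plain C. It is not ESP-IDF code: the `free_blocks` array and both `toy_*` helpers are hypothetical stand-ins for the free list of a fragmented heap, with made-up sizes.

```c
#include <assert.h>
#include <stddef.h>

/* Toy model: sizes (in bytes) of the free blocks in a fragmented heap.
 * The values are invented for illustration. */
static const size_t free_blocks[] = { 64, 512, 128, 32 };
static const size_t n_free_blocks = sizeof(free_blocks) / sizeof(free_blocks[0]);

/* Sum of all free blocks: analogous to a "total free size" figure. */
size_t toy_total_free(void)
{
    size_t total = 0;
    for (size_t i = 0; i < n_free_blocks; i++) {
        total += free_blocks[i];
    }
    return total;
}

/* Largest single free block: the upper bound for one contiguous allocation. */
size_t toy_largest_free_block(void)
{
    size_t largest = 0;
    for (size_t i = 0; i < n_free_blocks; i++) {
        if (free_blocks[i] > largest) {
            largest = free_blocks[i];
        }
    }
    return largest;
}
```

In this model 736 bytes are free in total, but a request for more than 512 bytes could not be satisfied by any single block.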

Amount of free bytes in the regions

Get the total minimum free memory of all regions with the given capabilities.

This adds all the low watermarks of the regions capable of delivering the memory with the given capabilities.

Note the result may be less than the global all-time minimum available heap of this kind, as "low watermarks" are tracked per-region. Individual regions' heaps may have reached their "low watermarks" at different points in time. However, this result still gives a "worst case" indication for all-time minimum free heap.
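The caveat about per-region low watermarks can be illustrated with a small host-side sketch. Everything here is hypothetical: the `toy_free` table holds invented free-byte readings for two regions at three points in time, and the two functions only mimic the arithmetic described above, not the ESP-IDF implementation.

```c
#include <assert.h>
#include <stddef.h>

#define TOY_REGIONS 2
#define TOY_SAMPLES 3

/* Invented readings: rows are points in time, columns are regions. */
static const size_t toy_free[TOY_SAMPLES][TOY_REGIONS] = {
    { 100, 400 },   /* region 0 is at its lowest here */
    { 300, 150 },   /* region 1 is at its lowest here */
    { 250, 350 },
};

/* Sum of each region's own low watermark. */
size_t toy_sum_of_low_watermarks(void)
{
    size_t sum = 0;
    for (size_t r = 0; r < TOY_REGIONS; r++) {
        size_t low = toy_free[0][r];
        for (size_t t = 1; t < TOY_SAMPLES; t++) {
            if (toy_free[t][r] < low) {
                low = toy_free[t][r];
            }
        }
        sum += low;
    }
    return sum;
}

/* True all-time minimum of the combined free heap. */
size_t toy_global_minimum(void)
{
    size_t min_total = (size_t)-1;
    for (size_t t = 0; t < TOY_SAMPLES; t++) {
        size_t total = 0;
        for (size_t r = 0; r < TOY_REGIONS; r++) {
            total += toy_free[t][r];
        }
        if (total < min_total) {
            min_total = total;
        }
    }
    return min_total;
}
```

Because the two regions hit their lows at different times, the summed watermarks (250) come out lower than the combined heap ever actually was (450), which is why the figure is best read as a worst-case bound.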

Get the largest free block of memory able to be allocated with the given capabilities.

Returns the largest value of s for which heap_caps_malloc(s, caps) will succeed.

Size of the largest free block in bytes.

Start monitoring the value of minimum_free_bytes from the moment this function is called instead of from startup.

This makes it possible to detect local lows of the minimum_free_bytes value that would otherwise go unnoticed.

esp_err_t: ESP_OK if the function executed properly; ESP_FAIL if called when monitoring is already active.

Stop monitoring the value of minimum_free_bytes. After this call the minimum_free_bytes value calculated from startup will be returned in heap_caps_get_info and heap_caps_get_minimum_free_size.

esp_err_t: ESP_OK if the function executed properly; ESP_FAIL if called when monitoring is not active.

Get heap info for all regions with the given capabilities.

Calls multi_heap_info() on all heaps which share the given capabilities. The information returned is an aggregate across all matching heaps. The meanings of fields are the same as defined for multi_heap_info_t , except that minimum_free_bytes has the same caveats described in heap_caps_get_minimum_free_size().

info -- Pointer to a structure which will be filled with relevant heap metadata.

Print a summary of all memory with the given capabilities.

Calls multi_heap_info on all heaps which share the given capabilities, and prints a two-line summary for each, then a total summary.

Check integrity of all heap memory in the system.

Calls multi_heap_check on all heaps. Optionally print errors if heaps are corrupt.

Calling this function is equivalent to calling heap_caps_check_integrity with the caps argument set to MALLOC_CAP_INVALID.

Please increase the value of CONFIG_ESP_INT_WDT_TIMEOUT_MS when using this API with PSRAM enabled.

print_errors -- Print specific errors if heap corruption is found.

True if all heaps are valid, False if at least one heap is corrupt.

Check integrity of all heaps with the given capabilities.

Calls multi_heap_check on all heaps which share the given capabilities. Optionally print errors if the heaps are corrupt.

See also heap_caps_check_integrity_all to check all heap memory in the system and heap_caps_check_integrity_addr to check memory around a single address.

Please increase the value of CONFIG_ESP_INT_WDT_TIMEOUT_MS when using this API with PSRAM capability flag.

Check integrity of heap memory around a given address.

This function can be used to check the integrity of a single region of heap memory, which contains the given address.

This can be useful if debugging heap integrity for corruption at a known address, as it has a lower overhead than checking all heap regions. Note that if the corrupt address moves around between runs (due to timing or other factors) then this approach won't work, and you should call heap_caps_check_integrity or heap_caps_check_integrity_all instead.

The entire heap region around the address is checked, not only the adjacent heap blocks.

addr -- Address in memory. Check for corruption in region containing this address.

True if the heap containing the specified address is valid, False if at least one heap is corrupt or the address doesn't belong to a heap region.

Enable malloc() in external memory and set limit below which malloc() attempts are placed in internal memory.

When external memory is in use, the allocation strategy is to initially try to satisfy smaller allocation requests with internal memory and larger requests with external memory. This sets the limit between the two, as well as generally enabling allocation in external memory.

limit -- Limit, in bytes.

Allocate a chunk of memory, trying capability sets in decreasing order of preference.

Each variable parameter is a bitwise OR of MALLOC_CAP_* flags indicating a type of memory. This API first tries to allocate memory matching the first parameter. If that fails, it tries the next parameter, and so on, until either an allocation succeeds or every parameter has been tried without success.

num -- Number of variable parameters

Reallocate a chunk of memory, trying capability sets in decreasing order of preference.

num -- Number of variable parameters

Pointer to a new buffer of size 'size', or NULL if allocation failed.

Dump the full structure of all heaps with matching capabilities.

Prints a large amount of output to serial (because of locking limitations, the output bypasses stdout/stderr). For each (variable sized) block in each matching heap, the following output is printed on a single line:

Block address (the data buffer returned by malloc is 4 bytes after this if heap debugging is set to Basic, or 8 bytes otherwise).

Data size (the data size may be larger than the size requested by malloc, either due to heap fragmentation or because of heap debugging level).

Address of next block in the heap.

If the block is free, the address of the next free block is also printed.

Dump the full structure of all heaps.

Covers all registered heaps. Prints a large amount of output to serial.

Output is the same as for heap_caps_dump.

Return the size that a particular pointer was allocated with.

The app will crash with an assertion failure if the pointer is not valid.

ptr -- Pointer to currently allocated heap memory. Must be a pointer value previously returned by heap_caps_malloc, malloc, calloc, etc. and not yet freed.

Size of the memory allocated at this block.

Function called to walk through the heaps with the given set of capabilities.

caps -- The set of capabilities assigned to the heaps to walk through

walker_func -- Callback called for each block of the heaps being traversed

user_data -- Opaque pointer to user defined data

Function called to walk through all heaps defined by the heap component.

Structures

Structure used to store heap related data passed to the walker callback function.

Public Members

Start address of the heap in which the block is located.

End address of the heap in which the block is located.

Structure used to store block related data passed to the walker callback function.

Pointer to the block data.

The size of the block.

Block status. True: used, False: free.

Flags to indicate the capabilities of the various memory systems.

Memory must be able to run executable code

Memory must allow for aligned 32-bit data accesses.

Memory must allow for 8/16/...-bit data accesses.

Memory must be able to be accessed by DMA.

Memory must be mapped to PID2 memory space (PIDs are not currently used)

Memory must be mapped to PID3 memory space (PIDs are not currently used)

Memory must be mapped to PID4 memory space (PIDs are not currently used)

Memory must be mapped to PID5 memory space (PIDs are not currently used)

Memory must be mapped to PID6 memory space (PIDs are not currently used)

Memory must be mapped to PID7 memory space (PIDs are not currently used)

Memory must be in SPI RAM.

Memory must be internal; specifically it should not disappear when flash/spiram cache is switched off.

Memory can be returned in a non-capability-specific memory allocation (e.g. malloc(), calloc()) call.

Memory must be in IRAM and allow unaligned access.

Memory must be able to be accessed by retention DMA.

Memory must be in RTC fast memory.

Memory must be in TCM memory.

Memory can't be used / list end marker.

Type Definitions

Callback called when an allocation operation fails, if registered.

Size, in bytes, of the failed allocation.

Capabilities requested by the failed allocation.

Function that generated the failure.

Function callback used to get information of memory block during calls to heap_caps_walk or heap_caps_walk_all.

See walker_heap_into_t

See walker_block_info_t

Opaque pointer to user defined data

True to proceed with the heap traversal; False to stop the traversal of the current heap and continue with the traversal of the next heap (if any).

API Reference - Initialisation

components/heap/include/esp_heap_caps_init.h

#include "esp_heap_caps_init.h"

Initialize the capability-aware heap allocator.

This is called once in the IDF startup code. Do not call it at other times.

Enable heap(s) in memory regions where the startup stacks are located.

On startup, the pro/app CPUs use a certain memory region as their stack, so allocations cannot be made in the regions where these stack frames reside. Once FreeRTOS is completely started, the CPUs no longer use that memory, and the heap(s) there can be enabled.

Add a region of memory to the collection of heaps at runtime.

Most memory regions are defined in soc_memory_layout.c for the SoC, and are registered via heap_caps_init(). Some regions can't be used immediately and are later enabled via heap_caps_enable_nonos_stack_heaps().

Call this function to add a region of memory to the heap at some later time.

This function does not consider any of the "reserved" regions or other data in soc_memory_layout; the caller needs to take these into account.

All memory within the region specified by start & end parameters must be otherwise unused.

The capabilities of the newly registered memory will be determined by the start address, as looked up in the regions specified in soc_memory_layout.c.

Use heap_caps_add_region_with_caps() to register a region with custom capabilities.

Refer to the following example for the memory regions that may be added to the heap relative to an existing region (address ranges are for demonstration purposes only):

Existing region: 0x1000 <-> 0x3000

New region: 0x1000 <-> 0x3000 (Allowed)

New region: 0x1000 <-> 0x2000 (Allowed)

New region: 0x0000 <-> 0x1000 (Allowed)

New region: 0x3000 <-> 0x4000 (Allowed)

New region: 0x0000 <-> 0x2000 (NOT Allowed)

New region: 0x0000 <-> 0x4000 (NOT Allowed)

New region: 0x1000 <-> 0x4000 (NOT Allowed)

New region: 0x2000 <-> 0x4000 (NOT Allowed)
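One way to read the allowed and not-allowed cases above is that a new region is rejected only when it straddles the start or the end of an existing region. The sketch below encodes that reading as a plain C predicate. The function name `toy_region_add_allowed` is hypothetical, and this is an interpretation of the examples rather than the check ESP-IDF itself performs.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical helper: returns true if the new region [new_start, new_end)
 * may be added next to an existing region [ex_start, ex_end).
 * Assumption: a region is rejected only when it crosses the start or the
 * end boundary of the existing region. Fully contained, identical, or
 * merely adjacent regions pass. */
bool toy_region_add_allowed(uintptr_t ex_start, uintptr_t ex_end,
                            uintptr_t new_start, uintptr_t new_end)
{
    bool crosses_start = (new_start < ex_start) && (new_end > ex_start);
    bool crosses_end   = (new_start < ex_end)   && (new_end > ex_end);
    return !(crosses_start || crosses_end);
}
```

Under this reading, a new region that merely touches the existing one at 0x1000 or 0x3000 passes, while one that spans either boundary fails.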

start -- Start address of new region.

end -- End address of new region.

ESP_OK on success, ESP_ERR_INVALID_ARG if a parameter is invalid, ESP_ERR_NOT_FOUND if the specified start address doesn't reside in a known region, or any error returned by heap_caps_add_region_with_caps().

Add a region of memory to the collection of heaps at runtime, with custom capabilities.

Similar to heap_caps_add_region(), only custom memory capabilities are specified by the caller.

caps -- Ordered array of capability masks for the new region, in order of priority. Must have length SOC_MEMORY_TYPE_NO_PRIOS. Does not need to remain valid after the call returns.

ESP_OK on success

ESP_ERR_INVALID_ARG if a parameter is invalid

ESP_ERR_NO_MEM if no memory to register new heap.

ESP_ERR_INVALID_SIZE if the memory region is too small to fit a heap

ESP_FAIL if region overlaps the start and/or end of an existing region

API Reference - Multi-Heap API

(Note: The multi-heap API is used internally by the heap capabilities allocator. Most ESP-IDF programs never need to call this API directly.)

components/heap/include/multi_heap.h

#include "multi_heap.h"

allocate a chunk of memory with specific alignment

heap -- Handle to a registered heap.

size -- size in bytes of memory chunk

alignment -- how the memory must be aligned

pointer to the memory allocated, NULL on failure

malloc() a buffer in a given heap

Semantics are the same as standard malloc(), only the returned buffer will be allocated in the specified heap.

size -- Size of desired buffer.

Pointer to new memory, or NULL if allocation fails.

free() a buffer aligned in a given heap.

This function is deprecated, consider using multi_heap_free() instead

p -- NULL, or a pointer previously returned from multi_heap_aligned_alloc() for the same heap.

free() a buffer in a given heap.

Semantics are the same as standard free(), only the argument 'p' must be NULL or have been allocated in the specified heap.

p -- NULL, or a pointer previously returned from multi_heap_malloc() or multi_heap_realloc() for the same heap.

realloc() a buffer in a given heap.

Semantics are the same as standard realloc(), only the argument 'p' must be NULL or have been allocated in the specified heap.

size -- Desired new size for buffer.

New buffer of 'size' containing contents of 'p', or NULL if reallocation failed.

p -- Pointer, must have been previously returned from multi_heap_malloc() or multi_heap_realloc() for the same heap.

Size of the memory allocated at this block. May be more than the original size argument, due to padding and minimum block sizes.

Register a new heap for use.

This function initialises a heap at the specified address, and returns a handle for future heap operations.

There is no equivalent function for deregistering a heap - if all blocks in the heap are free, you can immediately start using the memory for other purposes.

start -- Start address of the memory to use for a new heap.

size -- Size (in bytes) of the new heap.

Handle of a new heap ready for use, or NULL if the heap region was too small to be initialised.

Associate a private lock pointer with a heap.

The lock argument is supplied to the MULTI_HEAP_LOCK() and MULTI_HEAP_UNLOCK() macros, defined in multi_heap_platform.h.

The lock in question must be recursive.

When the heap is first registered, the associated lock is NULL.

lock -- Optional pointer to a locking structure to associate with this heap.

Dump heap information to stdout.

For debugging purposes, this function dumps information about every block in the heap to stdout.

Check heap integrity.

Walks the heap and checks all heap data structures are valid. If any errors are detected, an error-specific message can be optionally printed to stderr. Print behaviour can be overridden at compile time by defining MULTI_CHECK_FAIL_PRINTF in multi_heap_platform.h.

This function is not thread-safe as it sets a global variable with the value of print_errors.

print_errors -- If true, errors will be printed to stderr.

true if heap is valid, false otherwise.

Return free heap size.

Returns the number of bytes available in the heap.

Equivalent to the total_free_bytes member returned by multi_heap_get_info().

Note that the heap may be fragmented, so the actual maximum size for a single malloc() may be lower. To know this size, see the largest_free_block member returned by multi_heap_get_info().

Number of free bytes.

Return the lifetime minimum free heap size.

Equivalent to the minimum_free_bytes member returned by multi_heap_get_info().

Returns the lifetime "low watermark" of possible values returned from multi_free_heap_size(), for the specified heap.

Return metadata about a given heap.

Fills a multi_heap_info_t structure with information about the specified heap.

info -- Pointer to a structure to fill with heap metadata.

Perform an aligned allocation from the provided offset.

heap -- The heap in which to perform the allocation

size -- The size of the allocation

alignment -- How the memory must be aligned

offset -- The offset at which the alignment should start

void* The ptr to the allocated memory

Reset the minimum_free_bytes value (setting it to free_bytes) and return the former value.

heap -- The heap in which the reset is taking place

size_t the value of minimum_free_bytes before it is reset

Set the value of minimum_free_bytes to new_minimum_free_bytes_value or keep the current value of minimum_free_bytes if it is smaller than new_minimum_free_bytes_value.

heap -- The heap in which the restore is taking place

new_minimum_free_bytes_value -- The value to restore the minimum_free_bytes to
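The reset and restore operations described above amount to a save, overwrite, and take-the-smaller pattern. The sketch below models it on a toy structure. `toy_heap_t` and both functions are hypothetical and only mirror the described behaviour; they are not the multi_heap implementation.

```c
#include <assert.h>
#include <stddef.h>

/* Toy stand-in for a heap's bookkeeping; not the real multi_heap structure. */
typedef struct {
    size_t free_bytes;
    size_t minimum_free_bytes;
} toy_heap_t;

/* Reset the low watermark to the current free size and return the old one. */
size_t toy_reset_minimum(toy_heap_t *heap)
{
    size_t former = heap->minimum_free_bytes;
    heap->minimum_free_bytes = heap->free_bytes;
    return former;
}

/* Restore a saved low watermark, unless the current one is already smaller. */
void toy_restore_minimum(toy_heap_t *heap, size_t saved)
{
    if (saved < heap->minimum_free_bytes) {
        heap->minimum_free_bytes = saved;
    }
}
```

A caller would keep the value returned by the reset while a local measurement is running, then pass it back to the restore step so that the lifetime minimum is not lost.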

Call the tlsf_walk_pool function of the heap given as parameter with the walker function passed as parameter.

heap -- The heap to traverse

walker_func -- The walker to trigger on each block of the heap

Structure to access heap metadata via multi_heap_get_info.

Total free bytes in the heap. Equivalent to multi_free_heap_size().

Total bytes allocated to data in the heap.

Size of the largest free block in the heap. This is the largest malloc-able size.

Lifetime minimum free heap size. Equivalent to multi_minimum_free_heap_size().

Number of (variable size) blocks allocated in the heap.

Number of (variable size) free blocks in the heap.

Total number of (variable size) blocks in the heap.

Opaque handle to a registered heap.

Callback called when walking the given heap blocks of memory.

Pointer to the block data

The size of the block

Block status. 0: free, 1: allocated

True if the walker is expected to continue the heap traversal; False if the walker is expected to stop the traversal of the heap.


Dynamically allocate memory for a 3D array in C

This post will discuss various methods to dynamically allocate memory for a 3D array in C using a single pointer and a triple pointer.

Related Post:

Dynamically allocate memory for a 2D array in C

1. Using Single Pointer

In this approach, we simply allocate memory of size M×N×O dynamically and assign it to a pointer. Even though the memory is linearly allocated, we can use pointer arithmetic to index the 3D array.
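A minimal sketch of the single-pointer approach follows. The helper names `alloc_3d_flat` and `at_3d_flat` are chosen here for illustration, the element type is assumed to be `int`, and the dimensions are assumed small enough that their product does not overflow `size_t`.

```c
#include <assert.h>
#include <stdlib.h>

/* Allocate an M x N x O array of ints as one contiguous block.
 * Returns NULL on failure; the caller releases it with free().
 * Assumes m * n * o * sizeof(int) does not overflow size_t. */
int *alloc_3d_flat(size_t m, size_t n, size_t o)
{
    return malloc(m * n * o * sizeof(int));
}

/* Address of element (i, j, k) in the flat block, in row-major order:
 * skip i planes of n*o ints, then j rows of o ints, then k ints. */
int *at_3d_flat(int *a, size_t n, size_t o, size_t i, size_t j, size_t k)
{
    return a + (i * n * o) + (j * o) + k;
}
```

For a 2×3×4 array, `*at_3d_flat(a, 3, 4, 1, 2, 3)` refers to the last element, at offset 23 from the start of the block. A single `free(a)` releases everything.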


2. Using Triple Pointer
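With a triple pointer, each level of the array is allocated separately, so elements can be reached with ordinary `a[i][j][k]` indexing. The following is a sketch under stated assumptions rather than the original post's listing: the names `alloc_3d` and `free_3d` are hypothetical, the element type is assumed to be `int`, and all dimensions are assumed to be non-zero.

```c
#include <assert.h>
#include <stdlib.h>

/* Free an array built by alloc_3d(). Also safe on partially built arrays,
 * because unused pointer slots are set to NULL before being filled. */
void free_3d(int ***a, size_t m, size_t n)
{
    if (a == NULL) {
        return;
    }
    for (size_t i = 0; i < m; i++) {
        if (a[i] != NULL) {
            for (size_t j = 0; j < n; j++) {
                free(a[i][j]);          /* free(NULL) is a no-op */
            }
            free(a[i]);
        }
    }
    free(a);
}

/* Allocate an M x N x O array of ints, zero-initialised.
 * Returns NULL (with nothing leaked) if any allocation fails. */
int ***alloc_3d(size_t m, size_t n, size_t o)
{
    int ***a = malloc(m * sizeof *a);
    if (a == NULL) {
        return NULL;
    }
    for (size_t i = 0; i < m; i++) {
        a[i] = NULL;
    }
    for (size_t i = 0; i < m; i++) {
        a[i] = malloc(n * sizeof *a[i]);
        if (a[i] == NULL) {
            free_3d(a, m, n);
            return NULL;
        }
        for (size_t j = 0; j < n; j++) {
            a[i][j] = NULL;
        }
        for (size_t j = 0; j < n; j++) {
            a[i][j] = calloc(o, sizeof *a[i][j]);
            if (a[i][j] == NULL) {
                free_3d(a, m, n);
                return NULL;
            }
        }
    }
    return a;
}
```

Compared with the single-pointer version, this costs extra allocations and pointer storage, and the memory must be released level by level, but the indexing syntax is the familiar one.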

That’s all about dynamically allocating memory for a 3D array in C.


How to use external SRAM to allocate memory pool?

Description: #define LV_MEM_ADR 0xC0080000

0xC0080000 is an address in SDRAM.

I set the value of LV_MEM_ADR, but LV_LOG_WARN("Couldn't allocate memory"); was reported when allocating memory.

What settings do I need?

What MCU/Processor/Board and compiler are you using?

What LVGL version are you using? What do you want to achieve? What have you tried so far?

Did you also change LV_MEM_SIZE ?

Could you add a printf above this line to find out how much memory it’s trying to allocate? Maybe 128K is not enough.


Tile primitives for speedy kernels

HazyResearch/ThunderKittens


ThunderKittens is a framework to make it easy to write fast deep learning kernels in CUDA (and, soon, ROCm and others, too!)

ThunderKittens is built around three key principles:

- Simplicity. ThunderKittens is stupidly simple to write.

- Extensibility. ThunderKittens embeds itself natively, so that if you need more than ThunderKittens can offer, it won’t get in your way of building it yourself.

- Speed. Kernels written in ThunderKittens should be at least as fast as those written from scratch -- especially because ThunderKittens can do things the “right” way under the hood. We think our Flash Attention 2 implementation speaks for this point.

ThunderKittens is built from the hardware up -- we do what the silicon tells us. And modern GPUs tell us that they want to work with fairly small tiles of data. A GPU is not really a 1000x1000 matrix multiply machine (even if it is often used as such); it’s a manycore processor where each core can efficiently run ~16x16 matrix multiplies. Consequently, ThunderKittens is built around manipulating tiles of data no smaller than 16x16 values.

ThunderKittens makes a few tricky things easy that enable high utilization on modern hardware.

- Tensor cores. ThunderKittens can call fast tensor core functions, including asynchronous WGMMA calls on H100 GPUs.

- Shared Memory. I got ninety-nine problems but a bank conflict ain’t one.

- Loads and stores. Hide latencies with asynchronous copies and address generation with TMA.

- Distributed Shared Memory. L2 is so last year.

Example: A Simple Attention Kernel

Here’s an example of what a simple FlashAttention-2 kernel for an RTX 4090 looks like written in ThunderKittens.

Altogether, this is 58 lines of code (not counting whitespace), and achieves about 122 TFLOPs on an RTX 4090. (74% of theoretical max.) We’ll go through some of these primitives more carefully in the next section, the ThunderKittens manual.

Installation

To use ThunderKittens, there's not all that much you need to do with TK itself. It's a header-only library, so just clone the repo, and include kittens.cuh. Easy money.

But ThunderKittens does use a bunch of modern stuff, so it has fairly aggressive requirements.

- CUDA 12.3+. Anything after CUDA 12.1 will probably work, but you'll likely end up with serialized wgmma pipelines due to a bug in those earlier versions of CUDA.

- (Extensive) C++20 use -- TK runs on concepts.

If you can't find nvcc, or you experience issues where your environment is pointing to the wrong CUDA version:

Finally, thanks to Jordan Juravsky for putting together a quick doc on setting up a kittens-compatible conda .

To validate your install, and run TK's fairly comprehensive unit testing suite, simply run make -j in the tests folder. Be warned: this may nuke your computer for a minute or two while it compiles thousands of kernels.

To compile examples, run source env.src from the root directory before going into the examples directory. (Many of the examples use the $THUNDERKITTENS_ROOT environment variable to orient themselves and find the src directory.)

ThunderKittens Manual

ThunderKittens is actually a pretty small library, in terms of what it gives you.

- Data types: (Register + shared) * (tiles + vectors), all parameterized by layout, type, and size.

- Operations for manipulating these objects.

Despite its simplicity, there are still a few sharp edges that you might encounter if you don’t know what’s going on under the hood. So, we do recommend giving this manual a good read before sitting down to write a kernel -- it’s not too long, we promise!

NVIDIA’s Programming Model

To understand ThunderKittens, it will help to begin by reviewing a bit of how NVIDIA’s programming model works, as NVIDIA provides a few different “scopes” to think about when writing parallel code.

- Thread -- this is the level of doing work on an individual bit of data, like a floating point multiplication. A thread has up to 256 32-bit registers it can access every cycle.

- Warp -- 32 threads make a warp. This is the level at which instructions are issued by the hardware. It’s also the base (and default) scope from which ThunderKittens operates; most ThunderKittens programming happens here.

- Warpgroup -- 4 warps make a warpgroup. This is the level from which asynchronous warpgroup matrix multiply-accumulate instructions are issued. (We really wish we could ignore this level, but you unfortunately need it for the H100.) Correspondingly, many matrix multiply and memory operations are supported at the warpgroup level.

- Block -- N warps make a block, which is the level that shares “shared memory” in the CUDA programming model. In ThunderKittens, N is often 8.

- Grid -- M blocks make a grid, where M should be equal to (or slightly less than) a multiple of the number of SMs on the GPU to avoid tail effects. ThunderKittens does not touch the grid scope except through helping initialize TMA descriptors.

“Register” objects exist at the level of warps -- their contents are split amongst the threads of the warp. Register objects include:

- Register tiles, declared as the kittens::rt struct in src/register_tile/rt.cuh . Kittens provides a few useful wrappers -- for example, a 32x16 row-layout bfloat16 register tile can be declared as kittens::rt_bf_2x1; -- row-layout is implicit by default.

- Register vectors, which are associated with register tiles. They come in two flavors: column vectors and row vectors. Column vectors are used to reduce or map across tile rows, and row vectors reduce and map across tile columns. For example, to hold the sum of the rows of the tile declared above, we would create a kittens::rt_bf_2x1<>::col_vec;

In contrast, “Shared” objects exist at the level of the block, and sit only in shared memory.

All ThunderKittens functions follow a common signature. Much like an assembly language (ThunderKittens is in essence an abstract tile-oriented RISC instruction set), the destination of every function is the first operand, and the source operands are passed sequentially afterwards.

For example, if we have three 32x64 floating point register tiles: kittens::rt_fl_2x4 a, b, c; , we can element-wise multiply a and b and store the result in c with the following call: kittens::mul(c, a, b); .

Similarly, if we then want to store the result into a shared tile __shared__ kittens::st_bf_2x4 s; , we write the function analogously: kittens::store(s, c); .

ThunderKittens tries hard to protect you from yourself. In particular, ThunderKittens wants to know layouts of objects at compile-time and will make sure they’re compatible before letting you do operations. This is important because there are subtleties to the allowable layouts for certain operations, and without static checks it is very easy to get painful silent failures. For example, a normal matrix multiply requires the B operand to be in a column layout, whereas an outer dot product requires the B operand to be in a row layout.

If you are being told an operation that you think exists doesn't exist, double-check your layouts -- this is the most common error. Only then report a bug :)

By default, ThunderKittens operations exist at the warp-level. In other words, each function expects to be called by only a single warp, and that single warp will do all of the work of the function. If multiple warps are assigned to the same work, undefined behavior will result. (And if the operation involves memory movement, it is likely to be completely catastrophic.) In general, you should expect your programming pattern to involve instantiating a warpid at the beginning of the kernel with kittens::warpid() , and assigning tasks to data based on that id.

However, not all ThunderKittens functions operate at the warp level. Many important operations, particularly WGMMA instructions, require collaborative groups of warps. These operations exist in the templated kittens::group<collaborative size> . For example, wgmma instructions are available through kittens::group<4>::mma_AB (or kittens::warpgroup::mma_AB , which is an alias). Groups of warps can also collaboratively load shared memory or do reductions in shared memory.

Other Restrictions

Most operations in ThunderKittens are pure functional. However, some operations do have special restrictions; ThunderKittens tries to warn you by giving them names that stand out. For example, a register tile transpose needs separable arguments: if it is given the same underlying registers as both source and destination, it will silently fail. Consequently, it is named transpose_sep .


8 effective tips to crack competitive exam in first attempt

Competitive exams, though often daunting, are gateways to career dreams. While they may seem formidable, a strategic approach, unwavering commitment, and a well-structured plan can transform these challenges into stepping stones toward your dream profession.

Sujatha Kshirsagar - President & Chief Business Officer, Career Launcher has shared some essential strategies and tips that aspiring professionals need to know while attempting competitive exams:

1. OPTIMISE YOUR STUDY SESSIONS

Planning ahead is a proven strategy for success in competitive exams. One of the keys to effective exam preparation is to create a well-structured study plan and have shorter, focused study sessions.

Define what topics you need to cover and allocate specific study days for each subject or section. Knowing exactly what you need to accomplish each day helps maintain focus and ensures you don't miss any crucial areas.

2. THOROUGHLY UNDERSTAND THE MATERIAL

Reading your study material thoroughly is essential. Don't just skim through it; ensure you understand the concepts, headings, subheadings, and key points. A deep understanding of the subject will serve you well during the exam. Simultaneously, highlight and remember keywords from your study material.

These keywords can be essential during the exam when you need to recall specific information. Regularly review and reinforce these keywords in your memory.

3. TAKE SHORT BREAKS

Don't underestimate the importance of taking short breaks during your study sessions. Prolonged study without breaks can hinder your ability to retain information. Therefore, you must avoid marathon study sessions that can leave you feeling exhausted and frustrated. Instead, break your study time into manageable chunks.

Study for a couple of hours at a stretch, then take a 15 to 20-minute break to recharge your mind. These breaks are crucial for retaining information and preventing burnout.

4. ELIMINATE DISTRACTIONS

Create a study environment that is free from distractions. If your usual study place is noisy or prone to interruptions, consider relocating to a quieter space. Turn off your phone or place it in silent mode to avoid unnecessary interruptions from calls and notifications. Minimising distractions maximises your productivity.

While it is essential to maintain a balance between studying and socialising, be mindful not to overindulge in social activities during your exam preparation. Prioritise your studies, especially during critical study periods, and allocate time for social interactions once your exams are complete.

During study sessions, avoid checking emails, social media, or other online platforms. These distractions can eat up valuable study time and hinder your progress.

5. ALLOCATE AMPLE TIME FOR REVISION

Revision is the foundation of exam preparation. Ensure you allocate sufficient time for multiple rounds of revision before the exam. Repetition helps reinforce what you have learned and enhances your recall abilities during the test.

6. SEEK GUIDANCE

Consider joining coaching classes to get the right study materials, such as textbooks, online resources, and practice papers. High-quality study materials and mock tests conducted by coaching centres such as Career Launcher can make a significant difference in your preparation.

Mock tests help you get accustomed to the exam pattern besides improving your time management skills. Alternatively, you may seek guidance from mentors, teachers, or experienced individuals who have succeeded in the same exam. They can offer valuable insights and tips.

7. EXERCISE REGULARLY AND MAINTAIN A BALANCED DIET

While competitive exams can be stressful, excessive stress can hamper your performance. Therefore, practice stress management techniques such as deep breathing, meditation, or yoga. Regular exercise also boosts cognitive function by reducing stress, enhancing focus, and improving memory retention.

A balanced diet is equally crucial for overall well-being and cognitive function. Prioritise adequate protein, healthy fats, and carbohydrates in your meals. Stay hydrated by drinking plenty of water and including vegetables in your diet to maintain optimal health and cognitive performance.

8. STAY PERSISTENT

Sometimes, you may not achieve success on the first attempt. In case you fail to achieve the desired results, analyse your performance and try again. Nevertheless, believe in your abilities and maintain a positive attitude as you strive to excel.

Adherence to these tips and strategies can enhance your chances of cracking competitive exams successfully. Stay focused, stay motivated, and believe in yourself, and you will be well on your way to acing competitive exams and reaching your career aspirations.


What is left is typically available for dynamic memory allocation (RAM), or is unused or made for non-volatile storage (Flash/EPROM). Reducing memory usage is primarily a case of selecting/designing efficient data structures, using appropriate data types, and efficient code and algorithm design.

Remarks. If the HeapAlloc function succeeds, it allocates at least the amount of memory requested. To allocate memory from the process's default heap, use HeapAlloc with the handle returned by the GetProcessHeap function. To free a block of memory allocated by HeapAlloc, use the HeapFree function. Memory allocated by HeapAlloc is not movable.

This pointer is returned by an earlier call to the HeapAlloc or HeapReAlloc function. The new size of the memory block, in bytes. A memory block's size can be increased or decreased by using this function. If the heap specified by the hHeap parameter is a "non-growable" heap, dwBytes must be less than 0x7FFF8.

Memory Allocation allocated in BSS, set to zero at startup allocated on stack at start of function f 8 bytes allocated in heap by malloc int iSize; char *f(void) {char *p; ... #define MAX_STRINGS 128 #define MAX_STRING_LENGTH 256 void ReadStrings(char **strings, int *nstrings, int maxstrings, FILE *fp)


Dynamic memory allocation in C. (Reek, Ch. 11) Stack-allocated memory. When a function is called, memory is allocated for all of its parameters and local variables. Each active function call has memory on the stack (with the current function call on top) When a function call terminates, the memory is deallocated ("freed up") Ex: main ...

Reserves a range of the process's virtual address space without allocating any actual physical storage in memory or in the paging file on disk. You can commit reserved pages in subsequent calls to the VirtualAlloc function. To reserve and commit pages in one step, call VirtualAlloc with MEM_COMMIT | MEM_RESERVE.

As we all know, the syntax of allocating memory is a bit clunky in C. The recommended way is: int *p; int n=10; p = malloc(n*sizeof *p); You can use sizeof (int) instead of sizeof *p but it is bad practice. I made a solution to this with a macro: This gets called this way: int *p;

C realloc() method "realloc" or "re-allocation" method in C is used to dynamically change the memory allocation of a previously allocated memory. In other words, if the memory previously allocated with the help of malloc or calloc is insufficient, realloc can be used to dynamically re-allocate memory. re-allocation of memory maintains the already present value and new blocks will be ...

The first step is to allocate enough spare space, just in case. Since the memory must be 16-byte aligned (meaning that the leading byte address needs to be a multiple of 16), adding 16 extra bytes guarantees that we have enough space. Somewhere in the first 16 bytes, there is a 16-byte aligned pointer.

By allocating these extra bytes, we are making a tradeoff between generating aligned memory and wasting some bytes to ensure the alignment requirement can be met. Now that we have our high-level strategy, let's prototype the calls for our aligned malloc implementation. Mirroring memalign, we will have: