
5 Activities to teach your students how to spot fake news

By Matt Phillpott

How do you spot fake news? The two words "fake news" have trended over the last decade as a way of describing news and information that is false. It is not as simple as that, though. Other terms, like misinformation, disinformation, propaganda, satire, hoaxes, and conspiracy theories, describe something very similar and have been around for much longer. They do not, however, convey the snappy, dismissive air conjured by the words “fake news.” To understand this trend, it is important to realize that there is no single definition of fake news. In reality, the term covers three separate things:

- Stories that are not true (making people believe something entirely false);

- Stories that are partially true (a deliberate attempt to convince a reader of a viewpoint using skewed information or opinion);

- A tactic used to discredit other people’s views (making another person’s opinion, or even established facts, appear false to others when there is no evidence that this is the case).

What all three descriptions have in common is the attempt to confuse and misdirect. As such, the tools we need to teach our students are the same ones we use to help them assess information and conduct their own research for assignments.

Five activities to teach students how to spot fake news

Students need the skills to check the quality, bias, and background of the news they encounter daily. Thus, when teaching students how to spot fake news, we need to teach them to:

- Develop a critical mindset (consider bias, quality, sensationalism, and the date of creation);

- Ask: why has this been written, and by whom?

- Check the source of the story;

- Check elsewhere to see if the story appears in more than one place.

With all of this in mind, how should you approach this topic in the classroom? Here are five ideas to help you navigate this challenging subject:

1. The News Comparison exercise

I love this one. Ask your students to select three or four national news websites. However, there’s a catch: they should include sites they would generally avoid because those sites conflict with their opinions. Next, ask them to select the main news item of the day, visit each of the websites, and compare how each site covers that story.

What is different? What is similar? Your students can write a short reflection about what they have found and then discuss how they might feel about the topic if one of these sites were their only source of news. You might wish to take this one step further by asking them to “fact-check” the news story using a fact-checking website such as FactCheck.org, Snopes.com, the Washington Post Fact Checker, or PolitiFact.com. While not specifically about fake news, this exercise helps students understand the nature of news and the variable quality of information found online.


2. Google Reverse Image Search

Images are just as likely as text to have been falsified or altered. Set your students a task to trace the history of an image through Google Reverse Image Search:

- Right-click on an image on a website and copy the image address or select an image on your hard drive;

- Go to Google Reverse Image Search;

- Click on the camera icon and then paste the image URL into the search field, or upload your image from your hard drive;

- The results will show you where the image has appeared online.

This lets you see the contexts in which the image has appeared and find similar images (which might reveal that it has been doctored).

3. LMS Quiz

Another way to engage students with issues around fake news is to develop a quiz on your learning management system (LMS) that asks students to spot fake news. Here are a few sample questions you might wish to use:

Q: Is this a photograph showing how MGM created its legendary intro of a roaring lion?

A: No. Show students the image presented as the MGM version, then reveal the real version: a 2005 picture of a lion receiving a CAT scan. You can find plenty of other examples online.

Q: In the lead-up to the 2016 US Presidential election, Pope Francis broke papal tradition by endorsing the US Presidential candidate Donald Trump. True or False?

A: False. This fake story appeared on the now-defunct website WTOE 5 News and spread from there. Reuters and other reputable news sources confirmed that the news was false. See the fact check on this story.

Q: NASA plans to install internet on the Moon. True or False?

A: True. NASA plans to build a 4G network on the Moon to help it control lunar robots. This is called LunaNet. Find out more on the NASA website and see one of the news stories on CNBC.
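If you want to draft the true/false questions above before building them in your LMS, you can keep them as plain data and check answers with a few lines of code. The following is a minimal Python (3.9+) sketch under that assumption. `TrueFalseItem` and `grade` are hypothetical names that are not part of any LMS, and most platforms have their own question-import formats.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrueFalseItem:
    """One true/false question, plus the explanation to show after answering."""
    prompt: str
    answer: bool
    explanation: str


# The two true/false sample questions from the quiz activity.
QUIZ = [
    TrueFalseItem(
        prompt=("In the lead-up to the 2016 US Presidential election, Pope Francis "
                "broke papal tradition by endorsing Donald Trump."),
        answer=False,
        explanation="The story was made up by the now-defunct WTOE 5 News site.",
    ),
    TrueFalseItem(
        prompt="NASA plans to install internet on the Moon.",
        answer=True,
        explanation="NASA plans a lunar network to help control robots (LunaNet).",
    ),
]


def grade(responses: list[bool], quiz: list[TrueFalseItem] = QUIZ) -> tuple[int, list[str]]:
    """Return the number of correct responses and one feedback line per question."""
    if len(responses) != len(quiz):
        raise ValueError("expected one response per question")
    score = 0
    feedback = []
    for item, response in zip(quiz, responses):
        correct = response == item.answer
        score += correct  # True counts as 1, False as 0
        verdict = "Correct" if correct else "Not quite"
        feedback.append(f"{verdict}: {item.explanation}")
    return score, feedback
```

For example, `grade([False, True])` returns a score of 2 and an explanation for each item. Keeping the explanation next to each answer supports the activity's goal: students learn why a story is fake, not only that it is.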


4. Discuss research about fake news

Scholars have published articles about fake news in recent years; examples include Apuke and Omar (2021), Tsfati et al. (2020), and Leeder (2019). Select one or two articles for students to read and appraise, then discuss the points raised in class, asking students how to spot fake news and unreliable websites.

As a homework assignment, ask students to investigate a current fake news story and compare their findings to the research. As an additional exercise, you might ask them to upload a brief response to an LMS forum, blog, or digital portfolio, or to create a poster explaining to their peers why they should not fall for the story.

5. Make up a fake news story

This is a fun exercise for a lesson on spotting fake news. Divide your class into two groups:

Group A: write a fake news story;

Group B: write a real story.

Make sure students do not reveal which group they are in. Ask each student to write a short, 500-word news story and then post it to the LMS forum or blog. Students in Group A should make up their story but include three elements of truth in it. Students in Group B should write about a real story that they find online from a reputable source (but one on a niche topic). Once they are done, divide your class into different small groups and ask them to read through the stories, discuss them, and label each one as Fake News or Real. Bring the class together to discuss the results, and ask each student to update their forum or blog post to identify it as Fake or Real.
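With a large class, you can script the secret group assignment and the final tally. The Python (3.9+) sketch below shows one hypothetical way to do it. The function names are illustrative and are not part of any LMS feature. The sketch also assumes you record every small group's Fake/Real label for each story.

```python
import random
from typing import Optional

# Group A writes made-up stories; Group B writes real ones.
TRUTH = {"A": "Fake", "B": "Real"}


def assign_groups(students: list[str], seed: Optional[int] = None) -> dict[str, str]:
    """Randomly split the class into Group A and Group B (A is the smaller half if odd).

    Passing a seed makes the split reproducible, so you can re-check it later.
    """
    rng = random.Random(seed)
    shuffled = students[:]  # copy so the caller's list is left untouched
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {name: ("A" if i < half else "B") for i, name in enumerate(shuffled)}


def accuracy_by_story(groups: dict[str, str], labels: dict[str, list[str]]) -> dict[str, float]:
    """Share of peer labels ("Fake" or "Real") that matched each story's true group.

    `labels` maps a story's author to the labels the small groups gave it;
    stories that received no labels are skipped.
    """
    results = {}
    for author, given in labels.items():
        if not given:
            continue
        expected = TRUTH[groups[author]]
        results[author] = sum(label == expected for label in given) / len(given)
    return results
```

Stories with low scores are good candidates to discuss when you bring the whole class back together.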

Learning how to spot fake news is a crucial skill

Fake news is a real challenge for educators. However, by teaching research skills to students, we make it easier for them to identify misinformation and assess the quality of their sources. If I could offer one more piece of advice, it would be to make these activities as real as possible for students. Let them discover information themselves using the tools they would normally use, and focus on how they share stories.

In addition to the activities listed above, you might wish to try exercises created by Noah Tavlin, Vicki Davis, or Terry Heick. SFU Library also provides a nice infographic and some videos about fake news, and MindTools provides some useful examples.



Ten Questions to Ask About Fake News


“Fake News” Resources

Great resources to help you avoid “fake news” and foster critical thinking. You can also book a virtual or in-person presentation.

Joyce Grant, TKN’s co-founder, gives high-energy, engaging virtual presentations and keynotes for students and educators on “fake news”: how to spot it and how to teach about it.

Her passion for the subject and the broad knowledge she has built over more than a decade, combined with her background as a journalist, make for interesting, exciting presentations.

Find out more about how to book Joyce Grant for a virtual or an in-person visit. Click here or contact [email protected].

ONLINE GAMES

These may be the best (and they’re certainly the most fun) way to learn about fake news and journalism.

Click here or scroll for great online games. We highly recommend these games because they’re fun, educational and support your teaching.

ARTICLES & RESEARCH

Smart, succinct, readable articles about fake news and how to help young people spot it.

Click here or scroll for excellent articles and research studies about fake news. Use them in the classroom or as background information for your own learning.

MEDIA LITERACY ORGANIZATIONS

Our curated collection of the best websites about fake news, fact-checkers and organizations to support you in learning, and teaching, about fake news.

Click here or scroll for fake-news fighting organizations. They have tons of resources.


BBC iReporter may be the single best online resource for learning about how real-world journalism works. Highly recommended.

In BBC iReporter, you’re a BBC journalist covering breaking news, and you have to decide whether or not to post things on social media. It’s real-world and it’s exciting. It will help young people understand the pressure on journalists to be accurate while also publishing news in a timely manner.

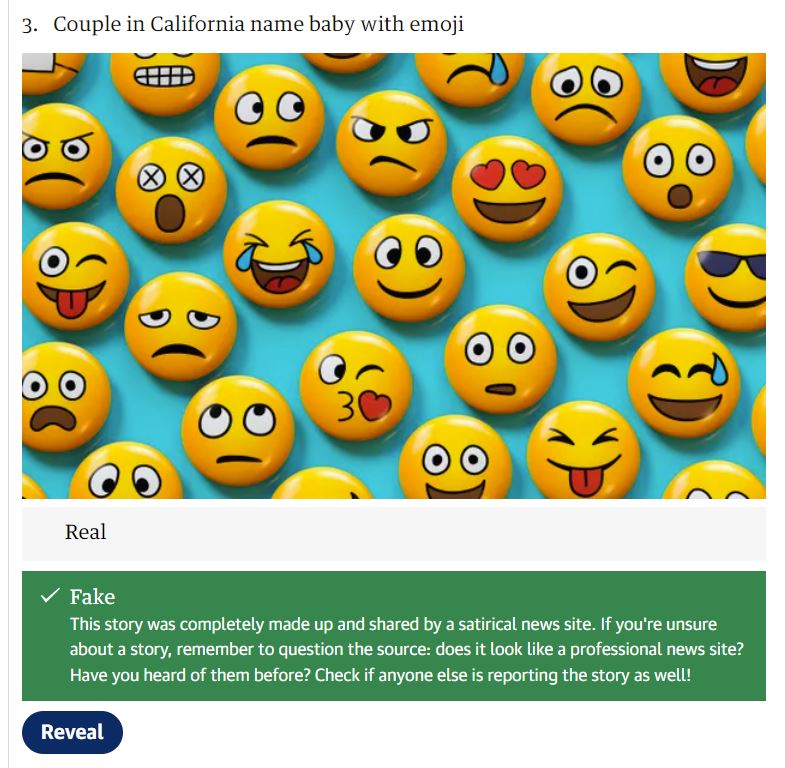

Fake or Real? Look at the headline and then guess if the article is real … or fake news.

The Guardian has put together a fun and interesting group of real and fake headlines for this simple-to-use quiz.

Harmony Square is an online game in which you’re the bad guys, posting fake news and sowing disharmony. It’s silly and fun and it has quite a bit of reading to it.

https://inoculation.science/inoculation-games/harmony-square/

“Go Viral!” is a medical misinformation game. You are the fake news creator, and you win by going viral with lies and fake news. (By the same people who brought you Harmony Square, above.)

Not for everyone, because it shows you bad habits in the hopes that you’ll recognize good ones. Older students (grade 7+) may come away with some solid ideas about misinformation that will help them spot it in the future.

Can you tell if a commenter or poster is a troll, a bot or a real human? It’s important to know the difference so you don’t accidentally share their deliberately created fake news!

This is a really great (and fun) game that not only teaches you what to look for, but lets you guess to see if you’re right. https://spotthetroll.org/start

Doubt It or Trust It, by the Canadian Journalism Foundation’s NewsWise, is an online game in which you guess whether something is fake or real.

Fake or Foto? Take a look at the image and then take a guess. You’ll get the answers at the end.

https://area.autodesk.com/fakeorfoto

We’ve used this very successfully with classes, both in-person and online. Young people love trying to out-guess the adults (and they usually do)!

Play “Reality Check” by MediaSmarts and learn how to check whether something is fake or real.

http://mediasmarts.ca/sites/mediasmarts/files/games/reality-check/index.html#/

Play FakeOut (CIVIX/Newsliteracy.ca): https://newsliteracy.ca/fakeOut

Is it Real or Photoshopped? (by Adobe).

https://landing.adobe.com/en/na/products/creative-cloud/69308-real-or-photoshop/index.html

In Bad News (Junior), created by a team of academics from Cambridge University and media experts, you become a fake news creator. Can you go viral with your lies and exaggerations? The aim is to teach what not to do by showing what the bad guys do.

http://getbadnews.com/droggame_book/junior/#intro

University of Akron: Fake News Quiz

https://akron.qualtrics.com/jfe/form/SV_2bhqIwpegOtj5yZ

Fake News board game. We played this several times with different age groups and found it fun and educational, with lots of interesting facts for the curious. Worth the price: $20 US from BreakingGames.com. (Amazon Canada had it online for a while at $12 CDN, but we can’t find it there now. It’s likely available elsewhere online. Please let us know if you can find it in Canada.)

In Factitious2020 you try to figure out which articles are fake and which are real.

It’s okay, but a bit wordy, with articles that are fairly lengthy and involved. I’m not sure that just looking at the name of a source would help you truly figure out whether something’s fake; you’d probably have to do some Googling as well. But if you want to do a deep dive into teaching about whether an article is fake or not, and talk about sources, this game provides some excellent articles to work with.

Can You Believe It?: How to Spot Fake News and Find the Facts

Written by Teaching Kids News’ co-founder, Joyce Grant and beautifully illustrated by Kathleen Marcotte; published by Kids Can Press in 2022 and suitable for young people 9 to 12 as well as classrooms.

You can buy this illustrated non-fiction book in most independent bookstores or from one of the big chains, including Amazon.com and Chapters Indigo.

For today’s tech-savvy kids, here’s the go-to resource for navigating what they read on the internet.

Should we believe everything we read online? Definitely not! And this book will tell you why. This fascinating book explores in depth how real journalism is made, what “fake news” is and, most importantly, how to spot the difference. It’s chock-full of practical advice, thought-provoking examples and tons of relevant information on subjects that range from bylines and credible sources to influencers and clickbait.

For more information and to purchase

An MIT study published in Science magazine (2018) shows that misinformation travels faster than the truth.

Interesting follow-up: The Atlantic published an article saying the MIT study, which had been widely shared, was itself misinformation. AND THEN The Atlantic reversed itself, saying the MIT study was valid after all.

All of this “misinformation” was duly retweeted and shared.

https://news.mit.edu/2018/study-twitter-false-news-travels-faster-true-stories-0308

https://www.theatlantic.com/technology/archive/2022/03/fake-news-misinformation-mit-study/629396/

Wonder why people believe in conspiracy theories? This article gives insight into who these people are and why they believe seemingly ridiculous claims.

https://medium.com/jigsaw/7-insights-from-interviewing-conspiracy-theory-believers-c475005f8598

If you’re teaching about POV or “filter bubbles,” this 2-minute BBC video is a must-watch.

Journalist Steve Rosenberg is one of the BBC’s main Russia correspondents. He lives in, and reports on, Russia. His video shows very clearly why we all must break out of our “filter bubble” or “information silo.” Russians who only watch state TV there have a very different perspective on the invasion of Ukraine (by the way, the word “invasion” is banned on Russian state TV) than those who get their news from outside sources via the Internet.

A story of two Russias. We report on Russians who believe what state TV is telling them about Ukraine. And those who don’t, and who are leaving the country. Camera @AntonChicherov Producer @BBCWillVernon @BBCNews @BBCWorld https://t.co/TYRf0LYINR pic.twitter.com/Wbr4m4bNOU — Steve Rosenberg (@BBCSteveR) March 1, 2022

This is a must-read. If you’re an educator interested in media literacy, this is well worth your time.

Click here: STANFORD STUDY ABOUT KIDS AND FAKE NEWS.

Researchers at Stanford University wanted to know if kids can recognize–and avoid–fake news. The bottom line: not very well.

The study explains what kids were asked to do (e.g., tell the difference between an ad and an advertorial) and how they approached it. It looks at kids who were good at critical thinking (i.e., uncovering fake news), kids who were okay but still had much to learn, and kids who weren’t able to distinguish between real and fake news. It’s a fascinating look at kids and media, and many of the results may surprise you.

Filter Bubbles, by Eli Pariser

In this 2011 TED Talk (9 mins), activist Eli Pariser talks about what he calls “filter bubbles.” We at TKN call them “silos,” but it’s the same thing: how social media give you more of what you like, and less (to the point of nothing) of what you’re not clicking on.

We need to consciously seek out information that’s not in our bubble.

A really good NPR comic about how to spot fake news: https://www.npr.org/2020/04/17/837202898/comic-fake-news-can-be-deadly-heres-how-to-spot-it (May 2021). Illustration by Connie Jin.

Great article by The Walrus’ fact-checker Viviane Fairbank. She talks about why people share news they don’t even believe and about the importance of showing people how “real” news is gathered, something we’ve been advocating for years. A must-read if you’re interested in facts and news and in helping young people understand the difference.

https://thewalrus.ca/how-do-we-exit-the-post-truth-era/ (May 2021)

Art by JOSH HOLINATY (Image excerpt used with permission.)

“We must save democracy from conspiracies,” TIME magazine article by Sacha Baron Cohen, Oct. 8, 2020. Cohen, as you may know, is a satirist whose humour is often, as he puts it, prepubescent. Nevertheless, he is brilliant, and in his article he has this to say about satire: “When it works, satire can humble the powerful and expose the ills of society.”

https://time.com/5897501/conspiracy-theory-misinformation/

“Time to act on newsroom inequality” — Toronto Star

https://www.thestar.com/opinion/public_editor/2020/06/11/time-to-act-on-newsroom-inequality.html

Interesting BBC article about “Why (even) smart people believe coronavirus myths.”

Cognitive immunity. This PDF contains an infographic that is a virtual master class in all aspects of disinformation. We’re still making our way through it ourselves, but if there’s a critical thinking topic you have never heard of, chances are it’s here: https://www.iftf.org/fileadmin/user_upload/downloads/ourwork/IFTF_ODNI_Cognitive_Immunity_Map__2019.pdf

Great article (“Fighting Fake News in the Classroom”) in the American Psychological Association’s Monitor on Psychology about teaching about misinformation. It’s a comprehensive article that includes tons of resources and links to great information about how to get kids thinking about this important subject. https://www.apa.org/monitor/2022/01/career-fake-news

CBC article on how to (tactfully) discourage the spread of false pandemic information in chats and email

https://www.cbc.ca/news/canada/covid-19-misinformation-rumour-1.5532302

This article, on a website called #30 Seconds to Check It Out, talks about various forms of fake news:

https://web.archive.org/web/20201125174822/https://30secondes.org/en/module/what-is-fake-news/

This Forbes article talks about microtransactions in games. You know, how games get harder and suddenly you want to buy (with real money) that “thing” that will help you get to the next level. Tell your kids it’s not them; it’s a deliberate business strategy. (Note: I don’t love the headline on this Forbes article. I’m not sure Mario Kart needs “two big warnings,” and I don’t think the article really echoes the headline, but the point is a good one.)

https://www.forbes.com/sites/davidthier/2019/09/27/two-big-warnings-about-mario-kart-tour-on-ios-and-android

The Toronto Star’s Classroom Connections has a great series of one-pagers about journalism (TKN’s Joyce Grant is a contributing writer and editor). They’re free to download and they include curriculum questions.

Teaching how “real journalism” is done is key to helping young people understand what “fake news” looks like and how it falls short.

Click here for For the Record: https://www.classroomconnection.ca/for-the-record.html

There is a nice infographic here, by Simon Fraser University, with eight “simple steps” to spotting fake news. Spotting fake news is not really that simple, but these steps offer a good starting point.

https://www.lib.sfu.ca/help/research-assistance/fake-news

Article from Webwise, “the Irish Internet Safety Awareness Centre.” A good, well-written article: clear and succinct.

https://www.webwise.ie/teachers/what-is-fake-news/

Sometimes, it’s just nice to hear about it from librarians. Here’s much of the same information about what fake news is, but this time from the Enoch Pratt Free Library in Baltimore, Maryland, US.

https://www.prattlibrary.org/research/guides/spotting-fake-news

So interesting. In 2016, The New York Times followed a fake news tweet to show how it started and how it went viral. It began as a tweet posted by Eric Tucker on Nov. 9. Someone else then posted it on Reddit, and from there it went to a conservative discussion forum, was shared 5,000 times and was posted on a Facebook page with 300,000 users.

On Nov. 10, US president-elect Donald Trump tweeted about “the protesters,” which “emboldened” Tucker to think that maybe he had something after all, if the president-elect was tweeting about it.

Anyway, read the article for the full story; it’s pretty interesting stuff. Tucker eventually did republish his tweet with “FALSE” stamped on it, but that correction was retweeted just 29 times.

https://www.nytimes.com/2016/11/20/business/media/how-fake-news-spreads.html?_r=0

Interesting 2016 NPR article (and audio clip) about how they tracked down the creator of one specific fake news article: “FBI Agent Suspected in Hillary Email Leaks Found Dead in Apparent Murder-Suicide.”

“Everything about it was fictional… the town, the sheriff…,” says the “fake news entrepreneur” who created it.

NPR tracked him down in Los Angeles; he makes money from ads on his website. How much money? He wouldn’t give his own figure, but he said that “$10,000 to $30,000 a month is ‘in the ballpark.’”

https://www.npr.org/sections/alltechconsidered/2016/11/23/503146770/npr-finds-the-head-of-a-covert-fake-news-operation-in-the-suburbs

This Common Sense Media article talks about deepfakes (videos created using AI that make it look like someone is doing or saying something they didn’t). Note that the video they link to as a deepfake example is NOT suitable for children, because it contains swears and other inappropriate language.

https://www.commonsensemedia.org/blog/common-sense-explains-what-are-deepfake-videos

Article in Rolling Stone magazine about Russia’s massive involvement in spreading “fake news,” and about how fake news plays on our emotions, provoking not just “shocking” or “sad” reactions, but “smug” and “happy” ones.

https://www.rollingstone.com/politics/politics-features/russia-troll-2020-election-interference-twitter-916482

This article, on the Thompson Rivers University (TRU) website, provides a good, simple rundown of the basics about fake news. It also gives links to sources if you’d like to go a bit more in-depth.

https://libguides.tru.ca/fakenews/falling

A Feb. 15, 2019 study (Edelman Trust Barometer) says 71 per cent of Canadians are worried about “fake news.”

Here is an excellent Global News article that analyses the study.

A fun and quick video that really drives home why it’s so important to CHECK YOUR FACTS. By the News Literacy Project. You will want to share this with your students.

This article in THE SACRAMENTO BEE talks about a new bill proposed in California to develop “statewide school standards on internet safety and digital citizenship, including cyberbullying and privacy.”

Great article in the NEW YORK TIMES ABOUT PRINT VS. DIGITAL NEWS: A reporter “took a step back in time,” as he puts it, and read news only from printed newspapers for two months. Here are his fascinating insights about the good and the bad of print vs. digital news.

From the article: “Real life is slow; it takes professionals time to figure out what happened, and how it fits into context. Technology is fast. Smartphones and social networks are giving us facts about the news much faster than we can make sense of them, letting speculation and misinformation fill the gap.”

https://www.nytimes.com/2018/03/07/technology/two-months-news-newspapers.html

VANESSA OTERO’S AWESOME MEDIA BIAS CHART can help you plot your favourite “real news” sources. (NOTE: Don’t use her chart without crediting Vanessa Otero and/or linking to her website, AllGeneralizationsAreFalse.com; her chart is copyrighted.)

In fact, you should check out VANESSA OTERO’S WEBSITE, ALLGENERALIZATIONSAREFALSE.COM for tons of great information on bias and the news.

HOW TO DO A GOOGLE REVERSE IMAGE SEARCH (includes links to places where you can do this).

Here’s why a reverse-image search is useful: Let’s say there’s a picture of a living room with a real live shark swimming around in it! You might want to double-check whether that’s a real image or one that’s been Photoshopped. You can do a reverse-image search and find the original image.

If it’s, say, a living room with no water and no shark, then you’ve got your answer!

THE HOUSE HIPPO: “That looked really real, but you knew it couldn’t be true.” One of our favourite go-to videos about critical thinking, and it still holds true today (even more so, in fact). By Concerned Children’s Advertisers. YouTube video, 1:02.

The House Hippo video has had a makeover by MediaSmarts! Check out House Hippo 2.0 here:

BBC’S INSIDE LOOK AT THE WHITE HOUSE PRESS CORPS and how they cover the president (YouTube, 13:49, but totally worth the time; it’s so interesting).

This shows you just how difficult it can be to get the information you need to write your news article.

Who are the people who create fake news? The University of Massachusetts Amherst and the University of Leeds in the UK teamed up to find out. Read THEIR INTERESTING REPORT, “ARCHITECTS OF NETWORKED DISINFORMATION” on who these people are, and why they do what they do.

Download executive summary HERE.

Download full report HERE.

What’s FACEBOOK DOING TO DISCOURAGE FAKE NEWS? This is Mark Zuckerberg’s statement.

One of the things they’re doing is to ask people to rank a source’s trustworthiness; they’re hoping that will help to identify some fake news sources. (If you Google this topic, you’ll see lots of columns and insights on what these new Facebook initiatives may mean. It’s an ongoing project, and these are merely the early stages.)

Stanford University published this STUDY ABOUT THE INFLUENCE OF SOCIAL MEDIA AND FAKE NEWS IN THE 2016 US ELECTION.

Common Sense Media’s video, 5 WAYS TO SPOT FAKE NEWS.

“How Stuff Works” has a pretty good little flipchart-type presentation: 10 WAYS TO SPOT FAKE NEWS.

THREE LESSON PLANS about “fake news” from Cool Cat Teacher.com.

Study suggests that “lies spread faster than the truth” on Twitter. “Falsehood diffused significantly farther, faster, deeper, and more broadly than the truth in all categories of information…” according to researchers Vosoughi et al. https://science.sciencemag.org/content/359/6380/1146.full

An NPR article discusses the study above, which found that false stories on Twitter were 70% more likely to be retweeted than true ones. https://www.npr.org/sections/alltechconsidered/2018/03/12/592885660/can-you-believe-it-on-twitter-false-stories-are-shared-more-widely-than-true-one

The Poynter Institute trains journalists and is a journalism watchdog for ethics and best practices. Its media literacy arm, MediaWise, supports the education of young people about journalism, fact-checking and fake news.

On this page, they list a number of resources, including games that teach about fake news.

Check out the BBC’s broad collection of excellent fake news resources: https://www.bbc.co.uk/beyondfakenews/

The Toronto Star’s Washington correspondent, DANIEL DALE, is @ddale8 on Twitter. He’s in the trenches, uncovering facts, digging around and fighting fake news on a daily basis.

*We can’t fully vouch for third-party links. The ones above are all useful, in our opinion, but their owners could change the material on them at any time, etc. etc. etc., blah, blah, blah. (Please excuse the legalese.) Also, if you have an excellent resource about “fighting fake news,” please let us know on OUR FACEBOOK PAGE.

A Must-Have for your Library! How to Spot Fake News & Find the Facts






Fake news and the spread of misinformation: A research roundup

This collection of research offers insights into the impacts of fake news and other forms of misinformation, including fake Twitter images, and how people use the internet to spread rumors and misinformation.


This work is licensed under a Creative Commons Attribution-NoDerivatives 4.0 International License .

By Denise-Marie Ordway, The Journalist's Resource, September 1, 2017


It’s too soon to say whether Google’s and Facebook’s attempts to clamp down on fake news will have a significant impact. But fabricated stories posing as serious journalism are not likely to go away, as they have become a means for some writers to make money and potentially influence public opinion. Even as Americans recognize that fake news causes confusion about current issues and events, they continue to circulate it. A December 2016 survey by the Pew Research Center suggests that 23 percent of U.S. adults have shared fake news, knowingly or unknowingly, with friends and others.

“Fake news” is a term that can mean different things, depending on the context. News satire is often called fake news, as are parodies such as the “Saturday Night Live” mock newscast Weekend Update. Much of the fake news that flooded the internet during the 2016 election season consisted of written pieces and recorded segments promoting false information or perpetuating conspiracy theories. Some news organizations published reports spotlighting examples of hoaxes, fake news and misinformation on Election Day 2016.

The news media has written a lot about fake news and other forms of misinformation, but scholars are still trying to understand it: for example, how it travels and why some people believe it and even seek it out. Below, Journalist’s Resource has pulled together academic studies to help newsrooms better understand the problem and its impacts. Two other resources that may be helpful are the Poynter Institute’s tips on debunking fake news stories and the First Draft Partner Network, a global collaboration of newsrooms, social media platforms and fact-checking organizations that was launched in September 2016 to battle fake news. In mid-2018, JR’s managing editor, Denise-Marie Ordway, wrote an article for Harvard Business Review explaining what researchers know to date about the amount of misinformation people consume, why they believe it and the best ways to fight it.

—————————

“The Science of Fake News” Lazer, David M. J.; et al. Science, March 2018. DOI: 10.1126/science.aao2998.

Summary: “The rise of fake news highlights the erosion of long-standing institutional bulwarks against misinformation in the internet age. Concern over the problem is global. However, much remains unknown regarding the vulnerabilities of individuals, institutions, and society to manipulations by malicious actors. A new system of safeguards is needed. Below, we discuss extant social and computer science research regarding belief in fake news and the mechanisms by which it spreads. Fake news has a long history, but we focus on unanswered scientific questions raised by the proliferation of its most recent, politically oriented incarnation. Beyond selected references in the text, suggested further reading can be found in the supplementary materials.”

“Who Falls for Fake News? The Roles of Bullshit Receptivity, Overclaiming, Familiarity, and Analytical Thinking” Pennycook, Gordon; Rand, David G. May 2018. Available at SSRN. DOI: 10.2139/ssrn.3023545.

Abstract: “Inaccurate beliefs pose a threat to democracy and fake news represents a particularly egregious and direct avenue by which inaccurate beliefs have been propagated via social media. Here we present three studies (MTurk, N = 1,606) investigating the cognitive psychological profile of individuals who fall prey to fake news. We find consistent evidence that the tendency to ascribe profundity to randomly generated sentences — pseudo-profound bullshit receptivity — correlates positively with perceptions of fake news accuracy, and negatively with the ability to differentiate between fake and real news (media truth discernment). Relatedly, individuals who overclaim regarding their level of knowledge (i.e. who produce bullshit) also perceive fake news as more accurate. Conversely, the tendency to ascribe profundity to prototypically profound (non-bullshit) quotations is not associated with media truth discernment; and both profundity measures are positively correlated with willingness to share both fake and real news on social media. We also replicate prior results regarding analytic thinking — which correlates negatively with perceived accuracy of fake news and positively with media truth discernment — and shed further light on this relationship by showing that it is not moderated by the presence versus absence of information about the new headline’s source (which has no effect on perceived accuracy), or by prior familiarity with the news headlines (which correlates positively with perceived accuracy of fake and real news). Our results suggest that belief in fake news has similar cognitive properties to other forms of bullshit receptivity, and reinforce the important role that analytic thinking plays in the recognition of misinformation.”

“Social Media and Fake News in the 2016 Election” Allcott, Hunt; Gentzkow, Matthew. Working paper for the National Bureau of Economic Research, No. 23089, 2017.

Abstract: “We present new evidence on the role of false stories circulated on social media prior to the 2016 U.S. presidential election. Drawing on audience data, archives of fact-checking websites, and results from a new online survey, we find: (i) social media was an important but not dominant source of news in the run-up to the election, with 14 percent of Americans calling social media their ‘most important’ source of election news; (ii) of the known false news stories that appeared in the three months before the election, those favoring Trump were shared a total of 30 million times on Facebook, while those favoring Clinton were shared eight million times; (iii) the average American saw and remembered 0.92 pro-Trump fake news stories and 0.23 pro-Clinton fake news stories, with just over half of those who recalled seeing fake news stories believing them; (iv) for fake news to have changed the outcome of the election, a single fake article would need to have had the same persuasive effect as 36 television campaign ads.”

“Debunking: A Meta-Analysis of the Psychological Efficacy of Messages Countering Misinformation” Chan, Man-pui Sally; Jones, Christopher R.; Jamieson, Kathleen Hall; Albarracín, Dolores. Psychological Science, September 2017. DOI: 10.1177/0956797617714579.

Abstract: “This meta-analysis investigated the factors underlying effective messages to counter attitudes and beliefs based on misinformation. Because misinformation can lead to poor decisions about consequential matters and is persistent and difficult to correct, debunking it is an important scientific and public-policy goal. This meta-analysis (k = 52, N = 6,878) revealed large effects for presenting misinformation (ds = 2.41–3.08), debunking (ds = 1.14–1.33), and the persistence of misinformation in the face of debunking (ds = 0.75–1.06). Persistence was stronger and the debunking effect was weaker when audiences generated reasons in support of the initial misinformation. A detailed debunking message correlated positively with the debunking effect. Surprisingly, however, a detailed debunking message also correlated positively with the misinformation-persistence effect.”

“Displacing Misinformation about Events: An Experimental Test of Causal Corrections” Nyhan, Brendan; Reifler, Jason. Journal of Experimental Political Science, 2015. DOI: 10.1017/XPS.2014.22.

Abstract: “Misinformation can be very difficult to correct and may have lasting effects even after it is discredited. One reason for this persistence is the manner in which people make causal inferences based on available information about a given event or outcome. As a result, false information may continue to influence beliefs and attitudes even after being debunked if it is not replaced by an alternate causal explanation. We test this hypothesis using an experimental paradigm adapted from the psychology literature on the continued influence effect and find that a causal explanation for an unexplained event is significantly more effective than a denial even when the denial is backed by unusually strong evidence. This result has significant implications for how to most effectively counter misinformation about controversial political events and outcomes.”

“Rumors and Health Care Reform: Experiments in Political Misinformation” Berinsky, Adam J. British Journal of Political Science, 2015. DOI: 10.1017/S0007123415000186.

Abstract: “This article explores belief in political rumors surrounding the health care reforms enacted by Congress in 2010. Refuting rumors with statements from unlikely sources can, under certain circumstances, increase the willingness of citizens to reject rumors regardless of their own political predilections. Such source credibility effects, while well known in the political persuasion literature, have not been applied to the study of rumor. Though source credibility appears to be an effective tool for debunking political rumors, risks remain. Drawing upon research from psychology on ‘fluency’ — the ease of information recall — this article argues that rumors acquire power through familiarity. Attempting to quash rumors through direct refutation may facilitate their diffusion by increasing fluency. The empirical results find that merely repeating a rumor increases its power.”

“Rumors and Factitious Informational Blends: The Role of the Web in Speculative Politics” Rojecki, Andrew; Meraz, Sharon. New Media & Society, 2016. DOI: 10.1177/1461444814535724.

Abstract: “The World Wide Web has changed the dynamics of information transmission and agenda-setting. Facts mingle with half-truths and untruths to create factitious informational blends (FIBs) that drive speculative politics. We specify an information environment that mirrors and contributes to a polarized political system and develop a methodology that measures the interaction of the two. We do so by examining the evolution of two comparable claims during the 2004 presidential campaign in three streams of data: (1) web pages, (2) Google searches, and (3) media coverage. We find that the web is not sufficient alone for spreading misinformation, but it leads the agenda for traditional media. We find no evidence for equality of influence in network actors.”

“Analyzing How People Orient to and Spread Rumors in Social Media by Looking at Conversational Threads” Zubiaga, Arkaitz; et al. PLOS ONE, 2016. DOI: 10.1371/journal.pone.0150989.

Abstract: “As breaking news unfolds people increasingly rely on social media to stay abreast of the latest updates. The use of social media in such situations comes with the caveat that new information being released piecemeal may encourage rumors, many of which remain unverified long after their point of release. Little is known, however, about the dynamics of the life cycle of a social media rumor. In this paper we present a methodology that has enabled us to collect, identify and annotate a dataset of 330 rumor threads (4,842 tweets) associated with 9 newsworthy events. We analyze this dataset to understand how users spread, support, or deny rumors that are later proven true or false, by distinguishing two levels of status in a rumor life cycle i.e., before and after its veracity status is resolved. The identification of rumors associated with each event, as well as the tweet that resolved each rumor as true or false, was performed by journalist members of the research team who tracked the events in real time. Our study shows that rumors that are ultimately proven true tend to be resolved faster than those that turn out to be false. Whilst one can readily see users denying rumors once they have been debunked, users appear to be less capable of distinguishing true from false rumors when their veracity remains in question. In fact, we show that the prevalent tendency for users is to support every unverified rumor. We also analyze the role of different types of users, finding that highly reputable users such as news organizations endeavor to post well-grounded statements, which appear to be certain and accompanied by evidence. Nevertheless, these often prove to be unverified pieces of information that give rise to false rumors. Our study reinforces the need for developing robust machine learning techniques that can provide assistance in real time for assessing the veracity of rumors. The findings of our study provide useful insights for achieving this aim.”

“Miley, CNN and The Onion” Berkowitz, Dan; Schwartz, David Asa. Journalism Practice, 2016. DOI: 10.1080/17512786.2015.1006933.

Abstract: “Following a twerk-heavy performance by Miley Cyrus on the Video Music Awards program, CNN featured the story on the top of its website. The Onion — a fake-news organization — then ran a satirical column purporting to be by CNN’s Web editor explaining this decision. Through textual analysis, this paper demonstrates how a Fifth Estate comprised of bloggers, columnists and fake news organizations worked to relocate mainstream journalism back to within its professional boundaries.”

“Emotions, Partisanship, and Misperceptions: How Anger and Anxiety Moderate the Effect of Partisan Bias on Susceptibility to Political Misinformation” Weeks, Brian E. Journal of Communication, 2015. DOI: 10.1111/jcom.12164.

Abstract: “Citizens are frequently misinformed about political issues and candidates but the circumstances under which inaccurate beliefs emerge are not fully understood. This experimental study demonstrates that the independent experience of two emotions, anger and anxiety, in part determines whether citizens consider misinformation in a partisan or open-minded fashion. Anger encourages partisan, motivated evaluation of uncorrected misinformation that results in beliefs consistent with the supported political party, while anxiety at times promotes initial beliefs based less on partisanship and more on the information environment. However, exposure to corrections improves belief accuracy, regardless of emotion or partisanship. The results indicate that the unique experience of anger and anxiety can affect the accuracy of political beliefs by strengthening or attenuating the influence of partisanship.”

“Deception Detection for News: Three Types of Fakes” Rubin, Victoria L.; Chen, Yimin; Conroy, Niall J. Proceedings of the Association for Information Science and Technology, 2015, Vol. 52. DOI: 10.1002/pra2.2015.145052010083.

Abstract: “A fake news detection system aims to assist users in detecting and filtering out varieties of potentially deceptive news. The prediction of the chances that a particular news item is intentionally deceptive is based on the analysis of previously seen truthful and deceptive news. A scarcity of deceptive news, available as corpora for predictive modeling, is a major stumbling block in this field of natural language processing (NLP) and deception detection. This paper discusses three types of fake news, each in contrast to genuine serious reporting, and weighs their pros and cons as a corpus for text analytics and predictive modeling. Filtering, vetting, and verifying online information continues to be essential in library and information science (LIS), as the lines between traditional news and online information are blurring.”

“When Fake News Becomes Real: Combined Exposure to Multiple News Sources and Political Attitudes of Inefficacy, Alienation, and Cynicism” Balmas, Meital. Communication Research, 2014, Vol. 41. DOI: 10.1177/0093650212453600.

Abstract: “This research assesses possible associations between viewing fake news (i.e., political satire) and attitudes of inefficacy, alienation, and cynicism toward political candidates. Using survey data collected during the 2006 Israeli election campaign, the study provides evidence for an indirect positive effect of fake news viewing in fostering the feelings of inefficacy, alienation, and cynicism, through the mediator variable of perceived realism of fake news. Within this process, hard news viewing serves as a moderator of the association between viewing fake news and their perceived realism. It was also demonstrated that perceived realism of fake news is stronger among individuals with high exposure to fake news and low exposure to hard news than among those with high exposure to both fake and hard news. Overall, this study contributes to the scientific knowledge regarding the influence of the interaction between various types of media use on political effects.”

“Faking Sandy: Characterizing and Identifying Fake Images on Twitter During Hurricane Sandy” Gupta, Aditi; Lamba, Hemank; Kumaraguru, Ponnurangam; Joshi, Anupam. Proceedings of the 22nd International Conference on World Wide Web, 2013. DOI: 10.1145/2487788.2488033.

Abstract: “In today’s world, online social media plays a vital role during real world events, especially crisis events. There are both positive and negative effects of social media coverage of events. It can be used by authorities for effective disaster management or by malicious entities to spread rumors and fake news. The aim of this paper is to highlight the role of Twitter during Hurricane Sandy (2012) to spread fake images about the disaster. We identified 10,350 unique tweets containing fake images that were circulated on Twitter during Hurricane Sandy. We performed a characterization analysis, to understand the temporal, social reputation and influence patterns for the spread of fake images. Eighty-six percent of tweets spreading the fake images were retweets, hence very few were original tweets. Our results showed that the top 30 users out of 10,215 users (0.3 percent) resulted in 90 percent of the retweets of fake images; also network links such as follower relationships of Twitter, contributed very little (only 11 percent) to the spread of these fake photos URLs. Next, we used classification models, to distinguish fake images from real images of Hurricane Sandy. Best results were obtained from Decision Tree classifier, we got 97 percent accuracy in predicting fake images from real. Also, tweet-based features were very effective in distinguishing fake images tweets from real, while the performance of user-based features was very poor. Our results showed that automated techniques can be used in identifying real images from fake images posted on Twitter.”

“The Impact of Real News about ‘Fake News’: Intertextual Processes and Political Satire” Brewer, Paul R.; Young, Dannagal Goldthwaite; Morreale, Michelle. International Journal of Public Opinion Research, 2013. DOI: 10.1093/ijpor/edt015.

Abstract: “This study builds on research about political humor, press meta-coverage, and intertextuality to examine the effects of news coverage about political satire on audience members. The analysis uses experimental data to test whether news coverage of Stephen Colbert’s Super PAC influenced knowledge and opinion regarding Citizens United, as well as political trust and internal political efficacy. It also tests whether such effects depended on previous exposure to The Colbert Report (Colbert’s satirical television show) and traditional news. Results indicate that exposure to news coverage of satire can influence knowledge, opinion, and political trust. Additionally, regular satire viewers may experience stronger effects on opinion, as well as increased internal efficacy, when consuming news coverage about issues previously highlighted in satire programming.”

“With Facebook, Blogs, and Fake News, Teens Reject Journalistic ‘Objectivity’” Marchi, Regina. Journal of Communication Inquiry, 2012. DOI: 10.1177/0196859912458700.

Abstract: “This article examines the news behaviors and attitudes of teenagers, an understudied demographic in the research on youth and news media. Based on interviews with 61 racially diverse high school students, it discusses how adolescents become informed about current events and why they prefer certain news formats to others. The results reveal changing ways news information is being accessed, new attitudes about what it means to be informed, and a youth preference for opinionated rather than objective news. This does not indicate that young people disregard the basic ideals of professional journalism but, rather, that they desire more authentic renderings of them.”

Keywords: alt-right, credibility, truth discovery, post-truth era, fact checking, news sharing, news literacy, misinformation, disinformation



Fake News, Misleading News, Biased News: Assignments on Evaluating Sources


Assignments

- Caulfield, Mike. The Four Moves: Adventures in Fact-Checking for Students

- CORA (Community of Online Research Assignments). Evaluating news sites: Credible or Clickbait?

- McCormick Foundation. Introduction to news literacy: Structured engagement with current and controversial issues.

- University of Delaware. Curing fake news phobia (Google Doc with a lesson plan by Lauren Wallis)

- University of Texas at El Paso. News gathering and investigation: An evaluation exercise

- Whiting, Jacquelyn. (2019, September 4). Everyone has invisible bias. This lesson shows students how to recognize it. EdSurge.

More Assignments

C-SPAN Classroom: Lesson idea: Media Literacy and Fake News

SchoolJournalism.com: News and media literacy lessons.

Walsh-Moorman, Elizabeth, and Katie Ours. Introducing lateral reading before research. MLA Style Center. (Objectives include identifying the credibility and/or bias of a source and identifying how professional fact-checkers assess information compared with a general audience.)

- The Media Manipulation Casebook: Includes methods and definitions of terms related to misinformation, disinformation, and media manipulation.

A Course on News Literacy

Making Sense of the News: News Literacy Lessons for Digital Citizens. A six-week course offered by the University of Hong Kong and the State University of New York via Coursera; you can audit the course for free. Resources include a glossary of terms such as bias, cognitive dissonance, confirmation bias, propaganda, selective dissonance, and verification.

News Literacy. Digital Resource Center. Stony Brook University

Stony Brook University. Digital Resource Center. The 14 Lessons: This course pack consists of lessons that can be taught in sequence or separately and cover topics such as verification, fairness and balance, and bias. This material is the basis for the Coursera course (above) on news literacy.

Fake News: Curriculum. California State University, Long Beach

- Fake News: Curriculum. Curriculum about fake news, including curriculum guides, presentations, and instructional strategies, from librarian Lesley Farmer.

Fake News in First-Year Writing - Paul Corrigan

- Corrigan, Paul T. Fake News in First-Year Writing. Writing Commons. A description of a first-year writing course that integrates feeling and fact-checking, along with a description of its writing projects.

Need to Evaluate a Source? Try a Worksheet

- Evaluating Web Sites: A Checklist (University of Maryland)

Quality of News Sources - You Decide!

Vanessa Otero, a patent attorney, made a chart with her views on various news sites, and you can too! She released a blank version so you can decide for yourself. See her blog post on news quality and her chart on Twitter.

Valenza, J. (2016, November 26). Truth, truthiness, triangulation: A news literacy toolkit for a "post-truth" world. School Library Journal.

A course from the University of Washington, Seattle, WA

- Calling Bullshit: Course Syllabus. A proposal for a course by two professors from the University of Washington, Seattle, meant to teach students how to recognize bullshit. "Bullshit is language, statistical figures, data graphics, and other forms of presentation intended to persuade by impressing and overwhelming a reader or listener, with a blatant disregard for truth and logical coherence."

- Check, Please! Starter Course "In this course, we show you how to fact and source-check in five easy lessons, taking about 30 minutes apiece. The entire online curriculum is two and a half to three hours and is suitable homework for the first week of a college-level module on disinformation or online information literacy, or the first few weeks of a course if assigned with other discipline focused homework." This course has been released into the public domain.

- Real vs. Fake. Science vs. Pseudoscience. Course syllabus, Fall 2019. A course by Dr. Douglas Duncan, University of Colorado Boulder.

A course from the University of Michigan on fake news

- Fake News, Lies, and Propaganda: The Class. An entire seven-week course developed by librarians at the University of Michigan.

Learning Tools Suggested by Richard Byrne

Learning tools suggested by Richard Byrne in his Practical Ed Tech Tip of the Week.

- Can You Spot the Problem with These Headlines? A TED-Ed lesson.

- Checkology: A free version with interactive modules that become increasingly difficult.

- Civic Online Reasoning: Lesson plans from the Stanford History Education Group (SHEG). Lessons cover lateral reading with fact-checking organizations and questions such as "Who's behind the information?" and "What's the evidence?" Create a free SHEG account to access these lessons.

- Spot the Troll: A troll is a fake social media account, often created to spread misleading information. Learn to spot them! From Clemson University's Media Forensics Lab.

- This One Weird Trick Will Help You Spot Clickbait. A TED-Ed lesson.


- Last Updated: Mar 21, 2024 6:23 PM

- URL: https://libguides.hccfl.edu/fakenews

© 2024 | All rights reserved



Fake News Assignment: Death by Vaccines

With the growth of social media, fake news websites are appearing with greater frequency. This has fueled the rapid spread of misinformation on topics such as vaccines, food safety, and global warming. Students need to be able to evaluate these news sites and sources.

This assignment will present students with three articles about vaccines: one from the Washington Post, one from Natural News, and one from a website called "Vaccines.news". Students will be guided through an analysis of the articles, including identifying clickbait, researching the background of the authors, and judging the validity of several statements within the articles.

Essential concepts: Pseudoscience, fake news, news analysis, sources.

Answer Key: Available as part of an environmental science instructor resources subscription.


Fake News Unit – Media Literacy Analysis Unit

- Total pages: 250

- Answer key: Included, with rubric

- Teaching duration: 2 weeks

- File size: 31.7 MB

- File type: PDF (Zip)


This Fake News Unit contains 10 high-interest lessons about media literacy, fake news, and digital citizenship to help 21st-century students learn to think critically about the information they consume from print and digital media sources. It is highly relevant to today’s learners, as it includes lessons on social media content and algorithms as well as artificial intelligence and deep fakes. The scaffolded lessons begin with an interactive QR code vocabulary scavenger hunt and a headline analysis, then move on to specific lessons about fake news and how to spot it.

After the scaffolded lessons, students will love working through the eight different fake news stations about satire, websites, videos, news articles, TED talks, social media algorithms, deep fakes, and AI music in groups with their peers. The Fake News Unit culminates with students creating their own fake news stories in either print or digital formats.


Lesson Overview

- Introduction – QR Code Vocabulary Search

- Lesson 1 – What is Media Literacy?

- Lesson 2 – Media Literacy in Action: Headline Analysis

- Lesson 3 – What is Fake News?

- Lesson 4 – Social Media and Fake News

- Lesson 5 – Case Study: The War of the Worlds Radio Broadcast

- Lesson 6 – How to Spot Fake News

- Lesson 7 – Fake News Stations: Spot The Fake (Satire, Websites, Videos, News Articles, TED Talks, Social Media Algorithms, Deep Fakes)

- Lesson 8 – Digital Citizenship

- Lesson 9 – Creating Fake News Assignment

What’s Inside:

- 10 Engaging Lessons: Created specifically for middle school students, these lessons are designed to capture their attention and foster meaningful discussions.

- Detailed Teacher Lesson Plans: Teachers can seamlessly integrate each lesson into their plans.

- Interactive Content (QR Codes, Scenarios, Group Work): Lesson variety helps students stay interested and motivated.

- Group Work Stations: Foster collaboration and dialogue with group work stations that encourage peer-to-peer learning and interactive discussions.

- Vocabulary Lesson: Help meet curriculum objectives with this lesson on media vocabulary.

- Historical Example Case Study: Students will learn about a time in history when the media created panic by not disclosing that the news story was fake.

- MP3 Audio Files: Students can read the articles independently or use the provided MP3 audio files.

- Answer Keys: Guide your students through each lesson with the comprehensive answer keys.

- Video Links: Enhance learning through multimedia experiences that reinforce key concepts.

- Graphic Organizers: Help students organize their thoughts and ideas visually, promoting deeper understanding and engagement with the content.

- Pre-Made Google Slideshow: Seamlessly integrate technology into your lessons with a pre-made Google Slideshow that’s ready to use.

- Print & Digital Formats: Cater to your classroom’s needs with both print and digital formats, ensuring accessibility and flexibility.

Teacher Feedback – Fake News Unit:

- “This is honestly one of my favourite resources I have ever purchased. I have done this unit with grade 8 and a 6/7 class and it has been a hit! Students have loved all the opportunities to explore fake news, and to create their own fake news websites. It is honestly such a great resource and is so highly recommended.”

- “This was a fun and engaging unit that is necessary to teach effectively in today’s world! The students enjoyed it too!!!”

- “This is a great way to introduce a very important topic for students who regularly encounter misleading or fake news while they are online. It is a fun unit for a serious topic – lots of ways for students to make connections with the things they are seeing online in social media or hearing from other students. Helps to build critical thinking skills!”

With 10 captivating fake news lessons that include a variety of independent and group work activities, this Fake News Unit ensures that your students are well-prepared to make informed decisions about the media content they consume. Grab this unit today!

Other Engaging Media Literacy Lessons:

- Media Literacy Unit – Analyzing Public Service Announcements and Commercials

- Media Literacy Bundle 1 – Consumer Awareness Lessons

- Media Literacy Bundle 2 – Consumer Awareness Lessons

- Media Literacy Review Writing 16 Lessons


Determinants of individuals’ belief in fake news: A scoping review

Kirill Bryanov

Laboratory for Social and Cognitive Informatics, National Research University Higher School of Economics, St. Petersburg, Russia

Victoria Vziatysheva

Associated Data

All relevant data are available within the paper. Search protocol is described in the text, and Table 3 contains information about all studies included in the review.

Background

Proliferation of misinformation in digital news environments can harm society in a number of ways, but its dangers are most acute when citizens believe that false news is factually accurate. A recent wave of empirical research focuses on the factors that explain why people fall for so-called fake news. In this scoping review, we summarize the results of experimental studies that test different predictors of individuals’ belief in misinformation.

Methods

The review is based on a synthetic analysis of 26 scholarly articles. The authors developed a search protocol and applied it to two academic databases, Scopus and Web of Science. The sample included experimental studies that test factors influencing users’ ability to recognize fake news, their likelihood of trusting it, or their intention to engage with such content. Relying on scoping review methodology, the authors then collated and summarized the available evidence.

Results

The study identifies three broad groups of factors contributing to individuals’ belief in fake news. First, message characteristics, such as belief consistency and presentation cues, can drive people’s belief in misinformation. Second, susceptibility to fake news can be determined by individual factors, including people’s cognitive styles, predispositions, and differences in news and information literacy. Finally, accuracy-promoting interventions, such as warnings or nudges that prime individuals to think about information veracity, can affect judgements of fake news credibility. Evidence suggests that inoculation-type interventions can be both scalable and effective. We note that study results could be partly driven by design choices such as the selection of stimuli and outcome measurement.

Conclusions

We call for expanding the scope and diversifying designs of empirical investigations of people’s susceptibility to false information online. We recommend examining digital platforms beyond Facebook, using more diverse formats of stimulus material and adding a comparative angle to fake news research.

Introduction

Deception is not a new phenomenon in mass communication: people had been exposed to political propaganda, strategic misinformation, and rumors long before much of public communication migrated to digital spaces [ 1 ]. In the information ecosystem centered around social media, however, digital deception took on renewed urgency, with the 2016 U.S. presidential election marking the tipping point at which the gravity of the issue became a widespread concern [ 2 , 3 ]. A growing body of work documents the detrimental effects of online misinformation on political discourse and on people’s societally significant attitudes and beliefs. Exposure to false information has been linked to outcomes such as diminished trust in mainstream media [ 4 ], feelings of inefficacy, alienation, and cynicism toward political candidates [ 5 ], false memories of fabricated policy-relevant events [ 6 ], and the anchoring of individuals’ perceptions of unfamiliar topics [ 7 ].

According to some estimates, the spread of politically charged digital deception in the buildup to and following the 2016 election became a mass phenomenon: for example, Allcott and Gentzkow [ 1 ] estimated that the average US adult could have read and remembered at least one fake news article in the months around the election (but see Allen et al. [ 8 ] for an opposing claim regarding the scale of the fake news issue). Scholarly reflections upon this new reality sparked a wave of research concerned with a specific brand of false information, labelled fake news and most commonly conceptualized as non-factual messages resembling legitimate news content and created with an intention to deceive [ 3 , 9 ]. One research avenue that has seen a major uptick in the volume of published work is concerned with uncovering the factors driving people’s ability to discern fake from legitimate news. Indeed, in order for deceitful messages to exert the hypothesized societal effects—such as catalyzing political polarization [ 10 ], distorting public opinion [ 11 ], and promoting inaccurate beliefs [ 12 ]—the recipients have to believe that the claims these messages present are true [ 13 ]. Furthermore, research shows that the more people find false information encountered on social media credible, the more likely they are to amplify it by sharing [ 14 ]. The factors and mechanisms underlying individuals’ judgements of fake news’ accuracy and credibility thus become a central concern for both theory and practice.

While message credibility has been a longstanding matter of interest for scholars of communication [ 15 ], the post-2016 wave of scholarship can be viewed as distinct on account of its focus on particular news formats, contents, and mechanisms of spread that have been prevalent amid the recent fake news crisis [ 16 ]. Furthermore, unlike previous studies of message credibility, the recent work is increasingly taking a turn towards developing and testing potential solutions to the problem of digital misinformation, particularly in the form of interventions aimed at improving people’s accuracy judgements.

Some scholars argue that the recent rise of fake news is a manifestation of a broader ongoing epistemological shift, in which significant numbers of online information consumers move away from the standards of evidence-based reasoning and the pursuit of objective truth toward “alternative facts” and partisan simplism, a malaise often labelled the state of “post-truth” [ 17 , 18 ]. Lewandowsky and colleagues identify large-scale trends such as declining social capital, rising economic inequality and political polarization, diminishing trust in science, and an increasingly fragmented media landscape as the processes underlying the shift toward “post-truth.” To narrow the scope of this report, we focus specifically on the news media component of the larger “post-truth” puzzle. We therefore consider only studies that explore the effects of misinformation packaged in news-like formats, necessarily leaving out investigations of other forms of online deception, for example, messages coming from political figures and parties [ 19 ] or rumors [ 20 ].

The apparently vast amount and heterogeneity of recent empirical research on the antecedents of people’s belief in fake news calls for integrative work that summarizes and maps the newly generated findings. We are aware of only one review article published to date that synthesizes empirical findings on the factors of individuals’ susceptibility to believing fake news in political contexts: a narrative summary of a subset of the relevant evidence [ 21 ]. To survey the available literature systematically, in a way that permits both transparency and sufficient conceptual breadth, we employ a scoping review methodology, which is most commonly used in medical and public health research. This method prescribes specifying a research question, a search strategy, and criteria for inclusion and exclusion, along with the general logic of charting and arranging the data, thus allowing for a transparent, replicable synthesis [ 22 ]. Because it is well suited to identifying diverse subsets of evidence pertaining to a broad research question [ 23 ], scoping review methodology is particularly relevant to our study’s objectives. We begin our investigation by articulating the following research questions:

- RQ1: What factors have been found to predict individuals’ belief in fake news and their capacity to discern between false and real news?

- RQ2: What interventions have been found to reduce individuals’ belief in fake news and boost their capacity to discern between false and real news?

In the following sections, we specify our methodology and describe the findings using an inductively developed framework organized around groups of factors and dependent variables extracted from the data. That is, we approached the analysis without a preconceived categorization of the factors in mind. After assessing the studies included in the sample, we divided them into three groups based on whether the antecedents of belief in fake news that they focus on 1) reside within the individual, 2) are related to the features of the message, source, or information environment, or 3) represent interventions specifically designed to tackle the problem of online misinformation. We conclude with a discussion of the state of play in the research area under review, identify strengths and gaps in existing scholarship, and offer potential avenues for further advancing this body of knowledge.

Materials and methods

Our research pipeline was developed in accordance with PRISMA guidelines for systematic scoping reviews [ 24 ] and contains the following steps: a) development of a review protocol; b) identification of the relevant studies; c) extraction and charting of the data from the selected studies and elaboration of the emerging themes; d) collation and summarization of the results; e) assessment of the strengths and limitations of the body of literature and identification of potential paths for addressing the existing gaps and advancing theory.

Search strategy and protocol development