Marketing survey research best practices: evidence and recommendations from a review of JAMS articles

- Methodological Paper

- Published: 10 April 2017

- Volume 46, pages 92–108 (2018)

- John Hulland 1 ,

- Hans Baumgartner 2 &

- Keith Marion Smith 3

Survey research methodology is widely used in marketing, and it is important for both the field and individual researchers to follow stringent guidelines to ensure that meaningful insights are attained. To assess the extent to which marketing researchers are utilizing best practices in designing, administering, and analyzing surveys, we review the prevalence of published empirical survey work during the 2006–2015 period in three top marketing journals, Journal of the Academy of Marketing Science (JAMS), Journal of Marketing (JM), and Journal of Marketing Research (JMR), and then conduct an in-depth analysis of 202 survey-based studies published in JAMS. We focus on key issues in two broad areas of survey research (issues related to the choice of the object of measurement and selection of raters, and issues related to the measurement of the constructs of interest), and we describe conceptual considerations related to each specific issue, review how marketing researchers have attended to these issues in their published work, and identify appropriate best practices.

In conducting their topical review of publications in JMR, Huber et al. (2014) show evidence that the incidence of survey work has declined, particularly as new editors more skeptical of the survey method have emerged. They conclude (p. 88), based on the results of their correspondence analysis, that survey research is more of a peripheral than a core topic in marketing. This perspective seems to be more prevalent in JMR than in JM and JAMS, as we note above.

A copy of the coding scheme used is available from the first author.

Several studies used more than one mode.

Traditionally, commercial researchers used phone as their primary collection mode. Today, 60% of commercial studies are conducted online (CASRO 2015), growing at a rate of roughly 8% per year.

Although the two categories are not necessarily mutually exclusive, the overlap was small ( n = 4).

This is close to the number of studies in which an explicit sampling frame was employed, which makes sense (i.e., one would not expect a check for non-response bias when a convenience sample is used).

It is interesting to note that Cote and Buckley examined the extent of CMV present in papers published across a variety of disciplines, and found that CMV was lowest for marketing (16%) and highest for the field of education (> 30%). This does not mean, however, that marketers do a consistently good job of accounting for CMV.

In practice, these items need to be conceptually related yet empirically distinct from one another. Using minor variations of the same basic item just to have multiple items does not result in the advantages described here.

In general, the use of PLS (which is usually employed when the measurement model is formative or mixed) was uncommon in our review, so it appears that most studies focused on using reflective measures.

Most of the studies discussing discriminant validity used the approach proposed by Fornell and Larcker (1981). A recent paper by Voorhees et al. (2016) suggests use of two approaches to determining discriminant validity: (1) the Fornell and Larcker test and (2) a new approach proposed by Henseler et al. (2015).
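
As a minimal sketch of the Fornell and Larcker comparison mentioned in this note, the following Python code checks whether each construct's average variance extracted (AVE) exceeds its squared correlation with every other construct. It assumes standardized loadings, so that AVE reduces to the mean squared loading. The function names, construct names, loadings, and correlations are hypothetical illustrations and are not taken from any study in our review.

```python
import numpy as np


def average_variance_extracted(loadings):
    """AVE for one construct, assuming standardized loadings (mean of squared loadings)."""
    lam = np.asarray(loadings, dtype=float)
    return float(np.mean(lam ** 2))


def fornell_larcker_ok(loadings_by_construct, construct_corr):
    """True if every construct's AVE exceeds its squared correlation with each other construct.

    loadings_by_construct: dict mapping construct name -> list of standardized loadings.
    construct_corr: square matrix of construct correlations, in the same order as the dict.
    """
    names = list(loadings_by_construct)
    ave = {n: average_variance_extracted(loadings_by_construct[n]) for n in names}
    corr = np.asarray(construct_corr, dtype=float)
    for i, name in enumerate(names):
        for j in range(len(names)):
            if i != j and corr[i, j] ** 2 >= ave[name]:
                return False
    return True


# Hypothetical example values (assumptions for illustration only).
loadings = {"trust": [0.8, 0.7, 0.9], "commitment": [0.6, 0.7, 0.65]}
phi_moderate = [[1.0, 0.5], [0.5, 1.0]]
phi_high = [[1.0, 0.7], [0.7, 1.0]]

print(fornell_larcker_ok(loadings, phi_moderate))
print(fornell_larcker_ok(loadings, phi_high))
```

With these illustrative numbers, the moderate correlation passes the comparison, while the higher correlation fails it because its square exceeds the AVE of the weaker construct.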

This solution is not a universal panacea. For example, Kammeyer-Mueller et al. (2010) show using simulated data that under some conditions using distinct data sources can distort estimation. Their point, however, is that the researcher must think carefully about this issue and resist using easy one-size-fits-all solutions.

Podsakoff et al. (2003) also mention two other techniques (the correlated uniqueness model and the direct product model) but do not recommend their use. Only very limited use of either technique has been made in marketing, so we do not discuss them further in this paper.

These techniques are described more extensively in Podsakoff et al. (2003), and contrasted to one another. Figure 1 (p. 898) and Table 4 (p. 891) in their paper are particularly helpful in understanding the differences across approaches.

It is unclear why the procedure is called the Harman test, because Harman never proposed the test and it is unlikely that he would be pleased to have his name associated with it. Greene and Organ (1973) are sometimes cited as an early application of the Harman test (they specifically mention “Harman’s test of the single-factor model,” p. 99), but they in turn refer to an article by Brewer et al. (1970), in which Harman’s one-factor test is mentioned. Brewer et al. (1970) argued that before testing the partial correlation between two variables controlling for a third variable, researchers should test whether a single-factor model can account for the correlations between the three variables, and they mentioned that one can use “a simple algebraic solution for extraction of a single factor (Harman 1960: 122).” If measurement error is present, three measures of the same underlying factor will not be perfectly correlated, and if a single-factor model is consistent with the data, there is no need to consider a multi-factor model (which is implied by the use of partial correlations). It is clear that the article by Brewer et al. does not say anything about systematic method variance, and although Greene and Organ talk about an “artifact due to measurement error” (p. 99), they do not specifically mention systematic measurement error. Schriesheim (1979), another early application of Harman’s test, describes a factor analysis of 14 variables, citing Harman as a general factor-analytic reference, and concludes, “no general factor was apparent, suggesting a lack of substantial method variance to confound the interpretation of results” (p. 350). It appears that Schriesheim was the first to conflate Harman and testing for common method variance, although Harman was only cited as background for deciding how many factors to extract. Several years later, Podsakoff and Organ (1986) described Harman’s one-factor test as a post-hoc method to check for the presence of common method variance (pp. 536–537), although they also mention “some problems inherent in its use” (p. 536). In sum, it appears that starting with Schriesheim, the one-factor test was interpreted as a check for the presence of common method variance, although labeling the test Harman’s one-factor test seems entirely unjustified.

Ahearne, M., Haumann, T., Kraus, F., & Wieseke, J. (2013). It’s a matter of congruence: How interpersonal identification between sales managers and salespersons shapes sales success. Journal of the Academy of Marketing Science, 41 (6), 625–648.

Armstrong, J. S., & Overton, T. S. (1977). Estimating nonresponse bias in mail surveys. Journal of Marketing Research, 14 (3), 396–402.

Arnold, T. J., Fang, E. E., & Palmatier, R. W. (2011). The effects of customer acquisition and retention orientations on a firm’s radical and incremental innovation performance. Journal of the Academy of Marketing Science, 39 (2), 234–251.

Bagozzi, R. P., & Yi, Y. (1990). Assessing method variance in Multitrait-Multimethod matrices: The case of self-reported affect and perceptions at work. Journal of Applied Psychology, 75 (5), 547–560.

Baker, R., Blumberg, S. J., Brick, J. M., Couper, M. P., Courtright, M., Dennis, J. M., & Kennedy, C. (2010). Research synthesis AAPOR report on online panels. Public Opinion Quarterly, 74 (4), 711–781.

Baker, T. L., Rapp, A., Meyer, T., & Mullins, R. (2014). The role of Brand Communications on front line service employee beliefs, behaviors, and performance. Journal of the Academy of Marketing Science, 42 (6), 642–657.

Baumgartner, H., & Steenkamp, J. B. E. (2001). Response styles in marketing research: A cross-national investigation. Journal of Marketing Research, 38 (2), 143–156.

Baumgartner, H., & Weijters, B. (2017). Measurement models for marketing constructs. In B. Wierenga & R. van der Lans (Eds.), Springer Handbook of marketing decision models . New York: Springer.

Bell, S. J., Mengüç, B., & Widing II, R. E. (2010). Salesperson learning, Organizational learning, and retail store performance. Journal of the Academy of Marketing Science, 38 (2), 187–201.

Bergkvist, L., & Rossiter, J. R. (2007). The predictive validity of multiple-item versus single-item measures of the same constructs. Journal of Marketing Research, 44 (2), 175–184.

Berinsky, A. J. (2008). Survey non-response. In W. Donsbach & M. W. Traugott (Eds.), The SAGE Handbook of Public Opinion research (pp. 309–321). Thousand Oaks: SAGE Publications.

Brewer, M. B., Campbell, D. T., & Crano, W. D. (1970). Testing a single-factor model as an alternative to the misuse of partial correlations in hypothesis-testing research. Sociometry, 33 (1), 1–11.

Carmines, E. G., & Zeller, R. A. (1979). Reliability and validity assessment. Sage University Paper Series on Quantitative Applications in the Social Sciences, no. 07-017. Beverly Hills: Sage.

CASRO. (2015). Annual CASRO benchmarking financial survey.

Cote, J. A., & Buckley, M. R. (1987). Estimating trait, method, and error variance: Generalizing across 70 construct validation studies. Journal of Marketing Research, 24 (3), 315–318.

Curtin, R., Presser, S., & Singer, E. (2005). Changes in telephone survey nonresponse over the past quarter century. Public Opinion Quarterly, 69 (1), 87–98.

De Jong, A., De Ruyter, K., & Wetzels, M. (2006). Linking employee confidence to performance: A study of self-managing service teams. Journal of the Academy of Marketing Science, 34 (4), 576–587.

Diamantopoulos, A., Riefler, P., & Roth, K. P. (2008). Advancing formative measurement models. Journal of Business Research, 61 (12), 1203–1218.

Doty, D. H., & Glick, W. H. (1998). Common methods bias: Does common methods variance really bias results? Organizational Research Methods, 1 (4), 374–406.

Fornell, C., & Larcker, D. F. (1981). Evaluating structural equation models with unobservable variables and measurement error. Journal of Marketing Research, 18 (1), 39–50.

Goodman, J. K., Cryder, C. E., & Cheema, A. (2013). Data collection in a flat world: The strengths and weaknesses of Mechanical Turk samples. Journal of Behavioral Decision Making, 26 (3), 213–224.

Graesser, A. C., Wiemer-Hastings, K., Kreuz, R., Wiemer-Hastings, P., & Marquis, K. (2000). QUAID: A questionnaire evaluation aid for survey methodologists. Behavior Research Methods, Instruments, & Computers, 32 (2), 254–262.

Graesser, A. C., Cai, Z., Louwerse, M. M., & Daniel, F. (2006). Question understanding aid (QUAID) a web facility that tests question comprehensibility. Public Opinion Quarterly, 70 (1), 3–22.

Graham, J. W. (2009). Missing data analysis: Making it work in the real world. Annual Review of Psychology, 60 , 549–576.

Greene, C. N., & Organ, D. W. (1973). An evaluation of causal models linking the received role with job satisfaction. Administrative Science Quarterly , 95-103.

Grégoire, Y., & Fisher, R. J. (2008). Customer betrayal and retaliation: When your best customers become your worst enemies. Journal of the Academy of Marketing Science, 36 (2), 247–261.

Groves, R. M. (2006). Nonresponse rates and nonresponse bias in household surveys. Public Opinion Quarterly, 70 (5), 646–675.

Groves, R. M., & Couper, M. P. (2012). Nonresponse in household interview surveys . New York: Wiley.

Groves, R. M., Couper, M. P., Lepkowski, J. M., Singer, E., & Tourangeau, R. (2004). Survey methodology (Second ed.). New York: McGraw-Hill.

Harman, H. H. (1960). Modern factor analysis . Chicago: University of Chicago Press.

Heckman, J. J. (1979). Sample selection bias as a specification error. Econometrica, 47 , 153–161.

Henseler, J., Ringle, C. M., & Sarstedt, M. (2015). A new criterion for assessing discriminant validity in variance-based structural equation modeling. Journal of the Academy of Marketing Science, 43 (1), 115–135.

Hillygus, D. S., Jackson, N., & Young, M. (2014). Professional respondents in non-probability online panels. In M. Callegaro, R. Baker, J. Bethlehem, A. S. Goritz, J. A. Krosnick, & P. J. Lavrakas (Eds.), Online panel research: A data quality perspective (pp. 219–237). Chichester: John Wiley & Sons.

Hinkin, T. R. (1995). A review of scale development practices in the study of organizations. Journal of Management, 21 (5), 967–988.

Huber, J., Kamakura, W., & Mela, C. F. (2014). A topical history of JMR. Journal of Marketing Research, 51 (1), 84–91.

Hughes, D. E., Le Bon, J., & Rapp, A. (2013). Gaining and leveraging Customer-based competitive intelligence: The pivotal role of social capital and salesperson adaptive selling skills. Journal of the Academy of Marketing Science, 41 (1), 91–110.

Hulland, J. (1999). Use of partial least squares (PLS) in Strategic Management research: A review of four recent studies. Strategic Management Journal, 20 (2), 195–204.

Jap, S. D., & Anderson, E. (2004). Challenges and advances in marketing strategy field research. In C. Moorman & D. R. Lehmann (Eds.), Assessing marketing strategy performance (pp. 269–292). Cambridge: Marketing Science Institute.

Jarvis, C. B., MacKenzie, S. B., & Podsakoff, P. M. (2003). A critical review of construct indicators and measurement model misspecification in marketing and consumer research. Journal of Consumer Research, 30 (2), 199–218.

Kamakura, W. A. (2001). From the Editor. Journal of Marketing Research, 38 , 1–2.

Kammeyer-Mueller, J., Steel, P. D., & Rubenstein, A. (2010). The other side of method bias: The perils of distinct source research designs. Multivariate Behavioral Research, 45 (2), 294–321.

Kemery, E. R., & Dunlap, W. P. (1986). Partialling factor scores does not control method variance: A reply to Podsakoff and Todor. Journal of Management, 12 (4), 525–530.

Lance, C. E., Dawson, B., Birkelbach, D., & Hoffman, B. J. (2010). Method effects, measurement error, and substantive conclusions. Organizational Research Methods, 13 (3), 435–455.

Lenzner, T. (2012). Effects of survey question comprehensibility on response quality. Field Methods, 24 (4), 409–428.

Lenzner, T., Kaczmirek, L., & Lenzner, A. (2010). Cognitive burden of survey questions and response times: A psycholinguistic experiment. Applied Cognitive Psychology, 24 (7), 1003–1020.

Lenzner, T., Kaczmirek, L., & Galesic, M. (2011). Seeing through the eyes of the respondent: An eye-tracking study on survey question comprehension. International Journal of Public Opinion Research, 23 (3), 361–373.

Lindell, M. K., & Whitney, D. J. (2001). Accounting for common method variance in cross-sectional research designs. Journal of Applied Psychology, 86 (1), 114–121.

Lohr, S. (1999). Sampling: Design and analysis . Pacific Grove: Duxbury Press.

MacKenzie, S. B., Podsakoff, P. M., & Jarvis, C. B. (2005). The problem of measurement model misspecification in Behavioral and Organizational research and some recommended solutions. Journal of Applied Psychology, 90 (4), 710.

MacKenzie, S. B., Podsakoff, P. M., & Podsakoff, N. P. (2011). Construct measurement and validation procedures in MIS and Behavioral research: Integrating new and existing techniques. MIS Quarterly, 35 (2), 293–334.

Meade, A. W., & Craig, S. B. (2012). Identifying careless responses in survey data. Psychological Methods, 17 (3), 437–455.

Nunnally, J. (1978). Psychometric theory (Second ed.). New York: McGraw Hill.

Oppenheimer, D. M., Meyvis, T., & Davidenko, N. (2009). Instructional manipulation checks: Detecting satisficing to increase statistical power. Journal of Experimental Social Psychology, 45 (4), 867–872.

Ostroff, C., Kinicki, A. J., & Clark, M. A. (2002). Substantive and operational issues of response bias across levels of analysis: An example of climate-satisfaction relationships. Journal of Applied Psychology, 87 (2), 355–368.

Paolacci, G., Chandler, J., & Ipeirotis, P. G. (2010). Running experiments on Amazon Mechanical Turk. Judgment and Decision making, 5 (5), 411–419.

Phillips, L. W. (1981). Assessing measurement error in key informant reports: A methodological note on Organizational analysis in marketing. Journal of Marketing Research, 18 , 395–415.

Podsakoff, P. M., & Organ, D. W. (1986). Self-reports in Organizational research: Problems and prospects. Journal of Management, 12 (4), 531–544.

Podsakoff, P. M., MacKenzie, S. B., Lee, J. Y., & Podsakoff, N. P. (2003). Common method biases in Behavioral research: A critical review of the literature and recommended remedies. Journal of Applied Psychology, 88 (5), 879–903.

Podsakoff, P. M., MacKenzie, S. B., & Podsakoff, N. P. (2012). Sources of method bias in social Science research and recommendations on how to control it. Annual Review of Psychology, 63 , 539–569.

Richardson, H. A., Simmering, M. J., & Sturman, M. C. (2009). A tale of three perspectives: Examining post hoc statistical techniques for detection and correction of common method variance. Organizational Research Methods, 12 (4), 762–800.

Rindfleisch, A., & Antia, K. D. (2012). Survey research in B2B marketing: Current challenges and emerging opportunities. In G. L. Lilien & R. Grewal (Eds.), Handbook of Business-to-Business marketing (pp. 699–730). Northampton: Edward Elgar.

Rindfleisch, A., Malter, A. J., Ganesan, S., & Moorman, C. (2008). Cross-sectional versus longitudinal survey research: Concepts, findings, and guidelines. Journal of Marketing Research, 45 (3), 261–279.

Rossiter, J. R. (2002). The C-OAR-SE procedure for scale development in marketing. International Journal of Research in Marketing, 19 (4), 305–335.

Schaller, T. K., Patil, A., & Malhotra, N. K. (2015). Alternative techniques for assessing common method variance: An analysis of the theory of planned behavior research. Organizational Research Methods, 18 (2), 177–206.

Schriesheim, C. A. (1979). The similarity of individual directed and group directed leader behavior descriptions. Academy of Management Journal, 22 (2), 345–355.

Schuman, H., & Presser, S. (1981). Questions and answers in attitude surveys. New York: Academic.

Schwarz, N., Groves, R., & Schuman, H. (1998). Survey methods. In D. Gilbert, S. Fiske, & G. Lindzey (Eds.), Handbook of social psychology (Vol. 1, 4th ed., pp. 143–179). New York: McGraw Hill.

Simmering, M. J., Fuller, C. M., Richardson, H. A., Ocal, Y., & Atinc, G. M. (2015). Marker variable choice, reporting, and interpretation in the detection of common method variance: A review and demonstration. Organizational Research Methods, 18 (3), 473–511.

Song, M., Di Benedetto, C. A., & Nason, R. W. (2007). Capabilities and financial performance: The moderating effect of Strategic type. Journal of the Academy of Marketing Science, 35 (1), 18–34.

Stock, R. M., & Zacharias, N. A. (2011). Patterns and performance outcomes of innovation orientation. Journal of the Academy of Marketing Science, 39 (6), 870–888.

Sudman, S., Bradburn, N. M., & Schwarz, N. (1996). Thinking about answers: The application of cognitive processes to survey methodology . San Francisco: Jossey-Bass.

Summers, J. O. (2001). Guidelines for conducting research and publishing in marketing: From conceptualization through the review process. Journal of the Academy of Marketing Science, 29 (4), 405–415.

The American Association for Public Opinion Research. (2016). Standard definitions: Final dispositions of case codes and outcome rates for surveys (9th ed.) AAPOR.

Tourangeau, R., Rips, L. J., & Rasinski, K. (2000). The psychology of survey response . Cambridge: Cambridge University Press.

Voorhees, C. M., Brady, M. K., Calantone, R., & Ramirez, E. (2016). Discriminant validity testing in marketing: An analysis, causes for concern, and proposed remedies. Journal of the Academy of Marketing Science, 44 (1), 119–134.

Wall, T. D., Michie, J., Patterson, M., Wood, S. J., Sheehan, M., Clegg, C. W., & West, M. (2004). On the validity of subjective measures of company performance. Personnel Psychology, 57 (1), 95–118.

Wei, Y. S., Samiee, S., & Lee, R. P. (2014). The influence of organic Organizational cultures, market responsiveness, and product strategy on firm performance in an emerging market. Journal of the Academy of Marketing Science, 42 (1), 49–70.

Weijters, B., Baumgartner, H., & Schillewaert, N. (2013). Reversed item bias: An integrative model. Psychological Methods, 18 (3), 320–334.

Weisberg, H. F. (2005). The total survey error approach: A guide to the new science of survey research. Chicago: University of Chicago Press.

Wells, W. D. (1993). Discovery-oriented consumer research. Journal of Consumer Research, 19 (4), 489–504.

Williams, L. J., Hartman, N., & Cavazotte, F. (2010). Method variance and marker variables: A review and comprehensive CFA marker technique. Organizational Research Methods, 13 (3), 477–514.

Winship, C., & Mare, R. D. (1992). Models for sample selection bias. Annual Review of Sociology, 18 (1), 327–350.

Wittink, D. R. (2004). Journal of marketing research: 2 Ps. Journal of Marketing Research, 41 (1), 1–6.

Zinkhan, G. M. (2006). From the Editor: Research traditions and patterns in marketing scholarship. Journal of the Academy of Marketing Science, 34 , 281–283.

Acknowledgements

The constructive comments of the Editor-in-Chief, Area Editor, and three reviewers are gratefully acknowledged.

Author information

Authors and affiliations.

Terry College of Business, University of Georgia, 104 Brooks Hall, Athens, GA, 30602, USA

John Hulland

Smeal College of Business, Penn State University, State College, PA, USA

Hans Baumgartner

D’Amore-McKim School of Business, Northeastern University, Boston, MA, USA

Keith Marion Smith

Corresponding author

Correspondence to John Hulland .

Additional information

Aric Rindfleisch served as Guest Editor for this article.

Putting the Harman test to rest

A moment’s reflection will convince most researchers that the following two assumptions about method variance are entirely unrealistic: (1) most of the variation in ratings made in response to items meant to measure substantive constructs is due to method variance, and (2) a single source of method variance is responsible for all of the non-substantive variation in ratings. No empirical evidence exists to support these assumptions. Yet when it comes to testing for the presence of unwanted method variance in data, many researchers suspend disbelief and subscribe to these implausible assumptions. The reason, presumably, is that doing so conveniently satisfies two desiderata. First, testing for method variance has become a sine qua non in certain areas of research (e.g., managerial studies), so it is essential that the research contain some evidence that method variance was evaluated. Second, basing a test of method variance on procedures that are strongly biased against detecting method variance essentially guarantees that no evidence of method variance will ever be found in the data.

Although various procedures have been proposed to examine method variance, the most popular is the so-called Harman one-factor test, which makes both of the foregoing assumptions (see footnote 14). While the logic underlying the Harman test is convoluted, it seems to go as follows: If a single factor can account for the correlation among a set of measures, then this is prima facie evidence of common method variance. In contrast, if multiple factors are necessary to account for the correlations, then the data are free of common method variance. Why one factor indicates common method variance and not substantive variance (e.g., several substantive factors that lack discriminant validity), and why several factors indicate multiple substantive factors and not multiple sources of method variance, remain unexplained. Although it is true that “if a substantial amount of common method variance is present, either (a) a single factor will emerge from the factor analysis, or (b) one ‘general’ factor will account for the majority of the covariance in the independent and criterion variables” (Podsakoff and Organ 1986, p. 536), it is a logical fallacy (i.e., affirming the consequent) to argue that the existence of a single common factor (necessarily) implicates common method variance.

Apart from the inherent flaws of the test, several authors have pointed out various other difficulties associated with the Harman test (e.g., see Podsakoff et al. 2003). For example, it is not clear how much of the total variance a general factor has to account for before one can conclude that method variance is a problem. Furthermore, the likelihood that a general factor will account for a large portion of the variance decreases as the number of variables analyzed increases. Finally, the test only diagnoses potential problems with method variance but does not correct for them (e.g., Podsakoff and Organ 1986; Podsakoff et al. 2003). More sophisticated versions of the test have been proposed, which correct some of these shortcomings (e.g., if a confirmatory factor analysis is used, explicit tests of the tenability of a one-factor model are available), but the faulty logic of the test cannot be remedied.
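
The dependence of the general factor's variance share on the number of variables can be illustrated with a small numerical sketch. The Python code below builds the population correlation matrix implied by several uncorrelated substantive traits plus one method factor that loads on every item, and reports the share of total variance captured by the first unrotated principal component (used here as a stand-in for the "general factor"). The loadings of 0.7 and 0.4, the three items per trait, the orthogonal traits, and all function names are assumptions chosen for illustration.

```python
import numpy as np


def implied_correlations(n_traits, items_per_trait, trait_loading, method_loading):
    """Population item correlations for orthogonal traits plus one common method factor."""
    p = n_traits * items_per_trait
    # 1 where two items measure the same trait, 0 otherwise.
    same_trait = np.kron(np.eye(n_traits), np.ones((items_per_trait, items_per_trait)))
    # Same-trait items share trait and method variance; all other pairs share method variance only.
    corr = trait_loading ** 2 * same_trait + method_loading ** 2 * np.ones((p, p))
    np.fill_diagonal(corr, 1.0)  # standardized items
    return corr


def first_factor_share(corr):
    """Share of total variance captured by the first unrotated principal component."""
    eigenvalues = np.linalg.eigvalsh(corr)  # returned in ascending order
    return float(eigenvalues[-1] / corr.shape[0])


# Method variance is held fixed at 0.4**2 = 16% of every item's variance (an assumption).
shares = {
    k: first_factor_share(implied_correlations(k, 3, trait_loading=0.7, method_loading=0.4))
    for k in (2, 4, 8)
}
for n_traits, share in shares.items():
    print(f"{n_traits} traits, {3 * n_traits} items: first-factor share = {share:.3f}")
```

Under these assumptions the method factor contributes the same amount to every item in all three scenarios, yet the first-factor share falls as items are added and never reaches one half, so a "majority of variance" rule would report no problem in any of them.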

In fact, the most misleading application of the Harman test occurs when the variance accounted for by a general factor is partialled from the observed variables. Since it is likely that the general factor contains not only method variance but also substantive variance, this means that partialling will not only remove common method variance but also substantive variance. Although researchers will most often argue that common method variance is not a problem since partialling a general factor does not materially affect the results, this conclusion is also misleading, because the test is usually conducted in such a way that the desired result is favored. For example, in most cases all loadings on the method factor are restricted to be equal, which makes the questionable assumption that the presumed method factor influences all observed variables equally, even though this assumption is not imposed for the trait loadings.
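
A hedged numerical sketch may help show why partialling a general factor can remove substantive variance. The Python code below assumes two positively correlated traits, three items each, and no method variance at all; it then removes the first unrotated principal component from the population correlation matrix and compares the covariance between the two item composites before and after. The loading of 0.7, the trait correlation of 0.5, the use of a principal component in place of a fitted factor, and the function names are all illustrative assumptions.

```python
import numpy as np


def two_trait_correlations(items_per_trait, loading, trait_corr):
    """Population item correlations for two correlated traits and no method factor."""
    m = items_per_trait
    within = loading ** 2 * np.ones((m, m))
    between = loading ** 2 * trait_corr * np.ones((m, m))
    corr = np.block([[within, between], [between, within]])
    np.fill_diagonal(corr, 1.0)  # standardized items
    return corr


def partial_out_first_component(corr):
    """Residual matrix after removing the first unrotated principal component."""
    eigenvalues, eigenvectors = np.linalg.eigh(corr)  # ascending eigenvalues
    v = eigenvectors[:, -1]
    return corr - eigenvalues[-1] * np.outer(v, v)


def composite_covariance(matrix, items_per_trait):
    """Covariance between the unit-weighted sums of the two item sets."""
    m = items_per_trait
    return float(matrix[:m, m:].sum())


corr = two_trait_correlations(items_per_trait=3, loading=0.7, trait_corr=0.5)
residual = partial_out_first_component(corr)

before = composite_covariance(corr, 3)
after = composite_covariance(residual, 3)
print(f"composite covariance before partialling: {before:.4f}")
print(f"composite covariance after partialling:  {after:.4f}")
```

In this constructed case there is no method variance to remove, so everything the general component takes out is substantive, and the positive relationship between the two composites turns negative after partialling.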

In summary, the Harman test is entirely non-diagnostic about the presence of common method variance in data. Researchers should stop going through the motions of conducting a Harman test and pretending that they are performing a meaningful investigation of systematic errors of measurement.

About this article

Hulland, J., Baumgartner, H. & Smith, K.M. Marketing survey research best practices: evidence and recommendations from a review of JAMS articles. J. of the Acad. Mark. Sci. 46, 92–108 (2018). https://doi.org/10.1007/s11747-017-0532-y

Received : 19 August 2016

Accepted : 29 March 2017

Published : 10 April 2017

Issue Date : January 2018

DOI : https://doi.org/10.1007/s11747-017-0532-y

- Survey research

- Best practices

- Literature review

- Survey error

- Common method variance

- Non-response error

- Find a journal

- Publish with us

- Track your research

IMAGES

VIDEO

COMMENTS

Speci cally, new technology (1) sup-. provides new types of data that enable new analytic methods, (3) creates marketing innovations, and (4) requires new strate-gic marketing frameworks. It is important to keep in mind that human representatives of the rm with machine agents, facilitat-. and Cian 2022).

Explore the latest full-text research PDFs, articles, conference papers, preprints and more on MARKETING RESEARCH. Find methods information, sources, references or conduct a literature review on ...

Abstract. Marketing strategy is a construct that lies at the conceptual heart of the field of strategic marketing and is central to the practice of marketing. It is also the area within which many ...

Marketing innovation: a systematic review. June 2020. Journal of Marketing Management 36 (5):1-31. DOI: 10.1080/0267257X.2020.1774631. Authors: Sharon Purchase. University of Western Australia ...

specific objectives: (a) to develop a framework through which to assess the current state of. research conducted within marketing strategy; (b) to illuminate and illustrate the "state of. knowledge" in core sub-domains of marketing strategy development and execution; and (c), to.

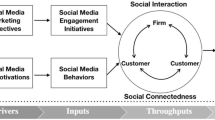

1 INTRODUCTION. The exponential growth of social media during the last decade has drastically changed the dynamics of firm-customer interactions and transformed the marketing environment in many profound ways.1 For example, marketing communications are shifting from one to many to one to one, as customers are changing from being passive observers to being proactive collaborators, enabled by ...

Developing (D3) Market Environments; Managing Business and Innovation in Emerging Markets; and Generalizations in Marketing: Systematic Reviews and Meta Analyses.1 These efforts primarily sought to expand our research scope to include substantive or managerially impactful papers from different domains of the marketing discipline and geographi-

6. Discuss the considerations involved in selecting marketing scales. 7. Explain ways researchers can ensure the reliability and validity of scales. Introduction . Marketing scales are used extensively by marketing researchers to measure a wide array of beliefs, attitudes, and behaviors.

Influencer marketing is a modern tactic used by brands to enhance their visibility to their target audience by using the services of influential people. The objective of this ... the 2010 Pew Research report, the millennial is defined as having been born between 1977 and 1992 (Norén, L. 2011). The reviewers of the millennial generation have a ...

Journal of Marketing Research Search the journal. JMR publishes articles representing the entire spectrum of research in marketing, ranging from analytical models of marketing phenomena to descriptive and case studies. Journal information. All Issues 2020s 2020 (Vol. 57) No. 6 DECEMBER 2020 pp. 985-1168 ...

Brief History of Marketing Research 10 The Marketing Research Industry Today 11 Conducting Research In-House Versus Outsourcing 11 Full-Service and Boutique Market Research Firms 14 Sample Aggregators 14 Emerging Trends in Marketing Research 15 Telecommunications Technology 16 Economics 17 Competition 17 Overview of the Text 18 Global Concerns ...

This research aims to. study in depth the factors that affect consumers' ability to market products online and to then. develop and focus on the most productive measures of marketing so that ...

According to the dictionary, the word 'research' means to search or investigate exhaustively or in detail. The thesaurus gives as a synonym for 'research' the word 'inquiry', which means the act of seeking truth, information or knowledge. So market research can be defined as a detailed search for the truth.

While not by a large margin, research on marketing strategy (as delineated in Fig. 1) comprises the smallest number (less than 6% of all published papers) of the different types of strategic marketing papers coded in our review across the six journals we examine (vs. Tactics, Internal/External Environment, Inputs, and Outputs). However, we also ...

We present an integrative review of existing marketing research on mobile apps, clarifying and expanding what is known around how apps shape customer experiences and value across iterative customer journeys, leading to the attainment of competitive advantage, via apps (in instances of apps attached to an existing brand) and for apps (when the app is the brand). To synthetize relevant knowledge ...

Despite particular research conducted on the issues related to digital marketing and marketing analytics, additional attention is needed to study the revolution and potentially disruptive nature of these domains (Petrescu and Krishen 2021, 2022).Considering the substantial impact of digital marketing and marketing analytics in the current competitive and demanding business landscape, the ...

Survey research methodology is widely used in marketing, and it is important for both the field and individual researchers to follow stringent guidelines to ensure that meaningful insights are attained. To assess the extent to which marketing researchers are utilizing best practices in designing, administering, and analyzing surveys, we review the prevalence of published empirical survey work ...