

- Published: 14 December 2022

Advancing ethics review practices in AI research

- Madhulika Srikumar ORCID: orcid.org/0000-0002-6776-4684 1 ,

- Rebecca Finlay 1 ,

- Grace Abuhamad 2 ,

- Carolyn Ashurst 3 ,

- Rosie Campbell 4 ,

- Emily Campbell-Ratcliffe 5 ,

- Hudson Hongo 1 ,

- Sara R. Jordan 6 ,

- Joseph Lindley ORCID: orcid.org/0000-0002-5527-3028 7 ,

- Aviv Ovadya ORCID: orcid.org/0000-0002-8766-0137 8 &

- Joelle Pineau ORCID: orcid.org/0000-0003-0747-7250 9 , 10

Nature Machine Intelligence volume 4, pages 1061–1064 (2022)


A Publisher Correction to this article was published on 11 January 2023

This article has been updated

The implementation of ethics review processes is an important first step for anticipating and mitigating the potential harms of AI research. Its long-term success, however, requires a coordinated community effort to support experimentation with different ethics review processes, to study their effects, and to provide opportunities for diverse voices from the community to share insights and foster norms.


As artificial intelligence (AI) and machine learning (ML) technologies continue to advance, awareness has grown of the potential negative consequences of AI and ML research for society. Anticipating and mitigating these consequences can be accomplished only with the help of the leading experts on this work: researchers themselves.

Several leading AI and ML organizations, conferences and journals have therefore started to implement governance mechanisms that require researchers to confront directly the risks related to their work, which can range from malicious use to unintended harms. Some have initiated new ethics review processes, integrated within peer review, that primarily prompt reflection on the potential risks and societal effects of research after it has been conducted (Box 1 ). This is distinct from responsibilities that researchers undertake earlier in the research process, such as protecting the welfare of human participants, which are overseen by bodies such as institutional review boards (IRBs).

Box 1 Current ethics review practices

Current ethics review practices can be thought of as a sliding scale, varying in how submitting authors are required to conduct an ethical analysis and document it in their submissions. Most conferences and journals have yet to initiate ethics review.

Key examples of different types of ethics review process are outlined below.

Impact statement

NeurIPS 2020 broader impact statements: all authors were required to include a statement of the potential broader impact of their work, such as its ethical aspects and future societal consequences, including both positive and negative effects. Organizers also specified additional evaluation criteria for paper reviewers to flag submissions with potential ethical issues.

Other examples include the NAACL 2021 and EMNLP 2021 ethical considerations sections, which encourage authors and reviewers to consider ethical questions in submitted papers.

Nature Machine Intelligence asks authors for ethical and societal impact statements in papers that involve the identification or detection of humans or groups of humans, including behavioural and socio-economic data.

NeurIPS 2021 paper checklist: a checklist prompting authors to reflect, during the paper-writing process, on potential negative societal effects of their work (as well as other criteria). Authors of accepted papers were encouraged to include the checklist as an appendix. Reviewers could flag papers that required additional ethics review by the appointed ethics committee.

Other examples include the ACL Rolling Review (ARR) Responsible NLP Research checklist, which is designed to encourage best practices for responsible research.

Code of ethics or guidelines

International Conference on Learning Representations (ICLR) code of ethics: ICLR required authors to review and acknowledge the conference’s code of ethics during the submission process. Authors were not expected to include a discussion of ethical aspects in their submissions unless necessary. Reviewers were encouraged to flag papers that might violate the code of ethics.

Other examples include the ACM Code of Ethics and Professional Conduct, which considers ethical principles but through the wider lens of professional conduct.

Although these initiatives are commendable, they have yet to be widely adopted, and they are being pursued largely without the benefit of community alignment. As researchers and practitioners from academia, industry and non-profit organizations in the field of AI and its governance, we believe that community coordination is needed to ensure that critical reflection is meaningfully integrated within AI research to mitigate its harmful downstream consequences. The pace of AI and ML research and its growing potential for misuse necessitate that this coordination happen today.

Writing in Nature Machine Intelligence , Prunkl et al. 1 argue that the AI research community needs to encourage public deliberation on the merits and future of impact statements and other self-governance mechanisms in conference submissions. We agree. Here, we build on this suggestion and provide three recommendations to enable effective community coordination as more ethics review approaches emerge across conferences and journals. We believe that a coordinated community effort will require: (1) more research on the effects of ethics review processes; (2) more experimentation with such processes themselves; and (3) the creation of venues in which diverse voices both within and beyond the AI or ML community can share insights and foster norms. Although many of the challenges we address have been highlighted previously 1 , 2 , 3 , 4 , 5 , 6 , this Comment takes a wider view, calling for collaboration between different conferences and journals and situating this conversation within more recent studies 7 , 8 , 9 , 10 , 11 and developments.

Developments in AI research ethics

In the past, many applied scientific communities have contended with the potential harmful societal effects of their research. The anthrax attacks of 2001, for example, catalysed the creation of the National Science Advisory Board for Biosecurity to prevent the misuse of biomedical research. Virology, in particular, has had long-running debates about the responsibility of individual researchers conducting gain-of-function research. Today, the field of AI research finds itself at a similar juncture 12 . Algorithmic systems are now being deployed for high-stakes applications such as law enforcement and automated decision-making, in which the tools have the potential to increase bias, injustice, misuse and other harms at scale. The recent adoption of ethics and impact statements and checklists at some AI conferences and journals signals a much-needed willingness to deal with these issues. However, these ethics review practices are still evolving and experimental in nature. The developments acknowledge gaps in existing, well-established governance mechanisms such as IRBs, which focus on risks to human participants rather than risks to society as a whole. This limited focus leaves outside their scope ethical issues such as the welfare of data workers and non-participants, and the implications of data generated by or about people 6 . We acknowledge that such ethical reflection, beyond IRB mechanisms, may also be relevant to other academic disciplines, particularly those in which large datasets created by or about people are increasingly common, but such a discussion is beyond the scope of this piece. The need to reflect on ethical concerns seems particularly pertinent within AI because of its relative infancy as a field, the rapid development of its capabilities and outputs, and its increasing effects on society.

In 2020, the NeurIPS ML conference required all papers to carry a ‘broader impact’ statement examining the ethical and societal effects of the research. The conference updated its approach in 2021, asking authors to complete a checklist and to document potential downstream consequences of their work. In the same year, the Partnership on AI released a white paper calling for the field to expand peer review criteria to consider the potential effects of AI research on society, including accidents, unintended consequences, inappropriate applications and malicious uses 3 . In an editorial citing the white paper, Nature Machine Intelligence announced that it would ask submissions to carry an ethical statement when the research involves the identification of individuals and related sensitive data 13 , recognizing that mitigating the downstream consequences of AI research cannot be completely disentangled from how the research itself is conducted. In another recent development, Stanford University’s Ethics and Society Review (ESR) requires AI researchers who apply for funding to identify whether their research poses any risks to society and to explain how those risks will be mitigated through research design 14 .

Other developments include the rising popularity of interdisciplinary conferences examining the effects of AI, such as the ACM Conference on Fairness, Accountability, and Transparency (FAccT), and the emergence of ethical codes of conduct for professional associations in computer science, such as the Association for Computing Machinery (ACM). Other actors have focused on upstream initiatives such as the integration of ethics reflection into all levels of the computer science curriculum.

Reactions from the AI research community to the introduction of ethics review practices include fears that these processes could restrict open scientific inquiry 3 . Scholars also note the inherent difficulty of anticipating the consequences of research 1 , with some AI researchers expressing concern that they do not have the expertise to perform such evaluations 7 . Other challenges include concerns about the lack of transparency in review practices at corporate research labs (which increasingly contribute to the most highly cited papers at premier AI conferences such as NeurIPS and ICML 9 ) as well as academic research culture and incentives supporting the ‘publish or perish’ mentality that may not allow time for ethical reflection.

With the emergence of these new attempts to acknowledge and articulate unique ethical considerations in AI research and the resulting concerns from some researchers, the need for the AI research community to come together to experiment, share knowledge and establish shared best practices is all the more urgent. We recommend the following three steps.

Study community behaviour and share learnings

So far, few studies have explored the responses of ML researchers to the launch of experimental ethics review practices. To understand how behaviour is changing and how to align practice with intended effect, we need to study what is happening and share learnings iteratively to advance innovation. For example, in response to the NeurIPS 2020 requirement for broader impact statements, a paper found that most researchers surveyed spent fewer than two hours on the statement 7 , perhaps writing it retroactively towards the end of their research, which makes it difficult to know whether this reflection influenced or shifted research directions. Surveyed researchers also expressed scepticism about the mandated reflection on societal impacts 7 . An analysis of preprints found that researchers assessed impact through the narrow lens of technical contributions (that is, describing how their work contributes to the research space rather than how it may affect society), thereby overlooking potential effects on vulnerable stakeholders 8 . A qualitative analysis of a larger sample 10 and a quantitative analysis of all submitted papers 11 found that engagement was highly variable and that researchers tended to favour discussing positive effects over negative effects.

We need to understand what works. These findings, all drawn from studies examining the implementation of ethics review at NeurIPS 2020, point to a pressing need to review actual versus intended community behaviour more thoroughly and consistently to evaluate the effectiveness of ethics review practices. We recognize that other fields have considered ethics in research in different ways. To get started, we propose the following approach, building on and expanding the analysis of Prunkl et al. 1 .

First, clear articulation of the purposes behind impact statements and other ethics review requirements is needed to evaluate efficacy and motivate future iterations by the community. Publication venues that organize ethics review must communicate expectations of this process comprehensively both at the level of individual contribution and for the community at large. At the individual level, goals could include encouraging researchers to reflect on the anticipated effects on society. At the community level, goals could include creating a culture of shared responsibility among researchers and (in the longer run) identifying and mitigating harms.

Second, because the exercise of anticipating downstream effects can be abstract and risks being reduced to a box-ticking endeavour, we need more data to ascertain whether these requirements effectively promote reflection. As in the studies above, conference organizers and journal editors must monitor community behaviour through surveys of researchers and reviewers, partner with information scientists to analyse the responses 15 , and share their findings with the larger community. Reviewing community attitudes more systematically can provide data on the process and effects of reflecting on harms for individual researchers and on the quality of the reflection that reviewers encounter, and can uncover systemic challenges to practising thoughtful ethical reflection. Work to better understand how AI researchers view their responsibility for the effects of their work in light of changing social contexts is also crucial.

Evaluating whether AI or ML researchers are more explicit about the downsides of their research in their papers is a preliminary metric for measuring change in community behaviour at large 2 . An analysis of the potential negative consequences of AI research can consider the types of application the research can make possible, the potential uses of those applications, and the societal effects they can cause 4 .

Building on the efforts at NeurIPS 16 and NAACL 17 , we can openly share our learnings as conference organizers and ethics committee members to gain a better understanding of what does and does not work.

Community behaviour in response to ethics review at the publication stage must also be studied to evaluate how structural and cultural forces throughout the research process can be reshaped towards more responsible research. The inclusion of diverse researchers and ethics reviewers, as well as people who face existing and potential harm, is a prerequisite to conduct research responsibly and improve our ability to anticipate harms.

Expand experimentation with ethics review

The low uptake of ethics review practices, and the lack of experimentation with such processes, limit our ability to evaluate the effectiveness of different approaches. Experimentation should not be confined to a few conferences that focus on particular subdomains of ML and computing research; it is especially needed in subdomains that envision real-world applications, such as in employment, policing and healthcare settings. For instance, NeurIPS, which is largely considered a methods and theory conference, began an ethics review process in 2020, whereas conferences closer to applications, such as top-tier conferences in computer vision, have yet to implement such practices.

Sustained experimentation across subfields of AI can help us to study actual community behaviour, including differences in researcher attitudes and the unique opportunities and challenges that come with each domain. In the absence of accepted best practices, implementing ethics review processes will require conference organizers and journal editors to act under uncertainty. For that reason, we recognize that it may be easier for publication venues to begin their ethics review process by making it voluntary for authors. This can provide researchers and reviewers with the opportunity to become familiar with ethical and societal reflection, remove incentives for researchers to ‘game’ the process, and help the organizers and wider community to get closer to identifying how they can best facilitate the reflection process.

Create venues for debate, alignment and collective action

This work requires considerable cultural and institutional change that goes beyond the submission of ethical statements or checklists at conferences.

Ethical codes in scientific research have proven insufficient in the absence of community-wide norms and discussion 1 . Venues for open exchange can provide opportunities for researchers to share their experiences and challenges with ethical reflection. Such venues can be conducive to reflection on values as they evolve in AI or ML research, such as the topics chosen for research, how research is conducted, and which values best reflect societal needs.

The establishment of venues for dialogue where conference organizers and journal editors can regularly share experiences, monitor trends in attitudes, and exchange insights on actual community behaviour across domains, while considering the evolving research landscape and range of opinions, is crucial. These venues would bring together an international group of actors involved throughout the research process, from funders, research leaders, and publishers to interdisciplinary experts adopting a critical lens on AI impact, including social scientists, legal scholars, public interest advocates, and policymakers.

In addition, reflection and dialogue can play a powerful role in influencing the future trajectory of a technology. Historically, gatherings convened by scientists have had far-reaching effects, both setting the norms that guide research and creating practices and institutions to anticipate risks and inform downstream innovation. The Asilomar Conference on Recombinant DNA in 1975 and the Bermuda Meetings on genomic data sharing in the 1990s are instructive examples of scientists and funders, respectively, creating spaces for consensus-building 18 , 19 .

Proposing a global forum for gene editing, scholars Jasanoff and Hurlbut argued that such a venue should promote reflection on “what questions should be asked, whose views must be heard, what imbalances of power should be made visible, and what diversity of views exist globally” 20 . A forum for global deliberation on ethical approaches to AI or ML research will also need to do this.

By focusing on building the AI research field’s capacity to measure behavioural change, exchange insights, and act together, we can amplify emerging ethical review and oversight efforts. Doing this will require coordination across the entire research community and, accordingly, will come with challenges that conference organizers and others will need to consider in their funding strategies. That said, we believe that important incremental steps can be taken today towards realizing this change. Examples include hosting an annual workshop on ethics review at pre-eminent AI conferences, holding public panels on this subject 21 , hosting a workshop to review ethics statements 22 , and bringing conference organizers together 23 . Recent initiatives by AI research teams at companies to implement ethics review processes 24 , better understand societal impacts 25 and share learnings 26 , 27 also show how industry practitioners can have a positive effect. The AI community recognizes that more needs to be done to mitigate this technology’s potential harms. Recent developments in ethics review in AI research demonstrate that we must take action together.

Change history

11 January 2023

A Correction to this paper has been published: https://doi.org/10.1038/s42256-023-00608-6

References

1. Prunkl, C. E. A. et al. Nat. Mach. Intell. 3, 104–110 (2021).

2. Hecht, B. et al. Preprint at https://doi.org/10.48550/arXiv.2112.09544 (2021).

3. Partnership on AI. https://go.nature.com/3UUX0p3 (2021).

4. Ashurst, C. et al. https://go.nature.com/3gsQfvp (2020).

5. Hecht, B. https://go.nature.com/3AASZhf (2020).

6. Ashurst, C., Barocas, S., Campbell, R. & Raji, D. in FAccT ’22: 2022 ACM Conf. on Fairness, Accountability, and Transparency 2057–2068 (2022).

7. Abuhamad, G. et al. Preprint at https://arxiv.org/abs/2011.13032 (2020).

8. Boyarskaya, M. et al. Preprint at https://arxiv.org/abs/2011.13416 (2020).

9. Birhane, A. et al. in FAccT ’22: 2022 ACM Conf. on Fairness, Accountability, and Transparency 173–184 (2022).

10. Nanayakkara, P. et al. in AIES ’21: Proc. 2021 AAAI/ACM Conf. on AI, Ethics, and Society 795–806 (2021).

11. Ashurst, C., Hine, E., Sedille, P. & Carlier, A. in FAccT ’22: 2022 ACM Conf. on Fairness, Accountability, and Transparency 2047–2056 (2022).

12. National Academies of Sciences, Engineering, and Medicine. https://go.nature.com/3UTKOEJ (accessed 16 September 2022).

13. Nat. Mach. Intell. 3, 367 (2021).

14. Bernstein, M. S. et al. Proc. Natl Acad. Sci. USA 118, e2117261118 (2021).

15. Pineau, J. et al. J. Mach. Learn. Res. 22, 7459–7478 (2021).

16. Bengio, S. et al. Neural Information Processing Systems. https://go.nature.com/3tQxGEO (2021).

17. Bender, E. M. & Fort, K. https://go.nature.com/3TWnbua (2021).

18. Gregorowius, D., Biller-Andorno, N. & Deplazes-Zemp, A. EMBO Rep. 18, 355–358 (2017).

19. Jones, K. M., Ankeny, R. A. & Cook-Deegan, R. J. Hist. Biol. 51, 693–805 (2018).

20. Jasanoff, S. & Hurlbut, J. B. Nature 555, 435–437 (2018).

21. Partnership on AI. https://go.nature.com/3EpQwY4 (2021).

22. Sturdee, M. et al. in CHI Conf. Human Factors in Computing Systems Extended Abstracts (CHI ’21 Extended Abstracts); https://doi.org/10.1145/3411763.3441330 (2021).

23. Partnership on AI. https://go.nature.com/3AzdNFW (2022).

24. DeepMind. https://go.nature.com/3EQyUWT (2022).

25. Meta AI. https://go.nature.com/3i3PBVX (2022).

26. Munoz Ferrandis, C. OpenRAIL; https://huggingface.co/blog/open_rail (2022).

27. OpenAI. https://go.nature.com/3GyZPYk (2022).


Author information

Authors and affiliations

Partnership on AI, San Francisco, CA, USA

Madhulika Srikumar, Rebecca Finlay & Hudson Hongo

ServiceNow, Santa Clara, CA, USA

Grace Abuhamad

The Alan Turing Institute, London, UK

Carolyn Ashurst

OpenAI, San Francisco, CA, USA

Rosie Campbell

Centre for Data Ethics and Innovation, London, UK

Emily Campbell-Ratcliffe

Future of Privacy Forum, Washington, DC, USA

Sara R. Jordan

Design Research Works, Lancaster University, Lancaster, UK

Joseph Lindley

Belfer Center for Science and International Affairs, Harvard Kennedy School, Cambridge, MA, USA

Aviv Ovadya

Meta AI, Menlo Park, CA, USA

Joelle Pineau

McGill University, Montreal, Canada

Joelle Pineau

Corresponding author

Correspondence to Madhulika Srikumar.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Machine Intelligence thanks Carina Prunkl and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.


About this article

Cite this article

Srikumar, M., Finlay, R., Abuhamad, G. et al. Advancing ethics review practices in AI research. Nat Mach Intell 4, 1061–1064 (2022). https://doi.org/10.1038/s42256-022-00585-2

Published: 14 December 2022

Issue Date: December 2022

DOI: https://doi.org/10.1038/s42256-022-00585-2




Open Access

Peer-reviewed

Research Article

Research ethics review during the COVID-19 pandemic: An international study

- Fabio Salamanca-Buentello

Roles: Conceptualization, Formal analysis, Investigation, Methodology, Visualization, Writing – original draft

Affiliation: Lunenfeld-Tanenbaum Research Institute, Bridgepoint Collaboratory for Research and Innovation, Sinai Health, Toronto, Canada

Current address: Dalla Lana School of Public Health, University of Toronto, Toronto, Canada

- Rachel Katz

Roles: Conceptualization, Writing – review & editing

Affiliation: Institute for the History and Philosophy of Science and Technology, University of Toronto, Toronto, Canada

- Diego S. Silva

Roles: Conceptualization, Funding acquisition, Methodology, Validation, Writing – review & editing

Affiliation: School of Public Health, The University of Sydney, Sydney, Australia

- Ross E. G. Upshur

Roles: Conceptualization, Formal analysis, Funding acquisition, Investigation, Methodology, Supervision, Writing – review & editing

Affiliations: Lunenfeld-Tanenbaum Research Institute, Bridgepoint Collaboratory for Research and Innovation, Sinai Health, Toronto, Canada; Dalla Lana School of Public Health, University of Toronto, Toronto, Canada

- Maxwell J. Smith (corresponding author)

Roles: Conceptualization, Funding acquisition, Investigation, Methodology, Project administration, Resources, Supervision, Validation, Writing – review & editing

Affiliation: Faculty of Health Sciences, Western University, London, Canada

- Published: April 16, 2024

- https://doi.org/10.1371/journal.pone.0292512


Research ethics review committees (ERCs) worldwide faced daunting challenges during the COVID-19 pandemic. They needed to balance rapid turnaround with rigorous evaluation of high-risk research protocols amid considerable uncertainty. This study explored the experiences and performance of ERCs during the pandemic. We conducted an anonymous, cross-sectional, global online survey of chairs (or their delegates) of ERCs who were involved in the review of COVID-19-related research protocols after March 2020. The survey ran from October 2022 to February 2023 and consisted of 50 items, with opportunities for descriptive responses to open-ended questions. Two hundred and three participants [130 from high-income countries (HICs) and 73 from low- and middle-income countries (LMICs)] completed our survey. Respondents came from diverse entities and organizations in 48 countries (19 HICs and 29 LMICs) across all World Health Organization regions. Responses show that little of the increased global funding for COVID-19 research was allotted to the operation of ERCs. Few ERCs had pre-existing internal policies to address operation during public health emergencies, but almost half used existing guidelines. Most ERCs modified existing procedures or designed and implemented new ones but had not evaluated the success of these changes. Participants overwhelmingly endorsed permanently implementing several of them. Few ERCs added new members, but non-member experts were consulted; quorum was generally achieved. Collaboration among ERCs was infrequent, but reviews conducted by external ERCs were recognized and validated. Review volume increased during the pandemic, with COVID-19-related studies being prioritized. Most protocol reviews were reported as taking less than three weeks. One-third of respondents reported external pressure on their ERCs from different stakeholders to approve or reject specific COVID-19-related protocols.

ERC members faced significant challenges in keeping their committees functioning during the pandemic. Our findings can inform ERC approaches to future public health emergencies. To our knowledge, this is the first international, COVID-19-related study of its kind.

Citation: Salamanca-Buentello F, Katz R, Silva DS, Upshur REG, Smith MJ (2024) Research ethics review during the COVID-19 pandemic: An international study. PLoS ONE 19(4): e0292512. https://doi.org/10.1371/journal.pone.0292512

Editor: Collins Atta Poku, Kwame Nkrumah University of Science and Technology, GHANA

Received: September 5, 2023; Accepted: March 23, 2024; Published: April 16, 2024

Copyright: © 2024 Salamanca-Buentello et al. This is an open access article distributed under the terms of the Creative Commons Attribution License , which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Data Availability: All relevant data for this study are within the paper and its Supporting Information files. Additionally, the raw survey data are available from the figshare database (https://doi.org/10.6084/m9.figshare.24076704).

Funding: This study was funded by Canadian Institutes of Health Research grant #C150-2019-11 (https://cihr-irsc.gc.ca/e/193.html) awarded to MJS. The funder had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Competing interests: The authors have declared that no competing interests exist.

Introduction

The ethical review of research protocols during public health emergencies (PHEs) such as the COVID-19 pandemic is a daunting endeavour. Committees tasked with assessing the ethical acceptability of research projects, which we refer to as ethics review committees (ERCs) but which are also variably called research ethics boards, research ethics committees, ethics review boards, and institutional review boards, face the challenge of reviewing research protocols swiftly while maintaining a high degree of rigour, all under suboptimal conditions and uncertainty. ERCs must balance the pressure for rapid turnaround and flexibility against the need for intense scrutiny, given that new projects often propose innovative but high-risk diagnostic, therapeutic, or preventive approaches to address the PHE. This is especially challenging in countries with fragile health systems, poor infrastructure, and little experience conducting medical research, as well as in countries experiencing protracted emergencies [ 1 – 5 ].

Failure to ensure rigour and depth during rapid ethics reviews in public health emergencies may place research participants at risk [ 6 ]. In such challenging circumstances, ERCs must consider how interventions, study design, eligibility criteria, community engagement, and approaches to vulnerable populations impact scientific validity, participant autonomy, respect for persons, welfare, justice, and social value [ 2 , 7 – 9 ]. Additional demands on ERCs may include the ability to incorporate and respond swiftly to newly available knowledge, to provide monitoring and oversight of research, and to grapple with the impact of the PHE on those involved in the research process, such as research participants, investigators, and ERC members and staff [ 7 ].

Public health emergencies force ERCs to make reasonable adjustments and design innovative strategies to address the various components of research ethics review while still adhering to ethical principles [ 3 , 6 , 7 , 10 ]. Moreover, after a PHE, changes implemented to secure continued operations of ERCs must be evaluated to determine their success and whether they should be permanently put in place to improve the everyday functioning of the committees.

Given the challenges that ERCs worldwide faced during the COVID-19 pandemic, in this exploratory study we aimed to document their experiences adapting to this PHE. We were particularly interested in the availability of pandemic-specific support; the promptness of protocol review; the volume of protocols received; modifications to and innovations in operational procedures and policies, and the evaluation of their outcomes; the anticipated permanence of such changes beyond the pandemic; pressure on ERCs from different stakeholders; efforts to ensure quorum; changes to the composition of ERCs; and approaches to strengthening inter-ERC collaboration. To our knowledge, this is the first international, COVID-19-related study of its kind.

This international, cross-sectional, exploratory online survey was conducted by researchers from Western University, the University of Toronto, and the Lunenfeld–Tanenbaum Research Institute in Canada, and the University of Sydney in Australia, in collaboration with the World Health Organization’s COVID-19 Ethics and Governance Working Group.

Inclusion criteria

We used targeted purposive and criterion sampling to invite Chairs and members of ERCs who were actively involved in the ethics review of COVID-19 research protocols to participate in this study. To ensure the eligibility of participants, the first question of the survey asked respondents to confirm whether they had reviewed COVID-19-related research protocols during the pandemic. Participation was entirely voluntary. For the purposes of this study, we considered March 2020 to be the beginning of this PHE. We specifically targeted individuals from all WHO regions. Participants were assigned to one of two categories, high-income countries (HICs) or low- and middle-income countries (LMICs), according to their reported country of residence. To do this, we used the World Bank classification of countries ( https://datahelpdesk.worldbank.org/knowledgebase/articles/906519-world-bank-country-and-lending-groups ), which is based on gross national income per capita. We adopted this widely used categorization despite its limitations: it can hide power imbalances and it reduces important differences among countries to questions of economics [ 11 ].

Survey questionnaire

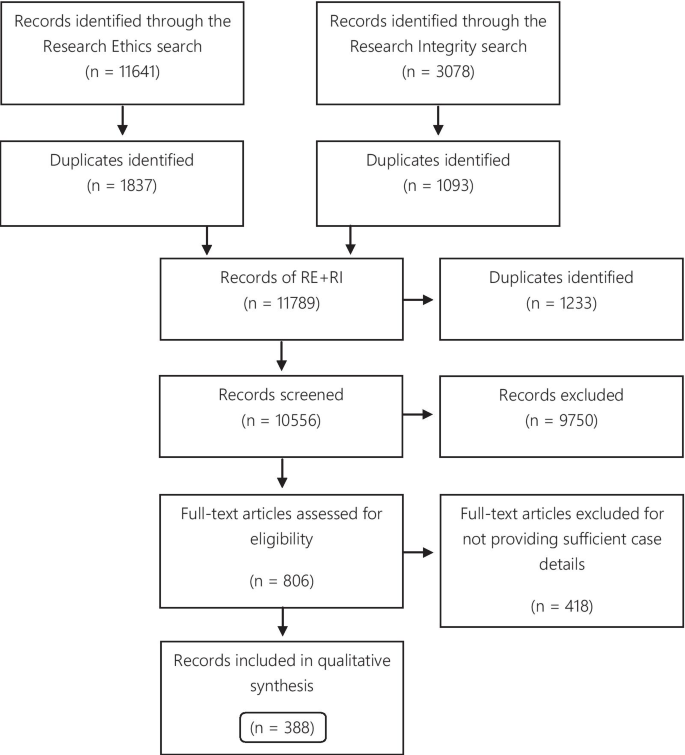

The complete questionnaire is available as S1 Appendix . The overall structure and flow of the survey questionnaire, which consisted of a main “trunk” of 37 items organized into 11 thematic categories, is shown in Fig 1 . As the figure shows, eight of these items branched into different survey flow elements depending on respondents’ answers; seven of these eight items branched into elements containing questions (six of these elements contained two questions each, and the seventh contained one). Thus, in total, the questionnaire, written in English, included 50 questions. We favoured closed-ended over open-ended questions, but we gave respondents the opportunity to provide additional comments for some items. We pilot-tested the online questionnaire with a small group of experts who met the inclusion criteria. This helped refine the wording of the questions and assess and improve the logistics of survey administration.
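The total of 50 questions can be reconciled with the questionnaire structure by simple arithmetic. The Python sketch below is a minimal check, assuming (as the reported total implies) that six branch elements held two questions each and the remaining one held a single question; the constant and function names are our own, not the authors'.

```python
# Reconstructing the questionnaire's total question count from its structure.
# Assumption: of the seven branch elements that contained questions, six had
# two questions each and the remaining one had a single question.
TRUNK_ITEMS = 37
BRANCHES_WITH_TWO_QUESTIONS = 6
BRANCHES_WITH_ONE_QUESTION = 1  # inferred so that the total matches 50


def total_questions(trunk: int, two_q: int, one_q: int) -> int:
    """Return the trunk items plus all questions located in branch elements."""
    return trunk + 2 * two_q + 1 * one_q


total = total_questions(
    TRUNK_ITEMS, BRANCHES_WITH_TWO_QUESTIONS, BRANCHES_WITH_ONE_QUESTION
)
print(total)  # 50
```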

https://doi.org/10.1371/journal.pone.0292512.g001

Data collection

The invitation to participate in the survey explained the nature and purpose of our study, the inclusion criteria used to select participants, a summary of the procedures involved, and the URL link to the survey. These invitations were initially distributed by email by the WHO’s COVID-19 Ethics and Governance Working Group through the email listserv of the 13th Global Summit of National Ethics Committees (an event that took place in September 2022). The Working Group identified additional potential participants among its extensive contact networks. We also circulated the invitation to experts identified by the research team. Invitations could also be forwarded to individuals designated by ERCs.

Our survey was active from October 11, 2022, to February 28, 2023. We used the Qualtrics Experience Management (XM) online platform to administer the questionnaire, which was open only to individuals who received the invitation with the link to the survey.

Data analysis

We analysed the findings of this exploratory study using descriptive statistics, stratifying the comparison by whether respondents were from HICs or LMICs. To facilitate examination of the results, we prepared tables showing the number and percentage of respondents from HICs and LMICs who answered each question in the survey. Qualitative data (descriptive responses to open-ended questions) were evaluated using thematic analysis and the constant comparative method.
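As a rough illustration of the stratified descriptive tables described above, the Python sketch below tallies the answers to a single question separately for HIC and LMIC respondents and converts them to within-stratum percentages. The function name, the (stratum, answer) input format, and the toy data are hypothetical; they are not taken from the study's dataset or analysis procedures.

```python
from collections import Counter, defaultdict

# Hypothetical sketch of a stratified descriptive table for one survey
# question: count each answer within the HIC and LMIC strata and express it
# as a percentage of that stratum's respondents. Respondents who skipped the
# question (answer None) are excluded from the denominator.


def stratified_table(responses):
    """responses: iterable of (stratum, answer) pairs, e.g. ("HIC", "Yes")."""
    counts = defaultdict(Counter)
    for stratum, answer in responses:
        if answer is None:  # skipped question: not counted
            continue
        counts[stratum][answer] += 1

    table = {}
    for stratum, answer_counts in counts.items():
        n = sum(answer_counts.values())  # respondents in this stratum who answered
        table[stratum] = {
            answer: (count, round(100 * count / n, 1))
            for answer, count in answer_counts.items()
        }
    return table


# Toy data invented for illustration only; these are not survey results.
toy = [
    ("HIC", "Yes"), ("HIC", "No"), ("HIC", "Yes"), ("HIC", None),
    ("LMIC", "No"), ("LMIC", "No"), ("LMIC", "Yes"), ("LMIC", "No"),
]
print(stratified_table(toy))
```

Because skipped answers are dropped before each denominator is computed, percentages refer only to respondents who answered that question. This mirrors the varying number of respondents per question in this survey, although the authors' exact handling of missing answers is not described.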

Research ethics approval

Our study received approval from Western University’s Non-Medical Research Ethics Board (Protocol ID 120455). Additionally, it was evaluated by the World Health Organization Research Ethics Review Committee (Protocol ID CERC.0181) and was exempted from further review. The use of the Qualtrics platform facilitated data collection and management while respecting the privacy and confidentiality of participants. Respondents indicated their consent to participate in our survey by selecting a button labelled “I consent” at the end of the letter of information and consent, which appeared on the first page of the questionnaire. Responses were anonymous to protect participants’ privacy and confidentiality and encourage the open sharing of experiences.

Reporting survey results

Unlike clinical trials and meta-analyses, surveys have no universally agreed-upon reporting standards. However, the EQUATOR (Enhancing the QUAlity and Transparency Of health Research) Network recently (2021) proposed a checklist for reporting survey studies [ 12 ]. EQUATOR has been responsible for the development of several such standards, including the Consolidated Standards of Reporting Trials (CONSORT) for randomized controlled trials; Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) for observational studies; and Preferred Reporting Items for Systematic Reviews and Meta-analyses (PRISMA) for systematic reviews and meta-analyses. Although this checklist is not a globally recognized official standard, it is useful, and we have ensured that our manuscript fulfills all of its reporting requirements.

Characterization of survey respondents

Two hundred and eighty-one individuals opened our survey. Of these, 250 answered the first question, which asked them to confirm whether they met our inclusion criteria. Forty-three of these individuals explicitly indicated that they did not meet our criteria. Thus, the initial number of eligible respondents was 207. As expected in a survey such as ours, in which participants could skip questions, the number of respondents per question varied, from a maximum of 207 to a minimum of 147.
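The respondent flow can be checked with simple arithmetic. The short Python sketch below uses only the counts reported in this paragraph; the variable names are illustrative.

```python
# Respondent flow reported in the text: only those who answered the screening
# question and did not rule themselves out were counted as eligible.
opened = 281
answered_screening = 250
self_reported_ineligible = 43

skipped_screening = opened - answered_screening            # never answered Q1
eligible = answered_screening - self_reported_ineligible   # initial eligible pool

print(skipped_screening, eligible)  # 31 207
```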

Of the 204 participants who indicated their sex/gender, 120 (58.8%) were female, 82 (40.2%) were male, one (0.5%) preferred to self-describe, and one (0.5%) preferred not to disclose this information ( Box 1 , Table a ). The proportion of females was higher in HICs (64.9%) than in LMICs (47.9%); thus, the distribution of respondents by sex/gender was more balanced in LMICs than in HICs. As shown in Box 1 , Table b , more than three quarters of respondents (77.9%) were 45 years old or older. This was true for both HICs and LMICs. The most common affiliation was with national bodies such as national ethics committees or national public health organizations, while more than a quarter of respondents participated in ERCs linked to academic or research institutions ( Box 1 , Table c ). However, while almost half of respondents from HICs were members of ERCs affiliated with national bodies, only one quarter of participants from LMICs provided ethics review for such organizations. In contrast, in LMICs, 40% of respondents were members of ERCs associated with academic or research institutions. Furthermore, only 20% of participants from LMICs and 13.9% of participants from HICs provided ethics review for health care facilities.
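The reported sex/gender percentages can be reproduced from the raw counts. The Python sketch below recomputes them, rounding to one decimal place as the text does; the category labels are paraphrased from the text rather than copied from the questionnaire.

```python
# Recomputing the sex/gender percentages for the 204 respondents who answered
# this question, rounded to one decimal place as in the text.
counts = {
    "female": 120,
    "male": 82,
    "prefer to self-describe": 1,
    "prefer not to disclose": 1,
}
total = sum(counts.values())
percentages = {label: round(100 * n / total, 1) for label, n in counts.items()}

print(total, percentages)
```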

Box 1. Characterization of survey participants

https://doi.org/10.1371/journal.pone.0292512.t001

In terms of the WHO region for which ethics review was provided, all regions were represented in our survey ( S1 Table in S2 Appendix ). More than one third of respondents reviewed research protocols from Europe, almost one fifth from the Americas, one tenth from Africa, and less than one tenth each from the other WHO regions.

Table 1 shows the number of respondents by country of residence. Participants from 48 countries (19 HICs and 29 LMICs) responded to our survey. Of the 203 individuals who indicated their country of residence, 130 (64%) were from HICs and 73 (36%) from LMICs. There was a large contingent of respondents from the UK (93).

https://doi.org/10.1371/journal.pone.0292512.t002

Two thirds of respondents had six or more years of experience as ERC members. This was true for participants from both HICs and LMICs ( S2 Table in S2 Appendix ).

As shown in S3 Table in S2 Appendix , about one half of respondents (52%) were involved in only one ERC. This pattern held for participants from both HICs and LMICs. However, more than one third of respondents from HICs participated in three or more ERCs during the COVID-19 pandemic. Of those who indicated involvement with multiple ERCs, close to one half specified that such participation was simultaneous ( S4 Table in S2 Appendix ).

Support for the operation of ERCs during the pandemic

As shown in S5 Table in S2 Appendix , an overwhelming majority (78.4%) of respondents indicated that their ERCs received no additional support for the operation of their committees during the pandemic. This lack of support was more pronounced in the case of ERCs in LMICs. For the minority of ERCs that did receive support, this consisted mainly of administrative and human resources, with one quarter of respondents from LMICs stating that their ERCs also received financial support, in contrast to only 12.5% of those from HICs ( S6 Table in S2 Appendix ). In terms of specific areas supported, participants from both HICs and LMICs mentioned teleconferencing and virtual meeting capabilities, information technology, support staff, assistance for ERC reviewers, and training of ERC members ( Table 2 ). Interestingly, while 20% of respondents from HICs chose ERC support staff as one of the areas that received assistance, only 7.5% of those from LMICs did.

https://doi.org/10.1371/journal.pone.0292512.t003

In their descriptive responses, participants mentioned support for covering the costs of using online platforms for meetings and protocol review, and for acquiring or upgrading hardware such as laptops and webcams. In one ERC, members were able to claim the costs of setting up teleconferencing and of telephone calls when dialling into a meeting. In other ERCs, information technology training was offered, along with technical support for the use of online platforms. Notably, almost half of respondents from HICs, and nearly all (91.4%) of those from LMICs, indicated that their ERCs lacked any pre-pandemic financial planning that included provisions for supporting the committees during a public health emergency ( S7 Table in S2 Appendix ).

Modification of existing procedures or policies

Respondents from both HICs and LMICs overwhelmingly (more than 75% of participants in both cases) reported that their ERCs modified existing procedures or policies to operate during the pandemic ( S8 Table in S2 Appendix ). The most frequently modified domain was meeting logistics, followed by meeting frequency and procedures for protocol review and approval ( S9 Table in S2 Appendix ).

In terms of modifications to review procedures, several participants pointed out in their descriptive responses that their ERCs fast-tracked the review of pandemic-related studies, shortening the timeline to review and approve protocols. ERC members were expected to complete the review of these protocols within a few days or, in some cases, within 24 hours. To facilitate such a quick turnaround, some ERCs created special sub-committees to conduct very fast protocol reviews. Moreover, participants emphasized the importance of simplifying administrative processes and making them more flexible. For example, several respondents indicated that their ERCs switched entirely to online platforms for protocol review, eliminating the need for paper documents.

Numerous participants stated that all ERC meetings were conducted virtually (as opposed to face-to-face) during the pandemic, which, in their view, enabled ERC members and researchers to participate regardless of geographical location, prevented contagion, and allowed rapid turnaround of reviews. Even in the case of virtual sessions, all other full meeting requirements such as quorum had to be met. Some ERCs modified their meetings to open a permanent slot in their agendas for COVID-19-related research or added urgent full meetings to discuss top-priority pandemic-related trial protocols. In other cases, members were permanently available to review COVID-19-related protocols, with those pertaining to other topics addressed less frequently.

While most respondents acknowledged the advantages of using online platforms during the pandemic to organize ERC meetings and review research protocols, several participants highlighted the challenges these technologies entailed, particularly for new and more senior ERC members who felt uncomfortable using them. Some individuals lamented a loss of quality in the dynamics among ERC members (stilted conversations, fewer informal interactions) compared with face-to-face meetings. For some, resistance to working online was compounded by difficulties accessing the internet and a lack of adequate electronic devices.

Regarding the modification of protocol requirements, respondents mentioned adding safety procedures for study participants and members of the research teams, facilitating remote documentation of consent, and changing policies on the use of non-anonymized data from health service and public health records for the duration of the pandemic to allow less restricted use of these data. Some ERCs moved from requiring a physical signature on conflict-of-interest declaration forms to accepting a declaration by email.

As shown in Table 3 , only a minority of respondents indicated that their ERCs conducted a formal evaluation of the success or failure of modifying existing procedures or policies (28% of participants from HICs and 17% of those from LMICs). More than one quarter of respondents did not know whether such modifications had been assessed.

https://doi.org/10.1371/journal.pone.0292512.t004

Design and implementation of new procedures and policies

Almost two-thirds of respondents from both HICs and LMICs reported that their ERCs had designed and implemented new procedures and policies to address the challenges brought about by the pandemic ( S10 Table in S2 Appendix ). As in the case of modifications to ERC processes, innovations occurred mainly in the areas of meeting logistics and frequency, and of procedures for protocol review and approval ( S11 Table in S2 Appendix ). This was the case for ERCs in both HICs and LMICs.

In their descriptive responses, participants mentioned the development and implementation of new standard operating procedures (SOPs) and the formation of ad hoc committees, some including specialists, for urgent, accelerated protocol review. Such fast-track committees could review studies in one or two days, considerably shortening the time needed to complete reviews. One respondent considered the most successful innovation to be the formation of a “pool” of committee members ready to be convened at very short notice to review COVID-19-related protocols, which enabled applications to be turned around very quickly. Of note, survey participants did not specify in their descriptive responses whether these ad hoc committees were composed exclusively of existing ERC members or whether external experts and specialists were invited to take part in them. Similarly, respondents did not comment on whether existing SOPs provided for the creation of ad hoc committees, on how these entities were governed, or on the modifications made to SOPs to allow such committees to be formed.

The proportion of ERCs that formally evaluated the success or failure of new procedures and policies was similar to that reported for modifications to existing procedures or policies. Table 4 shows that just 37% of respondents from HICs and 21% of those from LMICs reported that their ERCs conducted such an evaluation.

https://doi.org/10.1371/journal.pone.0292512.t005

Permanently putting into effect modifications and innovations

A substantial majority of respondents (almost three quarters of those from HICs and more than four-fifths of those from LMICs) stated that many of the modifications and innovations to operating procedures implemented during the pandemic should be made permanent ( S12 Table in S2 Appendix ), particularly in the areas of meeting logistics and frequency, procedures for protocol review and approval, and training of ERC members in new or modified procedures ( S13 Table in S2 Appendix ). Several participants argued in their descriptive responses that virtual online meetings should become a permanent feature of ERC operations, as they increase efficiency and avoid many of the disadvantages of face-to-face meetings. Another recommendation was to allow ad hoc committees to be formed during times of increased demand. Similarly, respondents emphasized the value of making it easier to add new expert members to ERCs as required. However, 20% of participants from HICs and 50% of those from LMICs indicated that their ERCs had no support to permanently implement modifications or innovations established during the COVID-19 pandemic ( S14 Table in S2 Appendix ).

Policies, procedures, and guidelines for public health emergencies

It is noteworthy that almost half of respondents from HICs and three-quarters of participants from LMICs indicated that their ERCs did not have internal policies, procedures, or guidelines before the pandemic that could orient members regarding the functioning of the committees during PHEs ( S15 Table in S2 Appendix ). Regarding the use of internal guidelines, some ERCs adapted existing documents, while others developed entirely new procedures. In the absence of specific internal guidelines, some SOPs explicitly privileged expedited review during health crises.

In contrast to the widespread absence of internal guidelines, the ERCs of one quarter of respondents from HICs and of almost half of those from LMICs used external guidelines not developed by their committees to govern their operation during the pandemic ( S16 Table in S2 Appendix ). Members of several committees referred to publicly available national and international guidelines. A selection of the most consulted documents appears in Box 2 .

Box 2. National and international external guidelines* that survey respondents reported were used by their ERCs to manage operations during the COVID-19 pandemic

International health organizations

• Council for International Organizations of Medical Sciences, & World Health Organization (2016). International Ethical Guidelines for Health-related Research Involving Humans (Fourth Ed.). Council for International Organizations of Medical Sciences. https://doi.org/10.56759/rgxl7405

• Pan-American Health Organization (2020). Guidance for ethics oversight of COVID-19 research in response to emerging evidence. https://iris.paho.org/handle/10665.2/53021

• Pan-American Health Organization (2020). Guidance and strategies to streamline ethics review and oversight of COVID-19-related research. https://iris.paho.org/handle/10665.2/52089

• Pan-American Health Organization (2020). Template and operational guidance for the ethics review and oversight of COVID-19-related research. https://iris.paho.org/handle/10665.2/52086

• Pan-American Health Organization (2022). Catalyzing ethical research in emergencies. Ethics guidance, lessons learned from the COVID-19 pandemic, and pending agenda. https://iris.paho.org/handle/10665.2/56139

• Red de América Latina y el Caribe de Comités Nacionales de Bioética / United Nations Educational, Scientific and Cultural Organization (UNESCO) (2020). Ante las investigaciones biomédicas por la pandemia de enfermedad infecciosa por coronavirus Covid-19 [On biomedical research prompted by the coronavirus disease (COVID-19) pandemic]. https://redbioetica.com.ar/wp-content/uploads/2020/03/Declaracion-RED-ALAC-CNBS-Investigaciones-Covid-19.pdf

• World Health Organization (2016). Guidance for managing ethical issues in infectious disease outbreaks. World Health Organization. https://apps.who.int/iris/handle/10665/250580

• World Health Organization (2020). Key criteria for the ethical acceptability of COVID-19 human challenge studies. https://apps.who.int/iris/handle/10665/331976

• World Health Organization (2020). Guidance for research ethics committees for rapid review of research during public health emergencies. https://apps.who.int/iris/handle/10665/332206

• World Health Organization (2020). Ethical standards for research during public health emergencies: distilling existing guidance to support COVID-19 R&D. https://apps.who.int/iris/handle/10665/331507

Bioethics centres

• Nuffield Council of Bioethics (2020). Ethical considerations in responding to the COVID-19 pandemic. https://www.nuffieldbioethics.org/assets/pdfs/Ethical-considerations-in-responding-to-the-COVID-19-pandemic.pdf

• The Hastings Center: Berlinger N, et al. (2020). Ethical Framework for Health Care Institutions Responding to Novel Coronavirus SARS-CoV-2 (COVID-19). Guidelines for Institutional Ethics Services Responding to COVID-19. https://www.thehastingscenter.org/ethicalframeworkcovid19/

Scientific publications mentioned by respondents

• Saxena et al. (2019). Ethics preparedness: facilitating ethics review during outbreaks—recommendations from an expert panel. https://bmcmedethics.biomedcentral.com/articles/10.1186/s12910-019-0366-x

National guidelines

Argentina

• Resolución 908/2020 [Resolution 908/2020]. Ministerio de Salud de Argentina [Ministry of Health of Argentina]: https://www.argentina.gob.ar/normativa/nacional/resoluci%C3%B3n-908-2020-337359/texto

Brazil

• Normativas da Comissão Nacional de Ética em Pesquisa [Regulations of the National Commission for Research Ethics]: http://conselho.saude.gov.br/normativas-conep?view=default

Costa Rica

• Consejo Nacional de Investigación en Salud de Costa Rica (CONIS) (2020). COMUNICADO 2: Recomendaciones para realizar investigación biomédica durante el periodo de la emergencia sanitaria por COVID-19 en Costa Rica [Communiqué 2: Recommendations for conducting biomedical research during the COVID-19 health emergency in Costa Rica]. https://www.ministeriodesalud.go.cr/gestores_en_salud/conis/circulares/comunicado_cec_oac_oic_20082020.pdf

El Salvador

• Comité Nacional de Ética de la Investigación en Salud de El Salvador (2015). Manual de procedimientos operativos estándar para comités de ética de la investigación en salud [Manual of standard operating procedures for health research ethics committees]. https://www.cneis.org.sv/wp-content/uploads/2018/07/MANUAL-CNEIS.pdf

India

• Indian Council of Medical Research (2017). National ethical guidelines for biomedical and health research involving human participants. https://ethics.ncdirindia.org/asset/pdf/ICMR_National_Ethical_Guidelines.pdf

• Indian Council of Medical Research (2020). National guidelines for ethics committees reviewing biomedical & health research during COVID-19 pandemic. https://main.icmr.nic.in/sites/default/files/guidelines/EC_Guidance_COVID19_06_05_2020.pdf

Kenya

• Kenya Medical Research Institute Scientific and Ethics Review Unit (2020). KEMRI SERU guidelines for the conduct of research during the COVID-19 pandemic in Kenya. https://www.kemri.go.ke/wp-content/uploads/2019/11/KEMRI-SERU_GUIDELINES-FOR-THE-CONDUCT-OF-RESEARCH-DURING-THE-COVID_8-June-2020_Final.pdf

Malaysia

• Garis Panduan Pengurusan COVID-19 di Malaysia No.5 [COVID-19 Management Guidelines in Malaysia No.5] (2020). Ministry of Health of Malaysia. https://covid-19.moh.gov.my/garis-panduan/garis-panduan-kkm

Pakistan

• Government of Pakistan National COVID Command and Operation Center (NCOC) Guidelines (2020). [No longer available, as NCOC ceased operations on April 1, 2022.]

South Africa

• Department of Health, Republic of South Africa (2015). Ethics in Health Research: Principles, Processes and Structures (2nd Ed.). https://www.sun.ac.za/english/research-innovation/Research-Development/Documents/Integrity%20and%20Ethics/DoH%202015%20Ethics%20in%20Health%20Research%20-%20Principles,%20Processes%20and%20Structures%202nd%20Ed.pdf

South Korea

• Government of the Republic of Korea (2014). Bioethics and Safety Act (Act No. 12844). https://elaw.klri.re.kr/eng_mobile/viewer.do?hseq=33442&type=part&key=36

United Kingdom

• United Kingdom Health Departments / Research Ethics Service (2022). Standard Operating Procedures for Research Ethics Committees (Version 7.6). https://www.hra.nhs.uk/documents/3090/RES_Standard_Operating_Procedures_Version_7.6_September_2022_Final.pdf . [In particular, several respondents from the UK mentioned Section 9 of this document, which addresses expedited review in situations such as public health emergencies.]

• Health Research Authority (2020). https://www.hra.nhs.uk/approvals-amendments/

• Health Research Authority (2020). https://www.hra.nhs.uk/covid-19-research/covid-19-guidance-sponsors-sites-and-researchers/

• Department of Health and Social Care (2020). Coronavirus (COVID-19): notification to organisations to share information. https://www.gov.uk/government/publications/coronavirus-covid-19-notification-of-data-controllers-to-share-information

* We defined “external guidelines” as those not developed internally by participants’ ERCs

Changes in workload

Respondents stated that the workload of ERC members increased considerably during the pandemic, both because more protocols had to be reviewed and because COVID-19-related studies demanded urgent approval. More than half of participants indicated that the volume of protocols received for review increased, both for studies assigned to delegated/expedited review and for protocols that underwent full review ( S17 Table in S2 Appendix ). This increase had unexpected consequences. For example, in one HIC, the number of applicants summoned to discuss their protocols with ERCs in online meetings rose in proportion to the volume of protocols submitted. In another case, ERC members took on additional tasks such as working closely with the investigators of rejected COVID-19 protocols to improve their applications until these could be approved.

Regarding the time ERCs took to process and approve protocols during the pandemic, participants confirmed in their descriptive responses that turnaround time was markedly shortened, from weeks or even months to just a few days. More than half of survey participants indicated that, before the pandemic, the review process, from initial submission to full approval, took between three and eight weeks ( S18 Table in S2 Appendix ). In contrast, during the pandemic, this process was reduced to less than two weeks for both delegated/expedited review and full review. However, this decrease was more pronounced in HICs than in LMICs ( S19 Table in S2 Appendix ). Unsurprisingly, COVID-19-related research protocols were approved faster than non-COVID-19 studies. More than two-thirds of respondents indicated that delegated/expedited review of COVID-19-related protocols took less than five weeks; this was the case for more than half of full reviews. The process took longer in LMICs, however ( S20 Table in S2 Appendix ). By comparison, protocol review was slightly longer for non-COVID-19 studies, except for full reviews in LMICs, which participants reported took anywhere from three to more than 12 weeks ( S21 Table in S2 Appendix ).

Presence of external pressure on ERCs

While only 14% of respondents from HICs reported that their ERCs were subjected to various types of external pressure to approve or to reject research protocols, one third of participants from LMICs (34%) reported such pressure ( S22 Table in S2 Appendix ). The pressure mentioned most frequently was to rush studies through the review process at the expense of proper examination and ethical oversight. This was especially evident in the case of COVID-19 vaccine clinical trials. Some participants described how they defended the autonomy of their ERCs against external influence, for example by using research policies developed and implemented specifically for the pandemic as a tool for transparent decision-making and as a safeguard against external pressures. One ERC successfully resisted government pressure to approve research protocols related to a domestic PCR test, human trials of locally developed ventilators, and a placebo-controlled vaccine trial proposed despite the existence of six emergency-authorized vaccines and ongoing mass vaccination.

While some respondents acknowledged that entities such as national governments were understandably impatient for preventive, diagnostic, and therapeutic measures to combat the pandemic, they still emphasized the need for proper and thorough review of research protocols. One respondent stated that institutional authorities that favoured or sponsored certain studies sought their immediate approval and regarded ERCs as inconvenient obstacles to this goal. Several participants described instances in which ERCs, particularly in LMICs, were pressured to approve clinical trials of alternative medicine.

Types of COVID-19 protocols reviewed by ERCs

Given the range of challenges brought about by the COVID-19 pandemic, we sought to determine how the protocols received by ERCs were distributed across research areas, namely diagnostics, therapeutics, vaccines, pharmacovigilance, and other topics such as behavioural research. Our results suggest that between one-half and two-thirds of ERCs received from one to 10 studies in each area ( Table 5 ). In other words, the protocols submitted to ERCs in both HICs and LMICs covered all areas of COVID-19 research. However, between one-third and one-half of respondents could not classify the protocols received by their ERCs (possibly because this information was not tracked).

https://doi.org/10.1371/journal.pone.0292512.t006

Prioritization of protocols for ethics review

Overwhelmingly, and as expected, participants reported that their ERCs considered COVID-19-related protocols urgent and thus prioritized their review and approval over that of others, particularly in terms of expediting the review of these studies and privileging their discussion during committee meetings. More than three quarters of respondents from HICs and almost two-thirds of those from LMICs indicated that their ERCs gave priority to COVID-19-related studies ( S23 Table in S2 Appendix ). In fact, in one case, an ERC stopped reviewing non-COVID-19-related protocols altogether. Some ERCs gave precedence to the review of COVID-19-related studies according to the priorities determined by their national governments. Others were assigned studies by an ad hoc national entity that triaged the research protocols. Interestingly, however, as shown in S23 Table in S2 Appendix , 15% of respondents from HICs and 27% of those from LMICs stated that their ERCs did not give priority to pandemic-related studies.

Furthermore, our results show that almost one-third of respondents from HICs and almost half of those from LMICs indicated that, for their ERCs, the review of some types of COVID-19-related studies took precedence over that of others (S24 Table in S2 Appendix). In their descriptive responses, participants explicitly mentioned prioritizing clinical trials, particularly those focused on COVID-19 vaccine development and safety monitoring; studies of therapeutic agents for the treatment of COVID-19; protocols on diagnostics and prognostic factors; epidemiological studies, including those on the natural history of COVID-19 and serosurveillance; and research affecting public health policy. In the case of one ERC in an HIC with very low infection rates resulting from successful public health measures, priority was given to vaccine trials and to observational research on vaccine monitoring and community incidence.

Membership of ERCs during the pandemic

One of the main challenges that ERCs worldwide faced during the pandemic was making certain that the number and expertise of their members enabled the efficient operation of the committees under such demanding circumstances. Most survey respondents indicated that their ERCs were able to ensure quorum (80% of participants from HICs, but only 60% of those from LMICs); however, one-third of respondents from LMICs stated that quorum in their ERCs was infrequently met (S25 Table in S2 Appendix). Two-thirds of participants from HICs and three-quarters of those from LMICs reported that their committees had taken measures to ensure continuity of adequate review of research protocols in case existing members became unavailable due to the pandemic (S26 Table in S2 Appendix).

ERCs in both HICs and LMICs did invite new members or appoint alternates to ensure quorum and the inclusion of individuals with appropriate expertise. Yet consulting expert non-members seems to have been preferred over adding individuals to the committee. Only 13% of respondents from HICs and 24% of those from LMICs indicated that their ERCs had added new members to accelerate protocol review during the pandemic (S27 Table in S2 Appendix). Similarly, 11% of participants from HICs and 37% of those from LMICs reported that their ERCs had added new members with specific expertise (S28 Table in S2 Appendix). In contrast, almost one-third of respondents from HICs, but close to two-thirds of those from LMICs, stated that their committees had consulted expert non-members to address novel areas of research or to provide enhanced scrutiny of research protocols (S29 Table in S2 Appendix). In their descriptive responses, participants reported that, in some cases, ERCs brought in new members who were available at short notice and comfortable using online platforms for meetings and protocol review. A similar approach consisted of forming virtual ad hoc committees solely to review COVID-19-related protocols. For some ERCs, national legislation made it difficult to obtain additional support or add new members. Another factor complicating the composition of ERCs was that the clinical responsibilities of members directly involved in the care of COVID-19 patients soared, hindering their participation in committee meetings. One participant reported that some ERC members could not fulfill their duties in their respective ERCs because they had become highly sought-after “media celebrity” experts.

Survey respondents suggested that it would be worthwhile to assess the psychological and emotional challenges that ERC members faced when evaluating protocols using new, unfamiliar procedures under extreme pressure. It is also worth reiterating that, according to several participants, many ERC members, particularly older ones, lamented the loss of features common to face-to-face meetings, such as a warmer, more informal, and welcoming environment that favoured interpersonal interaction. Other respondents expressed a desire for constructive and supportive feedback and for more generous expressions of gratitude for the extraordinary efforts of ERCs. However, a few participants felt that being able to respond usefully to a public health crisis as ERC members was in itself very gratifying and validating.

National and international collaboration

While 38.5% of respondents from HICs and 40% of those from LMICs reported the presence of national and international collaboration among ERCs to standardize emergency operations and procedures during the pandemic, almost one-third of participants from HICs were unsure about the existence of such collaboration (S30 Table in S2 Appendix). Almost half of respondents from HICs, but more than two-thirds of those from LMICs, indicated that their ERCs did not have strategies to harmonize multiple review processes (S31 Table in S2 Appendix). Most participants (55% of those from HICs and 63% of those from LMICs) reported that their ERCs relied on established procedures to recognize and validate research protocol reviews conducted by other committees (S32 Table in S2 Appendix). About one-half of respondents from HICs, but almost two-thirds of those from LMICs, affirmed that their ERCs collaborated with scientific committees that pre-reviewed or prioritized pandemic-related research protocols (S33 Table in S2 Appendix).

Almost 50% of participants from HICs, but just over a third of those from LMICs, reported the existence of centralized ethics review of research protocols for COVID-19-related multicentre studies (S34 Table in S2 Appendix). Conversely, one-third of respondents from HICs, but more than two-thirds of those from LMICs, stated that their ERCs had not considered forming Joint Scientific Advisory Committees, Data Safety Review Committees, Data Access Committees, or a Joint Ethics Review Committee composed of representatives of the ethics committees of all institutions and countries involved in COVID-19-related research (S35 Table in S2 Appendix).

In their descriptive responses, participants noted the need for better inter-ERC collaboration and communication at the national and international levels to share successful strategies and avoid duplication of effort. One case of highly successful national inter-ERC collaboration is worth mentioning. Respondents from one LMIC stated that, given the critical and unforeseen absence of the national entity responsible for health research ethics during the pandemic, ERCs throughout the country joined forces to create a spontaneous, informal, ad hoc national network of all ERC chairs and co-chairs (which also included members of the national drug regulator) to strengthen mutual support, enhance communication among ERCs, identify best practices, and share academic and ethics resources.

ERCs faced considerable challenges during the COVID-19 pandemic. They were required to review an increased volume of protocols urgently while maintaining rigour, all under suboptimal and uncertain conditions. Yet our findings suggest that ERCs reviewed a greater volume of protocols, and did so faster, than before the pandemic. Against this backdrop, our results also reveal that, although billions of dollars were invested in the research and development (R&D) ecosystem to support the COVID-19 research response, little to no additional resources were directed to ERCs to support or expedite their functions. This should be particularly sobering for those who complain that ERCs are an “obstacle” to research [13–16]. It may also help to explain other challenges experienced by ERCs during the pandemic, such as the absence of internal policies or guidelines for adapting to a PHE, the collateral damage sustained from deprioritizing non-COVID-19 protocols, and the pressure to rush protocols through review.

Our finding that ERCs wish to sustain many of the modifications made to their operations during the COVID-19 pandemic should be interpreted in light of the fact that ERCs also reported receiving little or no support during the pandemic, as well as scant support for maintaining any modifications they would like to make permanent. While the research ethics ecosystem is expected to learn from this experience and enhance its operations for future threats, it is difficult to see how this will be possible without significant investment. No one seems to disagree that the research ethics ecosystem should strive for greater efficiency and collaboration, especially during PHEs, but achieving these aims requires investment. Simply put, the experience of ERCs during the COVID-19 pandemic, while Herculean in many respects, was a function of necessity and is unlikely to be sustainable.

Extant literature reporting the challenges faced by ERCs during the COVID-19 pandemic is scant and tends to be limited to the early phases of this PHE. Most studies published on this topic are confined to single countries or geographical regions, with only one study including 14 countries in Africa, Asia, Australia, and Europe [17]. Several of these contributions focus exclusively on one ERC, usually associated with an academic or health care institution. The literature includes descriptions of ERC operations during the pandemic in Central America and the Dominican Republic [18], China [19], Ecuador [20], Egypt [21], Germany [22], India [23–25], Iran [10], Ireland [26], Kenya [27], Kyrgyzstan [28], Latin America [29], the Netherlands [30], Pakistan [31], South Africa [32, 33], Turkey [34], and the United States [35–37]. Most of these studies reported results from surveys, interviews, focus groups, and documentary analysis, including review of research protocols, ERC meeting minutes, and existing SOPs. Participants usually consisted of ERC chairpersons and members, clinical and biomedical researchers, institutional representatives, and laypeople. Most studies based on surveys and interviews included fewer than 30 respondents, with only some having more than 100 participants.

Our findings agree with this literature. Given that our study is truly global in scope, it considerably broadens what is known about the operation of ERCs during the COVID-19 pandemic and clears a path towards greater consensus on strategies to prepare for and respond during future PHEs.

In this literature, several studies emphasize the lack of support and resources to operate during the pandemic. The vast majority of ERCs made numerous modifications to their SOPs. In particular, the use of online platforms for ERC meetings and for protocol review was ubiquitous. However, ERC members across studies pointed out several disadvantages of such platforms, including lack of familiarity and technical know-how, particularly among more senior committee members. Only a few institutions provided training, equipment, and technical support for the use of these online platforms. Consistent with our findings, almost no ERCs in these studies reported having internal policies, procedures, or guidelines to operate during a PHE. National regulations on this topic, where available, were often unclear, contradictory, rapidly changing, vague, or difficult to interpret. In contrast, several ERCs availed themselves of international guidelines (Box 2), in particular those prepared by WHO [38–41] and PAHO [42–45].

In terms of changes in workload, all ERCs in the studies mentioned earlier experienced a dramatic increase in the number of COVID-19-related protocols received, which had to be reviewed very quickly in the face of pressure for expedited approvals from researchers, institutions, governments, and the media. The surge in the volume of protocols, along with shortened timelines for turnaround, severely strained ERC members’ ability to conduct rigorous, thorough, high-quality assessments. Despite feeling overwhelmed, ERC members participating in these studies managed to fulfill their responsibilities, sometimes at great personal cost.

Given the urgency to examine and approve an ever-increasing number of COVID-19 research protocols, previous studies report several strategies implemented by ERCs worldwide to prioritize their review. This frequently meant that the assessment of non-COVID-19-related studies was postponed or even abandoned. Similarly, non-interventional COVID-19 protocols were given secondary importance. Prioritization of COVID-19 protocols by type of study was rare.

Despite numerous staffing challenges, most ERCs in the studies examined were able to ensure quorum. In some cases, their institutions provided training sessions to update committee members on the rapidly changing landscape of basic and clinical knowledge about COVID-19. A less frequently used approach was to add new members with relevant expertise to the ERCs. One common strategy across different countries was the formation of ad hoc committees focused exclusively on the review of COVID-19-related protocols.