
Static Single Assignment (with relevant examples)


Static Single Assignment was presented in 1988 by Barry K. Rosen, Mark N. Wegman, and F. Kenneth Zadeck.

In compiler design, Static Single Assignment (SSA) is a way of structuring the IR (intermediate representation) such that every variable is assigned a value exactly once and every variable is defined before its use. The main benefit of SSA is that, by simplifying the properties of variables, it simplifies compiler optimisation algorithms and improves their results. Some algorithms improved by applying SSA:

- Constant Propagation – Moving calculations from run time to compile time when their operands are known constants. E.g., the instruction v = 2*7+13 is treated like v = 27.

- Value Range Propagation – Finding the possible range of values a calculation could result in.

- Dead Code Elimination – Removing code that is unreachable or whose results have no effect on the program's output.

- Strength Reduction – Replacing computationally expensive calculations by inexpensive ones.

- Register Allocation – Optimising the use of registers for calculations.

Any code can be converted to SSA form by replacing the target variable of each assignment with a new variable, and by substituting each use of a variable with the version of that variable that reaches that point. Versions are created by splitting the original variables in the IR. Each version is written as the original name with a subscript, so every assignment gets its own version.
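For straight-line code (no branches), the renaming rule can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production pass:

- The `(target, op, args)` tuple encoding and the function name `to_ssa_straight_line` are hypothetical.
- Versions are written as a numeric suffix (`x1`, `x2`) instead of a subscript.
- Original names are assumed not to end in digits already.

```python
from collections import defaultdict


def to_ssa_straight_line(code):
    """Rename straight-line assignments so each target is assigned exactly once.

    `code` is a list of (target, op, args) tuples (hypothetical encoding).
    String args are variable uses; anything else (e.g. an int) is a literal.
    Uses of names not assigned yet (such as inputs) are left unversioned.
    """
    counter = defaultdict(int)  # original name -> number of versions so far
    current = {}                # original name -> version reaching this point
    out = []
    for target, op, args in code:
        # Rewrite uses first, so `x = x + 1` reads the previous version of x.
        new_args = [current.get(a, a) if isinstance(a, str) else a for a in args]
        # Every assignment creates a brand-new version of its target.
        counter[target] += 1
        current[target] = f"{target}{counter[target]}"
        out.append((current[target], op, new_args))
    return out


prog = [
    ("x", "add", ["y", "z"]),
    ("s", "mul", ["x", "y"]),
    ("x", "sub", ["s", "z"]),
    ("s", "mul", ["x", "s"]),
]
for dest, op, args in to_ssa_straight_line(prog):
    print(dest, "=", op, *args)
# x1 = add y z
# s1 = mul x1 y
# x2 = sub s1 z
# s2 = mul x2 s1
```

This scheme only works because there is exactly one path to each use. Once branches appear, a use can be reached by several versions, which is the problem phi functions solve.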

Example #1:

Convert the following code segment to SSA form:

Here x, y, z, s, p, q are original variables and $x_2$, $s_2$, $s_3$, $s_4$ are versions of x and s.

Example #2:

Here a, b, c, d, e, q, s are original variables and $a_2$, $q_2$, $q_3$ are versions of a and q.

Phi function and SSA codes

Three-address code may also contain goto statements, so a variable may receive its value along two different paths.

Consider the following example:

Example #3:

When we try to convert the above three-address code to SSA form, the output looks like:

Attempt #3:

We need to decide which value y should take: $x_1$ or $x_2$. We therefore introduce phi functions, which select the correct version of a variable when it can arrive along two different computation paths due to branching.

Hence, the correct SSA code for the example is:

Solution #3:

Thus, whenever three-address code has a branch and control can reach a point along two different paths, we need to insert phi functions at that join point for each variable that is assigned differently along those paths.


ENOSUCHBLOG

Programming, philosophy, pedaling.

Understanding static single assignment forms

Oct 23, 2020. Tags: llvm, programming.


With thanks to Niki Carroll , winny, and kurufu for their invaluable proofreading and advice.

By popular demand, I’m doing another LLVM post. This time, it’s static single assignment (or SSA) form, a common feature in the intermediate representations of optimizing compilers.

Like the last one, SSA is a topic in compiler and IR design that I mostly understand but could benefit from some self-guided education on. So here we are.

How to represent a program

At the highest level, a compiler’s job is singular: to turn some source language input into some machine language output. Internally, this breaks down into a sequence of clearly delineated[1] tasks:

1. Lexing the source into a sequence of tokens

2. Parsing the token stream into an abstract syntax tree, or AST[2]

3. Validating the AST (e.g., ensuring that all uses of identifiers are consistent with the source language’s scoping and definition rules)[3]

4. Translating the AST into machine code, with all of its complexities (instruction selection, register allocation, frame generation, &c)

In a single-pass compiler, step (4), translation, is monolithic: machine code is generated as the compiler walks the AST, with no revisiting of previously generated code. This is extremely fast (in terms of compiler performance) in exchange for a few significant limitations:

Optimization potential: because machine code is generated in a single pass, it can’t be revisited for optimizations. Single-pass compilers tend to generate extremely slow and conservative machine code.

By way of example: the System V x86-64 ABI (used by Linux and macOS) defines a special 128-byte region beyond the current stack pointer (%rsp), the red zone, that can be used by leaf functions whose stack frames fit within it. This, in turn, saves a few stack management instructions in the function prologue and epilogue.

A single-pass compiler will struggle to take advantage of this ABI-supplied optimization: it needs to emit a stack slot for each automatic variable as it visits them, and cannot go back and erase the stack adjustment in its function prologue if all variables fit within the red zone.

Language limitations: single-pass compilers struggle with common language design decisions, like allowing use of identifiers before their declaration or definition. For example, the following is valid C++:

C and C++ generally require pre-declaration and/or definition for identifiers, but member function bodies may reference the entire class scope. This will frustrate a single-pass compiler, which expects Rect::width and Rect::height to already exist in some symbol lookup table for call generation.

Consequently, (virtually) all modern compilers are multi-pass.

Multi-pass compilers break the translation phase down even more:

- The AST is lowered into an intermediate representation, or IR

- Analyses (or passes) are performed on the IR, refining it according to some optimization profile (code size, performance, &c)

- The IR is either translated to machine code or lowered to another IR, for further target specialization or optimization[4]

So, we want an IR that’s easy to correctly transform and that’s amenable to optimization. Let’s talk about why IRs that have the static single assignment property fill that niche.

At its core, the SSA form of any source program introduces only one new constraint: all variables are assigned (i.e., stored to) exactly once.

By way of example: the following (not actually very helpful) function is not in a valid SSA form with respect to the flags variable:

Why? Because flags is stored to twice: once for initialization, and (potentially) again inside the conditional body.

As programmers, we could rewrite helpful_open to only ever store once to each automatic variable:

But this is clumsy and repetitive: we essentially need to duplicate every chain of uses that follow any variable that is stored to more than once. That’s not great for readability, maintainability, or code size.

So, we do what we always do: make the compiler do the hard work for us. Fortunately there exists a transformation from every valid program into an equivalent SSA form, conditioned on two simple rules.

Rule #1: Whenever we see a store to an already-stored variable, we replace it with a brand new “version” of that variable.

Using rule #1 and the example above, we can rewrite flags using _N suffixes to indicate versions:

But wait a second: we’ve made a mistake!

- open(..., flags_1, ...) is incorrect: it unconditionally assigns O_CREAT , which wasn’t in the original function semantics.

- open(..., flags_0, ...) is also incorrect: it never assigns O_CREAT , and thus is wrong for the same reason.

So, what do we do? We use rule 2!

Rule #2: Whenever we need to choose a variable based on control flow, we use the Phi function (φ) to introduce a new variable based on our choice.

Using our example once more:

Our quandary is resolved: open always takes flags_2, where flags_2 is a fresh SSA variable produced by applying φ to flags_0 and flags_1.
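The single-assignment constraint is mechanical enough to check automatically. The sketch below counts static assignment sites per variable. The `(dest, expr)` encoding and the `is_ssa` name are assumptions for illustration. The instruction lists are hypothetical and only loosely modeled on `helpful_open` (the initial flag value in particular is made up).

```python
from collections import Counter


def is_ssa(instrs):
    """Return True if no variable is the target of more than one assignment.

    `instrs` is a list of (dest, expr) pairs; dest is None for instructions
    that do not store to a variable. This counts *static* assignment sites,
    so an assignment inside a loop body still counts only once.
    """
    counts = Counter(dest for dest, _expr in instrs if dest is not None)
    return all(n == 1 for n in counts.values())


# Hypothetical lowering of the original function: flags is stored to twice.
before = [
    ("flags", "O_RDWR"),
    ("flags", "flags | O_CREAT"),  # the conditional store
    ("fd", "open(path, flags)"),
]
# After applying rule #1 and rule #2: every store gets a fresh name.
after = [
    ("flags_0", "O_RDWR"),
    ("flags_1", "flags_0 | O_CREAT"),
    ("flags_2", "phi(flags_0, flags_1)"),
    ("fd", "open(path, flags_2)"),
]
print(is_ssa(before), is_ssa(after))  # False True
```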

Observe, too, that φ is a symbolic function: compilers that use SSA forms internally do not emit real φ functions in generated code[5]. φ exists solely to reconcile rule #1 with the existence of control flow.

As such, it’s a little bit silly to talk about SSA forms with C examples (since C and other high-level languages are what we’re translating from in the first place). Let’s dive into how LLVM’s IR actually represents them.

SSA in LLVM

First of all, let’s see what happens when we run our very first helpful_open through clang with no optimizations:

(View it on Godbolt.)

So, we call open with %3, which comes from… a load from an i32* named %flags? Where’s the φ?

This is something that consistently trips me up when reading LLVM’s IR: only values, not memory, are in SSA form. Because we’ve compiled with optimizations disabled, %flags is just a stack slot that we can store into as many times as we please, and that’s exactly what LLVM has elected to do above.

As such, LLVM’s SSA-based optimizations aren’t all that useful when passed IR that makes direct use of stack slots. We want to maximize our use of SSA variables, whenever possible, to make future optimization passes as effective as possible.

This is where mem2reg comes in:

This file (optimization pass) promotes memory references to be register references. It promotes alloca instructions which only have loads and stores as uses. An alloca is transformed by using dominator frontiers to place phi nodes, then traversing the function in depth-first order to rewrite loads and stores as appropriate. This is just the standard SSA construction algorithm to construct “pruned” SSA form.

(Parenthetical mine.)

mem2reg gets run at -O1 and higher, so let’s do exactly that:

Foiled again! Our stack slots are gone thanks to mem2reg, but LLVM has actually optimized too far: it figured out that our flags value is wholly dependent on the return value of our access call and erased the conditional entirely.

Instead of a φ node, we got this select :

which the LLVM Language Reference describes concisely:

The ‘select’ instruction is used to choose one value based on a condition, without IR-level branching.

So we need a better example. Let’s do something that LLVM can’t trivially optimize into a select (or sequence of selects), like adding an else if with a function that we’ve only provided the declaration for:

That’s more like it! Here’s our magical φ:

LLVM’s phi is slightly more complicated than the φ(flags_0, flags_1) that I made up before, but not by much: it takes a list of pairs (two, in this case), with each pair containing a possible value and that value’s originating basic block (which, by construction, is always a predecessor block in the context of the φ node).

The Language Reference backs us up:

The type of the incoming values is specified with the first type field. After this, the ‘phi’ instruction takes a list of pairs as arguments, with one pair for each predecessor basic block of the current block. Only values of first class type may be used as the value arguments to the PHI node. Only labels may be used as the label arguments. There must be no non-phi instructions between the start of a basic block and the PHI instructions: i.e. PHI instructions must be first in a basic block.
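The structural rules in that quote are easy to check mechanically. Below is a minimal sketch assuming a toy encoding:

- Each block maps to `(preds, instrs)`.
- Predecessors are listed once per incoming CFG edge.
- A phi's operands are `(value, block)` pairs, mirroring LLVM's textual syntax.

This is not LLVM's verifier. It checks only two rules: that phis come first in their block, and that their incoming blocks match the predecessor list. Block and value names are hypothetical.

```python
def check_phis(blocks):
    """Return a list of rule violations (empty if none).

    `blocks` maps a label to (preds, instrs): `preds` lists predecessor
    labels once per incoming CFG edge, and each instruction is an
    (opcode, operands) pair. For "phi", operands are (value, block) pairs.
    """
    errors = []
    for label, (preds, instrs) in blocks.items():
        seen_non_phi = False
        for opcode, operands in instrs:
            if opcode != "phi":
                seen_non_phi = True
                continue
            # Rule: phis must be grouped at the start of the block.
            if seen_non_phi:
                errors.append(f"{label}: phi after a non-phi instruction")
            # Rule: one (value, block) pair per predecessor edge.
            incoming = sorted(block for _value, block in operands)
            if incoming != sorted(preds):
                errors.append(f"{label}: phi blocks {incoming} != preds {sorted(preds)}")
    return errors


good = {
    "entry": ([], [("br", ["if.then", "if.end"])]),
    "if.then": (["entry"], [("br", ["if.end"])]),
    "if.end": (["entry", "if.then"], [
        ("phi", [("%flags.0", "entry"), ("%flags.1", "if.then")]),
        ("call", ["@open"]),
    ]),
}
bad = dict(good)
bad["if.end"] = (["entry", "if.then"], [
    ("call", ["@open"]),
    ("phi", [("%flags.0", "entry")]),  # misplaced, and missing if.then
])
print(check_phis(good))  # []
print(check_phis(bad))   # two violations for if.end
```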

Observe, too, that LLVM is still being clever: one of our φ choices is a computed select (%spec.select), so LLVM still managed to partially erase the original control flow.

So that’s cool. But there’s a piece of control flow that we’ve conspicuously ignored.

What about loops?

Not one, not two, but three φs! In order of appearance:

Because we supply the loop bounds via count, LLVM has no way to ensure that we actually enter the loop body. Consequently, our very first φ selects between the initial %base and %add. LLVM’s phi syntax helpfully tells us that %base comes from the entry block and %add from the loop, just as we expect. I have no idea why LLVM selected such a hideous name for the resulting value (%base.addr.0.lcssa).

Our index variable is initialized once and then updated on each iteration of the for loop, so it also needs a φ. Our selections here are %inc (which each iteration of the body computes from %i.07) and the 0 literal (i.e., our initialization value).

Finally, the heart of our loop body: we need to get base , where base is either the initial base value ( %base ) or the value computed as part of the prior loop ( %add ). One last φ gets us there.

The rest of the IR is bookkeeping: we need separate SSA variables to compute the addition (%add), increment (%inc), and exit check (%exitcond.not) with each loop iteration.

So now we know what an SSA form is, and how LLVM represents them[6]. Why should we care?

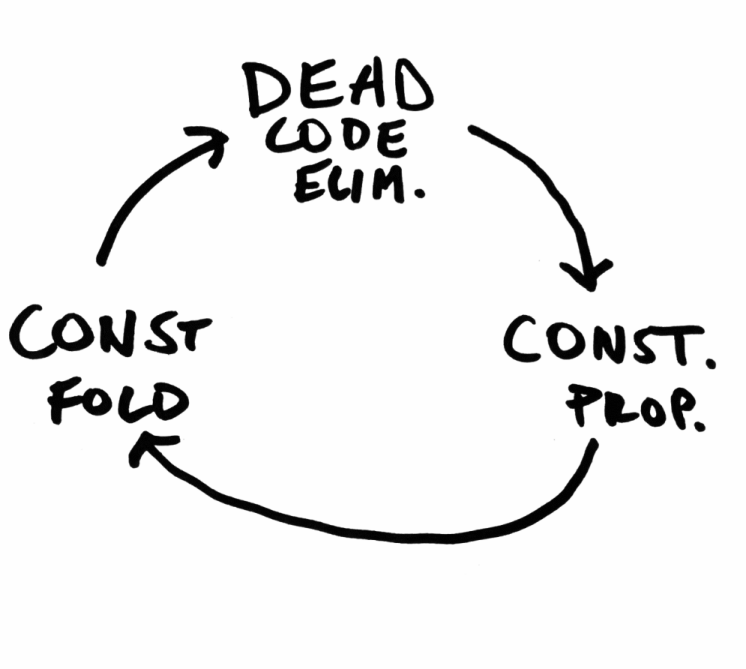

As I briefly alluded to early in the post, it comes down to optimization potential: the SSA forms of programs are particularly suited to a number of effective optimizations.

Let’s go through a select few of them.

Dead code elimination

One of the simplest things that an optimizing compiler can do is remove code that cannot possibly be executed. This makes the resulting binary smaller (and usually faster, since more of it can fit in the instruction cache).

“Dead” code falls into several categories[7], but a common one is assignments that cannot affect program behavior, like redundant initialization:

Without an SSA form, an optimizing compiler would need to check whether any use of x reaches its original definition ( x = 100 ). Tedious. In SSA form, the impossibility of that is obvious:

And sure enough, LLVM eliminates the initial assignment of 100 entirely:
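Because every SSA name has exactly one definition, the question "is this value ever used?" reduces to scanning for uses of that name. The sketch below repeatedly deletes definitions that nothing reads, using a hypothetical `(dest, op, args)` encoding. It assumes that every instruction with a destination is side-effect free, and it treats instructions without one (like `ret`) as always live. A real pass must also keep stores, calls and similar instructions.

```python
def eliminate_dead_code(instrs):
    """Delete SSA definitions whose results are never used, until a fixpoint.

    `instrs` is a list of (dest, op, args). Instructions with dest None are
    assumed to have side effects and are always kept; all others are assumed
    pure, so an unused result means the instruction can go.
    """
    instrs = list(instrs)
    while True:
        used = {a for _dest, _op, args in instrs for a in args if isinstance(a, str)}
        kept = [i for i in instrs if i[0] is None or i[0] in used]
        if len(kept) == len(instrs):
            return kept
        # Removing one definition may make its operands dead too, so iterate.
        instrs = kept


# Hypothetical SSA code for a redundant initialization.
prog = [
    ("x1", "const", [100]),    # the redundant initialization
    ("x2", "const", [200]),
    ("t1", "add", ["x1", 1]),  # never read: dead, which then makes x1 dead
    (None, "ret", ["x2"]),
]
print(eliminate_dead_code(prog))
# [('x2', 'const', [200]), (None, 'ret', ['x2'])]
```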

Constant propagation

Compilers can also optimize a program by replacing uses of a constant variable with the constant value itself. Let’s take a look at another blob of C:

As humans, we can see that y and z are trivially assigned and never modified[8]. For the compiler, however, this is a variant of the reaching definition problem from above: before it can replace y and z with 7 and 10 respectively, it needs to make sure that y and z are never assigned a different value.

Let’s do our SSA reduction:

This is virtually identical to our original form, but with one critical difference: the compiler can now see that every use of y and z refers to the original assignment. In other words, they’re all safe to replace!

So we’ve gotten rid of a few potential register operations, which is nice. But here’s the really critical part: we’ve set ourselves up for several other optimizations:

Now that we’ve propagated some of our constants, we can do some trivial constant folding: 7 + 10 becomes 17, and so forth.

In SSA form, it’s trivial to observe that only x and a_{1..4} can affect the program’s behavior. So we can apply our dead code elimination from above and delete y and z entirely!
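Here is a minimal sketch of propagation plus folding over straight-line SSA code. It uses a hypothetical `(dest, op, args)` encoding and handles only integer `add`/`sub`/`mul`. Because each name has a single definition, a forward pass can record constants as it goes without checking for later reassignments. Real passes (e.g. sparse conditional constant propagation) also handle φs and branches, which this sketch ignores.

```python
import operator

FOLDABLE = {"add": operator.add, "sub": operator.sub, "mul": operator.mul}


def propagate_and_fold(instrs):
    """One forward pass over straight-line SSA code.

    Uses of names known to hold constants are replaced by the constant, and
    any foldable operation whose operands are all constants becomes a
    "const" instruction holding the computed value.
    """
    consts = {}  # SSA name -> known constant value
    out = []
    for dest, op, args in instrs:
        # Propagation: safe because SSA names are never reassigned.
        args = [consts.get(a, a) if isinstance(a, str) else a for a in args]
        if op in FOLDABLE and all(isinstance(a, int) for a in args):
            # Folding: compute the result at compile time.
            args, op = [FOLDABLE[op](*args)], "const"
        if op == "const":
            consts[dest] = args[0]
        out.append((dest, op, args))
    return out


prog = [
    ("y", "const", [7]),
    ("z", "const", [10]),
    ("a1", "add", ["x", "y"]),   # x is unknown (e.g. a parameter)
    ("a2", "add", ["y", "z"]),
    ("a3", "mul", ["a2", "a1"]),
]
for instr in propagate_and_fold(prog):
    print(instr)
# ('y', 'const', [7])
# ('z', 'const', [10])
# ('a1', 'add', ['x', 7])
# ('a2', 'const', [17])
# ('a3', 'mul', [17, 'a1'])
```

After this pass, y, z and a2 have no remaining uses, so a dead code elimination pass could delete them.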

This is the real magic of an optimizing compiler: each individual optimization is simple and largely independent, but together they produce a virtuous cycle that can be repeated until gains diminish.

Register allocation

Register allocation (alternatively: register scheduling) is less of an optimization itself, and more of an unavoidable problem in compiler engineering: it’s fun to pretend to have access to an infinite number of addressable variables, but the compiler eventually insists that we boil our operations down to a small, fixed set of CPU registers.

The constraints and complexities of register allocation vary by architecture: x86 (prior to AMD64) is notoriously starved for registers[9] (only 8 full general purpose registers, of which 6 might be usable within a function’s scope[10]), while RISC architectures typically employ larger numbers of registers to compensate for the lack of register-memory operations.

Just as above, reductions to SSA form have both indirect and direct advantages for the register allocator:

Indirectly: Eliminating redundant loads and stores reduces the overall pressure on the register allocator, allowing it to avoid expensive spills (i.e., having to temporarily transfer a live register to main memory to accommodate another instruction).

Directly: Compilers have historically lowered φs into copies before register allocation, meaning that register allocators traditionally haven’t benefited from the SSA form itself[11]. There is, however, (semi-)recent research on direct application of SSA forms to both linear and coloring allocators[12][13].

A concrete example: modern JavaScript engines use JITs to accelerate program evaluation. These JITs frequently use linear register allocators for their acceptable tradeoff between register selection speed (linear, as the name suggests) and register scheduling quality. Converting out of SSA form is a time-consuming operation of its own, so linear allocation on the SSA representation itself is appealing in JITs and other contexts where compile time is part of execution time.
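To make "linear" concrete, here is a minimal sketch of classic linear-scan allocation in the style of Poletto and Sarkar. It walks live intervals sorted by start point. This is a swapped-in illustration, not the SSA-specific variant from Wimmer's paper. The intervals are given directly as hypothetical `{name: (start, end)}` data, and a spilled value is simply reported rather than rewritten into loads and stores.

```python
def linear_scan(intervals, num_regs):
    """Assign registers to live intervals {name: (start, end)}.

    Returns (assignment, spilled): assignment maps names to register
    numbers; spilled is the set of names that did not get a register.
    """
    assignment, spilled = {}, set()
    active = []                  # (end, name) pairs currently holding a register
    free = set(range(num_regs))  # register numbers not in use
    for name, (start, end) in sorted(intervals.items(), key=lambda kv: kv[1][0]):
        # Expire intervals that ended before this one starts.
        active.sort()
        while active and active[0][0] < start:
            _, old = active.pop(0)
            free.add(assignment[old])
        if free:
            reg = min(free)
            free.remove(reg)
            assignment[name] = reg
            active.append((end, name))
        else:
            # No register free: spill whichever interval ends last.
            active.sort()
            last_end, last = active[-1]
            if last_end > end:
                assignment[name] = assignment.pop(last)  # steal its register
                spilled.add(last)
                active[-1] = (end, name)
            else:
                spilled.add(name)
    return assignment, spilled


intervals = {"a": (0, 4), "b": (1, 2), "c": (3, 6), "d": (5, 7)}
print(linear_scan(intervals, 2))  # ({'a': 0, 'b': 1, 'c': 1, 'd': 0}, set())
```

Each interval is visited once in start order, which is where the speed comes from. The price is that allocation decisions are greedy and never revisited.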

There are many things about SSA that I didn’t cover in this post: dominance frontiers, tradeoffs between “pruned” and less optimal SSA forms, and feedback mechanisms between the SSA form of a program and the compiler’s decision to cease optimizing, among others. Each of these could be its own blog post, and maybe will be in the future!

[1] In the sense that each task is conceptually isolated and has well-defined inputs and outputs. Individual compilers have some flexibility with respect to whether they combine or further split the tasks.

[2] The distinction between an AST and an intermediate representation is hazy: Rust converts its AST to HIR early in the compilation process, and languages can be designed to have ASTs that are amenable to analyses that would otherwise be best done on an IR.

[3] This can be broken up into lexical validation (e.g., use of an undeclared identifier) and semantic validation (e.g., incorrect initialization of a type).

[4] This is what LLVM does: LLVM IR is lowered to MIR (not to be confused with Rust’s MIR), which is subsequently lowered to machine code.

[5] Not because they can’t: the SSA form of a program can be executed by evaluating φ with concrete control flow.

[6] We haven’t talked at all about minimal or pruned SSA forms, and I don’t plan on doing so in this post. The TL;DR: naïve SSA form generation can lead to lots of unnecessary φ nodes, impeding analyses. LLVM (and GCC, and probably anything else that uses SSA) will attempt to translate any initial SSA form into one with a minimally viable number of φs. For LLVM, this is tied directly to the rest of mem2reg.

[7] Including removing code that has undefined behavior in it, since “doesn’t run at all” is a valid consequence of invoking UB.

[8] And are also function scoped, meaning that another translation unit can’t address them.

[9] x86 makes up for this by not being a load-store architecture: many instructions can pay the price of a memory round-trip in exchange for saving a register.

[10] Assuming that %esp and %ebp are being used by the compiler to manage the function’s frame.

[11] LLVM, for example, lowers all φs as one of its very first preparations for register allocation. See this 2009 LLVM Developers’ Meeting talk.

[12] Wimmer 2010a: “Linear Scan Register Allocation on SSA Form” (PDF)

[13] Hack 2005: “Towards Register Allocation for Programs in SSA-form” (PDF)

Lesson 5: Global Analysis & SSA

- global analysis & optimization

- static single assignment

- SSA slides from Todd Mowry at CMU: another presentation of the pseudocode for various algorithms herein

- Revisiting Out-of-SSA Translation for Correctness, Code Quality, and Efficiency by Boissinot et al., on more sophisticated ways to translate out of SSA form

- tasks due October 7

Lots of definitions!

- Reminders: Successors & predecessors. Paths in CFGs.

- A dominates B iff all paths from the entry to B include A .

- The dominator tree is a convenient data structure for storing the dominance relationships in an entire function. The recursive children of a given node in a tree are the nodes that that node dominates.

- A strictly dominates B iff A dominates B and A ≠ B . (Dominance is reflexive, so "strict" dominance just takes that part away.)

- A immediately dominates B iff A strictly dominates B but A does not strictly dominate any other node that strictly dominates B. (In which case A is B's direct parent in the dominator tree.)

- A dominance frontier is the set of nodes that are just "one edge away" from being dominated by a given node. Put differently, A 's dominance frontier contains B iff A does not strictly dominate B , but A does dominate some predecessor of B .

- Post-dominance is the reverse of dominance. A post-dominates B iff all paths from B to the exit include A . (You can extend the strict version, the immediate version, trees, etc. to post-dominance.)
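Given immediate dominators, the frontier definition can be computed directly. The sketch below follows the formulation by Cooper, Harvey, and Kennedy ("A Simple, Fast Dominance Algorithm"). Only blocks with two or more predecessors can be in a frontier. For each such block B, we walk up the dominator tree from each predecessor until reaching B's immediate dominator. The CFG encoding and block names are hypothetical, and `idom` is supplied by hand here rather than computed.

```python
def dominance_frontiers(preds, idom):
    """Map each block to its dominance frontier.

    `preds` maps a block to its predecessor blocks; `idom` maps a block to
    its immediate dominator (None for the entry block).
    """
    df = {b: set() for b in preds}
    for b, ps in preds.items():
        if len(ps) < 2:
            continue  # only join points can appear in a frontier
        for p in ps:
            runner = p
            # Every block from p up to (not including) idom(b) dominates a
            # predecessor of b without strictly dominating b.
            while runner is not None and runner != idom[b]:
                df[runner].add(b)
                runner = idom[runner]
    return df


# A simple loop: entry -> header -> body -> header, and header -> exit.
preds = {"entry": [], "header": ["entry", "body"], "body": ["header"], "exit": ["header"]}
idom = {"entry": None, "header": "entry", "body": "header", "exit": "header"}
df = dominance_frontiers(preds, idom)
print({b: sorted(s) for b, s in df.items()})
# {'entry': [], 'header': ['header'], 'body': ['header'], 'exit': []}
```

Note that the loop header is in its own frontier: it dominates its back-edge predecessor but does not strictly dominate itself.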

An algorithm for finding dominators:

The dom relation will, in the end, map each block to its set of dominators. We initialize it as the "complete" relation, i.e., mapping every block to the set of all blocks. The loop pares down the sets by iterating to convergence.

The running time is O(n²) in the worst case. But there's a trick: if you iterate over the CFG in reverse post-order , and the CFG is well behaved (reducible), it runs in linear time—the outer loop runs a constant number of times.
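Here is a direct Python rendering of that iterate-to-convergence algorithm, as a sketch. Blocks are plain strings, `succs` is a hypothetical successor map, and the entry block is pinned to dominate only itself. The loop visits blocks in dictionary order. Visiting them in reverse post-order instead would give the faster convergence mentioned above.

```python
def find_dominators(succs, entry):
    """Return {block: set of blocks that dominate it}.

    `succs` maps every block to its list of successor blocks.
    """
    preds = {b: [] for b in succs}
    for b, targets in succs.items():
        for t in targets:
            preds[t].append(b)

    # Start from the "complete" relation: every block maps to all blocks.
    dom = {b: set(succs) for b in succs}
    dom[entry] = {entry}  # the entry is dominated only by itself

    changed = True
    while changed:  # iterate to convergence
        changed = False
        for b in succs:
            if b == entry:
                continue
            pred_doms = [dom[p] for p in preds[b]]
            # b's dominators: b itself plus whatever dominates all its preds.
            new = set.intersection(*pred_doms) if pred_doms else set()
            new |= {b}
            if new != dom[b]:
                dom[b] = new
                changed = True
    return dom


cfg = {"entry": ["left", "right"], "left": ["join"], "right": ["join"], "join": []}
dom = find_dominators(cfg, "entry")
print(sorted(dom["join"]))  # ['entry', 'join']
```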

Natural Loops

Some things about loops:

- Natural loops are strongly connected components in the CFG with a single entry.

- Natural loops are formed around backedges , which are edges from A to B where B dominates A .

- A natural loop is the smallest set of vertices L including A and B such that, for every v in L , either all the predecessors of v are in L or v = B .

- A language that only has for , while , if , break , continue , etc. can only generate reducible CFGs. You need goto or something to generate irreducible CFGs.
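Collecting the body of a natural loop from a back edge A → B is a backward walk: start at A and follow predecessor edges, stopping at the header B. Here is a minimal sketch. The CFG encoding and block names are hypothetical, and the caller is assumed to have already checked that B dominates A.

```python
def natural_loop(preds, a, b):
    """Blocks in the natural loop of back edge a -> b (b is the header).

    `preds` maps each block to its predecessors. Assumes b dominates a.
    """
    loop = {a, b}
    worklist = [a]
    while worklist:
        n = worklist.pop()
        if n == b:
            continue  # never walk above the header
        for p in preds[n]:
            if p not in loop:
                loop.add(p)
                worklist.append(p)
    return loop


# entry -> header -> body -> latch -> header (back edge), header -> exit
preds = {
    "entry": [],
    "header": ["entry", "latch"],
    "body": ["header"],
    "latch": ["body"],
    "exit": ["header"],
}
print(sorted(natural_loop(preds, "latch", "header")))  # ['body', 'header', 'latch']
```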

Loop-Invariant Code Motion (LICM)

And finally, loop-invariant code motion (LICM) is an optimization that works on natural loops. It moves code from inside a loop to before the loop, if the computation always does the same thing on every iteration of the loop.

A loop's preheader is its header's unique predecessor. LICM moves code to the preheader. But while natural loops need to have a unique header, the header does not necessarily have a unique predecessor. So it's often convenient to invent an empty preheader block that jumps directly to the header, and then move all the in-edges to the header to point there instead.

LICM needs two ingredients: identifying loop-invariant instructions in the loop body, and deciding when it's safe to move one from the body to the preheader.

To identify loop-invariant instructions:

(This determination requires that you already calculated reaching definitions! Presumably using data flow.)
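The marking step can be sketched as a fixpoint loop. The sketch assumes reaching definitions are already available as a hypothetical map `reaching[(instr_id, var)]`, giving the ids of the definitions of `var` that reach that instruction. Instruction ids and the encoding are illustrative. An instruction is marked when each of its arguments meets one of these conditions:

- it is a literal;
- all of its reaching definitions are outside the loop;
- it has exactly one reaching definition, and that definition is already marked.

```python
def find_loop_invariants(loop_instrs, reaching):
    """Return the ids of loop-invariant instructions.

    loop_instrs: list of (iid, dest, args) for instructions inside the loop.
    reaching[(iid, var)]: set of definition ids of `var` that reach iid,
    assumed precomputed by a reaching-definitions analysis. Definition ids
    not in `loop_instrs` are treated as lying outside the loop.
    """
    in_loop = {iid for iid, _dest, _args in loop_instrs}
    invariant = set()

    def arg_is_invariant(iid, arg):
        if not isinstance(arg, str):
            return True  # literal constant
        defs = reaching.get((iid, arg), set())
        if not defs & in_loop:
            return True  # every reaching definition is outside the loop
        # Otherwise: exactly one reaching definition, already marked.
        return len(defs) == 1 and defs <= invariant

    changed = True
    while changed:  # iterate to convergence
        changed = False
        for iid, _dest, args in loop_instrs:
            if iid not in invariant and all(arg_is_invariant(iid, a) for a in args):
                invariant.add(iid)
                changed = True
    return invariant


loop_instrs = [
    ("i1", "t", ["a", "b"]),  # t = a * b, with a and b defined before the loop
    ("i2", "u", ["t", 1]),    # u = t + 1
    ("i3", "i", ["i", 1]),    # i = i + 1
]
reaching = {
    ("i1", "a"): {"d_a"}, ("i1", "b"): {"d_b"},
    ("i2", "t"): {"i1"},
    ("i3", "i"): {"d_i0", "i3"},  # reached by the initial value and by itself
}
print(sorted(find_loop_invariants(loop_instrs, reaching)))  # ['i1', 'i2']
```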

It's safe to move a loop-invariant instruction to the preheader iff:

- The definition dominates all of its uses, and

- No other definitions of the same variable exist in the loop, and

- The instruction dominates all loop exits.

The last criterion is somewhat tricky: it ensures that the computation would have been computed eventually anyway, so it's safe to just do it earlier. But it's not true of loops that may execute zero times, which, when you think about it, rules out most for loops! It's possible to relax this condition if:

- The assigned-to variable is dead after the loop, and

- The instruction can't have side effects, including exceptions—generally ruling out division because it might divide by zero. (A thing that you generally need to be careful of in such speculative optimizations that do computations that might not actually be necessary.)

Static Single Assignment (SSA)

You have undoubtedly noticed by now that many of the annoying problems in implementing analyses & optimizations stem from variable name conflicts. Wouldn't it be nice if every assignment in a program used a unique variable name? Of course, people don't write programs that way, so we're out of luck. Right?

Wrong! Many compilers convert programs into static single assignment (SSA) form, which does exactly what it says: it ensures that, globally, every variable has exactly one static assignment location. (Of course, that statement might be executed multiple times, which is why it's not dynamic single assignment.) In Bril terms, we convert a program like this:

Into a program like this, by renaming all the variables:

Of course, things will get a little more complicated when there is control flow. And because real machines are not SSA, using separate variables (i.e., memory locations and registers) for everything is bound to be inefficient. The idea in SSA is to convert general programs into SSA form, do all our optimization there, and then convert back to a standard mutating form before we generate backend code.

Just renaming assignments willy-nilly will quickly run into problems. Consider this program:

If we start renaming all the occurrences of a , everything goes fine until we try to write that last print a . Which "version" of a should it use?

To match the expressiveness of unrestricted programs, SSA adds a new kind of instruction: a ϕ-node . ϕ-nodes are flow-sensitive copy instructions: they get a value from one of several variables, depending on which incoming CFG edge was most recently taken to get to them.

In Bril, a ϕ-node appears as a phi instruction:

The phi instruction chooses between any number of variables, and it picks between them based on labels. If the program most recently executed a basic block with the given label, then the phi instruction takes its value from the corresponding variable.

You can write the above program in SSA like this:

It can also be useful to see how ϕ-nodes crop up in loops.
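To see the label-based selection in action, including in a loop, here is a tiny interpreter sketch. The tuple encoding, opcodes and block labels are hypothetical stand-ins for Bril's JSON form; this is not the reference interpreter. One detail is worth noting: all φs at the top of a block read their inputs at the same time. Each φ picks its input using the label of the block control just came from.

```python
def run(blocks, entry, env=None):
    """Interpret a tiny SSA control-flow graph and return the value of `ret`.

    `blocks` maps a label to a list of instruction tuples:
      ("const", dest, value)       ("add", dest, a, b)
      ("lt", dest, a, b)           ("phi", dest, [(label, var), ...])
      ("jmp", target)              ("br", cond, if_true, if_false)
      ("ret", var)
    Every block must end in jmp, br, or ret.
    """
    env = dict(env or {})
    prev, label = None, entry
    while True:
        instrs = blocks[label]
        # phis read simultaneously, choosing by the predecessor we came from.
        updates = {i[1]: env[dict(i[2])[prev]] for i in instrs if i[0] == "phi"}
        env.update(updates)
        for op, *rest in instrs:
            if op == "const":
                env[rest[0]] = rest[1]
            elif op == "add":
                env[rest[0]] = env[rest[1]] + env[rest[2]]
            elif op == "lt":
                env[rest[0]] = env[rest[1]] < env[rest[2]]
            elif op == "jmp":
                prev, label = label, rest[0]
                break
            elif op == "br":
                prev, label = label, (rest[1] if env[rest[0]] else rest[2])
                break
            elif op == "ret":
                return env[rest[0]]


# Sums 0 + 1 + ... + (n - 1); i and s each need a phi at the loop header.
loop = {
    "entry": [("const", "i.0", 0), ("const", "s.0", 0), ("const", "one", 1),
              ("jmp", "header")],
    "header": [("phi", "i.1", [("entry", "i.0"), ("body", "i.2")]),
               ("phi", "s.1", [("entry", "s.0"), ("body", "s.2")]),
               ("lt", "c", "i.1", "n"),
               ("br", "c", "body", "exit")],
    "body": [("add", "s.2", "s.1", "i.1"),
             ("add", "i.2", "i.1", "one"),
             ("jmp", "header")],
    "exit": [("ret", "s.1")],
}
print(run(loop, "entry", {"n": 4}))  # 6
```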

(An aside: some recent SSA-form IRs, such as MLIR and Swift's IR , use an alternative to ϕ-nodes called basic block arguments . Instead of making ϕ-nodes look like weird instructions, these IRs bake the need for ϕ-like conditional copies into the structure of the CFG. Basic blocks have named parameters, and whenever you jump to a block, you must provide arguments for those parameters. With ϕ-nodes, a basic block enumerates all the possible sources for a given variable, one for each in-edge in the CFG; with basic block arguments, the sources are distributed to the "other end" of the CFG edge. Basic block arguments are a nice alternative for "SSA-native" IRs because they avoid messy problems that arise when needing to treat ϕ-nodes differently from every other kind of instruction.)

Bril in SSA

Bril has an SSA extension . It adds support for a phi instruction. Beyond that, SSA form is just a restriction on the normal expressiveness of Bril—if you solemnly promise never to assign statically to the same variable twice, you are writing "SSA Bril."

The reference interpreter has built-in support for phi , so you can execute your SSA-form Bril programs without fuss.

The SSA Philosophy

In addition to a language form, SSA is also a philosophy! It can fundamentally change the way you think about programs. In the SSA philosophy:

- definitions == variables

- instructions == values

- arguments == data flow graph edges

In LLVM, for example, instructions do not refer to argument variables by name: an argument is a pointer to the defining instruction.

Converting to SSA

To convert to SSA, we want to insert ϕ-nodes whenever there are distinct paths containing distinct definitions of a variable. We don't need ϕ-nodes in places that are dominated by a definition of the variable. So what's a way to know when control reachable from a definition is not dominated by that definition? The dominance frontier!

We do it in two steps. First, insert ϕ-nodes:

Then, rename variables:

Converting from SSA

Eventually, we need to convert out of SSA form to generate efficient code for real machines that don't have phi-nodes and do have finite space for variable storage.

The basic algorithm is pretty straightforward. If you have a ϕ-node:

Then there must be assignments to x and y (recursively) preceding this statement in the CFG. The paths from x to the phi-containing block and from y to the same block must "converge" at that block. So insert code into the phi-containing block's immediate predecessors along each of those two paths: one that does v = id x and one that does v = id y. Then you can delete the phi instruction.
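Here is a sketch of that basic approach on a hypothetical tuple encoding in which a φ is `("phi", dest, [(pred_label, var), ...])`. Each φ becomes an `id` copy placed just before the terminator of each predecessor block. Like the approach described above, it is naive. It does not handle the cases (such as critical edges, or φs that read each other's results) that Boissinot et al. address.

```python
TERMINATORS = {"jmp", "br", "ret"}


def remove_phis(blocks):
    """Replace every phi with copies in its predecessor blocks (in place).

    `blocks` maps a label to a list of instruction tuples whose first element
    is the opcode; a phi is ("phi", dest, [(pred_label, var), ...]).
    """
    for label in list(blocks):
        phis = [i for i in blocks[label] if i[0] == "phi"]
        for _op, dest, choices in phis:
            for pred, var in choices:
                body = blocks[pred]
                # Insert `dest = id var` just before the predecessor's terminator.
                at = len(body) - 1 if body and body[-1][0] in TERMINATORS else len(body)
                body.insert(at, ("id", dest, var))
        blocks[label] = [i for i in blocks[label] if i[0] != "phi"]
    return blocks


cfg = {
    "entry": [("const", "a.0", 4), ("br", "cond", "left", "right")],
    "left": [("add", "a.1", "a.0", "a.0"), ("jmp", "exit")],
    "right": [("mul", "a.2", "a.0", "a.0"), ("jmp", "exit")],
    "exit": [("phi", "a.3", [("left", "a.1"), ("right", "a.2")]), ("print", "a.3")],
}
remove_phis(cfg)
print(cfg["left"])  # [('add', 'a.1', 'a.0', 'a.0'), ('id', 'a.3', 'a.1'), ('jmp', 'exit')]
print(cfg["exit"])  # [('print', 'a.3')]
```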

This basic approach can introduce some redundant copying. (Take a look at the code it generates after you implement it!) Non-SSA copy propagation optimization can work well as a post-processing step. For a more extensive take on how to translate out of SSA efficiently, see “Revisiting Out-of-SSA Translation for Correctness, Code Quality, and Efficiency” by Boissinot et al.

- Find dominators for a function.

- Construct the dominator tree.

- Compute the dominance frontier.

- One thing to watch out for: a tricky part of the translation from the pseudocode to the real world is dealing with variables that are undefined along some paths.

- You will want to make sure the output of your "to SSA" pass is actually in SSA form. There's a really simple is_ssa.py script that can check that for you.

- You'll also want to make sure that programs do the same thing when converted to SSA form and back again. Fortunately, brili supports the phi instruction, so you can interpret your SSA-form programs if you want to check the midpoint of that round trip.

- For bonus "points," implement global value numbering for SSA-form Bril code.

Static Dress return with "crying"

Static Dress have returned for their first taste of new music in two years in the form of a brand new single, "crying".

“The video for ‘crying’ explores several themes of the song’s lyrical character, revolving around taboo topics presented as a battle between the sacred and the profane. Rather than focusing solely on striking presentation and the visual stimulation of colour and design, we ask the viewer to travel through multiple scenes, all with their own subject matter; guilt and battling with self-loathing, mass corporate manipulation, capitalist horde mentality and weaponised lust," Olli Appleyard says of the new single. "We hope to provoke further questions and conversation with this piece, aiming to push the boundaries of our own storytelling and visual presentation in a way that exhibits the growth we’ve experienced in recent years."

Following the release of their 2022 debut album, Rouge Carpet Disaster, the band signed to Roadrunner Records and unveiled a 2023 release of the album’s Redux version: a fully fledged reinterpretation of the project that saw each track exist within parallel universes while featuring contributions from the likes of Loathe, Creeper, and World Of Pleasure.

"crying" is out now via Roadrunner Records.


- App Building

- Be Release Ready – Summer ’24

- Integration

- Salesforce Well-Architected ↗

- See all products ↗

- Career Resources

- Essential Habits

- Salesforce Admin Skills Kit

- Salesforce Admin Enablement Kit

Home » Article » Summer ’24 Feature Deep Dive: Create Richer Screen Flows with Action Buttons (Beta) | Be Release Ready

- Summer ’24 Feature Deep Dive: Create Richer Screen Flows with Action Buttons (Beta) | Be Release Ready

Summer ’24 is just around the corner! Discover Action Buttons, one of the new screen flow capabilities I’m really excited about, and check out Be Release Ready for additional resources to help you prepare for Summer ’24.

Screen Actions are a screen flow game changer

One of the most important new screen flow capabilities is now here: Action Buttons! You can now add an Action Button component to a screen to run what we call Screen Actions, which can retrieve or crunch data and then make that data available on the same screen for other components to use. This unlocks the true power of reactivity, letting you create truly dynamic single-page applications.

Let’s take a deep dive into one of my favorite features ever to come to screen flows (though I am admittedly biased!).

Use Screen Actions to keep users on the same screen

When would you use a Screen Action? If you want to keep users on a single screen, Action Buttons are your ticket.

Let’s take a classic use case where you select an account, generate a list of contacts based on that account (or accounts), and then get more information about a selected contact. You can do all of that with an Action Button.

In the example below, we have a single flow serving as an underlying data broker for the screen. Each of the Action Buttons on the screen calls on the same autolaunched flow for the data that it needs, powering any components that need it. For you developers out there, think of this as your Apex controller, except you don’t need to write any code.

Set up an Action Button

Setting up an Action Button is straightforward, especially for those of you who have created a subflow before.

Let’s dive in. The diagram below shows, at a high level, the process we follow.

1. Plan your screen and Screen Action(s)

Think about what you want your screen to do and how your Screen Action will help you create that bigger, cooler, amazing dynamic screen. What inputs do you send to your Screen Action from the screen? Are there any existing autolaunched flows you could use?

For example, imagine you want to do a few things on your screen. You want a user to select one or multiple contacts from a lookup and get contact roles for the opportunity as well as some basic information about that opportunity. In that same screen, perhaps in a separate Action Button, you also want to see the account’s other opportunities in a Data Table component.

Here’s what your autolaunched flow would need to receive from or send to the parent screen flow.

- A list of account IDs: This will be an accountIds text collection value that we get from a Lookup component. We will use it to retrieve the entire set of opportunities and contacts for a given list of accounts.

- Contact records

- Opportunity record

2. Create or reuse your action (autolaunched) flow

This process should be familiar to anybody who’s made a subflow—in fact, it is the same!

- Perform the actions you want in your autolaunched flow, like a Get Records, a Loop, or an Invocable Action.

- Create variables for any data that you want passed into the autolaunched flow by marking them as ‘Available for Input’. This ensures you can pass data from your screen components into your autolaunched flow.

- Next, create variables for the data you want to come back into the screen flow and mark them as ‘Available for Output’. Below is an example, but take note of the naming here. I like to start the names of variables with ‘output’ or ‘input’ to make things cleaner.

- In an Assignment element, assign the results you want returned to your screen flow to the resources you’ve marked as ‘Available for Output’. These results might come from elements such as Get Records, Apex Actions, a Transform, or a Collection Filter.

- Save, test, and activate your autolaunched flow. If you can debug/test it by itself, I’d recommend doing that first. The autolaunched flow must be active to appear in the Action Button’s flow selector.

3. Set up your Action Button

- Select the Action Button component from the component palette, provide an API Name and Label for the Action Button, and select the autolaunched flow we just saved, tested, and activated.

- From here on, the experience is identical to setting up a subflow! Map the screen components to your autolaunched flow’s input variables and check out the fancy new ‘View Output Resources’ pane, which lists all of the Screen Action’s outputs.

4. Use the Screen Action results anywhere on the screen

- Now that you’ve set up the Action Button, you can reference the results by finding the API Name of the Screen Action triggered by the button and drilling into ‘Results’, then finding the output you want from the flow that ran.

- In the below example, I set up a Data Table component with contact records that were the output results from the Action Button.

Note: In some cases, such as when you use a collection choice set as a choice option, the action results may not appear in the resource picker until you first save your Screen element by clicking Done in the screen editor.

Run the screen flow and watch the magic happen! Combine it with conditional visibility and our new Read Only/Disabled input states and you’ve got some grade-A screen flow goodness.

More screen action examples with reactive choice options

We’ve also made the underlying infrastructure of choice options more dynamic with reactive collection choice sets. Now, when the underlying collection of a collection choice set changes, the options change automatically as well! This opens up a ton of brand-new use cases that were previously possible only with code, and they pair wonderfully with Screen Actions.

Dependent picklists as records

Use cases for screen Action Buttons extend well beyond the basic data table example above. As part of Summer ’24, collection choice sets are now reactive, which means you can also dynamically generate choice options within things like radio buttons and choice lookup! This means you’ll be able to create what we call ‘DIY dependent picklists’ that are based on record data.

Imagine you have a university application where you select a major (stored as records) and concentrations (stored as records that are related to Majors). With screen actions and reactive record choice sets, you can now keep users on the same screen when selecting both a major and a concentration.

Filtering the records in a lookup

For years, the community has asked for better record filtering with the screen Lookup component. Unfortunately, we won’t be directly delivering that, but I think we are inching ever closer with the Choice Lookup component as a viable alternative. In some cases, this may be an even better solution. As a refresher, the Choice Lookup component lets you provide a collection of records as your source data and have users select a record in a similar experience as the Lookup component. Before Summer ’24, that list of available records was static upon entering the screen. With reactive choice options and screen actions, you can now generate lookup options specific to the actions taken by the user on the same screen.

Think about that for a moment. What’s powerful here is that you can go well beyond basic formulas that exist in a lookup filter. You can perform callouts, traverse deep into relationships, or retrieve unrelated records and use those records as the source data for your Choice Lookup component. Need to generate a list of contacts with high-priority cases based on an account selected in the same screen? Go for it!

Creating scalable Screen Actions

I’d like to take a moment to generalize how I think people should approach these screen actions. Think about what reusable jobs you want your action flows, or subflows, to perform. I suggest creating a set of ‘utility flows’ that perform frequent operations. Some examples of reusable flows:

- Takes a recordId, looks at it, determines the right object, and returns the entire record and any key related records, such as an account record and all of its contacts, opportunities, or cases

- Takes the selections from a multi-select checkbox (a semicolon-delimited text variable), parses them, and converts the selections to records

On top of reusing autolaunched flows, you may also want to consider scenarios where a single autolaunched flow handles all of the work for a single screen in your flow (or all of your screens depending on how complex it is).

Take, for example, this scenario where there are two Action Buttons on a screen: one button to retrieve the contacts for a set of selected accounts with specific criteria, and another to get the contact record and its high-priority open cases.

Because of the extra filtering requirements, it might not make sense to reuse your utility flows. You could build a separate flow for each button, but that might be hard to manage. Instead, create a single flow that both buttons can launch.

In the below flow, we use one autolaunched flow to get contacts and contact cases, and retrieve the entire contact record that we can use throughout the screen.

Notice that we use the same autolaunched flow for two different buttons on the same screen; the only difference between them is the inputs passed into the action. This keeps things nice and tidy and keeps maintenance of the autolaunched flow to a minimum. While the naming here isn’t great, think about a naming convention that lets you easily tie the autolaunched flow to the screen flow. If your screen flow is called ‘Account/Contact Handle Flow’, consider naming your autolaunched flow something like ‘Account/Contact Handle Flow – Action – Get Account and Contact Info’ so they appear together when ordered alphabetically in your flow list view.

Build your flows differently when using Screen Actions in Experience Cloud sites

Here are some quick tips to make sure your Screen Actions are secure. In many ways, Screen Actions are a lot like subflows, but there are some differences in how they run. This is particularly important if you’re building a flow intended for unauthenticated users, or guest users, in Experience Cloud sites.

Flows are executed differently when they’re run by a Subflow element versus a Screen Action. A Screen Action is called from the user’s browser, which then runs it on the server. In contrast, subflows run entirely on Salesforce’s servers. This key difference means that you need to be mindful of what goes in and out of your Screen Actions when exposing your flow to external audiences. Knowing this, let’s look at some recommendations.

- Don’t enable system context for your Screen Actions: In general, it is poor practice to use system context-enabled screen flows in Experience Cloud sites. Instead, use subflows elevated in system context that perform the operations you need between screens.

- Identify specific fields when using the Get Records element: In order to keep your data secure, we recommend retrieving only the specific fields you need in a Get Records element and avoiding the default ‘Automatically store all fields’ setting. This recommendation is important for unauthenticated experiences, but is also relevant to all implementations of Screen Actions. See the next section for details.

- Be selective with your inputs and outputs: Screen Actions should only be used to power components on the same screen—we don’t recommend referencing a Screen Action output outside of the screen where it is used. Only return records and fields that will be used on the screen—nothing more.

- Perform Create / Update / Delete operations outside of the screen: For better error handling and data security, we do not recommend performing anything other than queries and complex data calculations in your Screen Actions.

Improve performance by manually identifying which fields to store in the Get Records element

When you output a collection of records in a Screen Action, we recommend that you avoid using the ‘Automatically store all fields’ option in the Get Records element to prevent performance degradation and/or errors related to governor limits.

This handy setting was originally added to prevent Flow from hitting errors if you tried to reference a field that you didn’t include in your Get Records. While the Flow engine does its best to obtain any fields referenced downstream in your flow, in many scenarios it is impossible to know which fields are needed.

When passing the results of a Get Records that has the ‘Automatically store all fields’ setting selected into a Lightning Web Component, like Data Table, everything the running user has access to will be pulled into your browser behind the scenes. This has big performance implications.

Think about what happens when you have an object’s worth of data stored in the browser multiple times. That is a ton of information being passed around. If, for example, you have 200 data table rows selected, any time a row selection happens, the flow runtime makes that data available elsewhere in your flow to use. The more fields you have, the more data is passed, which introduces potential for performance degradation.

On top of browser performance limitations, there’s also a hard framework limit that prevents a single screen from being larger than 1 MB at the time of writing this article (Summer ’24). While this is difficult to calculate and track, multiple data tables loaded with records and fields can potentially trigger this limit. For the curious folks out there, you can see this limit in action by embedding a Data Table component of ContentVersion (Files) records and including the FileData field in the source data. When you select a record and navigate, you’ll hit that size limit.

Key differences between Screen Actions and Subflows

We’re beefing up our Help docs with some of this information, but keep in mind that there are key differences in how these Screen Actions behave from a normal subflow.

- User / system context: Screen Actions do not inherit the context of the parent flow. If your screen flow is running in system context, your action flow will not inherit system context. System context must be explicitly turned on for any Screen Action.

- Limiting flow access: If you’ve limited access to run a flow, as is required to run a flow for a guest user, you will also need to grant access to any flow run by a Screen Action within that flow. Subflows, conversely, inherit access from the calling flow and do not need special access.

- Debugging and flow versions: When debugging a screen flow, you can optionally run the latest version of any subflow. Screen Actions always run the active version.

Tips and tricks

I’d like to share some tips and tricks that all use the ‘IsSuccess’ output from every screen action that runs from a button press. You can do some pretty snazzy things with it.

Validating components based on the results of a screen action

Imagine you had some fancy Apex validating an address or an email address in your action flow. You could validate that a user typed in a valid entry by outputting a simple boolean/checkbox flag to your flow, then check that it is true in your Input Validation criteria.

Then, mark your Email input as required, and you’re done!

By marking a component required that uses the action’s results and including the ‘isSuccess’ flag in the component’s input validation criteria, you can ensure a user will need to click the button before proceeding to the next screen.

Disabling or hiding components until an action runs

We recently added Disabled and Read Only inputs to many of our components! If you map any of these new Disabled or Read Only inputs to the ‘isSuccess’ output of a screen Action Button, you can disable an input when an action runs to prevent people from overwriting any of its values.

On that same note, you can conditionally hide components that use the action’s data until the action runs, so users don’t see empty components.

Connect with the community

We want to hear from you! We’ve created the Screen Flow Action Button Beta Trailblazer Community Group for you to describe your cool use cases, ask questions, and share feedback with the Automation product team.

Summer ’24 resources

Check out our Reactive Components Resources trailmix to learn more about reactivity through articles, blog posts, and videos from all over the Flow community.

If you want to learn more about securely sharing your Experience Cloud Sites with Guest Users, check out this help article.

Throughout Summer ’24, we’ll publish blogs and videos designed to equip you with the knowledge to fully leverage the upcoming release. Make sure to bookmark Be Release Ready and visit often to discover the latest resources for Salesforce Admins.

Adam is a Flow Product Manager and former Solution Architect at Salesforce. Adam is passionate about Flow and actively contributes to and helps manage the UnofficialSF.com community as well as being heavily involved in the wider Flow community through the Trailblazer Community, Salesforce Exchange Discord Server, and Ohana Slack server.

In compiler design, static single assignment form (often abbreviated as SSA form or simply SSA) is a type of intermediate representation (IR) where each variable is assigned exactly once. SSA is used in most high-quality optimizing compilers for imperative languages, including LLVM, the GNU Compiler Collection, and many commercial compilers. There are efficient algorithms for converting ...

SSA form. Static single-assignment form arranges for every value computed by a program to have a unique assignment (a.k.a. "definition"). A procedure is in SSA form if every variable has (statically) exactly one definition. SSA form simplifies several important optimizations, including various forms of redundancy elimination.

Static Single Assignment Form (and dominators, post-dominators, dominance frontiers…), CS252r, Spring 2011: ... Identify (natural) loops in the CFG: all nodes X dominated by the entry node H, where X can reach H, and there is exactly one back edge (head dominates tail) in the loop.
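Once a back edge tail → header has been found (one whose head dominates its tail), the loop body can be collected with a backward walk. This is a minimal sketch, assuming the CFG is given as a dict mapping each node to its predecessors and that the caller has already verified the edge is a back edge.

```python
def natural_loop(preds, header, tail):
    """Return the natural loop of the back edge tail -> header.

    `preds` maps each CFG node to a list of its predecessors. The loop is
    the header plus every node that can reach `tail` without passing
    through `header`; we find them by walking predecessor edges backwards
    from the tail and stopping at the header.
    """
    body = {header}
    stack = [tail]
    while stack:
        node = stack.pop()
        if node not in body:
            body.add(node)
            stack.extend(preds.get(node, []))
    return body


# entry -> H, H -> B, B -> H (back edge, H dominates B), H -> exit
preds = {"H": ["entry", "B"], "B": ["H"], "exit": ["H"]}
print(sorted(natural_loop(preds, "H", "B")))  # ['B', 'H']
```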

Static Single Assignment Form, Section 2: Basic Blocks. A basic block is a sequence of instructions with one entry point and one exit point. In particular, from nowhere in the program do we jump into the middle of the ... Now we note that there are two ways to reach the label loop: when we first enter the loop, or from the end of the loop body. This ...

Static Single Assignment Form, Section 3: Loops. To appreciate the difficulty and solution of how to handle more complex programs, we consider the example of the exponential function, where pow(b, e) = b^e for e ≥ 0: int pow(int b, int e) //@requires e >= 0; { int r = 1; while (e > 0) //@loop_invariant e >= 0; ...
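The loop needs φ-functions at its header because `r` and `e` each reach it both from the entry and from the end of the loop body. The sketch below assumes the usual body `r = r * b; e = e - 1` (the snippet is truncated), and the SSA names (`r0`, `r1`, `e1`, ...) are illustrative. Python has no φ, so each φ is simulated by a parallel assignment on the edge that enters the header.

```python
def pow_ssa(b0, e0):
    """pow(b, e) = b**e for e >= 0, written in SSA style.

    SSA form of the loop (one definition per name):
        r0 = 1
      loop:
        r1 = phi(r0 from entry, r2 from body)
        e1 = phi(e0 from entry, e2 from body)
        if e1 > 0 goto body else exit
      body:
        r2 = r1 * b0
        e2 = e1 - 1
        goto loop
      exit:
        return r1
    """
    assert e0 >= 0                 # mirrors //@requires e >= 0
    r0 = 1
    r1, e1 = r0, e0                # phi inputs along the entry edge
    while e1 > 0:
        r2 = r1 * b0
        e2 = e1 - 1
        r1, e1 = r2, e2            # phi inputs along the back edge
    return r1


print(pow_ssa(2, 10))  # 1024
```

Each name is still written in only one place in the text; rebinding `r1` and `e1` stands in for the φ selecting a different incoming value on each trip around the loop.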

Static Single Assignment (SSA): CFGs but with immutable variables; plus a slight "hack" to make graphs work out; now widely used (e.g., LLVM); intra-procedural representation only; an SSA representation for the whole program is possible (i.e., each global variable and memory location has static single ...

In Static Single Assignment (SSA) form, each assignment to a variable v is changed into a unique assignment to a new variable vi. If variable v has n assignments to it ... Loop: here, let M = Z = N. M → Z and (N dom M), but ¬(N sdom Z), so a φ-function is needed in Z. DF(N) = ...

Many compilers convert programs into static single assignment (SSA) form, which does exactly what it says: it ensures that, globally, every variable has exactly one static assignment location. (Of course, that statement might be executed multiple times, which is why it's not dynamic single assignment.) In Bril terms, we convert a program like ...

This type of representation is known as SSA (static single assignment): each variable has exactly one definition (M. Lam, CS243). Example: X1 = 2 on one path and X2 = 3 on the other; at the join, X3 = Φ(X1, X2), and then Y1 = X3 + 2. ... opportunities in loops ... Braun, M. et al. (2013). Simple and Efficient Construction of Static Single Assignment Form.

Static Single Assignment (SSA): static single assignment is an IR where every variable is assigned a value at most once in the program text. It is easy for a basic block (reminiscent of value numbering): visit each instruction in program order; for the LHS, assign to a fresh version of the variable; for the RHS, use the most recent version of each variable.
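The basic-block renaming rule above fits in a short loop. This is a minimal sketch over a hypothetical three-address IR of `(dest, op, args)` tuples, where ints are literals. It handles one block only; names used before any definition in the block are left unrenamed, and joins (which need φ-functions) are out of scope.

```python
def rename_block(instrs):
    """Rename a straight-line block into SSA form (x -> x1, x2, ...)."""
    versions = {}  # variable -> number of its most recent version
    renamed = []
    for dest, op, args in instrs:
        # RHS first: each use refers to the most recent version of its
        # variable (ints are literals; undefined names are left as-is).
        new_args = [
            f"{a}{versions[a]}" if isinstance(a, str) and a in versions else a
            for a in args
        ]
        # LHS: every assignment gets a fresh version.
        versions[dest] = versions.get(dest, 0) + 1
        renamed.append((f"{dest}{versions[dest]}", op, new_args))
    return renamed


block = [("x", "const", [1]), ("y", "add", ["x", 2]), ("x", "mul", ["x", "y"])]
for instr in rename_block(block):
    print(instr)
# ('x1', 'const', [1])
# ('y1', 'add', ['x1', 2])
# ('x2', 'mul', ['x1', 'y1'])
```

Renaming the operands before the destination is what makes `x = x * y` read the old `x1` and define the new `x2`.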

Static Single Assignment: induction variables (standard vs. SSA); loop-invariant code motion with SSA (CS 380C, Lecture 7). Cytron et al. dominance frontier algorithm: let $\mathrm{SUCC}(S) = \bigcup_{s \in S} \mathrm{SUCC}(s)$ and $\mathrm{DOM!}^{-1}(v) = \mathrm{DOM}^{-1}(v) - \{v\}$; then ...
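The snippet refers to Cytron et al.'s formulation. As a swapped-in alternative that is shorter to write, the sketch below computes dominator sets iteratively and then derives dominance frontiers with the predecessor "runner" walk described by Cooper, Harvey, and Kennedy. Assumptions: the CFG is a dict of successor lists, every node is reachable, and the entry node has no predecessors.

```python
def dominators(succs, entry):
    """Iterative dominator sets: dom(n) = {n} | intersection of dom(p) over preds p."""
    nodes = set(succs) | {s for ss in succs.values() for s in ss}
    preds = {n: [] for n in nodes}
    for n, ss in succs.items():
        for s in ss:
            preds[s].append(n)
    dom = {n: set(nodes) for n in nodes}
    dom[entry] = {entry}
    changed = True
    while changed:
        changed = False
        for n in nodes - {entry}:
            incoming = [dom[p] for p in preds[n]]
            new = {n} | (set.intersection(*incoming) if incoming else set())
            if new != dom[n]:
                dom[n] = new
                changed = True
    return dom, preds


def dominance_frontiers(succs, entry):
    """DF(n) for every node, via the runner walk over immediate dominators."""
    dom, preds = dominators(succs, entry)
    # Dominators form a chain, so the immediate dominator is the strict
    # dominator that itself has the most dominators.
    idom = {n: max(dom[n] - {n}, key=lambda d: len(dom[d]))
            for n in dom if n != entry}
    df = {n: set() for n in dom}
    for n in dom:
        if len(preds[n]) < 2:      # only join points can be in a frontier
            continue
        for p in preds[n]:
            runner = p
            while runner != idom[n]:
                df[runner].add(n)
                runner = idom[runner]
    return df


diamond = {"A": ["B", "C"], "B": ["D"], "C": ["D"], "D": []}
print(dominance_frontiers(diamond, "A"))
# DF(B) = DF(C) = {'D'}; DF(A) and DF(D) are empty
```

A loop header ends up in its own frontier: for `A -> B -> C -> B`, both DF(B) and DF(C) contain `B`.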


With thanks to Niki Carroll, winny, and kurufu for their invaluable proofreading and advice. Preword: By popular demand, I'm doing another LLVM post. This time, it's single static assignment (or SSA) form, a common feature in the intermediate representations of optimizing compilers. Like the last one, SSA is a topic in compiler and IR design that I mostly understand but could benefit from ...

Static single-assignment "Slight lie": SSA is useful for much more than register allocation! In fact, the main advantage of SSA form is that, by representing data dependencies as precisely as possible, it makes many optimising transformations simpler and more effective, e.g. constant propagation, loop-invariant code motion, partial-redundancy
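Constant propagation is one of the transformations that SSA makes simpler: because each name has one definition, "is this name constant?" has a single answer. The sketch below is a simple pessimistic propagation over a hypothetical `(dest, op, args)` IR in which φ arguments are plain names. It is not Wegman and Zadeck's sparse conditional constant propagation: a loop φ whose back-edge value depends on the φ itself is never marked constant here, and branch conditions are ignored.

```python
import operator

# Hypothetical three-address IR: (dest, op, args); ints are literals.
BINOPS = {"add": operator.add, "sub": operator.sub, "mul": operator.mul}


def propagate_constants(instrs):
    """Return {ssa_name: constant} for every name found to be constant."""
    consts = {}

    def value(arg):
        return arg if isinstance(arg, int) else consts.get(arg)

    # Repeat passes until nothing new is learned, so an instruction that
    # appears before its operands' definitions is picked up later.
    changed = True
    while changed:
        changed = False
        for dest, op, args in instrs:
            if dest in consts:
                continue
            vals = [value(a) for a in args]
            if any(v is None for v in vals):
                continue  # some operand is not (yet) known to be constant
            if op == "const":
                result = vals[0]
            elif op == "phi" and len(set(vals)) == 1:
                result = vals[0]  # every incoming value is the same constant
            elif op in BINOPS:
                result = BINOPS[op](*vals)
            else:
                continue
            consts[dest] = result
            changed = True
    return consts


program = [
    ("t1", "mul", [2, 7]),
    ("v1", "add", ["t1", 13]),     # v = 2*7 + 13
    ("x1", "const", [4]),
    ("x2", "const", [4]),
    ("x3", "phi", ["x1", "x2"]),   # both paths deliver 4
    ("y1", "add", ["x3", "w"]),    # w is an unknown input
]
print(propagate_constants(program))
# {'t1': 14, 'v1': 27, 'x1': 4, 'x2': 4, 'x3': 4}
```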

single unique definition point. But this seems impossible for most programs—or is it? In Static Single Assignment (SSA) Form each assignment to a variable, v, is changed into a unique assignment to new variable, vi. If variable v has n assignments to it throughout the program, then (at least) n new variables, v1 to vn, are created to replace ...

In this way the compiler can hop quickly from use to definition to use to definition. An improvement on the idea of def-use chains is static single-assignment form, or SSA form, an intermediate representation in which each variable has only one definition in the program text. The one (static) definition site may be in a loop that is executed ...
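Because each SSA name has exactly one definition, def-use information reduces to two maps: name → defining instruction, and name → using instructions. A minimal sketch over a hypothetical `(dest, op, args)` instruction list, which also rejects input that is not in SSA form:

```python
from collections import defaultdict


def def_use(instrs):
    """Return (defs, uses): name -> defining index, name -> using indices."""
    defs = {}
    uses = defaultdict(list)
    for i, (dest, op, args) in enumerate(instrs):
        if dest in defs:
            raise ValueError(f"{dest} is defined twice: not in SSA form")
        defs[dest] = i
        for a in args:
            if isinstance(a, str):    # ints are literals, not uses
                uses[a].append(i)
    return defs, dict(uses)


code = [("a1", "const", [1]), ("b1", "add", ["a1", "a1"]), ("c1", "mul", ["b1", "a1"])]
defs, uses = def_use(code)
print(defs)  # {'a1': 0, 'b1': 1, 'c1': 2}
print(uses)  # {'a1': [1, 1, 2], 'b1': [2]}
```

Hopping from a use to its definition is then a single lookup in `defs`, and from a definition to its uses a single lookup in `uses`.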

EECS 583, Class 8: Classic Code Optimization, Static Single Assignment. University of Michigan, October 4, 2016. Class problem: optimize this by applying (1) dead code elimination, (2) forward copy propagation, and (3) CSE: r4 = r1; r6 = r15; r2 = r3 * r4; r8 = r2 + r5; r9 = r3.
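Dead code elimination becomes a use-counting exercise in SSA form: a definition with no remaining uses and no side effects can be deleted, which may in turn leave its operands unused. This is a minimal sketch over a hypothetical `(dest, op, args)` IR with made-up side-effecting op names. It does not solve the class problem above, and it keeps mutually dependent dead cycles (such as a loop φ that only feeds itself), which more aggressive mark-and-sweep DCE would remove.

```python
from collections import Counter

# Hypothetical op names whose instructions must be kept even if unused.
SIDE_EFFECTS = {"print", "call", "store", "ret"}


def dead_code_elim(instrs):
    """Delete SSA definitions that have no uses, until none remain.

    instrs: list of (dest, op, args); effect instructions use dest None.
    """
    live = list(instrs)
    while True:
        uses = Counter(a for _, _, args in live for a in args if isinstance(a, str))
        kept = [ins for ins in live
                if ins[1] in SIDE_EFFECTS or uses[ins[0]] > 0]
        if len(kept) == len(live):
            return kept
        live = kept  # removing a definition may make its operands dead too


prog = [
    ("a1", "const", [1]),
    ("b1", "add", ["a1", "a1"]),
    ("c1", "mul", ["b1", 2]),    # never used
    ("d1", "add", ["a1", 1]),    # only used by e1
    ("e1", "add", ["d1", 1]),    # never used
    (None, "print", ["b1"]),
]
for ins in dead_code_elim(prog):
    print(ins)
# ('a1', 'const', [1])
# ('b1', 'add', ['a1', 'a1'])
# (None, 'print', ['b1'])
```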


Natural loops are strongly connected components in the CFG with a single entry. Natural loops are formed around backedges, ... Many compilers convert programs into static single assignment (SSA) form, which does exactly what it says: it ensures that, globally, every variable has exactly one static assignment location.

Static single-assignment form (SSA form): an IR that has a value-based name system, created by renaming and by the use of pseudo-operations called ϕ-functions. SSA encodes both control and value flow. It is used widely in optimization (see Section 9.3). ... (SSA) is an IR and a naming discipline that many modern compilers use to encode information about both the flow of control and the flow of values in ...
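Since ϕ-functions are pseudo-operations, a compiler eventually removes them. The naive approach replaces each ϕ with a copy at the end of every predecessor block. This is a minimal sketch over a hypothetical block representation; the `"id"` copy op echoes Bril's `id`. It assumes blocks have no explicit terminator (otherwise the copy must go before the jump), and it ignores critical edges and the lost-copy and swap problems, which real out-of-SSA translation must handle.

```python
def remove_phis(blocks):
    """Naive out-of-SSA translation.

    blocks: label -> list of instructions. A phi is
    (dest, "phi", [(pred_label, value), ...]); other instructions are
    (dest, op, args). Each phi becomes one copy `dest = id value`
    appended to the end of each predecessor block.
    """
    out = {label: [ins for ins in instrs if ins[1] != "phi"]
           for label, instrs in blocks.items()}
    for instrs in blocks.values():
        for dest, op, args in instrs:
            if op == "phi":
                for pred, value in args:
                    out[pred].append((dest, "id", [value]))
    return out


blocks = {
    "entry": [("i0", "const", [0])],
    "loop": [("i1", "phi", [("entry", "i0"), ("body", "i2")])],
    "body": [("i2", "add", ["i1", 1])],
}
for label, instrs in remove_phis(blocks).items():
    print(label, instrs)
# entry [('i0', 'const', [0]), ('i1', 'id', ['i0'])]
# loop []
# body [('i2', 'add', ['i1', 1]), ('i1', 'id', ['i2'])]
```

The result is deliberately no longer in SSA form (`i1` now has two assignments); that is the point of the round trip back to ordinary code.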

Find basic loop induction variable(s): (1) inspect back edges in the loop; (2) each back edge points to a ϕ node in the loop header, which may indicate a basic induction variable; (3) the ϕ is a function of an initialized variable and a definition of the form "i + c" (i.e., an increment operation). Identifying basic loop induction variable i1 ...
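The three steps above translate into a scan of the header's ϕ nodes. This is a minimal sketch over a hypothetical representation: header ϕs as `(dest, [(pred_label, value), ...])`, loop-body definitions as a dict from SSA name to `(op, args)`, and a known set of back-edge sources. Only `add` with an integer constant step is recognized; a real pass would also accept subtraction and loop-invariant (not just constant) steps.

```python
def basic_induction_vars(header_phis, defs, back_preds):
    """Return {phi_dest: (initial_value, step)} for basic induction variables."""
    result = {}
    for dest, incoming in header_phis:
        init = [v for p, v in incoming if p not in back_preds]
        back = [v for p, v in incoming if p in back_preds]
        if len(init) != 1 or len(back) != 1:
            continue
        op, args = defs.get(back[0], (None, []))
        # The back-edge value must be `dest + c` (or `c + dest`), c an int.
        if op == "add" and len(args) == 2 and dest in args:
            other = args[1] if args[0] == dest else args[0]
            if isinstance(other, int):
                result[dest] = (init[0], other)
    return result


phis = [
    ("i1", [("entry", "i0"), ("body", "i2")]),
    ("s1", [("entry", "s0"), ("body", "s2")]),
]
defs = {"i2": ("add", ["i1", 1]), "s2": ("add", ["s1", "i1"])}
print(basic_induction_vars(phis, defs, {"body"}))  # {'i1': ('i0', 1)}
```

Here `s1` is rejected because its step `i1` is not a constant.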

Computing Static Single-Assignment Form. 2.1 Naming of Values: Every assignment to a variable v generates a new value vi, where i is a ... For example, if the join nodes of the two loops were merged, one could not move loop-invariant calculations out of the innermost loop. 2.3 Where to Place φ-Assignments
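The standard answer to "where to place φ-assignments" is the iterated dominance frontier of each variable's definition sites, computed with a worklist in the style of Cytron et al. This minimal sketch yields minimal (not pruned) SSA and assumes the dominance frontiers have already been computed and are passed in as a dict from block to frontier set.

```python
def place_phis(df, defsites):
    """Return {var: set of blocks that need a phi for var}.

    df: block -> set of blocks in its dominance frontier.
    defsites: var -> set of blocks that assign var.
    """
    phis = {}
    for var, sites in defsites.items():
        has_phi = set()
        ever_on_worklist = set(sites)
        worklist = list(sites)
        while worklist:
            block = worklist.pop()
            for frontier in df.get(block, ()):
                if frontier not in has_phi:
                    has_phi.add(frontier)
                    # The new phi is itself a definition of var, so its
                    # block's frontier must be processed too.
                    if frontier not in ever_on_worklist:
                        ever_on_worklist.add(frontier)
                        worklist.append(frontier)
        phis[var] = has_phi
    return phis


# Diamond A -> B, A -> C, B -> D, C -> D, with x assigned in B and C.
diamond_df = {"A": set(), "B": {"D"}, "C": {"D"}, "D": set()}
print(place_phis(diamond_df, {"x": {"B", "C"}}))  # {'x': {'D'}}
```

Iterating matters for loops: with `A -> B -> C -> B` and `i` assigned in `A` and `C`, the φ lands in the header `B`, whose frontier contains `B` itself.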


Static vs. dynamic analysis. Dynamic analysis: reason about the program based on some program executions; observe concrete behavior at run time; improve confidence in correctness; unsound* but precise. Static analysis: reason about the program without executing it. (*Some static analyses are unsound; dynamic analyses can be sound.)

