6.859 : Interactive Data Visualization

Assignment 1: visualization design.

In this assignment, you will now create a digital visualization for the same small dataset from A0 and provide a rigorous rationale for your design choices. You should in theory be ready to explain the contribution of every pixel in the display. You are free to use any graphics or charting tool you please – including Microsoft Excel, Tableau, Adobe Illustrator, PowerPoint/Keynote, or Paint. However, you may find it most instructive to create the chart from scratch using a visualization package of your choice (see the resources page for a list of visualization tools.)

- The Dataset: U.S. Population, 1900 vs. 2000

Every 10 years, the census bureau documents the demographic make-up of the United States, influencing everything from congressional districting to social services. This dataset contains a high-level summary of census data for two years a century apart: 1900 and 2000. The data is a CSV (comma-separated values) file that describes the U.S. population in terms of year, reported sex (1: male, 2: female), age group (binned into 5 year segments from 0-4 years old up to 90+ years old), and the total count of people per group. There are 38 data points per year, for a total of 76 data points.
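The row counts quoted above follow from the binning: 19 five-year age bins (0-4 up to 90+) times two sex codes. A minimal sketch of that arithmetic, with variable names that are illustrative only and not part of the dataset:

```python
# Age bins start at 0, 5, ..., 90; the last bin is the open-ended "90+".
age_bins = list(range(0, 95, 5))
sex_codes = (1, 2)      # 1: male, 2: female, as coded in the dataset
years = (1900, 2000)

rows_per_year = len(age_bins) * len(sex_codes)
total_rows = rows_per_year * len(years)
print(len(age_bins), rows_per_year, total_rows)
```

Checking the loaded CSV against these counts is a quick way to confirm that no rows were dropped during import.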

Note: This is the same dataset from A0. But, this time, we’re providing you the raw data directly with exact, precise population counts for each age group and year. Your submission should reflect these accurate values rather than the rounded values from A0.

Dataset: CSV

Source: U.S. Census Bureau via IPUMS

Start by choosing a question you’d like a visualization to answer. This question could be the same as the one you chose for A0, or you can pick a new question if you would like.

Design a static visualization (i.e., a single image) that you believe effectively answers that question, and use the question as the title of your graphic. We recommend that you iterate on your ideas from your A0, but you may also start from scratch and/or draw on inspiration from other sources.

Provide a short writeup (approximately 4-5 paragraphs) describing your process and design decisions, and how A0 informed your final visual.

While you must use the data set given, you are free to transform the data as necessary. Such transforms may include (but are not limited to) log transformations, computing percentages or averages, grouping elements into new categories, or removing unnecessary variables or records. You are also free to incorporate external data as you see fit. Your chart image should be interpretable without consulting your writeup. Do not forget to include a title, axis labels or legends as needed!
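As one illustration of such a transform, the sketch below converts raw counts into each age group's share of its year's total population, which makes 1900 and 2000 comparable despite very different population sizes. The column names (`year`, `sex`, `age`, `people`) and the sample rows are assumptions made for this example; check them against the header of the actual CSV.

```python
import csv
import io
from collections import defaultdict

# Made-up sample rows in the assumed layout; replace with the real CSV file.
SAMPLE = """year,sex,age,people
1900,1,0,100
1900,2,0,100
1900,1,5,50
1900,2,5,50
2000,1,0,300
2000,2,0,100
"""

def share_by_age(rows):
    """Return {(year, age): percent of that year's total population}."""
    by_group = defaultdict(int)
    by_year = defaultdict(int)
    for row in rows:
        year, age, people = int(row["year"]), int(row["age"]), int(row["people"])
        by_group[(year, age)] += people   # sums over both sexes
        by_year[year] += people
    return {key: 100 * n / by_year[key[0]] for key, n in by_group.items()}

shares = share_by_age(csv.DictReader(io.StringIO(SAMPLE)))
for (year, age), pct in sorted(shares.items()):
    print(year, age, round(pct, 1))
```

Whether percentages or raw counts are the better choice depends on the question in your title: shares emphasize the shape of the age distribution, while counts emphasize overall growth.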

In your writeup, you should provide a rigorous rationale for your design decisions. As different visualizations can emphasize different aspects of a data set, your writeup should document what aspects of the data you are attempting to most effectively communicate. In short, what story are you trying to tell?

Document the visual encodings you used and why they are appropriate for the data and your specific question. These decisions include the choice of visualization (mark) type, size, color, scale, and other visual elements, as well as the use of sorting or other data transformations. How do these decisions facilitate effective communication? Just as important, also note which aspects of the data might be obscured or down-played due to your visualization design.

Your writeup should also include a paragraph reflecting on how A0 may or may not have influenced your design for this assignment. For example, you could discuss to what extent sketching helped inform your final design. What aspects of your A0 sketches did you keep or discard, and why did you make those decisions? Or, how did A0 help you change course for A1?

The assignment score is out of a maximum of 10 points. Historically, the median score on this assignment has been 8.5. We will determine scores by judging both the soundness of your design and the quality of the write-up. We will also look for consideration of the audience, message and intended task.

We will use the following rubric to grade your assignment. Note that rubric cells may not map exactly to specific point scores.

| Component | Excellent | Satisfactory | Poor |
|---|---|---|---|
| Data Question | An interesting question (i.e., one without an immediately obvious answer) is posed. The visualization provides a clear answer. | A reasonable question is posed, but it is unclear whether the visualization provides an answer to it. | Missing or unclear question posed of the data. |
| Mark, Encoding, and Data Transforms | All design choices are effective. The visualization can be read and understood effortlessly. | Design choices are largely effective, but minor errors hinder comprehension. | Ineffective mark, encoding, or data transformation choices are distracting or potentially misleading. |
| Titles & Labels | Titles and labels helpfully describe and contextualize the visualization. | Most necessary titles and labels are present, but they could provide more context. | Many titles or labels are missing, or do not provide human-understandable information. |
| Design Rationale | Well crafted write-up provides reasoned justification for all design choices and discussion of impact of A0. | Most design decisions are described, but rationale could be explained at a greater level of detail. | Missing or incomplete. Several design choices or impacts of A0 are left unexplained. |
| Creativity & Originality | You exceeded the parameters of the assignment, with original insights or a particularly engaging design. | You met all the parameters of the assignment. | You met most of the parameters of the assignment. |

- Submission Details

This is an individual assignment. You may not work in groups. Your completed assignment is due on Monday 3/1, 11:59 pm EST.

Submit your assignment on Canvas. We expect your visualization and writeup to be included in a single file (use .pdf). Please make sure your image is sized for a reasonable viewing experience – readers should not have to zoom or scroll in order to effectively view your submission!


The Increasing Global Temperature

Coursera data visualization project 1, created by jean pan.

This is a simple data visualization exercise from the Coursera Data Visualization course.

The data used in this assignment is GISTEMP data from NASA.

The visualization tool I'm using is D3.js.

Explanation

This graph visualizes the GISTEMP data for the globe and the Northern and Southern Hemispheres across all the given years (1880-2014). The blue line is for the globe, the orange line is for the Northern Hemisphere, and the green line is for the Southern Hemisphere.

From the resulting graph, we can see that the overall trend of global temperature is increasing, despite a slight decrease during the 19th century. Both hemispheres follow the same trend as the globe, but the Southern Hemisphere increases more smoothly than the Northern.


Data Visualization with Python - Final Assignment

NatashadT/Final-Assignment


Final-Assignment

Import required libraries

import pandas as pd
import dash
import dash_html_components as html
import dash_core_components as dcc
from dash.dependencies import Input, Output, State
import plotly.graph_objects as go
import plotly.express as px
from dash import no_update

Create a dash application

app = dash.Dash(__name__)

REVIEW1: Clear the layout and do not display exception till callback gets executed

app.config.suppress_callback_exceptions = True

Read the airline data into pandas dataframe

airline_data = pd.read_csv('https://cf-courses-data.s3.us.cloud-object-storage.appdomain.cloud/IBMDeveloperSkillsNetwork-DV0101EN-SkillsNetwork/Data%20Files/airline_data.csv',
                           encoding="ISO-8859-1",
                           dtype={'Div1Airport': str, 'Div1TailNum': str, 'Div2Airport': str, 'Div2TailNum': str})

List of years

year_list = [i for i in range(2005, 2021, 1)]

"""Compute graph data for creating yearly airline performance report

Function that takes airline data as input and creates 5 dataframes, based on the grouping conditions, to be used for plotting charts and graphs.

Returns:
    Dataframes to create graphs.
"""
def compute_data_choice_1(df):
    # Cancellation category count
    bar_data = df.groupby(['Month', 'CancellationCode'])['Flights'].sum().reset_index()
    # Average flight time by reporting airline
    line_data = df.groupby(['Month', 'Reporting_Airline'])['AirTime'].mean().reset_index()
    # Diverted airport landings
    div_data = df[df['DivAirportLandings'] != 0.0]
    # Source state count
    map_data = df.groupby(['OriginState'])['Flights'].sum().reset_index()
    # Destination state count
    tree_data = df.groupby(['DestState', 'Reporting_Airline'])['Flights'].sum().reset_index()
    return bar_data, line_data, div_data, map_data, tree_data

"""Compute graph data for creating yearly airline delay report

This function takes in airline data and selected year as an input and performs computation for creating charts and plots.

Arguments:
    df: Input airline data.

Returns:
    Computed average dataframes for carrier delay, weather delay, NAS delay, security delay, and late aircraft delay.
"""
def compute_data_choice_2(df):
    # Compute delay averages
    avg_car = df.groupby(['Month', 'Reporting_Airline'])['CarrierDelay'].mean().reset_index()
    avg_weather = df.groupby(['Month', 'Reporting_Airline'])['WeatherDelay'].mean().reset_index()
    avg_NAS = df.groupby(['Month', 'Reporting_Airline'])['NASDelay'].mean().reset_index()
    avg_sec = df.groupby(['Month', 'Reporting_Airline'])['SecurityDelay'].mean().reset_index()
    avg_late = df.groupby(['Month', 'Reporting_Airline'])['LateAircraftDelay'].mean().reset_index()
    return avg_car, avg_weather, avg_NAS, avg_sec, avg_late
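To make the grouping step concrete, here is a dependency-free sketch of roughly what a call such as `df.groupby(['Month','Reporting_Airline'])['CarrierDelay'].mean()` computes. The helper `mean_by_group` and the sample rows are hypothetical and exist only for illustration; the repository itself uses pandas.

```python
from collections import defaultdict

def mean_by_group(rows, keys, value):
    """Average `value` for each distinct combination of `keys`, skipping missing values."""
    sums = defaultdict(float)
    counts = defaultdict(int)
    for row in rows:
        v = row.get(value)
        if v is None:  # pandas' mean() also ignores missing values by default
            continue
        group = tuple(row[k] for k in keys)
        sums[group] += v
        counts[group] += 1
    return {group: sums[group] / counts[group] for group in sums}

# Made-up rows for illustration only.
rows = [
    {"Month": 1, "Reporting_Airline": "AA", "CarrierDelay": 10.0},
    {"Month": 1, "Reporting_Airline": "AA", "CarrierDelay": 20.0},
    {"Month": 1, "Reporting_Airline": "DL", "CarrierDelay": None},
    {"Month": 2, "Reporting_Airline": "DL", "CarrierDelay": 6.0},
]
avg_car = mean_by_group(rows, ["Month", "Reporting_Airline"], "CarrierDelay")
print(avg_car)
```

One difference worth noting: this sketch drops a group whose values are all missing, whereas pandas would keep that group with a NaN mean.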

Application layout

TASK1: Add title to the dashboard. Enter your code below. Make sure you have correct formatting.

app.layout = html.Div(children=[html.H1('US Domestic Airline Flights Performance', style={'textAlign': 'center', 'color': '#503D36', 'font-size': 24}),

Callback function definition

TASK4: Add 5 output components.

@app.callback([Output(component_id='plot1',component_property='children'), Output(component_id='plot2',component_property='children'), Output(component_id='plot3',component_property='children'), Output(component_id='plot4',component_property='children'), Output(component_id='plot5',component_property='children')],

REVIEW4: Holding output state till user enters all the form information. In this case, it will be chart type and year

Add computation to callback function and return graph.

def get_graph(chart, year, children1, children2, c3, c4, c5):

Run the app

if __name__ == '__main__':
    app.run_server()


Programming Assignment 1: Visualize Data Using a Chart

Introduction.

This assignment will give you a chance to explore the topics covered in week 2 of the course by visualizing some data as a chart. The data set we provided deals with world temperatures and comes from NASA: http://data.giss.nasa.gov/gistemp/ . Alternatively you can use any data that you would like to explore. You are not required to use D3.js, but if you would like to, we have provided some helpful resources that will make it easier for you to create a visualization. You are welcome to use the additional resources, especially if you do not want to program to complete this project.

The goal of this assignment is to give you some experience with handling and deciding how to visualize some data and for you to get a feel for the various aspects of the visualization.

Overview of the data

In this section we give an overview of the structure of the data, such as basic and summary statistics.

Plotting the trends by year

Plotting trends through a quantitative variable, some answers.

- What are your X and Y axes?

R: My X axis will always be the year variable, and my Y axis will be the temperature measurements.

- Did you use a subset of the data? If so, what was it?

R: Yes. The first graph uses only the variables whose names were recognized, and graphs their measurements together.

- Are there any particular aspects of your visualization to which you would like to bring attention?

R: Yes, there is a clear upward trend in temperature levels over time, including in each hemisphere.

- What do you think the data, and your visualization, shows?

R: It tells a story of how temperature levels have increased over time, and more drastically since the 1960s.
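A claim like "a clear upward trend" can be backed with a number by fitting a straight line to the year/temperature pairs. The sketch below uses ordinary least squares written out by hand; the function name `linear_trend` and the sample values are placeholders for illustration, not GISTEMP measurements.

```python
def linear_trend(xs, ys):
    """Return (slope, intercept) of the ordinary least-squares line through the points."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

# Placeholder values only; substitute the real yearly series.
years = [1960, 1970, 1980, 1990, 2000]
anomalies = [0.0, 0.1, 0.2, 0.3, 0.4]

slope, intercept = linear_trend(years, anomalies)
print("change per decade:", round(slope * 10, 3))
```

Fitting the line separately before and after a chosen year is one simple way to check whether the increase really is steeper in the later period.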

Assignment overview

You will get the most out of this class if you:

- Engage with the readings and lecture materials

- Regularly use R

Each type of assignment in this class helps with one of these strategies.

Reflections

To encourage engagement with the course content, you’ll need to write a ≈150 word reflection about the readings and lectures for the day. That’s fairly short: there are ≈250 words on a typical double-spaced page in Microsoft Word (500 when single-spaced).

You can do a lot of different things with this memo: discuss something you learned from the course content, write about the best or worst data visualization you saw recently, connect the course content to your own work, etc. These reflections let you explore and answer some of the key questions of this course, including:

- What is truth? How is truth related to visualization?

- Why do we visualize data?

- What makes a great visualization? What makes a bad visualization?

- How do you choose which kind of visualization to use?

- What is the role of stories in presenting analysis?

The course content for each day will also include a set of questions specific to that topic. You do not have to answer all (or any) of these questions. That would be impossible. They exist to guide your thinking, that’s all.

I will grade these memos using a check system:

- ✔+: (11.5 points (115%) in gradebook) Reflection shows phenomenal thought and engagement with the course content. I will not assign these often.

- ✔: (10 points (100%) in gradebook) Reflection is thoughtful, well-written, and shows engagement with the course content. This is the expected level of performance.

- ✔−: (5 points (50%) in gradebook) Reflection is hastily composed, too short, and/or only cursorily engages with the course content. This grade signals that you need to improve next time. I will hopefully not assign these often.

Notice that this is essentially a pass/fail or completion-based system. I’m not grading your writing ability, I’m not counting the exact number of words you’re writing, and I’m not looking for encyclopedic citations of every single reading to prove that you did indeed read everything. I’m looking for thoughtful engagement, that’s all. Do good work and you’ll get a ✓.

You will turn these reflections in via iCollege. You will write them using R Markdown and they will be the first section of your daily exercises (see below).

Exercises

Each class session has interactive lessons and fully annotated examples of code that teach and demonstrate how to do specific tasks in R. However, without practicing these principles and making graphics on your own, you won’t remember what you learn!

To practice working with ggplot2 and making data-based graphics, you will complete a brief set of exercises for each class session. These exercises will have 1–3 short tasks that are directly related to the topic for the day. You need to show that you made a good faith effort to work each question. The problem sets will also be graded using a check system:

- ✔+: (11.5 points (115%) in gradebook) Exercises are 100% completed. Every task was attempted and answered, and most answers are correct. Knitted document is clean and easy to follow. Work is exceptional. I will not assign these often.

- ✔: (10 points (100%) in gradebook) Exercises are 70–99% complete and most answers are correct. This is the expected level of performance.

- ✔−: (5 points (50%) in gradebook) Exercises are less than 70% complete and/or most answers are incorrect. This indicates that you need to improve next time. I will hopefully not assign these often.

Note that this is also essentially a pass/fail system. I’m not grading your coding ability, I’m not checking each line of code to make sure it produces some exact final figure, and I’m not looking for perfect. Also note that a ✓ does not require 100% completion—you will sometimes get stuck with weird errors that you can’t solve, or the demands of pandemic living might occasionally become overwhelming. I’m looking for good faith effort, that’s all. Try hard, do good work, and you’ll get a ✓.

You may (and should!) work together on the exercises, but you must turn in your own answers.

You will turn these exercises in using iCollege. You will include your reflection in the first part of the document—the rest will be your exercise tasks.

Mini projects

To give you practice with the data and design principles you’ll learn in this class, you will complete two mini projects. I will provide you with real-world data and pose one or more questions—you will make a pretty picture to answer those questions.

The mini projects will be graded using a check system:

- ✔+: (85 points (≈115%) in gradebook) Project is phenomenally well-designed and uses advanced R techniques. The project uncovers an important story that is not readily apparent from just looking at the raw data. I will not assign these often.

- ✔: (75 points (100%) in gradebook) Project is fine, follows most design principles, answers a question from the data, and uses R correctly. This is the expected level of performance.

- ✔−: (37.5 points (50%) in gradebook) Project is missing large components, is poorly designed, does not answer a relevant question, and/or uses R incorrectly. This indicates that you need to improve next time. I will hopefully not assign these often.

Because these mini projects give you practice for the final project, I will provide you with substantial feedback on your design and code.

Final project

At the end of the course, you will demonstrate your data visualization skills by completing a final project.

Complete details for the final project (including past examples of excellent projects) are here.

There is no final exam. This project is your final exam.

The project will not be graded using a check system. Instead I will use a rubric to grade four elements of your project:

- Technical skills

- Visual design

- Truth and beauty

If you’ve engaged with the course content and completed the exercises and mini projects throughout the course, you should do just fine with the final project.

Last updated on July 31, 2021


Data Visualization: Exploring and Explaining with Data | 1st Edition

Available Study Tools

International MindTap for Camm/Cochran/Fry/Ohlmann’s Data Visualization: Exploring and Explaining with Data, Instant Access

About This Product

DATA VISUALIZATION: Exploring and Explaining with Data is designed to introduce best practices in data visualization to undergraduate and graduate students. This is one of the first books on data visualization designed for college courses. The book contains material on effective design, choice of chart type, effective use of color, how to explore data visually, and how to explain concepts and results visually in a compelling way with data. Indeed, the skills developed in this book will be helpful to all who want to influence with data or be accurately informed by data. The book is designed for a semester-long course at either undergraduate or graduate level. The examples used in this book are drawn from a variety of functional areas in the business world including accounting, finance, operations, and human resources as well as from sports, politics, science, and economics.

- Programming Assignment 1 - Data Visualisation

- by Edwin van 't Hul

- Last updated almost 9 years ago


Data Visualization Guide


Data Visualization Workflow

Follow these steps to guide your data visualization process to ensure clarity, accuracy, and relevance, all of which are crucial in effective data visualization:

| The first step in data visualization is understanding the purpose of your visualization. Determine what you want to convey, who your audience is, and what they will take from the information presented. Also, consider where the visualization will be published. Printed media can only accommodate static visualizations, while videos, websites, and social media can include either or visualizations in addition to the static ones. | |

| An analysis is helpful in understanding the characteristics of your data. Look for , , , and any . statistics and testing can also provide valuable into your data. | |

| The most appropriate visualization depends on your and . Refer to this to help you choose which visualization is most appropriate for your project. | |

| Create and Refine Your Visualization: Once you decide on a visualization, use the right tools to bring it to life. Spreadsheet software like or might be sufficient for generating basic visualizations. For more advanced techniques, you might need other tools that require coding skills, such as Python (Matplotlib, Seaborn, Plotly.py) or R (ggplot, Plotly.R), or those that require little to no coding skills, like , , and . Don't forget to evaluate your visualization for and . Ensure the data is correctly represented, and the visualization effectively communicates the intended message. Seek from peers or stakeholders. If you use colors, ensure they are , especially for colorblind individuals. Tools like or can help you refine your visualization's color scheme. | |

| To effectively present visualizations to your audience, it is beneficial to information and , key insights, and guide your audience through the data narrative for better comprehension. By following these guidelines, you can create, present, and share impactful data visualizations that not only convey your message effectively but also resonate with your audience and help them make informed decisions. |
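As a rough illustration of the color check mentioned in the workflow, the sketch below computes the WCAG contrast ratio between chart colors and a white background. It is not one of the tools the guide refers to, and the palette shown (the widely cited Okabe-Ito colorblind-friendly set) is an assumption chosen for this example. Note that contrast is only one aspect of accessibility; this code does not simulate color vision deficiency.

```python
def _linear(channel):
    """Convert an 8-bit sRGB channel to linear light, per the WCAG definition."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4

def relative_luminance(hex_color):
    """Relative luminance of a color given as '#RRGGBB'."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linear(r) + 0.7152 * _linear(g) + 0.0722 * _linear(b)

def contrast_ratio(a, b):
    """WCAG contrast ratio between two colors, from 1 (identical) to 21 (black on white)."""
    la, lb = relative_luminance(a), relative_luminance(b)
    lighter, darker = max(la, lb), min(la, lb)
    return (lighter + 0.05) / (darker + 0.05)

# Okabe-Ito palette (assumed here as an example of a colorblind-friendly scheme).
OKABE_ITO = ["#E69F00", "#56B4E9", "#009E73", "#F0E442",
             "#0072B2", "#D55E00", "#CC79A7"]

for color in OKABE_ITO:
    print(color, round(contrast_ratio(color, "#FFFFFF"), 2))
```

Colors with a low ratio against the background may need a darker outline or a direct label to stay legible.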


- Last Updated: Jul 1, 2024 5:24 PM

- URL: https://guides.library.stonybrook.edu/data-viz


Except where otherwise noted, this work by SBU Libraries is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License .

Assignment 1: Data, Visualization, and Housing

This first assignment will give you a gentle introduction to the key ideas of this course: how can we use data and visualization to analyze, communicate, and ultimately affect policy around the housing affordability crisis?

Due: Mon 2/12, 11:59 pm ET

Submit on the class forum.

News Articles

Select two news articles from the list below.

For each article, write a two paragraph comment. Your comment should not summarize the article. Rather, focus on analyzing both the topic of the article (i.e., the issues related to housing) and reflecting on the use of data and visualization to communicate the information. Here are some prompts to help spark your thinking:

- What do you appreciate about the arguments it is making, and what critiques or points of disagreement might you have? Why?

- How did the article confirm or shift your prior understanding?

- How did it help you identify and question assumptions you had been making?

- How has the article changed (or not) your opinion or outlook on the topic?

- How well did visual design choices (e.g., chart type, colors, layout, etc.) help reveal the important features or trends of the underlying data in a straightforward fashion? Or how much work did you need to do to understand what is being shown?

- If the visualization was interactive or animated, how did these bits of dynamic behavior help convey the takeaway message? Or were they more distracting than helpful? Was it clear how to begin interacting, and was performing the interaction satisfying or worth the effort?

- What about the “smaller details”? For instance, how were titles, labels, and annotations used to help guide your attention to the key pieces of information, and help explain how to read the chart? Or were you/would you have preferred to have been left to your own devices?

Post your comment to the class forum under the thread corresponding to the article you are commenting on. You are welcome to structure your submission as either commenting on the article directly, or as a response to the points made by one of your colleagues.

Please select two articles to comment on from the following list. Note, we have used “gift” links but these may expire—if you hit a paywall, please let us know by posting on the class forum and we will issue new links accordingly.

- The Secret Bias Hidden in Mortgage-Approval Algorithms, The Markup. [forum post]

- When Private Equity Becomes Your Landlord, ProPublica. [forum post]

- The housing market is cooling. What’s it like in your area?, The Washington Post. [forum post]

- The Housing Shortage Isn’t Just a Coastal Crisis Anymore, The New York Times. [forum post]

- Cities Start to Question an American Ideal: A House With a Yard on Every Lot, The New York Times. [forum post]

- In 83 Million Eviction Records, a Sweeping and Intimate New Look at Housing in America, The New York Times. [forum post]

- Mass. has a huge waitlist for state-funded housing. So why are 2,300 units vacant?, WBUR. [forum post]

- Where we build homes helps explain America’s political divide, The Washington Post. [forum post]

- Houses are too expensive. Apartments are too small. Is this a fix?, The Washington Post. [forum post]

This assignment is worth 4 points, 2 points per comment. Comments will be graded on a check-minus/check/check-plus scale according to the following rubric:

Check-minus (1/2): Surface-level engagement with the article including: summarizing the points made in the article, offering a high-level reflection without unpacking the “why”, relatively shallow analysis or critiques of the use of data and visualization, and other styles of comments that suggest only cursory readings of the article. Partially complete submissions may also earn a check-minus if appropriate.

Check (2/2): Effective engagement with the article. Comments awarded check grades indicate that they understand the main ideas of the article, and the reflections and analysis are reasonable with nontrivial observations worth surfacing. We expect most comments to be awarded checks.

Check-plus (3/2): Excellent engagement with the article. Check-plus grades are reserved for rare instances where a comment really hits on an interesting, unique, and insightful point of view. Generally only a few submissions for each paper earn a check-plus.


Top 10 Challenges of Big Data Analytics in Healthcare

Big data analytics in healthcare comes with many challenges, including security, visualization, and a number of data integrity concerns.

- Editorial Staff

Big data analytics is a major undertaking for the healthcare industry.

Providers who have barely come to grips with putting data into their electronic health records (EHRs) are now tasked with pulling actionable insights out of them – and applying those learnings to complicated initiatives that directly impact reimbursement.

For healthcare organizations that successfully integrate data-driven insights into their clinical and operational processes, the rewards can be huge. Healthier patients, lower care costs, more visibility into performance, and higher staff and consumer satisfaction rates are among the many benefits of turning data assets into data insights.

However, the road to meaningful healthcare analytics is a rocky one, filled with challenges and problems to solve.

Big data are complex and unwieldy, requiring healthcare organizations to take a close look at their approaches to collecting, storing, analyzing, and presenting their data to staff members, business partners, and patients.

What are some of the top challenges organizations typically face when booting up a big data analytics program, and how can they overcome these issues to achieve their data-driven clinical and financial goals?

1. CAPTURE

All data comes from somewhere. Unfortunately, for many healthcare providers, it doesn’t always come from somewhere with impeccable data governance habits. Capturing data that is clean, complete, accurate, and formatted correctly for use in multiple systems is an ongoing battle for organizations, many of which aren’t on the winning side of the conflict.

Having a robust data collection process is key to advancing big data analytics efforts in healthcare in the age of EHRs, artificial intelligence (AI), and machine learning (ML). Proper data capture is one of the first steps organizations can take to build datasets and support projects to improve clinical care.

Poor EHR usability, convoluted workflows, and an incomplete understanding of why big data are important to capture can all contribute to quality issues that will plague data throughout its lifecycle and limit its usability.

Providers can start to improve their data capture routines by prioritizing valuable data types – EHRs, genomic data, population-level information – for their specific projects, enlisting the data governance and integrity expertise of health information management professionals, and developing clinical documentation improvement programs that coach clinicians about how to ensure that data are useful for downstream analytics.

2. CLEANING

Healthcare providers are intimately familiar with the importance of cleanliness in the clinic and the operating room, but may not be quite as aware of how vital it is to cleanse their data, too.

Dirty data can quickly derail a big data analytics project, especially when bringing together disparate data sources that may record clinical or operational elements in slightly different formats. Data cleaning – also known as cleansing or scrubbing – ensures that datasets are accurate, correct, consistent, relevant, and not corrupted.
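To make the idea concrete, the sketch below shows one small cleaning pass over records that arrive from different sources in slightly different formats: identifiers are normalized, dates are converted to a single format, and duplicates are dropped. The field names (`patient_id`, `visit_date`), the accepted date formats, and the sample records are all hypothetical; a real pipeline would be driven by the organization's own data dictionary.

```python
from datetime import datetime

# Date formats assumed to appear across the source systems.
DATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y")

def normalize_date(text):
    """Return the date as ISO 'YYYY-MM-DD', or None if no known format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None

def clean(records):
    """Normalize fields, skip unparseable rows, and remove duplicate visits."""
    seen = set()
    cleaned = []
    for rec in records:
        patient_id = rec["patient_id"].strip().upper()
        visit_date = normalize_date(rec["visit_date"])
        if visit_date is None:
            continue  # in practice, route to manual review rather than dropping
        key = (patient_id, visit_date)
        if key in seen:
            continue
        seen.add(key)
        cleaned.append({"patient_id": patient_id, "visit_date": visit_date})
    return cleaned

# Made-up records for illustration only.
raw = [
    {"patient_id": " a17 ", "visit_date": "2023-03-01"},
    {"patient_id": "A17", "visit_date": "03/01/2023"},
    {"patient_id": "B02", "visit_date": "not recorded"},
]
print(clean(raw))
```

The first two records describe the same visit in different formats, so only one survives; the third cannot be parsed and is set aside.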

The Office of the National Coordinator for Health Information Technology (ONC) recommends conducting data cleaning processes as close to the point of first capture as possible, as doing so minimizes potential duplications of effort or conflicting cleansing activities.

While some data cleaning processes are still performed manually, automated data cleaning tools and frameworks are available to assist healthcare stakeholders with their data integrity efforts. These tools are likely to become increasingly sophisticated and precise as AI and ML techniques continue their rapid advance, reducing the time and expense required to ensure high levels of accuracy and integrity in healthcare data warehouses.

3. STORAGE

Data storage is a critical cost, security, and performance issue for a healthcare information technology (IT) department. As the volume of healthcare data grows exponentially, some providers are no longer able to manage the costs and impacts of on-premise data centers.

On-premise data storage promises control over security, access, and up-time, but an on-site server network can be expensive to scale, difficult to maintain, and prone to producing data silos across different departments.

Cloud storage and other digital health ecosystems are becoming increasingly attractive for providers and payers as costs drop and reliability grows.

The cloud offers nimble disaster recovery, lower up-front costs, and easier expansion – although organizations must be extremely careful about choosing Health Insurance Portability and Accountability Act of 1996 (HIPAA)-compliant cloud storage partners.

Many organizations end up with a hybrid approach to their data storage programs, which may be the most flexible and workable approach for providers with varying data access and storage needs. When developing hybrid infrastructure, however, providers should be careful to ensure that disparate systems are able to communicate and share data with other segments of the organization when necessary.

4. SECURITY

Data security is a major priority for healthcare organizations, especially in the wake of a rapid-fire series of high-profile breaches, hackings, and ransomware episodes. From zero-day attacks to AI-assisted cyberattacks, healthcare data are subject to a nearly infinite array of vulnerabilities.

The HIPAA Security Rule includes a long list of technical safeguards for organizations storing protected health information (PHI), including transmission security, authentication protocols, and controls over access, integrity, and auditing.

In practice, these safeguards translate into common-sense security procedures such as using up-to-date anti-virus software, encrypting sensitive data, and using multi-factor authentication.

But even the most tightly secured data center can be taken down by the fallibility of human staff members, who may not be well-versed in good cybersecurity practices.

Healthcare organizations must frequently communicate the critical nature of data security protocols across the enterprise, prioritize employee cybersecurity training and healthcare-specific cybersecurity performance goals, and consistently review who has access to high-value data assets to prevent malicious parties from causing damage.

5. STEWARDSHIP

Healthcare data, especially on the clinical side, has a long shelf life. In addition to keeping patient data accessible for at least six years as required by HIPAA, providers may wish to utilize de-identified datasets for research projects, which makes ongoing stewardship and curation an important concern. Data may also be reused or reexamined for other purposes, such as quality measurement or performance benchmarking.

Understanding when, by whom, and for what purpose the data were created – as well as how those data were used in the past – is important for researchers and data analysts.

Developing complete, accurate, and updated metadata is a key component of a successful data governance plan. Metadata allows analysts to exactly replicate previous queries, which is vital for scientific studies and accurate benchmarking, and prevents the creation of “data dumpsters,” or isolated datasets with limited utility.

Healthcare organizations should assign a data steward to handle the development and curation of meaningful metadata. A data steward can ensure that all elements have standard definitions and formats, are documented appropriately from creation to deletion, and remain useful for the tasks at hand.

6. QUERYING

Robust metadata and strong stewardship protocols also make it easier for organizations to query their data and get the answers that they seek. The ability to query data is foundational for reporting and analytics, but healthcare organizations must typically overcome a number of challenges before they can engage in meaningful analysis of their big data assets.

Firstly, they must overcome data silos and interoperability problems that prevent query tools from accessing the organization’s entire repository of information. If different components of a dataset exist in multiple walled-off systems or in different formats, it may not be possible to generate a complete portrait of an organization’s status or an individual patient’s health.

Even if data live in a common warehouse, standardization and quality can be lacking. In the absence of medical coding systems like the International Classification of Diseases (ICD), SNOMED-CT, or Logical Observation Identifiers Names and Codes (LOINC) that reduce free-form concepts into a shared ontology, it may be difficult to ensure that a query is identifying and returning the correct information to the user.

Many organizations use Structured Query Language (SQL) to dive into large datasets and relational databases, but it is only effective when a user can first trust the accuracy, completeness, and standardization of the data at hand.
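The dependence of a SQL query on standardized coding can be sketched with Python's built-in sqlite3 module. The `diagnoses` table, its columns, and the sample rows are hypothetical; E11.9 and I10 are real ICD-10 codes (type 2 diabetes mellitus without complications, and essential hypertension), used here only as illustrative values.

```python
import sqlite3

# In-memory database with a hypothetical diagnoses table.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE diagnoses (patient_id TEXT, free_text TEXT, icd10 TEXT)"
)
conn.executemany(
    "INSERT INTO diagnoses VALUES (?, ?, ?)",
    [
        ("p1", "type 2 diabetes", "E11.9"),
        ("p2", "T2DM", "E11.9"),
        ("p3", "diabetes mellitus type II", "E11.9"),
        ("p4", "essential hypertension", "I10"),
    ],
)

# Searching the free-text field misses records that are worded differently.
by_text = conn.execute(
    "SELECT COUNT(*) FROM diagnoses WHERE free_text LIKE ?",
    ("%type 2 diabetes%",),
).fetchone()[0]

# Searching the coded field finds every record that shares the concept.
by_code = conn.execute(
    "SELECT COUNT(*) FROM diagnoses WHERE icd10 = ?",
    ("E11.9",),
).fetchone()[0]

print(by_text, by_code)
conn.close()
```

In this toy table the free-text search matches one patient while the coded search matches three, which illustrates why a shared ontology matters before query results can be trusted.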

7. REPORTING

After providers have nailed down the query process, they must generate a report that is clear, concise, and accessible to the target audience.

Once again, the accuracy and integrity of the data have a critical downstream impact on the accuracy and reliability of the report. Poor data at the outset will produce suspect reports at the end of the process, which can be detrimental for clinicians who are trying to use the information to treat patients.

Providers must also understand the difference between “analysis” and “reporting.” Reporting is often the prerequisite for analysis – the data must be extracted before it can be examined – but reporting can also stand on its own as an end product.

While some reports may be geared toward highlighting a certain trend, coming to a novel conclusion, or convincing the reader to take a specific action, others must be presented in a way that allows the reader to draw their own inferences about what the full spectrum of data means.

Organizations should be very clear about how they plan to use their reports to ensure that database administrators can generate the information they actually need.

A great deal of the reporting in the healthcare industry is external, since regulatory and quality assessment programs frequently demand large volumes of data to feed quality measures and reimbursement models. Providers have a number of options for meeting these various requirements, including qualified registries, reporting tools built into their electronic health records, and web portals hosted by the Centers for Medicare & Medicaid Services (CMS) and other groups.

8. VISUALIZATION

At the point of care, clean and engaging data visualization can make it much easier for a clinician to absorb information and use it appropriately.

Color-coding is a popular data visualization technique that typically produces an immediate response – for example, red, yellow, and green are generally understood to mean stop, caution, and go.

Organizations must also consider data presentation best practices, such as leveraging charts that use proper proportions to illustrate contrasting figures and correct labeling of information to reduce potential confusion. Convoluted flowcharts, cramped or overlapping text, and low-quality graphics can frustrate and annoy recipients, leading them to ignore or misinterpret data.

Common healthcare data visualization approaches include pivot tables, charts, and dashboards, all of which have their own specific uses to illustrate concepts and information.

9. UPDATING

Healthcare data are dynamic, and most elements will require relatively frequent updates in order to remain current and relevant. For some datasets, like patient vital signs, these updates may occur every few seconds. Other information, such as home address or marital status, might only change a few times during an individual’s entire lifetime.

Understanding the volatility of big data, or how often and to what degree it changes, can be a challenge for organizations that do not consistently monitor their data assets.

Providers must have a clear idea of which datasets need manual updating, which can be automated, how to complete this process without downtime for end-users, and how to ensure that updates can be conducted without damaging the quality or integrity of the dataset.

Organizations should also ensure that they are not creating unnecessary duplicate records when attempting an update to a single element, which may make it difficult for clinicians to access necessary information for patient decision-making.

10. SHARING

Providers don’t operate in a vacuum, and few patients receive all of their care at a single location. This means that sharing data with external partners is essential, especially as the industry moves toward population health management and value-based care.

Data interoperability is a perennial concern for organizations of all types, sizes, and positions along the data maturity spectrum.

Fundamental differences in the design and implementation of health information systems can severely curtail a user’s ability to move data between disparate organizations, often leaving clinicians without information they need to make key decisions, follow up with patients, and develop strategies to improve overall outcomes.

The industry is currently working hard to improve the sharing of data across technical and organizational barriers. Emerging tools and strategies such as Fast Healthcare Interoperability Resources (FHIR) and application programming interfaces (APIs) are helping organizations share data easily and securely.

But adoption of these methodologies varies, leaving many organizations cut off from the possibilities inherent in the seamless sharing of patient data.

In order to develop a big data exchange ecosystem that connects all members of the care continuum with trustworthy, timely, and meaningful information, providers will need to overcome every challenge on this list. Doing so will take time, commitment, funding, and communication – but success will ease the burdens of all those concerns.


Data Visualization

In this lesson, we'll learn two data visualization libraries: matplotlib and seaborn. By the end of this lesson, students will be able to:

- Skim library documentation to identify relevant examples and usage information.

- Apply seaborn and matplotlib to create and customize relational and regression plots.

- Describe data visualization principles as they relate to the effectiveness of a plot.

Just like how we like to import pandas as pd, we'll import matplotlib.pyplot as plt and seaborn as sns.

Seaborn is a Python data visualization library based on matplotlib. Behind the scenes, seaborn uses matplotlib to draw its plots. When importing seaborn, it is recommended to call sns.set_theme() to apply the recommended seaborn visual style instead of the default matplotlib theme.
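The setup described above can be sketched as follows, using the conventional aliases named in the text and calling `sns.set_theme()` with no arguments so that seaborn's default style is applied.

```python
import matplotlib.pyplot as plt
import pandas as pd
import seaborn as sns

# Apply seaborn's default visual theme instead of matplotlib's defaults.
sns.set_theme()
```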

Let's load this uniquely-formatted pokemon dataset.

| Num | Name | Type 1 | Type 2 | Total | HP | Attack | Defense | Sp. Atk | Sp. Def | Speed | Stage | Legendary |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Bulbasaur | Grass | Poison | 318 | 45 | 49 | 49 | 65 | 65 | 45 | 1 | False |
| 2 | Ivysaur | Grass | Poison | 405 | 60 | 62 | 63 | 80 | 80 | 60 | 2 | False |
| 3 | Venusaur | Grass | Poison | 525 | 80 | 82 | 83 | 100 | 100 | 80 | 3 | False |
| 4 | Charmander | Fire | NaN | 309 | 39 | 52 | 43 | 60 | 50 | 65 | 1 | False |
| 5 | Charmeleon | Fire | NaN | 405 | 58 | 64 | 58 | 80 | 65 | 80 | 2 | False |
| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |
| 147 | Dratini | Dragon | NaN | 300 | 41 | 64 | 45 | 50 | 50 | 50 | 1 | False |
| 148 | Dragonair | Dragon | NaN | 420 | 61 | 84 | 65 | 70 | 70 | 70 | 2 | False |
| 149 | Dragonite | Dragon | Flying | 600 | 91 | 134 | 95 | 100 | 100 | 80 | 3 | False |
| 150 | Mewtwo | Psychic | NaN | 680 | 106 | 110 | 90 | 154 | 90 | 130 | 1 | True |
| 151 | Mew | Psychic | NaN | 600 | 100 | 100 | 100 | 100 | 100 | 100 | 1 | False |

151 rows × 12 columns (Num is the index)

Note how each row of this table is the record for an individual pokemon. This makes it a long-form table, which works well for seaborn plotting.

Figure-level versus axes-level functions

One way to draw a scatter plot comparing every pokemon's Attack and Defense stats is by calling sns.scatterplot. Because this plotting function has so many parameters, it's good practice to specify keyword arguments that tell Python which argument should go to which parameter.
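A minimal sketch of such a call is shown here. The lesson's full dataset file is not included in this text, so the `pokemon` DataFrame is a small stand-in built from a few rows of the table above.

```python
import pandas as pd
import seaborn as sns

# Stand-in for the full dataset: a few rows copied from the table above.
pokemon = pd.DataFrame(
    {
        "Name": ["Bulbasaur", "Charmander", "Dragonite", "Mewtwo", "Mew"],
        "Attack": [49, 52, 134, 110, 100],
        "Defense": [49, 43, 95, 90, 100],
        "Legendary": [False, False, False, True, False],
    }
)

# Keyword arguments make it explicit which column maps to which axis.
ax = sns.scatterplot(data=pokemon, x="Attack", y="Defense")
```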

The return type of sns.scatterplot is a matplotlib feature called axes that can be used to compose multiple plots into a single visualization. We can show two plots side-by-side by placing each on its own axes within the same figure. For example, we could compare the attack and defense stats for two different groups of pokemon: not-Legendary and Legendary.
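One way this side-by-side comparison might be written is sketched here, assuming `plt.subplots` is used to create the two axes. The `pokemon` DataFrame is again a small stand-in built from rows of the table above, not the full dataset.

```python
import matplotlib.pyplot as plt
import pandas as pd
import seaborn as sns

# Stand-in for the full dataset: a few rows copied from the table above.
pokemon = pd.DataFrame(
    {
        "Name": ["Bulbasaur", "Charmander", "Dragonite", "Mewtwo", "Mew"],
        "Attack": [49, 52, 134, 110, 100],
        "Defense": [49, 43, 95, 90, 100],
        "Legendary": [False, False, False, True, False],
    }
)

# One figure holding two axes, laid out in a single row.
fig, (ax1, ax2) = plt.subplots(ncols=2)

# Draw each group on its own axes via the ax keyword argument.
not_legendary = pokemon[~pokemon["Legendary"]]
legendary = pokemon[pokemon["Legendary"]]
sns.scatterplot(data=not_legendary, x="Attack", y="Defense", ax=ax1)
sns.scatterplot(data=legendary, x="Attack", y="Defense", ax=ax2)
ax1.set_title("Not Legendary")
ax2.set_title("Legendary")
```

With this approach the two axes are scaled independently and both repeat the y-axis label, so a fair visual comparison takes further manual adjustment.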

Each problem in the plot above can be fixed manually by repeatedly editing and running the code until you get a satisfactory result, but it's a tedious process. Seaborn was invented to make our data visualization experience less tedious. Methods like sns.scatterplot are considered axes-level functions designed for interoperability with the rest of matplotlib, but they come at the cost of forcing you to deal with the tediousness of tweaking matplotlib.

Instead, the recommended way to create plots in seaborn is to use figure-level functions like sns.relplot, as in relational plot. Figure-level functions return specialized seaborn objects (such as FacetGrid) that are intended to provide more usable results without tweaking.

By default, relational plots produce scatter plots, but they can also produce line plots by specifying the keyword argument kind="line".
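As a sketch of the figure-level approach, the call below asks `sns.relplot` to facet the scatter plot by the Legendary column using the `col` parameter. The `pokemon` DataFrame is a small stand-in built from rows of the table above.

```python
import pandas as pd
import seaborn as sns

# Stand-in for the full dataset: a few rows copied from the table above.
pokemon = pd.DataFrame(
    {
        "Name": ["Bulbasaur", "Charmander", "Dragonite", "Mewtwo", "Mew"],
        "Attack": [49, 52, 134, 110, 100],
        "Defense": [49, 43, 95, 90, 100],
        "Legendary": [False, False, False, True, False],
    }
)

# col="Legendary" draws one subplot per distinct value of that column.
grid = sns.relplot(data=pokemon, x="Attack", y="Defense", col="Legendary")
```

The returned `grid` is a FacetGrid that manages both subplots, including their shared axis limits and labels.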

Alongside relplot, seaborn provides several other useful figure-level plotting functions:

- relplot for relational plots, such as scatter plots and line plots.

- displot for distribution plots, such as histograms and kernel density estimates.

- catplot for categorical plots, such as strip plots, box plots, violin plots, and bar plots.

- lmplot for relational plots with a regression fit, such as a scatter plot with a fitted regression line.
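For instance, a scatter plot with a regression fit could be sketched with `sns.lmplot` as follows. The `pokemon` DataFrame is a small stand-in built from rows of the table above, so the fitted line here is illustrative only.

```python
import pandas as pd
import seaborn as sns

# Stand-in for the full dataset: a few rows copied from the table above.
pokemon = pd.DataFrame(
    {
        "Name": ["Bulbasaur", "Charmander", "Dragonite", "Mewtwo", "Mew"],
        "Attack": [49, 52, 134, 110, 100],
        "Defense": [49, 43, 95, 90, 100],
    }
)

# lmplot draws the scatter points plus a fitted linear regression line.
grid = sns.lmplot(data=pokemon, x="Attack", y="Defense")
```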

When reading documentation online, it is important to remember that we will only use figure-level plots in this course because they are the recommended approach. The seaborn documentation describes the relative merits of figure-level functions as follows:

On balance, the figure-level functions add some additional complexity that can make things more confusing for beginners, but their distinct features give them additional power. The tutorial documentation mostly uses the figure-level functions, because they produce slightly cleaner plots, and we generally recommend their use for most applications. The one situation where they are not a good choice is when you need to make a complex, standalone figure that composes multiple different plot kinds. At this point, it’s recommended to set up the figure using matplotlib directly and to fill in the individual components using axes-level functions.

Customizing a FacetGrid plot

relplot, displot, catplot, and lmplot all return a FacetGrid, a specialized seaborn object that represents a data visualization canvas. As we've seen above, a FacetGrid can put two plots side-by-side and manage their axes by removing the y-axis labels on the right plot because they are the same as the plot on the left.

However, there are still many instances where we might want to customize a plot by changing labels or adding titles. We might want to create a bar plot to count the number of each type of pokemon.

The pokemon types on the x-axis are hardly readable, the y-axis label "count" could use capitalization, and the plot could use a title. To modify the attributes of a plot, we can assign the returned FacetGrid to a variable like grid and then call tick_params or set.

Practice: Life expectancy versus health expenditure

Seaborn includes a repository of example datasets that we can load into a DataFrame by calling sns.load_dataset. Let's examine the Life expectancy vs. health expenditure, 1970 to 2015 dataset that combines two data sources:

- The Life expectancy at birth dataset from the UN World Population Prospects (2022): "For a given year, it represents the average lifespan for a hypothetical group of people, if they experienced the same age-specific death rates throughout their lives as the age-specific death rates seen in that particular year."

- The Health expenditure (2010 int.-$) dataset from OECD.stat: "Per capita health expenditure and financing in OECD countries, measured in 2010 international dollars."

| Year | Country | Spending_USD | Life_Expectancy |
|---|---|---|---|
| 1970 | Germany | 252.311 | 70.6 |
| 1970 | France | 192.143 | 72.2 |
| 1970 | Great Britain | 123.993 | 71.9 |
| 1970 | Japan | 150.437 | 72.0 |
| 1970 | USA | 326.961 | 70.9 |
| ... | ... | ... | ... |
| 2020 | Germany | 6938.983 | 81.1 |
| 2020 | France | 5468.418 | 82.3 |
| 2020 | Great Britain | 5018.700 | 80.4 |
| 2020 | Japan | 4665.641 | 84.7 |
| 2020 | USA | 11859.179 | 77.0 |

274 rows × 2 columns (Year and Country form the index)

Write a seaborn expression to create a line plot plotting the Life_Expectancy (y-axis) against the Year (x-axis) colored with hue="Country".

Anything noticeable? What title can we give to this plot?

What makes bad figures bad?

In chapter 1 of Data Visualization, Kieran Healy explains how data visualization is about communication and rhetoric.

While it is tempting to simply start laying down the law about what works and what doesn't, the process of making a really good or really useful graph cannot be boiled down to a list of simple rules to be followed without exception in all circumstances. The graphs you make are meant to be looked at by someone. The effectiveness of any particular graph is not just a matter of how it looks in the abstract, but also a question of who is looking at it, and why. An image intended for an audience of experts reading a professional journal may not be readily interpretable by the general public. A quick visualization of a dataset you are currently exploring might not be of much use to your peers or your students.

Bad taste

Healy identifies three problems, the first of which is bad taste.

Healy draws on Edward Tufte's principles (all quoted from Tufte 1983):

- have a properly chosen format and design

- use words, numbers, and drawing together

- display an accessible complexity of detail

- avoid content-free decoration, including chartjunk

In essence, these principles amount to "an encouragement to maximize the 'data-to-ink' ratio." In practice, our plotting libraries like seaborn do a fairly good job of providing defaults that generally follow these principles.

Bad data

The second problem is bad data, which can involve either cherry-picking data or presenting information in a misleading way.

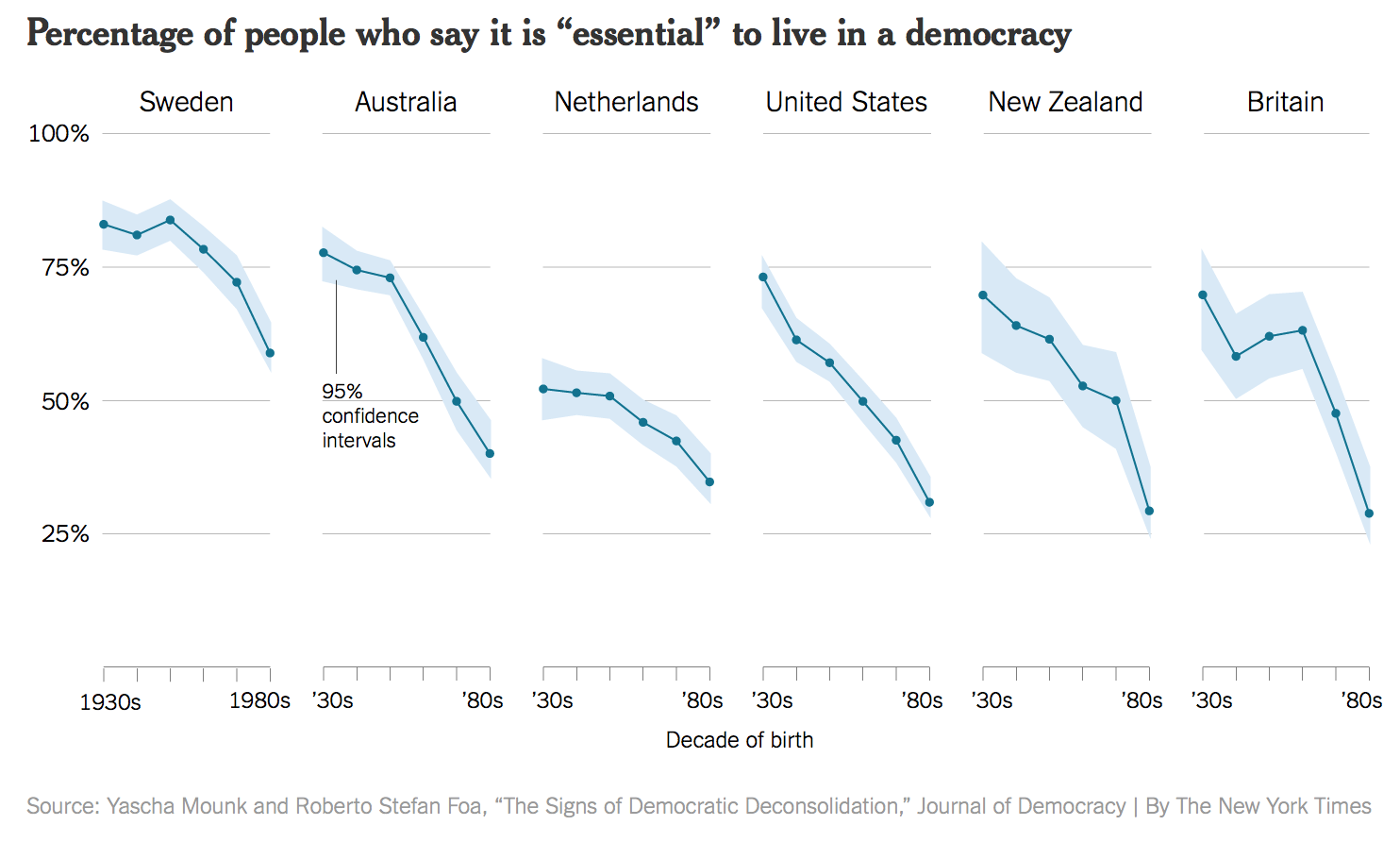

In November of 2016, The New York Times reported on some research on people's confidence in the institutions of democracy. It had been published in an academic journal by the political scientist Yascha Mounk. The headline in the Times ran, "How Stable Are Democracies? 'Warning Signs Are Flashing Red'" (Taub, 2016). The graph accompanying the article

This plot is well-produced, and we could reproduce it by calling sns.relplot as we learned above. The x-axis shows the decade of birth for all people surveyed in the research study.

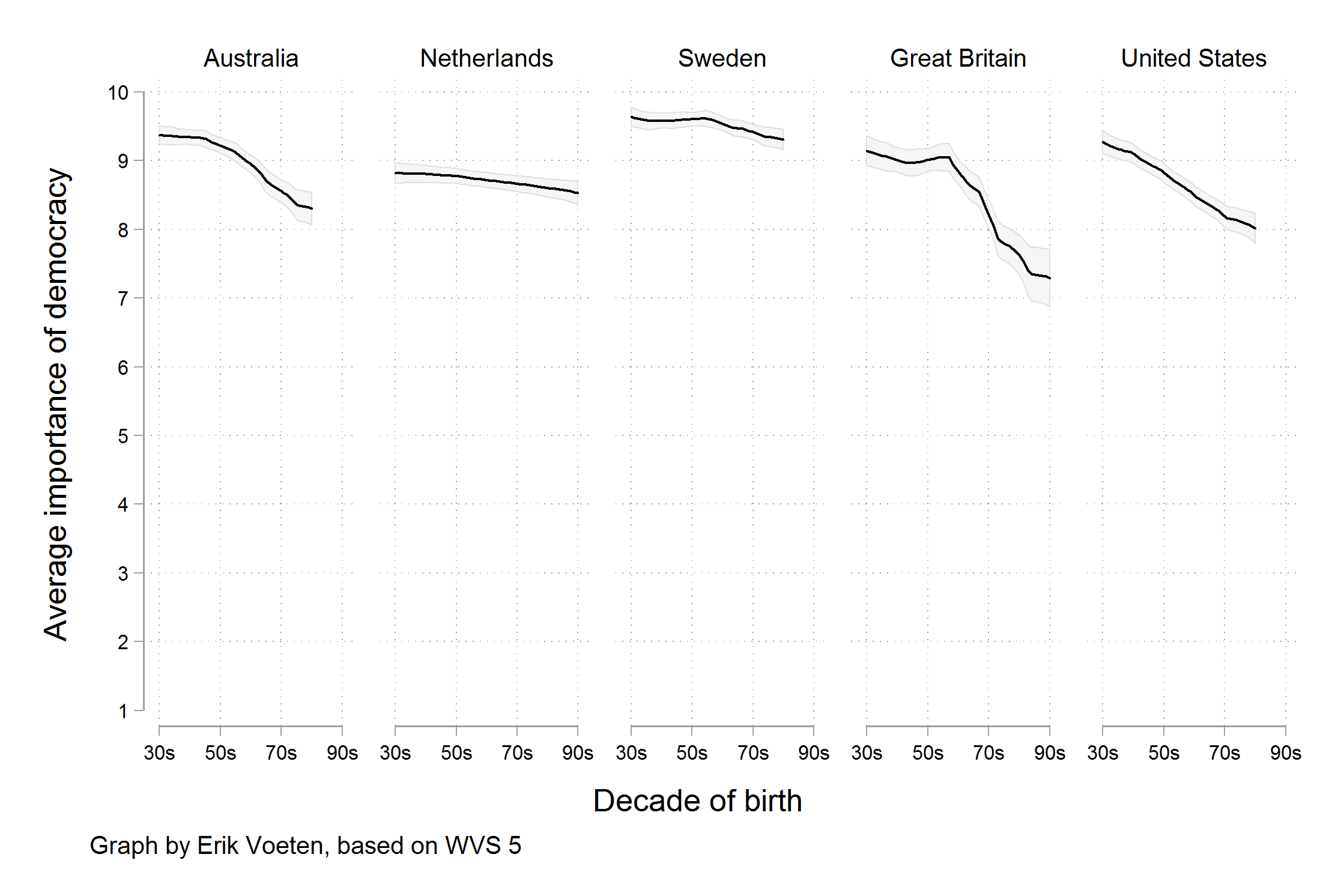

[But] scholars who knew the World Values Survey data underlying the graph noticed something else. The graph reads as though people were asked to say whether they thought it was essential to live in a democracy, and the results plotted show the percentage of respondents who said "Yes", presumably in contrast to those who said "No". But in fact the survey question asked respondents to rate the importance of living in a democracy on a ten point scale, with 1 being "Not at all Important" and 10 being "Absolutely Important". The graph showed the difference across ages of people who had given a score of "10" only, not changes in the average score on the question. As it turns out, while there is some variation by year of birth, most people in these countries tend to rate the importance of living in a democracy very highly, even if they do not all score it as "Absolutely Important". The political scientist Erik Voeten redrew the figure using the average response.

Bad perception

The third problem is bad perception, which refers to how humans process the information contained in a visualization. Let's walk through section 1.3 on "Perception and data visualization".
