
AI in History


Matthew L. Jones, AI in History, The American Historical Review, Volume 128, Issue 3, September 2023, Pages 1360–1367, https://doi.org/10.1093/ahr/rhad361


Late in 1982, Edinburgh professor Donald Michie explained the fundamental error that plagued earlier efforts to create artificial intelligence. “The inductive learning of concepts, rules, strategies, etc. from examples is what confers on the human problem-solver his power and versatility, and not (as had earlier been supposed) power of calculation.” 1 A minority position in 1982, learning from examples came to dominate artificial intelligence early in the new millennium. In a key 2009 manifesto celebrating the “unreasonable effectiveness of data,” three Google researchers argued that “sciences that involve human beings rather than elementary particles have proven more resistant to elegant mathematics.” We should “embrace complexity and make use of the best ally we have: the unreasonable effectiveness of data.” 2

The computer scientist John McCarthy coined the term “artificial intelligence” originally in search of funding; in the mid-2010s, the term was dramatically redefined to describe large-scale algorithmic decision-making systems and predictive machine learning “trained” on massive data sets. 3 Through most of the Cold War and beyond, AI researchers who focused on “symbolic AI” largely ignored data collected from everyday and military activities. 4 Such empiricism of the quotidian paled in prestige in comparison with logic and numerical computation, and the more empirically oriented approaches, such as neural networks and pattern recognition, were widely lambasted. 5 Learning from data seemed to be the wrong approach for producing intelligence or intelligent behaviors. Alongside this symbolic approach, in the USA, USSR, and beyond, a far less prestigious empiricist stratum developed, comprising congeries of techniques for dealing with large-scale military, intelligence, and commercial data. Our contemporary world of AI, with its often-biased algorithmic decision systems, owes far more to this empirical strand of inquiry than to the previously higher-status and much-studied symbolic artificial intelligence. 6


- Online ISSN 1937-5239

- Print ISSN 0002-8762

- Copyright © 2024 The American Historical Association


A Brief History of Artificial Intelligence Research


Christoph Adami; A Brief History of Artificial Intelligence Research. Artif Life 2021; 27 (2): 131–137. doi: https://doi.org/10.1162/artl_a_00349


Day by day, however, the machines are gaining ground upon us; day by day we are becoming more subservient to them; more men are daily bound down as slaves to tend them, more men are daily devoting the energies of their whole lives to the development of mechanical life.

—Samuel Butler, “Darwin Among the Machines,” 1863

Can machines ever be sentient? Could they perceive and feel things; be conscious of their surroundings? From a materialistic point of view the answer must surely be “Yes,” in particular if we accept that we ourselves are mere machines, albeit made from flesh and bones and neurons. But a point of view is not a proof: Only if we succeed in creating sentient machines can this question be answered definitively. But what are the prospects of achieving such a feat? Is it ethical to embark on such a path to begin with? And what...


- Online ISSN 1530-9185

- Print ISSN 1064-5462

A product of The MIT Press


The brief history of artificial intelligence: the world has changed fast — what might be next?

Despite their brief history, computers and AI have fundamentally changed what we see, what we know, and what we do. Little is as important for the world’s future and our own lives as how this history continues.

To see what the future might look like, it is often helpful to study our history. This is what I will do in this article. I retrace the brief history of computers and artificial intelligence to see what we can expect for the future.

How did we get here?

How rapidly the world has changed becomes clear when we notice how ancient even fairly recent computer technology feels today. Mobile phones in the ’90s were big bricks with tiny green displays. Two decades before that, the main storage for computers was punch cards.

In a short period, computers evolved so quickly and became such an integral part of our daily lives that it is easy to forget how recent this technology is. The first digital computers were only invented about eight decades ago, as the timeline shows.

Since the early days of this history, some computer scientists have strived to make machines as intelligent as humans. The next timeline shows some of the notable artificial intelligence (AI) systems and describes what they were capable of.

The first system I mention is Theseus. Built by Claude Shannon in 1950, it was a remote-controlled mouse that could find its way out of a labyrinth and remember its course. 1 In seven decades, the abilities of artificial intelligence have come a long way.

The language and image recognition capabilities of AI systems have developed very rapidly

The chart shows how we got here by zooming into the last two decades of AI development. The plotted data stems from a number of tests in which human and AI performance were evaluated in different domains, from handwriting recognition to language understanding.

Within each domain, the AI system’s initial performance is set to –100, and human performance in these tests serves as the baseline, set to zero. The point where the model’s performance crosses the zero line therefore marks when the AI system scored more points in the relevant test than the humans who took the same test. 2
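The rescaling fixes only two points: the AI system’s first score maps to –100 and the human baseline maps to 0. A minimal sketch, assuming the chart interpolates linearly between those two points (an assumption; the article does not state the formula) and using hypothetical scores rather than the chart’s data:

```python
def normalize_score(score: float, initial: float, human: float) -> float:
    """Rescale a raw benchmark score to the chart's convention.

    Assumption (hypothetical, not stated in the article): a linear map where
    the AI system's initial score -> -100 and the human baseline -> 0.
    Works for "higher is better" and "lower is better" metrics alike,
    because only the direction from `initial` to `human` matters.
    """
    if human == initial:
        raise ValueError("human baseline must differ from the initial score")
    return 100.0 * (score - human) / (human - initial)


# Hypothetical accuracy scores (illustrative only, not the chart's data).
initial_score, human_score = 40.0, 90.0
for raw in (40.0, 65.0, 90.0, 95.0):
    print(f"raw {raw:5.1f} -> normalized {normalize_score(raw, initial_score, human_score):6.1f}")
```

Under this assumption, a positive value means the system has crossed the zero line and outscored the humans who took the same test.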

Just 10 years ago, no machine could reliably provide language or image recognition at a human level. But, as the chart shows, AI systems have become steadily more capable and are now beating humans in tests in all these domains. 3

Outside of these standardized tests, the performance of these AIs is mixed. In some real-world cases, these systems are still performing much worse than humans. On the other hand, some implementations of such AI systems are already so cheap that they are available on the phone in your pocket: image recognition categorizes your photos and speech recognition transcribes what you dictate.

From image recognition to image generation

The previous chart showed the rapid advances in the perceptive abilities of artificial intelligence. AI systems have also become much more capable of generating images.

This series of nine images shows the development over the last nine years. None of the people in these images exist; all were generated by an AI system.

The series begins with an image from 2014 in the top left, a primitive image of a pixelated face in black and white. As the first image in the second row shows, just three years later, AI systems were already able to generate images that were hard to differentiate from a photograph.

In recent years, the capability of AI systems has become much more impressive still. While the early systems focused on generating images of faces, these newer models broadened their capabilities to text-to-image generation based on almost any prompt. The image in the bottom right shows that even the most challenging prompts — such as “A Pomeranian is sitting on the King’s throne wearing a crown. Two tiger soldiers are standing next to the throne” — are turned into photorealistic images within seconds. 5

Timeline of images generated by artificial intelligence 4

Language recognition and production is developing fast

Just as striking as the advances of image-generating AIs is the rapid development of systems that parse and respond to human language.

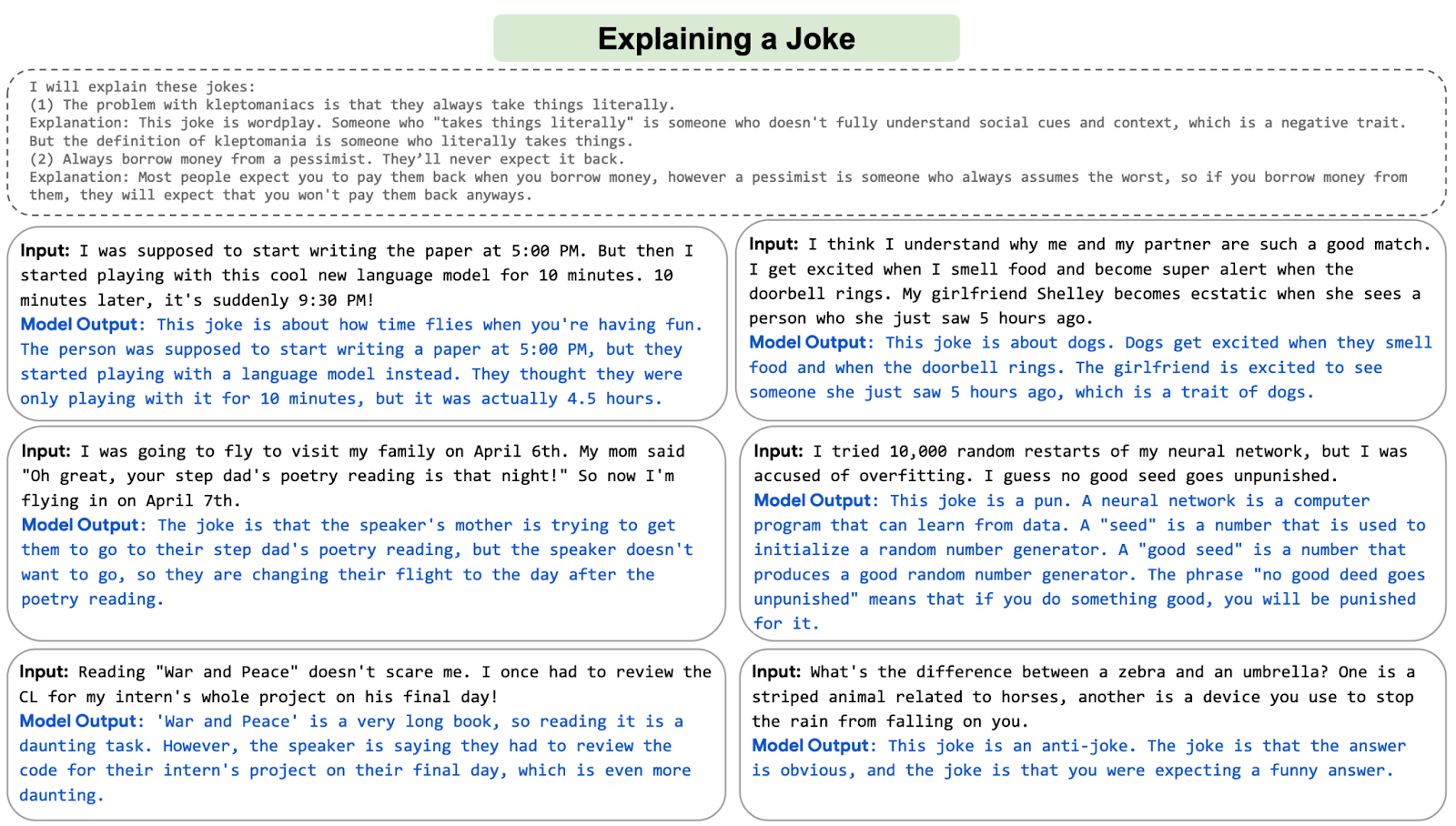

The image shows examples of an AI system developed by Google called PaLM. In these six examples, the system was asked to explain six different jokes. I find the explanation in the bottom right particularly remarkable: the AI explains an anti-joke specifically meant to confuse the listener.

AIs that produce language have entered our world in many ways over the last few years. Emails get auto-completed, massive amounts of online texts get translated, videos get automatically transcribed, school children use language models to do their homework, reports get auto-generated, and media outlets publish AI-generated journalism.

AI systems are not yet able to produce long, coherent texts. In the future, we will see whether the recent developments will slow down — or even end — or whether we will one day read a bestselling novel written by an AI.

Output of the AI system PaLM after being asked to interpret six different jokes 6

Where we are now: AI is here

These rapid advances in AI capabilities have made it possible to use machines in a wide range of new domains:

When you book a flight, it is often an artificial intelligence, no longer a human, that decides what you pay. When you get to the airport, an AI system monitors what you do there. And once you are on the plane, an AI system assists the pilot in flying you to your destination.

AI systems also increasingly determine whether you get a loan, are eligible for welfare, or get hired for a particular job. Increasingly, they help determine who is released from jail.

Several governments have purchased autonomous weapons systems for warfare, and some use AI systems for surveillance and oppression.

AI systems help to program the software you use and translate the texts you read. Virtual assistants, operated by speech recognition, have entered many households over the last decade. Now self-driving cars are becoming a reality.

In the last few years, AI systems have helped to make progress on some of the hardest problems in science.

Large AIs called recommender systems determine what you see on social media, which products are shown to you in online shops, and what gets recommended to you on YouTube. Increasingly they are not just recommending the media we consume, but based on their capacity to generate images and texts, they are also creating the media we consume.

Artificial intelligence is no longer a technology of the future; AI is here, and much of what is reality now would have looked like sci-fi just recently. It is a technology that already impacts all of us, and the list above includes just a few of its many applications.

The wide range of listed applications makes clear that this is a very general technology that can be used by people for some extremely good goals — and some extraordinarily bad ones, too. For such “dual-use technologies”, it is important that all of us develop an understanding of what is happening and how we want the technology to be used.

Just two decades ago, the world was very different. What might AI technology be capable of in the future?

What is next?

The AI systems that we just considered are the result of decades of steady advances in AI technology.

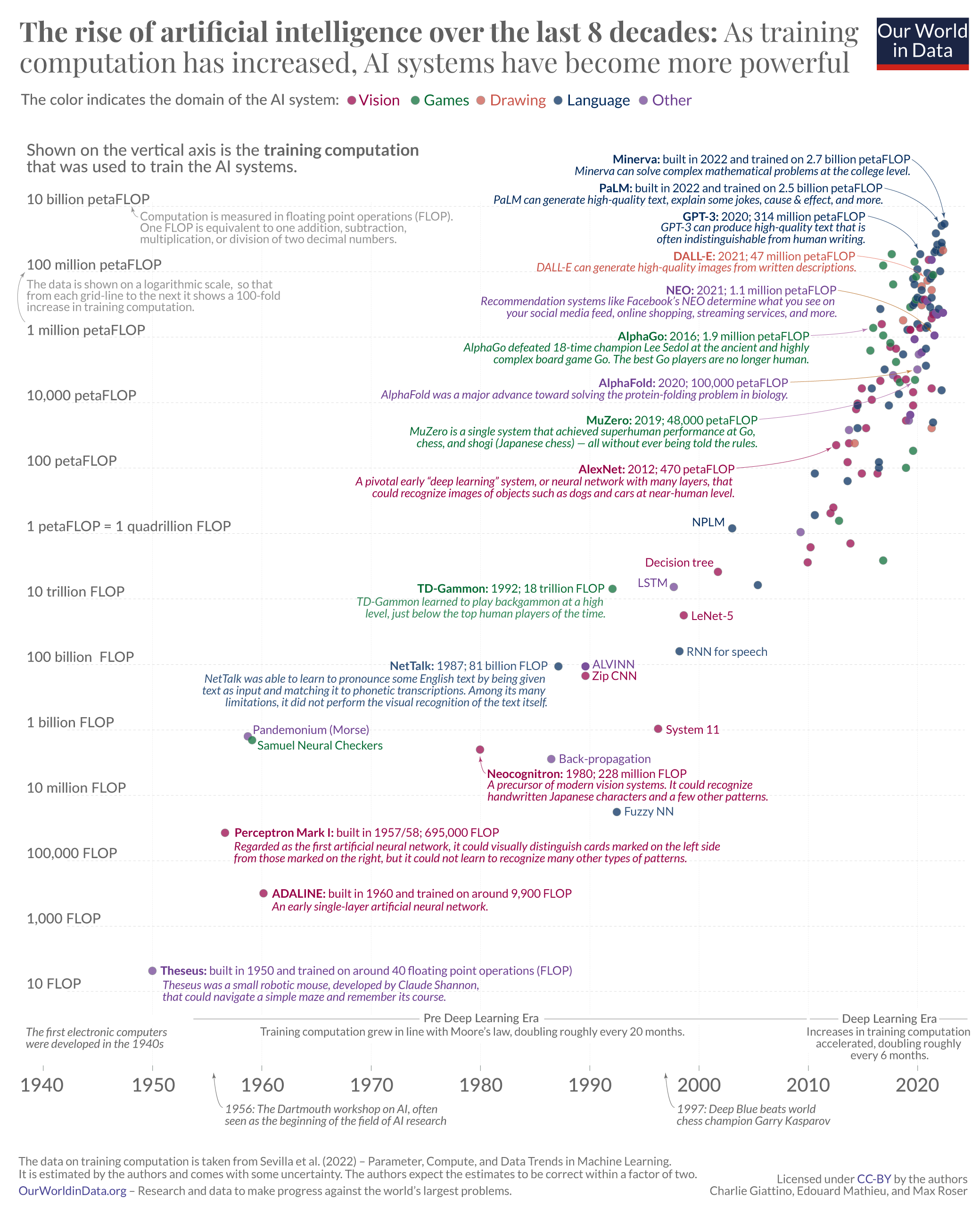

The big chart below brings this history over the last eight decades into perspective. It is based on the dataset produced by Jaime Sevilla and colleagues. 7

The rise of artificial intelligence over the last 8 decades: As training computation has increased, AI systems have become more powerful 8

Each small circle in this chart represents one AI system. The circle’s position on the horizontal axis indicates when the AI system was built, and its position on the vertical axis shows the amount of computation used to train the particular AI system.

Training computation is measured in floating-point operations, or FLOP for short. One FLOP is equivalent to one addition, subtraction, multiplication, or division of two floating-point numbers.
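To make the unit concrete, a small sketch that counts FLOP for two elementary operations using the definition above (one FLOP per addition, subtraction, multiplication, or division). The function names are hypothetical, and the count ignores memory access and hardware details:

```python
PETA = 10**15  # 1 petaFLOP = 10^15 FLOP, the unit used in the chart


def dot_product_flop(n: int) -> int:
    """FLOP for the dot product of two length-n vectors:
    n multiplications plus (n - 1) additions to sum the products."""
    if n < 1:
        raise ValueError("vectors must have at least one element")
    return n + (n - 1)


def matmul_flop(m: int, n: int, p: int) -> int:
    """FLOP for multiplying an (m x n) matrix by an (n x p) matrix:
    the result has m * p entries, each one a length-n dot product."""
    return m * p * dot_product_flop(n)


print(dot_product_flop(3))                    # 5: three multiplications, two additions
print(matmul_flop(1000, 1000, 1000))          # 1999000000, roughly 2 * m * n * p
print(matmul_flop(1000, 1000, 1000) / PETA)   # the same count expressed in petaFLOP
```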

All AI systems that rely on machine learning need to be trained, and in these systems, training computation is one of the three fundamental factors that are driving the capabilities of the system. The other two factors are the algorithms and the input data used for the training. The visualization shows that as training computation has increased, AI systems have become more and more powerful.

The timeline goes back to the 1940s when electronic computers were first invented. The first shown AI system is ‘Theseus’, Claude Shannon’s robotic mouse from 1950 that I mentioned at the beginning. Towards the other end of the timeline, you find AI systems like DALL-E and PaLM; we just discussed their abilities to produce photorealistic images and interpret and generate language. They are among the AI systems that used the largest amount of training computation to date.

The training computation is plotted on a logarithmic scale, so that from each grid line to the next it shows a 100-fold increase. This long-run perspective shows a continuous increase. For the first six decades, training computation increased in line with Moore’s Law, doubling roughly every 20 months. Since about 2010, this exponential growth has sped up further, to a doubling time of just about 6 months. That is an astonishingly fast rate of growth. 9
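The difference between the two doubling times compounds quickly. A minimal sketch that converts the rounded doubling times quoted above (about 20 months and about 6 months) into growth per year, growth per decade, and the time needed to climb one 100-fold grid line; the helper names are hypothetical and pure exponential growth is assumed:

```python
import math


def growth_factor(months: float, doubling_time_months: float) -> float:
    """Multiplicative growth after `months` for a quantity that doubles
    every `doubling_time_months` (pure exponential growth assumed)."""
    return 2 ** (months / doubling_time_months)


def months_per_100x(doubling_time_months: float) -> float:
    """Time to climb one grid line on the chart's log scale (a 100-fold increase)."""
    return math.log2(100) * doubling_time_months


for label, doubling in (("before ~2010", 20.0), ("since ~2010", 6.0)):
    per_year = growth_factor(12, doubling)
    per_decade = growth_factor(120, doubling)
    years_per_line = months_per_100x(doubling) / 12
    print(f"{label}: x{per_year:.2f}/year, x{per_decade:,.0f}/decade, "
          f"{years_per_line:.1f} years per 100-fold grid line")
```

On these rounded figures, a 20-month doubling time gives a 64-fold increase per decade (2^6), while a 6-month doubling time gives about a million-fold increase (2^20).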

The fast doubling times have accrued to large increases. PaLM’s training computation was 2.5 billion petaFLOP, more than 5 million times larger than AlexNet, the AI with the largest training computation just 10 years earlier. 10
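The text gives roughly 2.5 billion petaFLOP for PaLM (2022), and the footnote gives 470 petaFLOP for AlexNet (2012). A short check of the ratio, and of the doubling time it implies if growth between the two systems had been smooth (a simplifying assumption; the chart shows many systems in between):

```python
import math

PALM_PETAFLOP = 2.5e9      # PaLM, 2022 (figure given in the text)
ALEXNET_PETAFLOP = 470.0   # AlexNet, 2012 (figure given in the footnote)
YEARS_BETWEEN = 2022 - 2012

ratio = PALM_PETAFLOP / ALEXNET_PETAFLOP
# Number of doublings needed to go from AlexNet's compute to PaLM's.
doublings = math.log2(ratio)
# Average doubling time if that growth had been spread evenly over the decade.
implied_doubling_months = YEARS_BETWEEN * 12 / doublings

print(f"ratio: {ratio:,.1f}")  # ratio: 5,319,148.9
print(f"doublings: {doublings:.1f}")
print(f"implied doubling time: {implied_doubling_months:.1f} months")
```

The result, a little over 22 doublings in ten years, or one doubling roughly every 5.4 months, is consistent with the doubling time of about 6 months described above.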

Scale-up was already exponential and has sped up substantially over the past decade. What can we learn from this historical development for the future of AI?

Studying the long-run trends to predict the future of AI

AI researchers study these long-term trends to see what is possible in the future. 11

Perhaps the most widely discussed study of this kind was published by AI researcher Ajeya Cotra. She studied the increase in training computation to ask at what point the computation to train an AI system could match that of the human brain. The idea is that, at this point, the AI system would match the capabilities of a human brain. In her latest update, Cotra estimated a 50% probability that such “transformative AI” will be developed by the year 2040, less than two decades from now. 12

In a related article, I discuss what transformative AI would mean for the world. In short, the idea is that such an AI system would be powerful enough to bring the world into a ‘qualitatively different future’. It could lead to a change at the scale of the two earlier major transformations in human history, the agricultural and industrial revolutions. It would certainly represent the most important global change in our lifetimes.

Cotra’s work is particularly relevant in this context as she based her forecast on the kind of historical long-run trend of training computation that we just studied. But it is worth noting that other forecasters who rely on different considerations arrive at broadly similar conclusions. As I show in my article on AI timelines, many AI experts believe that there is a real chance that human-level artificial intelligence will be developed within the next decades, and some believe that it will exist much sooner.

Building a public resource to enable the necessary public conversation

Computers and artificial intelligence have changed our world immensely, but we are still in the early stages of this history. Because this technology feels so familiar, it is easy to forget that all of these technologies we interact with are very recent innovations and that the most profound changes are yet to come.

Artificial intelligence has already changed what we see, what we know, and what we do. This is despite the fact that this technology has had only a brief history.

There are no signs that these trends are hitting any limits anytime soon. On the contrary, particularly over the course of the last decade, the fundamental trends have accelerated: investments in AI technology have rapidly increased, and the doubling time of training computation has shortened to just six months.

All major technological innovations lead to a range of positive and negative consequences. This is already true of artificial intelligence. As this technology becomes more and more powerful, we should expect its impact to grow further.

Because of the importance of AI, we should all be able to form an opinion on where this technology is heading and understand how this development is changing our world. For this purpose, we are building a repository of AI-related metrics, which you can find on OurWorldinData.org/artificial-intelligence.

We are still in the early stages of this history, and much of what will become possible is yet to come. A technological development as powerful as this should be at the center of our attention. Little might be as important for how the future of our world — and the future of our lives — will play out.

Acknowledgments: I would like to thank my colleagues Natasha Ahuja, Daniel Bachler, Julia Broden, Charlie Giattino, Bastian Herre, Edouard Mathieu, and Ike Saunders for their helpful comments on drafts of this essay and their contributions to preparing the visualizations.

On Theseus, see Daniel Klein (2019), “Mighty mouse,” published in MIT Technology Review, and this video on YouTube of a presentation by its inventor, Claude Shannon.

The chart shows that the speed at which these AI technologies developed increased over time. Systems for which development was started early — handwriting and speech recognition — took more than a decade to approach human-level performance, while more recent AI developments led to systems that overtook humans in only a few years. However, one should not overstate this point. To some extent, this is dependent on when the researchers started to compare machine and human performance. One could have started evaluating the system for language understanding much earlier, and its development would appear much slower in this presentation of the data.

It is important to remember that while these are remarkable achievements — and show very rapid gains — these are the results from specific benchmarking tests. Outside of tests, AI models can fail in surprising ways and do not reliably achieve performance that is comparable with human capabilities.

The relevant publications are the following:

2014: Goodfellow et al.: Generative Adversarial Networks

2015: Radford, Metz, and Chintala: Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks

2016: Liu and Tuzel: Coupled Generative Adversarial Networks

2017: Karras et al.: Progressive Growing of GANs for Improved Quality, Stability, and Variation

2018: Karras, Laine, and Aila: A Style-Based Generator Architecture for Generative Adversarial Networks (StyleGAN from NVIDIA)

2019: Karras et al.: Analyzing and Improving the Image Quality of StyleGAN

Faces generated by this technology can be found on thispersondoesnotexist.com.

2020: Ho, Jain, and Abbeel: Denoising Diffusion Probabilistic Models

2021: Ramesh et al.: Zero-Shot Text-to-Image Generation (first DALL-E from OpenAI; blog post). See also Ramesh et al. (2022): Hierarchical Text-Conditional Image Generation with CLIP Latents (DALL-E 2 from OpenAI; blog post).

2022: Saharia et al.: Photorealistic Text-to-Image Diffusion Models with Deep Language Understanding (Google’s Imagen; blog post)

Because these systems have become so powerful, the latest AI systems often don’t allow the user to generate images of human faces to prevent abuse.

From Chowdhery et al. (2022), PaLM: Scaling Language Modeling with Pathways. Published on arXiv on 7 Apr 2022.

See the footnote on the chart's title for the references and additional information.

The data is taken from Jaime Sevilla, Lennart Heim, Anson Ho, Tamay Besiroglu, Marius Hobbhahn, and Pablo Villalobos (2022), Compute Trends Across Three Eras of Machine Learning. Published on arXiv on March 9, 2022. See also their post on the Alignment Forum.

The authors regularly update and extend their dataset, a helpful service to the AI research community. At Our World in Data, my colleague Charlie Giattino regularly updates the interactive version of this chart with the latest data made available by Sevilla and coauthors.

See also these two related charts:

Number of parameters in notable artificial intelligence systems

Number of datapoints used to train notable artificial intelligence systems

At some point in the future, training computation is expected to slow down to the exponential growth rate of Moore's Law. Tamay Besiroglu, Lennart Heim, and Jaime Sevilla of the Epoch team estimate in their report that the highest probability for this reversion occurring is in the early 2030s.

The training computation of PaLM, developed in 2022, was 2,500,000,000 petaFLOP. The training computation of AlexNet, the AI with the largest training computation up to 2012, was 470 petaFLOP. 2,500,000,000 petaFLOP / 470 petaFLOP ≈ 5,319,148.9. At the same time, the amount of training computation required to achieve a given performance has been falling exponentially.

The costs have also increased quickly. The cost to train PaLM is estimated to be $9–$23 million, according to Lennart Heim, a researcher on the Epoch team. See Lennart Heim (2022), Estimating PaLM's training cost.

Scaling up the size of neural networks, in terms of the number of parameters and the amount of training data and computation, has led to surprising increases in the capabilities of AI systems. This realization motivated the “scaling hypothesis.” See Gwern Branwen (2020), The Scaling Hypothesis.

Her research was announced in various places, including on the AI Alignment Forum: Ajeya Cotra (2020), Draft report on AI timelines. As far as I know, the report always remained a “draft report” and was published on Google Docs.

The cited estimate stems from Cotra’s Two-year update on my personal AI timelines, in which she shortened her median timeline by 10 years.

Cotra emphasizes that there are substantial uncertainties around her estimates and therefore communicates her findings as a range of scenarios. She published her main study in 2020; her median estimate at the time was around the year 2050, the point by which she assigned a 50% probability to the computation required to train such a model becoming affordable. In her “most conservative plausible” scenario, this point is pushed back to around 2090, and in her “most aggressive plausible” scenario, it is reached in 2040.

The same is true for most other forecasters: all emphasize the large uncertainty associated with their forecasts.

It is worth emphasizing that estimates of the computation performed by the human brain are highly uncertain. See Joseph Carlsmith’s New Report on How Much Computational Power It Takes to Match the Human Brain from 2020.


Reuse this work freely

All visualizations, data, and code produced by Our World in Data are completely open access under the Creative Commons BY license. You have permission to use, distribute, and reproduce these in any medium, provided the source and authors are credited.

The data produced by third parties and made available by Our World in Data is subject to the license terms from the original third-party authors. We will always indicate the original source of the data in our documentation, so you should always check the license of any such third-party data before use and redistribution.


History of Artificial Intelligence

- First Online: 29 April 2021


- Gerard O’Regan


This chapter presents a short history of artificial intelligence (AI) and discusses the Turing Test, a test of machine intelligence. We discuss strong and weak AI: strong AI considers an AI-programmed computer to be essentially a mind, whereas weak AI considers an AI computer to simulate thought without real understanding. We discuss Searle’s Chinese room, a rebuttal of strong AI, along with philosophical issues in AI and Weizenbaum’s views on the ethics of AI. AI has many subfields, and we discuss logic, neural networks, and expert systems.


This long-term goal may be hundreds of years away, as an early breakthrough in machine intelligence is unlikely while deep philosophical problems remain to be solved.

Weizenbaum was a computer scientist who invented the ELIZA program, which simulated a psychotherapist in dialogue with a patient. He was initially an advocate of artificial intelligence but later became a critic.
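ELIZA produced its replies by matching keywords in the user’s input and reassembling fragments of that input into a response. A toy sketch of the idea, assuming a few hypothetical rules written for illustration; they are not Weizenbaum’s original DOCTOR script, which was considerably richer:

```python
import re

# Hypothetical toy rules (NOT Weizenbaum's DOCTOR script): a keyword pattern
# that captures part of the input, plus a template that reuses the capture.
RULES = [
    (re.compile(r"\bi need (.*)", re.I), "Why do you need {0}?"),
    (re.compile(r"\bi am (.*)", re.I), "How long have you been {0}?"),
    (re.compile(r"\bmy (.*)", re.I), "Tell me more about your {0}."),
]
DEFAULT = "Please go on."

# Swap first and second person so the reply points back at the user.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are",
               "you": "I", "your": "my"}


def reflect(fragment: str) -> str:
    """Lowercase the captured fragment and swap pronouns word by word."""
    return " ".join(REFLECTIONS.get(w, w) for w in fragment.lower().split())


def respond(utterance: str) -> str:
    """Return the reply for the first rule whose pattern matches, else a default."""
    text = utterance.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(reflect(match.group(1)))
    return DEFAULT


print(respond("I am unhappy with my job."))  # How long have you been unhappy with your job?
```

The program has no model of meaning at all; the appearance of understanding comes entirely from reflecting the user’s own words, which is part of what later troubled Weizenbaum.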

Descartes’ ontological argument is similar to St. Anselm’s argument for the existence of God and implicitly treats existence as a predicate (a view refuted by Kant).

An automaton is a self-operating machine or mechanism that behaves and responds in a mechanical way.

Russell is said to have remarked that he was delighted to see that the Principia Mathematica could be done by machine, and that if he and Whitehead had known this in advance, they would not have wasted 10 years doing this work by hand in the early twentieth century. The Logic Theorist (LT) program succeeded in proving 38 of the 52 theorems in chapter 2 of Principia Mathematica. Its approach was to start with the theorem to be proved and then search for relevant axioms and operators to prove it.
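Working backward from the goal can be illustrated with propositional backward chaining. This is a simplified sketch, not the Logic Theorist’s actual procedure: the rule base is hypothetical, and premises are plain symbols rather than formulas from Principia Mathematica.

```python
# Hypothetical rule base (illustration only). Each conclusion maps to a list of
# alternative premise lists; an empty premise list marks an axiom.
Rules = dict[str, list[list[str]]]

RULES: Rules = {
    "p": [[]],           # axiom: p
    "q": [["p"]],        # p -> q
    "r": [["q", "p"]],   # q and p -> r
    "s": [["t"]],        # t -> s, but nothing establishes t
}


def prove(goal: str, rules: Rules, seen: frozenset[str] = frozenset()) -> bool:
    """Backward chaining: start from `goal`, find a rule that concludes it,
    then try to prove each of that rule's premises in turn."""
    if goal in seen:  # refuse circular reasoning (goal already on the current path)
        return False
    for premises in rules.get(goal, []):
        if all(prove(premise, rules, seen | {goal}) for premise in premises):
            return True
    return False


print(prove("r", RULES))  # True: r needs q and p; q needs p; p is an axiom
print(prove("s", RULES))  # False: no rule concludes t
```

Starting from the goal keeps the search focused on rules whose conclusions are relevant to it, rather than deriving every consequence of the axioms.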

The machine would somehow need to know what premises are relevant and should be selected in applying the deductive method from the many premises that are already encoded. This is nontrivial.

Common sense includes basic facts about events, beliefs, actions, knowledge, and desires. It also includes basic facts about objects and their properties.

Rogerian psychotherapy (person-centered therapy) was developed by Carl Rogers in the 1940s.

Eliza Doolittle was a working-class character in Shaw’s play Pygmalion. She is taught to speak with an upper-class English accent.

The term “Miletians” refers to inhabitants of the Greek city-state of Miletus, which was located in what is now Turkey. Anaximander and Anaximenes were two other Miletians who contributed to early Greek philosophy in the sixth century B.C.

Plato was an idealist, i.e., he held that reality lies in the world of ideas rather than in the external world. Realists, in contrast, believe that the external world corresponds to our mental ideas.

Berkeley was an Irish philosopher born at Dysart Castle in County Kilkenny, Ireland. He was educated at Trinity College, Dublin, and served as bishop of Cloyne in County Cork. He planned to establish a seminary in Bermuda to educate the sons of colonists in America, but the project failed due to a lack of funding from the British government. The city of Berkeley, California, home of the University of California, Berkeley, is named after him.

Berkeley’s ontology holds that for an entity to exist it must be perceived, i.e., “Esse est percipi.” He argues that “It is an opinion strangely prevailing amongst men, that houses, mountains, rivers, and in a word all sensible objects have an existence natural or real, distinct from being perceived.” This led to a famous limerick that poked fun at Berkeley’s theory: “There once was a man who said ‘God / Must think it exceedingly odd / To find that this tree / Continues to be / When there is no one around in the Quad.’” The reply to this limerick was appropriately: “Dear sir, your astonishment’s odd; / I am always around in the Quad; / And that’s why this tree / Will continue to be, / Since observed by, yours faithfully, God.”

Hume argues that these principles apply to subjects such as theology, whose foundations lie in faith and divine revelation, which are neither matters of fact nor relations of ideas.

“When we run over libraries, persuaded of these principles, what havoc must we make? If we take in our hand any volume; of divinity or school metaphysics, for instance; let us ask, Does it contain any abstract reasoning concerning quantity or number? No. Does it contain any experimental reasoning concerning matter of fact and existence? No. Commit it then to the flames: for it can contain nothing but sophistry and illusion.”

Merleau-Ponty was a French philosopher who was strongly influenced by the phenomenology of Husserl. He was also closely associated with the French existentialist philosophers such as Jean-Paul Sartre and Simone de Beauvoir.

Atomism goes back to the ancient Greeks and was originally developed by Leucippus and his student Democritus in the fifth century BC. Plato rejected atomism in his dialogue the Timaeus.

Cybernetics was defined by Couffignal (one of its pioneers) as the art of ensuring the efficacy of action.

Neurons are essentially the transmission lines of the nervous system. They are microscopic in diameter and conduct electrical impulses. Each neuron receives input through its dendrites and sends output along its axon.

Dendrites extend like the branches of a tree; the word dendrite comes from the Greek δένδρον (dendron), meaning tree.

The brain works through massive parallel processing.

The firing of a neuron means that it sends off a new signal with a particular strength (or weight).
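The notes above describe neurons firing and the brain's parallel processing. Artificial neural networks abstract this idea into a simple threshold unit in the style of McCulloch and Pitts: each incoming signal is multiplied by a connection weight, and the unit fires when the weighted sum reaches a threshold. The Python sketch below is a deliberately simplified illustration, not a model of biological neurons; the function names and the AND/OR weights and thresholds are hypothetical choices.

```python
from typing import List, Sequence


def neuron_fires(inputs: Sequence[float], weights: Sequence[float], threshold: float) -> bool:
    """Threshold unit: fire if the weighted sum of the inputs reaches the threshold."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    activation = sum(x * w for x, w in zip(inputs, weights))
    return activation >= threshold


def layer_outputs(
    inputs: Sequence[float],
    weight_rows: Sequence[Sequence[float]],
    thresholds: Sequence[float],
) -> List[bool]:
    """Evaluate several independent units on the same inputs.

    Each unit depends only on the shared inputs, so these calls could run in
    parallel; this sketch simply evaluates them one after another.
    """
    return [neuron_fires(inputs, w, t) for w, t in zip(weight_rows, thresholds)]


if __name__ == "__main__":
    # Hypothetical example: with weights [1, 1], threshold 2 behaves like
    # logical AND and threshold 1 behaves like logical OR.
    for a in (0, 1):
        for b in (0, 1):
            and_out, or_out = layer_outputs([a, b], [[1, 1], [1, 1]], [2, 1])
            print(a, b, "AND:", and_out, "OR:", or_out)
```

Real networks replace the hard threshold with a smooth activation function and learn the weights from examples, but the weighted-sum-then-fire structure is the same.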

Principles of Human Knowledge. George Berkeley. Oxford University Press. 1999. (Originally published in 1710).


Discourse on Method and Meditations on First Philosophy, 4th Edition. René Descartes. Translated by Donald Cress. Hackett Publishing Company. 1999.

On Formally Undecidable Propositions of Principia Mathematica and Related Systems, I (Über formal unentscheidbare Sätze der Principia Mathematica und verwandter Systeme, I). Kurt Gödel. Monatshefte für Mathematik und Physik 38: 173–98. 1931.

An Enquiry concerning Human Understanding. David Hume. Digireads.com. 2006. (Originally published in 1748).

Critique of Pure Reason. Immanuel Kant. Dover Publications. 2003. (Originally published in 1781).

Programs with Common Sense. John McCarthy. Proceedings of the Teddington Conference on the Mechanization of Thought Processes. 1959.

Giants of Computing. Gerard O’Regan. Springer Verlag. 2013.

Principia Mathematica. A.N. Whitehead and B. Russell. Cambridge University Press. Cambridge. 1910.

Minds, Brains, and Programs. John Searle. The Behavioral and Brain Sciences 3(3): 417–457. 1980.

Computing Machinery and Intelligence. Alan Turing. Mind 59(236): 433–460. 1950.

ELIZA: A Computer Program for the Study of Natural Language Communication between Man and Machine. Joseph Weizenbaum. Communications of the ACM 9(1): 36–45. 1966.

Computer Power and Human Reason: From Judgment to Calculation. Joseph Weizenbaum. W.H. Freeman & Co Ltd. 1976.


Author information

Authors and affiliations.

Mallow, Co. Cork, Ireland

Gerard O’Regan



Copyright information

© 2021 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this chapter

O’Regan, G. (2021). History of Artificial Intelligence. In: A Brief History of Computing. Springer, Cham. https://doi.org/10.1007/978-3-030-66599-9_22


DOI: https://doi.org/10.1007/978-3-030-66599-9_22

Published: 29 April 2021

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-66598-2

Online ISBN: 978-3-030-66599-9


- Published: 02 August 2023

Scientific discovery in the age of artificial intelligence

Hanchen Wang, Tianfan Fu, Yuanqi Du, Wenhao Gao, Kexin Huang, Ziming Liu, Payal Chandak, Shengchao Liu, Peter Van Katwyk, Andreea Deac, Anima Anandkumar, Karianne Bergen, Carla P. Gomes, Shirley Ho, Pushmeet Kohli, Joan Lasenby, Jure Leskovec, Tie-Yan Liu, Arjun Manrai, Debora Marks, Bharath Ramsundar, Le Song, Jimeng Sun, Jian Tang, Petar Veličković, Max Welling, Linfeng Zhang, Connor W. Coley, Yoshua Bengio & Marinka Zitnik

Nature volume 620, pages 47–60 (2023)


A Publisher Correction to this article was published on 30 August 2023


Artificial intelligence (AI) is being increasingly integrated into scientific discovery to augment and accelerate research, helping scientists to generate hypotheses, design experiments, collect and interpret large datasets, and gain insights that might not have been possible using traditional scientific methods alone. Here we examine breakthroughs over the past decade that include self-supervised learning, which allows models to be trained on vast amounts of unlabelled data, and geometric deep learning, which leverages knowledge about the structure of scientific data to enhance model accuracy and efficiency. Generative AI methods can create designs, such as small-molecule drugs and proteins, by analysing diverse data modalities, including images and sequences. We discuss how these methods can help scientists throughout the scientific process and the central issues that remain despite such advances. Both developers and users of AI tools need a better understanding of when such approaches need improvement, and challenges posed by poor data quality and stewardship remain. These issues cut across scientific disciplines and require developing foundational algorithmic approaches that can contribute to scientific understanding or acquire it autonomously, making them critical areas of focus for AI innovation.
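The abstract's point that geometric deep learning builds knowledge about the structure of scientific data into a model can be illustrated with one of the simplest cases, permutation symmetry. The atoms of a molecule, or the particles in a collision event, form a set with no natural order, so a prediction should not change when the elements are listed differently. A Deep Sets-style model achieves this by encoding each element separately and summing the encodings before a final readout. The Python sketch below is a minimal, untrained illustration of that construction, not the method of the article; the weights `PHI_W` and `RHO_W` and the two-number element features are hypothetical.

```python
import math
from itertools import permutations
from typing import List, Sequence

# Hypothetical fixed weights; a real model would learn these from data.
PHI_W = [[0.5, -1.0], [1.5, 0.25]]  # per-element encoder: 2 features -> 2-d embedding
RHO_W = [1.0, -0.5]                 # readout: pooled 2-d embedding -> scalar


def phi(element: Sequence[float]) -> List[float]:
    """Encode one element with a linear map followed by ReLU."""
    return [max(0.0, sum(w * x for w, x in zip(row, element))) for row in PHI_W]


def set_model(elements: Sequence[Sequence[float]]) -> float:
    """Sum the per-element embeddings, then apply a linear readout.

    Summation ignores order, so the output is invariant to permuting the
    elements (up to floating-point rounding).
    """
    pooled = [0.0] * len(PHI_W)
    for element in elements:
        for i, value in enumerate(phi(element)):
            pooled[i] += value
    return sum(w * p for w, p in zip(RHO_W, pooled))


if __name__ == "__main__":
    atoms = [[1.0, 2.0], [0.3, -0.7], [2.0, 0.1]]  # hypothetical element features
    reference = set_model(atoms)
    for order in permutations(atoms):
        assert math.isclose(set_model(order), reference)
    print("same prediction for all", math.factorial(len(atoms)), "orderings:", reference)
```

By contrast, a model that concatenated the element features in the listed order would generally give different outputs for different orderings and would have to learn the symmetry from data rather than having it built in.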


Change history

30 August 2023

A Correction to this paper has been published: https://doi.org/10.1038/s41586-023-06559-7

LeCun, Y., Bengio, Y. & Hinton, G. Deep learning. Nature 521 , 436–444 (2015). This survey summarizes key elements of deep learning and its development in speech recognition, computer vision and and natural language processing .

Article ADS CAS PubMed Google Scholar

de Regt, H. W. Understanding, values, and the aims of science. Phil. Sci. 87 , 921–932 (2020).

Article MathSciNet Google Scholar

Pickstone, J. V. Ways of Knowing: A New History of Science, Technology, and Medicine (Univ. Chicago Press, 2001).

Han, J. et al. Deep potential: a general representation of a many-body potential energy surface. Commun. Comput. Phys. 23 , 629–639 (2018). This paper introduced a deep neural network architecture that learns the potential energy surface of many-body systems while respecting the underlying symmetries of the system by incorporating group theory.

Akiyama, K. et al. First M87 Event Horizon Telescope results. IV. Imaging the central supermassive black hole. Astrophys. J. Lett. 875 , L4 (2019).

Article ADS CAS Google Scholar

Wagner, A. Z. Constructions in combinatorics via neural networks. Preprint at https://arxiv.org/abs/2104.14516 (2021).

Coley, C. W. et al. A robotic platform for flow synthesis of organic compounds informed by AI planning. Science 365 , eaax1566 (2019).

Article CAS PubMed Google Scholar

Bommasani, R. et al. On the opportunities and risks of foundation models. Preprint at https://arxiv.org/abs/2108.07258 (2021).

Davies, A. et al. Advancing mathematics by guiding human intuition with AI. Nature 600 , 70–74 (2021). This paper explores how AI can aid the development of pure mathematics by guiding mathematical intuition.

Article ADS CAS PubMed PubMed Central MATH Google Scholar

Jumper, J. et al. Highly accurate protein structure prediction with AlphaFold. Nature 596 , 583–589 (2021). This study was the first to demonstrate the ability to predict protein folding structures using AI methods with a high degree of accuracy, achieving results that are at or near the experimental resolution. This accomplishment is particularly noteworthy, as predicting protein folding has been a grand challenge in the field of molecular biology for over 50 years.

Article ADS CAS PubMed PubMed Central Google Scholar

Stokes, J. M. et al. A deep learning approach to antibiotic discovery. Cell 180 , 688–702 (2020).

Article CAS PubMed PubMed Central Google Scholar

Bohacek, R. S., McMartin, C. & Guida, W. C. The art and practice of structure-based drug design: a molecular modeling perspective. Med. Res. Rev. 16 , 3–50 (1996).

Bileschi, M. L. et al. Using deep learning to annotate the protein universe. Nat. Biotechnol. 40 , 932–937 (2022).

Bellemare, M. G. et al. Autonomous navigation of stratospheric balloons using reinforcement learning. Nature 588 , 77–82 (2020). This paper describes a reinforcement-learning algorithm for navigating a super-pressure balloon in the stratosphere, making real-time decisions in the changing environment.

Tshitoyan, V. et al. Unsupervised word embeddings capture latent knowledge from materials science literature. Nature 571 , 95–98 (2019).

Zhang, L. et al. Deep potential molecular dynamics: a scalable model with the accuracy of quantum mechanics. Phys. Rev. Lett. 120 , 143001 (2018).

Deiana, A. M. et al. Applications and techniques for fast machine learning in science. Front. Big Data 5 , 787421 (2022).

Karagiorgi, G. et al. Machine learning in the search for new fundamental physics. Nat. Rev. Phys. 4 , 399–412 (2022).

Zhou, C. & Paffenroth, R. C. Anomaly detection with robust deep autoencoders. In ACM SIGKDD International Conference on Knowledge Discovery and Data Mining 665–674 (2017).

Hinton, G. E. & Salakhutdinov, R. R. Reducing the dimensionality of data with neural networks. Science 313 , 504–507 (2006).

Article ADS MathSciNet CAS PubMed MATH Google Scholar

Kasieczka, G. et al. The LHC Olympics 2020 a community challenge for anomaly detection in high energy physics. Rep. Prog. Phys. 84 , 124201 (2021).

Govorkova, E. et al. Autoencoders on field-programmable gate arrays for real-time, unsupervised new physics detection at 40 MHz at the Large Hadron Collider. Nat. Mach. Intell. 4 , 154–161 (2022).

Article Google Scholar

Chamberland, M. et al. Detecting microstructural deviations in individuals with deep diffusion MRI tractometry. Nat. Comput. Sci. 1 , 598–606 (2021).

Article PubMed PubMed Central Google Scholar

Rafique, M. et al. Delegated regressor, a robust approach for automated anomaly detection in the soil radon time series data. Sci. Rep. 10 , 3004 (2020).

Pastore, V. P. et al. Annotation-free learning of plankton for classification and anomaly detection. Sci. Rep. 10 , 12142 (2020).

Naul, B. et al. A recurrent neural network for classification of unevenly sampled variable stars. Nat. Astron. 2 , 151–155 (2018).

Article ADS Google Scholar

Lee, D.-H. et al. Pseudo-label: the simple and efficient semi-supervised learning method for deep neural networks. In ICML Workshop on Challenges in Representation Learning (2013).

Zhou, D. et al. Learning with local and global consistency. In Advances in Neural Information Processing Systems 16 , 321–328 (2003).

Radivojac, P. et al. A large-scale evaluation of computational protein function prediction. Nat. Methods 10 , 221–227 (2013).

Barkas, N. et al. Joint analysis of heterogeneous single-cell RNA-seq dataset collections. Nat. Methods 16 , 695–698 (2019).

Tran, K. & Ulissi, Z. W. Active learning across intermetallics to guide discovery of electrocatalysts for CO 2 reduction and H 2 evolution. Nat. Catal. 1 , 696–703 (2018).

Article CAS Google Scholar

Jablonka, K. M. et al. Bias free multiobjective active learning for materials design and discovery. Nat. Commun. 12 , 2312 (2021).

Roussel, R. et al. Turn-key constrained parameter space exploration for particle accelerators using Bayesian active learning. Nat. Commun. 12 , 5612 (2021).

Ratner, A. J. et al. Data programming: creating large training sets, quickly. In Advances in Neural Information Processing Systems 29 , 3567–3575 (2016).

Ratner, A. et al. Snorkel: rapid training data creation with weak supervision. In International Conference on Very Large Data Bases 11 , 269–282 (2017). This paper presents a weakly-supervised AI system designed to annotate massive amounts of data using labeling functions.

Butter, A. et al. GANplifying event samples. SciPost Phys. 10 , 139 (2021).

Brown, T. et al. Language models are few-shot learners. In Advances in Neural Information Processing Systems 33 , 1877–1901 (2020).

Ramesh, A. et al. Zero-shot text-to-image generation. In International Conference on Machine Learning 139 , 8821–8831 (2021).

Littman, M. L. Reinforcement learning improves behaviour from evaluative feedback. Nature 521 , 445–451 (2015).

Cubuk, E. D. et al. Autoaugment: learning augmentation strategies from data. In IEEE Conference on Computer Vision and Pattern Recognition 113–123 (2019).

Reed, C. J. et al. Selfaugment: automatic augmentation policies for self-supervised learning. In IEEE Conference on Computer Vision and Pattern Recognition 2674–2683 (2021).

ATLAS Collaboration et al. Deep generative models for fast photon shower simulation in ATLAS. Preprint at https://arxiv.org/abs/2210.06204 (2022).

Mahmood, F. et al. Deep adversarial training for multi-organ nuclei segmentation in histopathology images. IEEE Trans. Med. Imaging 39 , 3257–3267 (2019).

Teixeira, B. et al. Generating synthetic X-ray images of a person from the surface geometry. In IEEE Conference on Computer Vision and Pattern Recognition 9059–9067 (2018).

Lee, D., Moon, W.-J. & Ye, J. C. Assessing the importance of magnetic resonance contrasts using collaborative generative adversarial networks. Nat. Mach. Intell. 2 , 34–42 (2020).

Kench, S. & Cooper, S. J. Generating three-dimensional structures from a two-dimensional slice with generative adversarial network-based dimensionality expansion. Nat. Mach. Intell. 3 , 299–305 (2021).

Wan, C. & Jones, D. T. Protein function prediction is improved by creating synthetic feature samples with generative adversarial networks. Nat. Mach. Intell. 2 , 540–550 (2020).

Repecka, D. et al. Expanding functional protein sequence spaces using generative adversarial networks. Nat. Mach. Intell. 3 , 324–333 (2021).

Marouf, M. et al. Realistic in silico generation and augmentation of single-cell RNA-seq data using generative adversarial networks. Nat. Commun. 11 , 166 (2020).

Ghahramani, Z. Probabilistic machine learning and artificial intelligence. Nature 521 , 452–459 (2015). This survey provides an introduction to probabilistic machine learning, which involves the representation and manipulation of uncertainty in models and predictions, playing a central role in scientific data analysis.

Cogan, J. et al. Jet-images: computer vision inspired techniques for jet tagging. J. High Energy Phys. 2015 , 118 (2015).

Zhao, W. et al. Sparse deconvolution improves the resolution of live-cell super-resolution fluorescence microscopy. Nat. Biotechnol. 40 , 606–617 (2022).

Brbić, M. et al. MARS: discovering novel cell types across heterogeneous single-cell experiments. Nat. Methods 17 , 1200–1206 (2020).

Article PubMed Google Scholar

Qiao, C. et al. Evaluation and development of deep neural networks for image super-resolution in optical microscopy. Nat. Methods 18 , 194–202 (2021).

Andreassen, A. et al. OmniFold: a method to simultaneously unfold all observables. Phys. Rev. Lett. 124 , 182001 (2020).

Bergenstråhle, L. et al. Super-resolved spatial transcriptomics by deep data fusion. Nat. Biotechnol. 40 , 476–479 (2021).

Vincent, P. et al. Extracting and composing robust features with denoising autoencoders. In International Conference on Machine Learning 1096–1103 (2008).

Kingma, D. P. & Welling, M. Auto-encoding variational Bayes. In International Conference on Learning Representations (2014).

Eraslan, G. et al. Single-cell RNA-seq denoising using a deep count autoencoder. Nat. Commun. 10 , 390 (2019).

Goodfellow, I., Bengio, Y. & Courville, A. Deep Learning (MIT Press, 2016).

Olshausen, B. A. & Field, D. J. Emergence of simple-cell receptive field properties by learning a sparse code for natural images. Nature 381 , 607–609 (1996).

Bengio, Y. Deep learning of representations for unsupervised and transfer learning. In ICML Workshop on Unsupervised and Transfer Learning (2012).

Detlefsen, N. S., Hauberg, S. & Boomsma, W. Learning meaningful representations of protein sequences. Nat. Commun. 13 , 1914 (2022).

Becht, E. et al. Dimensionality reduction for visualizing single-cell data using UMAP. Nat. Biotechnol. 37 , 38–44 (2019).

Bronstein, M. M. et al. Geometric deep learning: going beyond euclidean data. IEEE Signal Process Mag. 34 , 18–42 (2017).

Anderson, P. W. More is different: broken symmetry and the nature of the hierarchical structure of science. Science 177 , 393–396 (1972).

Qiao, Z. et al. Informing geometric deep learning with electronic interactions to accelerate quantum chemistry. Proc. Natl Acad. Sci. USA 119 , e2205221119 (2022).

Bogatskiy, A. et al. Symmetry group equivariant architectures for physics. Preprint at https://arxiv.org/abs/2203.06153 (2022).

Bronstein, M. M. et al. Geometric deep learning: grids, groups, graphs, geodesics, and gauges. Preprint at https://arxiv.org/abs/2104.13478 (2021).

Townshend, R. J. L. et al. Geometric deep learning of RNA structure. Science 373 , 1047–1051 (2021).

Wicky, B. I. M. et al. Hallucinating symmetric protein assemblies. Science 378 , 56–61 (2022).

Kipf, T. N. & Welling, M. Semi-supervised classification with graph convolutional networks. In International Conference on Learning Representations (2017).

Veličković, P. et al. Graph attention networks. In International Conference on Learning Representations (2018).

Hamilton, W. L., Ying, Z. & Leskovec, J. Inductive representation learning on large graphs. In Advances in Neural Information Processing Systems 30 , 1024–1034 (2017).

Gilmer, J. et al. Neural message passing for quantum chemistry. In International Conference on Machine Learning 1263–1272 (2017).

Li, M. M., Huang, K. & Zitnik, M. Graph representation learning in biomedicine and healthcare. Nat. Biomed. Eng. 6 , 1353–1369 (2022).

Satorras, V. G., Hoogeboom, E. & Welling, M. E( n ) equivariant graph neural networks. In International Conference on Machine Learning 9323–9332 (2021). This study incorporates principles of physics into the design of neural models, advancing the field of equivariant machine learning .

Thomas, N. et al. Tensor field networks: rotation-and translation-equivariant neural networks for 3D point clouds. Preprint at https://arxiv.org/abs/1802.08219 (2018).

Finzi, M. et al. Generalizing convolutional neural networks for equivariance to lie groups on arbitrary continuous data. In International Conference on Machine Learning 3165–3176 (2020).

Fuchs, F. et al. SE(3)-transformers: 3D roto-translation equivariant attention networks. In Advances in Neural Information Processing Systems 33 , 1970-1981 (2020).

Zaheer, M. et al. Deep sets. In Advances in Neural Information Processing Systems 30 , 3391–3401 (2017). This paper is an early study that explores the use of deep neural architectures on set data, which consists of an unordered list of elements.

Cohen, T. S. et al. Spherical CNNs. In International Conference on Learning Representations (2018).

Gordon, J. et al. Permutation equivariant models for compositional generalization in language. In International Conference on Learning Representations (2019).

Finzi, M., Welling, M. & Wilson, A. G. A practical method for constructing equivariant multilayer perceptrons for arbitrary matrix groups. In International Conference on Machine Learning 3318–3328 (2021).

Dijk, D. V. et al. Recovering gene interactions from single-cell data using data diffusion. Cell 174 , 716–729 (2018).

Gainza, P. et al. Deciphering interaction fingerprints from protein molecular surfaces using geometric deep learning. Nat. Methods 17 , 184–192 (2020).

Hatfield, P. W. et al. The data-driven future of high-energy-density physics. Nature 593 , 351–361 (2021).

Bapst, V. et al. Unveiling the predictive power of static structure in glassy systems. Nat. Phys. 16 , 448–454 (2020).

Zhang, R., Zhou, T. & Ma, J. Multiscale and integrative single-cell Hi-C analysis with Higashi. Nat. Biotechnol. 40 , 254–261 (2022).

Sammut, S.-J. et al. Multi-omic machine learning predictor of breast cancer therapy response. Nature 601 , 623–629 (2022).

DeZoort, G. et al. Graph neural networks at the Large Hadron Collider. Nat. Rev. Phys . 5 , 281–303 (2023).

Liu, S. et al. Pre-training molecular graph representation with 3D geometry. In International Conference on Learning Representations (2022).

The LIGO Scientific Collaboration. et al. A gravitational-wave standard siren measurement of the Hubble constant. Nature 551 , 85–88 (2017).

Reichstein, M. et al. Deep learning and process understanding for data-driven Earth system science. Nature 566 , 195–204 (2019).

Goenka, S. D. et al. Accelerated identification of disease-causing variants with ultra-rapid nanopore genome sequencing. Nat. Biotechnol. 40 , 1035–1041 (2022).

Bengio, Y. et al. Greedy layer-wise training of deep networks. In Advances in Neural Information Processing Systems 19 , 153–160 (2006).

Hinton, G. E., Osindero, S. & Teh, Y.-W. A fast learning algorithm for deep belief nets. Neural Comput. 18 , 1527–1554 (2006).

Article MathSciNet PubMed MATH Google Scholar

Jordan, M. I. & Mitchell, T. M. Machine learning: trends, perspectives, and prospects. Science 349 , 255–260 (2015).

Devlin, J. et al. BERT: pre-training of deep bidirectional transformers for language understanding. In North American Chapter of the Association for Computational Linguistics 4171–4186 (2019).

Rives, A. et al. Biological structure and function emerge from scaling unsupervised learning to 250 million protein sequences. Proc. Natl Acad. Sci. USA 118 , e2016239118 (2021).

Elnaggar, A. et al. ProtTrans: rowards cracking the language of lifes code through self-supervised deep learning and high performance computing. In IEEE Transactions on Pattern Analysis and Machine Intelligence (2021).

Hie, B. et al. Learning the language of viral evolution and escape. Science 371 , 284–288 (2021). This paper modeled viral escape with machine learning algorithms originally developed for human natural language.

Biswas, S. et al. Low- N protein engineering with data-efficient deep learning. Nat. Methods 18 , 389–396 (2021).

Ferruz, N. & Höcker, B. Controllable protein design with language models. Nat. Mach. Intell. 4 , 521–532 (2022).

Hsu, C. et al. Learning inverse folding from millions of predicted structures. In International Conference on Machine Learning 8946–8970 (2022).

Baek, M. et al. Accurate prediction of protein structures and interactions using a three-track neural network. Science 373 , 871–876 (2021). Inspired by AlphaFold2, this study reported RoseTTAFold, a novel three-track neural module capable of simultaneously processing protein’s sequence, distance and coordinates.

Weininger, D. SMILES, a chemical language and information system. 1. Introduction to methodology and encoding rules. J. Chem. Inf. Comput. Sci. 28 , 31–36 (1988).

Lin, T.-S. et al. BigSMILES: a structurally-based line notation for describing macromolecules. ACS Cent. Sci. 5 , 1523–1531 (2019).

Krenn, M. et al. SELFIES and the future of molecular string representations. Patterns 3 , 100588 (2022).

Flam-Shepherd, D., Zhu, K. & Aspuru-Guzik, A. Language models can learn complex molecular distributions. Nat. Commun. 13 , 3293 (2022).

Skinnider, M. A. et al. Chemical language models enable navigation in sparsely populated chemical space. Nat. Mach. Intell. 3 , 759–770 (2021).

Chithrananda, S., Grand, G. & Ramsundar, B. ChemBERTa: large-scale self-supervised pretraining for molecular property prediction. In Machine Learning for Molecules Workshop at NeurIPS (2020).

Schwaller, P. et al. Predicting retrosynthetic pathways using transformer-based models and a hyper-graph exploration strategy. Chem. Sci. 11 , 3316–3325 (2020).

Tetko, I. V. et al. State-of-the-art augmented NLP transformer models for direct and single-step retrosynthesis. Nat. Commun. 11 , 5575 (2020).

Schwaller, P. et al. Mapping the space of chemical reactions using attention-based neural networks. Nat. Mach. Intell. 3 , 144–152 (2021).

Kovács, D. P., McCorkindale, W. & Lee, A. A. Quantitative interpretation explains machine learning models for chemical reaction prediction and uncovers bias. Nat. Commun. 12 , 1695 (2021).

Article ADS PubMed PubMed Central Google Scholar

Pesciullesi, G. et al. Transfer learning enables the molecular transformer to predict regio-and stereoselective reactions on carbohydrates. Nat. Commun. 11 , 4874 (2020).

Vaswani, A. et al. Attention is all you need. In Advances in Neural Information Processing Systems 30 , 5998–6008 (2017). This paper introduced the transformer, a modern neural network architecture that can process sequential data in parallel, revolutionizing natural language processing and sequence modeling.

Mousavi, S. M. et al. Earthquake transformer—an attentive deep-learning model for simultaneous earthquake detection and phase picking. Nat. Commun. 11 , 3952 (2020).

Avsec, Ž. et al. Effective gene expression prediction from sequence by integrating long-range interactions. Nat. Methods 18 , 1196–1203 (2021).

Meier, J. et al. Language models enable zero-shot prediction of the effects of mutations on protein function. In Advances in Neural Information Processing Systems 34 , 29287–29303 (2021).

Kamienny, P.-A. et al. End-to-end symbolic regression with transformers. In Advances in Neural Information Processing Systems 35 , 10269–10281 (2022).

Jaegle, A. et al. Perceiver: general perception with iterative attention. In International Conference on Machine Learning 4651–4664 (2021).

Chen, L. et al. Decision transformer: reinforcement learning via sequence modeling. In Advances in Neural Information Processing Systems 34 , 15084–15097 (2021).

Dosovitskiy, A. et al. An image is worth 16x16 words: transformers for image recognition at scale. In International Conference on Learning Representations (2020).

Choromanski, K. et al. Rethinking attention with performers. In International Conference on Learning Representations (2021).

Li, Z. et al. Fourier neural operator for parametric partial differential equations. In International Conference on Learning Representations (2021).

Kovachki, N. et al. Neural operator: learning maps between function spaces. J. Mach. Learn. Res. 24 , 1–97 (2023).

Russell, J. L. Kepler’s laws of planetary motion: 1609–1666. Br. J. Hist. Sci. 2 , 1–24 (1964).

Huang, K. et al. Artificial intelligence foundation for therapeutic science. Nat. Chem. Biol. 18 , 1033–1036 (2022).

Guimerà, R. et al. A Bayesian machine scientist to aid in the solution of challenging scientific problems. Sci. Adv. 6 , eaav6971 (2020).

Liu, G. et al. Deep learning-guided discovery of an antibiotic targeting Acinetobacter baumannii. Nat. Chem. Biol. https://doi.org/10.1038/s41589-023-01349-8 (2023).

Gómez-Bombarelli, R. et al. Design of efficient molecular organic light-emitting diodes by a high-throughput virtual screening and experimental approach. Nat. Mater. 15 , 1120–1127 (2016). This paper proposes using a black-box AI predictor to accelerate high-throughput screening of molecules in materials science.

Article ADS PubMed Google Scholar

Sadybekov, A. A. et al. Synthon-based ligand discovery in virtual libraries of over 11 billion compounds. Nature 601 , 452–459 (2022).

The NNPDF Collaboration Evidence for intrinsic charm quarks in the proton. Nature 606 , 483–487 (2022).

Graff, D. E., Shakhnovich, E. I. & Coley, C. W. Accelerating high-throughput virtual screening through molecular pool-based active learning. Chem. Sci. 12 , 7866–7881 (2021).

Janet, J. P. et al. Accurate multiobjective design in a space of millions of transition metal complexes with neural-network-driven efficient global optimization. ACS Cent. Sci. 6 , 513–524 (2020).

Bacon, F. Novum Organon Vol. 1620 (2000).

Schmidt, M. & Lipson, H. Distilling free-form natural laws from experimental data. Science 324 , 81–85 (2009).

Petersen, B. K. et al. Deep symbolic regression: recovering mathematical expressions from data via risk-seeking policy gradients. In International Conference on Learning Representations (2020).

Zhavoronkov, A. et al. Deep learning enables rapid identification of potent DDR1 kinase inhibitors. Nat. Biotechnol. 37 , 1038–1040 (2019). This paper describes a reinforcement-learning algorithm for navigating molecular combinatorial spaces, and it validates generated molecules using wet-lab experiments.

Zhou, Z. et al. Optimization of molecules via deep reinforcement learning. Sci. Rep. 9 , 10752 (2019).

You, J. et al. Graph convolutional policy network for goal-directed molecular graph generation. In Advances in Neural Information Processing Systems 31 , 6412–6422 (2018).

Bengio, Y. et al. GFlowNet foundations. Preprint at https://arxiv.org/abs/2111.09266 (2021). This paper describes a generative flow network that generates objects by sampling them from a distribution optimized for drug design.

Jain, M. et al. Biological sequence design with GFlowNets. In International Conference on Machine Learning 9786–9801 (2022).

Malkin, N. et al. Trajectory balance: improved credit assignment in GFlowNets. In Advances in Neural Information Processing Systems 35 , 5955–5967 (2022).

Borkowski, O. et al. Large scale active-learning-guided exploration for in vitro protein production optimization. Nat. Commun. 11 , 1872 (2020). This study introduced a dynamic programming approach to determine the optimal locations and capacities of hydropower dams in the Amazon Basin, balancing between energy production and environmental impact .

Flecker, A. S. et al. Reducing adverse impacts of Amazon hydropower expansion. Science 375 , 753–760 (2022). This study introduced a dynamic programming approach to determine the optimal locations and capacities of hydropower dams in the Amazon basin, achieving a balance between the benefits of energy production and the potential environmental impacts.

Pion-Tonachini, L. et al. Learning from learning machines: a new generation of AI technology to meet the needs of science. Preprint at https://arxiv.org/abs/2111.13786 (2021).

Kusner, M. J., Paige, B. & Hernández-Lobato, J. M. Grammar variational autoencoder. In International Conference on Machine Learning 1945–1954 (2017). This paper describes a grammar variational autoencoder that generates novel symbolic laws and drug molecules.

Brunton, S. L., Proctor, J. L. & Kutz, J. N. Discovering governing equations from data by sparse identification of nonlinear dynamical systems. Proc. Natl Acad. Sci. USA 113 , 3932–3937 (2016).

Liu, Z. & Tegmark, M. Machine learning hidden symmetries. Phys. Rev. Lett. 128 , 180201 (2022).

Gabbard, H. et al. Bayesian parameter estimation using conditional variational autoencoders for gravitational-wave astronomy. Nat. Phys. 18 , 112–117 (2022).

Chen, D. et al. Automating crystal-structure phase mapping by combining deep learning with constraint reasoning. Nat. Mach. Intell. 3 , 812–822 (2021).

Gómez-Bombarelli, R. et al. Automatic chemical design using a data-driven continuous representation of molecules. ACS Cent. Sci. 4 , 268–276 (2018).

Anishchenko, I. et al. De novo protein design by deep network hallucination. Nature 600 , 547–552 (2021).

Fu, T. et al. Differentiable scaffolding tree for molecular optimization. In International Conference on Learning Representations (2021).

Sanchez-Lengeling, B. & Aspuru-Guzik, A. Inverse molecular design using machine learning: generative models for matter engineering. Science 361 , 360–365 (2018).

Huang, K. et al. Therapeutics Data Commons: machine learning datasets and tasks for drug discovery and development. In NeurIPS Datasets and Benchmarks (2021). This study describes an initiative with open AI models, datasets and education programmes to facilitate advances in therapeutic science across all stages of drug discovery and development.

Dance, A. Lab hazard. Nature 458 , 664–665 (2009).

Segler, M. H. S., Preuss, M. & Waller, M. P. Planning chemical syntheses with deep neural networks and symbolic AI. Nature 555 , 604–610 (2018). This paper describes an approach that combines deep neural networks with Monte Carlo tree search to plan chemical synthesis.

Gao, W., Raghavan, P. & Coley, C. W. Autonomous platforms for data-driven organic synthesis. Nat. Commun. 13 , 1075 (2022).

Kusne, A. G. et al. On-the-fly closed-loop materials discovery via Bayesian active learning. Nat. Commun. 11 , 5966 (2020).

Gormley, A. J. & Webb, M. A. Machine learning in combinatorial polymer chemistry. Nat. Rev. Mater. 6 , 642–644 (2021).

Ament, S. et al. Autonomous materials synthesis via hierarchical active learning of nonequilibrium phase diagrams. Sci. Adv. 7 , eabg4930 (2021).

Degrave, J. et al. Magnetic control of tokamak plasmas through deep reinforcement learning. Nature 602 , 414–419 (2022). This paper describes an approach for controlling tokamak plasmas, using a reinforcement-learning agent to command the magnetic control coils while satisfying physical and operational constraints.

Melnikov, A. A. et al. Active learning machine learns to create new quantum experiments. Proc. Natl Acad. Sci. USA 115 , 1221–1226 (2018).

Smith, J. S., Isayev, O. & Roitberg, A. E. ANI-1: an extensible neural network potential with DFT accuracy at force field computational cost. Chem. Sci. 8 , 3192–3203 (2017).

Wang, D. et al. Efficient sampling of high-dimensional free energy landscapes using adaptive reinforced dynamics. Nat. Comput. Sci. 2 , 20–29 (2022). This paper describes a neural network that provides reliable uncertainty estimates in molecular dynamics, enabling efficient sampling of high-dimensional free energy landscapes.

Wang, W. & Gómez-Bombarelli, R. Coarse-graining auto-encoders for molecular dynamics. npj Comput. Mater. 5 , 125 (2019).

Hermann, J., Schätzle, Z. & Noé, F. Deep-neural-network solution of the electronic Schrödinger equation. Nat. Chem. 12 , 891–897 (2020). This paper describes a method to learn the wavefunction of quantum systems using deep neural networks in conjunction with variational quantum Monte Carlo.

Carleo, G. & Troyer, M. Solving the quantum many-body problem with artificial neural networks. Science 355 , 602–606 (2017).

Karniadakis, G. E. et al. Physics-informed machine learning. Nat. Rev. Phys. 3 , 422–440 (2021).

Li, Z. et al. Physics-informed neural operator for learning partial differential equations. Preprint at https://arxiv.org/abs/2111.03794 (2021).

Kochkov, D. et al. Machine learning–accelerated computational fluid dynamics. Proc. Natl Acad. Sci. USA 118 , e2101784118 (2021). This paper describes an approach to accelerating computational fluid dynamics by training a neural network to interpolate from coarse to fine grids and generalize to varying forcing functions and Reynolds numbers.

Ji, W. et al. Stiff-PINN: physics-informed neural network for stiff chemical kinetics. J. Phys. Chem. A 125 , 8098–8106 (2021).

Smith, J. D., Azizzadenesheli, K. & Ross, Z. E. EikoNet: solving the Eikonal equation with deep neural networks. IEEE Trans. Geosci. Remote Sens. 59 , 10685–10696 (2020).

Waheed, U. B. et al. PINNeik: Eikonal solution using physics-informed neural networks. Comput. Geosci. 155 , 104833 (2021).

Chen, R. T. Q. et al. Neural ordinary differential equations. In Advances in Neural Information Processing Systems 31 , 6572–6583 (2018). This paper established a connection between neural networks and differential equations, computing gradients with the adjoint sensitivity method instead of backpropagating through the operations of the ODE solver, so that continuous-time dynamical systems can be learned from data.

Raissi, M., Perdikaris, P. & Karniadakis, G. E. Physics-informed neural networks: a deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. J. Comput. Phys. 378 , 686–707 (2019). This paper describes a deep-learning framework for solving forward and inverse problems involving nonlinear partial differential equations and for identifying differential equations from data.

Lu, L. et al. Learning nonlinear operators via DeepONet based on the universal approximation theorem of operators. Nat. Mach. Intell. 3 , 218–229 (2021).

Brandstetter, J., Worrall, D. & Welling, M. Message passing neural PDE solvers. In International Conference on Learning Representations (2022).

Noé, F. et al. Boltzmann generators: sampling equilibrium states of many-body systems with deep learning. Science 365 , eaaw1147 (2019). This paper presents an efficient sampling algorithm using normalizing flows to simulate equilibrium states in many-body systems.

Rezende, D. & Mohamed, S. Variational inference with normalizing flows. In International Conference on Machine Learning 1530–1538 (2015).

Dinh, L., Sohl-Dickstein, J. & Bengio, S. Density estimation using real NVP. In International Conference on Learning Representations (2017).

Nicoli, K. A. et al. Estimation of thermodynamic observables in lattice field theories with deep generative models. Phys. Rev. Lett. 126 , 032001 (2021).

Kanwar, G. et al. Equivariant flow-based sampling for lattice gauge theory. Phys. Rev. Lett. 125 , 121601 (2020).

Gabrié, M., Rotskoff, G. M. & Vanden-Eijnden, E. Adaptive Monte Carlo augmented with normalizing flows. Proc. Natl Acad. Sci. USA 119 , e2109420119 (2022).

Jasra, A., Holmes, C. C. & Stephens, D. A. Markov chain Monte Carlo methods and the label switching problem in Bayesian mixture modeling. Stat. Sci. 20 , 50–67 (2005).

Bengio, Y. et al. Better mixing via deep representations. In International Conference on Machine Learning 552–560 (2013).

Pompe, E., Holmes, C. & Łatuszyński, K. A framework for adaptive MCMC targeting multimodal distributions. Ann. Stat. 48 , 2930–2952 (2020).

Townshend, R. J. L. et al. ATOM3D: tasks on molecules in three dimensions. In NeurIPS Datasets and Benchmarks (2021).

Kearnes, S. M. et al. The open reaction database. J. Am. Chem. Soc. 143 , 18820–18826 (2021).

Chanussot, L. et al. Open Catalyst 2020 (OC20) dataset and community challenges. ACS Catal. 11 , 6059–6072 (2021).

Brown, N. et al. GuacaMol: benchmarking models for de novo molecular design. J. Chem. Inf. Model. 59 , 1096–1108 (2019).

Notin, P. et al. Tranception: protein fitness prediction with autoregressive transformers and inference-time retrieval. In International Conference on Machine Learning 16990–17017 (2022).

Mitchell, M. et al. Model cards for model reporting. In Conference on Fairness, Accountability, and Transparency 220–229 (2019).

Gebru, T. et al. Datasheets for datasets. Commun. ACM 64 , 86–92 (2021).

Bai, X. et al. Advancing COVID-19 diagnosis with privacy-preserving collaboration in artificial intelligence. Nat. Mach. Intell. 3 , 1081–1089 (2021).

Warnat-Herresthal, S. et al. Swarm learning for decentralized and confidential clinical machine learning. Nature 594 , 265–270 (2021).

Hie, B., Cho, H. & Berger, B. Realizing private and practical pharmacological collaboration. Science 362 , 347–350 (2018).

Rohrbach, S. et al. Digitization and validation of a chemical synthesis literature database in the ChemPU. Science 377 , 172–180 (2022).

Gysi, D. M. et al. Network medicine framework for identifying drug-repurposing opportunities for COVID-19. Proc. Natl Acad. Sci. USA 118 , e2025581118 (2021).

King, R. D. et al. The automation of science. Science 324 , 85–89 (2009).

Mirdita, M. et al. ColabFold: making protein folding accessible to all. Nat. Methods 19 , 679–682 (2022).

Doerr, S. et al. TorchMD: a deep learning framework for molecular simulations. J. Chem. Theory Comput. 17 , 2355–2363 (2021).

Schoenholz, S. S. & Cubuk, E. D. JAX MD: a framework for differentiable physics. In Advances in Neural Information Processing Systems 33 , 11428–11441 (2020).

Peters, J., Janzing, D. & Schölkopf, B. Elements of Causal Inference: Foundations and Learning Algorithms (MIT Press, 2017).

Bengio, Y. et al. A meta-transfer objective for learning to disentangle causal mechanisms. In International Conference on Learning Representations (2020).

Schölkopf, B. et al. Toward causal representation learning. Proc. IEEE 109 , 612–634 (2021).

Goyal, A. & Bengio, Y. Inductive biases for deep learning of higher-level cognition. Proc. R. Soc. A 478 , 20210068 (2022).

Deleu, T. et al. Bayesian structure learning with generative flow networks. In Conference on Uncertainty in Artificial Intelligence 518–528 (2022).

Geirhos, R. et al. Shortcut learning in deep neural networks. Nat. Mach. Intell. 2 , 665–673 (2020).

Koh, P. W. et al. WILDS: a benchmark of in-the-wild distribution shifts. In International Conference on Machine Learning 5637–5664 (2021).

Luo, Z. et al. Label efficient learning of transferable representations across domains and tasks. In Advances in Neural Information Processing Systems 30 , 165–177 (2017).

Mahmood, R. et al. How much more data do I need? Estimating requirements for downstream tasks. In IEEE Conference on Computer Vision and Pattern Recognition 275–284 (2022).

Coley, C. W., Eyke, N. S. & Jensen, K. F. Autonomous discovery in the chemical sciences part II: outlook. Angew. Chem. Int. Ed. 59 , 23414–23436 (2020).

Gao, W. & Coley, C. W. The synthesizability of molecules proposed by generative models. J. Chem. Inf. Model. 60 , 5714–5723 (2020).

Kogler, R. et al. Jet substructure at the Large Hadron Collider. Rev. Mod. Phys. 91 , 045003 (2019).

Acosta, J. N. et al. Multimodal biomedical AI. Nat. Med. 28 , 1773–1784 (2022).

Alayrac, J.-B. et al. Flamingo: a visual language model for few-shot learning. In Advances in Neural Information Processing Systems 35 , 23716–23736 (2022).

Elmarakeby, H. A. et al. Biologically informed deep neural network for prostate cancer discovery. Nature 598 , 348–352 (2021).

Qin, Y. et al. A multi-scale map of cell structure fusing protein images and interactions. Nature 600 , 536–542 (2021).

Schaffer, L. V. & Ideker, T. Mapping the multiscale structure of biological systems. Cell Syst. 12 , 622–635 (2021).

Stiglic, G. et al. Interpretability of machine learning-based prediction models in healthcare. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 10 , e1379 (2020).

Erion, G. et al. A cost-aware framework for the development of AI models for healthcare applications. Nat. Biomed. Eng. 6 , 1384–1398 (2022).

Lundberg, S. M. et al. Explainable machine-learning predictions for the prevention of hypoxaemia during surgery. Nat. Biomed. Eng. 2 , 749–760 (2018).