CS224N | Winter2019 | Assignment 3 Solution

HW3: Dependency parsing and neural network foundations. You can find the materials here: code, handout.

1. Machine Learning & Neural Networks (8 points)

(a) Adam Optimizer

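Momentum acts like acceleration: the rolling average of gradients reflects the tendency of previous gradients, so a single noisy minibatch gradient changes the update less. High-variance updates are well known to make training oscillate, so lowering the variance helps the model converge more stably.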

Parameters whose previous gradients were small and not volatile get larger updates, because the update is divided by the square root of the rolling average of squared gradients. This helps the model handle sparse gradients (the merit of AdaGrad) and non-stationary objectives (the merit of RMSProp).
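As a rough illustration of the two points above, here is a minimal NumPy sketch of one Adam step. The function name `adam_step`, the default hyperparameters, and the omission of bias correction are assumptions made for brevity, not the handout's exact formulation.

```python
import numpy as np

def adam_step(theta, grad, m, v, lr=1e-3, beta1=0.9, beta2=0.99, eps=1e-8):
    """One simplified Adam update (bias correction omitted; an assumption of this sketch)."""
    # Momentum: rolling average of gradients, which smooths the update direction.
    m = beta1 * m + (1 - beta1) * grad
    # Rolling average of squared gradients, which tracks each parameter's gradient scale.
    v = beta2 * v + (1 - beta2) * grad ** 2
    # Dividing by sqrt(v) gives parameters with small gradients relatively larger steps.
    theta = theta - lr * m / (np.sqrt(v) + eps)
    return theta, m, v

theta = np.zeros(2)
grad = np.array([0.01, 1.0])  # one weakly-updated and one strongly-updated parameter
new_theta, m, v = adam_step(theta, grad, np.zeros(2), np.zeros(2))
step_sizes = np.abs(new_theta - theta)
```

In this toy case both parameters move by roughly the same amount even though their gradients differ by a factor of 100, which is the normalizing effect described above.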

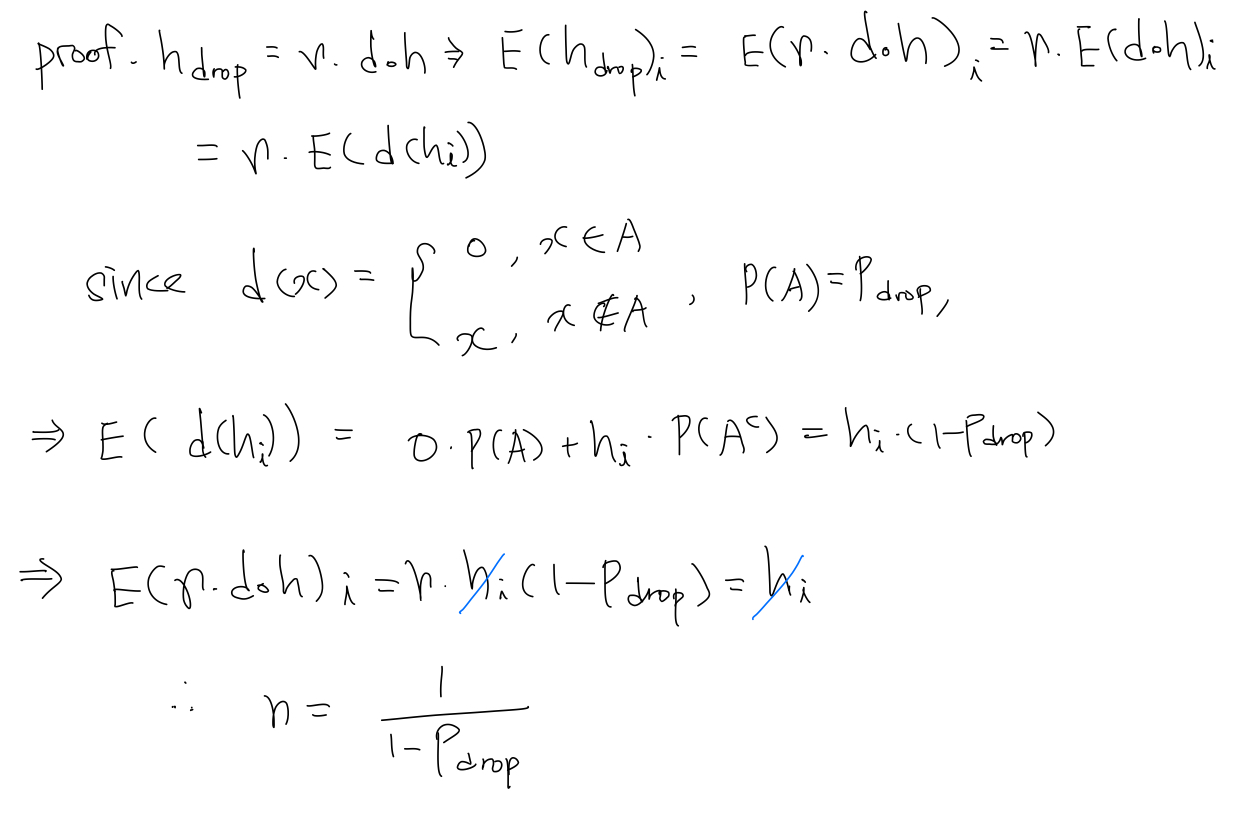

(b) Dropout

Dropout is a regularization technique that restricts overfitting. Randomly setting units to zero makes the remaining units robust to the abrupt absence of other units, so the network cannot rely on any single unit.

Dropout is applied during training, where this noise improves the model. At evaluation time we want to measure the full trained network; if we also applied dropout there, predictions would be made by a randomly thinned network, so the model would not do as well on the test set.
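The train/evaluation distinction can be sketched with inverted dropout in NumPy. The function name `dropout` and its arguments are hypothetical and chosen for illustration; this is not the assignment's starter code.

```python
import numpy as np

def dropout(h, p_drop, train, rng):
    """Inverted dropout: zero units with probability p_drop, during training only."""
    if not train:
        # Evaluation: use the full network, no randomness.
        return h
    mask = rng.random(h.shape) >= p_drop
    # Rescale the kept units so the expected activation matches evaluation time.
    return h * mask / (1.0 - p_drop)

rng = np.random.default_rng(0)
h = np.ones(10_000)
h_train = dropout(h, 0.5, True, rng)
h_eval = dropout(h, 0.5, False, rng)
```

With `p_drop = 0.5`, each training activation is either 0 or 2, so the mean stays near 1, while the evaluation output is returned unchanged.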

2. Neural Transition-Based Dependency Parsing (42 points)

The full scripts are here.

- Error type : Verb Phrase Attachment Error

- Incorrect dependency : wedding → fearing

- Correct dependency : heading → fearing

- Error type : Coordination Attachment Error

- Incorrect dependency : rescue → and

- Correct dependency : rescue → rush

- Error type : Prepositional Phrase Attachment Error

- Incorrect dependency : named → Midland

- Correct dependency : guy → Midland

- Error type : Modifier Attachment Error

- Incorrect dependency : element → most

- Correct dependency : crucial → most

Assignment 5

Handout: CS 224N: Assignment 5: Self-Attention, Transformers, and Pretraining

1. Attention Exploration

(a). Copying in attention

\(\alpha\) is the softmax of the scores \(k_i^T q\), so it behaves like a categorical probability distribution.

To make \(\alpha_j \gg \sum_{i \neq j} \alpha_i\) for some \(j\), we need \(k_j^T q \gg k_i^T q, \forall i\neq j\).

Under the conditions given in ii., \(c\approx v_j\).

Intuitively, the larger \(\alpha_i\) is, the more attention is given to \(v_i\) in the output \(c\).
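A small numeric check of this copying behaviour, as a sketch only: the helper `attention`, the orthonormal keys, and the scale factor 20 are assumptions chosen for illustration.

```python
import numpy as np

def attention(q, K, V):
    """Single-query dot-product attention; returns the weights and the output."""
    scores = K @ q
    scores = scores - scores.max()  # subtract the max for numerical stability
    alpha = np.exp(scores) / np.exp(scores).sum()
    return alpha, alpha @ V

K = np.eye(3)                                       # three orthonormal keys (assumed)
V = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])  # arbitrary values
q = 20.0 * K[1]                                     # k_1^T q is much larger than the other scores
alpha, c = attention(q, K, V)
```

Here almost all of the weight lands on index 1, so `c` is numerically indistinguishable from `V[1]`.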

(b). An average of two

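Assuming the basis vectors \(a_1, \dots, a_m\) are orthonormal and orthogonal to the subspace containing \(v_b\), let $$ N=\left[\begin{array}{c} a_{1}^{\top} \\ \vdots \\ a_{m}^{\top} \end{array}\right], \quad M = N^{\top} N $$ then, \(Ms = N^{\top}N(v_a+v_b)=v_a\), since \(N v_b = 0\) and \(N^{\top} N v_a = \sum_i a_i (a_i^{\top} v_a) = v_a\).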
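The following sketch checks numerically that a projection built from the basis vectors recovers \(v_a\) from \(s = v_a + v_b\). It assumes orthonormal bases for two mutually orthogonal subspaces; the dimensions and the random construction via QR are illustrative choices, and the projection is written as `A @ A.T` with the basis vectors as columns of `A`.

```python
import numpy as np

rng = np.random.default_rng(0)
d, m, p = 6, 2, 3

# Columns of Q form an orthonormal basis of R^d; split them into two orthogonal subspaces.
Q, _ = np.linalg.qr(rng.normal(size=(d, d)))
A, B = Q[:, :m], Q[:, m:m + p]

v_a = A @ rng.normal(size=m)  # some vector in span(A)
v_b = B @ rng.normal(size=p)  # some vector in span(B)

M = A @ A.T                   # orthogonal projection onto span(A)
recovered = M @ (v_a + v_b)
```

Note that stacking the \(a_i^\top\) as rows alone would return the coordinates of \(v_a\) in the basis; multiplying back by the basis vectors gives \(v_a\) itself.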

(c). Drawbacks of single-headed attention

Since the variance is small, we can treat \(k_i\) as concentrated around \(\mu_i\), so $$ q=\beta(\mu_a+\mu_b), \beta\gg 0 $$

As the figure shows, \(k_a\) might have a larger or smaller magnitude than \(\mu_a\). To make an estimate, we can assume \(k_a\approx \gamma \mu_a, \gamma \sim N(1, \frac{1}{2})\) , so $$ c \approx \frac{\exp (\gamma \beta)}{\exp (\gamma \beta)+\exp (\beta)} v_{a}+\frac{\exp (\beta)}{\exp (\gamma \beta)+\exp (\beta)} v_{b} =\frac{1}{\exp ((1-\gamma) \beta)+1} v_{a}+\frac{1}{\exp ((\gamma-1) \beta)+1} v_{b} $$ i.e. \(c\) oscillates between \(v_a\) and \(v_b\) as \(\{k_1, k_2, ..., k_n\}\) is sampled multiple times.
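To see how sharply the weights flip, the closed-form expression above can be evaluated for a few sampled values of \(\gamma\). This is a sketch only: the function name `single_head_weights` and the choice \(\beta = 20\) are assumptions for illustration.

```python
import numpy as np

def single_head_weights(gamma, beta):
    """Weights on v_a and v_b from the closed form, for k_a ~ gamma * mu_a."""
    w_a = 1.0 / (1.0 + np.exp((1.0 - gamma) * beta))
    return w_a, 1.0 - w_a

rng = np.random.default_rng(0)
beta = 20.0
# gamma ~ N(1, 1/2): the second parameter is a variance, so the std is sqrt(1/2).
gammas = rng.normal(loc=1.0, scale=np.sqrt(0.5), size=5)
weights_on_v_a = [single_head_weights(g, beta)[0] for g in gammas]
```

For large \(\beta\), even a modest deviation of \(\gamma\) from 1 pushes the weight on \(v_a\) close to 0 or 1, which is the oscillation described above.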

(d). Benefits of multi-headed attention

- \(q_1=q_2=\beta(\mu_a+\mu_b), \beta \gg 0\)

- \(q_1 = \mu_a, q_2 = \mu_b\) , or vice versa.

For the two designs in i.:

1. Averaging over different samples brings \(k_a\) closer to its expectation \(\mu_a\), so \(c\) is closer to \(\frac{1}{2}(v_a+v_b)\).

2. \(c\) is always approximately \(\frac{1}{2}(v_a+v_b)\), and exactly so when \(\alpha=0\).
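The contrast between a single head and two specialised heads can be sketched numerically. Everything here is an illustrative assumption: the orthonormal key means, the values in `V`, the model \(k_a = \gamma\mu_a\), and in particular the scaling of each head's query by \(\beta\), which this sketch adds so that each head's softmax is sharp.

```python
import numpy as np

def softmax(x):
    e = np.exp(x - x.max())
    return e / e.sum()

def attend(q, K, V):
    """Single-query dot-product attention output."""
    return softmax(K @ q) @ V

mu = np.eye(4)                    # orthonormal key means (assumed)
V = np.arange(8.0).reshape(4, 2)  # arbitrary values
a, b, beta = 0, 1, 20.0
target = 0.5 * (V[a] + V[b])

errors = {}
for gamma in (0.6, 1.0, 1.4):
    K = mu.copy()
    K[a] = gamma * mu[a]          # k_a varies in magnitude; other keys sit at their means
    single = attend(beta * (mu[a] + mu[b]), K, V)
    multi = 0.5 * (attend(beta * mu[a], K, V) + attend(beta * mu[b], K, V))
    errors[gamma] = (np.abs(single - target).max(), np.abs(multi - target).max())
```

In this toy setup the single head drifts towards \(v_a\) or \(v_b\) whenever \(\gamma \neq 1\), while the average of the two specialised heads stays close to \(\frac{1}{2}(v_a+v_b)\).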

2. Pretrained Transformer models and knowledge access

Implementation: Assignment 5 Code

(g). Synthesizer variant

The single synthesizer can't capture the relative importance between dimensions.

The Unique Burial of a Child of Early Scythian Time at the Cemetery of Saryg-Bulun (Tuva)

Pages: 379-406

In 1988, the Tuvan Archaeological Expedition (led by M. E. Kilunovskaya and V. A. Semenov) discovered a unique burial of the early Iron Age at Saryg-Bulun in Central Tuva. There are two burial mounds of the Aldy-Bel culture dated to the 7th century BC. Within the barrows, which adjoined one another, forming a figure-of-eight, 7 burials were discovered, from which a representative collection of artifacts was recovered. Burial 5 was the most remarkable: it was found in a coffin made of a larch trunk, with a tightly closed lid. Due to the preservative properties of larch and the lack of air access, the coffin contained a well-preserved mummy of a child with an accompanying set of grave goods. The interred individual retained the skin on the face and had a leather headdress painted with red pigment and a coat sewn from jerboa fur. The coat was fastened with a leather belt with bronze ornaments and buckles. In addition, a leather quiver with arrows whose shafts were decorated with painted ornaments, a fully preserved battle pick and a bow were buried in the coffin. Unexpectedly, full-genome analysis showed that the individual was female. This fact opens a new aspect in the study of the social history of Scythian society and perhaps brings us back to the myth of the Amazons, discussed by Herodotus. This discovery is unique in its preservation for the Scythian culture of Tuva and requires careful study and conservation.

Keywords: Tuva, Early Iron Age, early Scythian period, Aldy-Bel culture, barrow, burial in the coffin, mummy, full genome sequencing, aDNA

Information about authors:

- Marina Kilunovskaya (Saint Petersburg, Russian Federation). Candidate of Historical Sciences. Institute for the History of Material Culture of the Russian Academy of Sciences. Dvortsovaya Emb., 18, Saint Petersburg, 191186, Russian Federation

- Vladimir Semenov (Saint Petersburg, Russian Federation). Candidate of Historical Sciences. Institute for the History of Material Culture of the Russian Academy of Sciences. Dvortsovaya Emb., 18, Saint Petersburg, 191186, Russian Federation

- Varvara Busova (Moscow, Russian Federation). (Saint Petersburg, Russian Federation). Institute for the History of Material Culture of the Russian Academy of Sciences. Dvortsovaya Emb., 18, Saint Petersburg, 191186, Russian Federation

- Kharis Mustafin (Moscow, Russian Federation). Candidate of Technical Sciences. Moscow Institute of Physics and Technology. Institutsky Lane, 9, Dolgoprudny, 141701, Moscow Oblast, Russian Federation

- Irina Alborova (Moscow, Russian Federation). Candidate of Biological Sciences. Moscow Institute of Physics and Technology. Institutsky Lane, 9, Dolgoprudny, 141701, Moscow Oblast, Russian Federation

- Alina Matzvai (Moscow, Russian Federation). Moscow Institute of Physics and Technology. Institutsky Lane, 9, Dolgoprudny, 141701, Moscow Oblast, Russian Federation

Copyright © 1999-2022. Stratum Publishing House

Rusmania • Deep into Russia

Savvino-Storozhevsky Monastery and Museum

Zvenigorod's most famous sight is the Savvino-Storozhevsky Monastery, which was founded in 1398 by the monk Savva from the Troitse-Sergieva Lavra, at the invitation and with the support of Prince Yury Dmitrievich of Zvenigorod. Savva was later canonised as St Sabbas (Savva) of Storozhev. The monastery later flourished under the reign of Tsar Alexis, who chose the monastery as his family church, often went on pilgrimage there and made many donations to it. Most of the monastery’s buildings date from this time. The monastery is heavily fortified with thick walls and six towers, the most impressive of which is the Krasny Tower, which also serves as the eastern entrance. The monastery was closed in 1918 and only reopened in 1995. In 1998 Patriarch Alexius II took part in a service to return the relics of St Sabbas to the monastery. Today the monastery has the status of a stauropegic monastery, which is second in status to a lavra. In addition to being a working monastery, it also holds the Zvenigorod Historical, Architectural and Art Museum.

Belfry and Neighbouring Churches

Located near the main entrance is the monastery's belfry, which is perhaps the calling card of the monastery due to its uniqueness. It was built in the 1650s and the St Sergius of Radonezh’s Church was opened on the middle tier in the mid-17th century, although it was originally dedicated to the Trinity. The belfry's 35-tonne Great Blagovestny Bell fell in 1941 and was only restored and returned in 2003. Attached to the belfry is a large refectory and the Transfiguration Church, both of which were built on the orders of Tsar Alexis in the 1650s.

To the left of the belfry is another, smaller, refectory which is attached to the Trinity Gate-Church, which was also constructed in the 1650s on the orders of Tsar Alexis who made it his own family church. The church is elaborately decorated with colourful trims and underneath the archway is a beautiful 19th century fresco.

Nativity of Virgin Mary Cathedral

The Nativity of Virgin Mary Cathedral is the oldest building in the monastery and among the oldest buildings in the Moscow Region. It was built between 1404 and 1405 during the lifetime of St Sabbas and using the funds of Prince Yury of Zvenigorod. The white-stone cathedral is a standard four-pillar design with a single golden dome. After the death of St Sabbas he was interred in the cathedral and a new altar dedicated to him was added.

Under the reign of Tsar Alexis the cathedral was decorated with frescoes by Stepan Ryazanets, some of which remain today. Tsar Alexis also presented the cathedral with a five-tier iconostasis, of which the top row of icons has been preserved.

Tsaritsa's Chambers

The Nativity of Virgin Mary Cathedral is located between the Tsaritsa's Chambers on the left and the Palace of Tsar Alexis on the right. The Tsaritsa's Chambers were built in the mid-17th century for the wife of Tsar Alexis, Tsaritsa Maria Ilinichna Miloslavskaya. The design of the building is influenced by the ancient Russian architectural style. It is prettier than the Tsar's chambers opposite, being red in colour with elaborately decorated window frames and entrance.

At present the Tsaritsa's Chambers houses the Zvenigorod Historical, Architectural and Art Museum. Among its displays is an accurate recreation of the interior of a noble lady's chambers including furniture, decorations and a decorated tiled oven, and an exhibition on the history of Zvenigorod and the monastery.

Palace of Tsar Alexis

The Palace of Tsar Alexis was built in the 1650s and is now one of the best surviving examples of non-religious architecture of that era. It was built especially for Tsar Alexis who often visited the monastery on religious pilgrimages. Its most striking feature is its pretty row of nine chimney spouts which resemble towers.

Gagarin Cup Preview: Atlant vs. Salavat Yulaev

Gagarin Cup (KHL) Finals: Atlant Moscow Oblast vs. Salavat Yulaev Ufa

Much like the Elitserien Finals, we have a bit of an offense vs. defense match-up in this league Final. While Ufa let their star top line of Alexander Radulov, Patrick Thoresen and Igor Grigorenko loose on the KHL's Western Conference, Mytischi played a more conservative style, relying on veterans such as former NHLers Jan Bulis, Oleg Petrov, and Jaroslav Obsut. Just reaching the Finals is a testament to Atlant's disciplined style of play, as they had to knock off much more high profile teams from Yaroslavl and St. Petersburg to do so. But while they did finish 8th in the league in points, they haven't seen the likes of Ufa, who finished 2nd.

This series will be a challenge for the underdog, because unlike some of the other KHL teams, Ufa's top players are generally younger and in their prime. Only Proshkin amongst regular blueliners is over 30, with the work being shared by Kirill Koltsov (28), Andrei Kuteikin (26), Miroslav Blatak (28), Maxim Kondratiev (28) and Dmitri Kalinin (30). Oleg Tverdovsky hasn't played a lot in the playoffs to date. Up front, while led by a fairly young top line (24-27), Ufa does have a lot of veterans in support roles: Vyacheslav Kozlov , Viktor Kozlov , Vladimir Antipov, Sergei Zinovyev and Petr Schastlivy are all over 30. In fact, the names of all their forwards are familiar to international and NHL fans: Robert Nilsson , Alexander Svitov, Oleg Saprykin and Jakub Klepis round out the group, all former NHL players.

For Atlant, with their veteran roster (only one of their top six D is under the age of 30, and no top forwards are under 30 either), this might be their one shot at a championship. The team has never won either a Russian Superleague title or the Gagarin Cup, and for players like former NHLer Oleg Petrov, this is probably the last shot at the KHL's top prize. The team got three extra days of rest by winning their Conference Final in six games, and they probably needed them. Atlant does have younger regulars on their roster, but they generally only play a few shifts per game, if that.

The low event style of game for Atlant probably suits them well, but I don't know how they can manage to keep up against Ufa's speed, skill, and depth. There is no advantage to be seen in goal, with Erik Ersberg and Konstantin Barulin posting almost identical numbers, and even in terms of recent playoff experience Ufa has them beat. Luckily for Atlant, Ufa isn't that far away from the Moscow region, so travel shouldn't play a major role.

I'm predicting that Ufa, winners of the last Superleague title back in 2008, will become the second team to win the Gagarin Cup, and will prevail in five games. They have a seriously well built team that would honestly compete in the NHL. They represent the potential of the league, while Atlant represents closer to the reality, as a team full of players who played themselves out of the NHL.

- Atlant @ Ufa, Friday Apr 8 (3:00 PM CET/10:00 AM EST)

- Atlant @ Ufa, Sunday Apr 10 (1:00 PM CET/8:00 AM EST)

- Ufa @ Atlant, Tuesday Apr 12 (5:30 PM CET/12:30 PM EST)

- Ufa @ Atlant, Thursday Apr 14 (5:30 PM CET/12:30 PM EST)

Games 5-7 are as yet unscheduled, but every second day is the KHL standard, so expect Game 5 to be on Saturday, likely with an early start.
