fastapi-queue 0.1.1

pip install fastapi-queue

Released: Apr 23, 2022

A task queue based on redis that can serve as a peak shaver and protect your app.


License: MIT License

Author: WEN

Tags: fastapi-queue, fastapi, queue

Requires: Python >=3.7

Classifiers

- OSI Approved :: MIT License

- Microsoft :: Windows

- POSIX :: Linux

- Python :: 3

- Python :: 3.7

- Python :: 3.8

- Python :: 3.9

- Python :: 3.10

Project description

Fastapi-queue.

A python implementation of a task queue based on Redis that can serve as a peak shaver and protect your app.

What is fastapi-queue?

Fastapi-queue provides a high-performance, Redis-based task queue that lets requests sent by clients to the FastAPI server be held in the queue for delayed execution. When a burst of requests hits your app within a very short period, you don't have to worry about overwhelming your back-end data service, or about requests being rejected immediately for exceeding the load limit.
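The queue-then-process idea can be sketched with nothing but asyncio. This is only an in-process illustration of peak shaving, not fastapi-queue's API: the names `handle`, `worker`, and `serve_burst` are hypothetical, and the real package uses Redis between separate gateway and service nodes.

```python
import asyncio


async def handle(request_id: int) -> str:
    """Stand-in for a call to a slow back-end service (hypothetical)."""
    await asyncio.sleep(0.01)
    return f"done:{request_id}"


async def worker(queue: asyncio.Queue) -> None:
    """Drain the queue one item at a time, resolving each caller's future."""
    while True:
        request_id, future = await queue.get()
        try:
            future.set_result(await handle(request_id))
        except Exception as exc:
            future.set_exception(exc)
        finally:
            queue.task_done()


async def serve_burst(n_requests: int, n_workers: int = 2) -> list:
    """Accept a burst of requests at once but process only n_workers at a time."""
    queue: asyncio.Queue = asyncio.Queue()
    loop = asyncio.get_running_loop()
    workers = [asyncio.create_task(worker(queue)) for _ in range(n_workers)]

    futures = []
    for request_id in range(n_requests):
        future = loop.create_future()
        queue.put_nowait((request_id, future))  # accepted immediately, run later
        futures.append(future)

    results = await asyncio.gather(*futures)

    # Shut the workers down once every queued request has been answered.
    for task in workers:
        task.cancel()
    await asyncio.gather(*workers, return_exceptions=True)
    return results
```

Calling `asyncio.run(serve_burst(100))` accepts all one hundred requests up front, while the back end never sees more than two at once.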

Why fastapi-queue?

This module is for people who want a task queue without adding many dependencies and the maintenance cost that comes with them. For example, if you want the benefits of a queue but want to keep your application lightweight and don't want to install RabbitMQ, fastapi-queue is a good fit: it relies only on the Python runtime and a Redis environment.

- Separate gateway and service nodes.

- Superior horizontal scalability.

- Fully asynchronous framework, ultra fast.

Requirements

- aioredis >= 2.0.0

- ThreadPoolExecutorPlus >= 0.2.2

- msgpack >= 1.0.0

Documentation

https://fastapi-queue.readthedocs.io (in progress)

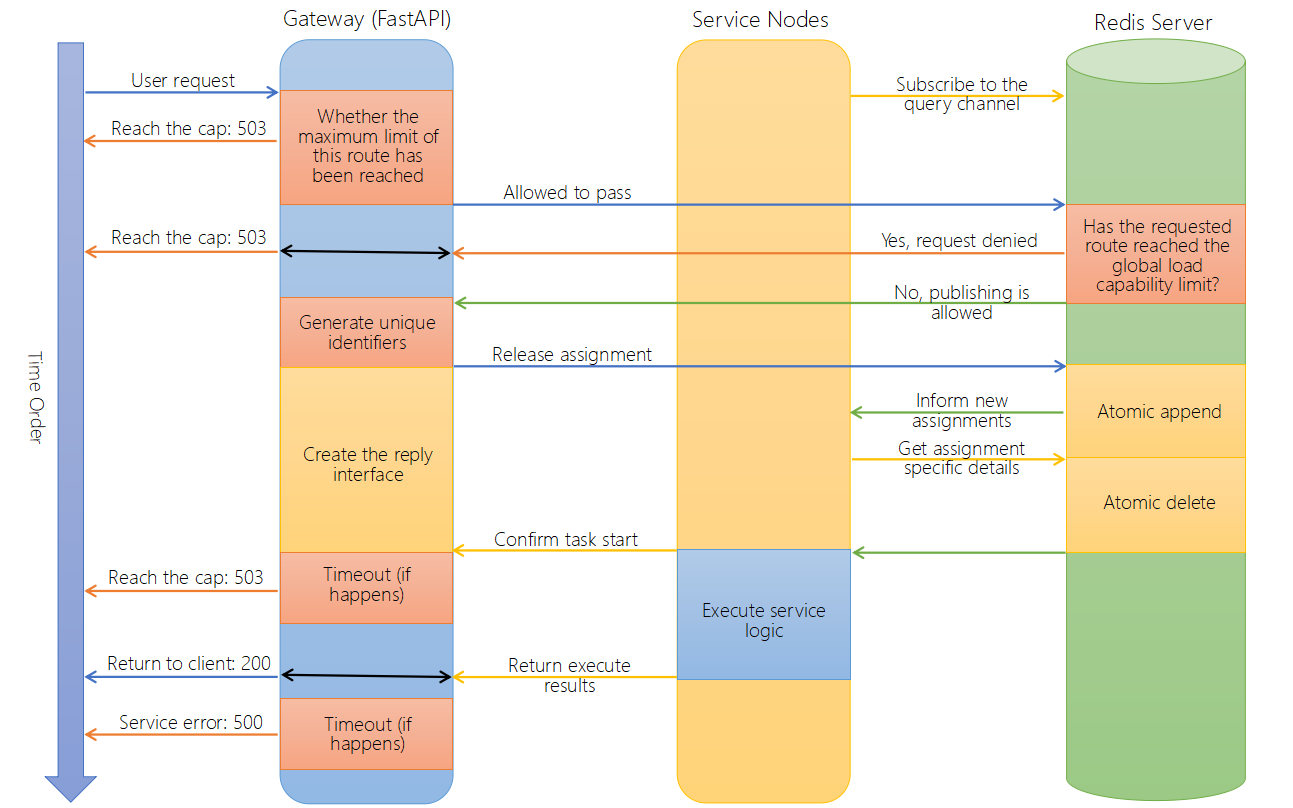

Response sequence description

Service nodes

Performance

Due to the fully asynchronous support, complex interprocedural calls exhibit very low processing latency.

(Latency vs. number of request threads: in progress)

(Maximum requests per second vs. number of service nodes: in progress)

- The code has been carefully debugged and functions reliably, but I haven't spent much time making it a generic module. If you encounter bugs you will need to modify the code yourself; they are usually caused by an overlooked detail somewhere.

- The service has undergone rigorous stress tests and can work for hours under concurrent requests from hundreds of clients, but for reliable protection you need to set the upper limit of your load carefully. RateLimiter can provide a low-overhead, coarse pre-interception function for this.

For example,
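a coarse pre-intercepting limiter could look roughly like the sketch below. It is not fastapi-queue's RateLimiter: the class name `SlidingWindowLimiter`, its parameters, and the injectable `clock` are all assumptions made for illustration.

```python
import time
from collections import deque


class SlidingWindowLimiter:
    """Allow at most `max_calls` within any `period` seconds (hypothetical helper)."""

    def __init__(self, max_calls: int, period: float, clock=time.monotonic):
        self.max_calls = max_calls
        self.period = period
        self.clock = clock
        self._stamps = deque()

    def allow(self) -> bool:
        now = self.clock()
        # Drop timestamps that have fallen out of the window.
        while self._stamps and now - self._stamps[0] >= self.period:
            self._stamps.popleft()
        if len(self._stamps) >= self.max_calls:
            return False  # reject cheaply, before the request reaches the queue
        self._stamps.append(now)
        return True
```

A gateway would call `allow()` first and only push the request into the queue when it returns `True`.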


Asynchronous Tasks with Flask and Redis Queue


If a long-running task is part of your application's workflow you should handle it in the background, outside the normal flow.

Perhaps your web application requires users to submit a thumbnail (which will probably need to be re-sized) and confirm their email when they register. If your application processed the image and sent a confirmation email directly in the request handler, then the end user would have to wait for them both to finish. Instead, you'll want to pass these tasks off to a task queue and let a separate worker process deal with it, so you can immediately send a response back to the client. The end user can do other things on the client-side and your application is free to respond to requests from other users.

This tutorial looks at how to configure Redis Queue (RQ) to handle long-running tasks in a Flask app.

Celery is a viable solution as well. Check out Asynchronous Tasks with Flask and Celery for more.


By the end of this tutorial, you will be able to:

- Integrate Redis Queue into a Flask app and create tasks.

- Containerize Flask and Redis with Docker.

- Run long-running tasks in the background with a separate worker process.

- Set up RQ Dashboard to monitor queues, jobs, and workers.

- Scale the worker count with Docker.

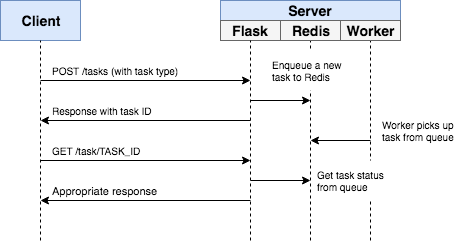

Our goal is to develop a Flask application that works in conjunction with Redis Queue to handle long-running processes outside the normal request/response cycle.

- The end user kicks off a new task via a POST request to the server-side

- Within the view, a task is added to the queue and the task id is sent back to the client-side

- Using AJAX, the client continues to poll the server to check the status of the task while the task itself is running in the background

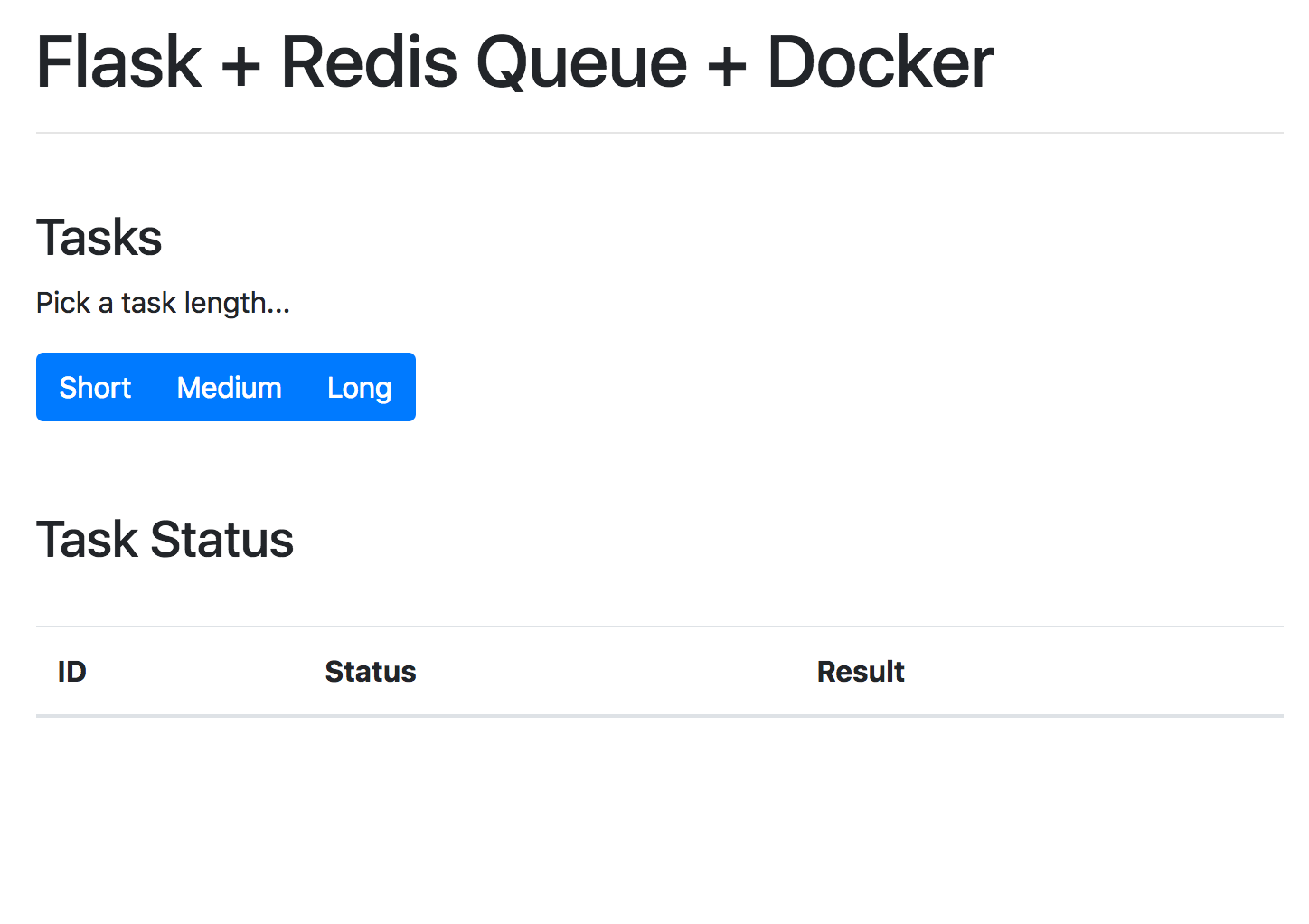

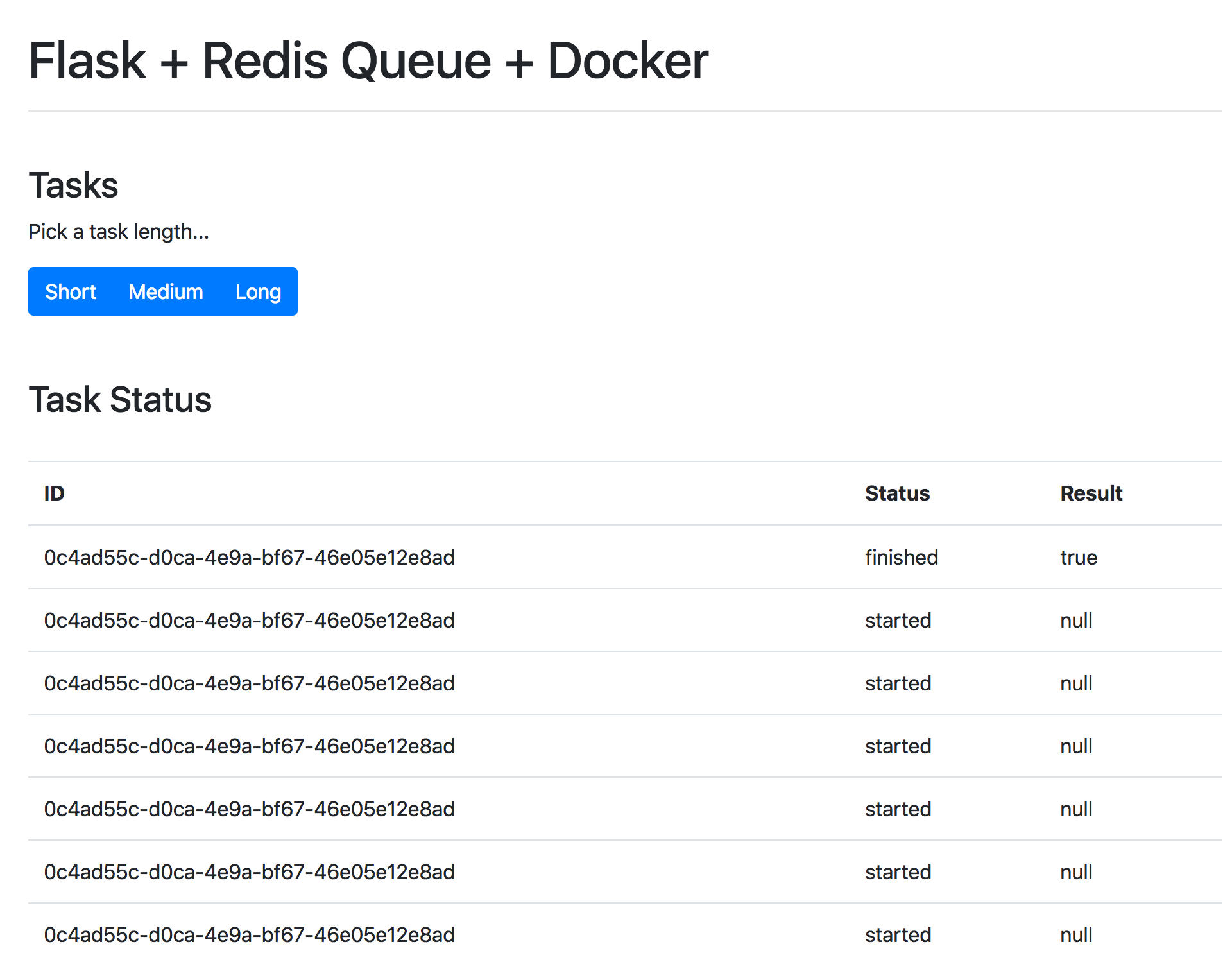

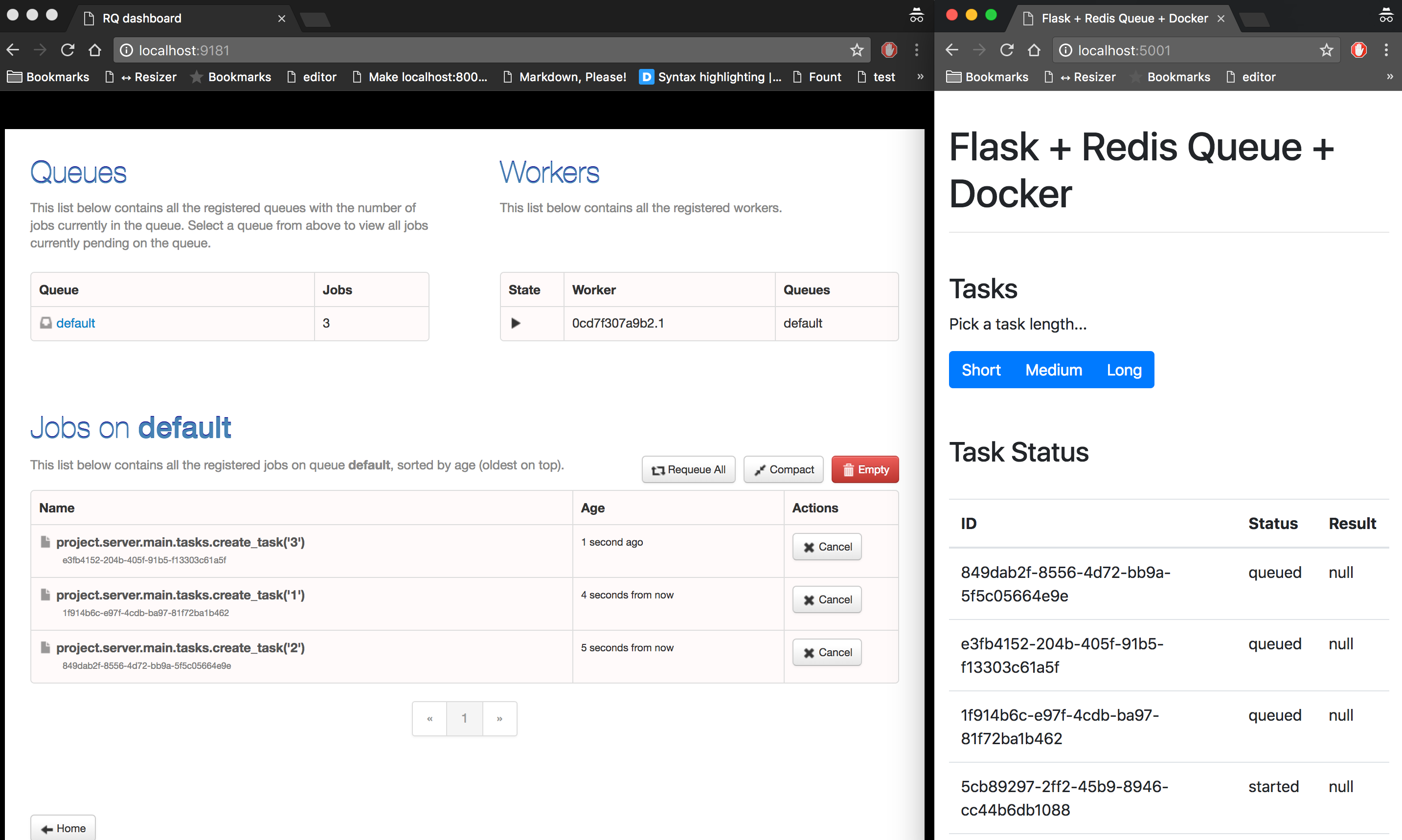

In the end, the app will look like this:

Want to follow along? Clone down the base project, and then review the code and project structure:

Since we'll need to manage three processes in total (Flask, Redis, worker), we'll use Docker to simplify our workflow so they can be managed from a single terminal window.

To test, run:

Open your browser to http://localhost:5004 . You should see:

An event handler in project/client/static/main.js is set up that listens for a button click and sends an AJAX POST request to the server with the appropriate task type: 1 , 2 , or 3 .

On the server-side, a view is already configured to handle the request in project/server/main/views.py :

We just need to wire up Redis Queue.

So, we need to spin up two new processes: Redis and a worker. Add them to the docker-compose.yml file:

Add the task to a new file called tasks.py in "project/server/main":
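A minimal sketch of such a task, assuming it only needs to simulate slow work whose duration depends on the task type (1, 2, or 3). The `seconds_per_unit` parameter is an addition for illustration so the function can be exercised quickly.

```python
import time


def create_task(task_type, seconds_per_unit=10):
    """Simulate a long-running job whose duration grows with the task type."""
    time.sleep(int(task_type) * seconds_per_unit)
    return True
```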

Update the view to connect to Redis, enqueue the task, and respond with the id:

Don't forget the imports:

Update BaseConfig :

Did you notice that we referenced the redis service (from docker-compose.yml ) in the REDIS_URL rather than localhost or some other IP? Review the Docker Compose docs for more info on connecting to other services via the hostname.

Finally, we can use a Redis Queue worker , to process tasks at the top of the queue.

manage.py :

Here, we set up a custom CLI command to fire the worker.

It's important to note that the @cli.command() decorator will provide access to the application context along with the associated config variables from project/server/config.py when the command is executed.

Add the imports as well:

Add the dependencies to the requirements file:

Build and spin up the new containers:

To trigger a new task, run:

You should see something like:

Turn back to the event handler on the client-side:

Once the response comes back from the original AJAX request, we then continue to call getStatus() with the task id every second. If the response is successful, a new row is added to the table on the DOM.
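The polling logic described here runs in the browser, but the same loop can be sketched in Python for illustration. The function name `poll_status`, the injected `fetch_status` callable, and the attempt limit are assumptions; `"finished"` and `"failed"` are the terminal status strings RQ reports for a job.

```python
import time


def poll_status(fetch_status, task_id, interval=1.0, max_attempts=60, sleep=time.sleep):
    """Call fetch_status(task_id) until the task finishes or fails, or attempts run out."""
    for _ in range(max_attempts):
        status = fetch_status(task_id)
        if status in ("finished", "failed"):
            return status
        sleep(interval)  # wait before asking the server again
    return "timeout"
```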

Update the view:
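As a rough sketch of the response-building part of that view: once a job has been fetched by id, its state can be read through `get_id()`, `get_status()`, and `result`, which RQ job objects expose. The helper name and the exact response shape below are assumptions, and the Flask routing and Redis connection are left out.

```python
def build_status_response(task):
    """Turn a fetched job (or None when the id is unknown) into a JSON-ready dict."""
    if task is None:
        return {"status": "error"}
    return {
        "status": "success",
        "data": {
            "task_id": task.get_id(),
            "task_status": task.get_status(),
            "task_result": task.result,
        },
    }
```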

Add a new task to the queue:

Then, grab the task_id from the response and call the updated endpoint to view the status:

Test it out in the browser as well:

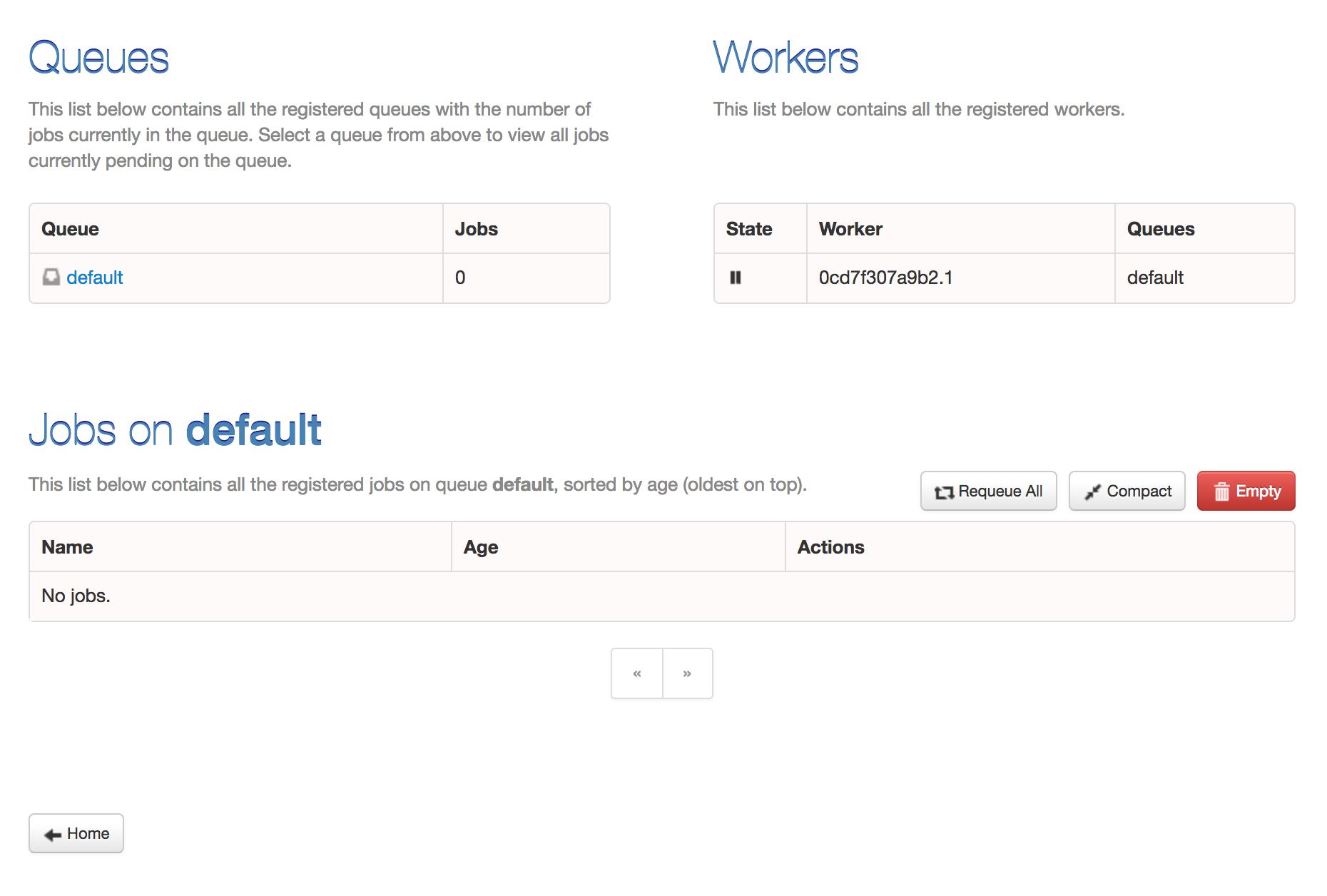

RQ Dashboard is a lightweight, web-based monitoring system for Redis Queue.

To set up, first add a new directory to the "project" directory called "dashboard". Then, add a new Dockerfile to that newly created directory:

Simply add the service to the docker-compose.yml file like so:

Build the image and spin up the container:

Navigate to http://localhost:9181 to view the dashboard:

Kick off a few jobs to fully test the dashboard:

Try adding a few more workers to see how that affects things:

This has been a basic guide on how to configure Redis Queue to run long-running tasks in a Flask app. You should let the queue handle any processes that could block or slow down the user-facing code.

Looking for some challenges?

- Deploy this application across a number of DigitalOcean droplets using Kubernetes or Docker Swarm.

- Write unit tests for the new endpoints. (Mock out the Redis instance with fakeredis )

- Instead of polling the server, try using Flask-SocketIO to open up a websocket connection.

Grab the code from the repo .


RQ ( Redis Queue ) is a simple Python library for queueing jobs and processing them in the background with workers. It is backed by Redis and it is designed to have a low barrier to entry. It can be integrated in your web stack easily.

RQ requires Redis >= 3.0.0.

Getting started

First, run a Redis server. You can use an existing one. To put jobs on queues, you don’t have to do anything special, just define your typically lengthy or blocking function:
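For instance, a blocking function might fetch a page and count its words. The sketch below uses only the standard library; the helper names are illustrative, and any plain importable function would do.

```python
from urllib.request import urlopen


def count_words(text):
    """Count whitespace-separated words in a string."""
    return len(text.split())


def count_words_at_url(url):
    """Download a page (blocking) and count the words in its body."""
    with urlopen(url) as resp:
        body = resp.read().decode("utf-8", errors="replace")
    return count_words(body)
```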

Then, create a RQ queue:

And enqueue the function call:

Scheduling jobs is similarly easy:

You can also ask RQ to retry failed jobs:

To start executing enqueued function calls in the background, start a worker from your project’s directory:

That’s about it.

Installation

Simply use the following command to install the latest released version:

If you want the cutting edge version (that may well be broken), use this:

Project history

This project has been inspired by the good parts of Celery , Resque and this snippet , and has been created as a lightweight alternative to existing queueing frameworks, with a low barrier to entry.

How to Run Your First Task with RQ, Redis, and Python


As a developer, it can be very useful to learn how to run functions in the background while being able to monitor the queue in another tab or different system. This is incredibly helpful when managing heavy workloads that might not work efficiently when called all at once, or when making large numbers of calls to a database that returns data slowly over time rather than all at once.

In this tutorial we will implement a RQ queue in Python with the help of Redis to schedule and execute tasks in a timely manner.

Tutorial Requirements

- Python 3.6 or newer. If your operating system does not provide a Python interpreter, you can go to python.org to download an installer.

Let’s talk about task queues

Task queues are a great way to allow tasks to work asynchronously outside of the main application flow. There are many task queues in Python to assist you in your project, however, we’ll be discussing a solution today known as RQ.

RQ, also known as Redis Queue , is a Python library that allows developers to enqueue jobs to be processed in the background with workers . The RQ workers pick up jobs from the queue and execute them in the background. Because it needs only a connection to Redis , the library is lightweight and approachable for those getting started for the first time.

By using this particular task queue, it is possible to process jobs in the background with little to no hassle.

Set up the environment

Create a project directory in your terminal called “rq-test” to follow along.

Install a virtual environment and copy and paste the commands to install rq and related packages. If you are using a Unix or MacOS system, enter the following commands:

If you are on a Windows machine, enter the following commands in a prompt window:

RQ requires a Redis installation on your machine, which can be set up using wget with the following commands. Redis is on version 6.0.6 at the time of this article's publication.

If you are using a Unix or MacOS system, enter these commands to install Redis. This is my personal favorite way to install Redis, but there are alternatives below:

If you have Homebrew installed, you can type brew install redis in the terminal and refer to this GitHub gist to install Redis on the Mac . For developers using Ubuntu Linux, the command sudo apt-get install redis would get the job done as well.

Run the Redis server in a separate terminal window on the default port with the command src/redis-server from the directory where it's installed.

For Windows users, you would have to follow a separate tutorial to run Redis on Windows . Download the latest zip file on GitHub and extract the contents. Run the redis-server.exe file that was extracted from the zip file to start the Redis server.

The output should look similar to the following after running Redis:

Build out the tasks

In this case, a task for Redis Queue is merely a Python function. For this article, we’ll tell the task to print a message to the terminal for “x” seconds to demonstrate the use of RQ.

Copy and paste the following code to a file named “tasks.py” in your directory.

These are simple tasks that print out numbers and text on the terminal so that we can see if the tasks are executed properly. Using the time.sleep(1) function from the Python time library will allow your task to be suspended for the given number of seconds and overall extend the time of the task so that we can examine their progress.
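A minimal sketch of what such a tasks.py could contain, matching the behaviour described above. The exact messages are assumptions.

```python
import time


def print_task(seconds):
    """Print a greeting once per second for `seconds` seconds."""
    print("Starting task")
    for num in range(seconds):
        print(f"{num}. Hello World!")
        time.sleep(1)  # stretch the task out so its progress is visible
    print("Task completed")


def print_numbers(seconds):
    """Print the numbers 0..seconds-1, one per second."""
    print("Starting num task")
    for num in range(seconds):
        print(num)
        time.sleep(1)
    print("Task to print_numbers completed")
```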

Feel free to alter this code after the tutorial and create your own tasks. Some other popular tasks are sending a fax message or email by connecting to your email client.

Create your queue

Create another file in the root directory and name it “app.py”. Copy and paste the following code:

The queue object sets up a connection to Redis and initializes a queue based on that connection. This queue can hold all the jobs required to run in the background with workers.

As seen in the code, the tasks.print_task function is added using the enqueue function. This means that the task is added to the queue and will be executed as soon as a worker is available.

The enqueue_in function is another nifty RQ function because it expects a timedelta in order to schedule the specified job. In this case, seconds is specified, but this variable can be changed according to the time schedule expected for your usage. Check out other ways to schedule a job on this GitHub README.

Since we are testing out the RQ queue, I have enqueued both the tasks.print_task and tasks.print_numbers functions so that we can see their output on the terminal. The third argument passed in is a "5" which also stands for the argument passed into the respective functions. In this case, we are expecting to see print_task() print "Hello World!" five times and for print_numbers() to print 5 numbers in order.
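The two calls can be sketched as a small helper that receives the queue as an argument. `enqueue` and `enqueue_in` are RQ `Queue` methods; the helper name, the 10-second delay, and passing the task functions in as parameters are assumptions for illustration.

```python
from datetime import timedelta


def enqueue_demo_jobs(queue, print_task, print_numbers, seconds=5, delay=10):
    """Queue print_task for immediate pickup and print_numbers after `delay` seconds."""
    first = queue.enqueue(print_task, seconds)
    second = queue.enqueue_in(timedelta(seconds=delay), print_numbers, seconds)
    return first, second
```

With RQ installed and a Redis server running, `queue` would be `Queue(connection=Redis())`, and the job scheduled with `enqueue_in` is only picked up by a worker started with `--with-scheduler`.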

If you have created any additional task, be sure to import your tasks at the top of the file so that all the tasks in your Python file can be accessed.

Run the queue

For the purposes of this article, the gif demo below will show a perfect execution of the tasks in queue so no exceptions will be raised.

The Redis server should still be running in a tab from earlier in the tutorial at this point. If it stopped, run the command src/redis-server inside the redis-6.0.6 folder on one tab, or for developers with a Windows machine, start redis-server.exe . Open another tab solely to run the RQ worker with its scheduler enabled, using the command rq worker --with-scheduler .

This should be the output after running the command above.

The worker command activated a worker process in order to connect to Redis and look for any jobs assigned to the queue from the code in app.py .

Lastly, open a third tab in the terminal for the root project directory. Start up the virtual environment again with the command source venv/bin/activate . Then type python app.py to run the project.

Go back to the tab that is running rq worker --with-scheduler . Wait 5 more seconds after the first task is executed to see the next task. Although the live demo gif below wasn’t able to capture the best timing due to having to run the program and record, it is noticeable that there was a pause between tasks until execution and that both tasks were completed within 15 seconds.

Here’s the sample output inside of the rqworker tab:

As seen in the output above, if the tasks written in tasks.py return anything, the results of both tasks are kept for 500 seconds, which is the default. A developer can alter the return value's time to live by passing in a result_ttl parameter when adding tasks to the queue.

Handle exceptions and try again

If a job were to fail, you can always set up a log to keep track of the error messages, or you can use the RQ queue to enqueue and retry failed jobs. By using RQ's FailedJobRegistry class, you can keep track of the jobs that failed during runtime. The RQ documentation discusses how it handles exceptions and how data regarding the job can help the developer figure out how to resubmit the job.

However, RQ also supports developers in handling exceptions in their own way by injecting your own logic to the rq workers . This may be a helpful option for you if you are executing many tasks in your project and those that failed are not worth retrying.

Force a failed task to retry

Since this is an introductory article to run your first task with RQ, let's try to purposely fail one of the tasks from earlier to test out RQ's retry object.

Go to the tasks.py file and alter the print_task() function so that random numbers can be generated and determine if the function will be executed or not. We will be using the random Python library to assist us in generating numbers. Don't forget to include the import random at the top of the file.

Copy and paste the following lines of code to change the print_task() function in the tasks.py file.

Go back to the app.py file to change the queue. Instead of using the enqueue_in function to execute the tasks.print_task function, delete the line and replace it with queue.enqueue(tasks.print_task, 5, retry=Retry(max=2)) .

The Retry object is imported from rq, so make sure you add from rq import Retry at the top of the file as well in order to use this functionality. This object accepts max and interval arguments to specify how many times and how soon the function will be retried. In the newly changed line, queue.enqueue is passed the function we want to retry (tasks.print_task), the argument "5", which stands for the seconds of execution, and lastly the maximum number of times we want the queue to retry.

The tasks in queue should now look like this:

When running the print_task task, there is a 50/50 chance that tasks.print_task() will execute properly since we're only generating a 1 or 2, and the print statement will only happen if you generate a 1. A RuntimeError will be raised otherwise, and because no interval was specified the queue will retry the task immediately, up to the two retries allowed by Retry(max=2), until it successfully prints "Hello World!".
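The behaviour can be simulated in plain Python without Redis. This is an illustration of bounded retries, not RQ's implementation: `flaky_print_task` and `run_with_retries` are hypothetical names, and the loop assumes that `max` counts retries made after the first attempt.

```python
import random


def flaky_print_task(rng=random):
    """Succeed when a 1 is drawn from {1, 2}; raise RuntimeError otherwise."""
    if rng.randint(1, 2) != 1:
        raise RuntimeError("Sorry, I failed! Let me try again.")
    print("Hello World!")
    return True


def run_with_retries(func, max_retries=2):
    """Call func once, then retry up to max_retries more times on RuntimeError."""
    attempts = 0
    while True:
        attempts += 1
        try:
            return func(), attempts
        except RuntimeError:
            if attempts > max_retries:
                raise  # retries exhausted: let the failure surface
```

Under that assumption, a job with a 50% failure rate and two retries still fails all three attempts one time in eight.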

What’s next for task queues?

Congratulations! You have successfully learned and implemented the basics of scheduling tasks in the RQ queue. Perhaps now you can tell the worker command to add a task that prints out an infinite number of "Congratulations" messages in a timely manner!

Otherwise, check out these different tasks that you can build in to your Redis Queue:

- Schedule Twilio SMS to a list of contacts quickly!

- Use Redis Queue to generate a fan fiction with OpenAI GPT-3

- Queue Emails with Twilio SendGrid using Redis Queue

Let me know what you have been building by reaching out to me over email!

Diane Phan is a developer on the Developer Voices team. She loves to help programmers tackle difficult challenges that might prevent them from bringing their projects to life. She can be reached at dphan [at] twilio.com or LinkedIn .


Background Tasks in Python with RQ

Last updated July 18, 2022


RQ (Redis Queue) makes it easy to add background tasks to your Python applications on Heroku. RQ uses a Redis database as a queue to process background jobs. To get started using RQ, you need to configure your application and then run a worker process in your application.

To set up RQ and its dependencies, install it using pip:

Be sure to add rq to your requirements.txt file as well.

Now that we have everything we need to create a worker process, let’s create one.

Create a file called worker.py . This module will listen to queued tasks and process them as they are received.

Now you can run your new worker process:

To send a job to your new Redis Queue, you need to send your jobs to Redis in your code. Let’s pretend we have a blocking function in an external module, utils.py :

In your application, create a RQ queue:

And enqueue the function call:

The blocking function will automatically be executed in the background worker process.

To deploy your new worker system to Heroku, you need to add the worker process to the Procfile in the root of the project.

Additionally, provision an instance of Redis with the Heroku Data for Redis (heroku-redis) addon and deploy with a git push .

Once everything’s pushed up, scale your workers according to your needs:

View worker process output by filtering the logs with the -p flag and the name of the worker process type.

The worker process can be manually invoked for further isolation.


Redis Queue (RQ)

Redis Queue (RQ) is a Python task queue implementation that uses Redis to keep track of tasks in the queue that need to be executed.

RQ resources

Asynchronous Tasks in Python with Redis Queue is a quickstart-style tutorial that shows how to use RQ to fetch data from the Mars Rover web API and process URLs for each of the photos taken by NASA's Mars rover. There is also a follow-up post on Scheduling Tasks in Python with Redis Queue and RQ Scheduler that shows how to schedule tasks in advance, which is a common way of working with task queues .

The RQ intro post contains information on design decisions and how to use RQ in your projects.

Build a Ghostwriting App for Scary Halloween Stories with OpenAI's GPT-3 Engine and Task Queues in Python is a fun tutorial that uses RQ with OpenAI's GPT-3 API to randomly write original stories inspired by creepy Halloween tales.

International Space Station notifications with Python and Redis Queue (RQ) shows how to combine the RQ task queue library with Flask to send text message notifications every time a condition is met - in this blog post's case that the ISS is currently flying over your location on Earth.

Asynchronous Tasks with Flask and Redis Queue looks at how to configure RQ to handle long-running tasks in a Flask app.

How We Spotted and Fixed a Performance Degradation in Our Python Code is a quick story about how an engineering team moving from Celery to RQ fixed some deficiencies in their RQ performance as they started to understand the difference between how the two tools execute workers.

Flask by Example - Implementing a Redis Task Queue explains how to install and use RQ in a Flask application.

rq-dashboard is an awesome Flask -based dashboard for viewing queues, workers and other critical information when using RQ.

Sending Confirmation Emails with Flask, Redis Queue, and Amazon SES shows how RQ fits into a real-world application that uses many libraries and third party APIs.

Background Tasks in Python using Redis Queue gives a code example for web scraping data from the Goodreads website. Note that the first sentence in the post is not accurate: it's not the Python language that is linear, but instead the way workers in WSGI servers handle a single request at a time by blocking. Nevertheless, the example is a good one for understanding how RQ can execute.


closeio/tasktiger


TaskTiger is a Python task queue using Redis.

(Interested in working on projects like this? Close is looking for great engineers to join our team)

Per-task fork or synchronous worker

By default, TaskTiger forks a subprocess for each task. This comes with several benefits: Memory leaks caused by tasks are avoided since the subprocess is terminated when the task is finished. A hard time limit can be set for each task, after which the task is killed if it hasn't completed. To ensure performance, any necessary Python modules can be preloaded in the parent process.

TaskTiger also supports synchronous workers, which allows for better performance due to no forking overhead, and tasks have the ability to reuse network connections. To prevent memory leaks from accumulating, workers can be set to shut down after a certain amount of time, at which point a supervisor can restart them. Workers also automatically exit on hard timeouts to prevent an inconsistent process state.

Unique queues

TaskTiger has the option to avoid duplicate tasks in the task queue. In some cases it is desirable to combine multiple similar tasks. For example, imagine a task that indexes objects (e.g. to make them searchable). If an object is already present in the task queue and hasn't been processed yet, a unique queue will ensure that the indexing task doesn't have to do duplicate work. However, if the task is already running while it's queued, the task will be executed another time to ensure that the indexing task always picks up the latest state.

TaskTiger can ensure that it never executes more than one instance of tasks with similar arguments at a time by acquiring a lock. If a task hits a lock, it is requeued and scheduled for later execution after a configurable interval.

Task retrying

TaskTiger lets you retry exceptions (all exceptions or a list of specific ones) and comes with configurable retry intervals (fixed, linear, exponential, custom).
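To make the three built-in interval shapes concrete, here is a plain-Python sketch of how the delay before each retry could grow. These helpers are illustrative only and are not TaskTiger's retry functions; the names, parameters, and the choice to count attempts from 1 are assumptions.

```python
def fixed_delay(attempt, delay):
    """Same wait before every retry."""
    return delay


def linear_delay(attempt, delay, increment):
    """Wait grows by a constant increment with each retry."""
    return delay + increment * (attempt - 1)


def exponential_delay(attempt, delay, factor):
    """Wait is multiplied by `factor` with each retry."""
    return delay * factor ** (attempt - 1)
```

Starting from a 60-second delay, the first three linear waits with a 30-second increment are 60, 90, and 120 seconds, while exponential waits with a factor of 2 are 60, 120, and 240 seconds.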

Flexible queues

Tasks can be easily queued in separate queues. Workers pick tasks from a randomly chosen queue and can be configured to only process specific queues, ensuring that all queues are processed equally. TaskTiger also supports subqueues which are separated by a period. For example, you can have per-customer queues in the form process_emails.CUSTOMER_ID and start a worker to process process_emails and any of its subqueues. Since tasks are picked from a random queue, all customers get equal treatment: If one customer is queueing many tasks it can't block other customers' tasks from being processed. A maximum queue size can also be enforced.
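The period-separated subqueue rule can be illustrated with a small matcher. This is a sketch of the naming convention described above, not TaskTiger's internal code; `queue_matches` is a hypothetical helper.

```python
def queue_matches(queue_name, only_queues):
    """Return True if queue_name is one of only_queues or a dotted subqueue of one."""
    if not only_queues:
        return True  # an empty filter means "process everything"
    return any(
        queue_name == name or queue_name.startswith(name + ".")
        for name in only_queues
    )
```

A worker restricted to `process_emails` would accept `process_emails.42` but not an unrelated queue such as `process_emails_bulk`.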

Batch queues

Batch queues can be used to combine multiple queued tasks into one. That way, your task function can process multiple sets of arguments at the same time, which can improve performance. The batch size is configurable.
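The batching idea itself is simple to sketch: pending argument sets are grouped into chunks of a configured size and each chunk is handed to the task in one call. The helpers below are hypothetical and do not reflect how TaskTiger passes batch parameters to a task.

```python
def take_batches(pending, batch_size):
    """Split queued argument sets into lists of at most batch_size items."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    return [pending[i:i + batch_size] for i in range(0, len(pending), batch_size)]


def index_objects(object_ids):
    """Hypothetical batch task: one call handles every id in the batch."""
    return {object_id: "indexed" for object_id in object_ids}
```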

Scheduled and periodic tasks

Tasks can be scheduled for execution at a specific time. Tasks can also be executed periodically (e.g. every five seconds).

Structured logging

TaskTiger supports JSON-style logging via structlog, allowing more flexibility for tools to analyze the log. For example, you can use TaskTiger together with Logstash, Elasticsearch, and Kibana.

The structlog processor tasktiger.logging.tasktiger_processor can be used to inject the current task id into all log messages.

Reliability

TaskTiger atomically moves tasks between queue states, and will re-execute tasks after a timeout if a worker crashes.

Error handling

If an exception occurs during task execution and the task is not set up to be retried, TaskTiger stores the execution tracebacks in an error queue. The task can then be retried or deleted manually. TaskTiger can be easily integrated with error reporting services like Rollbar.

Admin interface

A simple admin interface using flask-admin exists as a separate project ( tasktiger-admin ).

Quick start

It is easy to get started with TaskTiger.

Create a file that contains the task(s).

Queue the task using the delay method.

Run a worker (make sure the task code can be found, e.g. using PYTHONPATH ).

Configuration

A TaskTiger object keeps track of TaskTiger's settings and is used to decorate and queue tasks. The constructor takes the following arguments:

connection

Redis connection object. The connection should be initialized with decode_responses=True to avoid encoding problems on Python 3.

config

Dict with config options. Most configuration options don't need to be changed, and a full list can be seen within TaskTiger's __init__ method.

Here are a few commonly used options:

ALWAYS_EAGER

If set to True , all tasks except future tasks ( when is a future time) will be executed locally by blocking until the task returns. This is useful for testing purposes.

BATCH_QUEUES

Set up queues that will be processed in batch, i.e. multiple jobs are taken out of the queue at the same time and passed as a list to the worker method. Takes a dict where the key represents the queue name and the value represents the batch size. Note that the task needs to be declared as batch=True . Also note that any subqueues will be automatically treated as batch queues, and the batch value of the most specific subqueue name takes precedence.

ONLY_QUEUES

If set to a non-empty list of queue names, a worker only processes the given queues (and their subqueues), unless explicit queues are passed to the command line.

setup_structlog

If set to True, sets up structured logging using structlog when initializing TaskTiger. This makes writing custom worker scripts easier since it doesn't require the user to set up structlog in advance.
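The BATCH_QUEUES precedence rule ("the batch value of the most specific subqueue name takes precedence") can be illustrated with a small stand-alone sketch. The queue names, batch sizes, and the helper `batch_size_for` are hypothetical and are not part of TaskTiger's API; only the option names come from the text above.

```python
# Hypothetical config dict using the option names described above.
config = {
    "ALWAYS_EAGER": False,
    "BATCH_QUEUES": {"batch": 50, "batch.small": 5},
    "ONLY_QUEUES": ["batch"],
}


def batch_size_for(queue, batch_queues):
    """Return the batch size of the most specific configured (sub)queue.

    Illustrative helper, not TaskTiger code: walks from the full dotted
    queue name up through its parents and returns the first match,
    or None if the queue is not a batch queue at all.
    """
    parts = queue.split(".")
    while parts:
        name = ".".join(parts)
        if name in batch_queues:
            return batch_queues[name]
        parts.pop()  # drop the last component and try the parent queue
    return None
```

With this config, `batch.small.CUSTOMER1` resolves to a batch size of 5, while `batch.large` falls back to the parent value of 50.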

Task decorator

TaskTiger provides a task decorator to specify task options. Note that simple tasks don't need to be decorated. However, decorating the task allows you to use an alternative syntax to queue the task, which is compatible with Celery:

Task options

Tasks support a variety of options that can be specified either in the task decorator, or when queueing a task. For the latter, the delay method must be called on the TaskTiger object, and any options in the task decorator are overridden.

When queueing a task, the task needs to be defined in a module other than the Python file which is being executed. In other words, the task can't be in the __main__ module. TaskTiger will give you back an error otherwise.

The following options are supported by both delay and the task decorator:

queue

Name of the queue where the task will be queued.

hard_timeout

If the task runs longer than the given number of seconds, it will be killed and marked as failed.

unique

Boolean to indicate whether the task will only be queued if there is no similar task with the same function, arguments, and keyword arguments in the queue. Note that multiple similar tasks may still be executed at the same time since the task will still be inserted into the queue if another one is being processed. Requeueing an already scheduled unique task will not change the time it was originally scheduled to execute at.

unique_key

If set, this implies unique=True and specifies the list of kwargs to use to construct the unique key. By default, all args and kwargs are serialized and hashed.

lock

Boolean to indicate whether to hold a lock while the task is being executed (for the given args and kwargs). If a task with similar args/kwargs is queued and tries to acquire the lock, it will be retried later.

lock_key

If set, this implies lock=True and specifies the list of kwargs to use to construct the lock key. By default, all args and kwargs are serialized and hashed.

max_queue_size

A maximum queue size can be enforced by setting this to an integer value. The QueueFullException exception will be raised when queuing a task if this limit is reached. Tasks in the active , scheduled , and queued states are counted against this limit.

when

Takes either a datetime (for an absolute date) or a timedelta (relative to now). If given, the task will be scheduled for the given time.

retry

Boolean to indicate whether to retry the task when it fails (either because of an exception or because of a timeout). To restrict the list of failures, use retry_on. Unless retry_method is given, the configured DEFAULT_RETRY_METHOD is used.

retry_on

If a list is given, it implies retry=True. The task will be retried only on the given exceptions (or their subclasses). To retry the task when a hard timeout occurs, use JobTimeoutException.

retry_method

If given, implies retry=True . Pass either:

- a function that takes the retry number as an argument, or,

- a tuple (f, args) , where f takes the retry number as the first argument, followed by the additional args.

The function needs to return the desired retry interval in seconds, or raise StopRetry to stop retrying. The following built-in functions can be passed for common scenarios and return the appropriate tuple:

fixed(delay, max_retries)

Returns a method that returns the given delay (in seconds) or raises StopRetry if the number of retries exceeds max_retries .

linear(delay, increment, max_retries)

Like fixed , but starts off with the given delay and increments it by the given increment after every retry.

exponential(delay, factor, max_retries)

Like fixed , but starts off with the given delay and multiplies it by the given factor after every retry.

For example, to retry a task 3 times (for a total of 4 executions), and wait 60 seconds between executions, pass retry_method=fixed(60, 3) .
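The retry-method contract described above (a function of the retry number that returns an interval in seconds or raises StopRetry, optionally packaged as a tuple (f, args)) can be sketched without TaskTiger installed. This is an illustrative re-implementation, not the library's source: the `StopRetry` class here is a local stand-in, `retry_delay` is a hypothetical helper, and the assumption that the retry number starts at 1 is mine.

```python
class StopRetry(Exception):
    """Local stand-in for TaskTiger's StopRetry so the sketch runs alone."""


def _fixed(retry, delay, max_retries):
    if retry > max_retries:
        raise StopRetry()
    return delay


def _linear(retry, delay, increment, max_retries):
    if retry > max_retries:
        raise StopRetry()
    # First retry waits `delay`, each later retry adds `increment`.
    return delay + increment * (retry - 1)


def _exponential(retry, delay, factor, max_retries):
    if retry > max_retries:
        raise StopRetry()
    # First retry waits `delay`, each later retry multiplies by `factor`.
    return delay * factor ** (retry - 1)


# Each public helper returns the (f, args) tuple form described above.
def fixed(delay, max_retries):
    return (_fixed, (delay, max_retries))


def linear(delay, increment, max_retries):
    return (_linear, (delay, increment, max_retries))


def exponential(delay, factor, max_retries):
    return (_exponential, (delay, factor, max_retries))


def retry_delay(retry_method, retry):
    """Hypothetical helper: evaluate an (f, args) tuple for a retry number."""
    f, args = retry_method
    return f(retry, *args)
```

Under these assumptions, `retry_delay(fixed(60, 3), n)` yields 60 for retries 1 through 3 and raises `StopRetry` on the fourth, which matches the "3 retries, 4 executions" example.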

runner_class

If given, a Python class can be specified to influence task running behavior. The runner class should inherit tasktiger.runner.BaseRunner and implement the task execution behavior. The default implementation is available in tasktiger.runner.DefaultRunner . The following behavior can be achieved:

- Execute specific code before or after the task is executed (in the forked child process), or customize the way task functions are called in either single or batch processing. Note that if you want to execute specific code for all tasks, you should use the CHILD_CONTEXT_MANAGERS configuration option.

- Control the hard timeout behavior of a task.

- Execute specific code in the main worker process after a task failed permanently.

This is an advanced feature and the interface and requirements of the runner class can change in future TaskTiger versions.

The following options can be only specified in the task decorator:

batch

If set to True, the task will receive a list of dicts with args and kwargs and can process multiple tasks of the same type at once. Example: [{"args": [1], "kwargs": {}}, {"args": [2], "kwargs": {}}]. Note that the list will only contain multiple items if the worker has set up BATCH_QUEUES for the specific queue (see the Configuration section).
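As a rough illustration of the parameter shape a batch task receives, the following plain function consumes such a list of dicts. It is only a sketch: the function name and the `greeting` keyword are hypothetical, and in a real project the function would additionally be declared as a batch task through the task decorator.

```python
def send_welcome_messages(params):
    """Batch-style task body.

    `params` is a list of {"args": [...], "kwargs": {...}} dicts, one per
    queued task, in the shape shown in the example above.
    """
    messages = []
    for item in params:
        (user_id,) = item["args"]  # each queued task passed one positional arg
        greeting = item["kwargs"].get("greeting", "Hello")
        messages.append(f"{greeting}, user {user_id}")
    return messages


batch = [{"args": [1], "kwargs": {}}, {"args": [2], "kwargs": {}}]
result = send_welcome_messages(batch)
```

Handling the whole list in one call is what lets a batch task amortize per-task overhead such as opening a connection once for all items.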

schedule

If given, makes a task execute periodically. Pass either:

- a function that takes the current datetime as an argument.

- a tuple (f, args) , where f takes the current datetime as the first argument, followed by the additional args.

The schedule function must return the next task execution datetime, or None to prevent periodic execution. The function is executed to determine the initial task execution date when a worker is initialized, and to determine the next execution date when the task is about to get executed.

For most common scenarios, the below mentioned built-in functions can be passed:

periodic(seconds=0, minutes=0, hours=0, days=0, weeks=0, start_date=None, end_date=None)

Use equal, periodic intervals, starting from start_date (defaults to 2000-01-01T00:00Z, a Saturday, if not given), ending at end_date (or never, if not given). For example, to run a task every five minutes indefinitely, use schedule=periodic(minutes=5). To run a task every Sunday at 4am UTC, you could use schedule=periodic(weeks=1, start_date=datetime.datetime(2000, 1, 2, 4)).

cron_expr(expr, start_date=None, end_date=None)

Schedules the task according to the cron expression expr. start_date specifies the periodic task start date and defaults to 2000-01-01T00:00Z, a Saturday, if not given. end_date specifies the periodic task end date; the task repeats forever if end_date is not given. For example, to run a task every hour indefinitely, use schedule=cron_expr("0 * * * *"). To run a task every Sunday at 4am UTC, you could use schedule=cron_expr("0 4 * * 0").
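The schedule-function contract (take the current datetime, return the next execution datetime or None) can be sketched for the equal-interval case. This is a stand-alone illustration rather than TaskTiger's implementation: `next_run` is a hypothetical name, and naive datetimes are assumed to be UTC.

```python
import datetime

# Default start date named in the text: 2000-01-01T00:00Z, a Saturday.
DEFAULT_START = datetime.datetime(2000, 1, 1)


def next_run(now, period, start_date=DEFAULT_START, end_date=None):
    """Return the first slot strictly after `now` on the grid
    start_date + k * period, or None once end_date has been passed."""
    if now < start_date:
        candidate = start_date
    else:
        elapsed_periods = (now - start_date) // period  # whole periods so far
        candidate = start_date + (elapsed_periods + 1) * period
    if end_date is not None and candidate > end_date:
        return None
    return candidate


# Every five minutes, evaluated on a Wednesday at 12:03:20.
five_min = next_run(
    datetime.datetime(2024, 5, 15, 12, 3, 20),
    datetime.timedelta(minutes=5),
)

# Every Sunday at 04:00, anchored at Sunday 2000-01-02 04:00.
weekly = next_run(
    datetime.datetime(2024, 5, 15, 12, 0),
    datetime.timedelta(weeks=1),
    start_date=datetime.datetime(2000, 1, 2, 4),
)
```

Anchoring the grid at a fixed start date is what makes the weekly example land on Sundays: the weekday of every slot is inherited from start_date.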

Custom retrying

In some cases the task retry options may not be flexible enough. For example, you might want to use a different retry method depending on the exception type, or you might want to suppress logging an error if a task fails after retries. In these cases, RetryException can be raised within the task function. The following options are supported:

method

Specify a custom retry method for this retry. If not given, the task's default retry method is used, or, if unspecified, the configured DEFAULT_RETRY_METHOD. Note that the number of retries passed to the retry method is always the total number of times this method has been executed, regardless of which retry method was used.

original_traceback

If RetryException is raised from within an except block and original_traceback is True, the original traceback will be logged (i.e. the stacktrace at the place where the caught exception was raised). False by default.

log_error

If set to False and the task fails permanently, a warning will be logged instead of an error, and the task will be removed from Redis when it completes. True by default.

Example usage:

Workers

The tasktiger command is used on the command line to invoke a worker. To invoke multiple workers, multiple instances need to be started. This can be easily done e.g. via Supervisor. The following Supervisor configuration file can be placed in /etc/supervisor/tasktiger.ini and runs 4 TaskTiger workers as the ubuntu user. For more information, read Supervisor's documentation.

Workers support the following options:

-q , --queues

If specified, only the given queue(s) are processed. Multiple queues can be separated by comma. Any subqueues of the given queues will be also processed. For example, -q first,second will process items from first , second , and subqueues such as first.CUSTOMER1 , first.CUSTOMER2 .

-e , --exclude-queues

If specified, exclude the given queue(s) from processing. Multiple queues can be separated by comma. Any subqueues of the given queues will also be excluded unless a more specific queue is specified with the -q option. For example, -q email,email.incoming.CUSTOMER1 -e email.incoming will process items from the email queue and subqueues like email.outgoing.CUSTOMER1 or email.incoming.CUSTOMER1 , but not email.incoming or email.incoming.CUSTOMER2 .
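The interaction between -q and -e can be sketched as a "most specific rule wins" match on dotted queue names. This is only an illustration of the behavior described above, not TaskTiger's code; `should_process` and `_match_depth` are hypothetical names.

```python
def _match_depth(queue, patterns):
    """Number of components of the longest pattern that is `queue` itself
    or one of its parent queues (0 if no pattern matches)."""
    parts = queue.split(".")
    best = 0
    for pattern in patterns:
        pattern_parts = pattern.split(".")
        if parts[: len(pattern_parts)] == pattern_parts:
            best = max(best, len(pattern_parts))
    return best


def should_process(queue, only=(), exclude=()):
    """Decide whether a worker started with -q `only` and -e `exclude`
    would process `queue`, following the rules in the text."""
    included = _match_depth(queue, only)
    excluded = _match_depth(queue, exclude)
    if only and included == 0:
        return False  # -q given, but the queue is not under any listed queue
    if excluded == 0:
        return True  # no exclusion applies
    return included > excluded  # a more specific -q entry overrides -e


only = ["email", "email.incoming.CUSTOMER1"]
exclude = ["email.incoming"]
decisions = {
    name: should_process(name, only, exclude)
    for name in [
        "email",
        "email.outgoing.CUSTOMER1",
        "email.incoming.CUSTOMER1",
        "email.incoming",
        "email.incoming.CUSTOMER2",
    ]
}
```

The resulting decisions reproduce the example from the text: the first three queues are processed, the last two are not.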

-m , --module

Module(s) to import when launching the worker. This improves task performance since the module doesn't have to be reimported every time a task is forked. Multiple modules can be separated by comma.

Another way to preload modules is to set up a custom TaskTiger launch script, which is described below.

-h , --host

Redis server hostname (if different from localhost ).

-p , --port

Redis server port (if different from 6379 ).

-a , --password

Redis server password (if required).

Redis server database number (if different from 0 ).

-M , --max-workers-per-queue

Maximum number of workers that are allowed to process a queue.

--store-tracebacks/--no-store-tracebacks

Store tracebacks with execution history (config defaults to True ).

Can be fork (default) or sync. Whether to execute tasks in a separate process via fork, or execute them synchronously in the same process. See "Features" section for the benefits of either approach.

--exit-after

Exit the worker after the time in minutes has elapsed. This is mainly useful with the synchronous executor to prevent memory leaks from accumulating.

In some cases it is convenient to have a custom TaskTiger launch script. For example, your application may have a manage.py command that sets up the environment and you may want to launch TaskTiger workers using that script. To do that, you can use the run_worker_with_args method, which launches a TaskTiger worker and parses any command line arguments. Here is an example:

Inspect, requeue and delete tasks

TaskTiger provides access to the Task class which lets you inspect queues and perform various actions on tasks.

Each queue can have tasks in the following states:

- queued : Tasks that are queued and waiting to be picked up by the workers.

- active : Tasks that are currently being processed by the workers.

- scheduled : Tasks that are scheduled for later execution.

- error : Tasks that failed with an error.

To get a list of all tasks for a given queue and state, use Task.tasks_from_queue . The method gives you back a tuple containing the total number of tasks in the queue (useful if the tasks are truncated) and a list of tasks in the queue, latest first. Using the skip and limit keyword arguments, you can fetch arbitrary slices of the queue. If you know the task ID, you can fetch a given task using Task.from_id . Both methods let you load tracebacks from failed task executions using the load_executions keyword argument, which accepts an integer indicating how many executions should be loaded.

Tasks can also be constructed and queued using the regular constructor, which takes the TaskTiger instance, the function name and the options described in the Task options section. The task can then be queued using its delay method. Note that the when argument needs to be passed to the delay method, if applicable. Unique tasks can be reconstructed using the same arguments.

The Task object has the following properties:

- id : The task ID.

- data : The raw data as a dict from Redis.

- executions : A list of failed task executions (as dicts). An execution dict contains the processing time in time_started and time_failed , the worker host in host , the exception name in exception_name and the full traceback in traceback .

- serialized_func , args , kwargs : The serialized function name with all of its arguments.

- func : The imported (executable) function

The Task object has the following methods:

- cancel : Cancel a scheduled task.

- delay : Queue the task for execution.

- delete : Remove the task from the error queue.

- execute : Run the task without queueing it.

- n_executions : Queries and returns the number of past task executions.

- retry : Requeue the task from the error queue for execution.

- update_scheduled_time : Updates a scheduled task's date to the given date.

The current task can be accessed within the task function while it's being executed: In case of a non-batch task, the current_task property of the TaskTiger instance returns the current Task instance. In case of a batch task the current_tasks property must be used which returns a list of tasks that are currently being processed (in the same order as they were passed to the task).

Example 1: Queueing a unique task and canceling it without a reference to the original task.

Example 2: Inspecting queues and retrying a task by ID.

Example 3: Accessing the task instances within a batch task function to determine how many times the currently processing tasks were previously executed.

Pause queue processing

The --max-workers-per-queue option uses queue locks to control the number of workers that can simultaneously process the same queue. When using this option a system lock can be placed on a queue which will keep workers from processing tasks from that queue until it expires. Use the set_queue_system_lock() method of the TaskTiger object to set this lock.

Rollbar error handling

TaskTiger comes with Rollbar integration for error handling. When a task errors out, it can be logged to Rollbar, grouped by queue, task function name and exception type. To enable logging, initialize rollbar with the StructlogRollbarHandler provided in the tasktiger.rollbar module. The handler takes a string as an argument which is used to prefix all the messages reported to Rollbar. Here is a custom worker launch script:

Cleaning Up Error'd Tasks

Error'd tasks occasionally need to be purged from Redis, so TaskTiger exposes a purge_errored_tasks method to help. It might be useful to set this up as a periodic task as follows:

Running The Test Suite

Tests can be run locally using the provided docker compose file. After installing docker, tests should be runnable with:

Tests can be more granularly run using normal pytest flags. For example:

Releasing a New Version

- Make sure the code has been thoroughly reviewed and tested in a realistic production environment.

- Update setup.py and CHANGELOG.md . Make sure you include any breaking changes.

- Run python setup.py sdist and twine upload dist/<PACKAGE_TO_UPLOAD> .

- Push a new tag pointing to the released commit, format: v0.13 for example.

- Mark the tag as a release in GitHub's UI and include in the description the changelog entry for the version. An example would be: https://github.com/closeio/tasktiger/releases/tag/v0.13 .


What’s New In Python 3.13

Thomas Wouters

This article explains the new features in Python 3.13, compared to 3.12.

For full details, see the changelog .

PEP 719 – Python 3.13 Release Schedule

Prerelease users should be aware that this document is currently in draft form. It will be updated substantially as Python 3.13 moves towards release, so it’s worth checking back even after reading earlier versions.

Summary – Release Highlights

Python 3.13 beta is the pre-release of the next version of the Python programming language, with a mix of changes to the language, the implementation and the standard library. The biggest changes to the implementation include a new interactive interpreter, and experimental support for dropping the Global Interpreter Lock ( PEP 703 ) and a Just-In-Time compiler ( PEP 744 ). The library changes contain removal of deprecated APIs and modules, as well as the usual improvements in user-friendliness and correctness.

Interpreter improvements:

A greatly improved interactive interpreter and improved error messages .

Color support in the new interactive interpreter , as well as in tracebacks and doctest output. This can be disabled through the PYTHON_COLORS and NO_COLOR environment variables.

PEP 744 : A basic JIT compiler was added. It is currently disabled by default (though we may turn it on later). Performance improvements are modest – we expect to be improving this over the next few releases.

PEP 667 : The locals() builtin now has defined semantics when mutating the returned mapping. Python debuggers and similar tools may now more reliably update local variables in optimized frames even during concurrent code execution.

New typing features:

PEP 696 : Type parameters ( typing.TypeVar , typing.ParamSpec , and typing.TypeVarTuple ) now support defaults.

PEP 702 : Support for marking deprecations in the type system using the new warnings.deprecated() decorator.

PEP 742 : typing.TypeIs was added, providing more intuitive type narrowing behavior.

PEP 705 : typing.ReadOnly was added, to mark an item of a typing.TypedDict as read-only for type checkers.

Free-threading:

PEP 703 : CPython 3.13 has experimental support for running with the global interpreter lock disabled when built with --disable-gil . See Free-threaded CPython for more details.

Platform support:

PEP 730 : Apple’s iOS is now an officially supported platform. Official Android support ( PEP 738 ) is in the works as well.

Removed modules:

PEP 594 : The remaining 19 “dead batteries” have been removed from the standard library: aifc , audioop , cgi , cgitb , chunk , crypt , imghdr , mailcap , msilib , nis , nntplib , ossaudiodev , pipes , sndhdr , spwd , sunau , telnetlib , uu and xdrlib .

Also removed were the tkinter.tix and lib2to3 modules, and the 2to3 program.

Release schedule changes:

PEP 602 (“Annual Release Cycle for Python”) has been updated:

Python 3.9 - 3.12 have one and a half years of full support, followed by three and a half years of security fixes.

Python 3.13 and later have two years of full support, followed by three years of security fixes.

New Features

A better interactive interpreter

On Unix-like systems like Linux or macOS, Python now uses a new interactive shell. When the user starts the REPL from an interactive terminal, and both curses and readline are available, the interactive shell now supports the following new features:

Colorized prompts.

Multiline editing with history preservation.

Interactive help browsing using F1 with a separate command history.

History browsing using F2 that skips output as well as the >>> and ... prompts.

“Paste mode” with F3 that makes pasting larger blocks of code easier (press F3 again to return to the regular prompt).

The ability to issue REPL-specific commands like help , exit , and quit without the need to use call parentheses after the command name.

If the new interactive shell is not desired, it can be disabled via the PYTHON_BASIC_REPL environment variable.

For more on interactive mode, see Interactive Mode .

(Contributed by Pablo Galindo Salgado, Łukasz Langa, and Lysandros Nikolaou in gh-111201 based on code from the PyPy project.)

Improved Error Messages

The interpreter now colorizes error messages when displaying tracebacks by default. This feature can be controlled via the new PYTHON_COLORS environment variable as well as the canonical NO_COLOR and FORCE_COLOR environment variables. See also Controlling color . (Contributed by Pablo Galindo Salgado in gh-112730 .)

A common mistake is to write a script with the same name as a standard library module. When this results in errors, we now display a more helpful error message:

Similarly, if a script has the same name as a third-party module it attempts to import, and this results in errors, we also display a more helpful error message:

(Contributed by Shantanu Jain in gh-95754 .)

When an incorrect keyword argument is passed to a function, the error message now potentially suggests the correct keyword argument. (Contributed by Pablo Galindo Salgado and Shantanu Jain in gh-107944 .)

Classes have a new __static_attributes__ attribute, populated by the compiler, with a tuple of names of attributes of this class which are accessed through self.X from any function in its body. (Contributed by Irit Katriel in gh-115775 .)
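A small sketch of how this attribute might be inspected follows. The `Point` class is a made-up example, and the `getattr` fallback is there only so the snippet also runs on interpreters older than 3.13, where the attribute does not exist.

```python
import sys


class Point:
    def __init__(self, x, y):
        self.x = x
        self.y = y

    def scale(self, factor):
        self.x *= factor
        self.y *= factor
        self.scaled = True


# On Python 3.13+ the compiler records names used as self.<name> in the
# class body; older versions have no such attribute, so fall back to None.
static_attrs = getattr(Point, "__static_attributes__", None)

if static_attrs is not None:
    print(sorted(static_attrs))
else:
    print("__static_attributes__ is unavailable on Python", sys.version_info[:2])
```

The order of the names in the tuple should not be relied on, which is why the sketch sorts them before printing.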

Defined mutation semantics for locals()

Historically, the expected result of mutating the return value of locals() has been left to individual Python implementations to define.

Through PEP 667 , Python 3.13 standardises the historical behaviour of CPython for most code execution scopes, but changes optimized scopes (functions, generators, coroutines, comprehensions, and generator expressions) to explicitly return independent snapshots of the currently assigned local variables, including locally referenced nonlocal variables captured in closures.

To ensure debuggers and similar tools can reliably update local variables in scopes affected by this change, FrameType.f_locals now returns a write-through proxy to the frame’s local and locally referenced nonlocal variables in these scopes, rather than returning an inconsistently updated shared dict instance with undefined runtime semantics.
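The practical consequence for ordinary function code can be shown in a few lines. This is a minimal sketch with made-up function names; the first function behaves the same on older CPython versions, while the second returns True only on 3.13 and later.

```python
def mutate_snapshot():
    x = 1
    snapshot = locals()  # dict holding the currently assigned locals
    snapshot["x"] = 2    # changes the dict only, never the variable x
    return x, snapshot["x"]


def snapshots_are_independent():
    # On Python 3.13+ each locals() call in a function returns a fresh
    # snapshot; earlier CPython versions returned the same dict object.
    first = locals()
    second = locals()
    return first is not second


unchanged, mutated = mutate_snapshot()
```

Code that needs to write to another frame's variables, such as a debugger, would go through the frame's f_locals proxy instead of the dict returned by locals().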

See PEP 667 for more details, including related C API changes and deprecations.

(PEP and implementation contributed by Mark Shannon and Tian Gao in gh-74929 . Documentation updates provided by Guido van Rossum and Alyssa Coghlan.)

Incremental Garbage Collection

The cycle garbage collector is now incremental. This means that maximum pause times are reduced by an order of magnitude or more for larger heaps.

Support For Mobile Platforms

iOS is now a PEP 11 supported platform. arm64-apple-ios (iPhone and iPad devices released after 2013) and arm64-apple-ios-simulator (Xcode iOS simulator running on Apple Silicon hardware) are now tier 3 platforms.

x86_64-apple-ios-simulator (Xcode iOS simulator running on older x86_64 hardware) is not a tier 3 supported platform, but will be supported on a best-effort basis.

See PEP 730 for more details.

(PEP written and implementation contributed by Russell Keith-Magee in gh-114099 .)

Experimental JIT Compiler

When CPython is configured using the --enable-experimental-jit option, a just-in-time compiler is added which may speed up some Python programs.

The internal architecture is roughly as follows.

We start with specialized Tier 1 bytecode . See What’s new in 3.11 for details.

When the Tier 1 bytecode gets hot enough, it gets translated to a new, purely internal Tier 2 IR , a.k.a. micro-ops (“uops”).

The Tier 2 IR uses the same stack-based VM as Tier 1, but the instruction format is better suited to translation to machine code.

We have several optimization passes for Tier 2 IR, which are applied before it is interpreted or translated to machine code.

There is a Tier 2 interpreter, but it is mostly intended for debugging the earlier stages of the optimization pipeline. The Tier 2 interpreter can be enabled by configuring Python with --enable-experimental-jit=interpreter .

When the JIT is enabled, the optimized Tier 2 IR is translated to machine code, which is then executed.

The machine code translation process uses a technique called copy-and-patch . It has no runtime dependencies, but there is a new build-time dependency on LLVM.

The --enable-experimental-jit flag has the following optional values:

no (default) – Disable the entire Tier 2 and JIT pipeline.

yes (default if the flag is present without optional value) – Enable the JIT. To disable the JIT at runtime, pass the environment variable PYTHON_JIT=0 .

yes-off – Build the JIT but disable it by default. To enable the JIT at runtime, pass the environment variable PYTHON_JIT=1 .

interpreter – Enable the Tier 2 interpreter but disable the JIT. The interpreter can be disabled by running with PYTHON_JIT=0 .

(On Windows, use PCbuild/build.bat --experimental-jit to enable the JIT or --experimental-jit-interpreter to enable the Tier 2 interpreter.)

See PEP 744 for more details.

(JIT by Brandt Bucher, inspired by a paper by Haoran Xu and Fredrik Kjolstad. Tier 2 IR by Mark Shannon and Guido van Rossum. Tier 2 optimizer by Ken Jin.)

Free-threaded CPython

CPython will run with the global interpreter lock (GIL) disabled when configured using the --disable-gil option at build time. This is an experimental feature and therefore isn’t used by default. Users need to either compile their own interpreter, or install one of the experimental builds that are marked as free-threaded . See PEP 703 “Making the Global Interpreter Lock Optional in CPython” for more detail.

Free-threaded execution allows for full utilization of the available processing power by running threads in parallel on available CPU cores. While not all software will benefit from this automatically, programs designed with threading in mind will run faster on multicore hardware.

Work is still ongoing: expect some bugs and a substantial single-threaded performance hit.

The free-threaded build still supports optionally running with the GIL enabled at runtime using the environment variable PYTHON_GIL or the command line option -X gil .

To check if the current interpreter is configured with --disable-gil , use sysconfig.get_config_var("Py_GIL_DISABLED") . To check if the GIL is actually disabled in the running process, the sys._is_gil_enabled() function can be used.
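The two checks can be combined into one helper. This is a sketch: `gil_status` is a hypothetical name, and the fallback for interpreters without `sys._is_gil_enabled()` (anything before 3.13) assumes the GIL is enabled there.

```python
import sys
import sysconfig


def gil_status():
    """Report whether this is a free-threaded build and whether the GIL
    is currently enabled in the running process."""
    # Truthy only when the interpreter was configured with --disable-gil.
    free_threaded_build = bool(sysconfig.get_config_var("Py_GIL_DISABLED"))

    # sys._is_gil_enabled() exists on Python 3.13+; older interpreters
    # always run with the GIL, so default to True there.
    is_gil_enabled = getattr(sys, "_is_gil_enabled", None)
    gil_enabled = bool(is_gil_enabled()) if is_gil_enabled is not None else True

    return {"free_threaded_build": free_threaded_build, "gil_enabled": gil_enabled}


status = gil_status()
print(status)
```

The two values can differ: a free-threaded build may still report the GIL as enabled, for instance when it was turned back on at runtime.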

C-API extension modules need to be built specifically for the free-threaded build. Extensions that support running with the GIL disabled should use the Py_mod_gil slot. Extensions using single-phase init should use PyUnstable_Module_SetGIL() to indicate whether they support running with the GIL disabled. Importing C extensions that don’t use these mechanisms will cause the GIL to be enabled, unless the GIL was explicitly disabled with the PYTHON_GIL environment variable or the -X gil=0 option.

pip 24.1b1 or newer is required to install packages with C extensions in the free-threaded build.

Other Language Changes

Allow the count argument of str.replace() to be a keyword. (Contributed by Hugo van Kemenade in gh-106487 .)
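For example, the following sketch passes count by keyword where that is supported. The version check exists only so the snippet also runs on older interpreters, where count must be passed positionally.

```python
import sys

text = "a-b-c-d"

if sys.version_info >= (3, 13):
    # New in 3.13: count may be given as a keyword argument.
    result = text.replace("-", "+", count=2)
else:
    # Older versions accept count only as the third positional argument.
    result = text.replace("-", "+", 2)

print(result)
```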

The compiler now strips indents from docstrings. This will reduce the size of the bytecode cache (e.g. .pyc file). For example, the cache file size for sqlalchemy.orm.session in SQLAlchemy 2.0 is reduced by about 5%. This change will affect tools using docstrings, like doctest. (Contributed by Inada Naoki in gh-81283.)

The compile() built-in can now accept a new flag, ast.PyCF_OPTIMIZED_AST, which is similar to ast.PyCF_ONLY_AST except that the returned AST is optimized according to the value of the optimize argument. (Contributed by Irit Katriel in gh-108113.)

multiprocessing , concurrent.futures , compileall : Replace os.cpu_count() with os.process_cpu_count() to select the default number of worker threads and processes. Get the CPU affinity if supported. (Contributed by Victor Stinner in gh-109649 .)
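Application code that sizes its own worker pools can follow the same approach. The helper below is a sketch with a hypothetical name; it falls back to `os.cpu_count()` on interpreters that lack `os.process_cpu_count()`.

```python
import os


def usable_cpu_count():
    """Number of CPUs this process may use, with a fallback for
    Python versions older than 3.13."""
    counter = getattr(os, "process_cpu_count", os.cpu_count)
    # Both functions may return None when the count cannot be determined.
    return counter() or 1


workers = usable_cpu_count()
print(f"default worker count: {workers}")
```

The difference matters in containers or under CPU affinity restrictions, where the process may be allowed fewer CPUs than the machine has.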

os.path.realpath() now resolves MS-DOS style file names even if the file is not accessible. (Contributed by Moonsik Park in gh-82367 .)

Fixed a bug where a global declaration in an except block is rejected when the global is used in the else block. (Contributed by Irit Katriel in gh-111123 .)

Many functions now emit a warning if a boolean value is passed as a file descriptor argument. This can help catch some errors earlier. (Contributed by Serhiy Storchaka in gh-82626 .)

Added a new environment variable PYTHON_FROZEN_MODULES . It determines whether or not frozen modules are ignored by the import machinery, equivalent of the -X frozen_modules command-line option. (Contributed by Yilei Yang in gh-111374 .)

Add support for the perf profiler working without frame pointers through the new environment variable PYTHON_PERF_JIT_SUPPORT and command-line option -X perf_jit. (Contributed by Pablo Galindo in gh-118518.)

The new PYTHON_HISTORY environment variable can be used to change the location of a .python_history file. (Contributed by Levi Sabah, Zackery Spytz and Hugo van Kemenade in gh-73965 .)

Add the PythonFinalizationError exception. This exception, derived from RuntimeError, is raised when an operation is blocked during Python finalization.

The following functions now raise PythonFinalizationError, instead of RuntimeError :

_thread.start_new_thread() .

subprocess.Popen .

os.fork() .

os.forkpty() .

(Contributed by Victor Stinner in gh-114570 .)
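Because the new exception derives from RuntimeError, existing handlers keep working. The sketch below uses made-up helper names and a simulated failure; on interpreters older than 3.13 the name falls back to RuntimeError itself.

```python
import builtins

# PythonFinalizationError exists on Python 3.13+; fall back to its base
# class elsewhere so the sketch stays runnable.
FinalizationError = getattr(builtins, "PythonFinalizationError", RuntimeError)


def simulated_late_start():
    """Stand-in for e.g. starting a thread while the interpreter shuts down."""
    raise FinalizationError("can't create new thread at interpreter shutdown")


def try_start(start):
    """Handler written for the pre-3.13 behavior still catches the error."""
    try:
        start()
    except RuntimeError as exc:
        return type(exc).__name__
    return "started"


outcome = try_start(simulated_late_start)
```

New code that wants to treat shutdown as a distinct case can catch the more specific class first and leave other RuntimeError instances to a broader handler.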

Added name and mode attributes for compressed and archived file-like objects in modules bz2 , lzma , tarfile and zipfile . (Contributed by Serhiy Storchaka in gh-115961 .)

Allow controlling Expat >=2.6.0 reparse deferral ( CVE-2023-52425 ) by adding five new methods:

xml.etree.ElementTree.XMLParser.flush()

xml.etree.ElementTree.XMLPullParser.flush()

xml.parsers.expat.xmlparser.GetReparseDeferralEnabled()

xml.parsers.expat.xmlparser.SetReparseDeferralEnabled()

xml.sax.expatreader.ExpatParser.flush()

(Contributed by Sebastian Pipping in gh-115623 .)

The ssl.create_default_context() API now includes ssl.VERIFY_X509_PARTIAL_CHAIN and ssl.VERIFY_X509_STRICT in its default flags.

ssl.VERIFY_X509_STRICT may reject pre- RFC 5280 or malformed certificates that the underlying OpenSSL implementation otherwise would accept. While disabling this is not recommended, you can do so using:
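The snippet was lost from this copy of the page; clearing the flag on a default context presumably looks like the following sketch, which uses only long-standing `ssl` module names.

```python
import ssl

ctx = ssl.create_default_context()

# Clear the strict X.509 verification flag on this context only.
# Not recommended unless a peer's certificate cannot be fixed.
ctx.verify_flags &= ~ssl.VERIFY_X509_STRICT

print(ssl.VERIFY_X509_STRICT in ctx.verify_flags)
```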

(Contributed by William Woodruff in gh-112389 .)

The configparser.ConfigParser now accepts unnamed sections before named ones if configured to do so. (Contributed by Pedro Sousa Lacerda in gh-66449 .)

Annotation scopes within class scopes can now contain lambdas and comprehensions. Comprehensions that are located within class scopes are not inlined into their parent scope. (Contributed by Jelle Zijlstra in gh-109118 and gh-118160.)

Classes have a new __firstlineno__ attribute, populated by the compiler, with the line number of the first line of the class definition. (Contributed by Serhiy Storchaka in gh-118465 .)

from __future__ import ... statements are now just normal relative imports if dots are present before the module name. (Contributed by Jeremiah Gabriel Pascual in gh-118216 .)

New Modules

Improved modules

argparse: Add the deprecated parameter to the add_argument() and add_parser() methods, which allows deprecating command-line options, positional arguments and subcommands. (Contributed by Serhiy Storchaka in gh-83648.)

array: Add the 'w' type code ( Py_UCS4 ) that can be used for Unicode strings. It can be used instead of the 'u' type code, which is deprecated. (Contributed by Inada Naoki in gh-80480.)

array: Add the clear() method in order to implement MutableSequence. (Contributed by Mike Zimin in gh-114894.)

The constructors of node types in the ast module are now stricter in the arguments they accept, and have more intuitive behaviour when arguments are omitted.

If an optional field on an AST node is not included as an argument when constructing an instance, the field will now be set to None. Similarly, if a list field is omitted, that field will now be set to an empty list, and if an ast.expr_context field is omitted, it defaults to Load(). (Previously, in all cases, the attribute would be missing on the newly constructed AST node instance.)

If other arguments are omitted, a DeprecationWarning is emitted. This will cause an exception in Python 3.15. Similarly, passing a keyword argument that does not map to a field on the AST node is now deprecated, and will raise an exception in Python 3.15.

These changes do not apply to user-defined subclasses of ast.AST , unless the class opts in to the new behavior by setting the attribute ast.AST._field_types .

(Contributed by Jelle Zijlstra in gh-105858 , gh-117486 , and gh-118851 .)
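A small sketch of the new constructor defaults, using getattr() so that it also runs on older interpreters, where the omitted attributes are simply absent. The node contents are arbitrary.

```python
import ast
import sys

name_node = ast.Name(id="x")          # expr_context field `ctx` omitted
call_node = ast.Call(func=name_node)  # list fields `args` and `keywords` omitted

# With the new behaviour, ctx defaults to Load() and list fields to [].
ctx = getattr(name_node, "ctx", None)
call_args = getattr(call_node, "args", None)
call_keywords = getattr(call_node, "keywords", None)

print(type(ctx).__name__, call_args, call_keywords)
```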

ast.parse() now accepts an optional argument optimize which is passed on to the compile() built-in. This makes it possible to obtain an optimized AST. (Contributed by Irit Katriel in gh-108113 .)

asyncio.loop.create_unix_server() will now automatically remove the Unix socket when the server is closed. (Contributed by Pierre Ossman in gh-111246 .)

asyncio.DatagramTransport.sendto() will now send zero-length datagrams if called with an empty bytes object. The transport flow control also now accounts for the datagram header when calculating the buffer size. (Contributed by Jamie Phan in gh-115199 .)

Add asyncio.Server.close_clients() and asyncio.Server.abort_clients() methods, which make it possible to close an asyncio server more forcefully. (Contributed by Pierre Ossman in gh-113538 .)

asyncio.as_completed() now returns an object that is both an asynchronous iterator and a plain iterator of awaitables. The awaitables yielded by asynchronous iteration include original task or future objects that were passed in, making it easier to associate results with the tasks being completed. (Contributed by Justin Arthur in gh-77714 .)
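The sketch below shows how asynchronous iteration lets results be matched to the tasks that produced them. The work() coroutine, the task names and the delays are illustrative; the plain-iterator branch is kept as a fallback for older interpreters, where the yielded awaitables are not the original tasks.

```python
import asyncio
import sys


async def work(delay, label):
    """Sleep for `delay` seconds, then return `label`."""
    await asyncio.sleep(delay)
    return label


async def collect():
    tasks = [
        asyncio.create_task(work(0.05, "slow"), name="slow"),
        asyncio.create_task(work(0.0, "fast"), name="fast"),
    ]
    finished = []
    if sys.version_info >= (3, 13):
        # Asynchronous iteration yields the original task objects.
        async for task in asyncio.as_completed(tasks):
            finished.append((task.get_name(), await task))
    else:
        # Plain iteration yields awaitables that cannot be mapped back to a task.
        for next_done in asyncio.as_completed(tasks):
            finished.append((None, await next_done))
    return finished


results = asyncio.run(collect())
print(results)
```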

When asyncio.TaskGroup.create_task() is called on an inactive asyncio.TaskGroup , the given coroutine will be closed (which prevents a RuntimeWarning about the given coroutine being never awaited). (Contributed by Arthur Tacca and Jason Zhang in gh-115957 .)

Improved behavior of asyncio.TaskGroup when an external cancellation collides with an internal cancellation. For example, when two task groups are nested and both experience an exception in a child task simultaneously, it was possible that the outer task group would hang, because its internal cancellation was swallowed by the inner task group.

In the case where a task group is cancelled externally and also must raise an ExceptionGroup , it will now call the parent task’s cancel() method. This ensures that an asyncio.CancelledError will be raised at the next await , so the cancellation is not lost.

An added benefit of these changes is that task groups now preserve the cancellation count ( asyncio.Task.cancelling() ).

In order to handle some corner cases, asyncio.Task.uncancel() may now reset the undocumented _must_cancel flag when the cancellation count reaches zero.

(Inspired by an issue reported by Arthur Tacca in gh-116720 .)

Add asyncio.Queue.shutdown() (along with asyncio.QueueShutDown ) for queue termination. (Contributed by Laurie Opperman and Yves Duprat in gh-104228 .)
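One way to use queue termination is to let a consumer drain the remaining items and stop when asyncio.QueueShutDown is raised. The sketch below assumes the default, non-immediate shutdown; the sentinel fallback is an addition for interpreters without shutdown() and is not part of the new API.

```python
import asyncio

HAS_SHUTDOWN = hasattr(asyncio.Queue, "shutdown")
# An empty tuple in an except clause matches nothing, which keeps the fallback simple.
QUEUE_SHUT_DOWN = getattr(asyncio, "QueueShutDown", ())
_STOP = object()  # hypothetical sentinel, used only on older interpreters


async def drain(queue):
    """Consume items until the queue is shut down and empty."""
    seen = []
    while True:
        try:
            item = await queue.get()
        except QUEUE_SHUT_DOWN:
            return seen
        if item is _STOP:
            return seen
        seen.append(item)
        queue.task_done()


async def main():
    queue = asyncio.Queue()
    for item in ("a", "b", "c"):
        queue.put_nowait(item)
    if HAS_SHUTDOWN:
        queue.shutdown()  # queued items can still be fetched; further puts fail
    else:
        queue.put_nowait(_STOP)
    return await drain(queue)


drained = asyncio.run(main())
print(drained)
```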

Accept a tuple of separators in asyncio.StreamReader.readuntil() , stopping when one of them is encountered. (Contributed by Bruce Merry in gh-81322 .)

Add base64.z85encode() and base64.z85decode() functions which allow encoding and decoding Z85 data. See Z85 specification for more information. (Contributed by Matan Perelman in gh-75299 .)
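A minimal round-trip sketch; the payload is arbitrary, and its length is a multiple of four bytes, as the Z85 specification expects. On interpreters without the new functions the snippet skips the encoding.

```python
import base64

payload = b"queue-task-0001!"  # 16 bytes, arbitrary sample data

if hasattr(base64, "z85encode"):
    encoded = base64.z85encode(payload)
    decoded = base64.z85decode(encoded)
else:
    encoded = decoded = None  # Z85 helpers unavailable on this interpreter

print(encoded, decoded)
```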

Add the copy.replace() function, which creates a modified copy of an object and is especially useful for immutable objects. It supports named tuples created with the factory function collections.namedtuple() , dataclass instances, various datetime objects, Signature objects, Parameter objects, code objects , and any user classes which define the __replace__() method. (Contributed by Serhiy Storchaka in gh-108751 .)
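For instance, a frozen dataclass can be copied with one field changed. The Job class is hypothetical, and dataclasses.replace() is used as a stand-in on interpreters that lack copy.replace().

```python
import copy
import dataclasses


@dataclasses.dataclass(frozen=True)
class Job:
    """Hypothetical immutable record used only for this example."""
    name: str
    retries: int = 0


# Fall back to dataclasses.replace() where copy.replace() is unavailable.
replace = getattr(copy, "replace", dataclasses.replace)

job = Job("resize-image")
retried = replace(job, retries=job.retries + 1)
print(job, retried)
```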

Add dbm.gnu.gdbm.clear() and dbm.ndbm.ndbm.clear() methods that remove all items from the database. (Contributed by Donghee Na in gh-107122 .)

Add new dbm.sqlite3 backend, and make it the default dbm backend. (Contributed by Raymond Hettinger and Erlend E. Aasland in gh-100414 .)

Change the output of dis module functions to show logical labels for jump targets and exception handlers, rather than offsets. The offsets can be added with the new -O command line option or the show_offsets parameter. (Contributed by Irit Katriel in gh-112137 .)

Color is added to the doctest output by default. This can be controlled via the new PYTHON_COLORS environment variable as well as the canonical NO_COLOR and FORCE_COLOR environment variables. See also Controlling color . (Contributed by Hugo van Kemenade in gh-117225 .)

The doctest.DocTestRunner.run() method now counts the number of skipped tests. Add doctest.DocTestRunner.skips and doctest.TestResults.skipped attributes. (Contributed by Victor Stinner in gh-108794 .)

email.utils.getaddresses() and email.utils.parseaddr() now return ('', '') 2-tuples in more situations where invalid email addresses are encountered, instead of potentially inaccurate values. Both functions gain an optional strict parameter: use strict=False to get the old behavior, which accepts malformed inputs. getattr(email.utils, 'supports_strict_parsing', False) can be used to check whether the strict parameter is available. (Contributed by Thomas Dwyer and Victor Stinner for gh-102988 to improve the CVE-2023-27043 fix.)
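The feature check described above can be wrapped in a small helper. The helper name and its lenient flag are illustrative; only the well-formed case is shown, since results for malformed input differ between versions.

```python
import email.utils

# Feature check taken from the note above.
SUPPORTS_STRICT = getattr(email.utils, "supports_strict_parsing", False)


def parse_sender(header_value, lenient=False):
    """Parse one address, passing `strict` only where it is supported."""
    if SUPPORTS_STRICT:
        return email.utils.parseaddr(header_value, strict=not lenient)
    return email.utils.parseaddr(header_value)


sender = parse_sender("Alice <alice@example.com>")
print(sender)
```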

fractions ¶

Formatting for objects of type fractions.Fraction now supports the standard format specification mini-language rules for fill, alignment, sign handling, minimum width and grouping. (Contributed by Mark Dickinson in gh-111320 .)
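A short sketch of fill, alignment and width applied to a Fraction. The fallback that formats the string form is an assumption added so the snippet also runs on interpreters that reject these specifications for Fraction.

```python
from fractions import Fraction


def format_fraction(value, spec):
    """Format a Fraction with `spec`, falling back to its string form if rejected."""
    try:
        return format(value, spec)
    except (TypeError, ValueError):
        # Older interpreters do not accept this spec for Fraction objects.
        return format(str(value), spec)


padded = format_fraction(Fraction(3, 7), "*>8")  # fill '*', right-aligned, width 8
left = format_fraction(Fraction(3, 7), "<6")     # left-aligned, width 6
print(repr(padded), repr(left))
```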

The cyclic garbage collector is now incremental, which changes the meanings of the results of gc.get_threshold() and gc.set_threshold() as well as gc.get_count() and gc.get_stats() .

gc.get_threshold() returns a three-item tuple for backwards compatibility. The first value is the threshold for young collections, as before; the second value determines the rate at which the old collection is scanned (the default is 10, and higher values mean that the old collection is scanned more slowly). The third value is meaningless and is always zero.

gc.set_threshold() ignores any items after the second.

gc.get_count() and gc.get_stats() return the same format of results as before. The only difference is that instead of the results referring to the young, aging and old generations, the results refer to the young generation and the aging and collecting spaces of the old generation.

In summary, code that attempted to manipulate the behavior of the cycle GC may not work exactly as intended, but it is very unlikely to be harmful. All other code will work just fine.

Add glob.translate() function that converts a path specification with shell-style wildcards to a regular expression. (Contributed by Barney Gale in gh-72904 .)
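As an illustration, the translated pattern can be compiled once and reused to filter names without touching the file system. The fnmatch.translate() fallback is an assumption for older interpreters and has looser semantics, since its wildcard also matches path separators.

```python
import fnmatch
import glob
import re

translate = getattr(glob, "translate", None)
if translate is not None:
    # With glob.translate(), '*' does not cross path separators.
    pattern = translate("*.py")
else:
    pattern = fnmatch.translate("*.py")

matcher = re.compile(pattern)
names = ["main.py", "notes.txt", "setup.py"]
python_files = [name for name in names if matcher.match(name)]
print(python_files)
```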

importlib ¶

Previously deprecated importlib.resources functions are un-deprecated:

- is_resource()

- open_binary()

- open_text()

- path()

- read_binary()

- read_text()

All now allow for a directory (or tree) of resources, using multiple positional arguments.

For text-reading functions, the encoding and errors must now be given as keyword arguments.

The contents() function remains deprecated in favor of the full-featured Traversable API. However, there is now no plan to remove it.

(Contributed by Petr Viktorin in gh-106532 .)

The io.IOBase finalizer now logs errors raised by the close() method with sys.unraisablehook . Previously, errors were ignored silently by default, and only logged in Python Development Mode or when using a Python build in debug mode . (Contributed by Victor Stinner in gh-62948 .)

ipaddress ¶

Add the ipaddress.IPv4Address.ipv6_mapped property, which returns the IPv4-mapped IPv6 address. (Contributed by Charles Machalow in gh-109466 .)
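A minimal sketch of the property in use. The helper name is illustrative, and the manual construction from the ::ffff: prefix is a fallback added for interpreters without ipv6_mapped.

```python
import ipaddress


def to_ipv6_mapped(address):
    """Return the IPv4-mapped IPv6 address for an IPv4 address string."""
    v4 = ipaddress.IPv4Address(address)
    mapped = getattr(v4, "ipv6_mapped", None)
    if mapped is None:
        # Fallback: build the ::ffff:a.b.c.d form by hand.
        mapped = ipaddress.IPv6Address("::ffff:" + str(v4))
    return mapped


mapped = to_ipv6_mapped("192.0.2.10")
print(mapped, mapped.ipv4_mapped)
```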

Fix is_global and is_private behavior in IPv4Address , IPv6Address , IPv4Network and IPv6Network .

itertools ¶

Added a strict option to itertools.batched() . This raises a ValueError if the final batch is shorter than the specified batch size. (Contributed by Raymond Hettinger in gh-113202 .)
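The sketch below shows the strict behaviour, with a hand-written equivalent for interpreters where the option is unavailable. The helper name and the fallback's error message are illustrative.

```python
import itertools
import sys


def strict_batches(items, size):
    """Split `items` into tuples of exactly `size`, raising ValueError otherwise."""
    if sys.version_info >= (3, 13):
        return list(itertools.batched(items, size, strict=True))
    # Fallback with the same observable behaviour for finite inputs.
    items = list(items)
    if len(items) % size:
        raise ValueError("incomplete final batch")
    return [tuple(items[i:i + size]) for i in range(0, len(items), size)]


even = strict_batches(range(6), 3)
try:
    strict_batches(range(7), 3)
    uneven_rejected = False
except ValueError:
    uneven_rejected = True

print(even, uneven_rejected)
```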

Add the allow_code parameter to the marshal module functions. Passing allow_code=False prevents serialization and de-serialization of code objects, which are incompatible between Python versions. (Contributed by Serhiy Storchaka in gh-113626 .)

A new function math.fma() for fused multiply-add operations has been added. This function computes x * y + z with only a single rounding step, and so avoids any intermediate loss of precision. It wraps the fma() function provided by C99, and follows the specification of the IEEE 754 “fusedMultiplyAdd” operation for special cases. (Contributed by Mark Dickinson and Victor Stinner in gh-73468 .)
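The effect of the single rounding can be seen with operands whose exact product does not fit in a double. The values below are chosen for illustration, and the Fraction-based fallback (exact arithmetic, rounded once on conversion) is an assumption for interpreters without the new function.

```python
import math
from fractions import Fraction


def fused_multiply_add(x, y, z):
    """Compute x * y + z with a single rounding."""
    fma = getattr(math, "fma", None)
    if fma is not None:
        return fma(x, y, z)
    # Fallback: exact rational arithmetic, rounded once when converted to float.
    return float(Fraction(x) * Fraction(y) + Fraction(z))


x = y = 1.0 + 2.0 ** -30
z = -1.0

separate = x * y + z                 # the product is rounded before the addition
fused = fused_multiply_add(x, y, z)  # keeps the low-order 2**-60 term
print(separate, fused)
```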

mimetypes ¶

Add the guess_file_type() function, which works with file paths. Passing a file path instead of a URL to guess_type() is soft deprecated . (Contributed by Serhiy Storchaka in gh-66543 .)

The mmap.mmap class now has a seekable() method that can be used when a seekable file-like object is required. The seek() method now returns the new absolute position. (Contributed by Donghee Na and Sylvie Liberman in gh-111835 .)

mmap.mmap now has a trackfd parameter on Unix; if it is False , the file descriptor specified by fileno will not be duplicated. (Contributed by Zackery Spytz and Petr Viktorin in gh-78502 .)

mmap.mmap is now protected from crashing on Windows when the mapped memory is inaccessible due to file system errors or access violations. (Contributed by Jannis Weigend in gh-118209 .)

Move opcode.ENABLE_SPECIALIZATION to _opcode.ENABLE_SPECIALIZATION . This field was added in 3.12, was never documented, and is not intended for external use. (Contributed by Irit Katriel in gh-105481 .)

Removed opcode.is_pseudo , opcode.MIN_PSEUDO_OPCODE and opcode.MAX_PSEUDO_OPCODE , which were added in 3.12, were never documented or exposed through dis , and were not intended to be used externally.

Add os.process_cpu_count() function to get the number of logical CPUs usable by the calling thread of the current process. (Contributed by Victor Stinner in gh-109649 .)

Add a low-level interface to Linux’s timer notification file descriptors via os.timerfd_create() , os.timerfd_settime() , os.timerfd_settime_ns() , os.timerfd_gettime() , os.timerfd_gettime_ns() , os.TFD_NONBLOCK , os.TFD_CLOEXEC , os.TFD_TIMER_ABSTIME , and os.TFD_TIMER_CANCEL_ON_SET . (Contributed by Masaru Tsuchiyama in gh-108277 .)

os.cpu_count() and os.process_cpu_count() can be overridden through the new environment variable PYTHON_CPU_COUNT or the new command-line option -X cpu_count . This option is useful for users who need to limit the CPU resources of a container system without having to modify the application code or the container. (Contributed by Donghee Na in gh-109595 .)
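A common use is sizing a worker pool. The sketch below falls back to os.cpu_count() where os.process_cpu_count() is unavailable; the variable names are illustrative.

```python
import os

# Prefer the process-specific count; fall back to the system-wide one.
process_cpu_count = getattr(os, "process_cpu_count", os.cpu_count)

usable_cpus = process_cpu_count()      # may be None if it cannot be determined
worker_count = max(1, usable_cpus or 1)
print(usable_cpus, worker_count)
```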

Add support for os.lchmod() and the follow_symlinks argument in os.chmod() on Windows. Note that the default value of follow_symlinks in os.lchmod() is False on Windows. (Contributed by Serhiy Storchaka in gh-59616 .)

Add support for os.fchmod() and for passing a file descriptor to os.chmod() on Windows. (Contributed by Serhiy Storchaka in gh-113191 .)

os.posix_spawn() now accepts env=None , which makes the newly spawned process use the current process environment. (Contributed by Jakub Kulik in gh-113119 .)

os.posix_spawn() gains an os.POSIX_SPAWN_CLOSEFROM attribute for use in file_actions= on platforms that support posix_spawn_file_actions_addclosefrom_np() . (Contributed by Jakub Kulik in gh-113117 .)

os.mkdir() and os.makedirs() on Windows now support passing a mode value of 0o700 to apply access control to the new directory. This implicitly affects tempfile.mkdtemp() and is a mitigation for CVE-2024-4030 . Other values for mode continue to be ignored. (Contributed by Steve Dower in gh-118486 .)

Add os.path.isreserved() to check if a path is reserved on the current system. This function is only available on Windows. (Contributed by Barney Gale in gh-88569 .)

On Windows, os.path.isabs() no longer considers paths starting with exactly one (back)slash to be absolute. (Contributed by Barney Gale and Jon Foster in gh-44626 .)

Add support for the dir_fd and follow_symlinks keyword arguments in shutil.chown() . (Contributed by Berker Peksag and Tahia K in gh-62308 .)

Add pathlib.UnsupportedOperation , which is raised instead of NotImplementedError when a path operation isn’t supported. (Contributed by Barney Gale in gh-89812 .)

Add pathlib.Path.from_uri() , a new constructor to create a pathlib.Path object from a ‘file’ URI ( file:// ). (Contributed by Barney Gale in gh-107465 .)

Add pathlib.PurePath.full_match() for matching paths with shell-style wildcards, including the recursive wildcard “ ** ”. (Contributed by Barney Gale in gh-73435 .)
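The difference from the older match() method can be sketched as follows: full_match() must match the whole path, whereas match() with a relative pattern matches from the right. The sample path is illustrative, and the full_match() results are None on interpreters without the method.

```python
from pathlib import PurePosixPath

path = PurePosixPath("src/pkg/mod.py")

# match() with a relative pattern only needs to match the tail of the path.
tail_match = path.match("*.py")

if hasattr(path, "full_match"):
    recursive_match = path.full_match("src/**/*.py")  # '**' spans directories
    whole_match = path.full_match("*.py")             # pattern must cover the whole path
else:
    recursive_match = whole_match = None

print(tail_match, recursive_match, whole_match)
```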