Usability Testing: Everything You Need to Know (Methods, Tools, and Examples)

As you crack into the world of UX design, there’s one thing you absolutely must understand and learn to practice like a pro: usability testing.

Precisely because it’s such a critical skill to master, it can be a lot to wrap your head around. What is it exactly, and how do you do it? How is it different from user testing? What are some actual methods that you can employ?

In this guide, we’ll give you everything you need to know about usability testing—the what, the why, and the how.

Here’s what we’ll cover:

- What is usability testing and why does it matter?

- Usability testing vs. user testing

- Formative vs. summative usability testing

- Attitudinal vs. behavioral research

- Five essential usability testing methods: performance testing, card sorting, tree testing, 5-second test, and eye tracking

- How to learn more about usability testing

Ready? Let’s dive in.

1. What is usability testing and why does it matter?

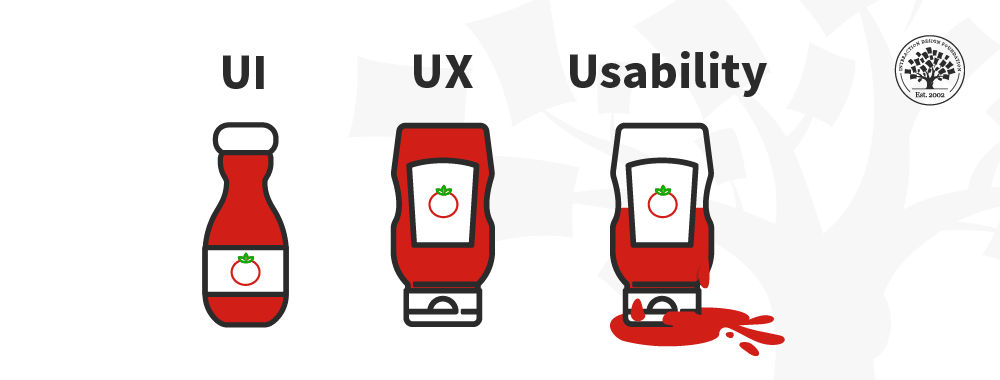

Simply put, usability testing is the process of discovering ways to improve your product by observing users as they engage with the product itself (or a prototype of the product). It’s a UX research method specifically trained on, you guessed it, the usability of your products. And what is usability? Usability is a measure of how easily users can accomplish a given task with your product.

Usability testing, when executed well, uncovers pain points in the user journey and highlights barriers to good usability. It will also help you learn about your users’ behaviors and preferences as these relate to your product, and discover opportunities to design for needs that you may have overlooked.

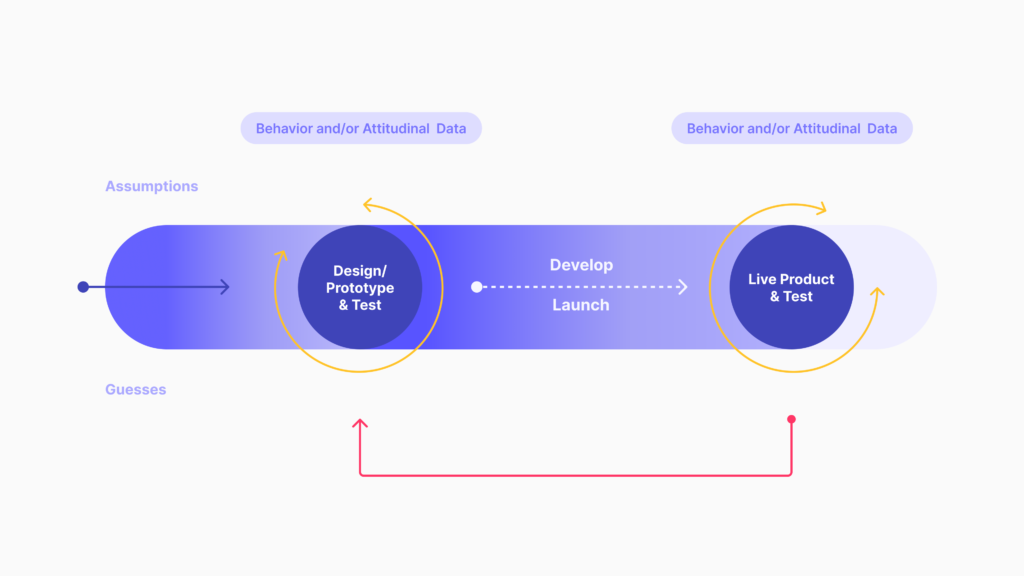

You can conduct usability testing at any point in the design process once you’ve turned initial ideas into design solutions, but the earlier the better. Test early and test often! You can conduct some kind of usability testing with low- and high-fidelity prototypes alike, and testing should continue after you’ve got a live, out-in-the-world product.

2. Usability testing vs. user testing

Though they sound similar and share a somewhat similar end goal, usability testing and user testing are two different things. We’ll look at the differences in a moment, but first, here’s what they have in common:

- Both share the end goal of creating a design solution to meet real user needs

- Both take the time to observe and listen to users to learn what needs and pain points they experience

- Both look for feasible ways of meeting those needs or addressing those pain points

User testing essentially asks if this particular kind of user would want this particular kind of product—or what kind of product would benefit them in the first place. It is entirely user-focused.

Usability testing, on the other hand, is more product-focused and looks at users’ needs in the context of an existing product (even if that product is still in prototype stages of development). Usability testing takes your existing product and places it in the hands of your users (or potential users) to see how the product actually works for them—how they’re able to accomplish what they need to do with the product.

3. Formative vs. summative usability testing

Alright! Now that you understand what usability testing is, and what it isn’t, let’s get into the various types of usability testing out there.

There are two broad categories of usability testing that are important to understand: formative and summative. These have to do with when you conduct the testing and what your broad objectives are, that is, the overarching impact the testing should have on your product.

Formative usability testing:

- Is a qualitative research process

- Happens earlier in the design, development, or iteration process

- Seeks to understand what about the product needs to be improved

- Results in qualitative findings and ideation that you can incorporate into prototypes and wireframes

Summative usability testing:

- Is a research process that’s more quantitative in nature

- Happens later in the design, development, or iteration process

- Seeks to understand whether the solutions you are implementing (or have implemented) are effective

- Results in quantitative findings that can help determine broad areas for improvement or specific areas to fine-tune (this can go hand in hand with competitive analysis)

4. Attitudinal vs. behavioral research

Alongside the timing and purpose of the testing (formative vs. summative), it’s important to understand two broad categories that your research (both your objectives and your findings) will fall into: behavioral and attitudinal.

Attitudinal research is all about what people say: what they think and communicate about your product and how it works. Behavioral research focuses on what people do: how they actually interact with your product and the feelings that surface as a result.

What people say and what people do are often two very different things. These two categories help us define those differences, choose our testing methods more intentionally, and categorize our findings more effectively.

5. Five essential usability testing methods

Some usability testing methods are geared more towards uncovering either behavioral or attitudinal findings; but many have the potential to result in both.

Of the methods you’ll learn about in this section, performance testing has the greatest potential for targeting both—and will perhaps require the greatest amount of thoughtfulness regarding how you approach it.

Naturally, then, we’ll spend a little more time on that method than on the other four, though that in no way diminishes their usefulness! Here are the methods we’ll cover: performance testing, card sorting, tree testing, the 5-second test, and eye tracking.

These are merely five common and/or interesting methods—it is not a comprehensive list of every method you can use to get inside the hearts and minds of your users. But it’s a place to start. So here we go!

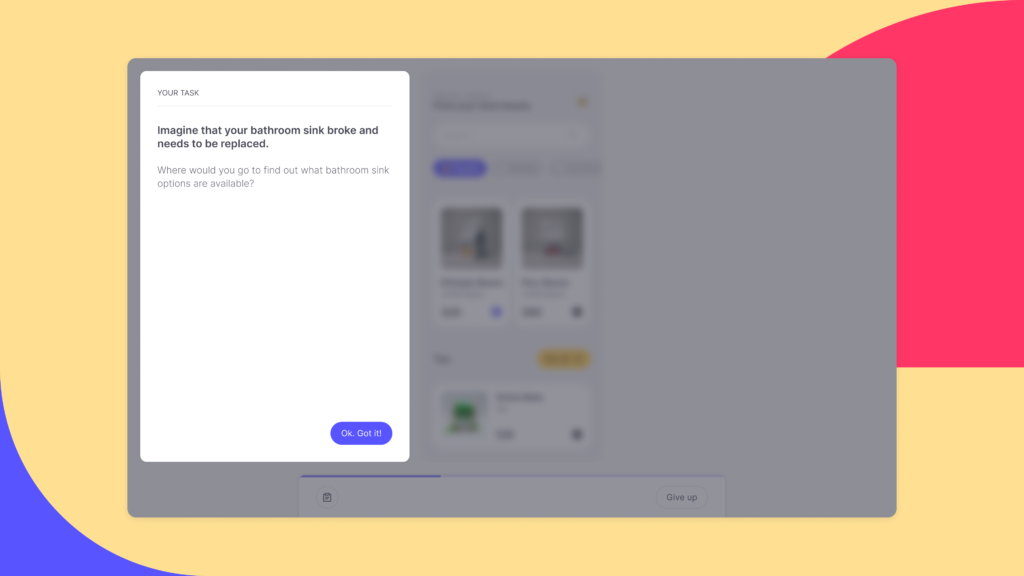

Performance testing

In performance testing, you sit down with a user and give them a task (or set of tasks) to complete with the product.

This is often a combination of methods and approaches that will allow you to interview users, see how they use your product, and find out how they feel about the experience afterward. Depending on your approach, you’ll observe them, take notes, and/or ask usability testing questions before, after, or along the way.

Performance testing is by far the most talked-about form of usability testing, especially as it’s often combined with other methods. Performance testing is what most commonly comes to mind in discussions of usability testing as a whole, and it’s what many UX design certification programs focus on, because it’s so broadly useful and adaptable.

While there’s no one right way to conduct performance testing, there are a number of approaches and combinations of methods you can use, and you’ll want to be intentional about it.

It’s a method that you can adapt to your objectives—so make sure you do! Ask yourself what kind of attitudinal or behavioral findings you’re really looking for, how much time you’ll have for each testing session, and what methods or approaches will help you reach your objectives most efficiently.

Performance testing is often combined with user interviews.

Even if you choose not to combine performance testing with user interviews, good performance testing will still involve some degree of questioning and moderating.

Performance testing typically yields a large volume of qualitative insights, so plan carefully before you jump in.

Maximize the usefulness of your research by being thoughtful about the task(s) you assign and what approach you take to moderating the sessions. As your test participants go about the task(s) you assign, you’ll watch, take notes, and ask questions either during or after the test—depending on your approach.

Four approaches to performance testing

There are four ways you can go about moderating a performance test, and it’s worth understanding and choosing your approach (or combination of approaches) carefully and intentionally. As you choose, take time to consider:

- How much guidance the participant will actually need

- How intently participants will need to focus

- How guidance or prompting from you might affect results or observations

With these things in mind, let’s look at the four approaches.

Concurrent Think Aloud (CTA)

With this approach, you’ll encourage participants to externalize their thought process—to think out loud. Your job during the session will be to keep them talking through what they’re looking for, what they’re doing and why, and what they think about the results of their actions.

A CTA approach often uncovers a lot of nuanced details in the user journey, but if your objectives include anything related to the accuracy or time for task completion, you might be better off with a Retrospective Think Aloud.

Retrospective Think Aloud (RTA)

Here, you’ll allow participants to complete their tasks and recount the journey afterward. They can complete tasks in a more realistic time frame and degree of accuracy, though there will certainly be nuanced details of participants’ thoughts and feelings you’ll miss out on.

Concurrent Probing (CP)

With Concurrent Probing, you ask participants about their experience as they’re having it. You prompt them for details on their expectations, reasons for particular actions, and feelings about the results.

This approach can be distracting, but if you combine it with CTA, you can let participants complete the tasks and prompt them only when you see a particularly interesting aspect of their experience that you’d like to know more about. Again, if accuracy and timing are critical objectives, you might be better off with Retrospective Probing.

Retrospective Probing (RP)

If a participant says or does something interesting as they complete their task(s), you can make a note of it and ask them about it later; this is Retrospective Probing. This approach is very often combined with CTA or RTA so that you capture those nuanced details of their experience without distracting them from actually completing the task.

Whew! There’s your quick overview of performance testing. To learn more about it, read to the final section of this article: How to learn more about usability testing.

With this under our belts, let’s move on to our other four essential usability testing methods.

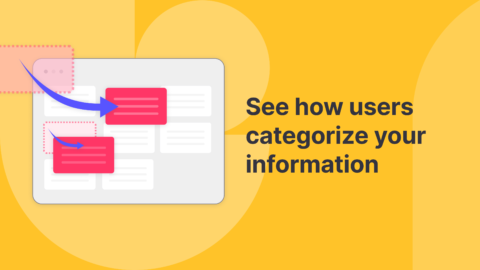

Card sorting

Card sorting is a way of testing the usability of your information architecture. You give users cards labeled with the names and short descriptions of the main items/sections of the product, then ask them to sort the cards into piles according to which items seem to go best together. In open card sorting, participants create and name the groups themselves; in closed card sorting, they sort the cards into categories you’ve defined in advance. You can go even further by asking them to combine their piles into larger groups and to name those groups.

Rather than structuring your site or app according to your understanding of the product, card sorting allows the information architecture to mirror the way your users are thinking.

This is a great technique to employ very early in the design process as it is inexpensive and will save the time and expense of making structural adjustments later in the process. And there’s no technology required! If you want to conduct it remotely, though, there are tools like OptimalSort that do this effectively.
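One common way to analyze open card sort results is a co-occurrence matrix, which records, for every pair of cards, the share of participants who put that pair in the same group. This is a general analysis technique; the article does not prescribe it. The Python sketch below assumes each participant’s sort is recorded as a dictionary that maps group names to card labels. The card names and results are hypothetical.

```python
from collections import defaultdict
from itertools import combinations


def co_occurrence(sorts):
    """Share of participants who placed each pair of cards in the same group.

    `sorts` has one entry per participant, mapping the participant's own
    group name to the list of cards they put in that group.
    """
    counts = defaultdict(int)
    for groups in sorts:
        for cards in groups.values():
            # Sorting makes each pair key order-independent, e.g. ("Cart", "Orders")
            for pair in combinations(sorted(cards), 2):
                counts[pair] += 1
    return {pair: count / len(sorts) for pair, count in counts.items()}


# Hypothetical results from three participants in an open card sort
sorts = [
    {"Shopping": ["Cart", "Wishlist"], "Account": ["Orders", "Settings"]},
    {"My stuff": ["Cart", "Wishlist", "Orders"], "Profile": ["Settings"]},
    {"Buy": ["Cart"], "Me": ["Wishlist", "Orders", "Settings"]},
]

# Print the most frequently grouped pairs first
for pair, share in sorted(co_occurrence(sorts).items(), key=lambda kv: -kv[1]):
    print(pair, round(share, 2))
```

Pairs that most participants group together are good candidates to sit under the same section of your navigation.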


Tree testing

Tree testing is a great follow-up to card sorting, but it can be conducted on its own as well. In tree testing, you create a visual information hierarchy (or “tree”) and ask users to complete a task using the tree. For example, you might ask users, “You want to accomplish X with this product. Where do you go to do that?” Then you observe how easily users are able to find what they’re looking for.

This is another great technique to employ early in the design process. It can be conducted with paper prototypes or spreadsheets, but you can also use tools such as TreeJack to accomplish this digitally and remotely.
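To make “how easily users are able to find what they’re looking for” measurable, you can summarize each task by two numbers. The first is the success rate, the share of participants who ended on a correct node. The second is the direct success rate, the share who reached it without backtracking. Tools such as Treejack report similar measures. The simplified definitions, the example tree and the paths in this Python sketch are all assumptions for illustration.

```python
# Hypothetical navigation tree: each node maps to its parent (the root has None)
PARENT = {
    "Home": None,
    "Shop": "Home",
    "Account": "Home",
    "Orders": "Account",
    "Returns": "Account",
    "Help": "Home",
    "Shipping": "Help",
}


def is_direct(path):
    """True if every step moves from a node down to one of its children."""
    return all(PARENT[child] == parent for parent, child in zip(path, path[1:]))


def summarize(paths, correct):
    """Success rate and direct success rate for one tree-testing task."""
    successes = [p for p in paths if p[-1] in correct]
    direct = [p for p in successes if is_direct(p)]
    return {
        "success_rate": len(successes) / len(paths),
        "direct_success_rate": len(direct) / len(paths),
    }


# Task: "You want to send back an item you bought. Where do you go?"
paths = [
    ["Home", "Account", "Returns"],                  # direct success
    ["Home", "Help", "Home", "Account", "Returns"],  # success after backtracking
    ["Home", "Help", "Shipping"],                    # wrong destination
    ["Home", "Account", "Returns"],                  # direct success
]
print(summarize(paths, correct={"Returns"}))
```

A large gap between the two rates suggests that people eventually find the item but that the labels on the way there mislead them.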

5-second test

In the 5-second test, you expose your users to one portion of your product (one screen, probably the top half of it) for five seconds and then interview them to see what they took away regarding:

- The product/page’s purpose and main features or elements

- The intended audience and trustworthiness of the brand

- Their impression of the usability and design of the product

You can conduct this kind of testing in person rather simply, or remotely with tools like UsabilityHub.

Eye tracking

This one may seem somewhat new, but it’s been around for a while, though the tools and technology around it have evolved. Eye tracking on its own isn’t enough to determine usability, but it’s a great complement to your other usability testing measures.

In eye tracking, you literally track where users’ eyes land on the screen you’re designing. This matters because you want to make sure that the elements users’ eyes are drawn to are the ones that communicate the most important information. Eye tracking is difficult to conduct in any kind of analog fashion and typically calls for dedicated eye-tracking hardware or software. Tools such as CrazyEgg and Hotjar don’t track eyes directly. Their heatmaps of clicks, mouse movement, and scrolling are a rough proxy, and an accessible place to start.
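A common way to check whether users’ eyes are drawn to the right elements is to define areas of interest (AOIs) on the screen and measure what share of fixations lands in each one. The Python sketch below assumes your eye-tracking software can export fixations as (x, y) pixel coordinates. The layout, the AOI names and the data are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AreaOfInterest:
    """A rectangular region of the screen, in pixels from the top-left corner."""
    name: str
    x: int
    y: int
    width: int
    height: int

    def contains(self, px, py):
        return self.x <= px < self.x + self.width and self.y <= py < self.y + self.height


def fixation_shares(fixations, areas):
    """Fraction of all fixations that land in each area of interest.

    Fixations outside every area still count toward the total, so the
    shares can add up to less than 1.
    """
    counts = {area.name: 0 for area in areas}
    for px, py in fixations:
        for area in areas:
            if area.contains(px, py):
                counts[area.name] += 1
                break  # count each fixation once, in the first matching area
    total = len(fixations)
    return {name: (count / total if total else 0.0) for name, count in counts.items()}


# Hypothetical layout and fixation points for one screen
areas = [
    AreaOfInterest("headline", 0, 0, 1280, 120),
    AreaOfInterest("sign-up button", 540, 400, 200, 60),
]
fixations = [(100, 50), (600, 420), (620, 430), (900, 700)]
print(fixation_shares(fixations, areas))
```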

6. How to learn more about usability testing

There you have it: your 15-minute overview of the what, why, and how of usability testing. But don’t stop here! Usability testing and UX research as a whole have a deeply humanizing impact on the design process. It’s a fascinating field to discover, and this kind of work has the power to keep companies, design teams, and even the lone designer accountable to what matters most: the needs of the end user.

If you’d like to learn more about usability testing and UX research, take the free UX Research for Beginners Course with CareerFoundry. This course is jam-packed with information that will give you a deeper understanding of the value of this kind of testing as well as a number of other UX research methods.

You can also enroll in a UX design course or bootcamp to get a comprehensive understanding of the entire UX design process (of which usability testing and UX research are integral parts). For guidance on the best programs, check out our list of the 10 best UX design certification programs. And if you’ve already started your learning process, and you’re thinking about the job hunt, here are the top 5 UX research interview questions to be ready for.

For further reading about usability testing and UX research, check out these other articles:

- How to conduct usability testing: a step-by-step guide

- What does a UX researcher actually do? The ultimate career guide

- 11 usability heuristics every designer should know

- How to conduct a UX audit


The ultimate guide to usability testing for UX in 2024

In this guide, we’ll show you exactly what usability testing is, why it matters, and how you can conduct your usability testing for more effective product design.


When designing a new product or feature, you want to make sure that it’s usable and user-friendly before you get it developed. And, even once a product has launched, it’s important to continuously evaluate and improve the user experience it provides.

This can be done through usability testing: putting your product or feature in front of real people and observing how easy (or difficult) it is for them to use it.

You can’t build great products without usability testing.

What is usability testing?

Usability testing is a user-centred research method aimed at evaluating the usability of digital products.

It involves watching users as they complete specific tasks. This enables researchers and designers to see whether the product is easy to use, whether users enjoy it, and what usability issues might exist with the product. UX designers can then update and improve the product as necessary.

For example: imagine you’re testing an e-commerce app’s checkout process. You observe several users as they attempt to purchase something, and in the process, uncover issues like unclear form fields and confusing payment options. Based on these observations, you can improve the checkout process by simplifying the language used in the forms and presenting the payment options more clearly.

Why is usability testing important in UX?

Usability testing enables you to identify design and usability flaws you might otherwise miss. Most importantly, it provides you with first-hand insights from your target users—the people you’re designing the product for. And that’s invaluable if you want to create an effective and enjoyable user experience!

Through usability testing, you can:

- Validate ideas: Usability testing can start as soon as UX designers have a prototype or rough draft of the product. This kind of testing can help UX designers validate whether their ideas are working before they’ve gone too far with them (i.e. before spending time and money developing and launching them!)

- Identify usability problems: Users can find usability problems that UX designers have overlooked, from navigation errors to difficulty finding something on a particular page. By having users identify these problems, UX designers can adjust and deliver a better product.

- Understand user behaviour: Observing the behaviour of the product’s target users can provide insight into how they will navigate and interact with your product. Even if they don’t find any usability errors on a given test, understanding their users will help UX designers provide an optimal user experience.

- Reduce costs and save time: Usability testing delivers significant cost savings by resolving potential issues before going to market. Allowing actual users to inform and guide the development process can prevent costly failures and shorten the design process.


When should you conduct usability testing for UX?

Usability testing is a flexible testing method, and therefore can be used at any point in the design process. You can conduct usability tests on early prototypes, later in the design process on live apps or websites, or during redesigns.

While conducting usability tests can be expensive, it’s much more cost-effective than the alternative: spending time and money getting the product or feature developed, only to find that it doesn’t work as intended and needs to be redesigned and rebuilt.

Usability testing should feature continuously throughout the product design process. Run usability tests to ensure that your early ideas and designs are indeed usable and user-friendly, and continue to run usability tests even after the product is launched. This will help you to improve the product—and the user experience it provides—on an ongoing basis.

What are the different types of usability testing?

There are several types of usability testing to choose from, and they each have benefits of their own.

Qualitative vs. quantitative usability testing

Qualitative testing focuses on the question of “Why?” Why do people like or dislike something? Why do they find something easy or difficult to use?

Quantitative testing focuses on the question of “How many?” with a focus on hard numbers and statistics, such as the time it takes a user to perform a particular task or press a particular button.

Both quantitative and qualitative usability testing have their benefits. Quantitative usability testing gives us objective, measurable data which is easier to analyse. Qualitative usability testing allows us to dive deeper into the users’ needs, expectations, and subjective experience in relation to the product. Most designers and researchers will conduct a mixture of both where possible.

In-person vs. remote usability testing

In-person testing takes place in the same room with the user and the researcher face-to-face. This kind of testing can be more time-consuming and expensive, but there’s nothing like seeing a person’s subtle body language as they navigate your website.

Remote testing takes place virtually, usually over the internet. As a result, testing can take place anywhere, cutting across geographical boundaries.

Moderated vs. unmoderated usability testing

In addition, usability testing can be moderated or unmoderated. In moderated testing, a researcher guides the session in real time. When it’s run remotely, moderated testing mimics the circumstances of in-person testing: the researcher can speak to the participant and observe their screen over the internet while they complete the test.

Unmoderated testing, on the other hand, allows the user to conduct the usability test on their own time. The user follows a list of tasks and the company is sent a recording of the test at the end of the session. This is a popular format because it requires the least amount of time.

Traditional vs. guerrilla usability testing

In traditional usability testing, participants are recruited in advance and schedule a time to come in and take the test.

Guerrilla, or hallway, usability testing is different. Researchers set up a table in a high-traffic public area and ask random people to participate in their test right then. This lets researchers get first-time feedback on their product from people who have no prior experience with these kinds of tests.


Common usability testing methods and techniques

Some of the most popular usability testing methods and techniques used by UX designers include:

Think-aloud protocol

To implement this method, ask users to verbalise their actions as they navigate through the test. As they describe their reasoning and issues, researchers gain insights into their usability struggles.

Heatmaps and analytics

Heatmaps provide visual representations of high engagement areas and show which areas users pay less attention to. Combining heatmaps with analytics can help you understand users’ behaviour patterns and optimise your website or app.
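At its core, a heatmap counts interactions per region of the page. Tools such as Hotjar do this for you and add the colour rendering. The Python sketch below only illustrates the underlying aggregation. It assumes click positions are available as integer (x, y) pixel coordinates and uses a hypothetical 100-pixel grid.

```python
def heatmap_grid(points, page_width, page_height, cell=100):
    """Count interaction points (e.g. clicks) per grid cell of `cell` x `cell` pixels."""
    cols = -(-page_width // cell)   # ceiling division, so partial cells are kept
    rows = -(-page_height // cell)
    grid = [[0] * cols for _ in range(rows)]
    for x, y in points:
        if 0 <= x < page_width and 0 <= y < page_height:  # ignore off-page points
            grid[y // cell][x // cell] += 1
    return grid


# Hypothetical click positions on a 300 x 200 pixel page
clicks = [(30, 40), (60, 90), (250, 40), (30, 180)]
grid = heatmap_grid(clicks, page_width=300, page_height=200)
for row in grid:
    print(row)
```

Cells with high counts are the "hot" areas. Comparing them with where your most important content sits is the kind of insight the heatmap gives you.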

Learn more: The 7 most important UX KPIs (and how to measure them).

How to conduct usability testing for UX: A step-by-step framework

There are six steps to follow to run a usability test:

1. Define the goals of your study

Determine clear goals for your usability test—and decide how you’ll measure them. For example, if you have an e-commerce website, you might want to test how easy it is for users to purchase a product and go through the checkout process. You might measure this by evaluating how long it takes the user to complete this task (time on task) or how many errors they make (error rate).
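Once sessions are done, the measures mentioned above are simple to compute. The Python sketch below uses hypothetical results for a checkout task. It makes two assumptions. First, time on task is taken from successful attempts only, which is a common but not universal convention. Second, "error rate" is treated as mean errors per attempt; some teams instead divide errors by the number of error opportunities.

```python
from statistics import mean, median

# Hypothetical results for the task "buy a product and complete checkout"
sessions = [
    {"participant": "P1", "seconds": 95, "errors": 1, "completed": True},
    {"participant": "P2", "seconds": 140, "errors": 3, "completed": True},
    {"participant": "P3", "seconds": 210, "errors": 4, "completed": False},
    {"participant": "P4", "seconds": 80, "errors": 0, "completed": True},
    {"participant": "P5", "seconds": 120, "errors": 2, "completed": True},
]

completed = [s for s in sessions if s["completed"]]

# Time on task, here only for participants who finished the task
time_on_task = [s["seconds"] for s in completed]
print("median time on task (s):", median(time_on_task))

# Error rate, here defined as mean errors per attempt across all participants
print("mean errors per attempt:", mean(s["errors"] for s in sessions))

# Completion rate: share of participants who finished the task
print("completion rate:", len(completed) / len(sessions))
```

The median is used for time on task because a single very slow session can skew the mean.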

2. Write tasks and a script

Usability testing usually involves asking your users (or test participants) to complete a particular task.

Writing tasks for a usability test is tricky business. You need to avoid bias in your wording and tone of voice so you don’t influence your users. You also need to keep asking yourself: what should the user be able to do? The answer will help you prioritise testing the most important functionality.

For example, say you want to test the checkout process for a clothing app. You need the task to be realistic and actionable, and you don’t want to give away the solution. So a good task would be: “You are looking for a dress for a wedding. Choose the one you like, select your size, and order it.”

The results will provide you with a wealth of information about the buyer’s journey from the troubles they may have encountered to the number of people who actually managed to make a purchase.

3. Recruit participants

There are a lot of ways to recruit people to take part in your study. You can recruit people using email newsletters or via social media for free, or you can use a paid service that will find participants for you. And remember: for a qualitative study, five users per round of testing is often enough to uncover most usability problems.
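The five-user guideline is usually traced to a simple model from Nielsen and Landauer. If each participant has probability p of encountering a given problem, then n participants are expected to uncover a share of 1 - (1 - p)^n of the problems. The Python sketch below assumes their commonly cited average of p = 0.31, which your own product may not match. Under that assumption, five users uncover about 84% of problems, and each additional user adds less than the one before.

```python
def share_of_problems_found(users, p=0.31):
    """Expected share of usability problems found by `users` participants.

    Model: 1 - (1 - p) ** users, where p is the probability that one
    participant encounters a given problem (0.31 is an assumed average).
    """
    return 1 - (1 - p) ** users


for n in (1, 3, 5, 10, 15):
    print(n, round(share_of_problems_found(n), 2))
```

The diminishing returns are the reason many researchers prefer several small rounds of testing over one large one.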

4. Conduct your usability test

You could run a moderated study, either in person or remotely, which means you will have to be present and guide the user, asking follow-up questions as required. Or you could run an unmoderated study and trust that your testing script will do the job with participants. Either way, your participants should work through your prepared scenarios and complete your tasks, leaving you with a ton of data to analyse.

5. Analyse results

Use all the data you gathered to analyse what users did right and wrong during the test. Make sure to pay attention to both what the user did and how it made them feel. Analysis should reveal patterns in the problems users encountered and help you make recommendations to the UX team.
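A simple first step in finding patterns is to tag each problem you observed and count how many participants ran into it. The Python sketch below assumes you have already coded your notes into short issue labels for each participant. The labels and data are hypothetical.

```python
from collections import Counter

# Hypothetical issue tags noted for each participant during analysis
observations = {
    "P1": {"unclear size guide", "missed promo code field"},
    "P2": {"unclear size guide"},
    "P3": {"unclear size guide", "payment options confusing"},
    "P4": {"payment options confusing"},
    "P5": {"unclear size guide", "missed promo code field"},
}

# Count how many participants hit each issue (a set per participant avoids double-counting)
frequency = Counter(issue for issues in observations.values() for issue in issues)
for issue, count in frequency.most_common():
    print(f"{issue}: {count}/{len(observations)} participants")
```

Frequency alone isn't severity. A rare issue that blocks a purchase can matter more than a common annoyance, so weigh both when you make recommendations.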

6. Report your findings

Make sure to keep your goals in mind and organise everyone’s insights into a functional document. Report the main takeaways and next steps for improving your product.


The best usability testing tools

Some of the best usability testing tools include:

- Looppanel: Looppanel streamlines usability tests by recording, transcribing, and organising your data for analysis. It integrates with Zoom, Google Meet, and Teams to auto-record calls. It’s like having a really good research assistant right there to generate notes, annotate your transcripts, and view your analysis by question or tag. It also offers a 15-day free trial so you can decide whether Looppanel is for you.

- Maze: Maze is for all things UX research, and that includes prototype testing. It integrates with standard UX tools like Figma, Sketch, and Adobe XD, and it handles analytics, presenting them as a visual report. Perhaps best of all, Maze has a built-in panel of user testers, and once you release your test, they promise results in two hours. Maze is free for a single active project and up to 100 responses per month, although a professional plan costs $50/month (or about €44).

- UserZoom: UserZoom is an all-purpose UX research solution for remote testing. It can handle moderated and unmoderated usability tests and integrate with platforms like Adobe XD, Miro, Jira, and more. It also has a participant recruitment engine with more than 120 million users around the world. The price of plans for UserZoom varies.

- Reframer: Reframer is part of Optimal Workshop. It’s a complete solution for synthesising all your qualitative research findings in one place. It will help you analyse and make sense of your qualitative research. There are a variety of plans for Reframer as part of the Optimal Workshop suite of UX research tools.

- Hotjar: If you decide to do a heatmap study as part of your usability testing, you can use Hotjar to create heatmaps and capture the way people are using your website. You can also get real-time user feedback and screen recordings to see how people interact with your app. But remember, if you’re doing heatmaps for usability testing, you’ll need at least 39 users to take your test. Hotjar has a basic free plan that is fairly extensive as well as a number of paid plans.

Usability testing for UX: best practices

There’s a lot to do when you’re running a usability test. Here are some best practices to ensure your usability tests are effective:

1. Get participants’ consent

Before you start your usability test, you must get consent from your users. Participants often don’t know why they’re participating in a usability study. As a result, you must inform them and get their sign-off to use the data they provide.

2. Bring in a broader demographic

Make sure you recruit people with different perspectives on your product. Bring in people from different demographics and market segments; each will have something different to point out.

3. Pilot testing is important

To ensure your usability test is in good shape, run a pilot test of your study with someone who was not involved in the project. This could be another person in your department or a friend. Either way, this will help you solve any issues you’re having before you do an official usability test.

Pilot tests are especially important for remote or unmoderated usability tests because test participants will rely heavily on your instructions in these circumstances.

4. Know your goals

Make sure you know exactly what your goals are and what results would count as a failure. Knowing this in advance will help you run an effective usability study.
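One way to pin down "what counts as a failure" before testing starts is to write your goals as explicit thresholds and then check the results against them. The Python sketch below is illustrative only. The metric names and threshold values are hypothetical and should come from your own study goals.

```python
# Hypothetical success criteria, agreed before testing starts
CRITERIA = {
    "completion_rate": ("min", 0.8),  # at least 80% of participants finish the task
    "median_seconds": ("max", 120),   # median time on task no longer than 2 minutes
}


def evaluate(results, criteria=CRITERIA):
    """Return a pass (True) or fail (False) verdict for each metric."""
    verdicts = {}
    for metric, (kind, threshold) in criteria.items():
        value = results[metric]
        verdicts[metric] = value >= threshold if kind == "min" else value <= threshold
    return verdicts


print(evaluate({"completion_rate": 0.6, "median_seconds": 107.5}))
```

Agreeing on thresholds up front makes it harder to reinterpret disappointing results after the fact.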

5. Consider the length of the test

While you may be able to spend all day testing your product, users aren’t so patient. Make sure the tasks you’ve chosen for your usability study are enough to make you confident in the results, but not so many that your users are exhausted. If necessary, you can run multiple tests. Remember: asking too much from your participants will lead to poor test results.

Usability testing is crucial to UX designers. Learn more about usability testing by checking out these articles:

- Are user research and UX research the same thing?

- The importance of user research in UX design

- How to incorporate user feedback in product design (and why it matters)


4 June 2024


Usability Testing

What is usability testing?

Usability testing is the practice of testing how easy a design is to use with a group of representative users. It usually involves observing users as they attempt to complete tasks and can be done for different types of designs. It is often conducted repeatedly, from early development until a product’s release.

“It’s about catching customers in the act, and providing highly relevant and highly contextual information.”

— Paul Maritz, CEO at Pivotal


Usability Testing Leads to the Right Products

Through usability testing, you can find design flaws you might otherwise overlook. When you watch how test users behave while they try to execute tasks, you’ll get vital insights into how well your design/product works. Then, you can leverage these insights to make improvements. Whenever you run a usability test, your chief objectives are to:

1) Determine whether testers can complete tasks successfully and independently.

2) Assess their performance and mental state as they try to complete tasks, to see how well your design works.

3) See how much users enjoy using it.

4) Identify problems and their severity.

5) Find solutions.

While usability tests can help you create the right products, they shouldn’t be the only tool in your UX research toolbox. If you just focus on the evaluation activity, you won’t improve the usability overall.

There are different methods for usability testing. Which one you choose depends on your product and where you are in your design process.

Usability Testing is an Iterative Process

To make usability testing work best, you should:

1) Plan –

a. Define what you want to test. Ask yourself questions about your design/product: which aspects of it do you want to test? You can form a hypothesis from each answer, and a clear hypothesis pins down exactly what you need to test.

b. Decide how to conduct your test – e.g., remotely. Define the scope of what to test (e.g., navigation) and stick to it throughout the test. When you test aspects individually, you’ll eventually build a broader view of how well your design works overall.

2) Set user tasks –

a. Prioritize the tasks that matter most for your objectives (e.g., completing checkout), with no more than 5 per participant. Allow a 60-minute timeframe.

b. Clearly define tasks with realistic goals.

c. Create scenarios where users can try to use the design naturally. That means you let them get to grips with it on their own rather than directing them with instructions.

3) Recruit testers – Know who your users are as a target group. Use screening questionnaires (e.g., Google Forms) to find suitable candidates. You can advertise and offer incentives, and you can also find contacts through community groups. Testing with as few as 5 users can reveal roughly 85% of usability problems, according to a widely cited estimate by Jakob Nielsen.
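Where does the 85% figure come from? It is usually traced to Jakob Nielsen and Tom Landauer's problem-discovery model, in which the share of problems found by n users is 1 - (1 - p)^n, where p is the chance that a single user runs into a given problem; p averaged about 0.31 in their data. The minimal sketch below just evaluates that formula. Treat p = 0.31 as an assumption: the real value varies by product, task, and participant group. With p = 0.31, five users give about 84%, which is where the rounded 85% comes from.

```python
def share_of_problems_found(n_users: int, p_detect: float = 0.31) -> float:
    """Expected share of usability problems found by n_users participants.

    Nielsen-Landauer model: found(n) = 1 - (1 - p) ** n, where p is the
    probability that one participant runs into a given problem.
    p = 0.31 is the average they reported (an assumption for your product).
    """
    if n_users < 0:
        raise ValueError("n_users must be non-negative")
    if not 0.0 <= p_detect <= 1.0:
        raise ValueError("p_detect must be between 0 and 1")
    return 1.0 - (1.0 - p_detect) ** n_users


if __name__ == "__main__":
    for n in (1, 3, 5, 10, 15):
        print(f"{n:>2} users: {share_of_problems_found(n):.0%} of problems found")
```

The curve flattens quickly, which is why running several small rounds of testing is often a better use of participants than one large round.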

4) Facilitate/Moderate testing – Set up testing in a suitable environment. Observe and interview users, and note issues: see whether users fail to notice things, go in the wrong direction, or misinterpret rules. When you record usability sessions, you can more easily count the number of times users become confused. Ask users to think aloud and tell you how they feel as they go through the test. This lets you check whether your mental model as a designer is accurate: does what you think users can do with your design match what these test users show?

If you choose remote testing, you can moderate sessions over a video-call tool such as Google Hangouts, or run unmoderated tests instead. Dedicated remote-testing software supports both moderated and unmoderated testing and adds tools such as heatmaps.

Keep usability tests smooth by following these guidelines.

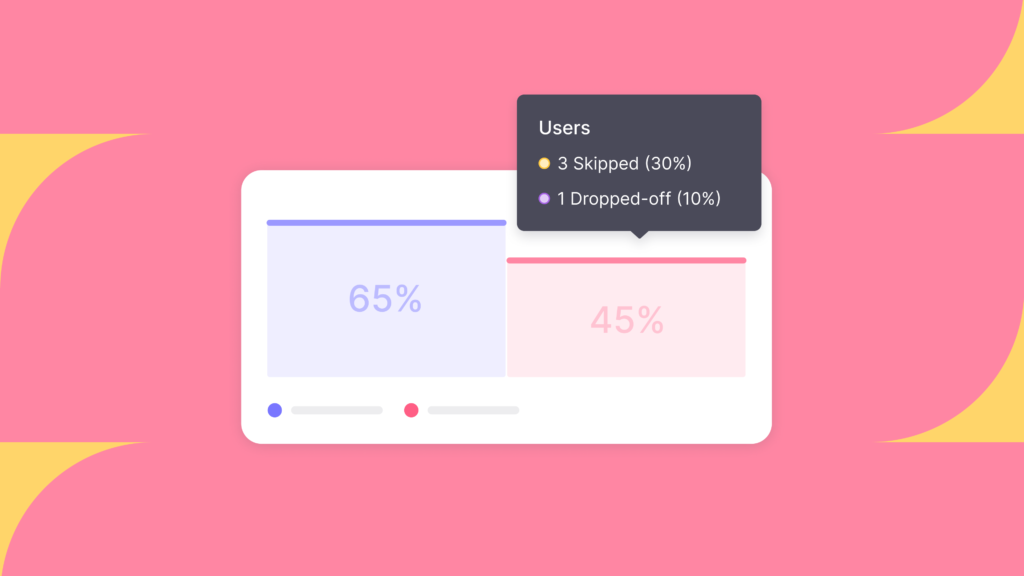

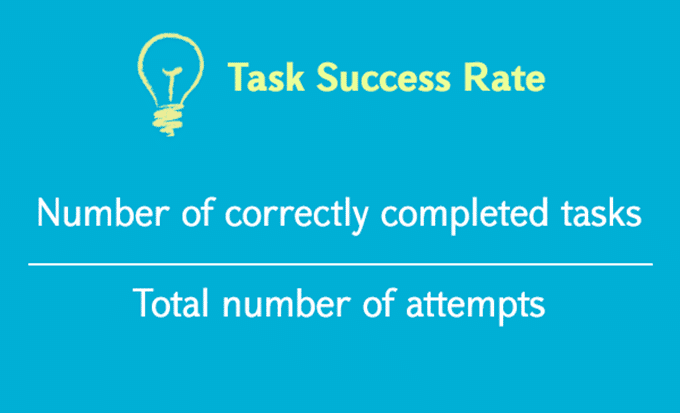

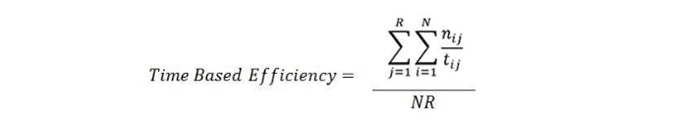

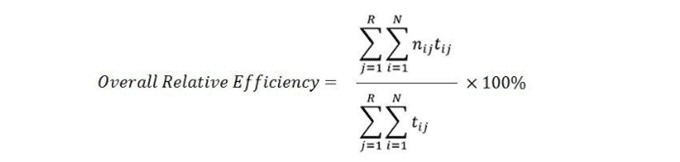

5) Assess user behavior – Use these metrics:

Quantitative – time users take on a task, success and failure rates, effort (how many clicks users take, instances of confusion, etc.)

Qualitative – users’ stress responses (facial reactions, body-language changes, squinting, etc.), subjective satisfaction (which they give through a post-test questionnaire) and perceived level of effort/difficulty

6) Create a test report – Review video footage and analyzed data. Clearly define design issues and best practices. Involve the entire team.
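To make the quantitative metrics from the evaluation step concrete, here is a minimal sketch that summarizes one task across participants. The `TaskAttempt` record and its field names are hypothetical; adapt them to whatever your recording tool exports. Time on task is computed over successful attempts only, which is one common convention rather than a rule.

```python
from dataclasses import dataclass
from statistics import mean, median


@dataclass
class TaskAttempt:
    """One participant's attempt at one task (hypothetical field names)."""
    participant: str
    completed: bool
    seconds: float
    clicks: int
    confusion_events: int  # e.g., hesitations or backtracks noted by the observer


def summarize(attempts: list[TaskAttempt]) -> dict[str, float]:
    """Compute success/failure rates, time on task, and effort for one task."""
    if not attempts:
        raise ValueError("no attempts recorded")
    successes = [a for a in attempts if a.completed]
    success_rate = len(successes) / len(attempts)
    return {
        "success_rate": success_rate,
        "failure_rate": 1 - success_rate,
        # Convention used here: time on task over successful attempts only.
        "median_seconds_successful": (
            median(a.seconds for a in successes) if successes else float("nan")
        ),
        "mean_clicks": mean(a.clicks for a in attempts),
        "confusion_per_attempt": mean(a.confusion_events for a in attempts),
    }


if __name__ == "__main__":
    attempts = [
        TaskAttempt("P1", True, 42.0, 7, 0),
        TaskAttempt("P2", False, 120.0, 19, 3),
        TaskAttempt("P3", True, 55.0, 9, 1),
        TaskAttempt("P4", True, 38.0, 6, 0),
    ]
    print(summarize(attempts))
```

The median is used for time on task because a single participant who gets stuck can drag an average far from the typical experience.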

Overall, you should test not your design’s functionality but users’ experience of it. Some users may be too polite to be entirely honest about problems, so always examine all the data carefully.

Learn More about Usability Testing

Take our course on usability testing.

Here’s a quick-fire method to conduct usability testing.

See some real-world examples of usability testing.

Take some helpful usability testing tips.

Questions related to Usability Testing

To conduct usability testing effectively:

Start by defining clear, objective goals and recruit representative users.

Develop realistic tasks for participants to perform and set up a controlled, neutral environment for testing.

Observe user interactions, noting difficulties and successes, and gather qualitative and quantitative data.

After testing, analyze the results to identify areas for improvement.

For a comprehensive understanding and step-by-step guidance on conducting usability testing, refer to our specialized course on Conducting Usability Testing.

Conduct usability testing early and often, from the design phase to development and beyond. Testing early in design uncovers issues while they are easier and less costly to fix. Regular assessments throughout the project lifecycle ensure continued alignment with user needs and preferences. Usability testing is crucial for new products and when redesigning existing ones to verify improvements and discover new problem areas. Dive deeper into optimal timing and methods for usability testing in our detailed article “Usability: A part of the User Experience.”

Incorporate insights from William Hudson, CEO of Syntagm, to enhance usability testing strategies. William recommends techniques like tree testing and first-click testing for early design phases to scrutinize navigation frameworks. These methods are exceptionally suitable for isolating and evaluating specific components without visual distractions, focusing strictly on user understanding of navigation. They're advantageous for their quantitative nature, producing actionable numbers and statistics rapidly, and being applicable at any project stage. Ideal for both new and existing solutions, they help identify problem areas and assess design elements effectively.
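Tree-testing results are typically reported per task as a success rate (did participants end on the right node?) and a measure of how directly they got there. The minimal sketch below assumes each result is recorded as the ordered list of nodes a participant opened, and it treats any revisited node as backtracking; both are simplifying assumptions, and dedicated tools define directness in their own ways. First-click accuracy is approximated here as the share of participants whose first choice was the correct top-level branch.

```python
def tree_test_summary(paths: list[list[str]], correct_node: str) -> dict[str, float]:
    """Success and direct-success rates for one tree-testing task.

    Each path is the ordered list of nodes a participant opened, ending on the
    node they chose as their answer. Revisiting any node is treated as
    backtracking (a simplifying assumption).
    """
    if not paths:
        raise ValueError("no paths recorded")
    successes = [p for p in paths if p and p[-1] == correct_node]
    direct = [p for p in successes if len(p) == len(set(p))]
    return {
        "success_rate": len(successes) / len(paths),
        "direct_success_rate": len(direct) / len(paths),
    }


def first_click_accuracy(first_choices: list[str], correct_branch: str) -> float:
    """Share of participants whose first choice was the correct top-level branch."""
    if not first_choices:
        raise ValueError("no choices recorded")
    return sum(choice == correct_branch for choice in first_choices) / len(first_choices)


if __name__ == "__main__":
    paths = [
        ["Home", "Support", "Returns"],
        ["Home", "Products", "Home", "Support", "Returns"],  # backtracked
        ["Home", "Products", "Warranty"],                    # wrong answer
        ["Home", "Support", "Returns"],
    ]
    print(tree_test_summary(paths, "Returns"))
    print(first_click_accuracy([p[1] for p in paths], "Support"))
```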

To conduct usability testing for a mobile application:

Start by identifying the target users and creating realistic tasks for them.

Collect data on their interactions and experiences to uncover issues and areas for improvement.

For instance, consider the concept of ‘tappability’ as explained by Frank Spillers, CEO of Experience Dynamics: creating task-oriented, clear, and easily tappable elements is crucial.

Employing correct affordances and signifiers, like animations, can clarify interactions and enhance user experience, avoiding user frustration and errors. Dive deeper into mobile usability testing techniques and insights by watching our insightful video with Frank Spillers.
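Before a mobile test, a quick pass over tap-target sizes can catch elements that are simply too small to tap reliably, so sessions can focus on deeper issues. The minimums below (44 × 44 points from Apple's Human Interface Guidelines, 48 × 48 dp from Material Design) are the commonly cited values; check the current guidelines for your platform. The `TapTarget` type is hypothetical, and a size check complements observing real users rather than replacing it.

```python
from dataclasses import dataclass

# Commonly cited minimum touch-target sizes; confirm against current guidelines.
MIN_TARGET_SIZE = {
    "ios": 44,      # points, Apple Human Interface Guidelines
    "android": 48,  # dp, Material Design
}


@dataclass
class TapTarget:
    """A tappable element measured in points (iOS) or dp (Android); hypothetical type."""
    name: str
    width: float
    height: float


def undersized_targets(targets: list[TapTarget], platform: str) -> list[str]:
    """Names of targets smaller than the platform minimum in either dimension."""
    minimum = MIN_TARGET_SIZE[platform]
    return [t.name for t in targets if t.width < minimum or t.height < minimum]


if __name__ == "__main__":
    screen = [
        TapTarget("Add to cart", 120, 48),
        TapTarget("Close icon", 24, 24),
        TapTarget("Quantity +", 44, 44),
    ]
    print(undersized_targets(screen, "ios"))
    print(undersized_targets(screen, "android"))
```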

For most usability tests, the ideal number of participants depends on your project’s scope and goals. Our video featuring William Hudson, CEO of Syntagm, emphasizes the importance of quality in choosing participants as it significantly impacts the usability test's results.

He shares his experiences and stresses the importance of carefully selecting and recruiting participants to get constructive, reliable feedback. This takes meticulous planning and execution: you need to identify and discard data from non-contributive participants so that the insights you gather to improve the interactive solution, be it an app or a website, are meaningful and trustworthy. Pay particular attention to participants’ attentiveness and consistency while performing tasks, since lapses in either can compromise the results. Watch the full video for a more comprehensive understanding of participant recruitment and usability testing.
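In unmoderated studies, one way to put "identify and discard data from non-contributive participants" into practice is to screen sessions against explicit criteria before analysis. The criteria in this sketch (a minimum total duration, an attention-check question, and a minimum share of answered tasks) and the `Session` fields are our own illustrative assumptions, not a method described in the video. Set the thresholds per study, and decide them before you look at the results.

```python
from dataclasses import dataclass


@dataclass
class Session:
    """Summary of one unmoderated session (hypothetical fields)."""
    participant: str
    total_seconds: float
    passed_attention_check: bool
    tasks_answered: int
    tasks_total: int


def keep_reliable(
    sessions: list[Session], min_seconds: float, min_answered_share: float = 0.8
) -> list[Session]:
    """Drop sessions that look non-contributive: implausibly fast, failed the
    attention check, or skipped too many tasks. Thresholds are study-specific."""
    return [
        s
        for s in sessions
        if s.total_seconds >= min_seconds
        and s.passed_attention_check
        and s.tasks_total > 0
        and s.tasks_answered / s.tasks_total >= min_answered_share
    ]


if __name__ == "__main__":
    sessions = [
        Session("P1", 900, True, 5, 5),
        Session("P2", 95, True, 5, 5),    # too fast to have read the tasks
        Session("P3", 840, False, 5, 5),  # failed the attention check
        Session("P4", 780, True, 3, 5),   # skipped 2 of 5 tasks
    ]
    print([s.participant for s in keep_reliable(sessions, min_seconds=300)])
```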

To analyze usability test results effectively, first collate the data meticulously. Next, identify patterns and recurrent issues that indicate areas needing improvement. Utilize quantitative data for measurable insights and qualitative data for understanding user behavior and experience. Prioritize findings based on their impact on user experience and the feasibility of implementation. For a deeper understanding of analysis methods and to ensure thorough interpretation, refer to our comprehensive guides on Analyzing Qualitative Data and Usability Testing. These resources provide detailed insights, aiding in systematically evaluating and optimizing user interaction and interface design.
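Prioritizing by impact and feasibility can be as simple as a shared scoring rule. This minimal sketch assumes a 1–4 severity scale, counts how many participants hit each issue, and divides the resulting impact by a 1–3 effort estimate. The scales and the formula are assumptions for illustration; agree on your own with the team so the ranking reflects your product.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    """One usability issue from the test (scales are assumptions)."""
    issue: str
    severity: int        # 1 = cosmetic ... 4 = prevents task completion
    users_affected: int  # participants who ran into the issue
    effort: int          # 1 = quick fix ... 3 = major rework


def prioritize(findings: list[Finding], n_participants: int) -> list[tuple[str, float]]:
    """Rank findings by (severity x share of participants affected) / effort."""
    if n_participants <= 0:
        raise ValueError("n_participants must be positive")
    scored = []
    for f in findings:
        impact = f.severity * (f.users_affected / n_participants)
        scored.append((f.issue, round(impact / f.effort, 2)))
    return sorted(scored, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    findings = [
        Finding("Account page slow to load", 3, 5, 3),
        Finding("Ambiguous filter labels", 2, 3, 1),
        Finding("Checkout button hidden below the fold", 4, 4, 1),
    ]
    for issue, score in prioritize(findings, n_participants=5):
        print(f"{score:>5}  {issue}")
```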

Usability testing is predominantly qualitative, focusing on understanding users' thoughts and experiences, as highlighted in our video featuring William Hudson, CEO of Syntagm.

It offers insight into users’ minds: why things didn’t work and what’s going through their heads during testing. However, specific methods, like tree testing and first-click testing, have quantitative aspects, providing hard numbers and statistics on user performance. These methods can be used at any design stage, providing actionable feedback and revealing how well the navigation and visual design work.
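When quantitative results come from small samples, reporting a confidence interval around a success rate keeps the numbers honest. A common choice for usability data is the adjusted Wald (Agresti–Coull) interval, which Jeff Sauro and James Lewis recommend for small-sample completion rates. The sketch below computes a 95% interval by default; for example, 7 of 8 participants succeeding gives roughly 51% to nearly 100%, a reminder of how wide small-sample estimates are.

```python
import math


def adjusted_wald_interval(successes: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Adjusted-Wald confidence interval for a task success rate (95% by default).

    Adds z^2/2 successes and z^2/2 failures before computing a standard Wald
    interval, which behaves better than the plain Wald interval at the small
    sample sizes typical of usability tests.
    """
    if n <= 0 or not 0 <= successes <= n:
        raise ValueError("need n > 0 and 0 <= successes <= n")
    n_adj = n + z ** 2
    p_adj = (successes + z ** 2 / 2) / n_adj
    margin = z * math.sqrt(p_adj * (1 - p_adj) / n_adj)
    # Clamp to [0, 1] because a proportion cannot fall outside that range.
    return max(0.0, p_adj - margin), min(1.0, p_adj + margin)


if __name__ == "__main__":
    low, high = adjusted_wald_interval(7, 8)
    print(f"7/8 succeeded: 95% CI {low:.0%} to {high:.0%}")
```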

To conduct remote usability testing effectively, establish clear objectives, select the right tools, and recruit participants fitting your user profile. Craft tasks that mirror real-life usage and prepare concise instructions. During the test, observe users’ interactions and note their challenges and behaviors. For an in-depth understanding and guide on performing unmoderated remote usability testing, refer to our comprehensive article, Unmoderated Remote Usability Testing (URUT): Every Step You Take, We Won’t Be Watching You.

Some people use the two terms interchangeably, but User Testing and Usability Testing, while closely related, serve distinct purposes. User Testing focuses on understanding users' perceptions, values, and experiences, primarily exploring the 'why' behind users' actions. It is crucial for gaining insights into user needs, preferences, and behaviors, as Ann Blandford, an HCI professor, explains in our video.

She elaborates on the significance of semi-structured interviews in capturing users' attitudes and explanations regarding their actions. Usability Testing primarily assesses users' ability to achieve their goals efficiently and complete specific tasks with satisfaction, often emphasizing the ease of interface use. Balancing both methods is pivotal for comprehensively understanding user interaction and product refinement.

Usability testing is crucial as it determines how usable your product is, ensuring it meets user expectations. It allows creators to validate designs and make informed improvements by observing real users interacting with the product. Benefits include:

Clarity and focus on user needs.

Avoiding internal bias.

Providing valuable insights to achieve successful, user-friendly designs.

By enrolling in our Conducting Usability Testing course, you’ll draw on the extensive experience of Frank Spillers, CEO of Experience Dynamics, and learn to develop test plans, recruit participants, and convey findings effectively.

Explore our dedicated Usability Expert Learning Path at Interaction Design Foundation to learn Usability Testing. We feature a specialized course, Conducting Usability Testing, led by Frank Spillers, CEO of Experience Dynamics. This course imparts proven methods and practical insights from Frank's extensive experience, guiding you through creating test plans, recruiting participants, moderation, and impactful reporting to refine designs based on the results. Engage with our quality learning materials and expert video lessons to become proficient in usability testing and elevate user experiences!




Task-Based Usability Testing: Key to Product Development Success

Optimize product success with usability testing. Dive into task-based testing and uncover user preferences, navigational woes, and more. In this article, learn about the importance of usability testing as well as its types and uses.

Product development success depends in large part on the effort put into usability testing. It helps locate and resolve product usability issues, which can enhance user experience and engagement. Task-based usability testing evaluates a product’s usability by having users complete specific tasks. User preferences, navigational issues, and points of confusion can all be uncovered through this method. This article will discuss the definition of task-based usability testing, its benefits, different types of tests, when to use it, how to conduct a test, and examples.

What is Task-Based Usability Testing?

Task-based usability testing focuses on how users interact with a product when they are completing particular tasks. It is used to determine how effectively a product satisfies user needs and to pinpoint areas where the product could be improved. Users are required to execute a set of tasks during these tests, such as completing a form, navigating a website, or making a transaction. The product is then modified to enhance the user experience based on the test’s findings.

Task-Based Usability Testing Benefits

Task-based usability testing can benefit product development greatly. It helps pinpoint usability problems such as confusing navigation, ambiguous directions, or unclear information, which can lead to better use of resources and lower development costs. It also helps you find out which features users find most useful and what kind of information they prefer to view, which can inform decisions about future product iterations.

Usability testing can also be used to assess product design choices. For instance, you can compare different websites or alternative designs to choose the most user-friendly one. In doing so, you make sure the product is designed so that users can easily understand and use it.

Types of Task-Based Usability Testing

Usability testing can be divided into two main types: qualitative and quantitative. Qualitative usability testing mainly explores users’ subjective opinions of a product or service, aiming to understand their thoughts, feelings, and behaviors as they interact with it. Open Analytics is a testing technique that Useberry offers, in which users are asked to self-report when they believe they have completed the task.

Quantitative usability testing, by comparison, gathers metrics such as users’ success rates and time spent on tasks. It often involves larger participant groups and can use techniques like Single Task tests, which evaluate a product’s usability by asking users to accomplish one or more tasks, such as completing a form.

With the use of Video Shoots, both Open Analytics and Single Task tests can gain qualitative data by observing the user’s face, voice, and screen. Both kinds of usability testing are crucial for comprehending the user experience and developing products that are simple to use and satisfy user needs.

When to Use Task-Based Usability Testing

Incorporating usability testing at multiple points in the development process is essential. You could use it throughout the design phase to try out alternative concepts and check for usability issues before the product goes into production. With Useberry, you can import your design or prototype and start testing immediately. Testing a product during development or after it has been released allows you to assess the quality of the user experience and locate areas for growth and any unresolved usability issues. With website usability testing, you can find out how real people use your live product.

How to Conduct a Task-Based Usability Test

Conducting a task-based usability test involves the following steps.

Step 1: Create a test plan

This should contain a list of the tasks the user will be required to perform as well as any guidelines for doing so. The tasks should be relevant to the product, and participants should receive clear instructions on how to complete them. Learn how to write usability test tasks that are practical and motivate action.

Giving participants adequate time to finish the activities is crucial, too. Participants may grow discouraged, and the findings may be misleading if the tasks are excessively difficult or lengthy.

Step 2: Create a realistic task test

A user testing tool such as Useberry makes a task-based test easy to set up and can save you time and money. To create one with Useberry’s Single Task Block, follow these six steps:

- Create an account

- Select a prototype or website from your library

- Fill in the action you want participants to take

- Let participants know more about the context of their task

- Set the screen your prototype or website will begin with

- Select when this task is successfully completed
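The setup steps above boil down to a small task definition: an action, some context, a start screen, and a success condition. The sketch below models that with a hypothetical `TaskSpec` (this is not Useberry’s data model or API) and checks whether a recorded sequence of screens counts as a completed task.

```python
from dataclasses import dataclass


@dataclass
class TaskSpec:
    """Hypothetical task definition mirroring the setup steps (not Useberry's API)."""
    action: str          # what participants are asked to do
    context: str         # scenario shown before the task starts
    start_screen: str    # screen the prototype or website opens on
    success_screen: str  # reaching this screen counts as success


def is_completed(task: TaskSpec, visited_screens: list[str]) -> bool:
    """True if the session began on the start screen and reached the success screen."""
    if not visited_screens or visited_screens[0] != task.start_screen:
        return False
    return task.success_screen in visited_screens


if __name__ == "__main__":
    task = TaskSpec(
        action="Buy a pair of running shoes in your size",
        context="You are training for a 10k race next month and need new shoes.",
        start_screen="home",
        success_screen="order-confirmation",
    )
    print(is_completed(task, ["home", "search", "product", "cart", "order-confirmation"]))
    print(is_completed(task, ["home", "search", "product"]))
```

Writing the success condition down this explicitly, before the test goes out, also makes it easier to spot tasks whose goal is ambiguous.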

Step 3: Pilot test before sharing

Before distributing a usability test to participants, running a pilot test can be an essential step in ensuring the study’s success. A pilot helps you find and fix any issues with the test’s design, instructions, or materials and confirm that the test is appropriate for the intended audience. It also lets you check the study’s feasibility, for example the time needed to complete the test, and make any necessary changes.

Step 4: Recruit participants for the test

Identifying your target audience is crucial for producing accurate data from your usability tests: participants should be representative of the people the product is designed for. Consider characteristics such as your users’ age, place of residence, work, and interests. Once you’ve identified your target audience, you can use Useberry’s Participant Pool to recruit vetted and verified participants for your test. There are more than 100 targeting attributes to choose from, and you can start collecting data right away. Another option is to share a link to your test with your audience via your own channels, like email and social media. To find out how many users you should test with, read here.

Step 5: Evaluate the findings

To improve the user experience, modify the product in light of the findings. The session recordings Useberry provides let you watch participants’ clicks, taps, and scrolls as they complete the tasks, so you can see where they got confused or stuck.

You can identify areas for improvement by looking at how many participants completed a task, how long they spent on it, and how often they misclicked. By visualizing the path a user follows from one screen to the next with User Flows, you can discover how your users behave and which screens they’re on when they decide to leave.
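The path and misclick measures above can be approximated from raw session data. This minimal sketch assumes each session is exported as an ordered list of screen names and that you know how many clicks landed on interactive hotspots; the function names are hypothetical and do not reflect how Useberry computes its reports.

```python
from collections import Counter


def flow_summary(sessions: list[list[str]], success_screen: str) -> tuple[Counter, Counter]:
    """Count full screen-to-screen paths, and the last screen seen in sessions
    that never reached the success screen (where users left)."""
    paths = Counter(" > ".join(s) for s in sessions if s)
    exits = Counter(s[-1] for s in sessions if s and success_screen not in s)
    return paths, exits


def misclick_rate(hotspot_clicks: int, total_clicks: int) -> float:
    """Share of clicks that landed outside any interactive hotspot."""
    return 0.0 if total_clicks == 0 else 1 - hotspot_clicks / total_clicks


if __name__ == "__main__":
    sessions = [
        ["home", "search", "product", "checkout"],
        ["home", "search", "product", "checkout"],
        ["home", "menu", "search"],
        ["home", "menu"],
    ]
    paths, exits = flow_summary(sessions, "checkout")
    print(paths.most_common(1))
    print(exits)
    print(misclick_rate(18, 24))
```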

Click tracking shows you exactly where users click or tap on your prototype or website’s UI.

Examples of Task-based Usability Testing

Task-based usability testing has various applications. A great example is evaluating a website’s usability, which could entail asking visitors to navigate the website, complete a form, or look up information. Watch our how-to video on setting up and testing a website’s usability with a real-life example. Another example is evaluating a mobile app’s usability, which might include asking users to launch the app, use the menus, and complete a task.

Product success depends on usability testing to identify areas for improvement. It can be used to determine user preferences, assess the success of product design decisions, and find usability problems. The effectiveness of various designs or features can also be determined by conducting usability tests. Use Useberry to track tasks and results during a usability test and ensure the tasks are relevant to the product. This article will enable product teams to get reliable and actionable task-based usability testing results.


- What is task analysis?

Last updated

28 February 2023

Reviewed by

Miroslav Damyanov

Every business and organization should understand the needs and challenges of its customers, members, or users. Task analysis allows you to learn about users by observing their behavior. The process can be applied to many types of actions, such as tracking visitor behavior on websites, using a smartphone app, or completing a specific action such as filling out a form or survey.

In this article, we'll look at exactly what task analysis is, why it's so valuable, and provide some examples of how it is used.


Task analysis is learning about users by observing their actions. It entails breaking larger tasks into smaller ones so you can track the specific steps users take to complete a task.

Task analysis can be useful in areas such as the following:

Website users signing up for a mailing list or free trial. Track what steps visitors typically take, such as where they find your site and how many pages they visit before taking action. You'd also track the behavior of visitors who leave without completing the task.

Teaching children to read. For example, a task analysis for second-graders may identify steps such as matching letters to sounds, breaking longer words into smaller chunks, and teaching common suffixes such as "ing" and "ies."

- Benefits of task analysis

There are several benefits to using task analysis for understanding user behavior:

Simplifies long and complex tasks

Allows for the introduction of new tasks

Reduces mistakes and improves efficiency

Develops a customized approach

- Types of task analysis

There are two main categories of task analysis, cognitive and hierarchical.

Cognitive task analysis

Cognitive task analysis, also known as procedural task analysis, is concerned with understanding the steps needed to complete a task or solve a problem. It is visualized as a linear diagram, such as a flowchart. This is used for fairly simple tasks that can be performed sequentially.

Hierarchical task analysis

Hierarchical task analysis identifies a hierarchy of goals or processes. It is visualized as a top-to-bottom process, where the user needs top-level knowledge to proceed to subsequent tasks, as in Google's example following the user journey of a student completing a class assignment.
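A hierarchical task analysis is often written as a numbered outline, with the overall goal numbered 0 and its subtasks 1, 2, 1.1, and so on. This minimal sketch, using a hypothetical `Task` type, prints such an outline. The example reuses the "going to the store" task from the how-to steps in this article, with an extra level under "Get your wallet" added purely for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    """A goal or subtask in a hierarchical task analysis (hypothetical type)."""
    name: str
    subtasks: list["Task"] = field(default_factory=list)


def hta_outline(task: Task, number: str = "0") -> list[str]:
    """Numbered, indented outline: the top goal is 0, its subtasks 1, 2, ...,
    and their subtasks 1.1, 1.2, and so on."""
    depth = 0 if number == "0" else number.count(".") + 1
    lines = [f"{'  ' * depth}{number} {task.name}"]
    for i, sub in enumerate(task.subtasks, start=1):
        child = str(i) if number == "0" else f"{number}.{i}"
        lines.extend(hta_outline(sub, child))
    return lines


if __name__ == "__main__":
    store_trip = Task("Go to the store", [
        Task("Get dressed"),
        Task("Get your wallet", [Task("Find the wallet"), Task("Check for cash or a card")]),
        Task("Leave the house"),
        Task("Walk or drive to the store"),
    ])
    print("\n".join(hta_outline(store_trip)))
```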

What is the difference between cognitive and hierarchical task analysis?

There are a few differences between cognitive and hierarchical task analysis. While cognitive task analysis is concerned with the user experience when performing tasks, hierarchical task analysis looks at how each part of a system relates to the whole.

- When to use task analysis

A task analysis is useful for any project where you need to know as much as possible about the user experience. To be most helpful, it should be performed early in the process, before you invest too much time or money in features or processes you'll need to change later.

You can take what you learn from task analysis and apply it to other design processes such as website design, prototyping, wireframing, and usability testing.

- How to conduct a task analysis

There are several steps involved in conducting a task analysis.

Identify one major goal (the task) you want to learn about. One challenge is knowing what steps to include. If you are studying users performing a task on your website, do you want to start the analysis when they actually land on your site or earlier? You may also want to know how they got there, such as by searching on Google.

Break the main task into smaller subtasks. "Going to the store" might be separated into getting dressed, getting your wallet, leaving the house, and walking or driving to the store. You can decide which subtasks are meaningful enough to include.

Draw a diagram to visualize the process, which makes the sequence of steps easier to understand.

Write down a list of the steps to accompany the diagram, so it is useful to people who are not familiar with the tasks you analyzed.

Share and validate the results with your team to get feedback on whether your description of the tasks and subtasks, as well as the diagram, are clear and consistent.
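The breakdown, diagram, and step-list steps above can be partly scripted. The Python sketch below assumes the subtasks from the "going to the store" example, writes them as a linear flowchart in Graphviz's DOT language, and prints a matching numbered list of steps. The helper names are hypothetical; rendering the DOT text to an image (for example with Graphviz's `dot -Tpng` command) is a separate step.

```python
def quote(label: str) -> str:
    # DOT IDs containing spaces must be double-quoted; escape any inner quotes.
    return '"' + label.replace('"', '\\"') + '"'


def to_dot(task: str, steps: list[str]) -> str:
    """Render a sequential task as a Graphviz DOT flowchart, one edge per step."""
    lines = [f"digraph {quote(task)} {{", "  rankdir=TB;"]
    # Declare every step as a node so even a one-step task is drawn.
    lines.extend(f"  {quote(step)};" for step in steps)
    # Connect each step to the one that follows it.
    for current, nxt in zip(steps, steps[1:]):
        lines.append(f"  {quote(current)} -> {quote(nxt)};")
    lines.append("}")
    return "\n".join(lines)


def to_step_list(steps: list[str]) -> str:
    """Numbered list of steps to accompany the diagram."""
    return "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))


task = "Going to the store"
steps = ["Get dressed", "Get your wallet", "Leave the house", "Walk or drive to the store"]

print(to_dot(task, steps))
print(to_step_list(steps))
```

Keeping the steps in one list means the diagram and the written list cannot drift apart when you revise the breakdown after feedback from your team.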

- Task analysis in UX

One of the most valuable uses of task analysis is improving user experience (UX). The goal of UX is to identify and overcome user problems and challenges, and task analysis can help in several ways:

Identifying the steps users take when using a product. Can some of the steps be simplified or eliminated?

Finding areas in the process that users find difficult or frustrating. For example, if many users abandon a task at a certain stage, you'll want to introduce changes that improve the completion rate.

Revealing, through hierarchical analysis, what users need to know to get from one step to the next. If there are gaps (i.e., not all users have the expertise to complete the steps), they should be filled.
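Finding where users abandon a task is simple arithmetic once you know how many users reached each step. A minimal Python sketch, assuming you already have per-step counts (the step names and numbers below are made up for illustration): for each pair of consecutive steps it computes the share of users lost between them, then reports the largest drop.

```python
def step_dropoff(step_counts: list[tuple[str, int]]) -> list[tuple[str, str, float]]:
    """For each consecutive pair of steps, return the share of users who
    reached the first step but did not reach the next one."""
    results = []
    for (step, reached), (next_step, reached_next) in zip(step_counts, step_counts[1:]):
        dropped = (reached - reached_next) / reached if reached else 0.0
        results.append((step, next_step, dropped))
    return results


# Hypothetical counts of users who reached each step of a sign-up task.
funnel = [
    ("Land on page", 200),
    ("Open sign-up form", 120),
    ("Enter details", 90),
    ("Confirm email", 45),
]

drops = step_dropoff(funnel)
for step, next_step, rate in drops:
    print(f"{step} -> {next_step}: {rate:.0%} dropped")

# The step with the largest loss is the first candidate for redesign.
worst = max(drops, key=lambda d: d[2])
print("Biggest drop-off:", worst[0], "->", worst[1])
```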

- Task analysis is a valuable tool for developers and project managers

Task analysis can improve the quality of training, software, product prototypes, website design, and many other areas. By helping you understand the user experience, it lets you make improvements and solve problems, and it's a tool you can continually refine as you observe results.

By consistently applying the most appropriate kind of task analysis (e.g., cognitive or hierarchical), you can make steady improvements to your products and processes. Task analysis is valuable for the entire product team, including product managers, UX designers, and developers.


Usability evaluation and analysis

Once you've finished running your usability testing sessions, it's time to evaluate the findings. In this chapter, we explain how to extract the data from your results, analyze it, and turn it into an action plan for improving your site.


How to evaluate usability testing results [in 5 steps]

The process of turning a mass of qualitative data, transcripts, and observations into an actionable report on usability issues can seem overwhelming at first—but it's simply a matter of organizing your findings and looking for patterns and recurring issues in the data.

1. Define what you're looking for

Before you start analyzing the results, review your original goals for testing. Remind yourself of the problem areas of your website, or pain points, that you wanted to evaluate.

Once you begin reviewing the testing data, you will be presented with hundreds, or even thousands, of user insights. Identifying your main areas of interest will help you stay focused on the most relevant feedback.

Use those areas of focus to create overarching categories of interest. Most likely, each category will correspond to one of the tasks that you asked users to complete during testing, such as logging in, searching for an item, or going through the payment process.

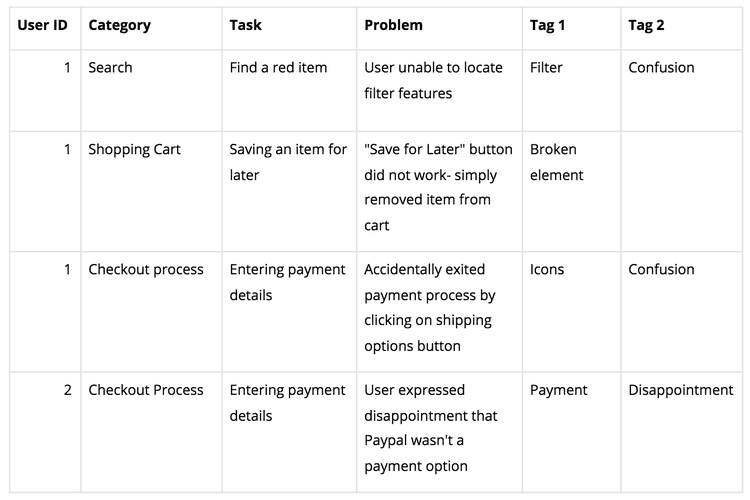

2. Organize the data

Review your testing sessions one by one. Watch the recordings, read the transcripts, and carefully go over your notes, looking for:

Issues the user encountered while performing tasks

Actions they took

Comments (both positive and negative) they made

For each issue a user discovered, or unexpected action they took, make a separate note. Record the task the user was attempting to complete and the exact problem they encountered, and add specific categories and tags (for example, location tags such as checkout or landing page, or experience-related ones such as broken element or hesitation) so you can later sort and filter. If you previously created user personas or testing groups, record which one each user belongs to as well.

It's best to do this digitally, with a tool like Excel or Airtable, as you want to be able to move the data around, apply tags, and sort it by category.
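If you'd rather script this than keep it in a spreadsheet, each note maps onto a small record with the fields described above. A minimal Python sketch; the `IssueNote` fields, the tags, and the sample notes are hypothetical, and one spreadsheet column per field works just as well.

```python
from dataclasses import dataclass, field


@dataclass
class IssueNote:
    """One observation from a test session: one issue or unexpected action per note."""
    participant: str
    task: str          # the task the user was attempting
    problem: str       # concise, specific description of what happened
    tags: set[str] = field(default_factory=set)  # location or experience tags
    persona: str = ""  # optional persona or testing group


notes = [
    IssueNote("P1", "Checkout", "Clicked Discount Codes instead of Payment Info",
              {"checkout", "hesitation"}, "Returning customer"),
    IssueNote("P2", "Log in", "Log in button did not respond to clicks",
              {"landing page", "broken element"}),
    IssueNote("P3", "Checkout", "Could not find where to enter the card number",
              {"checkout"}, "First-time buyer"),
]


def filter_by_tag(notes: list[IssueNote], tag: str) -> list[IssueNote]:
    """Keep only the notes carrying a given tag."""
    return [n for n in notes if tag in n.tags]


def sort_by_task(notes: list[IssueNote]) -> list[IssueNote]:
    """Group notes about the same task together, then order by participant."""
    return sorted(notes, key=lambda n: (n.task, n.participant))


for note in filter_by_tag(notes, "checkout"):
    print(note.participant, note.problem)
```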

Pro tip: make sure your statements are concise and exactly describe the issue.

Bad example: the user clicked on the wrong link

Good example: the user clicked on the link for Discount Codes instead of the one for Payment Info

When you're done, your data might look similar to this:

3. Draw conclusions

Assess your data with both qualitative and quantitative measures:

Quantitative analysis will give you statistics that can be used to identify the presence and severity of issues.

Qualitative analysis will give you an insight into why the issues exist, and how to fix them.

In most usability studies, your focus and the bulk of your findings will be qualitative, but calculating some key numbers can give your findings credibility and provide baseline metrics for evaluating future iterations of the website.

Quantitative data analysis

Extract hard numbers from the data for quantitative analysis. Figures like rankings and statistics will help you determine where the most common issues on your website are and how severe they are.

Quantitative data metrics for user testing include:

Success rate: the percentage of users in the testing group who ultimately completed the assigned task

Error rate: the percentage of users who made or encountered the same error

Time to complete task: the average time it took to complete a given task

Satisfaction ratings: an average of users' self-reported satisfaction, measured on a numbered scale
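These metrics are simple ratios and averages. The Python sketch below assumes one record per participant per task; the `Attempt` fields, the error identifiers, and the 1 to 5 satisfaction scale are hypothetical, and it averages time only over participants who completed the task (one reasonable reading of "time to complete").

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Attempt:
    """One participant's attempt at one task (hypothetical record)."""
    participant: str
    completed: bool
    seconds: float       # time spent on the task
    errors: set[str]     # identifiers of the errors this participant hit
    satisfaction: int    # self-reported, on an assumed 1-5 scale


def task_metrics(attempts: list[Attempt], error: str) -> dict[str, float]:
    """Compute the four metrics for one task. Assumes at least one attempt."""
    n = len(attempts)
    completed = [a for a in attempts if a.completed]
    return {
        # Share of participants who ultimately completed the task.
        "success_rate": len(completed) / n,
        # Share of participants who encountered one specific error.
        "error_rate": sum(error in a.errors for a in attempts) / n,
        # Average time, counting only participants who completed the task.
        "mean_time_s": mean(a.seconds for a in completed) if completed else float("nan"),
        # Average self-reported satisfaction across all participants.
        "mean_satisfaction": mean(a.satisfaction for a in attempts),
    }


attempts = [
    Attempt("P1", True, 95, set(), 4),
    Attempt("P2", True, 140, {"wrong-link"}, 3),
    Attempt("P3", False, 300, {"wrong-link", "card-declined"}, 1),
    Attempt("P4", True, 110, set(), 5),
]
print(task_metrics(attempts, "wrong-link"))
```

Recomputing the same numbers after a redesign gives you the baseline comparison mentioned above.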

Qualitative data analysis

Qualitative analysis is just as important as quantitative analysis, if not more so, because it helps to illustrate why certain problems are happening and how they can be fixed. Such anecdotes and insights will help you come up with solutions to increase usability.

Sort the data in your spreadsheet so that issues involving the same tasks are grouped together. This will give you an idea of how many users experienced problems with a certain step (e.g., checkout) and how these problems overlap. Look for patterns and repetitions in the data to help identify recurring issues.

Keep a running tally of each issue and how common it was; in effect, you are building a list of the website's problems. For example, you may find that several users had trouble entering their payment details on the checkout page. If they all encountered the same problem, you can conclude that it is an issue that needs to be resolved.

If an insight isn't identical to another but is still strongly related, broaden it so that both fit under one issue. For example, a user who could not find a support phone number to call and another who couldn't find an email address should be grouped together, with the overall conclusion that the company's contact details were difficult to find.
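The tallying and broadening steps can be sketched in a few lines of Python. This is a minimal illustration, assuming each observation is a (participant, issue description) pair; the `BROADER_ISSUE` mapping and the sample observations are hypothetical, and in practice deciding which findings are "strongly related" remains a judgment call made by your team.

```python
from collections import Counter

# Hypothetical mapping from specific observations to a broader issue,
# used when two findings are not identical but are strongly related.
BROADER_ISSUE = {
    "could not find support phone number": "contact details hard to find",
    "could not find support email address": "contact details hard to find",
}


def tally_issues(observations: list[tuple[str, str]]) -> Counter:
    """Count how many distinct participants hit each (broadened) issue."""
    seen = set()
    for participant, issue in observations:
        issue = BROADER_ISSUE.get(issue, issue)
        seen.add((participant, issue))  # count each participant once per issue
    return Counter(issue for _, issue in seen)


observations = [
    ("P1", "could not find support phone number"),
    ("P2", "could not find support email address"),
    ("P2", "payment form rejected valid card"),
    ("P3", "payment form rejected valid card"),
    ("P3", "payment form rejected valid card"),
    ("P4", "could not find support phone number"),
]

for issue, count in tally_issues(observations).most_common():
    print(f"{count} participants: {issue}")
```

Counting participants rather than raw observations keeps one user who hits the same problem repeatedly from inflating its apparent frequency.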

4. Prioritize the issues

Now that you have a list of problems, rank them by the impact that solving them would have. Consider how widespread each problem is across the site and how severe it is, and acknowledge the implications of specific problems when extended sitewide (e.g., if one page is full of typos, you should probably get the rest of the site proofread as well).

Categorize the problems into:

Critical: impossible for users to complete tasks

Serious: frustrating for many users

Minor: annoying, but not going to drive users away

For example, being unable to complete payments is a more urgent issue than disliking the site's color scheme. The first is a critical issue that should be corrected immediately, while the second is a minor issue that can be put on the back burner.
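One way to apply this ranking consistently is to sort the issue list by severity first and break ties by how widespread each issue is. A minimal Python sketch; the three severity labels come from the list above, but the tie-breaking fields (`users`, `pages`) and the sample issues are assumptions for illustration, not a standard scoring scheme.

```python
# Lower number = more urgent; labels match the critical/serious/minor scale.
SEVERITY_ORDER = {"critical": 0, "serious": 1, "minor": 2}


def prioritize(issues: list[dict]) -> list[dict]:
    """Sort issues most urgent first: by severity, then by how many users
    hit the issue, then by how many pages it affects."""
    return sorted(
        issues,
        key=lambda i: (SEVERITY_ORDER[i["severity"]], -i["users"], -i["pages"]),
    )


issues = [
    {"name": "Disliked color scheme", "severity": "minor", "users": 4, "pages": 12},
    {"name": "Cannot complete payment", "severity": "critical", "users": 3, "pages": 1},
    {"name": "Typos on product page", "severity": "minor", "users": 2, "pages": 1},
    {"name": "Shipping options unclear", "severity": "serious", "users": 5, "pages": 2},
]

for issue in prioritize(issues):
    print(issue["severity"], issue["name"])
```

Severity always outranks frequency here, so a critical issue seen by a few users still comes before a minor one seen by many, matching the payment versus color-scheme example.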

5. Compile a report of your results

To benefit from website usability testing, you must ultimately use the results to improve your site. Once you've evaluated the data and prioritized the most common issues, leverage those insights to encourage positive changes to your site's usability.

In some cases, you may have the power to just make the changes yourself. In other situations, you may need to make your case to higher-ups at your company—and when that happens, you’ll likely need to draft a report that explains the problems you discovered and your proposed solutions.

Qualities of an effective usability report

It's not enough to simply present the raw data to decision-makers and hope it inspires change. A good report should:

Showcase the highest priority issues. Don't just present a laundry list of everything that went wrong. Focus on the most pressing issues.

Be specific. It's not enough to simply say "users had difficulty with entering payment information." Identify the specific area of design, interaction, or flow that caused the problem.

Include evidence. Snippets of videos, screenshots, or transcripts from actual tests can help make your point (for certain stakeholders, actually seeing someone struggle is more effective than simply hearing about it secondhand). For this reason, consider presenting your report as a slideshow instead of a written document.

Present solutions. Brainstorm solutions for the highest priority issues. There are usually many ways to attack any one problem. For example: if your problem is that users don't understand the shipping options, that could be a design issue or a copywriting issue. It will be up to you and your team to figure out the most efficient change to shift user behavior in the direction you desire.

Include positive findings. In addition to the problems you've identified, include any meaningful positive feedback you received. This helps the team know what is working well so they can maintain those features in future website iterations.
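If your issue list already lives in a script, a plain-text summary that follows these qualities can be generated from it. A minimal Python sketch, assuming issues are already sorted most urgent first; the `build_report` helper, its field names, and the sample evidence file names are hypothetical, and the output is a starting draft for slides or a document, not a finished report.

```python
def build_report(issues: list[dict], positives: list[str], top_n: int = 3) -> str:
    """Assemble a summary: only the top-priority issues, each with a specific
    description, evidence, and possible solutions, then positive findings."""
    lines = ["Top usability issues", ""]
    for rank, issue in enumerate(issues[:top_n], start=1):
        lines.append(f"{rank}. [{issue['severity'].upper()}] {issue['summary']}")
        lines.append(f"   Evidence: {', '.join(issue['evidence'])}")
        lines.append(f"   Possible solutions: {'; '.join(issue['solutions'])}")
    # Close with what is working well so the team keeps it.
    lines += ["", "What is working well"]
    lines += [f"- {p}" for p in positives]
    return "\n".join(lines)


issues = [
    {"severity": "critical",
     "summary": "Pay button stays disabled after valid card details are entered",
     "evidence": ["session-3.mp4 at 04:12", "checkout-screenshot.png"],
     "solutions": ["Fix form validation", "Show which field is blocking submission"]},
    {"severity": "serious",
     "summary": "Users cannot tell shipping options apart",
     "evidence": ["P2 transcript"],
     "solutions": ["Rewrite option labels", "Add delivery dates to each option"]},
]
positives = ["Participants found the search results relevant"]

print(build_report(issues, positives))
```

Capping the list with `top_n` is a simple guard against the laundry-list problem; listing more than one possible solution per issue leaves room for the design-versus-copywriting discussion described above.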

Visit our page on reporting templates for more guidance on how to structure your findings.

Acting on your usability testing analysis