Academia.edu no longer supports Internet Explorer.

To browse Academia.edu and the wider internet faster and more securely, please take a few seconds to upgrade your browser .

Enter the email address you signed up with and we'll email you a reset link.

- We're Hiring!

- Help Center

The constrained minimax linear assignment problem

2000, Optimization Methods and Software

Related Papers

Uwe Zimmermann

Theoretical Computer Science

Alexander Kononov

IOP Publishing

Hussein Ali Hussein Al-Dallal Al-Saeedi

Discrete Applied Mathematics

Kurt Jörnsten

International Journal for Research in Applied Science & Engineering Technology (IJRASET)

IJRASET Publication

In this paper a new method is proposed for finding an optimal solution of a wide range of assignment problems, directly. A numerical illustration is established and the optimality of the result yielded by this method is also checked. The most attractive feature of this method is that it requires very simple arithmetical and logical calculations. The method is illustrated through an example.

Shalini Arora

YMER Digital

Assignment model comes under the class of linear programming model which is the most used techniques of operations research, which looks alike with transportation model with an objective function of minimizing the time or cost of manufacturing the products by allocating one job to one machine or one machine to one job or one destination to one origin or one origin to one destination only. In this paper, we represent linear mathematical formulation of Assignment problem and solved using Lingo Software. Keyword: Resource Allocation, Optimization Problem, Lingo Software, Assignment problem.

MATHEMATICAL PROGRAMMING

David Shmoys

Bhausaheb G Kore

In this paper I have proposed a new approach to solve an unbalanced assignment problem (UBAP). This approach includes two parts. First is to obtain an initial basic feasible solution (IBFS) and second part is to test optimality of an IBFS. I have proposed two new methods Row Penalty Assignment Method (RPAM) and Column Penalty Assignment Method (CPAM) to obtain an IBFS of an UBAP. Also I have proposed a new method Non-basic Smallest Effectiveness Method (NBSEM) to test optimality of an IBFS. We can solve an assignment problem of maximization type using this new approach in opposite sense. By this new approach, we achieve the goal with less number of computations and steps. Further we illustrate the new approach by suitable examples. INTRODUCTION The assignment problem is a special case of the transportation problem where the resources are being allocated to the activities on a one-to-one basis. Thus, each resource (e.g. an employee, machine or time slot) is to be assigned uniquely to a particular activity (e.g. a task, site or event). In assignment problems, supply in each row represents the availability of a resource such as a man, machine, vehicle, product, salesman, etc. and demand in each column represents different activities to be performed such as jobs, routes, factories, areas, etc. for each of which only one man or vehicle or product or salesman respectively is required. Entries in the square being costs, times or distances. The assignment method is a special linear programming technique for solving problems like choosing the right man for the right job when more than one choice is possible and when each man can perform all of the jobs. The ultimate objective is to assign a number of tasks to an equal number of facilities at minimum cost (or maximum profit) or some other specific goal. Let there be 'm' resources and 'n' activities. Let c ij be the effectiveness (in terms of cost, profit, time, etc.) of assigning resource i to activity j (i = 1, 2, …., m; j = 1, 2,…., n). Let x ij = 0, if resource i is not assigned to activity j and x ij = 1, if resource i is assigned to activity j. Then the objective is to determine x ij 's that will optimize the total effectiveness (Z) satisfying all the resource constraints and activity constraints. 1. Mathematical Formulation Let number of rows = m and number of columns = n. If m = n then an AP is said to be BAP otherwise it is said to be UBAP. A) Case 1: If m < n then mathematically the UBAP can be stated as follows:

RELATED TOPICS

- We're Hiring!

- Help Center

- Find new research papers in:

- Health Sciences

- Earth Sciences

- Cognitive Science

- Mathematics

- Computer Science

- Academia ©2024

Online learning of network bottlenecks via minimax paths

- Open access

- Published: 08 November 2022

- Volume 112 , pages 131–150, ( 2023 )

Cite this article

You have full access to this open access article

- Niklas Åkerblom 1 , 2 na1 ,

- Fazeleh Sadat Hoseini ORCID: orcid.org/0000-0003-0766-0493 1 na1 &

- Morteza Haghir Chehreghani 1

2403 Accesses

2 Citations

3 Altmetric

Explore all metrics

This article has been updated

In this paper, we study bottleneck identification in networks via extracting minimax paths. Many real-world networks have stochastic weights for which full knowledge is not available in advance. Therefore, we model this task as a combinatorial semi-bandit problem to which we apply a combinatorial version of Thompson Sampling and establish an upper bound on the corresponding Bayesian regret. Due to the computational intractability of the problem, we then devise an alternative problem formulation which approximates the original objective. Finally, we experimentally evaluate the performance of Thompson Sampling with the approximate formulation on real-world directed and undirected networks.

Similar content being viewed by others

Understanding the limitations of network online learning

Exploring Partially Observed Networks with Nonparametric Bandits

Deep Reinforcement Learning for Task-Driven Discovery of Incomplete Networks

Avoid common mistakes on your manuscript.

1 Introduction

Bottleneck identification constitutes an important task in network analysis, with applications including transportation planning and management (Berman & Handler, 1987 ), routing in computer networks (Shacham, 1992 ) and various bicriterion path problems (Hansen, 1980 ). The path-specific bottleneck, on a path between a source and a target node in a network, is defined as the edge with a maximal cost or weight according to some criterion such as transfer time, load, commute time, distance, etc. The goal of bottleneck identification and avoidance is then to find a path whose bottleneck is minimal. Thus, one may model bottleneck identification as the problem of computing the minimax edge over the given network/graph, to obtain an edge with a minimal largest gap between the source and target nodes. Equivalently, it can be formulated as a widest path problem or maximum capacity path problem (Pollack, 1960 ) where the edge weights have been negated.

In transportation, identifying bottlenecks is important for city governments and traffic managers to monitor and improve overall performance. For example, when driving between two locations, identifying a bottleneck means finding the section of road that slows down the traffic between two locations. Consider a social network where there is an information flow between two nodes (source and destination). Then we can define the bottleneck as a link in all the paths between these two nodes that has the weakest connection and causes the information flow to fail. So, bottlenecks here are related to the possibility of information loss.

The aforementioned formulations assume that the network or the graph is fully specified, i.e., that all the edge weights are fully known. However, in practice, the edge weights might not be known in advance or they might include some inherent uncertainty. In this paper, we tackle such situations by developing an online learning framework to learn the edge weight distributions of the underlying network, while solving the bottleneck identification problem for different problem instances.

For example, in the transportation scenario, city governments often have access to fleets of vehicles utilized for various municipal services. These may be used to sequentially and continuously to gain knowledge about traffic flow from the environment, while it is still desirable to avoid causing unnecessary inconvenience and stress (Hennessy & Wiesenthal, 1999 ) to the employees operating the vehicles by excessively exploring congested paths. If care is taken to spread the costs over time, exploration may be performed continuously without having a specific end time known in advance (i.e., the time horizon of the sequential decision making problem).

For this purpose, we view this as a multi-armed bandit (MAB) problem and focus on Thompson Sampling (TS) (Thompson, 1933 ), a method that suits probabilistic online learning well. Thompson Sampling is an early Bayesian method for addressing the trade-off between exploration and exploitation in sequential decision making problems. It balances these by randomly sampling available actions according to their posterior probability of being optimal, given prior beliefs and observations from previously selected actions. An action is more likely to be sampled if the posterior distribution over the expected reward of that action has high uncertainty (exploration) or high mean (exploitation).

The method has only recently been thoroughly evaluated through experimental studies (Chapelle & Li, 2011 ; Graepel et al., 2010 ) and theoretical analyses (Kaufmann et al., 2012 ; Agrawal et al., 2012 ; Russo & Van Roy, 2014 ), where it has been shown to be asymptotically optimal in the sense that it matches well-known lower bounds of these types of problems (Lai & Robbins, 1985 ). Furthermore, the algorithm does not assume knowledge of the time horizon, i.e., it is an anytime algorithm.

Among many other problem settings, Thompson Sampling has been adapted to online versions of combinatorial optimization problems with retained theoretical guarantees (Wang & Chen, 2018 ), where one application is to find shortest paths in graphs (Liu & Zhao, 2012 ; Gai et al., 2012 ; Zou et al., 2014 ; Åkerblom et al., 2020 ).

Another commonly used method for these problems is Upper Confidence Bound (UCB) (Auer, 2003 ), which utilizes optimism to balance exploration and exploitation. UCB has been adapted to combinatorial settings (Chen et al., 2013 ), and also exists in Bayesian variants (Kaufmann et al., 2012 ). Recently, a variant of UCB has been studied for bottleneck avoidance problems in a combinatorial pure exploration setting (Du et al., 2021 ). They consider a different problem setting and method than those we present in this paper, though their bottleneck reward function is similar to the one we use in our approximation method. The main difference between their setting and the standard combinatorial semi-bandit setting in how agents interact with the environment, is that instead of being restricted to selecting sets of actions respecting combinatorial constraints, they allow agents to sequentially try individual arms to identify the best feasible solution to the combinatorial problem. This is not applicable to our setting, since we may not observe the feedback of individual edges without also traversing a path containing those edges, potentially incurring cost from some other edge on that path.

Moreover, the objective in a pure exploration problem is to find the best action as quickly as possible, with either a fixed time budget or confidence level, using agents dedicated for this task. While identifying the best path is desirable in our problem setting as well, we are specifically interested in the case where existing agents are utilized and where using them exclusively for exploration is too costly. For that reason, we focus on anytime methods capable of distributing exploratory actions over time.

In this paper, we model the online bottleneck identification task as a stochastic combinatorial semi-bandit problem, for which we develop a combinatorial variant of Thompson Sampling. We then derive an upper bound on the corresponding Bayesian regret that is tight up to a polylogarithmic factor, which is consistent with the existing lower bounds for combinatorial semi-bandit problems. We face the issue of computational intractability with the exact problem formulation. We thus propose an approximation scheme, along with a theoretical analysis of its properties. Finally, we experimentally investigate the performance of the proposed method on directed and undirected real-world networks from transport and collaboration domains.

2 Bottleneck identification model

In this section, we first introduce the bottleneck identification problem over a fixed network and then describe a probabilistic model to be used in stochastic and uncertain situations.

2.1 Bottleneck identification over a network

We model a network by a graph G ( V , E , w ), where V denotes the set of vertices (nodes) and each \(e = (u,v) \in E\) indicates an edge between vertices u and v where \(u,v \in V\) and \(u \ne v\) . Moreover, \(w: E\rightarrow \mathbb {R}\) is a weight function defined for each edge of the graph, where for convenience, we use \(w_e\) to denote the weight of edge e . If G is directed, the pair ( u , v ) is ordered, otherwise, it is not (i.e., \((u,v)\equiv (v,u)\) for undirected graphs). A path p from vertex u (source) to vertex v (target) over G is a sequence of vertices \(<v_1, v_2, \dots ,v_{k-1}, v_k>\) where \(v_1=u\) , \(v_k=v\) and \((v_i,v_{i+1}) \in E, \forall i \in [1, k-1]\) . It can also be seen as a sequence of edges \(< (v_1, v_2),(v_2,v_3), \dots ,(v_{k-1}, v_k)>\) .

As previously mentioned, a bottleneck on a path p can be described as an edge with a maximal weight on that path. To find the smallest feasible bottleneck edge between the source node u and the target node v , we consider all the paths between them. For each path, we pick an edge with a maximal weight, to obtain all path-specific bottleneck edges. We then identify the smallest path-specific bottleneck edge in order to find the best feasible bottleneck edge, i.e., such that bottleneck edges with higher weights are avoided.

Therefore, given graph G , the bottleneck edge between \(u \in V\) and \(v \in V\) can be identified via extracting the minimax edge between them. With \(P_{u,v}\) denoting the set of all possible paths from u to v over G , the bottleneck weight (incurred by the bottleneck edge) can be computed by

The quantity in Eq. 1 satisfies the (ultra) metric properties under some basic assumptions on the edge weights such as symmetry and nonnegativity. Hence, it is sometimes used as a proper distance measure to extract manifolds and elongated clusters in a non-parametric way (Haghir Chehreghani, 2020 ; Kim & Choi, 2007 ).

However, in our setting, such conditions do not need to be fulfilled by the edge weights. In general, we tolerate positive as well as negative edge weights, and we assume the graph might be directed, i.e., the edge weights are not necessarily symmetric. Therefore, despite the absence of (ultra) metric properties, the concept of minimax edges is still relevant for bottleneck identification.

To compute the minimax edge, one does not need to investigate all possible paths between the source and target nodes, which might be computationally infeasible. As studied by Hu ( 1961 ), minimax edges and paths over an arbitrary undirected graph are equal to the minimax edges over any minimum spanning tree (MST) computed over that graph. This equivalence simplifies the calculation of minimax edges, as there is only one path between every two vertices over an MST, whose maximal edge weight yields the minimax edge, i.e., the desired bottleneck.

For directed graphs, an MST might not represent the minimax edges in a straightforward manner. Hence, we instead rely on a modification (Berman & Handler, 1987 ) of Dijkstra’s algorithm (Dijkstra, 1959 ) to extract minimax paths rather than the shortest paths.

2.2 Probabilistic model for bottleneck identification

In this paper, we study bottleneck identification in uncertain and stochastic settings. Therefore, instead of considering the weights \(w_e\) for \(e \in E\) to be fixed, we view them as stochastic with fixed, albeit unknown, distribution parameters. Additionally, we assume that the weight of each edge follows a Gaussian distribution with known and finite variance. The Gaussian edge weight assumption is common for many important problem settings, like minimization of travel time (Seshadri & Srinivasan, 2010 ) or energy consumption (Åkerblom et al., 2020 ) in road networks. Furthermore, we assume that all edge weights are mutually independent. Hence,

where \(\theta _e^*\) denotes the unknown mean of edge e , and \(\sigma _e^2\) is the known variance. To reduce cumbersome notation in the proofs, since the variance is assumed to be finite, we let \(\sigma _e^2 \le 1\) (by scaling the edge weight distributions). However, we emphasize that we do not assume that \(w_e\) and \(\theta _e^*\) are bounded or non-negative.

It is convenient to be able to make use of prior knowledge in online learning problems where the action space is large, which motivates a Bayesian approach where we assume that the unknown mean \(\theta ^*_e\) is sampled from a known prior distribution:

We use a Gaussian prior for \(\theta _e^*\) since it is conjugate to the Gaussian likelihood and allows for efficient recursive updates of posterior parameters upon a new weight observation \(w_{e,t}\) at time t :

Since our long-term objective is to find a path which minimizes the expected maximum edge weight along that path, we need a framework to sequentially select paths to update these parameters and learn enough information about the edge weight distributions.

The assumptions in this section might seem restrictive, and indeed, when the edge weights represent e.g., traffic congestion in a road network, it is reasonable to believe that edges are not independent, especially for neighboring road segments. There are ways of extending this setting to capture such dependencies, while retaining similar regret guarantees for the studied methods. Such extensions include the contextual setting, where expected edge weights are assumed to follow parameterized functions of contextual features (e.g., time-of-day, local ambient temperature, precipitation) revealed to the agent in each time step, before each action is taken. We leave such extensions to future work, though we note that the proofs in this work may be extended in a straightforward manner, analogous to the analysis of linear contextual Thompson Sampling by Russo and Van Roy ( 2014 ). Similarly, Thompson Sampling may be extended to the case where both the mean and variance are unknown, by assignment of a joint prior distribution over the parameters (Riquelme et al., 2018 ).

3 Online bottleneck learning framework

Consider a stochastic combinatorial semi-bandit problem (Cesa-Bianchi & Lugosi, 2012 ) with time horizon T , formulated as a problem of cost minimization rather than reward maximization. There is a set of base arms \(\mathcal {A}\) (where we let \(d := \vert \mathcal {A} \vert\) ) from which we may, at each time step \(t \in [T]\) , select a subset (or super arm ) \(\varvec{a}_t \subseteq \mathcal {A}\) . The selection is further restricted such that \(\varvec{a}_t \in \mathcal {I} \subseteq 2^\mathcal {A}\) , where \(\mathcal {I}\) is called the set of feasible super arms.

Upon selection of \(\varvec{a}_t\) , the environment reveals a feedback \(X_{i,t}\) drawn from some fixed and unknown distribution for each base arm \(i \in \varvec{a}_t\) (i.e., semi-bandit feedback). Furthermore, we then receive a super arm cost from the environment, \(c(\varvec{a}_t) := \max _{i \in \varvec{a}_t} X_{i,t}\) , i.e., the maximum of all base arm feedback for the selected super arm and the current time step. The objective is to select super arms \(\varvec{a}_t\) to minimize \(\mathbb {E}\left[ \sum _{t=1}^{T} c(\varvec{a}_t)\right]\) . This objective is typically reformulated as an equivalent regret minimization problem, where the (expected) regret is defined as

To connect this to the probabilistic bottleneck identification model introduced in the previous section, we let each edge \(e \in E\) in the graph G correspond to exactly one base arm \(i \in \mathcal {A}\) . For the online minimax path problem, the feasible set of super arms is then the set of all admissible paths in the graph, where the paths are directed or undirected depending on the type of graph. The feedback of each base arm i is simply the Gaussian weight of the matching edge e , with known variance \(\sigma _i^2\) and unknown mean \(\theta ^*_i\) .

We denote the expected cost of a super arm \(f_{\varvec{\theta }}(\varvec{a})\) , where \(\varvec{\theta }\) is a mean vector and \(f_{\varvec{\theta }}(\varvec{a}) := \mathbb {E}\left[ \max _{i \in \varvec{a}} C_i\right]\) such that \(C_i \sim \mathcal {N}(\theta _i, \sigma _i^2)\) . For Bayesian bandit settings and algorithms, it is common to consider the notion of Bayesian regret , with an additional expectation over problem instances drawn from the prior distribution (where we denote the prior distribution \(\lambda\) , over mean vectors \(\varvec{\theta }^*\) ):

3.1 Thompson sampling with exact objective

It is not sufficient to find the super arm \(\varvec{a}\) which minimizes \(f_{\varvec{\mu }_t}(\varvec{a})\) in each time step t , since a strategy which is greedy with respect to possibly imperfect current cost estimates may converge to a sub-optimal super arm. Thompson Sampling is one of several methods developed to address the trade-off between exploration and exploitation in stochastic online learning problems. It has been shown to exhibit good performance in many formulations, e.g., linear contextual bandits and combinatorial semi-bandits.

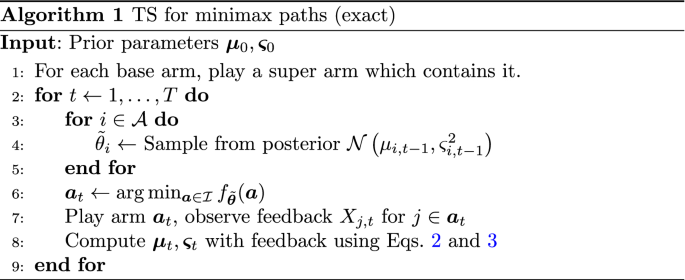

The steps performed in each time step t by Thompson Sampling, adapted to our setting, are described in Algorithm 1 . First, a mean vector \(\tilde{\varvec{\theta }}\) is sampled from the current posterior distribution (or from the prior in the first time step). Then, an arm \(\varvec{a}_t\) is selected which minimizes the expected cost \(f_{\tilde{\varvec{\theta }}} (\varvec{a}_t)\) with respect to the sampled mean vector. These first two steps are equivalent to selecting the arm according to the posterior probability of it being optimal. In combinatorial semi-bandit problems, the method of finding the best super arm according to the sampled parameters is often called an oracle .

When the super arm \(\varvec{a}_t\) is played, the environment reveals the feedback \(X_{i,t}\) if and only if \(i \in \varvec{a}_t\) , which is a property called semi-bandit feedback . Finally, these observations are used to update the posterior distribution parameters.

3.2 Regret analysis of Thompson sampling for minimax paths

We use the technique to analyze the Bayesian regret of Thompson Sampling for general bandit problems introduced by Russo and Van Roy ( 2014 ) and further elaborated by Slivkins ( 2019 ), carefully adapting it to our problem setting. This technique was originally devised to enable convenient conversion of existing UCB regret analyses to Thompson Sampling, but can also be applied to new TS applications. Here, we do a novel extension to combinatorial bandits with minimax super-arm cost functions, which includes establishing concentration properties for the mean estimates of the non-linear super-arm costs. In the rest of this section, we outline the most important steps of the proof of Theorem 1, leaving technical details to the supplementary material (Online Resource 1). In the analysis, for convenience, we assume that \(T \ge d\) .

The Bayesian regret of Algorithm 1 is \(\mathcal {O}(d\sqrt{T \log T})\) .

We initially define a sequence of upper and lower confidence bounds, for each time step t :

where \(\hat{\theta }_{i,t}\) is the average feedback of base arm \(i \in \mathcal {A}\) until time t , \(\hat{\varvec{\theta }}_{t}\) is the average feedback vector for all arms in \(\mathcal {A}\) , and \(N_t(i)\) is the number of times base arm \(i \in \mathcal {A}\) has been played as part of a super arm until time t .

For Algorithm 1, we have that:

This Bayesian regret decomposition is a direct application of Proposition 1 of Russo and Van Roy ( 2014 ). It utilizes the fact that given the history of selected arms and received feedback until time t , the played super arm \(\varvec{a}_t\) and the best possible super arm \(\varvec{a}^* := \arg \min _{\varvec{a} \in \mathcal {I}} f_{\varvec{\theta }^*}(\varvec{a})\) are identically distributed under Thompson Sampling. Furthermore, also given the history, \(U_t(\varvec{a})\) and \(L_t(\varvec{a})\) are deterministic functions of the super arm \(\varvec{a}\) . This enables the decomposition of the regret into terms of the expected confidence width, the expected overestimation of the super arm with least mean cost, and the expected underestimation of the selected super arm. By showing that \(f_{\varvec{\theta }^*}(\varvec{a}) \in [L_t(\varvec{a}), U_t(\varvec{a})]\) with high probability, we can bound the last two of these terms.

For any \(t \in [T]\) , we have that \(\mathbb {E}\left[ f_{\varvec{\theta }^*}(\varvec{a}_t) - U_t (\varvec{a}_t) \right] \le \frac{4d}{T}\) and \(\mathbb {E}\left[ L_t (\varvec{a}^*) - f_{\varvec{\theta }^*}(\varvec{a}^*) \right] \le \frac{4d}{T}\) .

Both terms are bounded in the same way, for which we need a few intermediary results. Focusing on the underestimation of the played super arm, we can see that:

First, in Lemma 4 , the difference between the true mean cost \(f_{\varvec{\theta }^*}(\varvec{a})\) of a super arm \(\varvec{a}\) and the corresponding estimated mean \(f_{\hat{\varvec{\theta }}}(\varvec{a})\) is bounded. The resulting upper bound is the maximum of the differences of the true and estimated means of each individual base arm feedback, such that:

For any super arm \(\varvec{a} \in \mathcal {I}\) and time step \(t \in [T]\) , we have that \(\vert f_{\varvec{\theta }^*}(\varvec{a}) - f_{\hat{\varvec{\theta }}_{t-1}}(\varvec{a}) \vert \le 2 \max _{i\in \varvec{a}} \vert \theta ^*_i - \hat{\theta }_{i,t-1} \vert\) .

This is achieved by decomposing the absolute value into a sum of the positive and negative portions of the difference, then bounding each individually. Focusing on the positive portion by assuming that \(f_{\varvec{\theta }^*}(\varvec{a}) \ge f_{\hat{\varvec{\theta }}_{t-1}}(\varvec{a})\) , and letting \(Z_i \sim \mathcal {N}(\hat{\theta }_{i,t-1}, \sigma ^2_i)\) , \(Y_i \sim \mathcal {N}(\theta ^*_{i}, \sigma ^2_i)\) , \(\delta _{i,t-1} := \theta ^*_i - \hat{\theta }_{i,t-1}\) and \(Q_i := Y_i - \delta _{i,t-1}\) , for \(i \in \varvec{a}\) , we can see that:

The negative portion is bounded in the same way, directly leading to the result of Lemma 4 . With this result, we can proceed with Lemma 3 , where we let \([x]^+ := \max (0, x)\) :

The probability in Eq. 6 is of the event that the difference between the estimated and true means of an arm i exceeds the confidence radius \(\sqrt{8 \log T / N_{t-1}(i)}\) , while Eq. 7 is the expected difference conditional on that event. We bound Eq. 6 with Lemma 5 and Eq. 7 with Lemma 6 .

\(\text {Pr}\left\{ \forall t \in [T]\; \forall i \in \mathcal {A},\;\; \vert \delta _{i,t-1} \vert \le \sqrt{\frac{8 \log T}{N_{t-1}(i)}}\right\} \ge 1 - \frac{2}{T}\) .

It is now sufficient to show that the difference \(\delta _{i,t-1}\) is small for all base arms \(i \in \mathcal {A}\) with high probability, which we accomplish using a standard concentration analysis through application of Hoeffding’s inequality and union bounds.

For any \(t \in [T]\) and \(i \in \mathcal {A}\) , we have

Though the rewards are unbounded, this expectation can be bounded by utilizing the fact that the mean of a truncated Gaussian distribution is increasing in the mean of the distribution before truncation, by Theorem 2 of Horrace ( 2015 ). We can see that:

We know that \(\delta _{i,t-1}\) is zero-mean Gaussian with variance at most one, hence \(\mathbb {E}\left[ \delta _{i,t-1} \;\;\bigg \vert \;\; \delta _{i,t-1} > 0\right] \le 1\) .

With the result from Lemma 3 , the last two terms of the regret decomposition in Lemma 2 are bounded by constants in T . Focusing on the remaining term, we just need to show that \(\sum _{t \in [T]} \mathbb {E}\left[ U_t(\varvec{a}_t) - L_t(\varvec{a}_t)\right] \le \mathcal {O} (d \sqrt{T \log T})\) to prove Theorem 1 :

We note that the final upper bound is tight up to a polylogarithmic factor, according to existing lower bounds for combinatorial semi-bandit problems (Kveton et al., 2015 ).

3.3 Thompson sampling with approximate objective

Unfortunately, exact expressions for computing the expected maximum of Gaussian random variables only exist when the variables are few. In other words, we cannot compute \(f_{\varvec{\theta }} (\varvec{a})\) exactly for a super arm \(\varvec{a}\) containing many base arms, necessitating some form of approximation approach. While it is possible to approximate \(f_{\varvec{\theta }} (\varvec{a})\) through e.g., Monte Carlo simulations, we want to be able to perform the cost minimization step using a computationally efficient oracle.

We note that, even with the capability to exactly compute \(f_{\varvec{\theta }} (\varvec{a})\) , it would not be feasible to solve the minimization problem in line 6 of Algorithm 1. The expected cost \(f_{\varvec{\theta }} (\varvec{a})\) of a super arm \(\varvec{a}\) (i.e., the expected maximum base arm feedback) depends not only on the individual expected values of the base arm feedback distributions, but also on the shape of the joint distribution of all base arms in \(\varvec{a}\) . Due to this fact, the stochastic version of the minimization problem lacks the property of optimal substructure (i.e., an optimal path does not necessarily consist of optimal sub-paths). For the deterministic version of the problem, as defined in Eq. 1 , the presence of this property enables the usage of computationally efficient dynamic programming strategies, like Dijkstra’s algorithm, which is consequently infeasible with the objective in Algorithm 1.

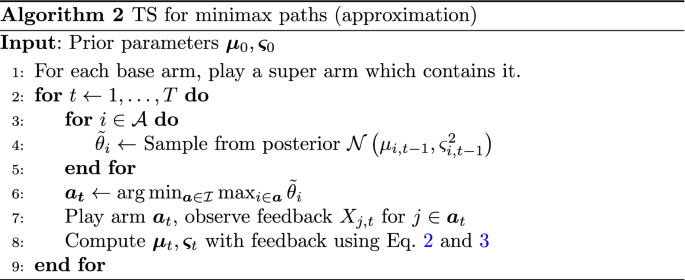

Therefore, we propose the approximation method outlined in Algorithm 2, where the minimization step of line 6 has been modified from Algorithm 1 with an alternative super arm cost function \(\tilde{f}_{\tilde{\varvec{\theta }}} (\varvec{a}) := \max _{i \in \varvec{a}} \tilde{\theta }_i\) . Switching objectives, from finding the super arm which minimizes the expected maximum base arm feedback, to instead minimize the maximum expected feedback, has the benefit of allowing us to utilize the efficient deterministic minimax path algorithms introduced earlier for both directed and undirected graphs. For directed graphs, the modified version of Dijkstra’s algorithm by Berman and Handler ( 1987 ) has a worst-case running time of \(\mathcal {O}(\vert E \vert + \vert V \vert \log \vert V \vert )\) with an efficient implementation using Fibonacci heaps (Fredman & Tarjan, 1987 ). Similarly, for undirected graphs, finding an MST (and subsequently a minimax path) can be achieved using Prim’s algorithm (Prim, 1957 ), with the same running time of \(\mathcal {O}(\vert E \vert + \vert V \vert \log \vert V \vert )\) if Fibonacci heaps are used. The other operations performed for each \(t \in [T]\) in Algorithm 2 (i.e., the posterior samples and updates) have a combined running time of, at worst, \(\mathcal {O}(\vert E \vert )\) . The same oracles are also used for the baseline algorithms evaluated in Sections 4.1 and 4.2 , with comparable running times.

It is possible to use alternative notions of regret to evaluate combinatorial bandit algorithms with approximate oracles (Chen et al., 2013 , 2016 ). For our experimental evaluation of Algorithm 2, we introduce the following definition of approximate regret:

An alternative Bayesian bandit algorithm which can be used with the alternative objective is BayesUCB (Kaufmann et al., 2012 ), which we use as a baseline for our experiments. Like Thompson Sampling, BayesUCB has been adapted to combinatorial semi-bandit settings (Nuara et al., 2018 ; Åkerblom et al., 2020 ). Whereas Thompson Sampling in Algorithm 2 encourages exploration by applying the oracle to parameters sampled from the posterior distribution, with BayesUCB, the oracle is instead applied to optimistic estimates based on the posterior distribution. In practice, this is accomplished for our cost minimization problem by using lower quantiles of the posterior distribution of each base arm. This principle of selecting plausibly optimal arms is called optimism in the face of uncertainty and is the underlying idea of all bandit algorithms based on UCB.

We note that while in BayesUCB, as outlined in Algorithm 1 of Kaufmann et al. ( 2012 ), the horizon is used to calculate UCB values, the authors of that work also explain that upper quantiles of order \(1 - 1/t\) (calculated without the horizon) achieve good results in practice. For that reason, we use lower quantiles of order 1/ t in the version of BayesUCB studied in this work, making it an anytime algorithm, like Thompson Sampling.

To connect the different objectives in Algorithm 1 and Algorithm 2, we note that by Jensen’s inequality, \(\tilde{f}_{\tilde{\varvec{\theta }}} (\varvec{a}) \le f_{\tilde{\varvec{\theta }}} (\varvec{a})\) and that the approximation objective consequently will underestimate super arm costs. However, we establish an upper bound on this difference through Theorem 7 .

Given the optimal super arm \(\varvec{a^*}\) for Algorithm 1 and the optimal super arm \(\tilde{\varvec{a}}^*\) for Algorithm 2, we have that \(f_{\varvec{\theta }^*}(\tilde{\varvec{a}}^*) - f_{\varvec{\theta }^*}(\varvec{a}^*) \le \sqrt{2 \log d}\) .

For any super arm \(\varvec{a} \in \mathcal {I}\) , let \(Y_i\) for \(i \in \varvec{a}\) be Gaussian random variables with \(Y_i \sim \mathcal {N}(\theta ^*_i, \sigma _i^2)\) . Furthermore, let \(W_i := Y_i - \theta ^*_i\) , such that \(W_i \sim \mathcal {N}(0, \sigma ^2_i)\) . Then, the following holds:

where the last inequality is due to Lemma 9 of Orabona et al. ( 2015 ) and since \(\sigma _i^2 \le 1\) for all \(i \in \varvec{a}\) . We also note that, by Jensen’s inequality, we have \(\max _{i \in \varvec{a}} \mathbb {E}\left[ Y_i\right] \le \mathbb {E}\left[ \max _{i \in \varvec{a}} Y_i\right]\) . Moreover, by definition we know that \(\varvec{a}^* = \arg \min _{\varvec{a} \in \mathcal {I}} \mathbb {E}\left[ \max _{i \in \varvec{a}} Y_i\right]\) and \(\tilde{\varvec{a}}^* = \arg \min _{\varvec{a} \in \mathcal {I}} \max _{i \in \varvec{a}} \mathbb {E}\left[ Y_i\right]\) . Consequently, we have,

Hence, we can conclude that

In other words, Theorem 7 holds and the optimal solutions of the exact Algorithm 1 and the approximate Algorithm 2 differ by at most \(\sqrt{2 \log d}\) . This bound is independent of the mean vector \(\varvec{\theta }^*\) , depending only on the number of base arms and that the variance is bounded.

4 Experimental results

In this section, we conduct bottleneck identification experiments using Algorithm 2 for two real-world applications, i) road (transport) networks, and ii) collaboration (social) networks. These experiments are performed with an extended version of the simulation framework by Russo et al. ( 2018 ) and evaluated using our approximate definition of regret. In addition, we compare Algorithm 1 to Algorithm 2 through a toy example.

4.1 Road networks

A bottleneck in a network is a segment of a path in the network that obstructs or stops flow. Identification of bottlenecks in a road network is a vital tool for traffic planners to analyze the network and prevent congestion. In this application, our goal is to find the bottleneck between a source and a target, i.e., a road segment which is necessary to pass and also has minimal traffic flow. In the road network model, we let the nodes represent intersections and the directed edges represent road segments, with travel time divided by distance (seconds per meter) as edge weights. The bottleneck between a pair of intersections is the minimum bottleneck over all paths connecting them, where the bottleneck for each of these paths is the largest weight over all road segments along it. Note that in order for the bottleneck between a pair of intersections to have a meaning, there needs to exist at least one path connecting them.

We collect road networks of four cities, shown in Table 1 , from OpenStreetMap contributors ( 2017 ), where the average travel time as well as the distance is provided for each (directed) edge. We simulate an environment with the stochastic edge weights sampled from \(w_e \sim \mathcal {N}(\theta _e^*, \sigma _e^2)\) , where the observation noise is \(\sigma _e = 0.4\) . For the experiments, the environment samples the true unknown mean \(\theta _e^*\) from the known prior \(\theta _e^* \sim \mathcal {N}(\mu _{e,0}, {\varsigma _{e,0}}^2)\) , where \(\varsigma _{e,0} = 0.4 \text {s/m}\) , and \(\mu _{e,0}\) is the average travel time divided by distance provided by OpenStreetMap (OSM).

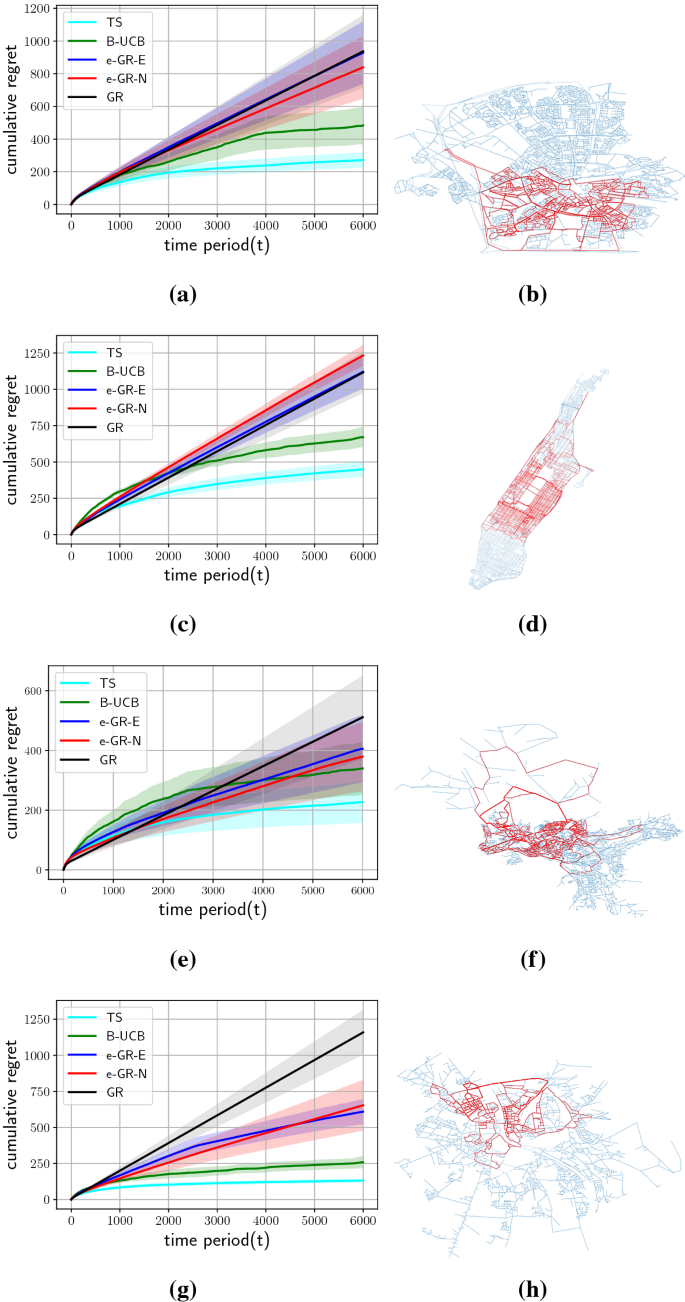

We consider one greedy agent (GR) and two \(\epsilon _t\) -greedy agents (e-GR) as baselines. The greedy agent (GR) always chooses the path with the lowest current estimate of expected cost. In each time step, each e-GR agent, with probability \(\epsilon _t\) decreasing with t (specifically, we let \(\epsilon _t = \min (1, 1/\sqrt{t})\) ), chooses a random path, and acts like the greedy agent otherwise. In our experiments, we implement the two e-GR agents based on the combinatorial version of \(\epsilon _t\) -greedy introduced in Algorithm 1 in the Supplementary Material of Chen et al. ( 2013 ). The first e-GR agent chooses a path between the source and the target containing a uniformly chosen random node (e-GR-N), and the second e-GR agent chooses a path with a uniformly selected random edge (e-GR-E). We evaluate how the performance of the Thompson Sampling agent (TS) and the BayesUCB agent (B-UCB) compare to the baselines. We run the simulations with all five agents for each road network and report the cumulative regret at a given horizon T , averaged over five repetitions. The horizon is chosen such that the instant regret is almost stabilized for the agents.

Table 2 shows the average cumulative regrets and their corresponding standard error over five runs at the horizon T . For all four road networks, the TS agent incurs the lowest average cumulative regret and standard error over five runs. Then, B-UCB follows TS and yields a better result than the baselines (GR and both e-GR variants).

Cumulative regret averaged over 5 runs with shaded standard error bars, for Thompson Sampling (TS), Bayes UCB (B-UCB), \(\epsilon _t\) -greedy agents (e-GR-N and e-GR-E), and greedy (GR) with horizon \(T= 6000\) , on Eindhoven ( a ), Manhattan ( c ), Oslo ( e ) and Salzburg ( g ) road networks. Visualizations of the paths explored by the TS agent are shown in red, for Eindhoven ( b ), Manhattan ( d ), Oslo ( f ) and Salzburg ( h ) road networks. Opacity illustrates the exploration of each of the road segments.

Figure 1 illustrates the average cumulative regret with standard error (SE) bars on the road networks of the four aforementioned cities. For Eindhoven, Figure 1 a shows the average cumulative regret, where at horizon \(T=6000\) the TS agent yields the lowest cumulative regret. Then, B-UCB follows TS and achieves a better result compared to the other baselines. As time progresses, we can see that first TS and then B-UCB start saturating by performing sufficient exploration. With respect to the SE bars, there are differences between the five agents. The TS agent has the smallest SE bars. Figure 1 b visualizes the Eindhoven road network, where the paths explored by the TS agent are shown in red. The road segments explored (tried) more often by the TS agent are displayed more opaque. Figure 1 c, e, and g show the average cumulative regret with SE bars for Manhattan, Oslo, and Salzburg, respectively. The results show that TS incurs the lowest cumulative regret and smallest SE bars. Then, B-UCB follows TS in both aspects and obtains a better result than the other baselines.

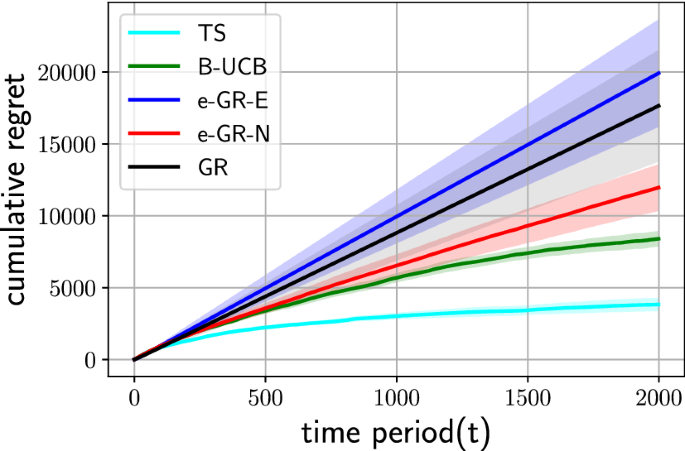

Cumulative regret averaged over 5 runs with shaded standard error bars, for Thompson Sampling (TS), Bayes UCB (B-UCB), \(\epsilon _t\) -greedy agnets (e-GR-N and e-GR-E), and greedy (GR) with horizon \(T= 2000\) for the collaboration network.

4.2 Collaboration network

We consider a collaboration network from computational geometry (Geom) (Jones, 2002 ) as an application of our approach to social networks. More specifically, we use the version provided by Handcock et al. ( 2003 ) and distributed among the Pajek datasets (Batagelj et al., 2006 ) where certain author duplicates, occurring in minor or major name variations, have been merged. The Handcock et al. ( 2003 ) version is based on the BibTeX bibliography (Beebe, 2002 ), to which the database from Jones ( 2002 ) has been exported. The network has 9072 vertices representing the authors and 22577 edges with the edge weights representing the number of mutual works between a pair of authors.

We simulate an environment where each edge weight is sampled as \(w_e \sim \mathcal {N}(\theta _e^*, \sigma _e^2)\) , within which \(\theta _e^*\) is regarded as the true (negative) mean number of shared publications between a pair of authors linked by the edge e , and the observation noise is \(\sigma _e = 5\) . Furthermore, in this experiment, while the true negative mean number of mutual publications are assumed (by the agent) to be distributed according to the prior \(\theta _e^* \sim \mathcal {N}(\mu _{e,0}, \varsigma _{e,0}^2)\) with \(\varsigma _{e,0} = 10\) , we instead generate the mean from a wider prior \(\theta _e^* \sim \mathcal {N}(\mu _{e,0}, 20^2)\) , simulating a scenario where the prior belief of the agent is too high. The assumed mean \(\mu _{e,0}\) of the prior is however consistent with the distribution from which \(\theta _e^*\) is sampled, and is directly determined by the pairwise negative number of mutual collaborations from the dataset by Handcock et al. ( 2003 ).

Figure 2 shows the cumulative regret, averaged over five runs for the different agents with horizon \(T=2000\) , again chosen such that the regret is stabilized for all agents. One can see that the TS agent reaches the lowest cumulative regret, similar to the experimental studies on road networks.

4.3 Exact objective toy example

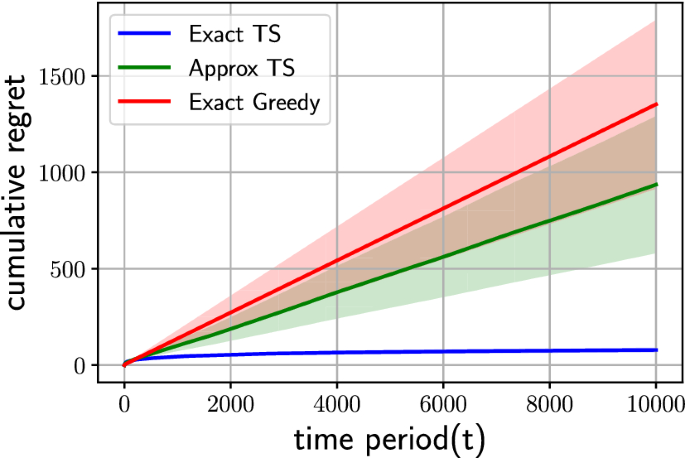

Experimental results with average cumulative regret on the toy example, with \(T=10000\) , on exact TS, approximate TS and exact greedy.

While it is not feasible to evaluate Algorithm 1 on graphs representing real-life transportation or social networks, it is possible for small synthetic graphs. We construct a graph consisting of 6 nodes and 10 edges, with the source and target nodes connected by four paths of length 2 and four paths of length 3. For each edge e , we use the sample the mean from a standard Gaussian prior, such that \(\theta _e^* \sim \mathcal {N}(0,1)\) . The stochastic weights are then generated in each time step t such that \(w_{e,t} \sim \mathcal {N}(\theta ^*_e, 1)\) .

In order to calculate the expected cost of each path, we use existing exact expressions for the expected maximum of two (Clark, 1961 ) and three (Lo, 2020 ; DasGupta, 2021 ) independent Gaussian random variables. Instead of using an oracle, we simply enumerate the paths to find the one with minimum expected cost.

In Figure 3 , we compare Algorithm 1 (TS with exact objective) and Algorithm 2 (TS with approximate objective) using the exact notion of (cumulative) regret as defined in Eq. 4 . Furthermore, we include a greedy baseline which also uses the exact objective. We use a horizon of \(T=10000\) and average the results over 20 experiments, wherein each algorithm is applied to a problem instance sampled from the prior.

We can see that the regret of exact TS quickly saturates, while approximate TS and the greedy method tend to end up in sub-optimal solutions. For approximate TS, this is to be expected since optimal arms for the exact and approximate problems may be different. It is worth noting, however, that approximate TS performs better than the exact greedy method on average.

5 Conclusion

We developed an online learning framework for bottleneck identification in networks via minimax paths. In particular, we modeled this task as a combinatorial semi-bandit problem for which we proposed a combinatorial version of Thompson Sampling. We then established an upper bound on the Bayesian regret of the Thompson Sampling method. To deal with the computational intractability of the problem, we devised an alternative problem formulation which approximates the original objective. Finally, we investigated the framework on several directed and undirected real-world networks from transport and collaboration domains. Our experimental results demonstrate its effectiveness compared to alternatives such as greedy and B-UCB methods.

Availability of data and material

Only public datasets, clearly cited in the text, are used.

Change history

19 november 2022.

Missing ESM file added to article

Agrawal, S., Goyal, N. (2012) Analysis of thompson sampling for the multi-armed bandit problem. In: Mannor, S., Srebro, N., Williamson, R.C. (eds.) Proceedings of the 25th Annual Conference on Learning Theory. Proceedings of Machine Learning Research. vol. 23, pp. 39–13926. PMLR, Edinburgh, Scotland.

Åkerblom, N., Chen, Y., Haghir Chehreghani, M. (2020) An online learning framework for energy-efficient navigation of electric vehicles. In: Proceedings of the Twenty-Ninth International Joint Conference on Artificial Intelligence (IJCAI), pp. 2051–2057. 10.24963/ijcai.2020/284

Auer, P. (2003). Using confidence bounds for exploitation-exploration trade-offs. Journal of Machine Learning Research., 3 (null), 397–422.

MATH Google Scholar

Batagelj, V., Mrvar, A. (2006) Pajek datasets. http://vlado.fmf.uni-lj.si/pub/networks/data/ . Accessed: 2021-09-08

Beebe, N.H.F. (2002) Nelson H. F. Beebe’s Bibliographies Page. http://www.math.utah.edu/~beebe/bibliographies.html . Accessed: 2021-09-08

Berman, O., & Handler, G. Y. (1987). Optimal minimax path of a single service unit on a network to nonservice destinations. Transportation Science, 21 (2), 115–122. https://doi.org/10.1287/trsc.21.2.115

Article MATH Google Scholar

Cesa-Bianchi, N., & Lugosi, G. (2012). Combinatorial bandits. Journal of Computer and System Sciences, 78 (5), 1404–1422. https://doi.org/10.1016/j.jcss.2012.01.001.JCSS

Chapelle, O., & Li, L. (2011). An empirical evaluation of thompson sampling. In J. Shawe-Taylor, R. Zemel, P. Bartlett, F. Pereira, & K. Q. Weinberger (Eds.), Advances in neural information processing systems. London: Springer.

Google Scholar

Chen, W., Hu, W., Li, F., Li, J., Liu, Y., Lu, P. (2016) Combinatorial multi-armed bandit with general reward functions. In: Lee, D, Sugiyama, M, Luxburg, U, Guyon, I, Garnett, R (eds) Advances in Neural Information Processing Systems. p 29

Chen, W., Wang, Y., Yuan, Y. (2013) Combinatorial multi-armed bandit: General framework and applications. In: Dasgupta, S., McAllester, D. (eds.) Proceedings of the 30th International Conference on Machine Learning. Proceedings of Machine Learning Research, vol. 28, pp. 151–159. Atlanta, Georgia, USA

Clark, C. E. (1961). The greatest of a finite set of random variables. Operations Research, 9 (2), 145–162. https://doi.org/10.1287/opre.9.2.145

DasGupta, A. (2021) A Formula for the Expected Value of the Maximum of Three Independent Normals and a Sparse High Dimensional Case. https://www.stat.purdue.edu/~dasgupta/orderstat.pdf . Accessed: 2021-09-07 (n.d.)

Dijkstra, E. W. (1959). A note on two problems in connexion with graphs. Numerische mathematik, 1 (1), 269–271. https://doi.org/10.1007/bf01386390

Du, Y., Kuroki, Y., Chen, W. (2021) Combinatorial Pure Exploration with Bottleneck Reward Function. arXiv. 1048550/ARXIV.2102.12094

Fredman, M. L., & Tarjan, R. E. (1987). Fibonacci heaps and their uses in improved network optimization algorithms. Journal ACM, 34 (3), 596–615. https://doi.org/10.1145/28869.28874

Gai, Y., Krishnamachari, B., & Jain, R. (2012). Combinatorial network optimization with unknown variables: Multi-armed bandits with linear rewards and individual observations. IEEE/ACM Transactions on Networking, 20 (5), 1466–1478. https://doi.org/10.1109/TNET.2011.2181864

Article Google Scholar

Graepel, T., Candela, J.Q.n., Borchert, T., Herbrich, R. (2010) Web-scale bayesian click-through rate prediction for sponsored search advertising in microsoft’s bing search engine. In: Proceedings of the 27th International Conference on International Conference on Machine Learning. ICML’10, pp. 13–20. Omnipress, Madison, WI, USA

Haghir Chehreghani, M. (2020). Unsupervised representation learning with minimax distance measures. Machine Learning, 109 (11), 2063–2097. https://doi.org/10.1007/s10994-020-05886-4

Handcock, M.S., Hunter, D., Butts, C.T., M., G.S., Morris, M. (2003) Statnet: An R package for the Statistical Modeling of Social Networks. http://www.csde.washington.edu/statnet . Accessed: 2021-09-08

Hansen, P. (1980). Bicriterion path problems. In G. Fandel & T. Gal (Eds.), Multiple criteria decision making theory and application (pp. 109–127). Berlin: Springer.

Chapter Google Scholar

Hennessy, D. A., & Wiesenthal, D. L. (1999). Traffic congestion, driver stress, and driver aggression. Aggressive Behavior, 25 (6), 409–423. https://doi.org/10.1002/(SICI)1098-2337(1999)25:6<409::AID-AB2>3.0.CO;2-0

Horrace, W. C. (2015). Moments of the truncated normal distribution. Journal of Productivity Analysis, 43 (2), 133–138. https://doi.org/10.1007/s11123-013-0381-8

Hu, T. C. (1961). The maximum capacity route problem. Operations Research, 9 , 898–900. https://doi.org/10.1287/opre.9.6.898

Jones, B. (2002) Computational geometry database. http://jeffe.cs.illinois.edu/compgeom/biblios.html . Accessed: 2021-09-08

Kaufmann, E., Cappe, O., Garivier, A. (2012) On bayesian upper confidence bounds for bandit problems. In: Lawrence, N.D., Girolami, M. (eds.) Proceedings of the Fifteenth International Conference on Artificial Intelligence and Statistics. Proceedings of Machine Learning Research, vol. 22, pp. 592–600. La Palma, Canary Islands

Kaufmann, E., Korda, N., Munos, R. (2012) Thompson sampling: An asymptotically optimal finite-time analysis. In: Algorithmic Learning Theory - 23rd International Conference, ALT, pp. 199–213. 10.1007/978-3-642-34106-9_18

Kim, K.-H., Choi, S. (2007) Neighbor search with global geometry: A minimax message passing algorithm. In: Proceedings of the 24th International Conference on Machine Learning. ICML ’07, pp. 401–408, New York, NY, USA. 10.1145/1273496.1273547

Kveton, B., Wen, Z., Ashkan, A., Szepesvari, C. (2015) Tight Regret Bounds for Stochastic Combinatorial Semi-Bandits. In: Lebanon, G., Vishwanathan, S.V.N. (eds.) Proceedings of the Eighteenth International Conference on Artificial Intelligence and Statistics. Proceedings of Machine Learning Research, vol. 38, pp. 535–543. San Diego, California, USA

Lai, T. L., & Robbins, H. (1985). Asymptotically efficient adaptive allocation rules. Advances in Applied Mathematics, 6 (1), 4–22. https://doi.org/10.1016/0196-8858(85)90002-8

Lo, C. (2020) Improving hardware design reuse through design-space exploration. PhD thesis, University of Toronto (Canada)

Liu, K., Zhao, Q. (2012) Adaptive shortest-path routing under unknown and stochastically varying link states. In: 2012 10th International Symposium on Modeling and Optimization in Mobile, Ad Hoc and Wireless Networks (WiOpt). pp. 232–237.

Nuara, A., Trovo, F., Gatti, N., Restelli, M. (2018) A combinatorial-bandit algorithm for the online joint bid/budget optimization of pay-per-click advertising campaigns. In: Thirty-Second AAAI Conference on Artificial Intelligence. 10.1609/aaai.v32i1.11888

OpenStreetMap contributors (2017) Planet dump retrieved from. www.openstreetmap.org . Accessed: 2021-09-08

Orabona, F., Pal, D. (2015) Optimal Non-Asymptotic Lower Bound on the Minimax Regret of Learning with Expert Advice. arXiv. 1048550/ARXIV.1511.02176

Pollack, M. (1960). The maximum capacity through a network. Operations Research . https://doi.org/10.1287/opre.8.5.733

Prim, R. C. (1957). Shortest connection networks and some generalizations. The Bell System Technical Journal, 36 (6), 1389–1401. https://doi.org/10.1002/j.1538-7305.1957.tb01515.x

Riquelme, C., Tucker, G., Snoek, J. (2018) Deep bayesian bandits showdown: An empirical comparison of bayesian deep networks for thompson sampling. In: International Conference on Learning Representations.

Russo, D., & Van Roy, B. (2014). Learning to optimize via posterior sampling. Mathematics of Operations Research, 39 (4), 1221–1243. https://doi.org/10.1287/moor.2014.0650

Russo, D. J., Roy, B. V., Kazerouni, A., Osband, I., & Wen, Z. (2018). A tutorial on thompson sampling. Foundations and Trends in Machine Learning., 11 (1), 1–96. https://doi.org/10.1561/2200000070

Seshadri, R., & Srinivasan, K. K. (2010). Algorithm for determining most reliable travel time path on network with normally distributed and correlated link travel times. Transportation research record, 2196 (1), 83–92. https://doi.org/10.3141/2196-09

Shacham, N. (1992). Multicast routing of hierarchical data. In: [Conference Record] SUPERCOMM/ICC 92 Discovering a New World of Communications, pp. 1217–12213. doi: https://doi.org/10.1109/ICC.1992.268047

Slivkins, A. (2019). Introduction to multi-armed bandits. Foundations and Trends in Machine Learning, 12 (1–2), 1–286. https://doi.org/10.1561/2200000068

Thompson, W. R. (1933). On the likelihood that one unknown probability exceeds another in view of the evidence of two samples. Biometrika, 25 (3–4), 285–294. https://doi.org/10.2307/2332286

Wang, S., Chen, W. (2018) Thompson sampling for combinatorial semi-bandits. In: Dy, J., Krause, A. (eds.) Proceedings of the 35th International Conference on Machine Learning. Proceedings of Machine Learning Research. vol. 80, pp. 5114–5122.

Zou, Z., Proutiere, A., Johansson, M. (2014) Online shortest path routing: The value of information. In: 2014 American Control Conference. pp. 2142–2147. 10.1109/ACC.2014.6859133

Download references

Acknowledgements

This work is partially funded by the Strategic Vehicle Research and Innovation Programme (FFI) of Sweden, through the project EENE (reference number: 2018-01937). We want to thank the reviewers for their helpful comments and suggestions. We also want to thank Emilio Jorge, Emil Carlsson and Tobias Johansson for insightful discussions around the proofs.

Open access funding provided by Chalmers University of Technology. Niklas Åkerblom is employed by Volvo Car Corporation with funding from Sweden’s Innovation Agency (Vinnova), through the Strategic Vehicle Research and Innovation Programme (FFI) of Sweden (project EENE, reference number: 2018-01937).

Author information

Niklas Åkerblom, Fazeleh Sadat Hoseini have contributed equally to this work.

Authors and Affiliations

Chalmers University of Technology, Gothenburg, Sweden

Niklas Åkerblom, Fazeleh Sadat Hoseini & Morteza Haghir Chehreghani

Volvo Car Corporation, Gothenburg, Sweden

Niklas Åkerblom

You can also search for this author in PubMed Google Scholar

Contributions

All authors have contributed significantly to the conception, design and writing of this work. The theoretical analysis was primarily performed by Niklas Åkerblom, and the experimental study was primarily performed by Fazeleh Sadat Hoseini.

Corresponding authors

Correspondence to Niklas Åkerblom or Fazeleh Sadat Hoseini .

Ethics declarations

Conflict of interest.

There is no conflict of interest.

Ethics approval

Not applicable.

Consent to participate

Consent for publication, code availability.

Contact the authors for access to the code.

Supplementary information

The detailed proof of Theorem 1 is provided in Online Resource 1.

Additional information

Editors: Krzysztof Dembczynski and Emilie Devijver.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Below is the link to the electronic supplementary material.

Supplementary file1 (PDF 293 kb)

Rights and permissions.

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/ .

Reprints and permissions

About this article

Åkerblom, N., Hoseini, F.S. & Haghir Chehreghani, M. Online learning of network bottlenecks via minimax paths. Mach Learn 112 , 131–150 (2023). https://doi.org/10.1007/s10994-022-06270-0

Download citation

Received : 10 December 2021

Revised : 06 July 2022

Accepted : 07 October 2022

Published : 08 November 2022

Issue Date : January 2023

DOI : https://doi.org/10.1007/s10994-022-06270-0

Share this article

Anyone you share the following link with will be able to read this content:

Sorry, a shareable link is not currently available for this article.

Provided by the Springer Nature SharedIt content-sharing initiative

- Online Learning

- Combinatorial Semi-bandit

- Thompson Sampling

- Bottleneck Identification

Advertisement

- Find a journal

- Publish with us

- Track your research

Title: Uncertain bottleneck assignment problem using credibility theory

Authors : Debapriya Dey Sarkar; Shyamal Kumar Mondal; Kajla Basu

Addresses : Department of Mathematics, National Institute of Technology Durgapur, Durgapur, India ' Department of Applied Mathematics with Oceanology and Computer Programming, Vidyasagar University, Midnapore, India ' Department of Mathematics, National Institute of Technology Durgapur, Durgapur, India

Abstract : In this paper, two types of generalised bottleneck assignment problem (BGAP) namely task-BGAP and agent-BGAP have been considered with fuzzy costs, capacities and resources. In reality, most of the data are uncertain or vague in nature. The objective of this paper is to formulate and solve a more realistic model under uncertainty. A robust counterpart of these two BGAP models have been constructed using credibility measure theory to solve these optimal mini-max regret problems. Credibility theory helps the actuaries to understand the risk associated with historical data and try to reduce the losses for any organisation. So, by this approach, chance constrained programming (CCP) models have been developed. Finally, the CCP models are solved to get the optimal solution using LINGO software. The method has been illustrated using a real life application of a production factory in Section 5.

Keywords : trapezoidal fuzzy number; bottleneck assignment problem; confidence interval; credibility measure theory; robust optimisation.

DOI : 10.1504/IJMOR.2023.135549

International Journal of Mathematics in Operational Research, 2023 Vol.26 No.4, pp.502 - 522

Received: 05 Apr 2022 Accepted: 16 Oct 2022 Published online: 18 Dec 2023 *

Keep up-to-date

- Our Newsletter ( subscribe for free )

- New issue alerts

- Inderscience is a member of publishing organisations including:

IMAGES

VIDEO

COMMENTS

In this sensitivity analysis, we provide a sufficient but not necessary condition for the invariance of the optimal assignment, in the form of an interval test. n×m Let Λ ⊆ R be an n × m array of intervals over the extended reals. For each edge e ∈ E, let [−λe, λe] be the interval corresponding to edge e. Remark 1.

In this sensitivity analysis, we provide a sufficient but not necessary condition for the invariance of the optimal assignment, in the form of an interval test. Let Λ ⊆ R n × m be an n × m array of intervals over the extended reals. For each edge e ∈ E, let [ − λ e, λ e] be the interval corresponding to edge e. Remark 1.

We discuss bottleneck (or minimax) versions of the generalized assignment problem. The basic problem involves the assignment of a number of jobs to a number of agents such that each job is performed by a unique agent, and capacity limitations on the agents are not exceeded.

For the bottleneck assignment problem there are many algorithms which provide solution in polynomial time, reviewed in Pentico (2007) and Pferschy (1997). ... If the uncertainty is known a priori a minimax approach may be used, identifying an assignment which minimises the assignment cost for the worst case realisation of the uncertainty ...

We discuss bottleneck (or minimax) versions of the generalized assignment problem. The basic problem involves the assignment of a number of jobs to a number of agents such that each job is performed by a unique agent, and capacity limitations on the agents are not exceeded. Two versions of the bottleneck generalized problem (BGAP) are defined.

A minimax constraint eliminates edges in the network, and can improve the worst-case time bound for the performance of the minimum cost flow algorithm, that is, a better match from a practical perspective may take less time to construct. ... the bottleneck assignment problem, whose sole objective is to minimize the maximum within-pair ...

bottleneck identification as the problem of computing the minimax edge over the given network/graph, to obtain an edge with a minimal largest gap between the source and target nodes. Equivalently, it can be formulated as a widest path problem or maximum capacity path problem [35] where the edge weights have been negated.

In this paper, a new approach for solving the bottleneck assignment problem is presented. The problem is treated as a special class of permutation problems which we call max-min permutation problems. By defining a suitable neighborhood system in the space of permutations and designating certain permutations as critical solutions, it is shown ...

Keywords: Bottleneck problem, minimax, maxmin, linear programming problem. 1.1 Introduction The bottleneck assignment problem is an interesting problem in combinatorial optimization. It has many variants and is as well a variant of the assignment problem. In gene ral, the problem is defined based on a

Let T * = Min T . Step 3 Solve the linear sum assignment problem with the matrix [cij] and let Z+be the optimal objective value and To be the Downloaded by [Peerayuth Charnsethikul] at 06:50 15 April 2013 maximal ti, for the set of assignments with Z'. Step 4 If To= T * and Z * 5 B then the solution found in Step 3 is optimal and stop else if Z ...

In this paper the linear bottleneck assignment problem and the linear sum assignment problem with interval costs are examined. In order to calculate the optimal solution to these problems one of the robust criteria, called the minimax regret, is adopted. It is shown that the robust linear bottleneck assignment problem can be solved in polynomial time while the robust linear sum assignment ...

The absolute regret (regret) is used to evaluate a solution which gives the minmax regret binary optimization problem and the evolutionary heuristic solution algorithm is experimentally compared with a simple middle interval heuristic algorithm for three machines instances. Expand. 9. PDF.

minimal. Thus, one may model bottleneck identification as the problem of computing the minimax edge over the given network/graph, to obtain an edge with a minimal largest gap between the source and target nodes. Equivalently, it can be formulated as a widest path problem or maximum capacity path problem (Pollack 1960) where the edge weights have

In this paper, a new approach for solving the bottleneck assignment problem is presented. The problem is treated as a special class of permutation problems which we call max-min permutation problems. By defining a suitable neighborhood system in the space of permutations and designating certain permutations as critical solutions, it is shown that any critical solution yields a global optimum ...

We developed an online learning framework for bottleneck identification in networks via minimax paths. In particular, we modeled this task as a combinatorial semi-bandit problem for which we proposed a combinatorial version of Thompson Sampling. We then established an upper bound on the Bayesian regret of the Thompson Sampling method.

Abstract. We discuss bottleneck (or minimax) versions of the generalized assignment problem. The basic problem involves the assignment of a number of jobs to a number of agents such that each job is performed by a unique agent, and capacity limitations on the agents are not exceeded. Two versions of the bottleneck generalized assignment problem ...

The TBGAP algorithm is tested on a set of test problems drawn from the GAP literature. The computational results suggest that the algorithm is extremely effective in solving TBGAP problems to optimality. Abstracte discuss a bottleneck (or minimax) version of the generalized assignment problem, known as the task bottleneck generalized assignment ...

DOI: 10.1016/j.cor.2004.08.004 Corpus ID: 12001782; Solving the minmax product rate variation problem (PRVP) as a bottleneck assignment problem @article{Palli2006SolvingTM, title={Solving the minmax product rate variation problem (PRVP) as a bottleneck assignment problem}, author={Natalia Moreno Palli and Albert Corominas}, journal={Comput.

Abstract: In this paper, two types of generalised bottleneck assignment problem (BGAP) namely task-BGAP and agent-BGAP have been considered with fuzzy costs, capacities and resources. In reality, most of the data are uncertain or vague in nature. The objective of this paper is to formulate and solve a more realistic model under uncertainty.

As with many similar problems, the bottleneck problem is symmetrical. In fact, you can talk of two different problems:. Find a path that has its shortest edge as long as possible; Find a path that has its longest edge as short as possible; The algorithm for both versions is the same, except that you reverse all weight relations (change max-heap to min-heap or vice-versa, change the sign of ...

In this paper, we consider the minmax product rate variation problem (PRVP), which consists in sequencing copies of different products on an assembly line in such a way that the maximum value of a discrepancy function between actual and ideal productions is minimum. One means of solving this problem lies in its reduction to a bottleneck assignment problem with a matrix of a special structure.

In combinatorial optimization, a field within mathematics, the linear bottleneck assignment problem (LBAP) is similar to the linear assignment problem.. In plain words the problem is stated as follows: There are a number of agents and a number of tasks.Any agent can be assigned to perform any task, incurring some cost that may vary depending on the agent-task assignment.

tleneck is minimal. Thus, one may model bottleneck identi-fication as the problem of computing the minimax edge over the given network/graph, to obtain an edge with a minimal largest gap between the source and target nodes. Equiva-lently, it can be formulated as a widest path problem or max-imum capacity path problem (Pollack 1960) where the edge