15 Best Academic Networking and Collaboration Platforms


Want to break free from scholarly isolation? Uncover the best academic networking and collaboration platforms to transform your research journey.

Have you ever found yourself submerged in heaps of academic papers, isolated in the pursuit of a research question that burns within your curious mind? Although occasionally necessary, the seclusion of academic research can stifle innovation and create echo chambers.

The antidote lies in robust academic networking and collaboration – the highway to diverse knowledge exchange.


Academic networking and collaboration platforms are a global gathering of brilliant minds convening to discuss, debate, share, and collaborate in a virtual world.

These platforms are fertile grounds that nurture academic growth, streamline collaboration, and enhance scholarly visibility. They offer diverse benefits, from tracking citations and managing references to facilitating interdisciplinary conversations.

Let’s embark on an enlightening journey through some of the top networking and collaboration platforms.

Best Academic Networking and Collaboration Platforms

#1. Academia.edu – Best for Sharing Research Papers

- Global hub connecting millions of researchers

- Streamlines the process of sharing papers

- Keeps you abreast of state-of-the-art research in your field

Academia.edu is a bustling online city square solely dedicated to the academic world. This platform provides an extensive environment for scholars to share papers, receive feedback, and stay updated with the latest research in their areas of interest.

What are the benefits of Academia.edu?

- Direct Communication: Academia.edu offers a platform to directly connect with researchers around the world, facilitating communication and collaboration.

- Paper Sharing and Discoverability: Users can share their own academic papers and discover those written by others, enhancing exposure and learning.

- Analytics: Provides detailed statistics on who is reading and citing your work, thus helping to track the impact of your research.

If your research papers crave a global platform and you’re keen on networking with academicians, Academia.edu has a lot to offer. However, the platform’s push towards premium memberships might be a concern for some.

How much does it cost?

- Premium: $9 per month

Source: https://www.academia.edu/

#2. Google Scholar – Best for Broad Literature Search and Citation Tracking

- Comprehensive database for literature search

- Efficient citation tracking system

- Authoritative profile management

Google Scholar operates like a relentless detective in the realm of academia. With its wide reach, it helps you navigate the vast sea of literature and keeps a sharp eye on who cites your work.

This platform ensures you’re not just studying, but also effectively weaving your research into the global academic network.

What are the benefits of Google Scholar?

- Broad Scope: Google Scholar gives access to a vast array of academic articles, theses, books, conference papers, and patents across many subject areas.

- Citation Tracking: This platform makes it easy to track citations to articles, which helps measure the impact of research.

- Personal Scholar Profiles: Users can create profiles displaying their publications, facilitating professional visibility.

Google Scholar is a formidable tool for literature search and citation tracking. But, if you’re seeking a platform that offers active networking or community building, you may need to explore further.

Source: https://scholar.google.com/

#3. LinkedIn – Best for Professional Networking Across All Fields

- Premier platform for professional networking

- Provides job listings and career opportunities

- Facilitates industry-academia interactions

Like an interactive professional directory on steroids, LinkedIn goes beyond traditional networking. It bridges the gap between academia and industry, fostering connections and conversations that could ignite your next career-defining opportunity.

What are the benefits of LinkedIn?

- Professional Networking: Allows for networking with professionals not only from academia but also from various industries.

- Job Market Insight: This platform provides information about job opportunities, trends, and professional development resources.

- Group Discussions: LinkedIn enables engagement in professional group discussions, offering space to share insights and gain knowledge from peers.

LinkedIn is your go-to if you’re seeking to extend your network beyond academia. While it might not be the primary choice for academic research, it’s an invaluable platform for career development and industry insights.

How much does it cost?

- Premium: from $39.99 to $149.99 per month

Source: https://www.techtarget.com/

#4. Mendeley – Best for Reference Management and Discovery of New Research

- Robust tool for reference management

- Curates personalized research recommendations

- Facilitates collaborative work on papers

Mendeley is like the diligent research assistant you always needed. This tool handles your references with deftness, suggests new research tailored to your interests, and allows you to collaborate on papers with your team.

What are the benefits of Mendeley?

- Reference Management: This tool helps to organize, read, annotate, and cite literature effectively, easing the research process.

- Collaborative Work: Mendeley enables sharing and collaborating on documents with others privately or in public groups.

- Research Network: It also connects with a global research community, providing updates from the fields of interest.

Mendeley is a handy tool for handling references and discovering new research. However, its networking capabilities are limited compared to other platforms.

How much does it cost?

- Basic: $4.99 per month

- Pro: $9.99 per month

- Max: $14.99 per month

Source: https://www.mendeley.com

#5. ResearchGate – Best for Interdisciplinary Networking and Collaboration

- Dedicated platform for researchers across disciplines

- Helps share and discover scholarly content

- Fosters collaboration and discussion among peers

ResearchGate is a cross-disciplinary academic hub, buzzing with intellectual dialogue, paper sharing, and collaboration. The platform stands out by facilitating academic networking across disciplines, fostering a rich scholarly exchange.

What are the benefits of ResearchGate?

- Collaborative Projects: ResearchGate enables sharing and following of research projects, helping foster collaborations.

- Q&A Forum: A platform to ask research-related questions and receive answers from professionals in the field.

- Open Reviews: It also offers a space for open peer review, enhancing the transparency and rigor of research.

ResearchGate offers an excellent platform for interdisciplinary networking and collaboration. However, its utility may be limited if you're not involved in active research or are uncomfortable with content that sits behind a membership wall.

Source: https://www.researchgate.net/

#6. ORCID – Best for Ensuring Researcher Uniqueness

- Provides unique identifiers for researchers

- Prevents identity confusion in academic work

- Allows easy tracking of individual research contributions

ORCID is like your unique academic fingerprint, ensuring your work never gets mixed up with someone else’s. It grants researchers unique identifiers, making it easier to track and attribute your academic contributions.

What are the benefits of ORCID?

- Unique Identifier: It provides a unique digital identifier that distinguishes a researcher and ensures their work is correctly attributed.

- Integration: This tool is broadly integrated with many publishers, funders, and institutions, allowing for ease of workflow.

- Record Keeping: ORCID keeps track of all professional activities (publications, grants, patents, etc.) in one centralized place, ensuring the researcher's profile is up to date.

ORCID is a valuable tool for ensuring researcher uniqueness, but it’s not a full-fledged networking platform. It’s a must-have for academics, though its importance might be underestimated by those with less common names.
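To make the "unique identifier" idea concrete, here is a minimal sketch of how an ORCID iD can be sanity-checked. An ORCID iD is 16 characters, usually written in four hyphen-separated groups, and its last character is a check character computed with the ISO 7064 MOD 11-2 algorithm. The function names `orcid_check_digit` and `is_valid_orcid` are made up for this illustration and are not part of any ORCID library. The check only confirms that an iD is well formed, not that it is registered to anyone.

```python
import re


def orcid_check_digit(base_digits: str) -> str:
    """Compute the ISO 7064 MOD 11-2 check character for the first 15 digits."""
    total = 0
    for ch in base_digits:
        total = (total + int(ch)) * 2
    result = (12 - total % 11) % 11
    # A result of 10 is written as the letter X.
    return "X" if result == 10 else str(result)


def is_valid_orcid(orcid: str) -> bool:
    """Return True if the string looks like an ORCID iD and its check character matches."""
    if not re.fullmatch(r"[0-9]{4}-[0-9]{4}-[0-9]{4}-[0-9]{3}[0-9X]", orcid):
        return False
    compact = orcid.replace("-", "")
    return orcid_check_digit(compact[:15]) == compact[15]


# 0000-0002-1825-0097 is the sample iD ORCID uses for its fictional researcher.
print(is_valid_orcid("0000-0002-1825-0097"))  # True
print(is_valid_orcid("0000-0002-1825-0098"))  # False (wrong check character)
```

A check like this catches most typos when an iD is copied by hand into a CV or a submission form.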

Source: https://orcid.org/

#7. Publons – Best for Tracking Peer Reviews and Editorships

- Tracks and validates peer review contributions

- Supports discovery of editorial opportunities

- Facilitates open recognition for reviewers

Picture a stage where your often unnoticed work as a peer reviewer or editor gets the spotlight. Publons does just that, giving recognition to your behind-the-scenes contributions to the scholarly world.

What are the benefits of Publons?

- Recognition of Review Work: Provides credit for peer review and editorial work which is often overlooked in academia.

- Review History: Maintains a verifiable record of a researcher’s contribution to peer review and editorial work.

- Training: Offers resources and training for peer reviewing, improving review quality and skills.

Publons excels at recognizing the often-invisible labor of peer review and editorship. It may have limited scope for broader academic networking, but its unique focus makes it stand out.

Source: https://publons.com/

#8. OSF (Open Science Framework) – Best for Full Project Lifecycle Management

- Manages project lifecycle from planning to publishing

- Supports collaboration and data sharing

- Champions the cause of open science

OSF is like your research’s trustworthy custodian, guiding it from its infancy (planning) to maturity (publishing). Besides collaboration, it offers comprehensive tools to manage your project’s life cycle while championing the cause of open science.

What are the benefits of OSF?

- Project Management: Assists in managing projects with a suite of collaborative tools, aiding the organization of research.

- Open Science: Promotes transparency and reproducibility by enabling public sharing of datasets, protocols, and research outputs.

- Cross-Platform Integration: Supports integration with many other tools like GitHub, Google Drive, and Mendeley.

For academics seeking a comprehensive platform to manage research projects from start to finish, OSF is an excellent tool. Its learning curve may be a hurdle, but the benefits it offers for project management and open science make it worth the effort.

Source: https://osf.io/

#9. GitHub – Best for Collaborative Coding and Version Control

- Ideal for coding collaborations and version control

- Provides open-source platforms for various projects

- Hosts a vibrant community of developers

GitHub is like a beehive for coding enthusiasts. The platform thrives with collaborative projects, offering unparalleled version control. It’s a dynamic platform where coders and researchers converge to build and refine code.

What are the benefits of GitHub?

- Version Control: GitHub offers a robust version control system, facilitating collaborative coding and data analysis projects.

- Repository: This tool allows the hosting of programming projects and open access to code, promoting open-source development.

- Community: A large community of users contributes to learning, problem-solving, and the improvement of code.

If your research involves coding, GitHub is a must-have tool. While it might be intimidating for beginners, the benefits it offers for collaborative coding and version control are unmatched.

How much does it cost?

- Starts from $4 per user

Source: https://github.com/

#10. StackExchange (Academia) – Best for Question-and-Answer Format Discussions Related to Academia

- Q&A platform specifically for academia

- Facilitates sharing of knowledge and advice

- Hosts a diverse community of researchers and academics

StackExchange (Academia) is like your reliable academic counsel. This Q&A platform fuels robust discussions on academic issues, connecting you with a community eager to share knowledge and insights.

What are the benefits of StackExchange (Academia)?

- Expertise: Provides a forum for asking and answering questions related to academic life, drawing from a wide pool of experiences and expertise.

- Categorized Discussions: Allows for categorization of discussions by topics, making it easier to find relevant information.

- Reputation Points: Users earn reputation points for quality questions and answers, encouraging a high standard of contributions.

StackExchange (Academia) shines as a Q&A platform specifically for academic discussions. While it’s not suitable for document sharing or formal networking, it’s an excellent resource for tapping into collective academic wisdom.

Source: https://academia.stackexchange.com/

#11. Reddit – Best for Informal Academic Discussions and Advice

- Platform for informal academic discussions

- Wide variety of topic-specific communities

- Enables anonymity in conversation

Reddit is a bustling online tavern where academic conversations flow as freely as the drinks. With its countless communities and casual tone, it opens up a world of informal academic discussions and advice.

What are the benefits of Reddit?

- Subreddit Communities: Offers numerous academic subreddits, enabling discussion and advice on niche topics.

- Anonymous Interaction: Allows users to maintain anonymity, encouraging open and honest discussion.

- Global Perspectives: Provides a platform to interact with a diverse, worldwide user base, broadening perspectives on various topics.

Reddit is a treasure trove for those seeking informal academic discussions. While it’s not ideal for formal networking, the depth and breadth of its communities make it a unique platform for candid conversations.

Source: https://www.redditinc.com/

#12. Quora – Best for Receiving Expert Answers and Sharing Knowledge

- Q&A platform with diverse range of topics

- Hosts experts across various fields

- Great for knowledge sharing and learning

Quora is like a dynamic global seminar, buzzing with questions and brimming with expert answers. This social networking site is an invaluable platform for sharing your knowledge and quenching your thirst for insights from various fields.

What are the benefits of Quora?

- Diverse Topics: Covers a wide range of topics, enabling users to ask and answer questions on virtually any subject.

- Expert Answers: Often features responses from industry and academic experts, providing authoritative answers.

- Personalized Feed: Users can follow topics of interest to customize their feed, staying updated on their preferred subjects.

Quora is a versatile platform for knowledge sharing and gaining expert insights. While it may not be a traditional academic networking tool, its strength lies in the diversity of its topics and the expertise of its contributors.

Source: https://www.quora.com/

#13. Facebook – Best for Building Community and Networking in Field-Specific Groups

- Popular platform for building communities

- Houses a multitude of field-specific groups

- Allows for event organization and announcement sharing

Facebook might be known for connecting friends and families, but it’s also a melting pot for academic networking. With its diverse field-specific groups and community-building features, it’s a trove of academic possibilities.

What are the benefits of Facebook?

- Social Networking: Connects researchers on a social level, enabling informal discussions and relationship building.

- Academic Groups: Hosts numerous academic and research-focused groups for collaboration, discussion, and sharing of resources.

- Events: Provides a platform for promoting and discovering academic events, lectures, and webinars.

Facebook is one of the most useful social networking sites for academic networking due to its wide reach and diverse groups. However, it may not cater to every academic need and its data handling practices might give privacy-conscious users pause.

Source: https://edu.gcfglobal.org/

#14. Twitter – Best for Sharing Quick Research Updates and Engaging in Academic Discussions

- Microblogging social media platform ideal for quick updates

- Connects academics and researchers globally

- Hashtag system enables focused conversations

Twitter is the academic equivalent of the town crier, broadcasting research updates in quick, digestible bites. Its global reach and hashtag system offer unique ways to engage with the academic community.

What are the benefits of Twitter?

- Real-Time Updates: Provides real-time updates from conferences, symposia, and fellow academics, keeping users up to date.

- Networking: Enables networking with a broad audience, allowing for the sharing and promotion of research.

- Hashtag Use: Allows for the organization of content using hashtags, aiding discoverability of research topics.

If brevity is your thing, Twitter excels at disseminating quick research updates. Its potential for distractions and information overload should be considered, but its wide reach and hashtag-driven discussions can be invaluable. Therefore, Twitter is one of the best academic social networks.

Source: https://about.twitter.com/

#15. Scopus – Best for Abstract and Citation Searching

- Specializes in abstract and citation searching

- Houses extensive database of research literature

- Provides analytical tools to track citation impact

Scopus is akin to a scholarly lighthouse, guiding researchers through the dense sea of abstracts and citations. Its expansive database and analytical tools make it a valuable asset for any academic.

What are the benefits of Scopus?

- Comprehensive Database: Offers access to a large database of peer-reviewed literature from various fields.

- Analytic Tools: Provides various tools for analyzing research output and trends, supporting academic decision-making.

- Author Profiles: This tool facilitates the tracking of an author’s work and citation impact, aiding reputation management.

Scopus is a powerhouse for abstract and citation searching, though it doesn’t offer much in the way of networking or collaboration. While it is a subscription-based service, the depth and breadth of its tools and database can justify the cost for many researchers.

How much does it cost?

- Custom price

Source: https://www.scopus.com/

Academic networking and collaboration platforms are the lifeblood of today’s scholarly community. They offer many opportunities for sharing research, connecting with fellow academics, and advancing your academic career.

Whether you are looking for strictly academic platforms or social media platforms, the options in this blog post provide the tools and resources to take your academic journey to new heights.



2012-2024 © scijournal.org

Download 55 million PDFs for free


Join 257 million academics and researchers

Track your impact.

Share your work with other academics, grow your audience and track your impact on your field with our robust analytics

Discover new research

Get access to millions of research papers and stay informed with the important topics around the world

Publish your work

Publish your research with fast and rigorous service through Academia.edu Publishing. Get instant worldwide dissemination of your work

Unlock the most powerful tools with Academia Premium

Work faster and smarter with advanced research discovery tools

Search the full text and citations of our millions of papers. Download groups of related papers to jumpstart your research. Save time with detailed summaries and search alerts.

- Advanced Search

- PDF Packages of 37 papers

- Summaries and Search Alerts

Share your work, track your impact, and grow your audience

Get notified when other academics mention you or cite your papers. Track your impact with in-depth analytics and network with members of your field.

- Mentions and Citations Tracking

- Advanced Analytics

- Publishing Tools


Used by academics at over 16,000 universities


- Academia ©2024


A comprehensive bibliographic database of the world’s scholarly literature

The world’s largest collection of open access research papers

Machine access to our vast unique full text corpus

Core features

Indexing the world’s repositories

We serve the global network of repositories and journals

Comprehensive data coverage

We provide both metadata and full text access to our comprehensive collection through our APIs and Datasets

Powerful services

We create powerful services for researchers, universities, and industry

Cutting-edge solutions

We research and develop innovative data-driven and AI solutions

Committed to the POSI

Cost-free PIDs for your repository

OAI identifiers are unique identifiers minted cost-free by repositories. Ensure that your repository is correctly configured, enabling the CORE OAI Resolver to redirect your identifiers to your repository landing pages.

OAI IDs provide a cost-free option for assigning Persistent Identifiers (PIDs) to your repository records. Learn more.

Who we serve?

Enabling others to create new tools and innovate using a global comprehensive collection of research papers.


Academic institutions.

Making research more discoverable, improving metadata quality, helping to meet and monitor open access compliance.

“CORE’s role in providing a unified search of repository content is a great tool for the researcher and ex...”

Nicola Dowson, Library Services Manager at Open University

Researchers & general public.

Tools to find, discover and explore the wealth of open access research. Free for everyone, forever.

“With millions of research papers available across thousands of different systems, CORE provides an invalu...”

Jon Tennant, Rogue Paleontologist and Founder of the Open Science MOOC

Helping funders to analyse, audit and monitor open research and accelerate towards open science.


Our services

Access to raw data

Create new and innovative solutions.

Content discovery

Find relevant research and make your research more visible.

Managing content

Manage how your research content is exposed to the world.

Companies using CORE

Gareth Malcolm

Content Partner Manager at Turnitin

Our partnership with CORE will provide Turnitin with vast amounts of metadata and full texts that we can utilise in our plagiarism detection software.

Academic institution using CORE

Kathleen Shearer

Executive Director of the Confederation of Open Access Repositories (COAR)

CORE has significantly assisted the academic institutions participating in our global network with their key mission, which is their scientific content exposure. In addition, CORE has helped our content administrators to showcase the real benefits of repositories via its added value services.

Partner projects

Ben Johnson

Research Policy Adviser

Aggregation plays an increasingly essential role in maximising the long-term benefits of open access, helping to turn the promise of a 'research commons' into a reality. The aggregation services that CORE provides therefore make a very valuable contribution to the evolving open access environment in the UK.


AMiner: Search and Mining of Academic Social Networks

Department of Computer Science and Technology, Tsinghua University, Beijing 100084, China

Information School, Renmin University of China, Beijing 100872, China


Huaiyu Wan, Yutao Zhang, Jing Zhang, Jie Tang; AMiner: Search and Mining of Academic Social Networks. Data Intelligence 2019; 1 (1): 58–76. doi: https://doi.org/10.1162/dint_a_00006


AMiner is a novel online academic search and mining system, and it aims to provide a systematic modeling approach to help researchers and scientists gain a deeper understanding of the large and heterogeneous networks formed by authors, papers, conferences, journals and organizations. The system is able to extract researchers’ profiles automatically from the Web and integrates them with published papers by way of a process that first performs name disambiguation. Then a generative probabilistic model is devised to simultaneously model the different entities while providing a topic-level expertise search. In addition, AMiner offers a set of researcher-centered functions, including social influence analysis, relationship mining, collaboration recommendation, similarity analysis, and community evolution. The system has been in operation since 2006 and has been accessed from more than 8 million independent IP addresses residing in more than 200 countries and regions.

1. Introduction

A variety of academic social networking websites including Google Scholar①, Microsoft Academic②, Semantic Scholar③, ResearchGate④ and Academia.edu⑤ have gained great popularity over the past decade. The common purpose of these academic social networking systems is to provide researchers with an integrated platform to query academic information and resources, share their own achievements, and connect with other researchers.

Several issues within academic social networks have been investigated in these systems. However, most of the issues are investigated separately through independent processes. As such, there is not a congruent process or series of methods for mining the whole of disparate academic social networks. The lack of such methods can be attributed to two reasons:

- Lack of semantic-based information. User profile information that is entered by the users themselves or extracted by heuristics is sometimes incomplete or inconsistent. Users often leave personal information blank simply because they are unwilling to provide it.

- Lack of a unified modeling approach for effective mining of the social network. Traditionally, different types of information sources in the academic social network were modeled individually, so dependencies between them could not be captured. However, dependencies may exist between social data. High-quality search services need to consider the intrinsic dependencies between the different heterogeneous information sources.

AMiner⑥ [1], the second generation of the ArnetMiner system, is designed to search and perform data mining operations against academic publications on the Internet, using social network analysis to identify connections between researchers, conferences, and publications. In AMiner, our objective is to answer four questions:

- How to automatically extract the researcher profile from the existing Web?

- How to integrate the extracted information (i.e., researchers’ profiles and publications) from different sources?

- How to model the different types of information sources in a unified model?

- How to provide powered search services in a constructed network?

To answer the above questions, a series of novel approaches are implemented within the AMiner system. The overall architecture of the system is shown in Figure 1.

Figure 1. The architecture of AMiner.

The system mainly consists of five components:

- Extraction. Focuses on automatically extracting researchers’ profiles from the Web. The service first collects and identifies a researcher's relevant pages (e.g., homepages or introduction pages) from the Web, then uses a unified approach [2, 3] to extract data from the identified documents. It also extracts publications from online digital libraries using heuristic rules. In addition, a simple but very effective approach is taken for profiling Web users by leveraging the power of big data [4].

- Integration. Joins and integrates the extracted researchers’ profiles and the extracted publications. The application employs the researcher name as the identifier. A probabilistic model [5] and a comprehensive framework [6] have been developed to deal with the name ambiguity problem in the integration. The integrated data are then stored, sorted and indexed into a research network knowledge base.

- Storage and Access. Provides storage and indexing for the extracted and integrated data in the researcher network knowledge base. Specifically, for storage it employs Jena [7], a tool to store and retrieve ontological data; for indexing, it employs the inverted file indexing method, an established method for facilitating information retrieval [8].

- Modeling. Utilizes a generative probabilistic model [1] to simultaneously model the different types of information sources. The system estimates a mixture of topic distributions associated with the different information sources.

- Services. Provides several powered services based on the modeling results: profile search, expert finding, conference analysis, course search, sub-graph search, topic browser, academic ranks, and user management.
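The inverted file indexing mentioned under Storage and Access can be illustrated with a small sketch. The idea is to map each term to the set of documents that contain it, so a query is answered by intersecting those sets instead of scanning every document. The function names, the tokenizer and the sample paper titles below are assumptions made for illustration only; they do not reflect AMiner's actual implementation.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lowercase the text and split it into alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_inverted_index(docs):
    """Map each term to the set of document ids that contain it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for term in tokenize(text):
            index[term].add(doc_id)
    return index


def search(index, query):
    """Return the ids of documents containing every term of the query."""
    terms = tokenize(query)
    if not terms:
        return set()
    result = set(index.get(terms[0], set()))
    for term in terms[1:]:
        result &= index.get(term, set())
    return result


# Hypothetical mini-collection of paper titles.
papers = {
    "p1": "Search and mining of academic social networks",
    "p2": "Name disambiguation in digital libraries",
    "p3": "Topic models for expertise search",
}
index = build_inverted_index(papers)
print(sorted(search(index, "search")))           # ['p1', 'p3']
print(sorted(search(index, "academic search")))  # ['p1']
```

A production index would also store term positions and frequencies for ranking; this sketch keeps only document membership.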

For several features in the system, e.g., profile extraction, name disambiguation, academic topic modeling, expertise search and academic social network mining, we propose some new approaches to overcome the drawbacks that exist in the conventional methods.
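To see why using the researcher name as the identifier leads to the name ambiguity problem, consider the following sketch. Grouping publications by author name alone merges different people who share a name. The second function separates them again with a deliberately naive heuristic: publications that share at least one co-author are assumed to belong to the same person. This heuristic is a stand-in chosen for illustration, not the probabilistic model [5] or framework [6] used in AMiner, and all names and records are hypothetical.

```python
from collections import defaultdict


def group_by_name(publications):
    """Naive integration: key every publication by each author's name string."""
    by_name = defaultdict(list)
    for pub in publications:
        for author in pub["authors"]:
            by_name[author].append(pub)
    return by_name


def split_by_coauthors(pubs, name):
    """Cluster one name's publications: those sharing a co-author are merged."""
    clusters = []  # list of (co-author set, list of titles)
    for pub in pubs:
        coauthors = set(pub["authors"]) - {name}
        merged_coauthors, merged_titles = set(coauthors), [pub["title"]]
        remaining = []
        for cluster_coauthors, cluster_titles in clusters:
            if cluster_coauthors & coauthors:
                # Shared co-author: fold the existing cluster into the new one.
                merged_coauthors |= cluster_coauthors
                merged_titles = cluster_titles + merged_titles
            else:
                remaining.append((cluster_coauthors, cluster_titles))
        remaining.append((merged_coauthors, merged_titles))
        clusters = remaining
    return [titles for _, titles in clusters]


# Hypothetical records: two different researchers both named "Wei Wang".
publications = [
    {"title": "Paper A", "authors": ["Wei Wang", "Li Na"]},
    {"title": "Paper B", "authors": ["Wei Wang", "Li Na", "Chen Yu"]},
    {"title": "Paper C", "authors": ["Wei Wang", "Maria Silva"]},
]
by_name = group_by_name(publications)
print(len(by_name["Wei Wang"]))  # 3 papers collapsed under one name
print(split_by_coauthors(by_name["Wei Wang"], "Wei Wang"))
# [['Paper A', 'Paper B'], ['Paper C']]
```

Co-authorship is only one signal. It fails for single-author papers and for researchers who change collaborators, which is part of why a probabilistic treatment is attractive.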

The rest of this paper is organized as follows. Section 2 discusses related works, and Section 3 presents the approaches we propose in the system. Section 4 shows some applications of AMiner. Section 5 lists the data sets we constructed. Finally, Section 6 concludes the paper.

2. Related Works

Several issues in academic social networks have been investigated previously, and a number of systems have been developed.

Google Scholar provides a search engine to identify the hyperlinks of publications that are publicly available or may be obtained through institutional libraries. Google Scholar is not a social networking website in the general sense, yet it has become an important platform for searching academic resources, keeping up with the latest research, promoting one's own achievements, and tracking academic impact. Registered users can create a personal Google Scholar profile to post their research interests, manage their publications, correct their co-authors, and access their citations-per-year metrics. The social part of Google Scholar is very simple: a user can follow a researcher so that when he or she has a new publication or citation the user will receive an email; the user can also set up alerts based on his or her own research field.

Microsoft Academic [ 9 ] employs technologies of machine learning, semantic analysis and data mining to help users explore academic information more powerfully. A user can create an account and a public profile by claiming the publications he or she authored. Microsoft Academic provides more extensive “follow” functions. Users can follow researchers, publications, journals, conferences, organizations and research topics. Based on a user's publication history and the events the user is following, Microsoft Academic will show the most relevant items and news on his or her personalized homepage. In addition, rather than providing a simple keyword-based search engine, Microsoft Academic presents relevant results and recommendations to help users discover more academic information resources of interest to support a more expansive learning and research experience.

Semantic Scholar is designed to be a “smart” search engine to help researchers find better academic publications faster. It uses a combination of machine learning, natural language processing, and machine vision to analyze publications and extract important features, adding a supplementary layer of semantic analysis to the traditional methods of citation analysis. In comparison to Google Scholar and Microsoft Academic, Semantic Scholar can quickly highlight the most important papers and identify the connections between them. The influential citations, images and key phrases that the engine surfaces are intended to be more relevant and impactful to the user's work.

ResearchGate aims to connect geographically distant researchers and allow them to communicate continuously. Registered users of the site each have a user profile and can share their research output including papers, data, book chapters, patents, research proposals, algorithms, presentations and software source code. Users can also follow the activities of others and engage in discussions with them. ResearchGate organizes itself mainly around research topics and maintains its own index, i.e., the ResearchGate Score, based on the user's contribution to content, profile details and participation in interaction on the site, such as asking questions and offering answers.

Academia.edu is a for-profit academic social networking website. It allows its users to create a profile, share their works, monitor their academic impact, select areas of interest and follow the research evolving in particular fields. Users can browse the networks of people with similar interests from around the world on the website. Academia.edu includes an analytics dashboard where users can see the influence and diffusion of their works in real time. In addition, Academia.edu has an alert service that sends registered users an email whenever a person whom they are following publishes a new paper, and it likewise alerts anyone who is following a certain topic. In this way the alert system can raise awareness of a paper among potential citers.

Although most of the above systems have integrated a gigantic amount of academic resources and provided abundant means of searching and querying social networking functions, they have not performed systematic semantic-level analysis or mining. Consequently, in our AMiner system, our primary objective is to provide a unified modeling approach to gaining a greater and deeper understanding of the semantic connection in large and heterogeneous academic networks consisting of authors, papers, conferences, journals and organizations. As a result, our system can provide topic-level expertise search and researcher-centered functions.

In this section we introduce in detail the challenges we are addressing with our AMiner system, and we present our methods and solutions.

3.1 Profile Extraction

We define the schema of the researcher profile by extending the FOAF ontology [ 10 ], as shown in Figure 2 . In the schema, 24 properties and two relations are defined [ 2 , 3 ].

The schema of the researcher profile.

It is certainly not a trivial task to extract the research network from the Web. The researchers from different universities, institutes or companies have disparate page and profile templates and data feeds. So an ideal extraction method should consider processing all kinds of templates and formats. The approach we proposed consists of three steps:

Relevant page identification. Given a researcher name, we first get a list of Web pages by a search engine (Google API is used) and then identify the homepage or introducing page using a classifier. We define a set of features, such as whether the title of the page contains the person name and whether the URL address (partly) contains the person name, and employ SVM [ 11 ] for the classification.

Preprocessing. We separate the text into tokens and assign possible tags to each token. The tokens form the basic units and the pages form the sequences of units in the following tagging step.

Tagging. Given a sequence of units, we determine the most likely corresponding sequence of tags by using a trained tagging model. The type of tag corresponds with the property defined in Figure 2 . We define five types of tokens (i.e., standard word, special word, image token, term, and punctuation mark) and use heuristics to identify tokens on the Web. After that, we assign several possible tags to each token based on the token type, and then a trained CRF model [ 12 ] is used to find the best tag assignment having the highest likelihood.
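To make the first step more concrete, the sketch below computes the two page-identification features named above: whether the page title contains the person name, and whether the URL contains part of it. The function name `page_features`, the matching rules and the example URLs are hypothetical. The SVM classifier that would consume these features is deliberately left out.

```python
def page_features(person_name, page_title, page_url):
    """Return two binary features for one candidate Web page.

    Feature 1: the page title contains the full person name.
    Feature 2: the URL contains at least one part of the name.
    Both checks are case-insensitive substring tests (an assumption).
    """
    name = person_name.lower()
    parts = name.split()
    title_has_name = int(name in page_title.lower())
    url_has_part = int(any(part in page_url.lower() for part in parts))
    return [title_has_name, url_has_part]


# Hypothetical candidate pages for the query name "Jie Tang".
homepage = page_features(
    "Jie Tang", "Jie Tang's Homepage", "http://example.org/~jietang/"
)
other = page_features(
    "Jie Tang", "Department News", "http://example.org/news"
)
print(homepage, other)
```

Substring matching on short name parts can fire by accident, so a real feature set would likely need stricter tokenization. The sketch only shows the shape of the feature vector.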

More recently, we revisited the problem of Web user profiling in the big data setting and proposed a simple but very effective approach, referred to as MagicFG [ 4 ], for profiling Web users by leveraging the power of big data. To avoid error propagation, the approach integrates page identification and profile extraction in a unified framework. To improve the profiling performance, we present the concept of contextual credibility. The proposed framework also supports the incorporation of human knowledge. It defines human knowledge as Markov logic statements and formalizes them into a factor graph model. The MagicFG method has been deployed in the AMiner system for profiling millions of researchers.

Figure 3 gives an example of a researcher profile.

An example of a researcher profile.

3.2 Name Disambiguation

We have collected more than 200 million publications from existing online data libraries, including DBLP ⑦ , ACM DL ⑧ , CiteSeerX ⑨ and others. In each data source, authors are identified by their names. To integrate the researcher profiles with the publication data, we use the researcher name and the author name as the identifier. This process inevitably runs into the name ambiguity problem.

A few years ago, we proposed a probabilistic framework [ 5 ] based on Hidden Markov Random Fields (HMRF) [ 13 ], which is able to capture dependencies between observations (here each paper is viewed as an observation). The disambiguation problem is cast as assigning a tag to each paper, with each tag representing an actual researcher.

More recently we proposed a more comprehensive framework [ 6 ] to address the name disambiguation problem. An overview of the framework is shown in Figure 4 . A novel representation learning method is proposed, which incorporates both global and local information. In addition, an end-to-end cluster size estimation method is presented in the framework. To improve the accuracy, we involve human annotators in the disambiguation process. The method has now been deployed in AMiner to deal with the name disambiguation problem at the billion scale, which demonstrates its effectiveness and efficiency.

An overview of the name disambiguation framework in AMiner.

3.3 Topic Modeling

In academic search, representing the content of text documents, authors' interests and conference themes is a critical issue for any approach. Traditionally, documents are represented based on the “bag of words” (BOW) assumption. However, this representation cannot utilize the “semantic” dependencies between words. In addition, an academic search involves different types of information sources, so capturing the dependencies between them becomes a challenging issue. Unfortunately, existing topic models such as probabilistic Latent Semantic Indexing (pLSI) [ 14 ], Latent Dirichlet Allocation (LDA) [ 15 ] and the Author-Topic model [ 16 , 17 ] cannot be directly applied to the context of academic search, because they cannot capture all intrinsic dependencies between papers and conferences.

A unified topic modeling approach [ 1 ] is proposed for simultaneously modeling characteristics of documents, authors, conferences and dependencies among them. (For simplicity, we use conference to denote conference, journal and book in the model.) The proposed model is called Author-Conference-Topic (ACT) model. More specifically, different strategies can be employed to model the topic distributions (as shown in Figure 5 ) and consequently the implemented models can have different knowledge representation capacities. In Figure 5 (a) each author is associated with a mixture of weights over topics. For example, each word token correlated to a paper, and likewise a conference stamp associated to each word token, is generated from a sampled topic. In Figure 5 (b) each author-conference pair is associated with a mixture of weights over the topics, and word tokens are then generated from the sampled topics. In Figure 5 (c), each author is associated with topics, each word token is generated from a sampled topic, and then the conference is generated from the sampled topics of all word tokens in a paper.

Graphical representation of the three Author-Conference-Topic (ACT) models.

3.4 Expertise Search

When searching for academic resources and formulating a query, a user endeavors to find authors with specific expertise, and papers and conferences related to the research areas of interest.

In the AMiner system we present a topic-level expertise search framework [ 18 ]. Unlike traditional Web search engines, which perform retrieval and ranking at the document level, we study the expertise search problem at the topic level over disparate heterogeneous networks. A unified topic model, namely Citation-Tracing-Topic (CTT), is proposed to simultaneously model topical aspects of different objects in the academic network. Based on the learned topic models, we investigate the expertise search problem from three dimensions: ranking, citation tracing analysis and topic graph search. Specifically, we propose a topic-level random walk method for ranking different objects. In citation tracing analysis we seek to uncover how a study influences its follow-up study. Finally, we have developed a topical graph search function, based on the topic modeling and citation tracing analysis.

Figure 6 gives an example result of experts found for the query “Data Mining”.

An example result of experts found for “Data Mining”.

3.5 Academic Social Network Mining

Built on the AMiner system, the researcher-centric academic social network mining functions include social influence analysis, relationship mining, collaboration recommendation, similarity analysis and community evolution.

Social Influence Analysis. In large social networks, persons are influenced by others for various reasons. We propose a Topic Affinity Propagation (TAP) model [ 19 ] to differentiate and quantify the social influence. TAP can take results of any topic modeling and the existing network structure to perform topic-level influence propagation. More recently, we designed an end-to-end framework, called DeepInf, for feature representation learning and social influence prediction [ 20 ]. Each user is represented by the local subnetwork in which he or she is embedded. A graph neural network is used to learn the representation of the subnetwork, which in turn effectively integrates the user-specific features and network structures. The framework of DeepInf is shown in Figure 7 .

Model Framework of DeepInf.

Social Relationship Mining. Inferring the type of social relationships between two users is a very important task in social relationship mining. We propose a two-stage framework named Time-constrained Probabilistic Factor Graph model (TPFG) [ 21 ] for inferring advisor-advisee relationships in the co-author network. The main idea is to leverage a time-constrained probabilistic factor graph model to decompose the joint probability of the unknown advisors over all the authors. Furthermore, we develop a framework named TranFG for classifying the type of social relationships across disparate heterogeneous resources [ 22 ]. The framework incorporates social theories into a factor graph model, which effectively improves the accuracy of predicting the types of social relationships in a target network by borrowing knowledge from another source network.

Similarity Analysis. Estimating similarity between vertices is a fundamental issue in social network analysis. We propose a sampling-based method, referred to as Panther, to estimate the top-k similar vertices [ 23 ]. The method is based on the novel idea of random path sampling. Given a network, Panther randomly generates a number of paths of a pre-defined length, and the similarity between two vertices is then estimated from the probability that the two vertices appear on the same paths.

Collaboration Recommendation. Interdisciplinary collaborations have generated a huge impact on society. However, it is usually hard for researchers to establish such cross-domain collaborations. We analyze the cross-domain collaboration data from research publications and propose a Cross-domain Topic Learning (CTL) model [ 24 ] for collaboration recommendation. For handling sparse connections, CTL consolidates the existing cross-domain collaborations through topic layers as opposed to utilizing author layers. This alleviates the sparseness issue. For handling complementary expertise, CTL models topic distributions from source and target domains separately, as well as the correlation across domains. For handling topic skewness, CTL only models relevant topics to the cross-domain collaboration.

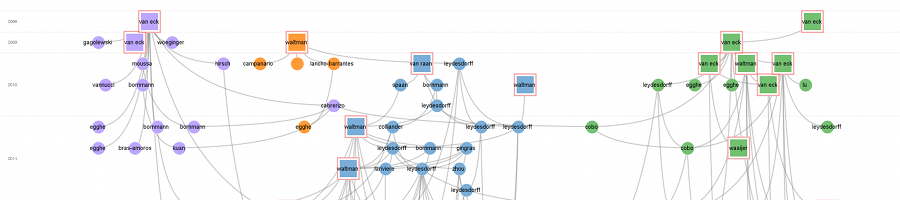

Community Evolution. Since social networks are rather dynamic, it is interesting to study how persons in the networks form different clusters and how the various clusters evolve over time. We study mining co-evolution of multi-typed objects in a special type of heterogeneous network, called a star network. We subsequently examine how the multi-typed objects influence each other in the network evolution [ 25 ]. A Hierarchical Dirichlet Process Mixture Model-based evolution is proposed which detects the co-evolution of multi-typed objects in the form of a multi-typed cluster evolution in dynamic star networks. An efficient inference algorithm is provided to learn the proposed model.
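The random path sampling idea described under Similarity Analysis can be sketched as follows. This is a simplified, unweighted illustration and not the published Panther algorithm: the function name `sample_path_similarity`, the uniform choice of start vertex and next neighbor, and the toy graph are all assumptions made for the example. Similarity between two vertices is estimated as the fraction of sampled paths on which both appear.

```python
import random
from collections import defaultdict
from itertools import combinations


def sample_path_similarity(adjacency, num_paths, path_length, seed=0):
    """Estimate pairwise similarity as the co-occurrence rate on random paths."""
    rng = random.Random(seed)
    vertices = sorted(adjacency)
    counts = defaultdict(int)
    for _ in range(num_paths):
        # Start each path at a uniformly chosen vertex (an assumption).
        current = rng.choice(vertices)
        path = {current}
        for _ in range(path_length):
            neighbors = adjacency[current]
            if not neighbors:
                break
            current = rng.choice(neighbors)
            path.add(current)
        # Count every unordered pair of vertices seen on this path once.
        for u, v in combinations(sorted(path), 2):
            counts[(u, v)] += 1
    return {pair: c / num_paths for pair, c in counts.items()}


# Toy graph: a triangle (a, b, c) and a separate edge (d, e).
graph = {
    "a": ["b", "c"],
    "b": ["a", "c"],
    "c": ["a", "b"],
    "d": ["e"],
    "e": ["d"],
}
sims = sample_path_similarity(graph, num_paths=1000, path_length=3)
for pair, score in sorted(sims.items(), key=lambda item: -item[1])[:3]:
    print(pair, round(score, 3))
```

Vertices in different components never share a path, so their pair is simply absent from the result, which corresponds to an estimated similarity of zero.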

AMiner is developed to provide comprehensive search and mining services for researcher social networks. In this system we focus on: (1) creating a semantic-based profile for each researcher by extracting information from the distributed Web; (2) integrating academic data (e.g., the bibliographic data and the researcher profiles) from multiple sources; (3) accurately searching the heterogeneous network; (4) analyzing and discovering interesting patterns from the built researcher social network. The main search and analysis functions in AMiner are summarized in the following section.

Profile Search. Input a researcher name (e.g., Jie Tang). The system will return the semantic-based profile created for the researcher using information extraction techniques. In the profile page, the extracted and integrated information includes: contact information, photo, citation statistics, academic achievement evaluation, (temporal) research interest, educational history, personal social graph, research funding (currently only US and CN) and publication records (including citation information and the papers that are automatically assigned to several different domains).

Expert Finding. Input a query (e.g., data mining). The system will return experts on this topic. In addition, the system will suggest the top conference and the top-ranked papers on this topic. There are two ranking algorithms: VSM and ACT. The former is similar to the conventional language model and the latter is based on our Author-Conference-Topic (ACT) model. Users can also provide feedback on the search results.

Conference Analysis. Input a conference name (e.g., KDD). The system returns the most active researchers at this conference as well as the top-ranked papers.

Course Search. Input a query (e.g., data mining). The system will return those who are teaching courses relevant to the query.

Sub-graph Search. Input a query (e.g., data mining). The system first tells you what topics are relevant to the query (e.g., the five topics “Data mining”, “XML Data”, “Data Mining/Query Processing”, “Web Data/Database design” and “Web Mining” are relevant) and subsequently displays the most important sub-graph discovered on each relevant topic, augmented with a summary for the sub-graph.

Topic Browser. Based on our Author-Conference-Topic (ACT) model, we automatically discover 200 hot topics from the publications. For each topic we automatically assign a label to represent its meanings. Furthermore, the browser presents the most active researchers, the most relevant conferences/papers and the evolution trend of the topics that are discovered.

Academic Ranks. We define eight measures to evaluate the researcher's achievement. The measures include “h-index”, “Citation”, “Uptrend”, “Activity”, “Longevity”, “Diversity”, “Sociability” and “New Star”. For each measure, we output a ranking list in different domains. For example, one can search those who have the highest citation numbers in the “data mining” domain. Figure 8 gives an example of researcher ranking by sociability index.

An example of researcher ranking by sociability index.

User Management. One can register as a user to: (1) modify the extracted profile information; (2) provide feedback on the search results; (3) follow researchers in AMiner; and (4) create an AMiner page (which can be used to advertise conferences and workshops, or to recruit students).
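Of the eight measures listed under Academic Ranks, the h-index has a widely used standard definition: the largest h such that the researcher has h papers with at least h citations each. The sketch below computes it from a list of per-paper citation counts. The function name and the sample counts are hypothetical, and nothing here is meant to describe how AMiner itself computes its rankings.

```python
def h_index(citations):
    """Return the largest h such that h papers have at least h citations."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank
        else:
            break
    return h


# Hypothetical per-paper citation counts for one researcher.
print(h_index([10, 8, 5, 4, 3]))
```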

AMiner has collected a large scholar data set with more than 130,000,000 researcher profiles and 233,000,000 publications from the Internet by June 2018 along with a number of subsets that were constructed for different research purposes. The details of these subsets are as follows and can be found at https://www.aminer.cn/data .

Citation Network. The citation data are extracted from DBLP, ACM DL and other sources. The data set contains 1,572,277 papers and 2,084,019 citation relationships. Each paper is associated with abstract, authors, year, venue, and title. The data set can be used for clustering with network and side information, studying influence in the citation network, finding the most influential papers, topic modeling analysis, etc.

Academic Social Network. These data include papers, paper citation, author information and author collaboration. The data set contains 1,712,433 authors, 2,092,356 papers, 8,024,869 citation relationships and 4,258,615 collaboration relationships noted between authors.

Advisor-advisee. The data set comprises 815,946 authors and 2,792,833 co-author relationships. For evaluating the performance of inferring advisor-advisee relationships between co-authors, we created a smaller ground truth data set using the following method: (1) collecting the advisor-advisee information from the Mathematics Genealogy project and the AI Genealogy project; (2) manually collecting the advisor-advisee information from researchers’ homepages. In total, we have labeled 1,534 co-author relationships, of which 514 are advisor-advisee relationships.

Topic-co-author. It is a topic-based co-author network, which contains 640,134 authors of 8 topics and 1,554,643 co-author relationships. The eight topics are: Data Mining/Association Rules, Web Services, Bayesian Networks/Belief Function, Web Mining/Information Fusion, Semantic Web/Description Logics, Machine Learning, Database Systems/XML Data and Information Retrieval.

Topic-paper-author. The data set was collected for the purpose of cross-domain recommendation and contains 33,739 authors associated with five topics as well as 139,278 co-author relationships. The five topics are Data Mining (with 6,282 authors and 22,862 co-author relationships), Medical Informatics (with 9,150 authors and 31,851 co-author relationships), Theory (with 5,449 authors and 27,712 co-author relationships), Visualization (with 5,268 authors and 19,261 co-author relationships) and Database (with 7,590 authors and 37,592 co-author relationships).

Topic-citation. It is a topic-based citation network which contains 2,329,760 papers of 10 topics and 12,710,347 citation relationships. The 10 topics are: Data Mining/Association Rules, Web Services, Bayesian Networks/Belief Function, Web Mining/Information Fusion, Semantic Web/Description Logics, Machine Learning, Database Systems/XML Data, Pattern Recognition/Image Analysis, Information Retrieval, and Natural Language System/Statistical Machine Translation.

Kernel Community. It is a co-authorship network with 822,415 nodes and 2,928,360 undirected edges. Each vertex represents an author and each edge represents a co-author relationship.

Dynamic Co-author. The data set contains 1,768,776 papers published during the time period from 1986 to 2012 with 1,629,217 authors involved. Each year is regarded as a time stamp and there are 27 time stamps in total. At each time stamp, we create an edge between two authors if they have co-authored at least one paper in the most recent three years (including the current year). We convert the undirected co-author network into a directed network by regarding each undirected edge as two symmetric directed edges.

Expert Finding. This data set is a benchmark for expert finding which contains 1,781 experts of 13 topics.

Association Search. This data set is used to evaluate the effectiveness of association search approaches which contains 8,369 author pairs specific to nine topics. Each author pair contains a source author and target author.

Topic Model Results for AMiner Data Set. These are the results of the ACT model on the AMiner data set, which contains the top 1,000,000 papers and authors of 200 topics.

Co-author. This is a co-author network on the AMiner system which contains 1,560,640 authors and 4,258,946 co-author relationships.

Disambiguation. This data set is used for studying name disambiguation in a digital library. It contains 110 authors and their affiliations as well as their disambiguation results (ground truth).
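The edge rule given for the Dynamic Co-author data set above (link two authors at a time stamp if they co-authored at least one paper in the most recent three years, then treat each undirected edge as two symmetric directed edges) can be sketched as follows. The function name `coauthor_edges`, the input layout and the sample papers are hypothetical.

```python
from itertools import combinations


def coauthor_edges(papers, year, window=3):
    """Return the directed co-author edges at the time stamp `year`.

    `papers` is a list of (publication_year, [author, ...]) tuples.
    Two authors are linked if they share a paper published within the
    `window` most recent years, counting `year` itself.
    """
    edges = set()
    for pub_year, authors in papers:
        if year - window + 1 <= pub_year <= year:
            for a, b in combinations(sorted(set(authors)), 2):
                # One undirected edge becomes two symmetric directed edges.
                edges.add((a, b))
                edges.add((b, a))
    return edges


# Hypothetical papers, for illustration only.
papers = [
    (2008, ["A", "B"]),
    (2010, ["B", "C"]),
    (2012, ["A", "C", "D"]),
]
for stamp in (2010, 2011, 2012):
    print(stamp, sorted(coauthor_edges(papers, stamp)))
```

With yearly time stamps from 1986 to 2012 inclusive, this function would be called 27 times, matching the count stated in the data set description.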

In this paper we present a novel online academic searching and mining system, AMiner. It is the second generation of the ArnetMiner system. We first present an overview of the system architecture, which consists of five main components, i.e., extraction, integration, storage and access, modeling and services. We then introduce the important methodologies proposed in the system, including the profile extraction and user profiling methods, name disambiguation algorithms, topic modeling methods, expertise search strategies and a series of academic social network mining methods. Furthermore, we introduce the typical applications as well as the broad range of data sets available on the platform.

We acknowledge that AMiner is still at its developmental stage on both the scale of resources and the quality of services. However, in the future we are going to exploit additional intelligent methods for mining deep knowledge from scientific networks and we will deploy a more convenient and personalized framework for delivering academic search and finding services.

This work was a collaboration between all of the authors. J. Tang (corresponding author) is the leader of the AMiner project, who drew the whole picture of the system. Y.T. Zhang and J. Zhang summarized the methodology part of this paper. H.Y. Wan summarized the applications and data sets in the AMiner system and drafted the paper. All the authors have made meaningful and valuable contributions in revising and proofreading the resulting manuscript.

https://scholar.google.com/

https://academic.microsoft.com/

https://www.semanticscholar.org/

https://www.researchgate.net/

https://www.academia.edu/

https://www.aminer.cn/

https://dblp.uni-trier.de/

https://dl.acm.org/

http://citeseerx.ist.psu.edu/

- Online ISSN 2641-435X

A product of The MIT Press

Academic Networks

In the academic world networking was, and often still is, mediated through a mentor or other superior. Collaborative opportunities often come through mutual projects or existing networks already established through your mentor. Often these networks, constructed slowly over time through common work, can be narrowly focused on subject matter that may be limited in diversity and scope. It may miss the work and collaborative potential of a key professional right around the corner simply because they weren’t in your mentor’s network. This could be because their subject matter was not obviously related to your mentor’s. Lost opportunities for innovative and productive collaborations may have a significant impact on your research and career.

To address these lost opportunities, academia has begun to encourage networking among faculty and trainees to enhance innovation and collaboration to advance a new research paradigm – Team Science. Maintaining a personal network is extremely important to engage in the vibrant nature of Team Science. Academic relationships are dynamic, reflecting the diverse expertise and influence of all individuals, which in turn are always in flux. These factors are often rapidly changing, and in an unpredictable fashion. To manage this ever-changing academic environment, personal network management is a crucial aspect of one’s professional information management. It is the practice of managing multiple collaborative contacts and connections for social and professional benefits. If you can successfully tap key influential networks within your institution and wider academic discipline, you will be more likely to be nurtured toward success. In other words, it is not just what you know, but also who you know and can work with that matters in achieving academic success. Who you know may facilitate knowledge of essential methods and processes ranging from the science itself to accessing funding to “grantsmanship” to policy impact.

Building and Nurturing a Network

Although it may be easy to build a network by simply accruing a list of contacts, the real challenge is maintaining and leveraging those connections effectively. Information fragmentation can make it difficult to ensure co-operation and to keep track of personal information assets spread across different services (e.g., Facebook, Twitter). Keeping a contact list with accurate contact information (office phone, cell phone, email address, etc.) is a simple yet essential part of maintaining a network. Devising and committing to a sustainable and organized approach to personal networking, including the use of social media resources, is becoming increasingly important to academic efficiency and success.

Being Engaged in Interdisciplinary Team Research: Is Your Network Working for You?

If building and nurturing a professional network is requisite to being a successful academic researcher, then to catalyze and seize opportunities for interdisciplinary team-based research, the argument for Academic Networking couldn’t be stronger. Each academic has a professional network. Some build a deep network of people mostly in the same field, which can unwittingly limit potential interdisciplinary opportunities. Some build widely diverse networks using carefully selected individuals who can optimize their chances for interdisciplinary research. Others have made it a numbers game, focusing on the quantity of people and longstanding list of mentees in their network. But, have you taken a moment to look carefully at what kind of network you have? What strategies do you use to connect with others in a way that helps you harness opportunities for doing research in interdisciplinary teams? How many interdisciplinary research projects are you working on now?

Having a targeted and effective professional network can make the difference between working hard and working smart in the Team Science paradigm. Effective professional networking online can provide access to quick conversations, expert opinions, and issues or systems scans. It can lead to new ideas and new connections, and provide real-time insights about your research or your discipline. It can be an efficient way to find out what people in your network are doing and whether to reconnect with them. It can facilitate connections at conferences and meetings, open doors and build relationships with experts, influencers, and other key individuals.

If you don’t know the answers to or have never thought about these questions, consider taking some time to review, enhance and nurture your network. Your self-reflection should focus on “who” should be in your network – identifying those individuals who best facilitate your participation in team science. When considering your personal network, keep in mind that to be effective, your core connections and relationships should bridge smaller, more-diverse groups and geography. These relationships should also result in more learning, less bias, and greater personal growth by modeling positive behaviors: generosity, authenticity, and enthusiasm. Once you have defined your core network and how they relate to you and others within your network, consider who in your core can help with your professional and academic challenges. Is your core network group diverse enough and are you generating new ideas from this core? Are there people who take but don’t give? Should you continue your affiliation to them? Are there gaps in expertise, skill, support or availability?

The “benefits” of effective and well-curated networks that facilitate your ability to actively engage in team science include: 1. communicating with peers and colleagues to keep informed and up to date about who is doing what; 2. learning about new methods and tools people are using; 3. creating visibility for yourself that can help you develop a reputation (your brand); 4. building career stability for yourself; 5. moving new ideas through the network and testing them out; and 6. seeking placement opportunities for your trainees, just to name a few.

Remember that the type, degree and targets for academic networking will evolve throughout the course of your career depending on your professional and academic needs. The Academic Network required by a trainee during transitions (e.g. new GMS student or Post-Doc) will vary. But what they all have in common is the absolute need to establish and nurture a well-curated network of supporters and collaborators as they proceed within their academic field. Networking continues to be important even in mid to late career as one's needs and capacity to support others evolve. Each collaborator plays a different but critical role in the scientific enterprise. At times, special networks may be important based on other important commonalities. For example, for women, networking can be particularly challenging because attempts at networking require self-promotion (which can be unfamiliar or uncomfortable for some) and can be misunderstood by others. Similar issues may exist for underrepresented minority researchers, and having a robust and supportive network can be invaluable to their success.

Effective networking is a critical yet often underestimated factor in establishing and sustaining a successful academic career in an ever-changing, increasingly collaborative and competitive research environment. In a future blog post we will address a key personal networking activity: networking at a professional conference.

By C. Shanahan




Welcome to CitNetExplorer

CitNetExplorer is a software tool for visualizing and analyzing citation networks of scientific publications. The tool allows citation networks to be imported directly from the Web of Science database. Citation networks can be explored interactively, for instance by drilling down into a network and by identifying clusters of closely related publications.

Why use CitNetExplorer?

Examples of applications of CitNetExplorer include:

Analyzing the development of a research field over time. CitNetExplorer visualizes the most important publications in a field and shows the citation relations between these publications to indicate how publications build on each other.

Identifying the literature on a research topic. CitNetExplorer delineates the literature on a research topic by identifying publications that are closely connected to each other in terms of citation relations.

Exploring the publication oeuvre of a researcher. CitNetExplorer visualizes the citation network of the publications of a researcher and shows how the work of a researcher has influenced the publications of other researchers.

Supporting literature reviewing. CitNetExplorer facilitates systematic literature reviewing by identifying publications cited by or citing to one or more selected publications.
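The literature-review use case above (finding publications cited by or citing a selected publication) can be illustrated with a minimal Python sketch. This is not CitNetExplorer's implementation: the function names, the edge-list input format, and the publication IDs are all hypothetical, chosen only to show the underlying idea of a citation network as a set of directed "citing, cited" links.

```python
from collections import defaultdict


def build_citation_index(citations):
    """Index (citing, cited) pairs in both directions.

    `citations` is a hypothetical edge list; real tools import
    these relations from a bibliographic database export.
    """
    cites = defaultdict(set)     # publication -> publications it cites
    cited_by = defaultdict(set)  # publication -> publications citing it
    for citing, cited in citations:
        cites[citing].add(cited)
        cited_by[cited].add(citing)
    return cites, cited_by


def neighbours(selected, cites, cited_by):
    """Return publications cited by, or citing, any selected publication."""
    selected = set(selected)
    found = set()
    for pub in selected:
        found |= cites.get(pub, set())
        found |= cited_by.get(pub, set())
    return found - selected


# Hypothetical toy network: B and C cite A, C cites B, D cites C.
edges = [("B", "A"), ("C", "A"), ("C", "B"), ("D", "C")]
cites, cited_by = build_citation_index(edges)
related_to_b = neighbours(["B"], cites, cited_by)
print(sorted(related_to_b))
```

Repeating the `neighbours` step on its own result expands the selection one citation link at a time, which is one simple way to approximate drilling down into a network.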

Download CitNetExplorer

Problems opening Web of Science files

Users of CitNetExplorer may experience problems when opening files downloaded from Web of Science. Opening these files may result in a so-called null pointer exception. This problem can be avoided by excluding publications of the document type 'early access' from the search results in Web of Science.

You may also be interested in our VOSviewer tool

VOSviewer is a software tool for constructing and visualizing bibliometric networks. Networks can be constructed based on citation relations. Examples of such networks are bibliographic coupling and co-citation networks of journals, researchers, and individual publications. VOSviewer also offers text mining functionality that can be used to construct and visualize co-occurrence networks of important terms extracted from a body of scientific literature.

VOSviewer website



Using the full-text content of academic articles to identify and evaluate algorithm entities in the domain of natural language processing


- We extract algorithms from articles and evaluate the impact of algorithms based on the number of papers and mention duration.

- We analyze the algorithms with high impact in different years, and explore the evolution of influence over time.

- Algorithms and sentences we extracted can be used as training data for automatic extraction of algorithms in the future.

In the era of big data, the advancement, improvement, and application of algorithms in academic research have played an important role in promoting the development of different disciplines. Academic papers in various disciplines, especially computer science, contain a large number of algorithms. Identifying the algorithms from the full-text content of papers can determine popular or classical algorithms in a specific field and help scholars gain a comprehensive understanding of the algorithms and even the field. To this end, this article takes the field of natural language processing (NLP) as an example and identifies algorithms from academic papers in the field. A dictionary of algorithms is constructed by manually annotating the contents of papers, and sentences containing algorithms in the dictionary are extracted through dictionary-based matching. The number of articles mentioning an algorithm is used as an indicator to analyze the influence of that algorithm. Our results reveal the algorithm with the highest influence in NLP papers and show that classification algorithms represent the largest proportion among the high-impact algorithms. In addition, the evolution of the influence of algorithms reflects the changes in research tasks and topics in the field, and the changes in the influence of different algorithms show different trends. As a preliminary exploration, this paper conducts an analysis of the impact of algorithms mentioned in the academic text, and the results can be used as training data for the automatic extraction of large-scale algorithms in the future. The methodology in this paper is domain-independent and can be applied to other domains.

1. Introduction

The speed of social development is accelerating, and new technologies are born every day. Societal developments provide people with new opportunities and conveniences. However, human beings still face new challenges and problems. At the end of 2019, a novel coronavirus (SARS-CoV-2) was detected. The virus spreads very quickly, causing substantial losses to the entire society. Issues such as how to find a cure for the virus, how to find the source of the virus, how to develop a vaccine, and how to distribute materials during the epidemic require experts and scholars to find new or more suitable methods in their research field.

Among different categories of methods, algorithms are bound to have an important role; algorithms are ubiquitous and offer precise methodologies to solve problems ( Carman, 2013 ). Informally, an algorithm is any well-defined computational procedure that takes a set of values as input and produces some value as output ( Cormen, Leiserson, Rivest, & Stein, 2009 ), which is needed in scientific research. Especially in the era of big data, data-driven research requires algorithms to extract, process, and analyze massive amounts of data. Therefore, algorithms have become research objects, as well as useful technologies, of scholars in different fields. In the "Venice Time Machine" project in the field of digital humanities, researchers used machine learning algorithms to reveal Venice's history in a dynamic digital form to reproduce the glorious style of the ancient city ( Abbott, 2017 ). In the field of computer science, scientists have used machine learning algorithms to combat the novel coronavirus, including the use of algorithms to detect infections, differentiate COVID-19 from the common flu and to predict the epidemic situation ( Dave, 2020 ).

Academic papers in many disciplines, especially in the computer science domain, propose, improve, and use various algorithms ( Tuarob & Tucker, 2015 ). However, not everyone is an algorithm expert. For many researchers, especially beginners in a field, gaining a thorough understanding of the available algorithms and finding one that suits their own research are pressing problems. Scholars usually find suitable algorithms in one of two ways. One is direct consultation with more experienced scholars, but this depends on the advisers’ knowledge and does not guarantee comprehensive suggestions. The other is reading the academic literature and finding algorithms in other people's research, which exposes scholars to more algorithms. Academic papers are a rich source of algorithms; however, information overload cannot be ignored. Research indicates that the worldwide academic literature now numbers in the millions of items and continues to grow at approximately 3% each year ( Bornmann & Mutz, 2015 ). If scientists search for algorithms only by reading articles, the task is time-consuming and labor-intensive. If the algorithms mentioned in papers (that is, any algorithm appearing in a paper, whether proposed, used, improved, described or simply mentioned by the author) can be identified and evaluated, scholars can save time and gain a solid foundation for surveying the algorithms of a specific discipline or research topic.

To this end, this article aims to collect algorithms in research papers in a domain and further explore the influence of algorithms. Tuarob et al. (2020) defined a standard algorithm in academic papers as one that is well known by people in a field and is usually recognized by its name, including Dijkstra’s shortest-path algorithm, the Bellman-Ford algorithm, the Quicksort algorithm, etc. On this basis, we use our experience, authors’ descriptions and other external knowledge to annotate the named algorithms in articles. In addition, we posit that, when an algorithm appears in an article, it has an influence on the article. Therefore, we evaluate the influence of an algorithm based on the number of papers that mention the algorithm in the full-text content. Mention count has proven to be a suitable indicator to measure the influence of entities in academic papers ( Howison & Bullard, 2016 ; Ma & Zhang, 2017 ; Pan, Yan, Wang, & Hua, 2015 ). Therefore, we take the field of natural language processing as an example and explore three research questions:

What are the high-influence algorithms in natural language processing?

What are the differences in the influential algorithms in different years?

How does the influence of algorithms change over time?
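The approach described above (dictionary-based matching of algorithm names in full text, with influence measured as the number of papers mentioning each algorithm) can be sketched in Python as follows. This is only an illustration under stated assumptions, not the authors' code: the function names, the naive sentence splitter, the tiny dictionary of surface forms, and the three example papers are all hypothetical.

```python
import re
from collections import defaultdict


def split_sentences(text):
    """Naive sentence splitter (an assumption; real pipelines use NLP tools)."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def find_mentions(papers, dictionary):
    """Match dictionary entries against each sentence of each paper.

    papers:     {paper_id: full_text}
    dictionary: {canonical_name: [surface forms]}
    Returns the set of papers mentioning each algorithm and the
    matching sentences.
    """
    patterns = {
        name: re.compile(
            r"\b(?:" + "|".join(re.escape(f) for f in forms) + r")\b",
            re.IGNORECASE,
        )
        for name, forms in dictionary.items()
    }
    papers_by_algo = defaultdict(set)
    sentences_by_algo = defaultdict(list)
    for paper_id, text in papers.items():
        for sentence in split_sentences(text):
            for name, pattern in patterns.items():
                if pattern.search(sentence):
                    papers_by_algo[name].add(paper_id)
                    sentences_by_algo[name].append((paper_id, sentence))
    return papers_by_algo, sentences_by_algo


# Hypothetical toy data for illustration only.
papers = {
    "p1": "We train a support vector machine on bigrams. The SVM outperforms naive Bayes.",
    "p2": "Naive Bayes is a strong baseline. We also tried an SVM.",
    "p3": "We apply the Viterbi algorithm for decoding.",
}
dictionary = {
    "support vector machine": ["support vector machine", "SVM"],
    "naive Bayes": ["naive Bayes"],
    "Viterbi": ["Viterbi algorithm", "Viterbi"],
}

papers_by_algo, sentences_by_algo = find_mentions(papers, dictionary)
# Influence indicator: number of distinct papers mentioning the algorithm.
influence = {name: len(ids) for name, ids in papers_by_algo.items()}
print(influence)
```

Counting distinct papers rather than raw mentions means an algorithm named ten times in one article contributes the same as one named once. Grouping the paper sets by publication year would give the per-year view needed for the second and third research questions.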