Embedding Ethics in Computer Science

This site provides access to curricular materials created by the Embedded Ethics team at Stanford for undergraduate computer science courses. The materials are designed to expose students to ethical issues that are relevant to the technical content of CS courses, to provide students structured opportunities to engage in ethical reflection through lectures, problem sets, and assignments, and to build the ethical character and habits of young computer scientists.

Modules (Assignments + Lectures)

Banking on Security

Course: Intro to Systems

This assignment is about assembly, reverse engineering, security, privacy and trust. An earlier version of the assignment by Randal Bryant & David O'Hallaron (CMU), [accessible here](http://csapp.cs.cmu.edu/public/labs.html), used the framing story that students were defusing a ‘bomb’.

Bits, Bytes, and Overflows

The assignment is the first in an introduction to systems course. It covers bits, bytes and overflow, continuing students’ introduction to bitwise and arithmetic operations. Following the saturating arithmetic problem, we added a case study analysis about the Ariane-5 rocket launch failure. This provided students with a vivid illustration of the potential consequences of overflows as well as an opportunity to reflect on their responsibilities as engineers. The starter code is the full project provided to students.
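As a rough illustration of the difference between wraparound and saturating arithmetic, the sketch below simulates fixed-width signed addition. It is not the assignment's starter code, and the function names and 8-bit default width are assumptions made for this example.

```python
def signed_range(bits):
    """Return (min, max) for a two's-complement integer with `bits` bits."""
    return -(1 << (bits - 1)), (1 << (bits - 1)) - 1


def wrapping_add(a, b, bits=8):
    """Add with two's-complement wraparound, as fixed-width hardware does."""
    mask = (1 << bits) - 1
    total = (a + b) & mask
    # Reinterpret the top bit as the sign bit.
    if total >> (bits - 1):
        total -= 1 << bits
    return total


def saturating_add(a, b, bits=8):
    """Add, clamping to the representable range instead of wrapping."""
    lo, hi = signed_range(bits)
    return max(lo, min(hi, a + b))


if __name__ == "__main__":
    # 127 is the largest 8-bit signed value; adding 1 overflows.
    print(wrapping_add(127, 1))    # wraps around to the most negative value
    print(saturating_add(127, 1))  # stays pinned at the maximum
```

Saturating keeps an out-of-range result at the nearest representable value, which is often a less surprising failure mode than a sign flip.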

Climate Change & Calculating Risk

Course: Probability

This assignment uses the tools of probability theory to introduce students to _risk-weighted expected utility_ models of decision-making. The risk-weighted expected utility framework is then used to understand decision-making under uncertainty in the context of climate change. Which of the IPCC’s forecasts should we use? Do we owe it to future people to adopt a conservative risk profile when making decisions on their behalf? The assignment also introduces normative principles for allocating responsibility for addressing climate change. Students apply these formal tools and frameworks to understanding the ethical dimensions of climate change.
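One common way to write risk-weighted expected utility is the rank-dependent form: start from the worst outcome's utility, then add each successive improvement weighted by a risk function applied to the probability of doing at least that well. The sketch below assumes that formulation; whether the assignment uses exactly this form is an assumption, and the function name and the example gamble are hypothetical.

```python
def risk_weighted_expected_utility(outcomes, risk=lambda p: p):
    """Compute a rank-dependent, risk-weighted expected utility.

    outcomes: list of (utility, probability) pairs whose probabilities sum to 1.
    risk: function r on [0, 1] with r(0) = 0 and r(1) = 1. The identity
          recovers ordinary expected utility; a convex r such as p**2
          gives less weight to unlikely good outcomes (risk aversion).
    """
    ranked = sorted(outcomes)          # worst utility first
    total = ranked[0][0]               # the utility we get at minimum
    prob_at_least = 1.0
    for i in range(1, len(ranked)):
        # Probability of ending up at outcome i or better.
        prob_at_least = max(0.0, prob_at_least - ranked[i - 1][1])
        gain = ranked[i][0] - ranked[i - 1][0]
        total += risk(prob_at_least) * gain
    return total


if __name__ == "__main__":
    gamble = [(0.0, 0.5), (100.0, 0.5)]  # hypothetical 50/50 gamble
    print(risk_weighted_expected_utility(gamble))                   # risk-neutral
    print(risk_weighted_expected_utility(gamble, lambda p: p ** 2))  # risk-averse
```

With the identity risk function the result matches ordinary expected utility, so the choice of risk function is where a more conservative attitude toward future people's outcomes would enter.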

Fairness, Representation, and Machine Learning

This assignment builds on introductory knowledge of machine learning techniques, namely the naïve Bayes algorithm and logistic regression, to introduce concepts and definitions of algorithmic fairness. Students analyze sources of bias in algorithmic systems, then learn formal definitions of algorithmic fairness such as independence, separation, and fairness through awareness or unawareness. They are also introduced to notions of fairness that complicate the formal paradigms, including intersectionality and subgroup analysis, representation, and justice beyond distribution.
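Two of the formal criteria named above can be checked with simple per-group rates. Independence compares the rate of positive predictions across groups, and separation compares true-positive and false-positive rates across groups. The following is a minimal sketch with hypothetical function names and toy data, not code from the assignment.

```python
from collections import defaultdict


def rate(values):
    """Fraction of 1s in a list of 0/1 values (NaN if the list is empty)."""
    return sum(values) / len(values) if values else float("nan")


def positive_rates(records):
    """Independence check: P(prediction = 1) for each group.

    records: iterable of (group, y_true, y_pred) with 0/1 labels.
    """
    by_group = defaultdict(list)
    for group, _, y_pred in records:
        by_group[group].append(y_pred)
    return {g: rate(preds) for g, preds in by_group.items()}


def error_rates(records):
    """Separation check: (true-positive rate, false-positive rate) per group."""
    pos, neg = defaultdict(list), defaultdict(list)
    for group, y_true, y_pred in records:
        (pos if y_true == 1 else neg)[group].append(y_pred)
    return {g: (rate(pos[g]), rate(neg[g])) for g in set(pos) | set(neg)}


if __name__ == "__main__":
    toy = [
        ("a", 1, 1), ("a", 1, 0), ("a", 0, 0), ("a", 0, 0),
        ("b", 1, 1), ("b", 1, 1), ("b", 0, 1), ("b", 0, 0),
    ]
    print(positive_rates(toy))
    print(error_rates(toy))
```

Large gaps between groups in either table suggest the corresponding criterion is violated; how large a gap matters is a judgment the formal definitions do not settle.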

Lab: Therac-25 Case Study

This lab, the last of the course, asks students to discuss the case of Therac-25, a medical device that delivered lethal radiation due to a race condition.

Responsible Disclosure & Partiality

This assignment is about void * and generics. We added a case study about responsible disclosure and partiality. Students read a summary of researcher Dan Kaminsky’s discovery of a DNS vulnerability and answer questions about his decisions regarding disclosure of vulnerabilities as well as their own thoughts on partiality. The starter code is the full project provided to students.

Responsible Documentation

When functions have assumptions, limitations or flaws, it is vital that the documentation makes those clear. Without documentation, developers don’t have the information they need to make good decisions when writing their programs. We added a documentation component to this C string assignment. Students write a manual page for the scan_token function they have implemented, learning responsible documentation practice as they go. The starter code is the full project provided to students.

Design Discovery and Needfinding

Course: Intro to HCI

The lecture covers topics associated with power relations, the use of language, standpoint and inclusion as they arise in the context of design discovery.

Values in Design

The lecture presents the concept of values in design. It introduces the distinction between intended and collateral values; discusses the importance of assumptions in the value encoding process; and presents three strategies to address value conflicts that arise as a result of design decisions.

Assignments

Concept Video

This assignment asks students to consider what values are encoded in their product and the decisions they make in the design process; whether there are conflicting values; and how they address existing value conflicts.

Ethics in Advanced Technology

Course: AI Principles

After successfully creating a component of a self-driving car – a (virtual) sensor system that tracks other surrounding cars based on noisy sensor readings – students are prompted to reflect on ethical issues related to the creation, deployment, and policy governance of advanced technologies like self-driving cars. Students encounter classic concerns in the ethics of technology such as surveillance, ethics dumping, and dual-use technologies, and apply these concepts to the case of self-driving cars.

Foundations: Code of Ethics

In Problem 3 of this assignment, “Ethical Issue Spotting,” students explore the ethics of four different real-world scenarios using the ethics guidelines produced by a machine learning research venue, the NeurIPS conference. Students write a potential negative social impacts statement for each scenario, determining if the algorithm violates one of the sixteen guidelines listed in the NeurIPS Ethical Guidelines. In doing so, they practice spotting potential ethical concerns in real-world applications of AI and begin taking on the role of a responsible AI practitioner.

Heuristic Evaluation

This assignment asks students to evaluate their peers’ projects through a series of heuristics and to respond to others’ evaluations of their projects. By incorporating ethics questions into this evaluation, we prompt them to consider ethical aspects as part of a product’s design features, which should be evaluated alongside other design aspects.

Medium-Fi Prototype

Modeling Sea Level Rise

This assignment is about Markov Decision Processes (MDPs). In Problem 5, we use the MDP the students have created to model how a coastal city government’s mitigation choices will affect its ability to adapt to rising sea levels over the course of multiple decades. At each timestep, the government may choose to invest in infrastructure or save its surplus budget. But the amount that the sea will rise is uncertain: each choice is a risk. Students model the city’s decision-making under two different time horizons, 40 or 100 years, and with different discount factors for the well-being of future people. In both cases, they see that choosing a longer time horizon or discounting future well-being less heavily leads to more investment now. Students are then introduced to five ethical positions on the comparative value of current and future generations’ well-being. They evaluate their modeling choices in light of their choice of ethical position.
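To make the invest-or-save trade-off concrete, here is a toy finite-horizon MDP solved by backward induction. It is only a sketch: the state (sea level, infrastructure level), the rewards, the rise probability, and the flood cost are all hypothetical and are not the assignment's model. Here `gamma` multiplies future rewards, so values closer to 1 give future outcomes more weight.

```python
from functools import lru_cache


def solve(horizon, gamma, sea=0, infra=1, p_rise=0.5, flood_cost=10.0):
    """Return (expected value, best first action) for the toy model.

    Each step the city either saves (reward 1) or invests (reward 0,
    infrastructure +1). The sea then rises by 1 with probability p_rise.
    If the sea ends the step above the infrastructure level, the city
    pays flood_cost. `horizon` must be at least 1.
    """

    def q_values(steps_left, sea, infra):
        """Expected discounted return of each action from this state."""
        result = {}
        for action, reward, new_infra in (
            ("save", 1.0, infra),
            ("invest", 0.0, infra + 1),
        ):
            total = reward
            for rise, prob in ((0, 1.0 - p_rise), (1, p_rise)):
                new_sea = sea + rise
                flood = -flood_cost if new_sea > new_infra else 0.0
                future = value(steps_left - 1, new_sea, new_infra)
                total += prob * (flood + gamma * future)
            result[action] = total
        return result

    @lru_cache(maxsize=None)
    def value(steps_left, sea, infra):
        """Optimal expected return with `steps_left` decisions remaining."""
        if steps_left == 0:
            return 0.0
        return max(q_values(steps_left, sea, infra).values())

    qs = q_values(horizon, sea, infra)
    best = max(qs, key=qs.get)
    return qs[best], best


if __name__ == "__main__":
    for horizon in (1, 2, 5):
        for gamma in (0.5, 1.0):
            print(horizon, gamma, solve(horizon, gamma))
```

Varying `horizon`, `gamma`, and `flood_cost` shows how the modeling choices, rather than the algorithm, determine when investing looks worthwhile.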

Needfinding

With this assignment, students will reflect on the group of users their project is intended to serve; their reasons for selecting these users; the notion of an “extreme user”; and the reason why their perspectives are valuable for the design process. It also asks them to reflect on what accommodations they make for their interviewees.

POV and Experience Prototypes

With this assignment, students are prompted to reflect on how proposed solutions to the problems they identify may exclude members of certain communities.

Residency Hours Scheduling

In this assignment, students explore constraint satisfaction problems (CSP) and use backtracking search to solve them. Many uses of constraint satisfaction in real-world scenarios involve assignment of resources to entities, like assigning packages to different trucks to optimize delivery. However, when the agents are people, the issue of fair division arises. In this question, students will consider the ethics of what constraints to remove in a CSP when the CSP is unsatisfiable.
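A backtracking search for a small scheduling CSP might look like the sketch below. The three constraints (availability, a cap on shifts per resident, and no back-to-back shifts) and all names are hypothetical, chosen only to show how an over-constrained problem becomes unsatisfiable and forces a choice about which constraint to relax.

```python
def is_consistent(resident, index, assignment, shifts, max_shifts, unavailable):
    """Check the toy constraints for placing `resident` on shifts[index]."""
    shift = shifts[index]
    if (resident, shift) in unavailable:
        return False
    if list(assignment.values()).count(resident) >= max_shifts:
        return False
    # No back-to-back shifts: the previous shift must go to someone else.
    if index > 0 and assignment.get(shifts[index - 1]) == resident:
        return False
    return True


def backtrack(shifts, residents, max_shifts, unavailable, assignment=None):
    """Assign one resident to every shift, or return None if impossible.

    shifts: shift names in chronological order.
    unavailable: set of (resident, shift) pairs that are ruled out.
    """
    if assignment is None:
        assignment = {}
    if len(assignment) == len(shifts):
        return dict(assignment)
    index = len(assignment)  # shifts are filled in order
    for resident in residents:
        if is_consistent(resident, index, assignment, shifts,
                         max_shifts, unavailable):
            assignment[shifts[index]] = resident
            solution = backtrack(shifts, residents, max_shifts,
                                 unavailable, assignment)
            if solution is not None:
                return solution
            del assignment[shifts[index]]  # undo and try the next resident
    return None


if __name__ == "__main__":
    shifts = ["Mon", "Tue", "Wed", "Thu"]
    residents = ["Ana", "Ben"]
    print(backtrack(shifts, residents, 2, {("Ana", "Mon")}))
    # Capping each resident at one shift leaves four shifts uncoverable.
    print(backtrack(shifts, residents, 1, {("Ana", "Mon")}))
```

When the search returns `None`, the solver cannot say which constraint should give way; deciding whether to raise the cap or override someone's unavailability is the fairness question the assignment raises.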

Sentiment Classification and Maximum Group Loss

Although each of the problems in the problem set builds on the others, the ethics assignment itself begins with Problem 4: Toxicity Classification and Maximum Group Loss. Toxicity classifiers are designed to assist in moderating online forums by predicting whether an online comment is toxic or not so that comments predicted to be toxic can be flagged for humans to review. Unfortunately, such models have been observed to be biased: non-toxic comments mentioning demographic identities often get misclassified as toxic (e.g., “I am a [demographic identity]”). These biases arise because toxic comments often mention and attack demographic identities, and as a result, models learn to _spuriously correlate_ toxicity with the mention of these identities. Therefore, some groups are more likely to have comments incorrectly flagged for review: their group-level loss is higher than that of other groups.
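The gap between average loss and maximum group loss can be sketched in a few lines. The example below assumes zero-one loss and one group label per example; the group names and data are hypothetical, and the assignment's own loss function may differ.

```python
from collections import defaultdict


def zero_one_loss(y_true, y_pred):
    """1.0 for a misclassification, 0.0 otherwise."""
    return 0.0 if y_true == y_pred else 1.0


def average_loss(examples):
    """Mean loss over all (group, y_true, y_pred) examples."""
    losses = [zero_one_loss(y, p) for _, y, p in examples]
    return sum(losses) / len(losses)


def per_group_loss(examples):
    """Mean loss within each group."""
    by_group = defaultdict(list)
    for group, y_true, y_pred in examples:
        by_group[group].append(zero_one_loss(y_true, y_pred))
    return {g: sum(v) / len(v) for g, v in by_group.items()}


def max_group_loss(examples):
    """Return (worst-off group, its mean loss)."""
    losses = per_group_loss(examples)
    worst = max(losses, key=losses.get)
    return worst, losses[worst]


if __name__ == "__main__":
    # Label 1 = toxic, 0 = non-toxic. Two non-toxic comments that mention
    # an identity are wrongly flagged as toxic in this toy data.
    toy = [
        ("mentions_identity", 0, 1), ("mentions_identity", 0, 1),
        ("mentions_identity", 1, 1), ("mentions_identity", 0, 0),
        ("no_mention", 0, 0), ("no_mention", 1, 1),
        ("no_mention", 1, 0), ("no_mention", 0, 0),
    ]
    print(average_loss(toy))
    print(max_group_loss(toy))
```

A model chosen to minimize average loss can still leave one group with a much higher error rate, which is what the maximum group loss makes visible.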


Artificial Intelligence: Principles and Techniques

Artificial intelligence (AI) has had a huge impact in many areas, including medical diagnosis, speech recognition, robotics, web search, advertising, and scheduling. This course focuses on the foundational concepts that drive these applications. In short, AI is the mathematics of making good decisions given incomplete information (hence the need for probability) and limited computation (hence the need for algorithms). Specific topics include search, constraint satisfaction, game playing, Markov decision processes, graphical models, machine learning, and logic.

Introduction to Computer Organization & Systems

Introduction to the fundamental concepts of computer systems. Explores how computer systems execute programs and manipulate data, working from the C programming language down to the microprocessor.

Introduction to Human-Computer Interaction

Introduces fundamental methods and principles for designing, implementing, and evaluating user interfaces. Topics: user-centered design, rapid prototyping, experimentation, direct manipulation, cognitive principles, visual design, social software, software tools. Learn by doing: work with a team on a quarter-long design project, supported by lectures, readings, and studios.

Probability for Computer Scientists

Introduction to topics in probability including counting and combinatorics, random variables, conditional probability, independence, distributions, expectation, point estimation, and limit theorems. Applications of probability in computer science including machine learning and the use of probability in the analysis of algorithms.

Programming Methodology

Introduction to the engineering of computer applications emphasizing modern software engineering principles: object-oriented design, decomposition, encapsulation, abstraction, and testing. Emphasis is on good programming style and the built-in facilities of respective languages. No prior programming experience required.

Programming Abstractions

Abstraction and its relation to programming. Software engineering principles of data abstraction and modularity. Object-oriented programming, fundamental data structures (such as stacks, queues, sets) and data-directed design. Recursion and recursive data structures (linked lists, trees, graphs). Introduction to time and space complexity analysis. Uses the programming language C++ covering its basic facilities.

Reinforcement Learning

To realize the dreams and impact of AI requires autonomous systems that learn to make good decisions. Reinforcement learning is one powerful paradigm for doing so, and it is relevant to an enormous range of tasks, including robotics, game playing, consumer modeling and healthcare. This class will provide a solid introduction to the field of reinforcement learning and students will learn about the core challenges and approaches, including generalization and exploration. Through a combination of lectures, and written and coding assignments, students will become well versed in key ideas and techniques for RL. Assignments will include the basics of reinforcement learning as well as deep reinforcement learning — an extremely promising new area that combines deep learning techniques with reinforcement learning.

Operating Systems Principles

This class introduces the basic facilities provided by modern operating systems. The course divides into three major sections. The first part of the course discusses concurrency: how to manage multiple tasks that execute at the same time and share resources. Topics in this section include processes and threads, context switching, synchronization, scheduling, and deadlock. The second part of the course addresses the problem of memory management; it will cover topics such as linking, dynamic memory allocation, dynamic address translation, virtual memory, and demand paging. The third major part of the course concerns file systems, including topics such as storage devices, disk management and scheduling, directories, protection, and crash recovery. After these three major topics, the class will conclude with a few smaller topics such as virtual machines.

Design and Analysis of Algorithms

Worst and average case analysis. Recurrences and asymptotics. Efficient algorithms for sorting, searching, and selection. Data structures: binary search trees, heaps, hash tables. Algorithm design techniques: divide-and-conquer, dynamic programming, greedy algorithms, amortized analysis, randomization. Algorithms for fundamental graph problems: minimum-cost spanning tree, connected components, topological sort, and shortest paths. Possible additional topics: network flow, string searching.

Design for Behavior Change

Over the last decade, tech companies have invested in shaping user behavior, sometimes for altruistic reasons like helping people change bad habits into good ones, and sometimes for financial reasons such as increasing engagement. In this project-based hands-on course, students explore the design of systems, information and interface for human use. We will model the flow of interactions, data and context, and craft a design that is useful, appropriate and robust. Students will design and prototype utility apps or games as a response to the challenges presented. We will also examine the ethical consequences of design decisions and explore current issues arising from unintended consequences. Prerequisite: CS147 or equivalent.

A new initiative seeks to integrate ethical thinking into computing

Technology is facing a bit of a reckoning.

Algorithms impact free speech, privacy, and autonomy. They, or the datasets on which they are trained, are often infused with bias or used to inappropriately manipulate people. And many technology companies are facing pushback against their immense power to impact the wellbeing of individuals and democratic institutions. Policymakers clearly need to address these problems. But universities also have an important role to play in preparing the next generation of computer scientists, says Mehran Sahami, professor and associate chair for education in the Computer Science department at Stanford University. “Computer scientists need to think about ethical issues from the outset rather than just building technology and letting problems surface downstream.”

To that end, the Stanford Computer Science department, the McCoy Family Center for Ethics in Society and the Institute for Human-Centered Artificial Intelligence (HAI) are jointly launching an initiative to create ethics-based curriculum modules that will be embedded in the university’s core undergraduate computer science courses. Called Embedded EthiCS (the uppercase CS stands for computer science), the program is being developed in collaboration with a network of researchers who launched a similar program at Harvard University in 2017.

“Embedded EthiCS will allow us to revisit different ethical topics throughout the curriculum and have students get a better appreciation that these issues come up in a more constant and consistent manner, rather than just being addressed on the side or after the fact,” Sahami says.

Once the modules have been successfully implemented at Stanford, they will be disseminated online (under a Creative Commons license) and available for other universities to use or adapt as a part of their own core undergraduate computer science courses. “We hope, through this initiative, to make an engagement with ethical questions inescapable for people majoring in computer science everywhere,” says Rob Reich, professor of political science in the School of Humanities and Sciences, director of the McCoy Family Center for Ethics in Society, and associate director of Stanford HAI.

Expanding the Curriculum

Teaching ethics to Stanford undergraduate computer science students is not new. Individual courses have been around for more than 20 years, and a new interdisciplinary Ethics and Technology course was launched three years ago by Reich, Sahami, professor of political science in the School of Humanities and Sciences Jeremy Weinstein, and other collaborators. But the Embedded EthiCS initiative will ensure that more students understand the importance of ethics in a technological context, Sahami says. And it signals to students that ethics is absolutely integral to their computer science education.

The initiative, which is funded by a member of the HAI advisory board, has already taken its first step: hiring Embedded EthiCS fellow Kathleen Creel. She will collaborate with computer science faculty to develop ethics modules that will be integrated into core undergraduate computer science courses during the next two years.

Creel, who says she feels as if she’s been training for this job her whole life, double majored in computer science and philosophy as an undergraduate before working in tech and then getting her PhD in the history and philosophy of science.

“Studying computer science changed the way I think about everything,” Creel says. She remembers being delighted by the way her mindset shifted as she learned how to formulate problems, define variables, and create optimization algorithms. She also realized (with help from her philosophy coursework) that each of those steps raised ethical questions. For example: For whom is this a problem? Who benefits from the solution to this problem? How does the formulation of this problem have ethical consequences? What am I trying to optimize?

“One of the hopes behind the Embedded EthiCS curriculum is that as you’re learning this whole computational mindset that will change your life and the way you think about everything, you’ll also practice, throughout the whole curriculum, building ethical thinking into that mindset.”

‘Spaces to Think’

The Embedded EthiCS modules created by Creel and her collaborators will be deployed in one class during the fall quarter of 2020, and two classes in each of the Winter and Spring quarters of 2021. Each module will include at least one lecture and one assignment that grapples with ethical issues relevant to the course. But Creel says she and her collaborators are also working on ways to more deeply embed the modules — so that they aren’t just stand-alone days.

Topics covered will vary depending on the course, but will include fairness and bias in machine learning algorithms, the manipulation of digital images, and other issues of interpersonal ethics in technology, such as how a self-driving car should behave in order to preserve human life or minimize suffering. Creel says modules will also address how technology should function in a democratic society, as well as “meta-ethical” issues such as how a person might balance duties as a software engineer for a particular company with duties as a moral agent more generally. “Students often want very much to do the right thing and want opportunities and spaces to think about how to do it,” Creel says.

The goal, says Anne Newman, research director at the McCoy Family Center for Ethics in Society, is “for students to gain the skills to be good reasoners about ethical dilemmas, and to understand what the competing values are – that there are value tensions and how to muddle through those.”

As Reich sees it, “We want the pipeline of first-rate computer scientists coming out of Stanford to have a full complement of ethical frameworks to accompany their technical prowess.” At the same time, he hopes that the many students at Stanford who take intro computer science courses but don’t major in the field will also benefit from understanding the ethical, social, and political implications of technology — whether as informed citizens, consumers, policy experts, researchers, or civil society leaders. “We won’t create overnight a new landscape for the governance or regulation of technology or professional ethics for computer scientists or technologists, but rather by educating the next generation,” he says.


How a new program at Stanford is embedding ethics into computer science

Shortly after Kathleen Creel started her position at Stanford as the inaugural Embedded EthiCS fellow some two years ago, a colleague sent her a 1989 newspaper clipping about the launch of Stanford’s first computer ethics course to show her how the university has long been committed to what Creel was tasked with: helping Stanford students understand the moral and ethical dimensions of technology.

Kathleen Creel is training the next generation of entrepreneurs and engineers to identify and work through various ethical and moral problems they will encounter in their careers. (Image credit: Courtesy Kathleen Creel)

While much has changed since the article was first published in the San Jose Mercury News, many of the issues that reporter Tom Philp discussed with renowned Stanford computer scientist Terry Winograd in the article remain relevant.

Describing some of the topics Stanford students were going to deliberate in Winograd’s course, during a period he described as “rapidly changing,” Philp wrote: “Should students freely share copyrighted software? Should they be concerned if their work has military applications? Should they submit a project on deadline if they are concerned that potential bugs could ruin peoples’ work?”

Three decades later, Winograd’s course on computer ethics has evolved, but now it is joined by a host of other efforts to expand ethics curricula at Stanford. Indeed, one of the main themes of the university’s Long Range Vision is embedding ethics across research and education. In 2020, the university launched the Ethics, Society, and Technology (EST) Hub, whose goal is to help ensure that technological advances born at Stanford address the full range of ethical and societal implications.

That same year, the EST Hub, in collaboration with the Stanford Institute for Human-Centered Artificial Intelligence (HAI), the McCoy Family Center for Ethics in Society, and the Computer Science Department, created the Embedded EthiCS program, which will embed ethics modules into core computer science courses. Creel is Embedded EthiCS’ first fellow.

Stanford University, situated in the heart of Silicon Valley and intertwined with the influence and impact inspired by technological innovations in the region and beyond, is a vital place for future engineers and technologists to think through their societal responsibilities, Creel said.

“I think teaching ethics specifically at Stanford is very important because many Stanford students go on to be very influential in the world of tech,” said Creel, whose own research explores the moral, political, and epistemic implications of how machine learning is used in the world.

“If we can make any difference in the culture of tech, Stanford is a good place to be doing it,” she said.

Establishing an ethical mindset

Creel is both a computer scientist and a philosopher. After double-majoring in both fields at Williams College in Massachusetts, she worked as a software engineer at MIT Lincoln Laboratory on a large-scale satellite project. There, she found herself asking profound, philosophical questions about the dependence on technology in high-stakes situations, particularly when it comes to how AI-based systems have evolved to inform people’s decision-making. She wondered: how do people know they can trust these tools, and what information do they need in order to believe that such tools can be a reliable addition to or substitute for human judgment?

Creel decided to confront these questions head-on at graduate school, and in 2020, she earned her PhD in history and philosophy of science at the University of Pittsburgh.

During her time at Stanford, Creel has collaborated with faculty and lecturers across Stanford’s Computer Science department to identify various opportunities for students to think through the social consequences of technology – even if it’s just one or five minutes at a time.

Rather than have ethics be its own standalone seminar or dedicated class topic that is often presented at either the beginning or end of a course, the Embedded EthiCS program aims to intersperse ethics throughout the quarter by integrating it into core course assignments, class discussions, and lectures.

“The objective is to weave ethics into the curriculum organically so that it feels like a natural part of their practice,” said Creel. Creel has worked with professors on nine computer science courses, including CS106A: Programming Methodology; CS106B: Programming Abstractions; CS107: Computer Organization and Systems; CS109: Introduction to Probability for Computer Scientists; CS221: Artificial Intelligence: Principles and Techniques; CS161: Design and Analysis of Algorithms; and CS47B: Design for Behavior Change.

During her fellowship, Creel gave engaging lectures about specific ethical issues and worked with professors to develop new coursework that demonstrates how the choices students will make as engineers carry broader implications for society.

One of the instructors Creel worked with was Nick Troccoli, a lecturer in the Computer Science Department. Troccoli teaches CS 107: Computer Organization & Systems, the third course in Stanford’s introductory programming sequence, which focuses mostly on how computer systems execute programs. Although some initially wondered how ethics would fit into such a technical curriculum, Creel and Troccoli, along with course assistant Brynne Hurst, found clear hooks for ethics discussions in assignments, lectures, and labs throughout the course.

For example, they refreshed a classic assignment about how to figure out a program’s behavior without seeing its code (“reverse engineering”). Students were asked to imagine they were security researchers hired by a bank to discover how a data breach had occurred, and how the hacked information could be combined with other publicly-available information to discover bank customers’ secrets.

Creel talked about how anonymized datasets can be reverse engineered to reveal identifying information and why that is a problem. She introduced the students to different models of privacy, including differential privacy, a technique that makes privacy in a database more robust by limiting how much any released result can reveal about a single individual.
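One standard way to achieve differential privacy for a counting query is the Laplace mechanism, which adds noise scaled to the query's sensitivity divided by the privacy parameter epsilon. The sketch below is illustrative only: the article does not say which mechanism was taught, and the function names and toy records are hypothetical.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon, rng=None):
    """Return a noisy count of the records that satisfy `predicate`.

    Adding or removing one record changes a count by at most 1, so the
    sensitivity is 1 and the noise scale is 1 / epsilon. A smaller
    epsilon means more noise and stronger privacy.
    """
    rng = rng or random.Random()
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1.0 / epsilon, rng)


if __name__ == "__main__":
    # Hypothetical bank records; the query asks how many balances exceed 1000.
    accounts = [{"balance": b} for b in (50, 1200, 3000, 20, 800)]
    rng = random.Random(0)
    print(private_count(accounts, lambda r: r["balance"] > 1000, 0.5, rng))
```

Because the answer is randomized, no single released count pins down whether a particular customer's record is in the database, while averages over many records remain useful.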

Students were then tasked to provide recommendations to further anonymize or obfuscate data to avoid breaches.

“Katie helped students understand what potential scenarios may arise as a result of programming and how ethics can be a tool to allow you to better understand those kinds of issues,” Troccoli said.

Another instructor Creel worked with was Assistant Professor Aviad Rubinstein, who teaches CS161: Design and Analysis of Algorithms.

Creel and Rubinstein, joined by research assistant Ananya Karthik and course assistant Golrokh Emami, came up with an assignment where students were asked to create an algorithm that would help a popular distributor decide the locations of its warehouses and determine which customers received one- versus two-day delivery.

Students worked through the many variables to determine warehouse location, such as optimizing cost with existing customer demand and driver route efficiency. If the algorithm prioritized these features, closer examination would reveal that historically redlined Black American neighborhoods would be excluded from receiving one-day delivery.

Students were then asked to develop another algorithm that would address the delivery issue while also optimizing even coverage and cost.

The goal of the exercise was to show students that as engineers, they are also decision-makers whose choices carry real-world consequences that can affect equity and inclusion in communities across the country. Students were asked to also share what those concepts mean to them.

“The hope is to show them this is a problem they might genuinely face and that they might use algorithms to solve, and that ethics will guide them in making this choice,” Creel said. “Using the tools that we’ve taught them in the ethics curriculum, they will now be able to understand that choosing an algorithm is indeed a moral choice that they are making, not only a technical one.”

Developing moral courage

Some students have shared with Creel how they themselves have been subject to algorithmic biases.

For example, when the pandemic shuttered high schools across the country, some school districts turned to online proctoring services to help them deliver exams remotely. These services automate the supervision of students and their surroundings while they take a test.

However, these AI-driven services have come under criticism, particularly around issues concerning privacy and racial bias. For example, the scanning software sometimes fails to detect students with darker skin, Creel said.

Sometimes, there are just glitches in the computer system and the AI will flag a student even though no offense has taken place. But because of the proprietary nature of the technology, how the algorithm came to its decision is not always entirely apparent.

“Students really understand how if these services were more transparent, they could have pointed to something that could prove why an automated flag that may have gone up was wrong,” said Creel.

Overall, Creel said, students have been eager to develop the skillset to help them discuss and deliberate on the ethical dilemmas they could encounter in their professional careers.

“I think they are very aware that they, as young engineers, could be in a situation where someone above them asks them to do something that they don’t think is right,” she added. “They want tools to figure out what is right, and I think they also want help building the moral courage to figure out how to say no and to interact in an environment where they may not have a lot of power. For many of them, it feels very important and existential.”

Creel is now transitioning from her role at Stanford to Northeastern University where she will hold a joint appointment as an assistant professor of philosophy and computer science.


Technology is facing a bit of a reckoning. Algorithms impact free speech, privacy, and autonomy. They, or the datasets on which they are trained, are often infused with bias or used to inappropriately manipulate people. And many technology companies are facing pushback against their immense power to impact the wellbeing of individuals and democratic institutions. Policymakers clearly need to address these problems. But universities also have an important role to play in preparing the next generation of computer scientists, says Mehran Sahami , professor and associate chair for education in the Computer Science department at Stanford University . “Computer scientists need to think about ethical issues from the outset rather than just building technology and letting problems surface downstream.”

To that end, the Stanford Computer Science department, the McCoy Family Center for Ethics in Society and the Institute for Human-Centered Artificial Intelligence (HAI) are jointly launching an initiative to create ethics-based curriculum modules that will be embedded in the university’s core undergraduate computer science courses. Called Embedded EthiCS (the uppercase CS stands for computer science), the program is being developed in collaboration with a network of researchers who launched a similar program at Harvard University in 2017.

“Embedded EthiCS will allow us to revisit different ethical topics throughout the curriculum and have students get a better appreciation that these issues come up in a more constant and consistent manner, rather than just being addressed on the side or after the fact,” Sahami says.

Once the modules have been successfully implemented at Stanford, they will be disseminated online (under a Creative Commons license) and available for other universities to use or adapt as a part of their own core undergraduate computer science courses. “We hope, through this initiative, to make an engagement with ethical questions inescapable for people majoring in computer science everywhere,” says Rob Reich, professor of political science in the School of Humanities and Sciences, director of the McCoy Family Center for Ethics in Society, and associate director of Stanford HAI.

Expanding the Curriculum

Teaching ethics to Stanford undergraduate computer science students is not new. Individual courses have been around for more than 20 years, and a new interdisciplinary Ethics and Technology course was launched three years ago by Reich, Sahami, professor of political science in the School of Humanities and Sciences Jeremy Weinstein, and other collaborators. But the Embedded EthiCS initiative will ensure that more students understand the importance of ethics in a technological context, Sahami says. And it signals to students that ethics is absolutely integral to their computer science education.

The initiative, which is funded by a member of the HAI advisory board, has already taken its first step: hiring Embedded EthiCS fellow Kathleen Creel. She will collaborate with computer science faculty to develop ethics modules that will be integrated into core undergraduate computer science courses during the next two years.

Creel, who says she feels as if she’s been training for this job her whole life, double majored in computer science and philosophy as an undergraduate before working in tech and then getting her PhD in the history and philosophy of science.

“Studying computer science changed the way I think about everything,” Creel says. She remembers being delighted by the way her mindset shifted as she learned how to formulate problems, define variables, and create optimization algorithms. She also realized (with help from her philosophy coursework) that each of those steps raised ethical questions. For example: For whom is this a problem? Who benefits from the solution to this problem? How does the formulation of this problem have ethical consequences? What am I trying to optimize?

“One of the hopes behind the Embedded EthiCS curriculum is that as you’re learning this whole computational mindset that will change your life and the way you think about everything, you’ll also practice, throughout the whole curriculum, building ethical thinking into that mindset.”

‘Spaces to Think’

The Embedded EthiCS modules created by Creel and her collaborators will be deployed in one class during the fall quarter of 2020, and two classes in each of the winter and spring quarters of 2021. Each module will include at least one lecture and one assignment that grapples with ethical issues relevant to the course. But Creel says she and her collaborators are also working on ways to more deeply embed the modules – so that they aren’t just stand-alone days.

Topics covered will vary depending on the course, but will include fairness and bias in machine learning algorithms, the manipulation of digital images, and other issues of interpersonal ethics in technology, such as how a self-driving car should behave in order to preserve human life or minimize suffering. Creel says modules will also address how technology should function in a democratic society, as well as “meta-ethical” issues such as how a person might balance duties as a software engineer for a particular company with duties as a moral agent more generally. “Students often want very much to do the right thing and want opportunities and spaces to think about how to do it,” Creel says.

The goal, says Anne Newman, research director at the McCoy Family Center for Ethics in Society, is “for students to gain the skills to be good reasoners about ethical dilemmas, and to understand what the competing values are – that there are value tensions and how to muddle through those.”

As Reich sees it, “We want the pipeline of first-rate computer scientists coming out of Stanford to have a full complement of ethical frameworks to accompany their technical prowess.” At the same time, he hopes that the many students at Stanford who take intro computer science courses but don’t major in the field will also benefit from understanding the ethical, social, and political implications of technology – whether as informed citizens, consumers, policy experts, researchers, or civil society leaders. “We won’t create overnight a new landscape for the governance or regulation of technology or professional ethics for computer scientists or technologists, but rather by educating the next generation,” he says.

More News Topics

Related content.

David Magnus: How will artificial intelligence impact medical ethics?

In recent years, the explosion of artificial intelligence in medicine has yielded an increase in hope for patient...

What is the most effective way to bring AI into the classroom?

How An AI-based “Super Teaching Assistant” Could Revolutionize Learning

Stanford researchers propose a system that can better understand student needs and broaden STEM education.

Featured Topics

Featured series.

A series of random questions answered by Harvard experts.

Explore the Gazette

Read the latest.

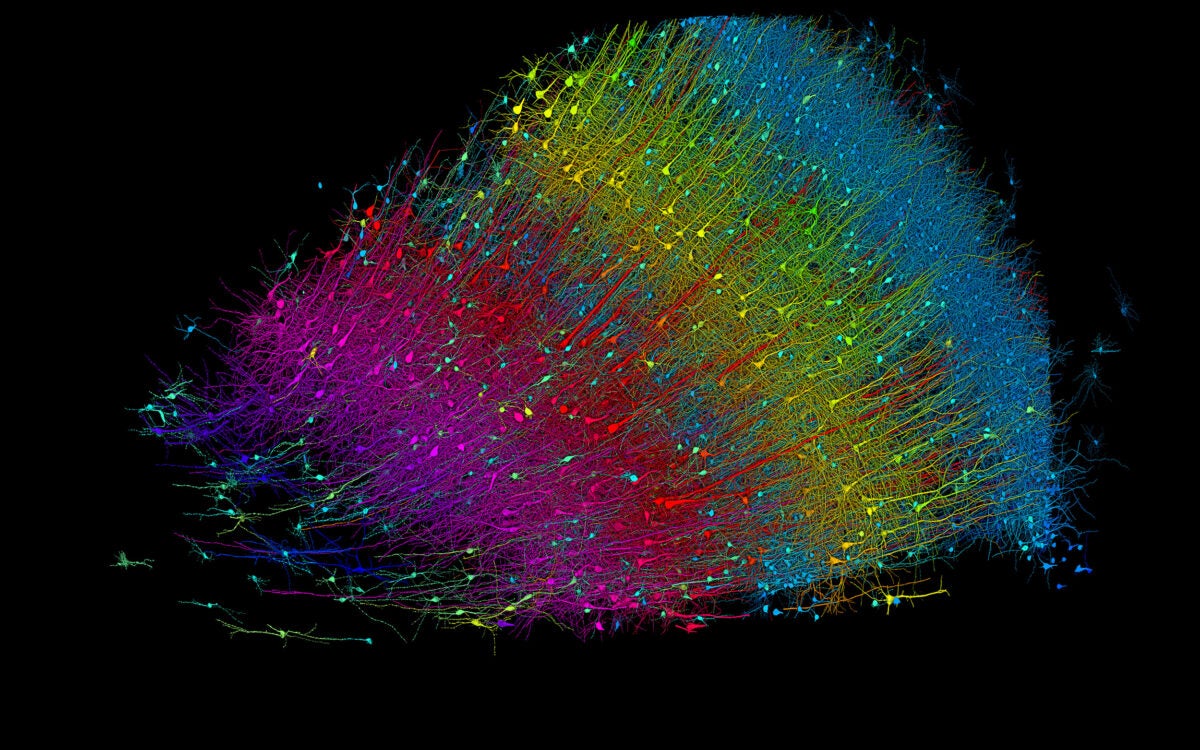

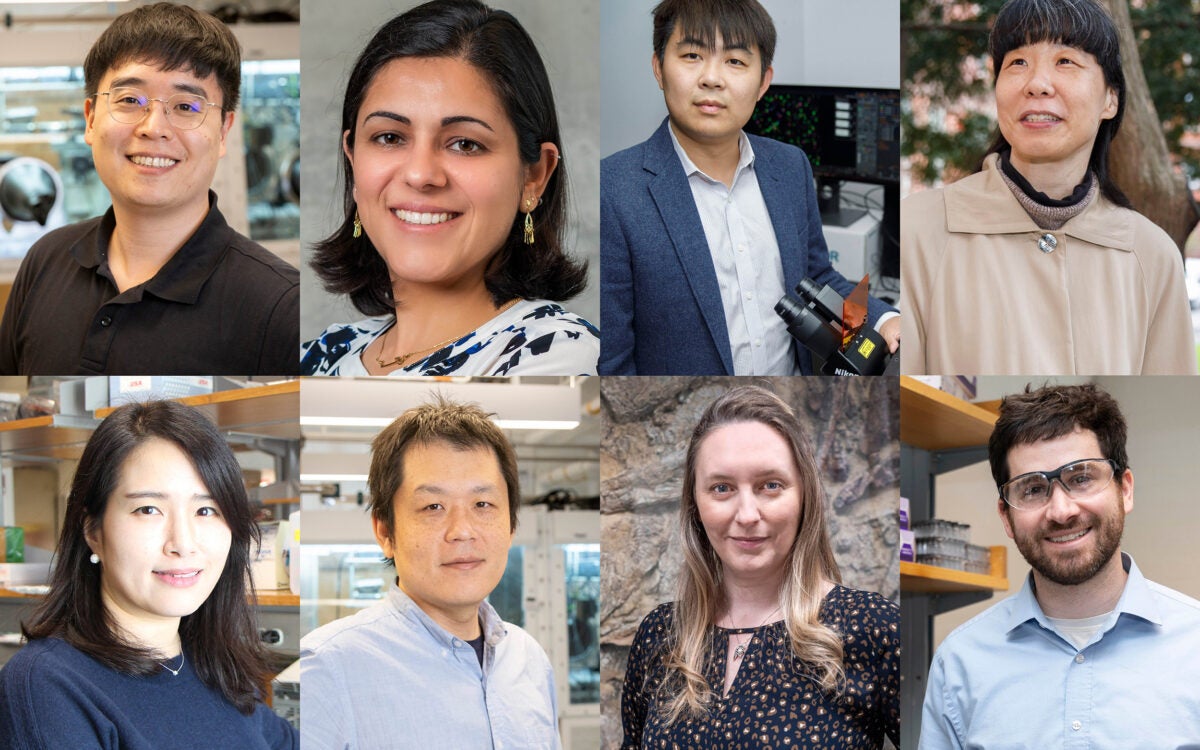

Epic science inside a cubic millimeter of brain

What is ‘original scholarship’ in the age of AI?

Complex questions, innovative approaches

Embedding ethics in computer science curriculum.

Photo illustration by Judy Blomquist/Harvard Staff

Paul Karoff

SEAS Communications

Harvard initiative seen as a national model

Barbara Grosz has a fantasy that every time a computer scientist logs on to write an algorithm or build a system, a message will flash across the screen that asks, “Have you thought about the ethical implications of what you’re doing?”

Until that day arrives, Grosz, the Higgins Professor of Natural Sciences at the Harvard John A. Paulson School of Engineering and Applied Sciences (SEAS), is working to instill in the next generation of computer scientists a mindset that considers the societal impact of their work, and the ethical reasoning and communications skills to do so.

“Ethics permeates the design of almost every computer system or algorithm that’s going out in the world,” Grosz said. “We want to educate our students to think not only about what systems they could build, but whether they should build those systems and how they should design those systems.”

At a time when computer science departments around the country are grappling with how to turn out graduates who understand ethics as well as algorithms, Harvard is taking a novel approach.

In 2015, Grosz designed a new course called “Intelligent Systems: Design and Ethical Challenges.” An expert in artificial intelligence and a pioneer in natural language processing, Grosz turned to colleagues from Harvard’s philosophy department to co-teach the course. They interspersed into the course’s technical content a series of real-life ethical conundrums and the relevant philosophical theories necessary to evaluate them. This forced students to confront questions that, unlike most computer science problems, have no obvious correct answer.

Students responded. The course quickly attracted a following and by the second year 140 people were competing for 30 spots. There was a demand for more such courses, not only on the part of students, but by Grosz’s computer science faculty colleagues as well.

“The faculty thought this was interesting and important, but they didn’t have expertise in ethics to teach it themselves,” she said.

Barbara Grosz (from left), Jeffrey Behrends, and Alison Simmons hope Harvard’s approach to turning out graduates who understand ethics as well as algorithms becomes a national model.

Rose Lincoln/Harvard Staff Photographer

In response, Grosz and collaborator Alison Simmons, the Samuel H. Wolcott Professor of Philosophy, developed a model that draws on the expertise of the philosophy department and integrates it into a growing list of more than a dozen computer science courses, from introductory programming to graduate-level theory.

Under the initiative, dubbed Embedded EthiCS, philosophy graduate students are paired with computer science faculty members. Together, they review the course material and decide on an ethically rich topic that will naturally arise from the content. A graduate student identifies readings and develops a case study, activities, and assignments that will reinforce the material. The computer science and philosophy instructors teach side by side when the Embedded EthiCS material is brought to the classroom.

Grosz and her philosophy colleagues are at the center of a movement that they hope will spread to computer science programs around the country. Harvard’s “distributed pedagogy” approach is different from many university programs that treat ethics by adding a stand-alone course that is, more often than not, just an elective for computer science majors.

“Standalone courses can be great, but they can send the message that ethics is something that you think about after you’ve done your ‘real’ computer science work,” Simmons said. “We want to send the message that ethical reasoning is part of what you do as a computer scientist.”

Embedding ethics across the curriculum helps computer science students see how ethical issues can arise from many contexts, issues ranging from the way social networks facilitate the spread of false information to censorship to machine-learning techniques that empower statistical inferences in employment and in the criminal justice system.

Courses in artificial intelligence and machine learning are obvious areas for ethical discussions, but Embedded EthiCS also has built modules for less-obvious pairings, such as applied algebra.

“We really want to get students habituated to thinking: How might an ethical issue arise in this context or that context?” Simmons said.

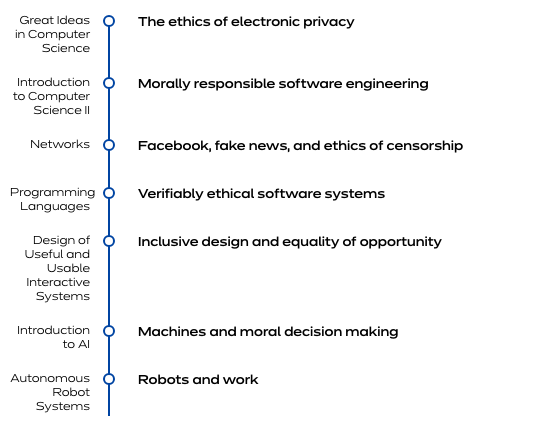

Curriculum at a glance

A sampling of classes from the Embedded EthiCS pilot program and the issues they address

- Great Ideas in Computer Science: The ethics of electronic privacy

- Introduction to Computer Science II: Morally responsible software engineering

- Networks: Facebook, fake news, and ethics of censorship

- Programming Languages: Verifiably ethical software systems

- Design of Useful and Usable Interactive Systems: Inclusive design and equality of opportunity

- Introduction to AI: Machines and moral decision making

- Autonomous Robot Systems: Robots and work

David Parkes, George F. Colony Professor of Computer Science, teaches a wide-ranging undergraduate class on topics in algorithmic economics. “Without this initiative, I would have struggled to craft the right ethical questions related to rules for matching markets, or choosing objectives for recommender systems,” he said. “It has been an eye-opening experience to get students to think carefully about ethical issues.”

Grosz acknowledged that it can be a challenge for computer science faculty and their students to wrap their heads around often opaque ethical quandaries.

“Computer scientists are used to there being ways to prove problem set answers correct or algorithms efficient,” she said. “To wind up in a situation where different values lead to there being trade-offs and ways to support different ‘right conclusions’ is a challenging mind shift. But getting these normative issues into the computer system designer’s mind is crucial for society right now.”

Jeffrey Behrends, currently a fellow-in-residence at Harvard’s Edmond J. Safra Center for Ethics, has co-taught the design and ethics course with Grosz. Behrends said the experience revealed greater harmony between the two fields than one might expect.

“Once students who are unfamiliar with philosophy are introduced to it, they realize that it’s not some arcane enterprise that’s wholly independent from other ways of thinking about the world,” he said. “A lot of students who are attracted to computer science are also attracted to some of the methodologies of philosophy, because we emphasize rigorous thinking. We emphasize a methodology for solving problems that doesn’t look too dissimilar from some of the methodologies in solving problems in computer science.”

The Embedded EthiCS model has attracted interest from universities — and companies — around the country. Recently, experts from more than 20 institutions gathered at Harvard for a workshop on the challenges and best practices for integrating ethics into computer science curricula. Mary Gray, a senior researcher at Microsoft Research (and a fellow at Harvard’s Berkman Klein Center for Internet and Society), who helped convene the gathering, said that in addition to impeccable technical chops, employers increasingly are looking for people who understand the need to create technology that is accessible and socially responsible.

“Our challenge in industry is to help researchers and practitioners not see ethics as a box that has to be checked at the end, but rather to think about these things from the very beginning of a project,” Gray said.

Those concerns recently inspired the Association for Computing Machinery (ACM), the world’s largest scientific and educational computing society, to update its code of ethics for the first time since 1992.

In hope of spreading the Embedded EthiCS concept widely across the computer science landscape, Grosz and colleagues have authored a paper to be published in the journal Communications of the ACM and launched a website to serve as an open-source repository of their most successful course modules.

They envision a culture shift that leads to a new generation of ethically minded computer science practitioners.

“In our dream world, success will lead to better-informed policymakers and new corporate models of organization that build ethics into all stages of design and corporate leadership,” Behrends says.

The experiment has also led to interesting conversations beyond the realm of computer science.

“We’ve been doing this in the context of technology, but embedding ethics in this way is important for every scientific discipline that is putting things out in the world,” Grosz said. “To do that, we will need to grow a generation of philosophers who will think about ways in which they can take philosophical ethics and normative thinking, and bring it to all of science and technology.”

Carefully designed course modules

At the heart of the Embedded EthiCS program are carefully designed, course-specific modules, collaboratively developed by faculty along with computer science and philosophy graduate student teaching fellows.

A module that Kate Vredenburgh, a philosophy Ph.D. student, created for a course taught by Professor Finale Doshi-Velez asks students to grapple with questions of how machine-learning models can be discriminatory, and how that discrimination can be reduced. An introductory lecture sets out a philosophical framework of what discrimination is, including the concepts of disparate treatment and impact. Students learn how eliminating discrimination in machine learning requires more than simply reducing bias in the technical sense. Even setting a socially good task may not be enough to reduce discrimination, since machine learning relies on predictively useful correlations and those correlations sometimes result in increased inequality between groups.

The module illuminates the ramifications and potential limitations of using a disparate impact definition to identify discrimination. It also introduces technical computer science work on discrimination — statistical fairness criteria. An in-class exercise focuses on a case in which an algorithm that predicts the success of job applicants to sales positions at a major retailer results in fewer African-Americans being recommended for positions than white applicants.
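To make the disparate impact idea concrete, the following is a minimal sketch of how one might compare recommendation rates between two applicant groups. It is not taken from the module itself: the group labels, the applicant counts, and the helper names `selection_rates` and `disparate_impact_ratio` are hypothetical and chosen only for illustration.

```python
from collections import Counter


def selection_rates(records):
    """Return the fraction of applicants recommended within each group.

    `records` is an iterable of (group, recommended) pairs, where
    `recommended` is True when the model recommended the applicant.
    """
    totals = Counter()
    selected = Counter()
    for group, recommended in records:
        totals[group] += 1
        if recommended:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates, group, reference):
    """Ratio of one group's selection rate to a reference group's rate."""
    return rates[group] / rates[reference]


# Hypothetical data, invented for illustration: 100 applicants per group.
records = (
    [("A", True)] * 60 + [("A", False)] * 40
    + [("B", True)] * 30 + [("B", False)] * 70
)

rates = selection_rates(records)
ratio = disparate_impact_ratio(rates, "B", "A")
print(rates)
print(ratio)
```

A ratio well below 1 signals the kind of gap in outcomes that a disparate impact definition is meant to flag. As the module stresses, a single number like this cannot settle whether the underlying process is discriminatory.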

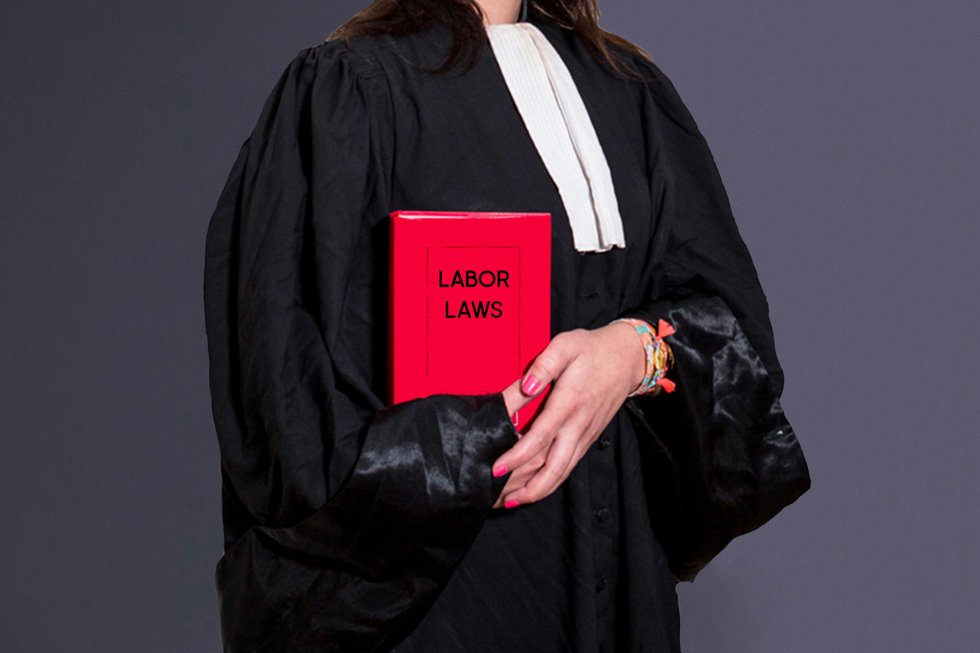

An out-of-class assignment asks students to draw on this grounding to address a concrete ethical problem faced by working computer scientists (that is, software engineers working for the Department of Labor). The assignment gives students an opportunity to apply the material to a real-world problem of the sort they might face in their careers, and asks them to articulate and defend their approach to solving the problem.



2018 ACM Code

ACM Code of Ethics and Professional Conduct

This Code and its guidelines were adopted by the ACM Council on June 22nd, 2018. The Preamble was amended on October 22, 2021 to reflect changes in ACM award policies.

Computing professionals’ actions change the world. To act responsibly, they should reflect upon the wider impacts of their work, consistently supporting the public good. The ACM Code of Ethics and Professional Conduct (“the Code”) expresses the conscience of the profession.

The Code is designed to inspire and guide the ethical conduct of all computing professionals, including current and aspiring practitioners, instructors, students, influencers, and anyone who uses computing technology in an impactful way. Additionally, the Code serves as a basis for remediation when violations occur. The Code includes principles formulated as statements of responsibility, based on the understanding that the public good is always the primary consideration. Each principle is supplemented by guidelines, which provide explanations to assist computing professionals in understanding and applying the principle.

Section 1 outlines fundamental ethical principles that form the basis for the remainder of the Code. Section 2 addresses additional, more specific considerations of professional responsibility. Section 3 guides individuals who have a leadership role, whether in the workplace or in a volunteer professional capacity. Commitment to ethical conduct is required of every ACM member, ACM SIG member, ACM award recipient, and ACM SIG award recipient. Principles involving compliance with the Code are given in Section 4.

The Code as a whole is concerned with how fundamental ethical principles apply to a computing professional’s conduct. The Code is not an algorithm for solving ethical problems; rather it serves as a basis for ethical decision-making. When thinking through a particular issue, a computing professional may find that multiple principles should be taken into account, and that different principles will have different relevance to the issue. Questions related to these kinds of issues can best be answered by thoughtful consideration of the fundamental ethical principles, understanding that the public good is the paramount consideration. The entire computing profession benefits when the ethical decision-making process is accountable to and transparent to all stakeholders. Open discussions about ethical issues promote this accountability and transparency.

1. GENERAL ETHICAL PRINCIPLES.

A computing professional should…

1.1 Contribute to society and to human well-being, acknowledging that all people are stakeholders in computing.

This principle, which concerns the quality of life of all people, affirms an obligation of computing professionals, both individually and collectively, to use their skills for the benefit of society, its members, and the environment surrounding them. This obligation includes promoting fundamental human rights and protecting each individual’s right to autonomy. An essential aim of computing professionals is to minimize negative consequences of computing, including threats to health, safety, personal security, and privacy. When the interests of multiple groups conflict, the needs of those less advantaged should be given increased attention and priority.

Computing professionals should consider whether the results of their efforts will respect diversity, will be used in socially responsible ways, will meet social needs, and will be broadly accessible. They are encouraged to actively contribute to society by engaging in pro bono or volunteer work that benefits the public good.

In addition to a safe social environment, human well-being requires a safe natural environment. Therefore, computing professionals should promote environmental sustainability both locally and globally.

1.2 Avoid harm.

In this document, “harm” means negative consequences, especially when those consequences are significant and unjust. Examples of harm include unjustified physical or mental injury, unjustified destruction or disclosure of information, and unjustified damage to property, reputation, and the environment. This list is not exhaustive.

Well-intended actions, including those that accomplish assigned duties, may lead to harm. When that harm is unintended, those responsible are obliged to undo or mitigate the harm as much as possible. Avoiding harm begins with careful consideration of potential impacts on all those affected by decisions. When harm is an intentional part of the system, those responsible are obligated to ensure that the harm is ethically justified. In either case, ensure that all harm is minimized.

To minimize the possibility of indirectly or unintentionally harming others, computing professionals should follow generally accepted best practices unless there is a compelling ethical reason to do otherwise. Additionally, the consequences of data aggregation and emergent properties of systems should be carefully analyzed. Those involved with pervasive or infrastructure systems should also consider Principle 3.7.

A computing professional has an additional obligation to report any signs of system risks that might result in harm. If leaders do not act to curtail or mitigate such risks, it may be necessary to “blow the whistle” to reduce potential harm. However, capricious or misguided reporting of risks can itself be harmful. Before reporting risks, a computing professional should carefully assess relevant aspects of the situation.

1.3 Be honest and trustworthy.

Honesty is an essential component of trustworthiness. A computing professional should be transparent and provide full disclosure of all pertinent system capabilities, limitations, and potential problems to the appropriate parties. Making deliberately false or misleading claims, fabricating or falsifying data, offering or accepting bribes, and other dishonest conduct are violations of the Code.

Computing professionals should be honest about their qualifications, and about any limitations in their competence to complete a task. Computing professionals should be forthright about any circumstances that might lead to either real or perceived conflicts of interest or otherwise tend to undermine the independence of their judgment. Furthermore, commitments should be honored.

Computing professionals should not misrepresent an organization’s policies or procedures, and should not speak on behalf of an organization unless authorized to do so.

1.4 Be fair and take action not to discriminate.

The values of equality, tolerance, respect for others, and justice govern this principle. Fairness requires that even careful decision processes provide some avenue for redress of grievances.

Computing professionals should foster fair participation of all people, including those of underrepresented groups. Prejudicial discrimination on the basis of age, color, disability, ethnicity, family status, gender identity, labor union membership, military status, nationality, race, religion or belief, sex, sexual orientation, or any other inappropriate factor is an explicit violation of the Code. Harassment, including sexual harassment, bullying, and other abuses of power and authority, is a form of discrimination that, amongst other harms, limits fair access to the virtual and physical spaces where such harassment takes place.

The use of information and technology may cause new, or enhance existing, inequities. Technologies and practices should be as inclusive and accessible as possible and computing professionals should take action to avoid creating systems or technologies that disenfranchise or oppress people. Failure to design for inclusiveness and accessibility may constitute unfair discrimination.

1.5 Respect the work required to produce new ideas, inventions, creative works, and computing artifacts.

Developing new ideas, inventions, creative works, and computing artifacts creates value for society, and those who expend this effort should expect to gain value from their work. Computing professionals should therefore credit the creators of ideas, inventions, work, and artifacts, and respect copyrights, patents, trade secrets, license agreements, and other methods of protecting authors’ works.

Both custom and the law recognize that some exceptions to a creator’s control of a work are necessary for the public good. Computing professionals should not unduly oppose reasonable uses of their intellectual works. Efforts to help others by contributing time and energy to projects that help society illustrate a positive aspect of this principle. Such efforts include free and open source software and work put into the public domain. Computing professionals should not claim private ownership of work that they or others have shared as public resources.

1.6 Respect privacy.

The responsibility of respecting privacy applies to computing professionals in a particularly profound way. Technology enables the collection, monitoring, and exchange of personal information quickly, inexpensively, and often without the knowledge of the people affected. Therefore, a computing professional should become conversant in the various definitions and forms of privacy and should understand the rights and responsibilities associated with the collection and use of personal information.

Computing professionals should only use personal information for legitimate ends and without violating the rights of individuals and groups. This requires taking precautions to prevent re-identification of anonymized data or unauthorized data collection, ensuring the accuracy of data, understanding the provenance of the data, and protecting it from unauthorized access and accidental disclosure. Computing professionals should establish transparent policies and procedures that allow individuals to understand what data is being collected and how it is being used, to give informed consent for automatic data collection, and to review, obtain, correct inaccuracies in, and delete their personal data.

Only the minimum amount of personal information necessary should be collected in a system. The retention and disposal periods for that information should be clearly defined, enforced, and communicated to data subjects. Personal information gathered for a specific purpose should not be used for other purposes without the person’s consent. Merged data collections can compromise privacy features present in the original collections. Therefore, computing professionals should take special care for privacy when merging data collections.

1.7 Honor confidentiality.

Computing professionals are often entrusted with confidential information such as trade secrets, client data, nonpublic business strategies, financial information, research data, pre-publication scholarly articles, and patent applications. Computing professionals should protect confidentiality except in cases where it is evidence of the violation of law, of organizational regulations, or of the Code. In these cases, the nature or contents of that information should not be disclosed except to appropriate authorities. A computing professional should consider thoughtfully whether such disclosures are consistent with the Code.

2. PROFESSIONAL RESPONSIBILITIES.

2.1 Strive to achieve high quality in both the processes and products of professional work.

Computing professionals should insist on and support high quality work from themselves and from colleagues. The dignity of employers, employees, colleagues, clients, users, and anyone else affected either directly or indirectly by the work should be respected throughout the process. Computing professionals should respect the right of those involved to transparent communication about the project. Professionals should be cognizant of any serious negative consequences affecting any stakeholder that may result from poor quality work and should resist inducements to neglect this responsibility.

2.2 Maintain high standards of professional competence, conduct, and ethical practice.

High quality computing depends on individuals and teams who take personal and group responsibility for acquiring and maintaining professional competence. Professional competence starts with technical knowledge and with awareness of the social context in which their work may be deployed. Professional competence also requires skill in communication, in reflective analysis, and in recognizing and navigating ethical challenges. Upgrading skills should be an ongoing process and might include independent study, attending conferences or seminars, and other informal or formal education. Professional organizations and employers should encourage and facilitate these activities.

2.3 Know and respect existing rules pertaining to professional work.

“Rules” here include local, regional, national, and international laws and regulations, as well as any policies and procedures of the organizations to which the professional belongs. Computing professionals must abide by these rules unless there is a compelling ethical justification to do otherwise. Rules that are judged unethical should be challenged. A rule may be unethical when it has an inadequate moral basis or causes recognizable harm. A computing professional should consider challenging the rule through existing channels before violating the rule. A computing professional who decides to violate a rule because it is unethical, or for any other reason, must consider potential consequences and accept responsibility for that action.

2.4 Accept and provide appropriate professional review.

High quality professional work in computing depends on professional review at all stages. Whenever appropriate, computing professionals should seek and utilize peer and stakeholder review. Computing professionals should also provide constructive, critical reviews of others’ work.

2.5 Give comprehensive and thorough evaluations of computer systems and their impacts, including analysis of possible risks.

Computing professionals are in a position of trust, and therefore have a special responsibility to provide objective, credible evaluations and testimony to employers, employees, clients, users, and the public. Computing professionals should strive to be perceptive, thorough, and objective when evaluating, recommending, and presenting system descriptions and alternatives. Extraordinary care should be taken to identify and mitigate potential risks in machine learning systems. A system for which future risks cannot be reliably predicted requires frequent reassessment of risk as the system evolves in use, or it should not be deployed. Any issues that might result in major risk must be reported to appropriate parties.

2.6 Perform work only in areas of competence.

A computing professional is responsible for evaluating potential work assignments. This includes evaluating the work’s feasibility and advisability, and making a judgment about whether the work assignment is within the professional’s areas of competence. If at any time before or during the work assignment the professional identifies a lack of a necessary expertise, they must disclose this to the employer or client. The client or employer may decide to pursue the assignment with the professional after additional time to acquire the necessary competencies, to pursue the assignment with someone else who has the required expertise, or to forgo the assignment. A computing professional’s ethical judgment should be the final guide in deciding whether to work on the assignment.

2.7 Foster public awareness and understanding of computing, related technologies, and their consequences.

As appropriate to the context and one’s abilities, computing professionals should share technical knowledge with the public, foster awareness of computing, and encourage understanding of computing. These communications with the public should be clear, respectful, and welcoming. Important issues include the impacts of computer systems, their limitations, their vulnerabilities, and the opportunities that they present. Additionally, a computing professional should respectfully address inaccurate or misleading information related to computing.

2.8 Access computing and communication resources only when authorized or when compelled by the public good.

Individuals and organizations have the right to restrict access to their systems and data so long as the restrictions are consistent with other principles in the Code. Consequently, computing professionals should not access another’s computer system, software, or data without a reasonable belief that such an action would be authorized or a compelling belief that it is consistent with the public good. A system being publicly accessible is not sufficient grounds on its own to imply authorization. Under exceptional circumstances a computing professional may use unauthorized access to disrupt or inhibit the functioning of malicious systems; extraordinary precautions must be taken in these instances to avoid harm to others.

2.9 Design and implement systems that are robustly and usably secure.

Breaches of computer security cause harm. Robust security should be a primary consideration when designing and implementing systems. Computing professionals should perform due diligence to ensure the system functions as intended, and take appropriate action to secure resources against accidental and intentional misuse, modification, and denial of service. As threats can arise and change after a system is deployed, computing professionals should integrate mitigation techniques and policies, such as monitoring, patching, and vulnerability reporting. Computing professionals should also take steps to ensure parties affected by data breaches are notified in a timely and clear manner, providing appropriate guidance and remediation.

To ensure the system achieves its intended purpose, security features should be designed to be as intuitive and easy to use as possible. Computing professionals should discourage security precautions that are too confusing, are situationally inappropriate, or otherwise inhibit legitimate use.

In cases where misuse or harm are predictable or unavoidable, the best option may be to not implement the system.

3. PROFESSIONAL LEADERSHIP PRINCIPLES.

Leadership may either be a formal designation or arise informally from influence over others. In this section, “leader” means any member of an organization or group who has influence, educational responsibilities, or managerial responsibilities. While these principles apply to all computing professionals, leaders bear a heightened responsibility to uphold and promote them, both within and through their organizations.

A computing professional, especially one acting as a leader, should…

3.1 Ensure that the public good is the central concern during all professional computing work.

People—including users, customers, colleagues, and others affected directly or indirectly—should always be the central concern in computing. The public good should always be an explicit consideration when evaluating tasks associated with research, requirements analysis, design, implementation, testing, validation, deployment, maintenance, retirement, and disposal. Computing professionals should keep this focus no matter which methodologies or techniques they use in their practice.

3.2 Articulate, encourage acceptance of, and evaluate fulfillment of social responsibilities by members of the organization or group.

Technical organizations and groups affect broader society, and their leaders should accept the associated responsibilities. Organizations—through procedures and attitudes oriented toward quality, transparency, and the welfare of society—reduce harm to the public and raise awareness of the influence of technology in our lives. Therefore, leaders should encourage full participation of computing professionals in meeting relevant social responsibilities and discourage tendencies to do otherwise.

3.3 Manage personnel and resources to enhance the quality of working life.

Leaders should ensure that they enhance, not degrade, the quality of working life. Leaders should consider the personal and professional development, accessibility requirements, physical safety, psychological well-being, and human dignity of all workers. Appropriate human-computer ergonomic standards should be used in the workplace.

3.4 Articulate, apply, and support policies and processes that reflect the principles of the Code.

Leaders should pursue clearly defined organizational policies that are consistent with the Code and effectively communicate them to relevant stakeholders. In addition, leaders should encourage and reward compliance with those policies, and take appropriate action when policies are violated. Designing or implementing processes that deliberately or negligently violate, or tend to enable the violation of, the Code’s principles is ethically unacceptable.

3.5 Create opportunities for members of the organization or group to grow as professionals.

Educational opportunities are essential for all organization and group members. Leaders should ensure that opportunities are available to computing professionals to help them improve their knowledge and skills in professionalism, in the practice of ethics, and in their technical specialties. These opportunities should include experiences that familiarize computing professionals with the consequences and limitations of particular types of systems. Computing professionals should be fully aware of the dangers of oversimplified approaches, the improbability of anticipating every possible operating condition, the inevitability of software errors, the interactions of systems and their contexts, and other issues related to the complexity of their profession—and thus be confident in taking on responsibilities for the work that they do.

3.6 Use care when modifying or retiring systems.

Interface changes, the removal of features, and even software updates have an impact on the productivity of users and the quality of their work. Leaders should take care when changing or discontinuing support for system features on which people still depend. Leaders should thoroughly investigate viable alternatives to removing support for a legacy system. If these alternatives are unacceptably risky or impractical, the developer should assist stakeholders’ graceful migration from the system to an alternative. Users should be notified of the risks of continued use of the unsupported system long before support ends. Computing professionals should assist system users in monitoring the operational viability of their computing systems, and help them understand that timely replacement of inappropriate or outdated features or entire systems may be needed.

3.7 Recognize and take special care of systems that become integrated into the infrastructure of society.

Even the simplest computer systems have the potential to impact all aspects of society when integrated with everyday activities such as commerce, travel, government, healthcare, and education. When organizations and groups develop systems that become an important part of the infrastructure of society, their leaders have an added responsibility to be good stewards of these systems. Part of that stewardship requires establishing policies for fair system access, including for those who may have been excluded. That stewardship also requires that computing professionals monitor the level of integration of their systems into the infrastructure of society. As the level of adoption changes, the ethical responsibilities of the organization or group are likely to change as well. Continual monitoring of how society is using a system will allow the organization or group to remain consistent with their ethical obligations outlined in the Code. When appropriate standards of care do not exist, computing professionals have a duty to ensure they are developed.

4. COMPLIANCE WITH THE CODE.

4.1 Uphold, promote, and respect the principles of the Code.

The future of computing depends on both technical and ethical excellence. Computing professionals should adhere to the principles of the Code and contribute to improving them. Computing professionals who recognize breaches of the Code should take actions to resolve the ethical issues they recognize, including, when reasonable, expressing their concern to the person or persons thought to be violating the Code.

4.2 Treat violations of the Code as inconsistent with membership in the ACM.

Each ACM member should encourage and support adherence by all computing professionals regardless of ACM membership. ACM members who recognize a breach of the Code should consider reporting the violation to the ACM, which may result in remedial action as specified in the ACM’s Code of Ethics and Professional Conduct Enforcement Policy.

The Code and guidelines were developed by the ACM Code 2018 Task Force: Executive Committee Don Gotterbarn (Chair), Bo Brinkman, Catherine Flick, Michael S Kirkpatrick, Keith Miller, Kate Varansky, and Marty J Wolf. Members: Eve Anderson, Ron Anderson, Amy Bruckman, Karla Carter, Michael Davis, Penny Duquenoy, Jeremy Epstein, Kai Kimppa, Lorraine Kisselburgh, Shrawan Kumar, Andrew McGettrick, Natasa Milic-Frayling, Denise Oram, Simon Rogerson, David Shama, Janice Sipior, Eugene Spafford, and Les Waguespack. The Task Force was organized by the ACM Committee on Professional Ethics. Significant contributions to the Code were also made by the broader international ACM membership. This Code and its guidelines were adopted by the ACM Council on June 22nd, 2018.

This Code may be published without permission as long as it is not changed in any way and it carries the copyright notice. Copyright (c) 2018 by the Association for Computing Machinery.

Be sure to check out our guide on Using the Code for decision making for practicing engineers.

The official repository of the ACM Code of Ethics and Professional Conduct is https://www.acm.org/about-acm/acm-code-of-ethics-and-professional-conduct. This Code constitutes Bylaw 15 of the Bylaws of the Association for Computing Machinery.

Unethical and Illegal Practices in Coding: From Prevention to Action

Jun 04, 2019