Introduction to Linear Algebra for Data Science

- August 21, 2023

Let’s dive into the core concepts behind linear algebra, with examples and illustrations. This is a series of lessons on linear algebra covering the main ideas behind this important topic.

Introduction

The world of data science is vast, complex, and intriguing. At the heart of many algorithms, especially in machine learning and deep learning, lies a foundational mathematical tool: linear algebra.

If you’re diving into data science or just curious about its mathematical backbone, this post is for you.

You will understand the essential components of linear algebra, its significance in Data Science, and learn from tangible examples for better comprehension.

Let’s get started.

1. What is Linear Algebra?

Linear Algebra is a branch of mathematics that deals with vectors, vector spaces, linear transformations, and matrices. These entities can be used to depict and solve systems of linear equations, among other tasks.
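To make this concrete, here is a minimal NumPy sketch that writes a small system of linear equations in matrix form, Ax = b, and solves it. The two equations are an arbitrary example chosen for illustration.

```python
import numpy as np

# Arbitrary example system:
#   2x +  y = 5
#    x + 3y = 10
A = np.array([[2.0, 1.0],
              [1.0, 3.0]])   # coefficient matrix
b = np.array([5.0, 10.0])    # right-hand side

x = np.linalg.solve(A, b)    # solves A @ x = b
print(x)                     # [1. 3.]
```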

Let’s now understand the fundamental concepts used in Linear Algebra.

2. Fundamental Concepts

To get started with linear algebra, you need to understand a few basic terms. Let’s define them.

Matrix: A matrix is an m x n two-dimensional array of numbers, arranged in m rows and n columns. It’s essentially a collection of vectors: each row (or each column) is itself a vector. For example, a matrix A with three rows and two columns is a 3 x 2 matrix.

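Tensor: An n-dimensional array, where n can be greater than 2. For example, a color image is commonly stored as a three-dimensional array of height x width x color channels.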
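As a minimal sketch of the matrix and tensor definitions above, the NumPy snippet below builds a 3 x 2 matrix from arbitrary values and stacks four scaled copies of it into a three-dimensional tensor.

```python
import numpy as np

# A 3 x 2 matrix: three rows, two columns (values are arbitrary)
A = np.array([[1, 2],
              [3, 4],
              [5, 6]])
print(A.shape)   # (3, 2)
print(A[:, 0])   # first column, itself a vector: [1 3 5]

# A tensor: four 3 x 2 matrices stacked along a new first axis
T = np.stack([A, 2 * A, 3 * A, 4 * A])
print(T.shape)   # (4, 3, 2)
print(T.ndim)    # 3
```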

Linear Transformations: Maps between vector spaces that preserve vector addition and scalar multiplication, meaning T(u + v) = T(u) + T(v) and T(cu) = cT(u). Matrices can represent these transformations.
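A minimal NumPy sketch of a linear transformation, assuming a 90-degree rotation matrix as the example: it checks numerically that applying the matrix preserves vector addition and scalar multiplication. The vectors and the scalar are arbitrary.

```python
import numpy as np

theta = np.pi / 2  # rotate by 90 degrees (arbitrary choice)
R = np.array([[np.cos(theta), -np.sin(theta)],
              [np.sin(theta),  np.cos(theta)]])

def T(v):
    """Apply the linear transformation represented by the matrix R."""
    return R @ v

u = np.array([1.0, 0.0])
v = np.array([2.0, 3.0])
c = 4.0

# Linearity: T(u + v) == T(u) + T(v) and T(c * u) == c * T(u)
print(np.allclose(T(u + v), T(u) + T(v)))  # True
print(np.allclose(T(c * u), c * T(u)))     # True
print(np.round(T(u), 6))                   # [0. 1.]
```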

Dot Product: This is the sum of the products of corresponding elements of two vectors of the same length.

For vectors a = [a1, a2] and b = [b1, b2], the dot product is a1*b1 + a2*b2.

Example: For vectors v1 = [2, 3] and v2 = [4, 5], the dot product is 2*4 + 3*5 = 8 + 15 = 23.
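The same dot product can be computed in NumPy; the snippet below checks the hand calculation of 23 both with an explicit sum of products and with the built-in operations.

```python
import numpy as np

v1 = np.array([2, 3])
v2 = np.array([4, 5])

# Sum of products of corresponding elements, written out explicitly
manual = sum(a * b for a, b in zip(v1, v2))

print(manual)          # 23
print(np.dot(v1, v2))  # 23
print(v1 @ v2)         # 23
```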

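Matrix Multiplication: Involves taking the dot product of rows of the first matrix with columns of the second matrix, so the number of columns of the first matrix must equal the number of rows of the second.

Example: To multiply matrices A and B, the entry in the 1st row, 1st column of the resulting matrix is the dot product of the 1st row of A and the 1st column of B. More generally, the entry in row i, column j is the dot product of row i of A and column j of B.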
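A sketch of matrix multiplication written directly from the row-times-column rule, compared with NumPy's built-in `@` operator. The helper name `matmul` and the example matrices are illustrative, not from any library.

```python
import numpy as np

def matmul(A, B):
    """Multiply A (m x n) by B (n x p) using row-by-column dot products."""
    m, n = A.shape
    n2, p = B.shape
    if n != n2:
        raise ValueError("columns of A must equal rows of B")
    C = np.zeros((m, p))
    for i in range(m):
        for j in range(p):
            # Entry (i, j) is the dot product of row i of A and column j of B
            C[i, j] = np.dot(A[i, :], B[:, j])
    return C

A = np.array([[1, 2],
              [3, 4]])
B = np.array([[5, 6],
              [7, 8]])

print(matmul(A, B))  # entries 19, 22 (first row) and 43, 50 (second row)
print(A @ B)         # NumPy's built-in matrix product gives the same values
```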

Matrix Addition and Subtraction: Both matrices must have the same dimensions.

Addition: The element at row i, column j in the resulting matrix is the sum of the elements at row i, column j in the two matrices being added.

Subtraction: Same as addition, but the elements are subtracted.
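In NumPy, matrix addition and subtraction are the element-wise `+` and `-` operators; the matrices below are arbitrary examples.

```python
import numpy as np

A = np.array([[1, 2],
              [3, 4]])
B = np.array([[10, 20],
              [30, 40]])

S = A + B   # element (i, j) is A[i, j] + B[i, j]
D = B - A   # element (i, j) is B[i, j] - A[i, j]

print(S)    # [[11 22]
            #  [33 44]]
print(D)    # [[ 9 18]
            #  [27 36]]
```

NumPy will also broadcast arrays of different but compatible shapes, which goes beyond matrix addition as defined above, so it is worth checking shapes when you intend strict matrix arithmetic.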

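Determinant and Inverse:

Determinant: A scalar value, defined for square matrices, that indicates the “volume scaling factor” of a linear transformation. A negative determinant means the transformation also flips orientation, and a zero determinant means it collapses space into a lower dimension.

Inverse: If B is the inverse of a square matrix A, then multiplying A and B (in either order) yields the identity matrix. A matrix has an inverse only if its determinant is nonzero.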
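A short NumPy sketch of both ideas, using arbitrary example matrices: it computes a determinant, checks that a matrix times its inverse gives the identity, and shows that inverting a matrix with determinant 0 fails.

```python
import numpy as np

A = np.array([[4.0, 7.0],
              [2.0, 6.0]])

det = np.linalg.det(A)   # 4*6 - 7*2 = 10 (up to floating-point rounding)
A_inv = np.linalg.inv(A)

print(round(det, 6))                       # 10.0
print(np.allclose(A @ A_inv, np.eye(2)))   # True
print(np.allclose(A_inv @ A, np.eye(2)))   # True

# A singular matrix (determinant 0) has no inverse
S = np.array([[1.0, 2.0],
              [2.0, 4.0]])
try:
    np.linalg.inv(S)
except np.linalg.LinAlgError:
    print("S is singular")  # S is singular
```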

3. Why is Linear Algebra Essential for Data Scientists?

There are several reasons why linear algebra matters for data scientists.

For one, eigenvalues and eigenvectors are at the core of Principal Component Analysis (PCA), and vector and matrix operations such as pairwise distance computations underpin nonlinear methods like t-SNE. Both are used for dimensionality reduction and for visualizing high-dimensional data.

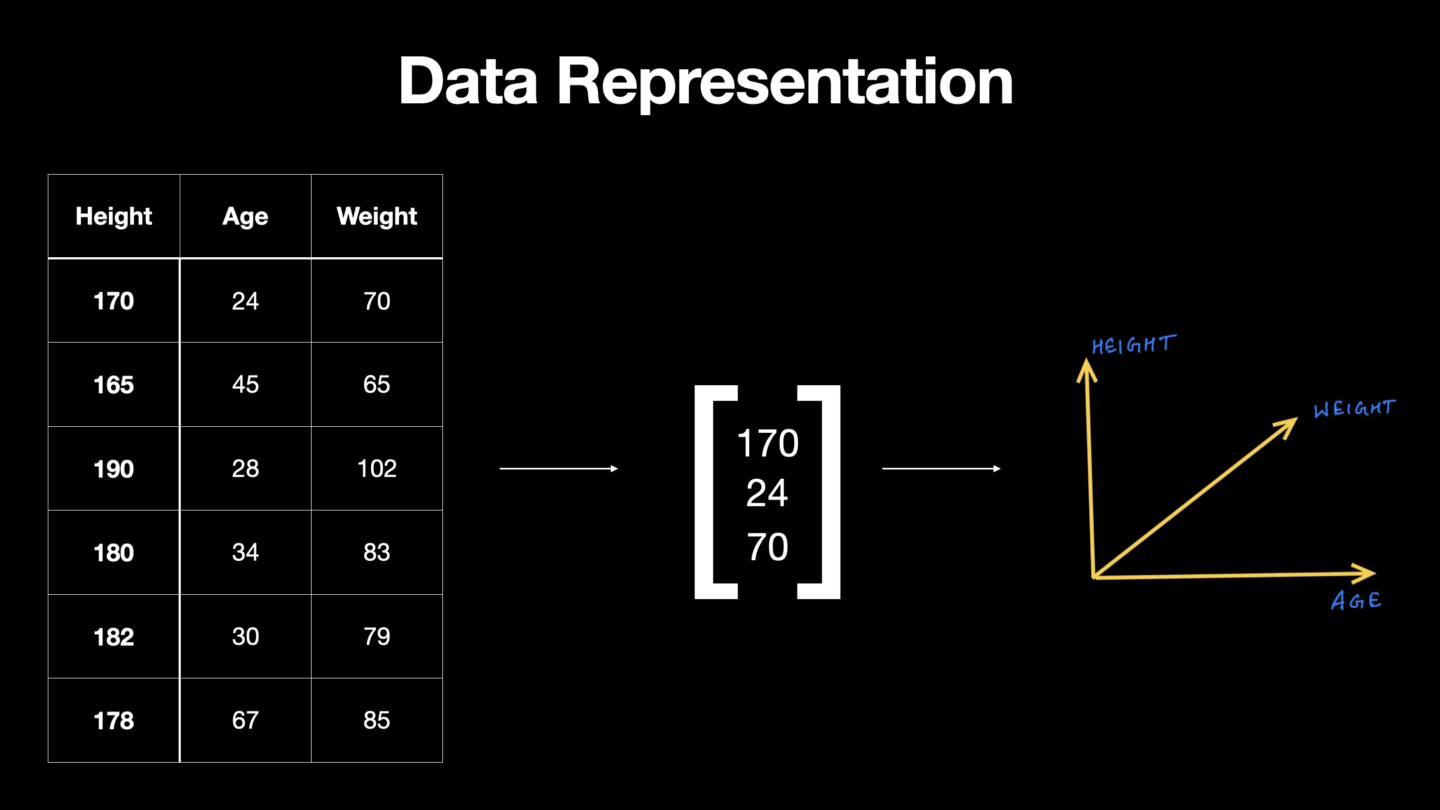

To represent data: In data science, data is often represented as matrices or tensors (multi-dimensional arrays). For example, an image in a computer can be represented as a matrix of pixel values. Understanding how to manipulate these matrices is needed for many data tasks.

Memory-efficient computations: Operations on matrices and vectors can be highly optimized in modern computational libraries. Knowing how to use linear algebra allows you to tap into these optimizations, making computations faster and more memory efficient.

Libraries like NumPy (in Python) and environments like MATLAB are grounded in linear algebra. These tools are staples in the data science toolbox, and they are designed to handle matrix operations efficiently.

Conceptual Understanding: Beyond the computational benefits, a solid grasp of linear algebra provides a deeper conceptual understanding of many data science techniques. For example, understanding the geometric interpretation of vectors and matrices can provide intuition about why certain algorithms work and how they can be improved.

Optimization: Fitting models such as linear regression can be formulated as optimization problems and solved using linear algebraic techniques; ordinary least squares, for example, has a closed-form solution via the normal equations. Iterative techniques such as gradient descent involve vector and matrix calculations. Ridge and lasso regression prevent overfitting by penalizing the norm of the coefficient vector (the L2 norm for ridge, the L1 norm for lasso).
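As a sketch of how linear regression reduces to linear algebra, the snippet below fits ordinary least squares by solving the normal equations (XᵀX)β = Xᵀy, then adds an L2 (ridge) penalty, which changes the system to (XᵀX + λI)β = Xᵀy. The data are synthetic, the model has no intercept for simplicity, and λ = 1.0 is an arbitrary choice. Lasso has no closed form like this and is usually solved iteratively.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data: y = 3*x1 - 2*x2 + small noise (no intercept, for simplicity)
X = rng.normal(size=(100, 2))
true_beta = np.array([3.0, -2.0])
y = X @ true_beta + 0.1 * rng.normal(size=100)

# Ordinary least squares via the normal equations: (X^T X) beta = X^T y
beta_ols = np.linalg.solve(X.T @ X, X.T @ y)

# Ridge regression: (X^T X + lam * I) beta = X^T y
lam = 1.0
beta_ridge = np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ y)

print(beta_ols)    # close to [3, -2]
print(beta_ridge)  # coefficient vector with a smaller norm (shrunk toward zero)

# np.linalg.lstsq solves the same least-squares problem in a more stable way
beta_lstsq, *_ = np.linalg.lstsq(X, y, rcond=None)
print(np.allclose(beta_ols, beta_lstsq))  # True
```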

Signal Processing: For those working with time series data or images, Fourier transforms and convolution operations, which are rooted in linear algebra, are crucial.
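One way to see that the Fourier transform is linear algebra: the discrete Fourier transform (DFT) of a length-n signal is a multiplication by an n x n complex matrix. This sketch builds that matrix explicitly and compares the result with `np.fft.fft`, which computes the same transform with a fast algorithm. The signal is an arbitrary sine wave.

```python
import numpy as np

n = 8
k = np.arange(n)
# DFT matrix: F[j, m] = exp(-2*pi*i*j*m / n)
F = np.exp(-2j * np.pi * np.outer(k, k) / n)

# Test signal: a sine wave with 2 cycles over 8 samples
x = np.sin(2 * np.pi * 2 * k / n)

X_matrix = F @ x          # Fourier transform as a matrix-vector product
X_fft = np.fft.fft(x)     # NumPy's fast algorithm for the same transform

print(np.allclose(X_matrix, X_fft))   # True
print(np.round(np.abs(X_fft), 6))     # magnitude concentrated at bins 2 and 6
```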

Network Analysis: If you’re working with graph or network data, the adjacency matrix and the Laplacian matrix are foundational, and understanding their properties requires knowledge of linear algebra.
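A minimal sketch, assuming a small made-up graph: it builds the adjacency matrix, the degree matrix, and the Laplacian L = D - A, then uses the standard fact that the number of zero eigenvalues of the Laplacian equals the number of connected components.

```python
import numpy as np

# Undirected graph with 5 nodes and two connected components:
# edges 0-1 and 1-2 (first component), 3-4 (second component)
edges = [(0, 1), (1, 2), (3, 4)]
n = 5

A = np.zeros((n, n))           # adjacency matrix
for i, j in edges:
    A[i, j] = A[j, i] = 1

D = np.diag(A.sum(axis=1))     # degree matrix
L = D - A                      # graph Laplacian

# L is symmetric, so eigvalsh applies; the multiplicity of the
# eigenvalue 0 equals the number of connected components
eigenvalues = np.linalg.eigvalsh(L)
num_components = int(np.sum(np.isclose(eigenvalues, 0.0)))
print(num_components)  # 2
```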

4. Use of Linear Algebra in Machine Learning Algorithms

Neural Networks: Neural networks are made of interconnected units called neurons. Each neuron computes a weighted sum of its inputs plus a bias and passes the result through an activation function; for a whole layer, the weights form a matrix, so the layer applies a linear transformation followed by the activation. Most of the computation is therefore matrix multiplication.
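A minimal sketch of one fully connected layer, assuming a ReLU activation and randomly initialized weights (a trained network would learn these): the layer is a matrix-vector product plus a bias, followed by the activation, and a batch of inputs becomes a single matrix-matrix product. The layer sizes are arbitrary.

```python
import numpy as np

def relu(z):
    """Rectified linear unit activation, applied element-wise."""
    return np.maximum(z, 0.0)

rng = np.random.default_rng(42)

# One dense layer mapping 3 inputs to 4 neurons
W = rng.normal(size=(4, 3))    # weight matrix: one row per neuron
b = np.zeros(4)                # bias vector

x = np.array([0.5, -1.0, 2.0])  # a single input vector
h = relu(W @ x + b)             # linear transformation, then activation
print(h.shape)                  # (4,)

# A batch of 10 inputs is processed with one matrix multiplication
X_batch = rng.normal(size=(10, 3))
H_batch = relu(X_batch @ W.T + b)
print(H_batch.shape)            # (10, 4)
```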

Support Vector Machines: SVMs use dot products between feature vectors to compute the decision function and the margin between classes in classification problems, so linear algebra is at the heart of how they work.
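As a sketch of where the dot product enters, assume a linear SVM has already been trained and produced the made-up weight vector `w` and bias `b` below (training is not shown). Classification is the sign of w · x + b, and the width of the margin is 2 / ‖w‖.

```python
import numpy as np

# Hypothetical parameters of an already-trained linear SVM
w = np.array([2.0, -1.0])
b = -1.0

def decision(x):
    """Signed score w . x + b; its sign gives the predicted class."""
    return np.dot(w, x) + b

points = np.array([[2.0, 1.0],
                   [0.0, 1.0]])
labels = np.sign([decision(p) for p in points])
print(labels)                   # [ 1. -1.]

# Width of the margin between the hyperplanes w . x + b = +1 and -1
margin = 2.0 / np.linalg.norm(w)
print(round(margin, 4))         # 0.8944
```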

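Image Processing: Filters such as blurs and edge detectors are small matrices (kernels) that are slid across the image’s pixel matrix, replacing each pixel with a weighted sum of its neighbors.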
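A minimal sketch of applying a filter, assuming a grayscale image stored as a 2-D NumPy array and a 3 x 3 box-blur kernel. The helper `filter2d` is hypothetical and computes only the "valid" region where the kernel fits entirely inside the image; libraries such as SciPy provide optimized versions of this operation.

```python
import numpy as np

def filter2d(image, kernel):
    """Slide a kernel over a grayscale image ('valid' region only).

    Computes cross-correlation, which is how most image libraries apply
    filters; for symmetric kernels like the one below it equals convolution.
    """
    kh, kw = kernel.shape
    h, w = image.shape
    out = np.zeros((h - kh + 1, w - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            # Weighted sum of the pixel neighborhood under the kernel
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# A tiny 5 x 5 "image" with a single bright pixel in the middle
image = np.zeros((5, 5))
image[2, 2] = 9.0

box_blur = np.ones((3, 3)) / 9.0   # 3 x 3 averaging kernel
blurred = filter2d(image, box_blur)
print(blurred)   # every entry is 1.0 (up to rounding): the bright pixel spreads out
```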

Principal Component Analysis (PCA): PCA is a shining application of linear algebra. At its core, PCA is about finding the “principal components” (or directions) in which the data varies the most.

Step-by-Step Process (each step involves linear algebra):

Standardization: Ensure all features have a mean of 0 and a standard deviation of 1.

Covariance Matrix Computation: Compute a matrix capturing the covariance between every pair of features, with each feature’s variance on the diagonal.

Eigendecomposition: Find the eigenvectors (the principal components) and eigenvalues of the covariance matrix.

Projection: Project the data onto the eigenvectors with the largest eigenvalues, reducing its dimensions while preserving as much variance as possible.
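The four steps can be sketched in NumPy as below. This is a minimal illustration on synthetic data (a third feature that nearly duplicates the first), not a production implementation; scikit-learn, for example, provides a tested `PCA` class.

```python
import numpy as np

def pca(X, k):
    """Project X (samples x features) onto its top-k principal components."""
    # Step 1, standardization: zero mean and unit standard deviation per feature
    Z = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)
    # Step 2, covariance matrix of the standardized features (features x features)
    C = np.cov(Z, rowvar=False)
    # Step 3, eigendecomposition: eigh is meant for symmetric matrices and
    # returns eigenvalues in ascending order, so reorder them largest first
    eigenvalues, eigenvectors = np.linalg.eigh(C)
    order = np.argsort(eigenvalues)[::-1]
    eigenvalues, eigenvectors = eigenvalues[order], eigenvectors[:, order]
    # Step 4, projection onto the top-k eigenvectors (principal components)
    return Z @ eigenvectors[:, :k], eigenvalues

rng = np.random.default_rng(0)
base = rng.normal(size=(200, 2))
noise = 0.05 * rng.normal(size=200)
# Third feature is nearly a copy of the first, so one direction is redundant
X = np.column_stack([base[:, 0], base[:, 1], base[:, 0] + noise])

projected, eigenvalues = pca(X, k=2)
print(projected.shape)                   # (200, 2)
print(eigenvalues / eigenvalues.sum())   # share of total variance per component
```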

Linear algebra concepts are used in data science day in and day out. They offer a framework to manipulate, transform, and interpret data, making them essential for many ML algorithms and processes. As you advance in data science, a solid foundation in linear algebra will help.

© Machinelearningplus. All rights reserved.

How Linear Algebra Powers Computer Science and AI

As an essential pillar of mathematics, linear algebra equips computer scientists with fundamental tools to solve complex problems. From machine learning to computer graphics, linear algebra enables many critical applications of computing we rely on today.

If you’re short on time, here’s the key point: Linear algebra provides the mathematical foundation for representing and manipulating data in multidimensional space, which allows computers to process data for tasks like machine learning, computer vision, and graphics.

In this comprehensive guide, we’ll explore the indispensable role linear algebra plays across computer science and AI. You’ll learn foundational linear algebra concepts, how they enable multidimensional data analysis, and specific applications powering technologies like self-driving cars, facial recognition, and video games.

Core Concepts in Linear Algebra

Linear algebra is a fundamental branch of mathematics that plays a crucial role in various fields, including computer science and artificial intelligence (AI). Understanding the core concepts of linear algebra is essential for anyone interested in these disciplines.

In this section, we will explore some of the key concepts in linear algebra that power computer science and AI.

Vectors and Vector Spaces

Vectors are mathematical objects that represent both magnitude and direction. In the context of linear algebra, vectors are often used to represent quantities such as position, velocity, and force. They are an essential tool in computer science and AI for representing and manipulating data.

Vector spaces are sets of vectors that are closed under vector addition and scalar multiplication (and satisfy a few related axioms). They provide a framework for performing operations on vectors, such as addition, subtraction, and scalar multiplication. Vector spaces are fundamental to linear algebra and form the basis for many algorithms and computations in computer science and AI.

Matrices

Matrices are rectangular arrays of numbers or symbols arranged in rows and columns. They are widely used in computer science and AI to represent and manipulate data. Matrices are used to perform operations on vectors, such as rotations, scaling, and other linear transformations.

They are also used in solving systems of linear equations, which is a common problem in computer science and AI.

Matrix Operations

Matrix operations are fundamental to linear algebra and play a vital role in computer science and AI. Some of the key matrix operations include addition, subtraction, multiplication, and transposition. These operations are used to perform computations on matrices and solve various problems.

Eigenvalues and Eigenvectors

Eigenvalues and eigenvectors are important concepts in linear algebra that have applications in computer science and AI. An eigenvector of a square matrix A is a nonzero vector v whose direction is unchanged by A, so that Av = λv; the scalar λ is the corresponding eigenvalue, the factor by which v is stretched or shrunk.

They are used in various algorithms, such as principal component analysis, image compression, and recommendation systems.
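A quick numerical check of the eigenvector definition Av = λv, using an arbitrary symmetric 2 x 2 matrix: multiplying each eigenvector by the matrix only rescales it by its eigenvalue.

```python
import numpy as np

A = np.array([[2.0, 1.0],
              [1.0, 2.0]])

# The columns of `eigenvectors` are the eigenvectors of A
eigenvalues, eigenvectors = np.linalg.eig(A)

for lam, v in zip(eigenvalues, eigenvectors.T):
    # Multiplying by A only rescales an eigenvector by its eigenvalue
    print(lam, np.allclose(A @ v, lam * v))  # eigenvalues 3 and 1 (in some order), each with True
```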

Understanding the core concepts in linear algebra, such as vectors, matrices, matrix operations, and eigenvalues/eigenvectors, is essential for anyone working in computer science and AI. These concepts provide the foundation for solving complex problems and developing innovative algorithms.

To learn more about linear algebra and its applications in computer science and AI, you can visit reputable websites such as Khan Academy and Coursera.

Why Linear Algebra Is Critical for Multidimensional Data

Linear algebra plays a crucial role in computer science and artificial intelligence by enabling the representation, manipulation, and analysis of multidimensional data. This branch of mathematics provides powerful tools and techniques that allow researchers and developers to make sense of complex datasets and solve problems in various fields, from image recognition to natural language processing.

Representing Data Points in Space

One of the fundamental concepts in linear algebra is the ability to represent data points in space. In a two-dimensional Cartesian coordinate system, each data point can be represented as a vector with two components: the x-coordinate and the y-coordinate.

However, in real-world applications, data is often much more complex and can have hundreds, or even thousands, of dimensions. Linear algebra allows us to represent these high-dimensional data points as vectors in an n-dimensional space, where each component of the vector represents a different feature or attribute of the data.

This representation is essential for understanding the relationships and patterns within the data.

Transformations and Projections

Linear algebra also enables us to perform transformations and projections on multidimensional data. Transformations involve manipulating the data points using matrices, which can stretch, rotate, or reflect the data in different ways.

These transformations are crucial for tasks like image processing, where resizing, rotating, and applying filters to images require matrix operations. Projections, on the other hand, involve reducing the dimensions of the data while preserving its essential characteristics.

This technique is used in dimensionality reduction algorithms, such as Principal Component Analysis (PCA), which extract the most relevant features from high-dimensional data.

Analyzing Multidimensional Relationships

Another key application of linear algebra in computer science and AI is analyzing the relationships between multidimensional data. Linear algebra provides tools for measuring distances between data points, determining angles between vectors, and calculating similarities or dissimilarities among data points.

These calculations are crucial for tasks like clustering, classification, and recommendation systems. For example, in recommendation systems, linear algebra is used to calculate similarities between users based on their preferences, allowing the system to suggest relevant items or content.
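A minimal sketch of these measurements, assuming a tiny made-up matrix of item ratings where each row is one user's ratings: cosine similarity compares the angle between two users' rating vectors, and the Euclidean norm of their difference gives a distance.

```python
import numpy as np

# Hypothetical ratings of 4 items by three users (rows = users)
ratings = np.array([
    [5.0, 4.0, 0.0, 1.0],   # user A
    [4.0, 5.0, 1.0, 0.0],   # user B
    [0.0, 1.0, 5.0, 4.0],   # user C
])

def cosine_similarity(u, v):
    """Cosine of the angle between u and v: (u . v) / (|u| |v|)."""
    return np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v))

a, b, c = ratings
print(cosine_similarity(a, b))   # about 0.952: similar tastes
print(cosine_similarity(a, c))   # about 0.190: different tastes
print(np.linalg.norm(a - b))     # Euclidean distance between A and B: 2.0
```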

Linear Algebra Applications in Machine Learning

Linear algebra plays a crucial role in various aspects of machine learning, enabling the development and advancement of powerful models and algorithms. Here are some key areas where linear algebra is applied in machine learning:

Implementing Models like Neural Networks

Neural networks, which are widely used in artificial intelligence and machine learning, heavily rely on linear algebra. These models consist of interconnected layers of artificial neurons, and linear algebra provides the mathematical framework for defining and manipulating these connections.

By representing the weights and biases of neurons as vectors and matrices, linear algebra enables efficient computation and optimization of neural network models.

Training Algorithms and Optimization

Linear algebra is also essential in training machine learning algorithms and optimizing their performance. The process of training involves adjusting the parameters of a model to minimize the difference between predicted outputs and actual outputs.

This optimization process often involves solving systems of linear equations or performing matrix factorizations. These techniques help in finding the best set of parameters that lead to accurate predictions.
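As a sketch of the iterative side of training, gradient descent on the mean squared error of a linear model uses only matrix-vector products: the gradient of (1/n)‖Xβ − y‖² is (2/n)Xᵀ(Xβ − y). The data, learning rate, and iteration count are arbitrary choices, and the result is compared with a direct least-squares solve.

```python
import numpy as np

rng = np.random.default_rng(1)
X = rng.normal(size=(200, 3))
y = X @ np.array([1.5, -0.5, 2.0]) + 0.1 * rng.normal(size=200)

beta = np.zeros(3)
learning_rate = 0.1
n = len(y)
for _ in range(500):
    residual = X @ beta - y
    gradient = (2.0 / n) * X.T @ residual   # gradient of the mean squared error
    beta -= learning_rate * gradient

# Compare with the direct least-squares solution
beta_direct, *_ = np.linalg.lstsq(X, y, rcond=None)
print(np.allclose(beta, beta_direct, atol=1e-6))  # True
```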

Improving Predictions through Dimensionality Reduction

Dimensionality reduction techniques are used to reduce the number of features or variables in a dataset while preserving its essential information. Linear algebra provides powerful tools such as singular value decomposition (SVD) and principal component analysis (PCA) for performing dimensionality reduction.

These techniques help in reducing the complexity of machine learning models, improving their efficiency, and avoiding overfitting. By representing data in lower-dimensional spaces, it becomes easier to visualize and understand complex datasets.
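A minimal sketch of SVD-based dimensionality reduction on synthetic data that lies close to a two-dimensional subspace of a ten-dimensional space: keeping the top k = 2 singular vectors gives a compact representation and a low-rank reconstruction. The data and the choice of k are assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
# 100 samples of 10 features that really live near a 2-D subspace
latent = rng.normal(size=(100, 2))
mixing = rng.normal(size=(2, 10))
X = latent @ mixing + 0.01 * rng.normal(size=(100, 10))

# Thin SVD: X = U @ diag(s) @ Vt
U, s, Vt = np.linalg.svd(X, full_matrices=False)

k = 2
X_reduced = X @ Vt[:k].T                 # 100 x 2 representation of the data
X_approx = U[:, :k] * s[:k] @ Vt[:k]     # best rank-k approximation of X

rel_error = np.linalg.norm(X - X_approx) / np.linalg.norm(X)
print(X_reduced.shape)   # (100, 2)
print(rel_error < 0.05)  # True: two components capture almost everything
```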

Understanding and applying linear algebra concepts in machine learning is crucial for developing sophisticated models, training algorithms, and improving prediction accuracy. By leveraging the power of linear algebra, computer scientists and AI practitioners can push the boundaries of what is possible in the field of machine learning.

Enabling Computer Vision and Graphics

Image Processing and Facial Recognition

Linear algebra plays a crucial role in enabling computer vision and graphics. In the field of image processing, linear algebra is used to manipulate and enhance digital images. Algorithms based on linear algebra are used to perform tasks such as image denoising, image segmentation, and image compression.

Facial recognition, which is widely used in security systems and biometrics, relies heavily on linear algebra algorithms for feature extraction, dimensionality reduction, and classification. These algorithms analyze the facial features and patterns in images or videos, allowing for accurate identification and authentication.

3D Modeling and Rendering

Linear algebra is fundamental to 3D modeling and rendering, which are essential in various industries such as architecture, film production, and game development. In 3D modeling, linear transformations are used to represent the position, orientation, and scale of objects in a virtual 3D space.

This allows for the creation of realistic and interactive 3D environments. Rendering, on the other hand, involves the calculation of lighting and shading effects to generate the final image or animation.

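Linear algebra is used to compute the vectors and matrices that describe the interactions between light sources, materials, and surfaces (for example, the dot product between a surface normal and the direction to a light source determines diffuse shading), resulting in visually stunning graphics.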
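The position, orientation, and scale transformations used in 3D modeling are commonly written with homogeneous coordinates: a 3D point (x, y, z) becomes the 4-vector (x, y, z, 1), so translation (which is affine rather than linear in 3D) can be expressed as a 4 x 4 matrix alongside rotation and scaling, and a whole sequence of transformations collapses into one matrix product. This is a sketch; the helper functions are hypothetical, not from a particular engine.

```python
import numpy as np

def translation(tx, ty, tz):
    """4 x 4 matrix that moves points by (tx, ty, tz)."""
    T = np.eye(4)
    T[:3, 3] = [tx, ty, tz]
    return T

def scaling(sx, sy, sz):
    """4 x 4 matrix that scales along each axis."""
    return np.diag([sx, sy, sz, 1.0])

def rotation_z(theta):
    """4 x 4 matrix that rotates by theta radians about the z-axis."""
    c, s = np.cos(theta), np.sin(theta)
    R = np.eye(4)
    R[:2, :2] = [[c, -s], [s, c]]
    return R

# Scale first, then rotate 90 degrees, then translate
# (matrices apply right to left)
model = translation(1, 0, 0) @ rotation_z(np.pi / 2) @ scaling(2, 2, 2)

point = np.array([1.0, 0.0, 0.0, 1.0])   # (x, y, z) = (1, 0, 0) with w = 1
print(np.round(model @ point, 6))        # [1. 2. 0. 1.]
```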

Computer Animations and Video Games

Linear algebra forms the foundation of computer animations and video games. Animation involves the manipulation of objects and characters to create movement and simulate real-world physics. By representing object geometry as vectors and applying linear transformations with matrices, animators can achieve fluid motion and realistic effects.

In video games, linear algebra is used for collision detection, physics simulations, and character movements. Game engines rely on linear algebra algorithms to calculate the positions, velocities, and accelerations of objects in real-time, providing an immersive gaming experience.

Other Key Uses in Computer Science Fields

In addition to its foundational role in areas like machine learning and data analysis, linear algebra plays a vital role in several other computer science fields. Let’s explore some of these key uses:

Cryptography and Cybersecurity

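Cryptography, the practice of secure communication, draws heavily on linear algebra concepts. It helps in designing secure encryption algorithms and protocols that protect sensitive information from unauthorized access.

Linear algebra over finite fields appears inside modern ciphers: AES, for example, mixes the bytes of each column in its MixColumns step by multiplying them by a fixed matrix over the finite field GF(2^8). RSA, by contrast, is built on modular arithmetic and number theory (the difficulty of factoring large integers) rather than on systems of linear equations.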
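The classical Hill cipher is a simple, insecure teaching example of linear algebra in cryptography: it encrypts pairs of letters by multiplying them by a key matrix modulo 26 and decrypts with the key's modular inverse. It is shown only as an illustration; it is not how AES or RSA work. The key below is an assumed example whose determinant is coprime to 26.

```python
import numpy as np

# Hypothetical key matrix; its determinant (9) is coprime to 26, so it is invertible mod 26
KEY = np.array([[3, 3],
                [2, 5]])

def inverse_mod26(K):
    """Inverse of a 2 x 2 integer matrix modulo 26 (adjugate times inverse determinant)."""
    det = int(K[0, 0] * K[1, 1] - K[0, 1] * K[1, 0]) % 26
    det_inv = pow(det, -1, 26)   # modular inverse (Python 3.8+); ValueError if none exists
    adjugate = np.array([[K[1, 1], -K[0, 1]],
                         [-K[1, 0], K[0, 0]]])
    return (det_inv * adjugate) % 26

def hill(text, K):
    """Multiply each pair of letters (A=0 ... Z=25) by K modulo 26."""
    nums = np.array([ord(ch) - ord("A") for ch in text]).reshape(-1, 2)
    out = (nums @ K.T) % 26      # row-wise form of K @ pair for every pair
    return "".join(chr(int(n) + ord("A")) for n in out.flatten())

ciphertext = hill("HELP", KEY)
plaintext = hill(ciphertext, inverse_mod26(KEY))
print(ciphertext, plaintext)     # HIAT HELP
```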

Cybersecurity also benefits from linear algebra’s ability to detect patterns and anomalies in large datasets. By applying linear algebra methods to network traffic analysis, security experts can identify and mitigate potential threats in real-time.

Signal Processing

Signal processing is a field that deals with the analysis, modification, and synthesis of signals. Linear algebra provides the mathematical tools required to analyze and manipulate signals efficiently.

Techniques like Fourier analysis, which decomposes signals into their frequency components, and linear filtering, which removes noise from signals, rely on linear algebra operations.
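A sketch of linear filtering as linear algebra, assuming a 5-point moving average as the noise-removing filter and a synthetic noisy sine wave: the filter is written as a banded matrix, so filtering is a single matrix-vector product, and the result is compared with NumPy's `np.convolve`.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 200
t = np.linspace(0, 1, n)
clean = np.sin(2 * np.pi * 3 * t)
noisy = clean + 0.3 * rng.normal(size=n)

# 5-point moving-average filter as a banded n x n matrix:
# row i averages samples i-2 .. i+2; missing neighbors at the edges count as zero
window = 5
half = window // 2
M = np.zeros((n, n))
for i in range(n):
    lo, hi = max(0, i - half), min(n, i + half + 1)
    M[i, lo:hi] = 1.0 / window

smoothed = M @ noisy   # filtering is a matrix-vector product

# Same result as zero-padded convolution with a box kernel
conv = np.convolve(noisy, np.ones(window) / window, mode="same")
print(np.allclose(smoothed, conv))  # True

# The filtered signal is closer to the clean one than the noisy input is
print(np.linalg.norm(smoothed - clean) < np.linalg.norm(noisy - clean))  # True
```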

Linear algebra is widely used in audio and image processing applications. For example, it helps in compressing and decompressing audio and image files, enhancing image quality, and removing unwanted noise from sound recordings.

Recommender Systems

Recommender systems are algorithms that suggest items or content to users based on their interests and preferences. Linear algebra plays a crucial role in building recommender systems by modeling user-item interactions as matrices.

These matrices represent user ratings or preferences for different items.

Using linear algebra techniques like matrix factorization and singular value decomposition, recommender systems can accurately predict user preferences and make personalized recommendations. This has applications in various domains like e-commerce, streaming platforms, and social media.
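A minimal sketch of matrix factorization for recommendations, assuming a tiny hypothetical rating matrix where 0 marks a missing rating: it learns user and item factor matrices P and Q so that P @ Q.T matches the observed ratings, using plain gradient descent with L2 regularization. Real systems use far larger data and methods such as alternating least squares; the rank, learning rate, and regularization strength here are arbitrary.

```python
import numpy as np

# Hypothetical ratings (rows = users, columns = items); 0 means "not rated"
R = np.array([
    [5.0, 4.0, 0.0, 1.0],
    [4.0, 0.0, 1.0, 1.0],
    [1.0, 1.0, 0.0, 5.0],
    [0.0, 1.0, 5.0, 4.0],
])
observed = R > 0

rng = np.random.default_rng(0)
k = 2                                        # number of latent factors
P = 0.1 * rng.normal(size=(R.shape[0], k))   # user factors
Q = 0.1 * rng.normal(size=(R.shape[1], k))   # item factors
lr, reg = 0.01, 0.02

def observed_rmse(P, Q):
    """Root-mean-square error on the observed entries only."""
    err = (R - P @ Q.T)[observed]
    return np.sqrt(np.mean(err ** 2))

start = observed_rmse(P, Q)
for _ in range(5000):
    E = np.where(observed, R - P @ Q.T, 0.0)   # errors on observed entries only
    P_grad = -E @ Q + reg * P
    Q_grad = -E.T @ P + reg * Q
    P -= lr * P_grad
    Q -= lr * Q_grad

print(start, observed_rmse(P, Q))   # the error on observed ratings drops substantially
predicted = P @ Q.T                 # also fills in estimates for the missing ratings
print(np.round(predicted, 1))
```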

These are just a few examples of the wide-ranging applications of linear algebra in computer science. Its versatility and power make it an essential tool for solving complex problems and advancing technology in various fields.

Linear algebra empowers computer scientists and data analysts to work effectively in multidimensional vector spaces. Mastering concepts like matrices, transformations, and eigenvalues provides the foundation to tackle machine learning, computer vision, graphics, and many other critical computing applications.

While linear algebra may seem abstract at first, understanding its role in encoding and manipulating complex, high-dimensional data unlocks its immense value within computer science and artificial intelligence.

Coding the Matrix: Linear Algebra through Computer Science Applications

Brown University via Coursera

This course may be unavailable.

- The Function

- The Vector Space

- Gaussian Elimination

- The Inner Product

- Orthogonalization

3.6 rating, based on 16 Class Central reviews

- Prose Simian, 9 years ago: Now finished, I remain torn about this course. But I've bumped it up to four stars.

  Positives:
  - using Python
  - having to use doctests (yes, seriously, I didn't really understand the funny comments preceded by >>> before this :s)
  - building my own sparse simple matrix and vector classes
  - GF2 ( = "binary arithmetic without the carry digit" when this hayseed finally figured it out)
  - carefully crafted material and lectures
  - linking lin alg concepts to applications (my faves: perspective correction, and factorising big numbers)
  - multiple interpretations of matrix multiplication
  - Prof Klein and TAs extremely active and helpful on forums

  Negatives:
  - no (freely available) text to go to for clarification
  - lectures a little fast-paced
  - several times lectures omitted steps, which left me agonising for hours about whether I really understood what was going on
  - quite a few errors in lectures
  - abrupt changes in volume of lecture audio
  - problems with submitting answers (grader tests are different to the doctests provided, and, because some of us didn't know the grader test case was available by running it with a flag, passing the doctests but failing the grader led to a horrible sinking feeling)
  - horrible marking scheme (pass/distinction thresholds are applied by section, with the lowest dropped, not to the overall average; the 20% late-submission penalty made a distinction impossible if more than one section was late, even with 100% unadjusted)
  - perhaps just for me: difficulty grokking proofs in lecture form (perhaps I just need to write them down & think about them more)
  - using Python 3.x is a bit of a pain

  Overall, a somewhat flawed execution of an otherwise excellent idea, unfortunately with flaws that made an already tricky subject quite a bit harder, so I hope they can be ironed out over repeated iterations. But interesting, a MOOC I feel proud to have completed. Looking forward to retaking it, to make sure I really 'get it', and to the promised follow-ups from Prof Klein. (EDIT: but not for quite a while; from the forum: "The follow-on course has not been scheduled yet. I'm not yet sure when it will run. I will announce on the codingthematrix mailing list. It will likely not be for at least a year due to an upcoming big project on my part.")

- Mark Wilbur, 10 years ago: This is another course I felt torn about. On the good side, the idea is fantastic! Why not use programmers’ systemic thinking abilities as a springboard to learn linear algebra more quickly? When I studied linear algebra long ago, it used quite a few examples from calculus and electricity and magnetism. While that was a good approach, I feel that linear algebra is a subject that is worth studying for more people than multivariate calculus is. That alone made me optimistic about the course. Unfortunately, the automated grader was horribly buggy and so much of a pain to deal with that I decided to use my study time on other classes.

- Vlad Podgurschi, 9 years ago: I found this to be a great preparatory course for "practical" topics in data science, such as linear models and machine learning. The homeworks and labs are well thought out, interesting, and very valuable for a good understanding of the material. There is also the bonus of getting to learn a bit of Python (a very popular programming language in data science) if you're not already familiar with the language. To respond to older reviews of this course, matters must have improved considerably with respect to submitting and grading the homeworks and labs. I didn't experience any problems with my submissions, and I have successfully completed almost all problems (wrapping up the last two!). With respect to the ratio of abstract theory vs. concrete applications, I felt that the theory presented was necessary for a good understanding of the methods applied in the practical examples. The following course was for me a great follow-up to Dr. Klein's course: HarvardX: PH525.2x Matrix Algebra and Linear Models (https://courses.edx.org/courses/HarvardX/PH525.2x/1T2015/)

- Anonymous, 10 years ago: Simply stated, this course was a disappointment. I initially had high hopes, based on the description. However, I found a few aspects disturbing. The first was the use of a relatively recent feature of the latest version of Python. The second was that the optional text was not offered until well into the course. The third was that the lectures did not seem to match the quiz material. The fourth was that several of the student-posted forum questions went unanswered despite dozens of requests for clarification. My technical background should have been sufficient, based on the course description, but I withdrew from the class in frustration. I note that I have been pleased with several other online technical classes, and some are even rated in this forum. I will not attempt another offering from this instructor.

- Anonymous, 10 years ago: For me this has been the first course I've taken on Coursera, and it wasn't a disappointment at all. The lectures, although a bit hard to understand at the beginning, become clearer when you get to the assignments. The instructor was great at the explanations, and the forum was a very good resource as well. I have a strong programming background in languages other than Python, but it's a really easy language to work with and we didn't get to any advanced features. I hope there will be new offerings from this instructor, and hopefully a continuation of the topics from this course, like linear programming.

- Eng Eliane Letnar, 10 years ago: I liked it very much, but I had some problems submitting the exercises... I wasted a lot of time finding out where the problems were... you need to submit the exercises in the order they appear... it took me a long time to figure that out...

- Anonymous, 9 years ago: Unfortunately the course is much more about learning the specifics of Python 3 than about Linear Algebra. As I progressed through the course, I found I was learning a little bit about Python's capabilities and very little about Linear Algebra. I decided to use a book instead.

- Anonymous, 9 years ago: This is a great course. The professor interacts with the students and the course materials are exhaustive. Programming assignments are both challenging and rewarding and require due diligence on the student's part.

- Anonymous, 6 years ago: One of the best MOOCs I have ever taken. A rigorous and innovative introduction to linear algebra with very interesting examples and applications. Professor Klein makes every concept clear and simple.

- Benjamin Karlog, 6 years ago: Poor quality. I would not recommend using this; look at ex trevtur on YouTube or anything else, as this is a bad source.



Math 136 Project: Applications of Linear Algebra

Project descriptions, general information, reports and grading.

Background (15 points): This is mostly a discussion of how the non-mathematical and mathematical portions of your topic fit together. In other words, you need to talk about what you needed to know about your topic in order to do the associated problems and how linear algebra fits into the picture. So you might include the definitions of the words I've given you, the linear algebra ideas you used (e.g. matrix multiplication, solving linear systems, etc.), and some explanation about why these ideas were useful.

Solutions (35 points): You need to include solutions to the problems included in this packet. Don't just give the answers, however. Include a full, detailed explanation of what you're doing at each step. You'll want to use words and write in full sentences, though you can also have the occasional formula or sequence of equalities.

Bibliography (5 points): List the references you used to complete this report. You don't need to get out your Strunk & White or anything; just list title and author for any books you used. You should also include a list of people that you consulted or any other form of help that you received. For example, you might obtain some of your information from the internet; in this case, you could include the website. You'll need at least one book as a reference, preferably two, and a total of at least two references.

How Machine Learning Uses Linear Algebra to Solve Data Problems

Machines or computers only understand numbers. And these numbers need to be represented and processed in a way that lets machines solve problems by learning from the data instead of learning from predefined instructions (as in the case of programming).

All types of programming use mathematics at some level. Machine learning uses data to learn the function that best describes that data.

The problem (or process) of finding the best parameters of a function using data is called model training in ML.

Therefore, in a nutshell, machine learning is programming to optimize for the best possible solution – and we need math to understand how that problem is solved.

The first step towards learning Math for ML is to learn linear algebra.

Linear Algebra is the mathematical foundation that solves the problem of representing data as well as computations in machine learning models.

It is the math of arrays — technically referred to as vectors, matrices and tensors.

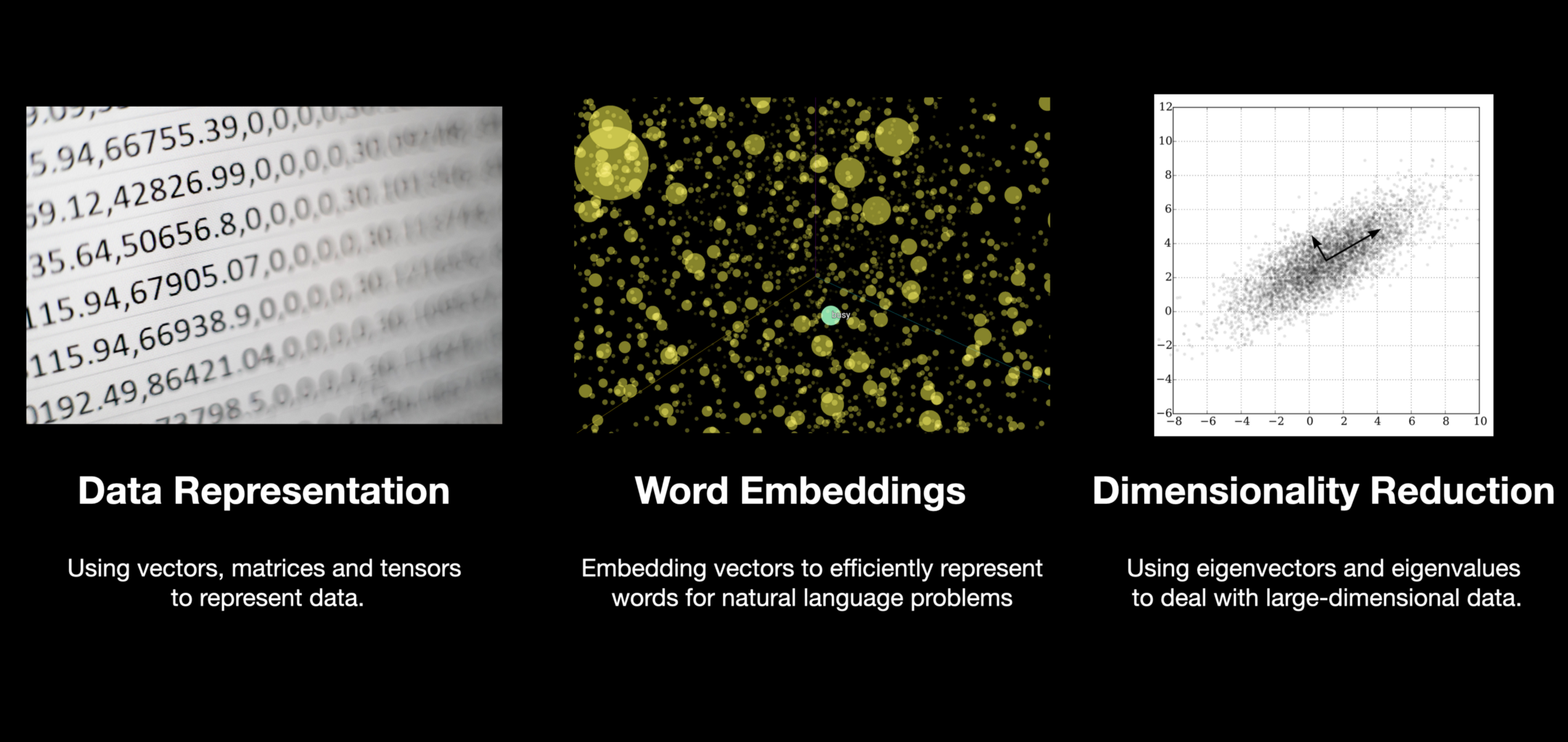

Common Areas of Application — Linear Algebra in Action

In the ML context, all major phases of developing a model have linear algebra running behind the scenes.

Important areas of application that are enabled by linear algebra are:

- data and learned model representation

- word embeddings

- dimensionality reduction

Data Representation

The fuel of ML models, that is data, needs to be converted into arrays before you can feed it into your models. The computations performed on these arrays include operations like matrix multiplication (built from dot products). The output is again a matrix or tensor of numbers: a transformed version of the input.
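As a minimal sketch, assuming NumPy and made-up feature values and weights, each data record becomes one row of a matrix, and a single matrix multiplication transforms every record at once:

```python
import numpy as np

# Hypothetical dataset: 4 records (rows), 3 numeric features (columns)
X = np.array([[5.1, 3.5, 1.4],
              [4.9, 3.0, 1.4],
              [6.2, 3.4, 5.4],
              [5.9, 3.0, 5.1]])

# Hypothetical weights mapping 3 input features to 2 output values
W = np.array([[0.2, -0.1],
              [0.4, 0.3],
              [-0.5, 0.8]])

# One matrix multiplication transforms all 4 records at once
Y = X @ W
print(Y.shape)  # (4, 2): each record is now a 2-component vector
```

Row `i` of `Y` is exactly the row vector `X[i]` multiplied by `W`, which is why whole datasets can be processed with one vectorized call instead of a loop.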

Word embeddings

Don’t worry about the terminology here – it is just about representing high-dimensional data (think of a huge number of variables in your data) with a lower-dimensional vector.

Natural Language Processing (NLP) deals with textual data. Dealing with text means comprehending the meaning of a large corpus of words. Each word carries a meaning, which may be similar to the meaning of other words. Vector embeddings let us represent these words more efficiently, so that words with similar meanings get similar vectors.

Eigenvectors (SVD)

Finally, concepts like eigenvectors allow us to reduce the number of features or dimensions of the data while keeping the essence of all of them using something called principal component analysis.

From Data to Vectors

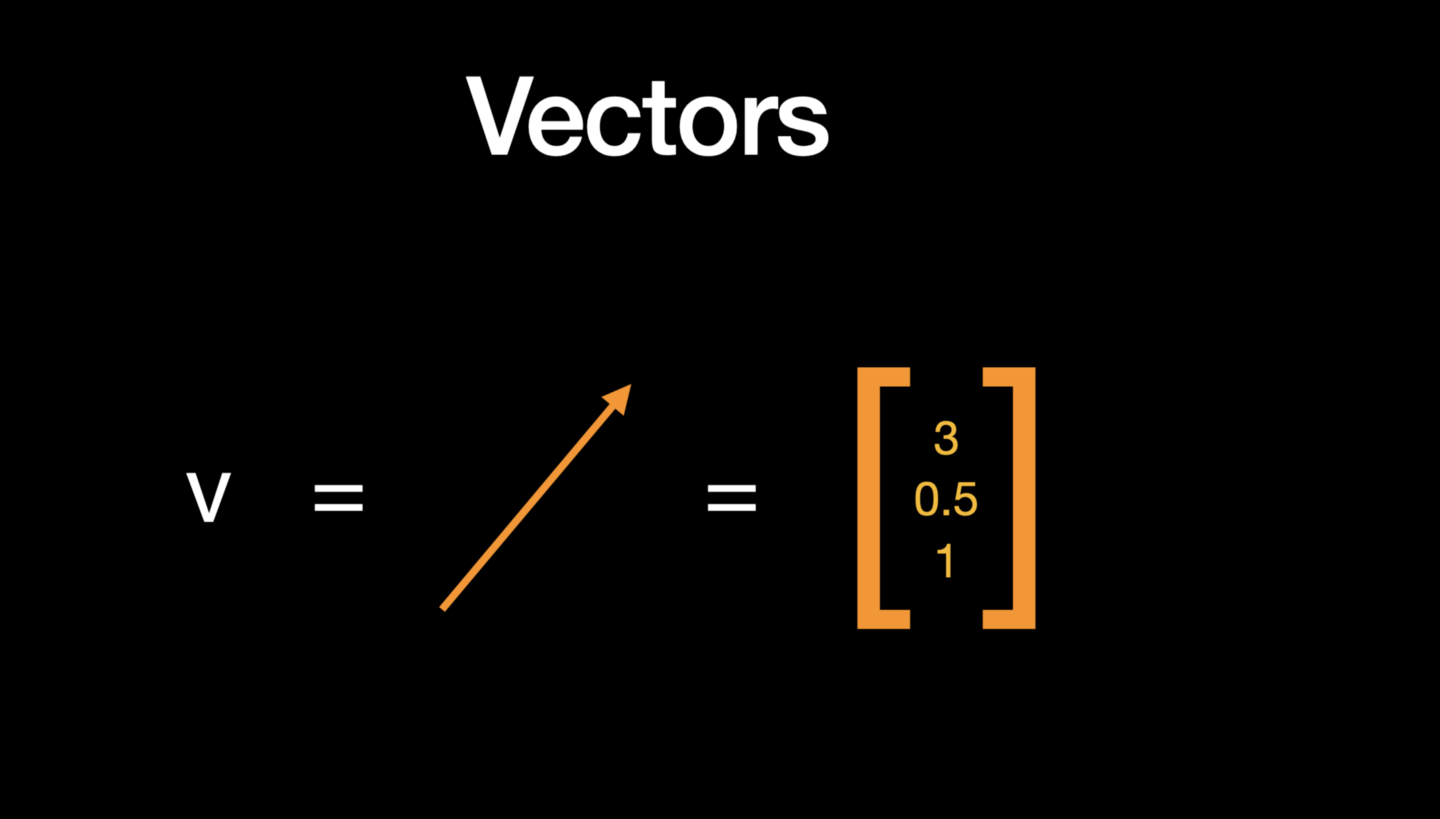

Linear algebra basically deals with vectors and matrices (different shapes of arrays) and operations on these arrays. In NumPy, vectors are basically a 1-dimensional array of numbers but geometrically, they have both magnitude and direction.

Our data can be represented using vectors. In the figure above, one row of the data is represented by a feature vector with 3 elements, or components, representing 3 different dimensions. A vector with n entries belongs to an n-dimensional vector space; in this case, there are 3 dimensions.
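A short sketch, assuming NumPy and a made-up 3-component feature vector, showing both views of a vector: a 1-dimensional array in code, and an object with magnitude and direction in geometry:

```python
import numpy as np

# Hypothetical feature vector for one row of data: 3 components -> 3 dimensions
v = np.array([3.0, 4.0, 12.0])

print(v.ndim)   # 1: a 1-dimensional NumPy array
print(v.shape)  # (3,): the vector lives in 3-dimensional space

# Geometric view: magnitude (Euclidean length) and direction (unit vector)
magnitude = np.linalg.norm(v)   # sqrt(9 + 16 + 144) = 13.0
direction = v / magnitude       # same direction, length 1
print(magnitude)                # 13.0
print(direction)
```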

Deep Learning — Tensors Flowing Through a Neural Network

We can see linear algebra in action across all the major applications today. Examples include sentiment analysis on a LinkedIn or a Twitter post (embeddings), detecting a type of lung infection from X-ray images (computer vision), or any speech-to-text bot (NLP).

All of these data types are represented by numbers in tensors. We run vectorized operations to learn patterns from them using a neural network. It then outputs a processed tensor which in turn is decoded to produce the final inference of the model.

Each phase performs mathematical operations on those data arrays.
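To make "tensors flowing through a network" concrete, here is a sketch of one forward pass through a tiny two-layer network, assuming NumPy. The layer sizes, the ReLU activation, and the random weights are arbitrary stand-ins for a trained model, not any particular architecture:

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical batch: 8 inputs, each a 4-dimensional feature vector
x = rng.normal(size=(8, 4))

# Hypothetical (randomly initialised) weights for a 4 -> 5 -> 2 network
W1, b1 = rng.normal(size=(4, 5)), np.zeros(5)
W2, b2 = rng.normal(size=(5, 2)), np.zeros(2)

def relu(z):
    """Element-wise non-linearity: negative entries become 0."""
    return np.maximum(z, 0.0)

# Each layer is a matrix multiplication plus a bias, followed by a non-linearity
h = relu(x @ W1 + b1)   # shape (8, 5)
out = h @ W2 + b2       # shape (8, 2): one output vector per input
print(h.shape, out.shape)
```

Every input in the batch is processed by the same matrix products, which is what makes these operations easy to vectorize on CPUs and GPUs.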

Dimensionality Reduction — Vector Space Transformation

When it comes to embeddings, you can think of an n-dimensional vector being replaced by another vector that belongs to a lower-dimensional space. The lower-dimensional vector is often more meaningful, and it reduces computational complexity.

For example, here a 3-dimensional vector is replaced by a vector in a 2-dimensional space. You can extrapolate this to a real-world scenario where you have a very large number of dimensions.

Reducing dimensions doesn’t mean dropping features from the data. Instead, it's about finding new features that are linear combinations of the original features and that preserve as much of the original variance as possible.

Finding these new variables (features) means finding the principal components (PCs). This in turn reduces to solving an eigenvalue and eigenvector problem for the covariance matrix of the data.
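A minimal PCA sketch, assuming NumPy and synthetic 3-D data that mostly varies along two directions: centre the data, take the eigenvectors of its covariance matrix, and project onto the two with the largest eigenvalues:

```python
import numpy as np

rng = np.random.default_rng(42)

# Synthetic 3-D data that mostly varies along 2 directions (plus a little noise)
latent = rng.normal(size=(200, 2))
mixing = np.array([[1.0, 0.5, 0.2],
                   [0.0, 1.0, -0.3]])
X = latent @ mixing + 0.05 * rng.normal(size=(200, 3))

# 1. Centre the data so each feature has mean 0
Xc = X - X.mean(axis=0)

# 2. Covariance matrix of the features (3 x 3, symmetric)
C = np.cov(Xc, rowvar=False)

# 3. Eigendecomposition; eigh returns eigenvalues in ascending order, so re-sort
eigvals, eigvecs = np.linalg.eigh(C)
order = np.argsort(eigvals)[::-1]
eigvals, eigvecs = eigvals[order], eigvecs[:, order]

# 4. Project onto the top-2 principal components: 3-D -> 2-D
X2 = Xc @ eigvecs[:, :2]

explained = eigvals[:2].sum() / eigvals.sum()
print(X2.shape)              # (200, 2)
print(round(explained, 3))   # share of the total variance kept
```

The new features (columns of `X2`) are linear combinations of the original ones and are uncorrelated with each other; their variances are the two largest eigenvalues.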

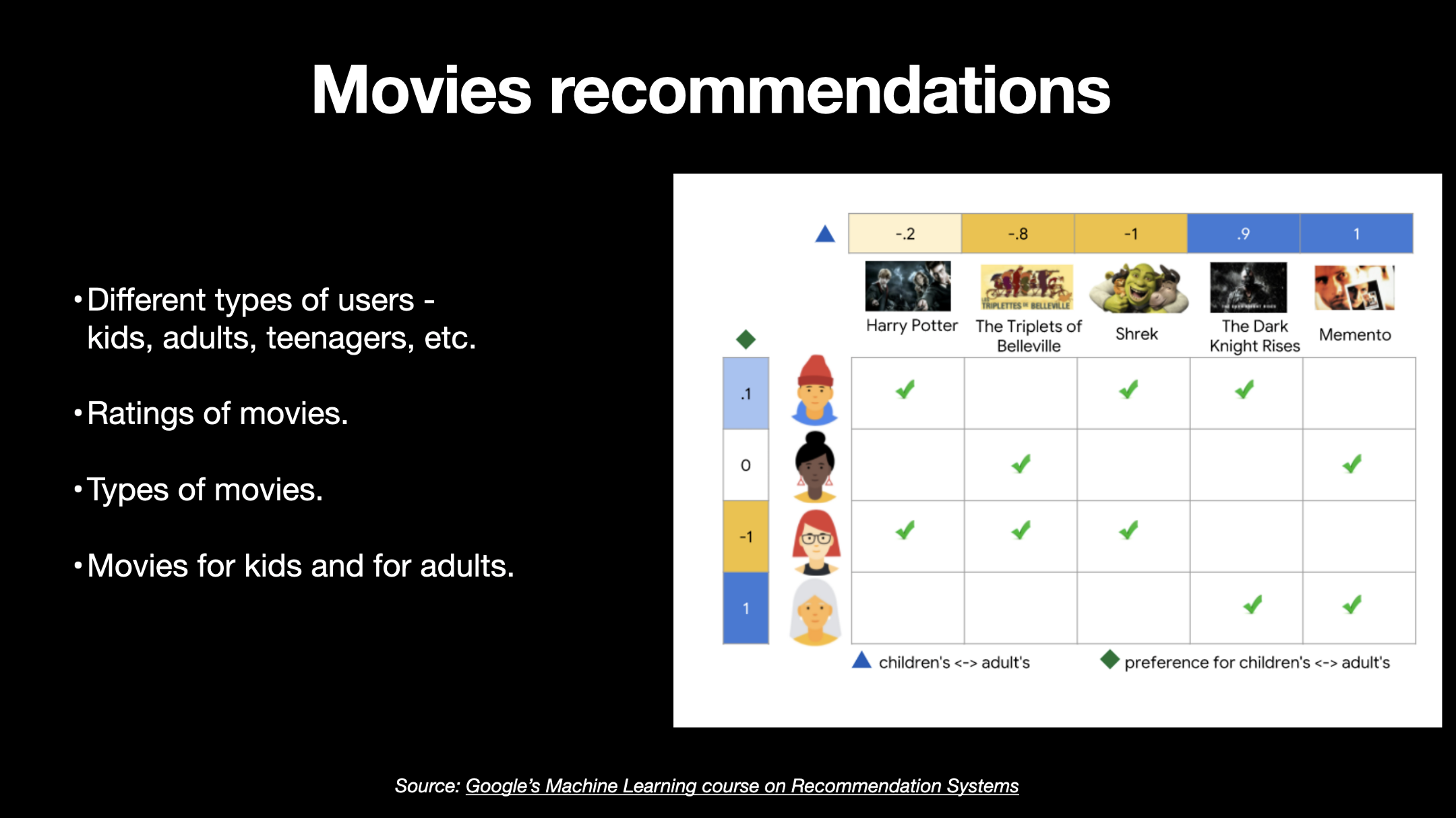

Recommendation Engines — Making use of embeddings

You can think of Embedding as a 2D plane being embedded in a 3D space and that’s where this term comes from. You can think of the ground you are standing on as a 2D plane that is embedded into this space in which you live.

Just to give you a real-world use case to relate to all of this discussion on vector embeddings, all applications that are giving you personalized recommendations are using vector embedding in some form.

For example, the above is a graphic from Google’s course on recommendation systems, where we are given data on different users and their preferred movies. Some users are kids and others are adults; some movies are all-time classics while others are more artistic. Some movies are targeted towards a younger audience, while movies like Memento are preferred by adults.

Now, we not only need to represent this information in numbers but also need to find new smaller dimensional vector representations that capture all these features well.

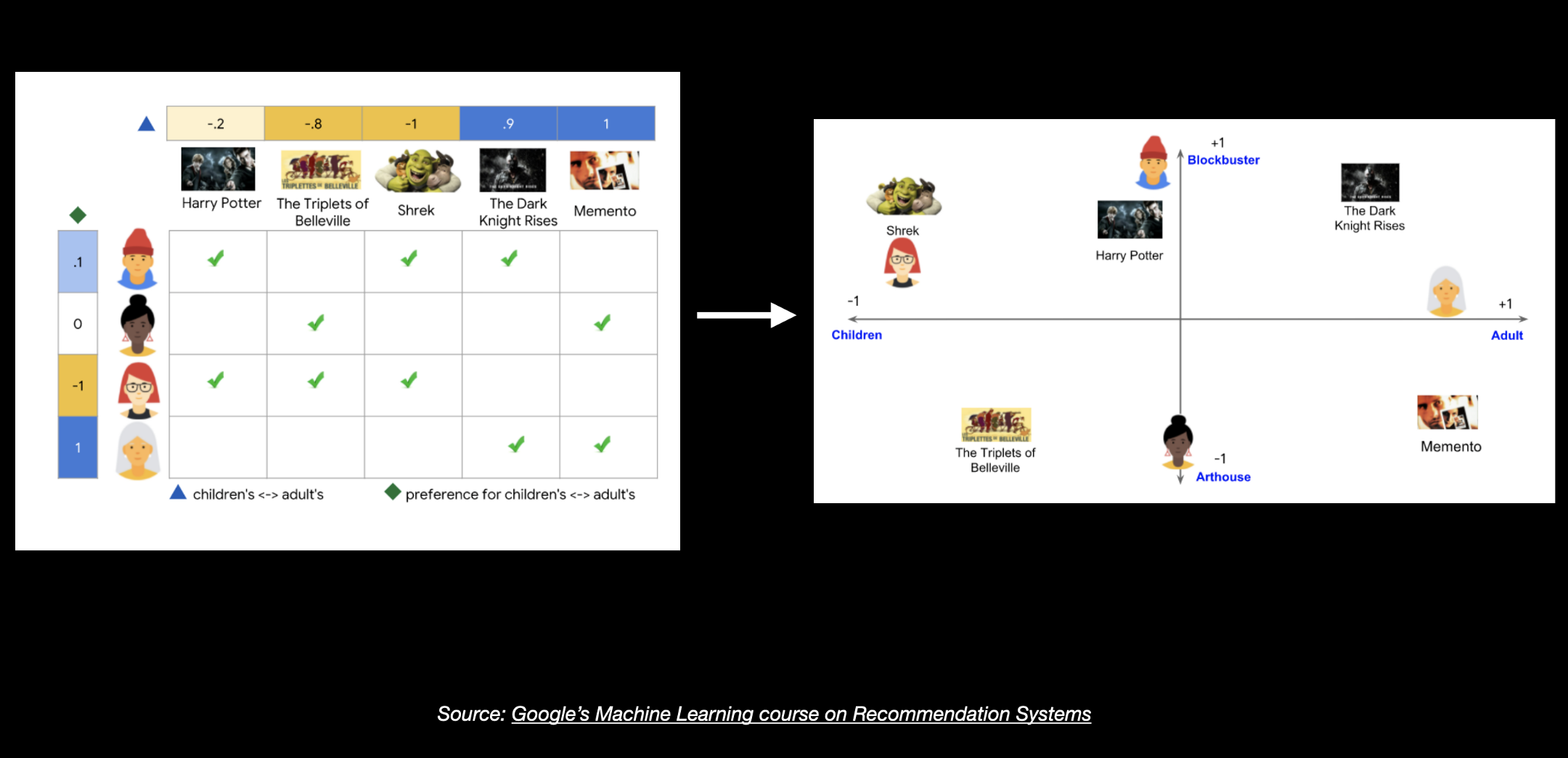

A very quick way to understand how we can pull off this task is by understanding something called Matrix Factorization which allows us to break a large matrix down into smaller matrices.

Ignore the numbers and colors for now and just try to understand how we have broken down one big matrix into two smaller ones.

For example, here a 4×5 matrix (4 rows for users and 5 columns for movies) was broken down into two matrices, one that's 4×2 and the other that's 2×5. We now have new lower-dimensional (2-D) vectors for users and movies.

And this allows us to plot this on a 2D vector space. Here you’ll see that user #1 and the movie Harry Potter are closer and user #3 and the movie Shrek are closer.
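One way to obtain such a factorization is a truncated singular value decomposition. The sketch below assumes NumPy and a made-up, fully observed 4×5 user-by-movie matrix (1 = liked, 0 = not). Truncated SVD is a swapped-in technique for illustration; production recommenders typically factor only the observed entries with other algorithms.

```python
import numpy as np

# Hypothetical 4 users x 5 movies feedback matrix (1 = liked, 0 = not)
R = np.array([[1, 1, 0, 0, 1],
              [0, 0, 1, 1, 0],
              [1, 0, 1, 0, 0],
              [1, 1, 0, 1, 1]], dtype=float)

# Singular value decomposition: R = U @ diag(s) @ Vt
U, s, Vt = np.linalg.svd(R, full_matrices=False)

k = 2  # keep the 2 strongest factors
user_vecs = U[:, :k] * s[:k]   # 4 x 2: one 2-D vector per user
movie_vecs = Vt[:k, :]         # 2 x 5: one 2-D vector per movie

# Their product is the best rank-2 approximation of R (in the least-squares sense)
R_approx = user_vecs @ movie_vecs
print(user_vecs.shape, movie_vecs.shape)  # (4, 2) (2, 5)

# Predicted affinity of user 0 for movie 2 is a dot product of two 2-D vectors
print(user_vecs[0] @ movie_vecs[:, 2])
```

Because each user and each movie is now a 2-D vector, they can be plotted on the same plane, and nearby user/movie pairs have large dot products.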

The dot product of two vectors (the building block of matrix multiplication) tells us how similar the two vectors are. It has applications in correlation/covariance calculation, linear regression, logistic regression, PCA, convolutions, PageRank and numerous other algorithms.
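A small sketch, assuming NumPy and hypothetical 2-D embeddings, of how a dot product measures similarity. Dividing by the vector lengths gives the cosine similarity, which ignores magnitude and compares direction only:

```python
import numpy as np

def cosine_similarity(a, b):
    """Dot product divided by the product of lengths: 1 = same direction, 0 = orthogonal."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

# Hypothetical 2-D embeddings
user = np.array([0.9, 0.1])
movie_a = np.array([0.8, 0.2])
movie_b = np.array([-0.1, 0.9])

print(user @ movie_a)                    # raw dot product: 0.9*0.8 + 0.1*0.2
print(cosine_similarity(user, movie_a))  # close to 1: similar
print(cosine_similarity(user, movie_b))  # close to 0: unrelated
```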

Industries where Linear Algebra is used heavily

By now, I hope you are convinced that Linear algebra is driving the ML initiatives in a host of areas today. If not, here is a list to name a few:

- Chemical Physics

- Word Embeddings — neural networks/deep learning

- Image Processing

- Quantum Physics

How much Linear Algebra should you know to get started with ML / DL?

Now, the important question is how you can learn to program these concepts of linear algebra. The answer is that you don’t have to reinvent the wheel: you just need to understand the basics of vector algebra computationally and then learn to program those concepts using NumPy.

NumPy is a scientific computation package that gives us access to all the underlying concepts of linear algebra. It is fast as it runs compiled C code and it has a large number of mathematical and scientific functions that we can use.

Recommended resources

- Playlist on Linear Algebra by 3Blue1Brown: very engaging visualizations that explain the essence of linear algebra and its applications. Might be a little too hard for beginners.

- Book on Deep Learning by Ian Goodfellow, Yoshua Bengio & Aaron Courville: a fantastic resource for learning ML and applied math. Give it a read, though some folks may find it too technical and notation-heavy to begin with.

Foundations of Data Science & ML — I have created a course that gives you enough understanding of Programming, Math (Basic Algebra, Linear Algebra & Calculus) and Statistics. A complete package for first steps to learning DS/ML.


Web and Data Science Consultant | Instructional Design



Linear Algebra

Linear Algebra is the branch of mathematics that focuses on the study of vectors, vector spaces, and linear transformations. It deals with linear equations, linear functions, and their representations through matrices and determinants. It has a wide range of applications in physics and mathematics, and it is a foundational concept for machine learning and data science. This article explains linear algebra and its branches.

Let’s learn about linear algebra, including linear functions, its branches, formulas, and examples.

What is Linear Algebra?

Linear Algebra is a branch of Mathematics that deals with matrices, vectors, and finite- and infinite-dimensional vector spaces. It is the study of vector spaces, linear equations, linear functions, and matrices.

Linear Algebra Equations

The general linear equation is represented as $u_1 x_1 + u_2 x_2 + \cdots + u_n x_n = v$, where:

- $u_1, \ldots, u_n$ – the coefficients

- $x_1, \ldots, x_n$ – the unknowns

- $v$ – the constant

A collection of such equations is called a system of linear equations. The left-hand side of each equation is a linear function of the unknowns:

$(x_1, \ldots, x_n) \mapsto u_1 x_1 + \cdots + u_n x_n$

Linear Algebra Topics

Below is the list of important topics in Linear Algebra.

- Matrix inverses and determinants

- Linear transformations

- Singular value decomposition

- Orthogonal matrices

- Mathematical operations with matrices (i.e. addition, multiplication)

- Projections

- Solving systems of equations with matrices

- Eigenvalues and eigenvectors

- Euclidean vector spaces

- Positive-definite matrices

- Linear dependence and independence

The foundational concepts essential for understanding linear algebra, detailed here, include:

- Linear Functions

- Vector spaces

These foundational ideas are interconnected, allowing for the mathematical representation of a system of linear equations. Broadly, vectors are entities that can be added together and scaled, and linear functions are maps between vectors that preserve these operations.

Branches of Linear Algebra

Linear Algebra is divided into different branches based on the difficulty level of topics, which are:

- Elementary Linear Algebra

- Advanced Linear Algebra

- Applied Linear Algebra

Elementary Linear Algebra

Elementary linear algebra covers basic topics such as scalars and vectors, matrices, and matrix operations.

Linear Equations

Linear equations form the basis of linear algebra and are equations of the first degree. In two variables, they represent straight lines in geometry, and they are characterized by constants and variables without exponents or products of variables. Solving systems of linear equations involves finding the values of the variables that satisfy all equations simultaneously.

A linear equation is the simplest form of equation in algebra, representing a straight line when plotted on a graph.

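Example: 2x + 3y = 6 is a linear equation. If you have two such equations, like 2x + 3y = 6 and x − y = 3, solving them together gives the point where the two lines intersect, here (3, 0). By contrast, 4x + 6y = 12 is just 2x + 3y = 6 multiplied by 2, so it describes the same line, and that pair has infinitely many solutions rather than a single intersection point.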
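A sketch, assuming NumPy, of solving the two-equation example in matrix form A·[x, y] = b, and of detecting the degenerate "same line" case through the matrix rank (for a singular matrix, `np.linalg.solve` raises `LinAlgError` instead of returning a point):

```python
import numpy as np

# 2x + 3y = 6 and x - y = 3, written as A @ [x, y] = b
A = np.array([[2.0, 3.0],
              [1.0, -1.0]])
b = np.array([6.0, 3.0])
x, y = np.linalg.solve(A, b)
print(x, y)  # intersection point, approximately (3, 0)

# 2x + 3y = 6 and 4x + 6y = 12: the second row is twice the first
A_same_line = np.array([[2.0, 3.0],
                        [4.0, 6.0]])
print(np.linalg.matrix_rank(A_same_line))  # 1 -> no unique solution
```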

Advanced Linear Algebra

Advanced linear algebra covers more advanced topics such as linear functions, linear transformations, eigenvectors, and eigenvalues.

Eigenvalues and Eigenvectors

Eigenvalues and eigenvectors are fundamental concepts in linear algebra that offer deep insights into the properties of linear transformations. An eigenvector of a square matrix is a non-zero vector that, when the matrix multiplies it, results in a scalar multiple of itself. This scalar is known as the eigenvalue associated with the eigenvector. They are essential in various applications, including stability analysis, quantum mechanics, and the study of dynamical systems.

Consider a transformation that changes the direction or length of vectors, except for some special vectors that only get stretched or shrunk. These special vectors are eigenvectors , and the factor by which they are stretched or shrunk is the eigenvalue .

Example: For the matrix $A = \begin{bmatrix} 2 & 0 \\ 0 & 3 \end{bmatrix}$, the vector $v = (1, 0)^T$ is an eigenvector because $Av = (2, 0)^T = 2v$, and 2 is the eigenvalue.
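A quick check of this example, assuming NumPy: multiply A by v directly, then let `np.linalg.eig` find all eigenpairs and confirm that each satisfies Av = λv:

```python
import numpy as np

A = np.array([[2.0, 0.0],
              [0.0, 3.0]])
v = np.array([1.0, 0.0])

print(A @ v)  # [2. 0.], which equals 2 * v, so v is an eigenvector with eigenvalue 2

# NumPy finds all eigenpairs; the eigenvectors are the columns of `vecs`
vals, vecs = np.linalg.eig(A)
for lam, vec in zip(vals, vecs.T):
    assert np.allclose(A @ vec, lam * vec)  # A v = lambda v for every pair
print(vals)
```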

Singular Value Decomposition

Singular Value Decomposition (SVD) is a powerful mathematical technique used in signal processing, statistics, and machine learning. It factors a matrix A into three matrices, $A = U \Sigma V^T$: $V^T$ performs a rotation (possibly combined with a reflection), $\Sigma$ scales along the coordinate axes, and $U$ performs the final rotation. It’s essential for identifying the intrinsic geometric structure of data.
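A minimal sketch with NumPy's `np.linalg.svd` on an arbitrary 3×2 example matrix, checking that the three factors rebuild the original and that the outer factors have orthonormal columns:

```python
import numpy as np

A = np.array([[3.0, 1.0],
              [1.0, 3.0],
              [0.0, 2.0]])

# A = U @ diag(s) @ Vt; s holds the singular values in descending order
U, s, Vt = np.linalg.svd(A, full_matrices=False)

print(s)                                     # non-negative scaling factors
print(np.allclose(U @ np.diag(s) @ Vt, A))   # True: the three factors rebuild A
print(np.allclose(Vt @ Vt.T, np.eye(2)))     # True: Vt is orthogonal (rotation/reflection)
```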

Vector Space in Linear Algebra

A vector space (or linear space) is a collection of vectors, which may be added together and multiplied (“scaled”) by numbers, called scalars. Scalars are often real numbers, but can also be complex numbers. Vector spaces are central to the study of linear algebra and are used in various scientific fields.

Linear Map

A linear map (or linear transformation) is a mapping between two vector spaces that preserves the operations of vector addition and scalar multiplication. The concept is central to linear algebra and has significant implications in geometry and abstract algebra.

A linear map is a way of moving vectors around in a space that keeps the grid lines parallel and evenly spaced.

Example : Scaling objects in a video game world without changing their basic shape is like applying a linear map.

Positive definite matrices

A positive definite matrix is a symmetric matrix where all its eigenvalues are positive. These matrices are significant in optimisation problems, as they ensure the existence of a unique minimum in quadratic forms.

Example: The matrix $A = \begin{bmatrix} 2 & 0 \\ 0 & 2 \end{bmatrix}$ is positive definite because, for any non-zero vector $x = (x_1, x_2)$, the quadratic form $x^T A x = 2x_1^2 + 2x_2^2$ is positive.
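A sketch of a positive-definiteness test, assuming NumPy: check symmetry, then check that every eigenvalue (computed with `np.linalg.eigvalsh`, which is meant for symmetric matrices) is positive. The helper name `is_positive_definite` and the tolerance are illustrative choices:

```python
import numpy as np

def is_positive_definite(M, tol=1e-12):
    """Hypothetical helper: symmetric with all eigenvalues strictly positive."""
    M = np.asarray(M, dtype=float)
    if not np.allclose(M, M.T):
        return False
    return bool(np.all(np.linalg.eigvalsh(M) > tol))

A = np.array([[2.0, 0.0],
              [0.0, 2.0]])
print(is_positive_definite(A))  # True

# Spot-check the quadratic form x^T A x = 2*x1^2 + 2*x2^2 on random non-zero vectors
rng = np.random.default_rng(1)
xs = rng.normal(size=(1000, 2))
print(np.all(np.einsum("ij,jk,ik->i", xs, A, xs) > 0))  # True

print(is_positive_definite([[1.0, 2.0], [2.0, 1.0]]))  # False: eigenvalues 3 and -1
```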

Matrix Exponential

The matrix exponential is a function on square matrices analogous to the exponential function for real numbers. It is used in solving systems of linear differential equations, among other applications in physics and engineering.

Matrix exponentials stretch or compress spaces in ways that depend smoothly on time, much like how interest grows continuously in a bank account.

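Example: For $A = \begin{bmatrix} 0 & -1 \\ 1 & 0 \end{bmatrix}$, the exponential $e^{tA} = \begin{bmatrix} \cos t & -\sin t \\ \sin t & \cos t \end{bmatrix}$ is a rotation by the angle $t$, so the amount of rotation depends on the “time” parameter $t$.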
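A sketch that checks this numerically, assuming NumPy. NumPy itself has no matrix exponential, so the hypothetical helper `expm_taylor` sums a truncated power series, which is adequate only for small, well-scaled matrices; SciPy users would normally call `scipy.linalg.expm` instead:

```python
import math
import numpy as np

def expm_taylor(M, terms=30):
    """Hypothetical helper: approximate e^M by the truncated series sum_k M^k / k!."""
    result = np.eye(M.shape[0])
    term = np.eye(M.shape[0])
    for k in range(1, terms):
        term = term @ M / k      # builds M^k / k! incrementally
        result = result + term
    return result

A = np.array([[0.0, -1.0],
              [1.0, 0.0]])
t = math.pi / 2  # "time" parameter = rotation angle in radians

R = expm_taylor(t * A)
expected = np.array([[math.cos(t), -math.sin(t)],
                     [math.sin(t), math.cos(t)]])
print(np.allclose(R, expected))  # True: e^{tA} rotates by angle t
print(R @ np.array([1.0, 0.0]))  # approximately [0, 1]: the x-axis rotated by 90 degrees
```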

Linear Computations

Linear computations involve numerical methods for solving linear algebra problems, including systems of linear equations and eigenvalue and eigenvector calculations. These computations are essential in computer simulations, optimisations, and modelling.

These are techniques for crunching numbers in linear algebra problems, like finding the best-fit line through a set of points or solving systems of equations quickly and accurately.
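As an example of the best-fit line mentioned above, here is a least-squares sketch, assuming NumPy and made-up data points. The line y ≈ m·x + c becomes an overdetermined linear system, and `np.linalg.lstsq` finds the m and c that minimise the squared error:

```python
import numpy as np

# Hypothetical data points (x, y) that lie roughly on a line
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = np.array([1.1, 2.9, 5.2, 7.1, 8.8])

# Model y = m*x + c  ->  design matrix with a column of x values and a column of ones
A = np.column_stack([x, np.ones_like(x)])

# Least squares minimises ||A @ [m, c] - y||^2
(m, c), residuals, rank, sv = np.linalg.lstsq(A, y, rcond=None)
print(m, c)  # slope and intercept of the best-fit line (about 1.96 and 1.1)
```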

Linear Independence

A set of vectors is linearly independent if no vector in the set is a linear combination of the others. The concept of linear independence is central to the study of vector spaces, as it helps define bases and dimension.

Vectors are linearly independent if none of them can be made by combining the others. It’s like saying each vector brings something unique to the table that the others don’t.

Example: (1, 0) and (0, 1) are linearly independent in 2D space because neither can be created by scaling the other.
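A common computational test, sketched here with NumPy: stack the vectors as columns of a matrix; they are linearly independent exactly when the matrix rank equals the number of vectors. The helper name `linearly_independent` is hypothetical:

```python
import numpy as np

def linearly_independent(*vectors):
    """Hypothetical helper: independent iff the column matrix has full column rank."""
    M = np.column_stack(vectors)
    return bool(np.linalg.matrix_rank(M) == len(vectors))

print(linearly_independent([1, 0], [0, 1]))          # True
print(linearly_independent([1, 2], [2, 4]))          # False: second = 2 * first
print(linearly_independent([1, 0], [0, 1], [1, 1]))  # False: 3 vectors in 2-D
```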

Linear Subspace

A linear subspace (or simply subspace) is a subset of a vector space that is closed under vector addition and scalar multiplication. A subspace is a smaller space that lies within a larger vector space, following the same rules of vector addition and scalar multiplication.

Example: The set of all vectors of the form (a, 0) in 2D space is a subspace, representing all points along the x-axis.

Applied Linear Algebra

In Applied Linear Algebra, the topics covered are generally the practical applications of elementary and advanced linear algebra topics, such as the complement of a matrix, matrix factorization, and the norm of vectors.

Linear Programming

Linear programming is a method to achieve the best outcome in a mathematical model whose requirements are represented by linear relationships. It is widely used in business and economics to maximize profit or minimize cost while considering constraints.

This is a technique for optimizing (maximizing or minimizing) a linear objective function, subject to linear equality and inequality constraints. It’s like planning the best outcome under given restrictions.

Example : Maximizing profit in a business while considering constraints like budget, material costs, and labor.
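For a two-variable problem, the optimum of a bounded linear program lies at a corner (vertex) of the feasible region, so a tiny solver can simply intersect every pair of constraint lines and keep the best feasible corner. The sketch below assumes NumPy and a hypothetical product-mix problem; this brute-force vertex enumeration is only practical for very small problems, and real solvers use methods such as the simplex algorithm (for example via SciPy's `scipy.optimize.linprog`):

```python
import itertools
import numpy as np

# Hypothetical product mix: maximize profit 3x + 5y
c = np.array([3.0, 5.0])
# Constraints written as A @ [x, y] <= b (x >= 0 and y >= 0 become -x <= 0, -y <= 0)
A = np.array([[1.0, 0.0],    # x <= 4         (e.g. machine hours)
              [0.0, 2.0],    # 2y <= 12       (e.g. labor)
              [3.0, 2.0],    # 3x + 2y <= 18  (e.g. material budget)
              [-1.0, 0.0],   # x >= 0
              [0.0, -1.0]])  # y >= 0
b = np.array([4.0, 12.0, 18.0, 0.0, 0.0])

def solve_lp_by_vertices(c, A, b, tol=1e-9):
    """Check every intersection of two constraint lines; keep the best feasible one."""
    best_point, best_value = None, -np.inf
    for i, j in itertools.combinations(range(len(b)), 2):
        M = A[[i, j]]
        if abs(np.linalg.det(M)) < tol:
            continue  # parallel constraint lines never meet in a single point
        point = np.linalg.solve(M, b[[i, j]])
        if np.all(A @ point <= b + tol):  # keep only feasible corners
            value = c @ point
            if value > best_value:
                best_point, best_value = point, value
    return best_point, best_value

point, value = solve_lp_by_vertices(c, A, b)
print(point, value)  # best production plan and its profit
```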

Linear Equation Systems

Systems of linear equations involve multiple linear equations that share the same set of variables. The solution to these systems is the set of values that satisfy all equations simultaneously, which can be found using various methods, including substitution, elimination, and matrix operations.

Example : Finding the intersection point of two lines represented by two equations.

Gaussian Elimination

Gaussian elimination is a systematic method for solving systems of linear equations. It involves applying a series of operations to transform the system’s matrix into its row echelon form or reduced row echelon form, making it easier to solve for the variables. It is a step-by-step procedure to simplify a system of linear equations into a form that’s easier to solve.

Example : Systematically eliminating variables in a system of equations until each equation has only one variable left to solve for.
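A compact sketch of the procedure, assuming NumPy for array storage: forward elimination (with partial pivoting, a common refinement that swaps in the largest available pivot for numerical stability) reduces the system to row echelon form, and back-substitution then solves for the variables from the last one up. The function name is illustrative:

```python
import numpy as np

def gaussian_elimination(A, b):
    """Solve A x = b by forward elimination (with partial pivoting) and back-substitution."""
    A = np.array(A, dtype=float)
    b = np.array(b, dtype=float)
    n = len(b)

    # Forward elimination: reduce A to upper-triangular (row echelon) form
    for col in range(n):
        # Partial pivoting: swap in the row with the largest entry in this column
        pivot = col + np.argmax(np.abs(A[col:, col]))
        if np.isclose(A[pivot, col], 0.0):
            raise ValueError("matrix is singular")
        A[[col, pivot]] = A[[pivot, col]]
        b[[col, pivot]] = b[[pivot, col]]
        # Eliminate this variable from every row below the pivot row
        for row in range(col + 1, n):
            factor = A[row, col] / A[col, col]
            A[row, col:] -= factor * A[col, col:]
            b[row] -= factor * b[col]

    # Back-substitution: solve for the last variable first
    x = np.zeros(n)
    for row in range(n - 1, -1, -1):
        x[row] = (b[row] - A[row, row + 1:] @ x[row + 1:]) / A[row, row]
    return x

# Example system:  x + y + z = 6,  2y + 5z = -4,  2x + 5y - z = 27
A = [[1, 1, 1], [0, 2, 5], [2, 5, -1]]
b = [6, -4, 27]
print(gaussian_elimination(A, b))  # approximately [5, 3, -2]
```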

Vectors in Linear Algebra

In linear algebra, vectors are fundamental mathematical objects that represent quantities that have both magnitude and direction.

- Vector operations like addition and scalar multiplication are central concepts in linear algebra. They are used to solve systems of linear equations, represent linear transformations, and perform matrix operations such as multiplication and inversion.

- Vectors, a fundamental component of linear algebra, are essential for representing the magnitude and direction of many physical quantities.

- In linear algebra, vectors are elements of a vector space that can be scaled and added. Essentially, they are arrows with a length and direction.

Linear Function

A formal definition of a linear function is provided below:

$f(ax) = a f(x)$ and $f(x + y) = f(x) + f(y)$, where $a$ is a scalar, $f(x)$ and $f(y)$ are vectors in the range of $f$, and $x$ and $y$ are vectors in the domain of $f$.

A linear function is a type of function that maintains the properties of vector addition and scalar multiplication when mapping between two vector spaces. Specifically, a function $T: V \to W$ is considered linear if it satisfies two key properties:

| Property | Condition |
|---|---|
| A linear transformation’s ability to preserve vector addition | T(u + v) = T(u) + T(v) |
| A linear transformation’s ability to preserve scalar multiplication | T(cu) = cT(u) |

- V and W : Vector spaces

- u and v : Vectors in vector space V

- T : Linear transformation from V to W

The additivity property requires that the function T preserve vector addition: the image of the sum of two vectors is equal to the sum of the images of the individual vectors.

For example, suppose we have a linear transformation T that takes a two-dimensional vector (x, y) as input and outputs a new two-dimensional vector (u, v) according to the following rule:

$T(x, y) = (2x + y,\ 3x - 4y)$

To verify that T is a linear transformation, we need to show that it satisfies two properties:

- Additivity: $T(u + v) = T(u) + T(v)$

- Homogeneity: $T(cu) = cT(u)$

Let’s take two input vectors $(x_1, y_1)$ and $(x_2, y_2)$ and compute their images under T:

- $T(x_1, y_1) = (2x_1 + y_1,\ 3x_1 - 4y_1)$

- $T(x_2, y_2) = (2x_2 + y_2,\ 3x_2 - 4y_2)$

Now let’s compute the image of their sum:

$$
\begin{aligned}
T(x_1 + x_2,\ y_1 + y_2) &= \big(2(x_1 + x_2) + (y_1 + y_2),\ 3(x_1 + x_2) - 4(y_1 + y_2)\big) \\
&= (2x_1 + y_1 + 2x_2 + y_2,\ 3x_1 + 3x_2 - 4y_1 - 4y_2) \\
&= (2x_1 + y_1,\ 3x_1 - 4y_1) + (2x_2 + y_2,\ 3x_2 - 4y_2) \\
&= T(x_1, y_1) + T(x_2, y_2)
\end{aligned}
$$

So T satisfies the additivity property.

Now let’s check the homogeneity property. Let c be a scalar and (x, y) be a vector:

$$
\begin{aligned}
T(cx, cy) &= (2(cx) + cy,\ 3(cx) - 4(cy)) \\
&= (c(2x) + c(y),\ c(3x) - c(4y)) \\
&= c(2x + y,\ 3x - 4y) \\
&= cT(x, y)
\end{aligned}
$$

So T also satisfies the homogeneity property. Therefore, T is a linear transformation.
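The algebraic proof above is what establishes linearity; as a complementary numerical spot-check, assuming NumPy, T can be written as a matrix and both properties tested on random vectors:

```python
import numpy as np

# T(x, y) = (2x + y, 3x - 4y) written as a matrix acting on column vectors
T = np.array([[2.0, 1.0],
              [3.0, -4.0]])

def apply_T(v):
    return T @ v

rng = np.random.default_rng(7)
u, v = rng.normal(size=2), rng.normal(size=2)
c = 2.5

print(np.allclose(apply_T(u + v), apply_T(u) + apply_T(v)))  # additivity: True
print(np.allclose(apply_T(c * u), c * apply_T(u)))          # homogeneity: True
print(apply_T(np.array([1.0, 2.0])))                         # (2*1 + 2, 3*1 - 4*2) = (4, -5)
```

Every map of the form v ↦ Mv for a fixed matrix M passes these checks, which is why matrices and linear transformations go hand in hand.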

Linear Algebra Matrix

- A matrix in linear algebra is a rectangular array of numbers organized in rows and columns. Letters such as a, b, and c (or u, v, and w) are commonly used to represent the numbers that make up a matrix’s entries.

- Matrices are often used to represent linear transformations, such as scaling, rotation, and reflection.

- A matrix’s size is determined by its number of rows and columns. For instance, a matrix with three rows and two columns is called a 3×2 matrix, and a matrix with 4 rows and 2 columns is a 4×2 matrix.

- The basic matrix operations include addition, subtraction, and multiplication.

- Matrices of the same size are added or subtracted entry by entry: the corresponding elements are simply added or subtracted.

- Scalar multiplication involves multiplying every entry in the matrix by a scalar (a number).

- Matrix multiplication is a more complex operation: each entry of the product is the sum of the products of a row of the first matrix with a column of the second, so the number of columns of the first matrix must equal the number of rows of the second.
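The operations listed above look like this in NumPy (a sketch with arbitrary example matrices):

```python
import numpy as np

A = np.array([[1, 2],
              [3, 4],
              [5, 6]])        # 3 x 2 matrix
B = np.array([[6, 5],
              [4, 3],
              [2, 1]])        # another 3 x 2 matrix
C = np.array([[1, 0, 2],
              [0, 1, 1]])     # 2 x 3 matrix

print(A.shape)   # (3, 2): 3 rows, 2 columns
print(A + B)     # entry-wise sum (shapes must match)
print(A - B)     # entry-wise difference
print(3 * A)     # scalar multiplication: every entry times 3

# Matrix product: (3 x 2) @ (2 x 3) -> 3 x 3; entry (i, j) = row i of A · column j of C
P = A @ C
print(P.shape)                     # (3, 3)
print(P[0, 2] == A[0] @ C[:, 2])   # True: 1*2 + 2*1 = 4
```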

For example, let’s consider a simple case. Suppose we have two vectors, $v_1$ and $v_2$, in a two-dimensional space. We can represent these vectors as column matrices, such as:

v 1 = , v 2 =

Now we will apply a linear transformation that doubles the value of the first component and subtracts the value of the second component. We can represent this transformation as a 2×2 matrix A.

To apply this to vector $v_1$, simply multiply the matrix A by the vector $v_1$.

The resulting vector, [0, -2], is the transformed version of $v_1$. Similarly, we can apply the same transformation to $v_2$.

The resulting vector, [3, -4], is the transformed version of $v_2$.

Numerical Linear Algebra

Numerical linear algebra, also called applied linear algebra, explores how matrix operations can solve real-world problems using computers. It focuses on creating efficient algorithms for continuous mathematics tasks. These algorithms are vital for solving problems like least-squares optimization, finding eigenvalues, and solving systems of linear equations. In numerical linear algebra, various matrix decomposition methods such as eigendecomposition, singular value decomposition, and QR factorization are used to tackle these challenges.
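As one illustration of a decomposition mentioned above, here is a QR factorization sketch, assuming NumPy and an arbitrary example matrix, followed by a typical use: solving a least-squares problem through the triangular factor:

```python
import numpy as np

A = np.array([[1.0, 1.0],
              [1.0, 2.0],
              [1.0, 3.0]])

# QR factorization: A = Q @ R, Q has orthonormal columns, R is upper triangular
Q, R = np.linalg.qr(A)
print(np.allclose(Q @ R, A))            # True
print(np.allclose(Q.T @ Q, np.eye(2)))  # True: orthonormal columns
print(np.allclose(R, np.triu(R)))       # True: upper triangular

# Least squares: minimise ||A x - b|| by solving the small triangular system R x = Q^T b
b = np.array([1.0, 2.0, 2.0])
x = np.linalg.solve(R, Q.T @ b)
print(np.allclose(x, np.linalg.lstsq(A, b, rcond=None)[0]))  # True
```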

Linear Algebra Applications

Linear algebra is ubiquitous in science and engineering, providing the tools for modelling natural phenomena, optimising processes, and solving complex calculations in computer science, physics, economics, and beyond.

Linear algebra, with its concepts of vectors, matrices, and linear transformations, serves as a foundational tool in numerous fields, enabling the solving of complex problems across science, engineering, computer science, economics, and more. Following are some specific applications of linear algebra in the real world.

1. Computer Graphics and Animation

Linear algebra is indispensable in computer graphics, gaming, and animation. It helps in transforming the shapes of objects and their positions in scenes through rotations, translations, scaling, and more. (A translation on its own is not a linear map, so graphics systems usually represent it as a matrix in homogeneous coordinates.) For instance, when animating a character, linear transformations are used to rotate limbs, scale objects, or shift positions within the virtual world.
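A sketch of how 2-D graphics code commonly chains transformations, assuming NumPy: points are stored as homogeneous column vectors (x, y, 1), so scaling, rotation, and translation all become 3×3 matrices that compose by multiplication. The square and the specific transforms are made up for illustration:

```python
import math
import numpy as np

def rotation(theta):
    """3x3 homogeneous matrix rotating 2-D points by theta radians about the origin."""
    c, s = math.cos(theta), math.sin(theta)
    return np.array([[c, -s, 0.0],
                     [s, c, 0.0],
                     [0.0, 0.0, 1.0]])

def translation(tx, ty):
    """3x3 homogeneous matrix shifting 2-D points by (tx, ty)."""
    return np.array([[1.0, 0.0, tx],
                     [0.0, 1.0, ty],
                     [0.0, 0.0, 1.0]])

def scaling(sx, sy):
    """3x3 homogeneous matrix scaling x by sx and y by sy."""
    return np.diag([sx, sy, 1.0])

# Hypothetical unit-square corners as homogeneous column vectors (x, y, 1)
square = np.array([[0.0, 1.0, 1.0, 0.0],
                   [0.0, 0.0, 1.0, 1.0],
                   [1.0, 1.0, 1.0, 1.0]])

# Scale by 2, rotate 90 degrees, then move 5 units right (matrices apply right to left)
M = translation(5.0, 0.0) @ rotation(math.pi / 2) @ scaling(2.0, 2.0)
moved = M @ square
print(np.round(moved[:2], 6))  # transformed x and y coordinates of the 4 corners
```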

2. Machine Learning and Data Science

In machine learning, linear algebra is at the heart of algorithms used for classifying information, making predictions, and understanding the structures within data. It’s crucial for operations in high-dimensional data spaces, optimizing algorithms, and even in the training of neural networks where matrix and tensor operations define the efficiency and effectiveness of learning.

3. Quantum Mechanics

The state of quantum systems is described using vectors in a complex vector space. Linear algebra enables the manipulation and prediction of these states through operations such as unitary transformations (evolution of quantum states) and eigenvalue problems (energy levels of quantum systems).

4. Cryptography

Linear algebraic concepts are used in cryptography for encoding messages and ensuring secure communication. Public-key cryptosystems such as RSA rely on operations that are easy to perform but extremely difficult to reverse without the key; RSA itself rests on modular arithmetic from number theory rather than on linear algebra, whereas matrix-based ciphers encode messages directly with linear algebraic computations.
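A classical example of matrix-based encoding is the Hill cipher, which multiplies blocks of letters by an invertible key matrix modulo 26. The sketch below assumes NumPy, an even-length uppercase message, and an illustrative 2×2 key. It is shown only to demonstrate the linear algebra; the Hill cipher is easily broken and must not be used for real security.

```python
import numpy as np

ALPHABET = "ABCDEFGHIJKLMNOPQRSTUVWXYZ"

def to_numbers(text):
    return [ALPHABET.index(ch) for ch in text]

def to_text(nums):
    return "".join(ALPHABET[int(n)] for n in nums)

def hill_encrypt(plaintext, key):
    """Encrypt letter pairs by multiplying them with the key matrix modulo 26."""
    nums = np.array(to_numbers(plaintext)).reshape(-1, 2).T  # each column is one letter pair
    return to_text((key @ nums % 26).T.flatten())

def inverse_key_mod26(key):
    """Inverse of a 2x2 matrix modulo 26 (exists when gcd(det, 26) == 1)."""
    a, b = key[0]
    c, d = key[1]
    det_inv = pow(int(a * d - b * c) % 26, -1, 26)  # modular inverse, Python 3.8+
    adjugate = np.array([[d, -b], [-c, a]])
    return (det_inv * adjugate) % 26

key = np.array([[3, 3],
                [2, 5]])
ciphertext = hill_encrypt("HELP", key)
print(ciphertext)                                        # HIAT
print(hill_encrypt(ciphertext, inverse_key_mod26(key)))  # HELP
```

Decryption is the same matrix multiplication with the inverse key, which is exactly why the key matrix must be invertible modulo 26.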

5. Control Systems

In engineering, linear algebra is used to model and design control systems. The behavior of systems, from simple home heating systems to complex flight control mechanisms, can be modeled using matrices that describe the relationships between inputs, outputs, and the system’s state.

6. Network Analysis

Linear algebra is used to analyze and optimize networks, including internet traffic, social networks, and logistical networks. Google’s PageRank algorithm, which ranks web pages based on their links to and from other sites, is a famous example that uses the eigenvectors of a large matrix representing the web.
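A sketch of the idea behind PageRank, assuming NumPy and a made-up four-page web: build a column-stochastic link matrix, mix in a damping factor (0.85 is the value commonly quoted for PageRank), and use power iteration to find the eigenvector with eigenvalue 1. Real-world PageRank also has to handle pages without outgoing links, which this toy graph avoids:

```python
import numpy as np

# Hypothetical 4-page web: links[i] lists the pages that page i links to
links = {0: [1, 2], 1: [2], 2: [0], 3: [0, 2]}
n = len(links)

# Column-stochastic link matrix: M[j, i] = 1 / outdegree(i) if page i links to page j
M = np.zeros((n, n))
for i, targets in links.items():
    for j in targets:
        M[j, i] = 1.0 / len(targets)

d = 0.85                                   # damping factor
G = d * M + (1 - d) / n * np.ones((n, n))  # every column still sums to 1

# Power iteration: repeatedly apply G until the rank vector stops changing
rank = np.full(n, 1.0 / n)
for _ in range(1000):
    new_rank = G @ rank
    if np.allclose(new_rank, rank, atol=1e-12):
        break
    rank = new_rank

print(np.round(rank, 3))  # ranks sum to 1; page 2, with the most incoming links, ranks highest
```

At convergence `G @ rank` equals `rank`, so the rank vector is an eigenvector of G with eigenvalue 1.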

7. Image and Signal Processing

Techniques from linear algebra are used to compress, enhance, and reconstruct images and signals. Singular value decomposition (SVD), for example, is a method to compress images by identifying and eliminating redundant information, significantly reducing the size of image files without substantially reducing quality.

8. Economics and Finance

Linear algebra models economic phenomena, optimizes financial portfolios, and evaluates risk. Matrices are used to represent and solve systems of linear equations that model supply and demand, investment portfolios, and market equilibrium.

9. Structural Engineering

In structural engineering, linear algebra is used to model structures, analyze their stability, and simulate how forces and loads are distributed throughout a structure. This helps engineers design buildings, bridges, and other structures that can withstand various stresses and strains.

10. Robotics

Robots are designed using linear algebra to control their movements and perform tasks with precision. Kinematics, which involves the movement of parts in space, relies on linear transformations to calculate the positions, rotations, and scaling of robot parts.

Linear Algebra Problems

Here are some solved questions on linear algebra to improve your understanding of the concept:

= (2-1)i + (2 + 3)j + (5 + 1)k = i + 5j + 6k

= -2i(i – 2j + k) + j(i – 2j + k) + 3k(i – 2j + k) = -2i -2j + 3k

Q3: Find the solution of x + 2y = 3 and 3x + y = 5

From x + 2y = 3 we get x = 3 - 2y.

Putting this value of x into the second equation: 3(3 - 2y) + y = 5 ⇒ 9 - 6y + y = 5 ⇒ 9 - 5y = 5 ⇒ -5y = -4 ⇒ y = 4/5.

Putting this value of y into the first equation: x + 2(4/5) = 3 ⇒ x = 3 - 8/5 ⇒ x = 7/5.
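The same answer can be checked exactly with Cramer's rule, a determinant-based alternative to substitution. This sketch uses Python's standard `fractions` module so the result stays exact (7/5 rather than 1.4); the helper name `cramer_2x2` is hypothetical:

```python
from fractions import Fraction

def cramer_2x2(a1, b1, c1, a2, b2, c2):
    """Solve a1*x + b1*y = c1 and a2*x + b2*y = c2 exactly using Cramer's rule."""
    det = Fraction(a1 * b2 - a2 * b1)
    if det == 0:
        raise ValueError("no unique solution")
    x = Fraction(c1 * b2 - c2 * b1) / det
    y = Fraction(a1 * c2 - a2 * c1) / det
    return x, y

# x + 2y = 3 and 3x + y = 5
x, y = cramer_2x2(1, 2, 3, 3, 1, 5)
print(x, y)  # 7/5 4/5
```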


Conclusion of Linear Algebra

Linear algebra is a branch of mathematics that deals with vector spaces and linear mappings between these spaces. Linear algebra serves as a foundational pillar in mathematics with wide-ranging applications across numerous fields. Its concepts, including vectors, matrices, eigenvalues, and eigenvectors, provide powerful tools for solving systems of equations, analyzing geometric transformations, and understanding fundamental properties of linear mappings.

The versatility of linear algebra is evident in its application in diverse areas such as physics, engineering, computer science, economics, and more.

Linear Algebra – FAQs

What is Linear Algebra?

Linear Algebra is a branch of mathematics focusing on the study of vectors, vector spaces, linear mappings, and systems of linear equations. It includes the analysis of lines, planes, and subspaces.

Why is Linear Algebra Important?

Linear Algebra is fundamental in almost all areas of mathematics and is widely applied in physics, engineering, computer science, economics, and more, due to its ability to systematically solve systems of linear equations.

What are Vectors and Vector Spaces?

Vectors are objects that can be added together and multiplied (“scaled”) by numbers called scalars. A vector space is a collection of vectors that is closed under these two operations and satisfies a fixed set of axioms, such as associativity and commutativity of addition and distributivity of scalar multiplication.

What are Eigenvalues and Eigenvectors?

Given a square matrix A representing a linear transformation, an eigenvector is a nonzero vector v that the transformation only scales: Av = λv. The scalar λ is the corresponding eigenvalue and tells how much the eigenvector is stretched or shrunk. The eigenvector stays on the same line through the origin, and its direction is reversed if λ is negative.
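A quick numerical check of the definition, assuming NumPy. The matrix reflects vectors across the line y = x. The direction (1, 1) is unchanged (λ = 1), while the direction (1, -1) is flipped (λ = -1):

```python
import numpy as np

# Reflection across the line y = x (illustrative matrix)
A = np.array([[0.0, 1.0],
              [1.0, 0.0]])

eigenvalues, eigenvectors = np.linalg.eig(A)  # eigenvectors are the *columns* of the second array

for lam, v in zip(eigenvalues, eigenvectors.T):
    # Check the defining property A v = lambda v
    assert np.allclose(A @ v, lam * v)
    print(lam, v)
```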

What is Singular Value Decomposition?

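Singular Value Decomposition (SVD) factors any m × n matrix A into three simpler matrices, A = UΣVᵀ. Here U and V are orthogonal, and Σ is diagonal with nonnegative entries called the singular values. The decomposition reveals properties of the original matrix, such as its rank (the number of nonzero singular values), range, and null space.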
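A minimal NumPy sketch of the factorization. `numpy.linalg.svd` returns U, the singular values, and Vᵀ. Multiplying them back together recovers A, and counting the nonzero singular values gives the rank. The 2×3 matrix and the 1e-10 tolerance are illustrative choices.

```python
import numpy as np

A = np.array([[3.0, 2.0, 2.0],
              [2.0, 3.0, -2.0]])

U, s, Vt = np.linalg.svd(A, full_matrices=False)  # s holds singular values, largest first
reconstructed = U @ np.diag(s) @ Vt               # U * Sigma * V^T
assert np.allclose(A, reconstructed)

rank = int(np.sum(s > 1e-10))  # number of (numerically) nonzero singular values
print(s, rank)
```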

How Can Linear Algebra Solve Systems of Equations?

Linear Algebra solves systems of linear equations using methods like substitution, elimination, and matrix operations (e.g., inverse matrices), allowing for efficient solutions to complex problems.
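Elimination does most of the work here. NumPy's `numpy.linalg.solve`, for example, calls a LAPACK LU-based routine rather than forming an inverse matrix. The from-scratch sketch below shows the idea, using Gaussian elimination with partial pivoting followed by back substitution. `gaussian_solve` is a hypothetical name, and the singularity tolerance is an assumption.

```python
import numpy as np

def gaussian_solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting and back substitution."""
    A = np.array(A, dtype=float)
    b = np.array(b, dtype=float)
    n = len(b)
    for col in range(n):
        # Partial pivoting: bring up the row with the largest entry in this column
        pivot = col + int(np.argmax(np.abs(A[col:, col])))
        if np.isclose(A[pivot, col], 0.0):
            raise ValueError("matrix is singular (or nearly so)")
        A[[col, pivot]] = A[[pivot, col]]
        b[[col, pivot]] = b[[pivot, col]]
        # Eliminate the entries below the pivot
        for row in range(col + 1, n):
            factor = A[row, col] / A[col, col]
            A[row, col:] -= factor * A[col, col:]
            b[row] -= factor * b[col]
    # Back substitution on the resulting upper-triangular system
    x = np.zeros(n)
    for row in range(n - 1, -1, -1):
        x[row] = (b[row] - A[row, row + 1:] @ x[row + 1:]) / A[row, row]
    return x

print(gaussian_solve([[1, 2], [3, 1]], [3, 5]))  # the Q3 system: x = 7/5, y = 4/5
```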

What is the Difference Between Linear Dependence and Independence?

Vectors are linearly dependent if one vector can be expressed as a linear combination of the others. If no vector in the set can be written in this way, the vectors are linearly independent.
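One practical test, assuming NumPy, is to stack the vectors as rows of a matrix. The vectors are linearly independent exactly when the matrix rank equals the number of vectors. `linearly_independent` is an illustrative helper. Note that `numpy.linalg.matrix_rank` uses an SVD-based tolerance, so floating-point vectors that are nearly dependent may be reported as dependent.

```python
import numpy as np

def linearly_independent(vectors) -> bool:
    """Independent iff the matrix with the vectors as rows has rank equal to their count."""
    M = np.array(vectors, dtype=float)
    return bool(np.linalg.matrix_rank(M) == len(vectors))

print(linearly_independent([[1, 0, 0], [0, 1, 0]]))    # True
print(linearly_independent([[1, 2, 3], [2, 4, 6]]))    # False: second = 2 * first
print(linearly_independent([[1, 0], [0, 1], [1, 1]]))  # False: more vectors than dimensions
```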

What is Linear Algebra in Real Life?

Linear algebra plays an important role in determining unknown quantities, for example when calculating speed, distance, or time. It is also used to project a three-dimensional view onto a two-dimensional plane, which is handled by linear maps, and to build ranking algorithms for search engines such as Google.
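Projecting a 3-D scene onto a 2-D plane can be as simple as multiplying by a 2×3 matrix. The sketch below assumes an orthographic projection, which just drops the z-coordinate. Perspective projection, as used in most 3-D graphics, also needs homogeneous coordinates and a division by depth, so it is not a pure linear map in ordinary coordinates. The unit-cube data is illustrative.

```python
import numpy as np

# Orthographic projection onto the xy-plane: a linear map R^3 -> R^2 that drops z
P = np.array([[1.0, 0.0, 0.0],
              [0.0, 1.0, 0.0]])

# Corners of a unit cube, one point per row (illustrative data)
cube = np.array([[x, y, z] for x in (0, 1) for y in (0, 1) for z in (0, 1)], dtype=float)

projected = cube @ P.T               # shape (8, 2): each 3-D point mapped to 2-D
print(np.unique(projected, axis=0))  # the four corners of the unit square
```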

How is Linear Algebra used in Engineering?

In engineering, linear algebra is used to find unknown values by solving systems of linear equations and to define the parameters of the functions that model a system.


Stack Exchange Network

Stack Exchange network consists of 183 Q&A communities including Stack Overflow , the largest, most trusted online community for developers to learn, share their knowledge, and build their careers.

Q&A for work

Connect and share knowledge within a single location that is structured and easy to search.

How does linear algebra help with computer science?

I'm a Computer Science student. I've just completed a linear algebra course. I got 75 out of 100 points on the final exam. I know linear algebra well. As a programmer, though, I'm having a difficult time understanding how linear algebra helps with computer science.

Can someone please clear this up for me?

- linear-algebra

- soft-question

- computer-science

- applications

- Linear transformations have many applications in graphics. – user10444 Commented Mar 28, 2013 at 16:44

- @user10444 can you please give me some examples? – Billie Commented Mar 28, 2013 at 16:58

- A nice way to represent graphs is as an adjacency matrix. Many algorithms use this matrix representation and matrix operations to manipulate the graph. Graphs are absolutely fundamental for computer science. Programming is not computer science. – DanielV Commented Mar 4, 2016 at 2:38
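To make the adjacency-matrix comment concrete: for a graph with adjacency matrix A, entry (i, j) of the power A^k counts the walks of length k from node i to node j. A matrix power therefore answers a question about the graph. The small directed graph below is a hypothetical example.

```python
import numpy as np

# Hypothetical directed graph with edges 0->1, 1->2, 2->0, 0->2
edges = [(0, 1), (1, 2), (2, 0), (0, 2)]
n = 3
A = np.zeros((n, n), dtype=int)
for u, v in edges:
    A[u, v] = 1

# Entry (i, j) of A^k counts the walks of length k from node i to node j
A2 = np.linalg.matrix_power(A, 2)
print(A2)
```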

5 Answers

The page Coding The Matrix: Linear Algebra Through Computer Science Applications (see also this page) might be useful here.

On the second page you can read, among other things:

In this class, you will learn the concepts and methods of linear algebra, and how to use them to think about problems arising in computer science.

I guess you have been given a standard course in linear algebra, with no reference to applications in your field of interest. Although this is standard practice, I think that an approach in which the theory is mixed with applications is preferable. This is certainly what I did when I had to teach Mathematics 101 to Economics majors a few years ago.

- Good to know, thank you. – Billie Commented Mar 28, 2013 at 21:03

Linear algebra applies to many areas of machine learning. Here is just a small set of examples.

Support Vector Machines find the maximum-margin hyperplane separating two sets of vectors. The optimization problem minimizes an objective function that is most clearly expressed using linear algebra. The problem is often solved in the dual space using linear algebra, and proofs about these algorithms involve linear algebra as well.
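The answer mentions solving in the dual. The sketch below instead takes the simpler primal route: it minimizes the regularized hinge loss λ/2·‖w‖² + mean(max(0, 1 − y(w·x + b))) by full-batch subgradient descent. It illustrates the linear-algebra form of the objective, not any particular library's solver. The toy data and the `lam`, `lr`, and `epochs` values are arbitrary assumptions.

```python
import numpy as np

def train_linear_svm(X, y, lam=0.01, lr=0.1, epochs=200):
    """Minimize lam/2 * ||w||^2 + mean(max(0, 1 - y * (X @ w + b))) by subgradient descent."""
    n, d = X.shape
    w = np.zeros(d)
    b = 0.0
    for _ in range(epochs):
        margins = y * (X @ w + b)
        violating = margins < 1  # points inside the margin or misclassified
        # Only violating points contribute to the hinge-loss subgradient
        grad_w = lam * w - (y[violating][:, None] * X[violating]).sum(axis=0) / n
        grad_b = -y[violating].sum() / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

X = np.array([[2, 2], [3, 3], [2, 3], [-2, -2], [-3, -3], [-2, -3]], dtype=float)
y = np.array([1, 1, 1, -1, -1, -1], dtype=float)
w, b = train_linear_svm(X, y)
predictions = np.sign(X @ w + b)  # which side of the hyperplane w.x + b = 0
print(w, b, predictions)
```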

Many semi-supervised label propagation graph algorithms can be expressed as optimization of formulae involving the graph's Laplacian matrix.
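One well-known formulation is the harmonic-function method. It keeps the labeled nodes fixed and requires each unlabeled node's score to equal the weighted average of its neighbours' scores. With the Laplacian L = D - W, this reduces to the linear system L_uu f_u = W_ul f_l, where u indexes unlabeled nodes and l indexes labeled ones. The helper name and the path graph below are illustrative.

```python
import numpy as np

def harmonic_labels(W, labeled, values):
    """Harmonic-function label propagation: solve L_uu f_u = W_ul f_l, where L = D - W."""
    n = W.shape[0]
    L = np.diag(W.sum(axis=1)) - W  # graph Laplacian
    unlabeled = [i for i in range(n) if i not in labeled]
    L_uu = L[np.ix_(unlabeled, unlabeled)]
    W_ul = W[np.ix_(unlabeled, labeled)]
    f_u = np.linalg.solve(L_uu, W_ul @ np.asarray(values, dtype=float))
    f = np.zeros(n)
    f[labeled] = values
    f[unlabeled] = f_u
    return f

# Path graph 0 - 1 - 2 - 3 with node 0 labeled 0 and node 3 labeled 1
W = np.array([[0, 1, 0, 0],
              [1, 0, 1, 0],
              [0, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
scores = harmonic_labels(W, labeled=[0, 3], values=[0.0, 1.0])
print(scores)  # node 1 -> 1/3, node 2 -> 2/3
```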

Spectral clustering separates data points into groups of related points by computing the eigenvectors of a graph's Laplacian matrix that correspond to its smallest eigenvalues.
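Here is a minimal spectral bipartition sketch. It assumes the unnormalized Laplacian L = D - W and splits the nodes by the sign of the eigenvector for the second-smallest eigenvalue (the Fiedler vector). Full spectral clustering typically uses several such eigenvectors followed by k-means. The graph, two triangles joined by one edge, is illustrative.

```python
import numpy as np

# Two triangles {0,1,2} and {3,4,5} joined by the single edge 2-3 (illustrative graph)
edges = [(0, 1), (1, 2), (0, 2), (3, 4), (4, 5), (3, 5), (2, 3)]
n = 6
W = np.zeros((n, n))
for u, v in edges:
    W[u, v] = W[v, u] = 1.0

L = np.diag(W.sum(axis=1)) - W                 # unnormalized graph Laplacian
eigenvalues, eigenvectors = np.linalg.eigh(L)  # eigh: ascending eigenvalues for symmetric L

# Eigenvector of the second-smallest eigenvalue (the Fiedler vector) splits the graph by sign
fiedler = eigenvectors[:, 1]
clusters = (fiedler > 0).astype(int)
print(clusters)  # one triangle gets 0, the other gets 1
```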

Neural nets use linear algebra in various ways. For example, densely connected neural net layers perform matrix/tensor multiplication to propagate values between them.
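A dense layer is an affine map followed by an elementwise nonlinearity, so a forward pass is mostly matrix multiplication. The sketch below assumes ReLU as the nonlinearity, random weights, and a batch of four inputs with three features each. `dense_forward` is a hypothetical helper name.

```python
import numpy as np

def dense_forward(x, W, b):
    """One fully connected layer: affine map x @ W + b followed by ReLU."""
    return np.maximum(0.0, x @ W + b)

rng = np.random.default_rng(0)
batch = rng.normal(size=(4, 3))                # 4 examples, 3 input features
W1, b1 = rng.normal(size=(3, 5)), np.zeros(5)  # 3 -> 5 units
W2, b2 = rng.normal(size=(5, 2)), np.zeros(2)  # 5 -> 2 units

hidden = dense_forward(batch, W1, b1)  # shape (4, 5)
output = hidden @ W2 + b2              # final layer left linear; shape (4, 2)
print(hidden.shape, output.shape)
```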

Convex optimization algorithms, which are used throughout machine learning, use linear algebra. A widely used one is Limited-memory BFGS (L-BFGS).
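As a small illustration of how linear algebra shows up inside convex optimization, here is plain gradient descent on the quadratic f(x) = ½xᵀAx − bᵀx. This is not L-BFGS, which additionally approximates the inverse Hessian from a limited history of gradient and position differences. The gradient of f is Ax − b, so the minimizer solves the linear system Ax = b. A safe step size comes from the largest eigenvalue of A. The matrix and vector values are illustrative.

```python
import numpy as np

# Convex quadratic f(x) = 1/2 x^T A x - b^T x with A symmetric positive definite (illustrative values)
A = np.array([[3.0, 1.0],
              [1.0, 2.0]])
b = np.array([1.0, 1.0])

def grad(x):
    return A @ x - b  # gradient of f; zero exactly when A x = b

step = 1.0 / np.linalg.eigvalsh(A).max()  # 1/L, where L is the largest eigenvalue of A
x = np.zeros(2)
for _ in range(500):
    x -= step * grad(x)

print(x, np.linalg.solve(A, b))  # the two should agree closely
```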

Optimization algorithms used for non-convex problems, such as AdaGrad , are often formulated and implemented using linear algebra.
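AdaGrad's update is elementwise vector arithmetic: each coordinate's learning rate is divided by the square root of that coordinate's accumulated squared gradients. The sketch below runs it on a convex quadratic test problem. That problem is chosen only because its answer (the solution of Ax = b) is easy to check, even though the answer above mentions AdaGrad in the context of non-convex problems. The `lr`, `steps`, and `eps` values are assumptions.

```python
import numpy as np

A = np.array([[3.0, 1.0],
              [1.0, 2.0]])
b = np.array([1.0, 1.0])

def grad(x):
    return A @ x - b  # gradient of f(x) = 1/2 x^T A x - b^T x

def adagrad(grad_fn, x0, lr=0.5, steps=2000, eps=1e-8):
    """AdaGrad: per-coordinate step = lr / sqrt(sum of that coordinate's squared gradients)."""
    x = np.array(x0, dtype=float)
    accum = np.zeros_like(x)
    for _ in range(steps):
        g = grad_fn(x)
        accum += g * g
        x -= lr * g / (np.sqrt(accum) + eps)
    return x

x_ada = adagrad(grad, [0.0, 0.0])
print(x_ada)  # should approach the solution of A x = b
```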

PageRank (which uses stochastic matrices and eigenvectors at its heart) is arguably one of the most useful applications of computer science: https://en.wikipedia.org/wiki/PageRank

- From what I've understood, PageRank is just an iterative fixed-point finder, the main idea being that you start with some ranking and then iteratively improve it by convoluting it with the edge weights. You can view this as finding the $1$-eigenvector of a stochastic matrix $A$ iteratively by applying $A$ repeatedly to an arbitrary vector; but this viewpoint is not needed for the implementation! Linear algebra only becomes useful if you want to rigorously analyze the efficiency of the method. If linear algebra wasn't around, PageRank might have easily been invented without it. – darij grinberg Commented Aug 22, 2018 at 14:30

- @darijgrinberg yes, this iterative procedure is known as the "power method", introduced by von Mises to find eigenvalues and (crucially for PageRank) eigenvectors. I might be in the minority of mathematicians who think of applied math as a useful language, and I sympathize with the view that problem solving is more important than theory building. But can we separate them? Should we ignore the mindset we obtain by studying foundational topics such as linear algebra when solving problems in tech? Fine-tuning PageRank is a painful hit-and-miss without Linear Algebra and Perron-Frobenius. – Oskar Limka Commented Jan 7, 2019 at 10:45
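The power method discussed in these comments can be sketched as follows. The code builds a column-stochastic link matrix, mixes it with uniform teleportation, and applies the result repeatedly to a starting vector until the ranking stops changing. The damping factor 0.85, the uniform treatment of pages without outgoing links, the four-page link graph, and the function name `pagerank` are all illustrative assumptions.

```python
import numpy as np

def pagerank(links, damping=0.85, tol=1e-12, max_iter=1000):
    """PageRank by power iteration on G = damping * M + (1 - damping) / n (added to every entry)."""
    n = len(links)
    M = np.zeros((n, n))
    for src, targets in links.items():
        if targets:
            for dst in targets:
                M[dst, src] = 1.0 / len(targets)  # column src spreads its rank over its links
        else:
            M[:, src] = 1.0 / n                   # dangling page: spread uniformly
    G = damping * M + (1.0 - damping) / n         # still column-stochastic
    r = np.full(n, 1.0 / n)
    for _ in range(max_iter):
        r_next = G @ r
        if np.abs(r_next - r).sum() < tol:
            return r_next
        r = r_next
    return r

# Hypothetical 4-page web: page -> pages it links to
links = {0: [1, 2], 1: [2], 2: [0], 3: [2]}
r = pagerank(links)
print(r, r.sum())  # page 2, linked from three pages, ranks highest
```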

Algebra is used in computer science in many ways: Boolean algebra for evaluating code paths, error-correcting codes, processor optimization, relational database design/optimization, and so forth.

Matrix computations are used in computer programming in many ways: graphics, state-space modeling, arithmetic, ad hoc business logic, and so forth.

Linear algebra as a sub-discipline is often taught in one of two ways: computationally, focusing on matrices, their properties, and operations on matrices; or algebraically, where linear mappings are treated as algebraic structures and one studies, for instance, the group-theoretic relations that arise.

In either case, you will not need to try too hard to find situations where knowledge of either theoretical linear algebra or matrix mathematics will be necessary.

A computer scientist needs various algebraic theories: semigroups, rings, fields, categories. Linear algebra is a base for most of them. Besides, it is used in all other mathematical sciences (differential equations, probability, etc.).


A subreddit for all questions related to programming in any language.

What are some good programming projects that require linear algebra?

I'm in a linear algebra course right now and I enjoy it, but it's focusing on calculations, such as how to calculate eigenstuff, the determinant, etc. I'm really interested in applying this knowledge and learning about how linear algebra can be used to solve computer science problems and how it can be used in code.

Does anyone have any ideas for some programming projects that can help me solidify my linear algebra knowledge and help learn how it can be applied to CS?


What parts of linear algebra are used in computer science?

I've been reading Linear Algebra and its Applications to help understand computer science material (mainly machine learning), but I'm concerned that a lot of the information isn't useful to CS. For example, knowing how to efficiently solve systems of linear equations doesn't seem very useful unless you're trying to program a new equation solver. Additionally, the book has talked a lot about span, linear dependence and independence, when a matrix has an inverse, and the relationships between these, but I can't think of any application of this in CS. So, what parts of linear algebra are used in CS?

- linear-algebra

- mathematical-foundations

- Are you asking for your own benefit, or are you a teacher looking for strategies for motivating your students? – Raphael Commented Mar 2, 2015 at 11:52

- Linear algebra is useful in many parts of computer graphics (you can find a lot of related information by googling). – Juho Commented Mar 2, 2015 at 15:54

- Solving systems of linear equations is incredibly useful in computer science. For example: en.m.wikipedia.org/wiki/Combinatorial_optimization – Ant P Commented Mar 2, 2015 at 16:12

- Matrices are used heavily in game development, e.g. for projections, rotations, and quaternion math. – Paul Commented Mar 2, 2015 at 16:49

- @Paulpro The question is for applications of linear algebra (a body of work), not matrices (a set of objects). – Raphael Commented Mar 2, 2015 at 17:42
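To illustrate the rotation point raised in these comments, here is a sketch of a 3-D rotation about the z-axis. Composing two rotations is a matrix product, and the transpose of a rotation matrix is its inverse. `rotation_z` is an illustrative helper, and the angles are arbitrary.

```python
import numpy as np

def rotation_z(theta):
    """3x3 matrix rotating points counterclockwise by theta radians about the z-axis."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, 0.0],
                     [s,  c, 0.0],
                     [0.0, 0.0, 1.0]])

R = rotation_z(np.pi / 2)
print(R @ np.array([1.0, 0.0, 0.0]))  # approximately [0, 1, 0]

# Composing rotations is matrix multiplication, and rotations are orthogonal (R^T R = I)
assert np.allclose(rotation_z(0.3) @ rotation_z(0.5), rotation_z(0.8))
assert np.allclose(R.T @ R, np.eye(3))
```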

6 Answers

The parts that you mentioned are basic concepts of linear algebra. You cannot understand the more advanced concepts (say, eigenvalues and eigenvectors) before first understanding the basic concepts. There are no shortcuts in mathematics. Without an intuitive understanding of the concepts of span and linear independence you won't get far in linear algebra.

Some algorithms only work with full-rank matrices. Do you know what that means? Do you know what can make a matrix not full rank? How would you handle that? You will have no clue if you don't know what linear independence is.
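Here is a small illustration of what goes wrong with a matrix that is not full rank, assuming NumPy. `numpy.linalg.solve` raises `LinAlgError` for a singular matrix. `numpy.linalg.lstsq` still returns a solution: the least-squares one with the smallest norm. The matrix is an illustrative example whose rows are linearly dependent.

```python
import numpy as np

A = np.array([[1.0, 2.0],
              [2.0, 4.0]])  # second row = 2 * first row, so rank 1 (not full rank)
b = np.array([3.0, 6.0])

print(np.linalg.matrix_rank(A))  # 1

solve_failed = False
try:
    np.linalg.solve(A, b)  # requires a nonsingular (full-rank) square matrix
except np.linalg.LinAlgError:
    solve_failed = True

# Least squares picks the minimum-norm solution among the infinitely many that exist
x, residuals, rank, singular_values = np.linalg.lstsq(A, b, rcond=None)
print(solve_failed, x, rank)
assert np.allclose(A @ x, b)
```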